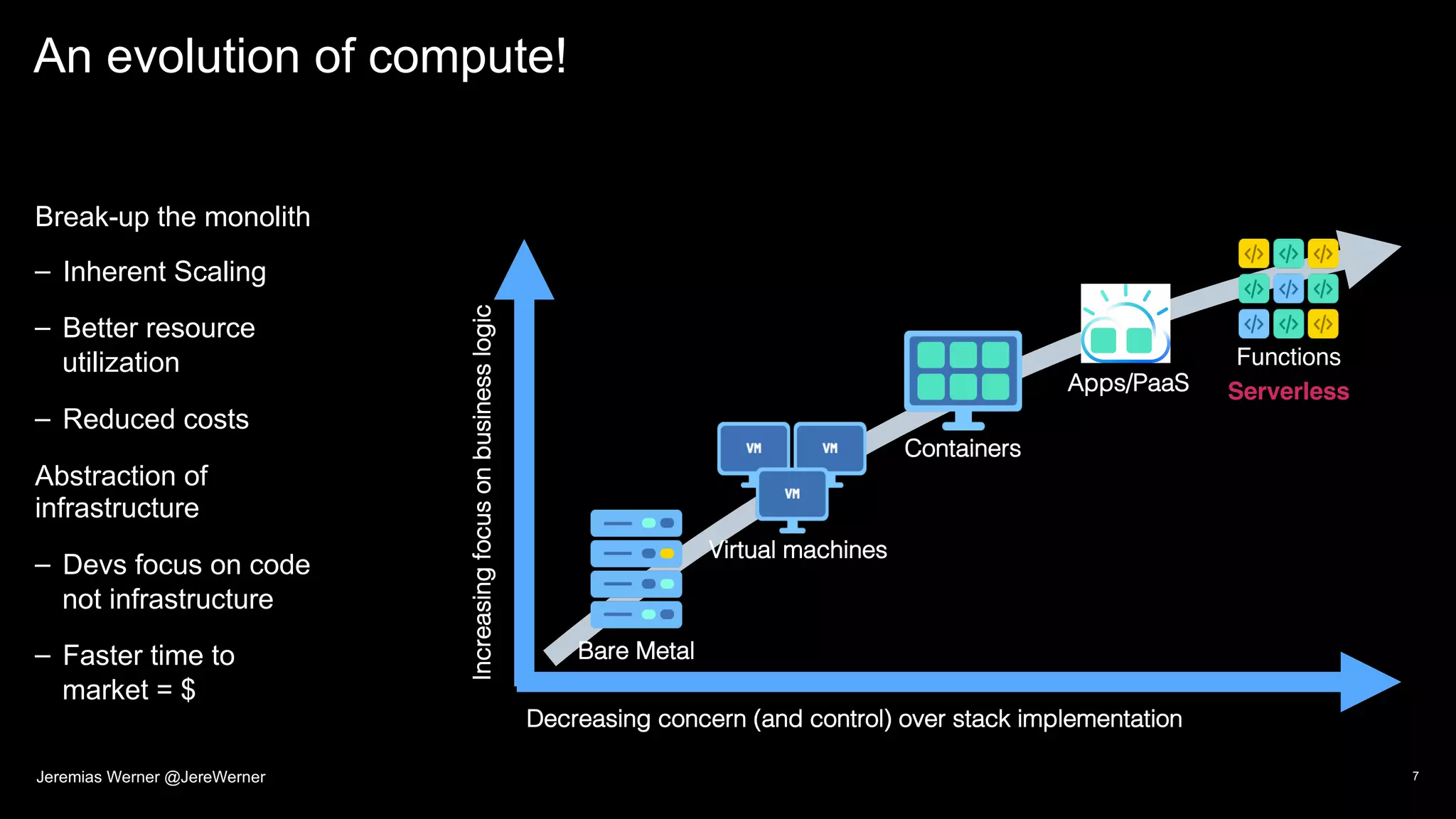

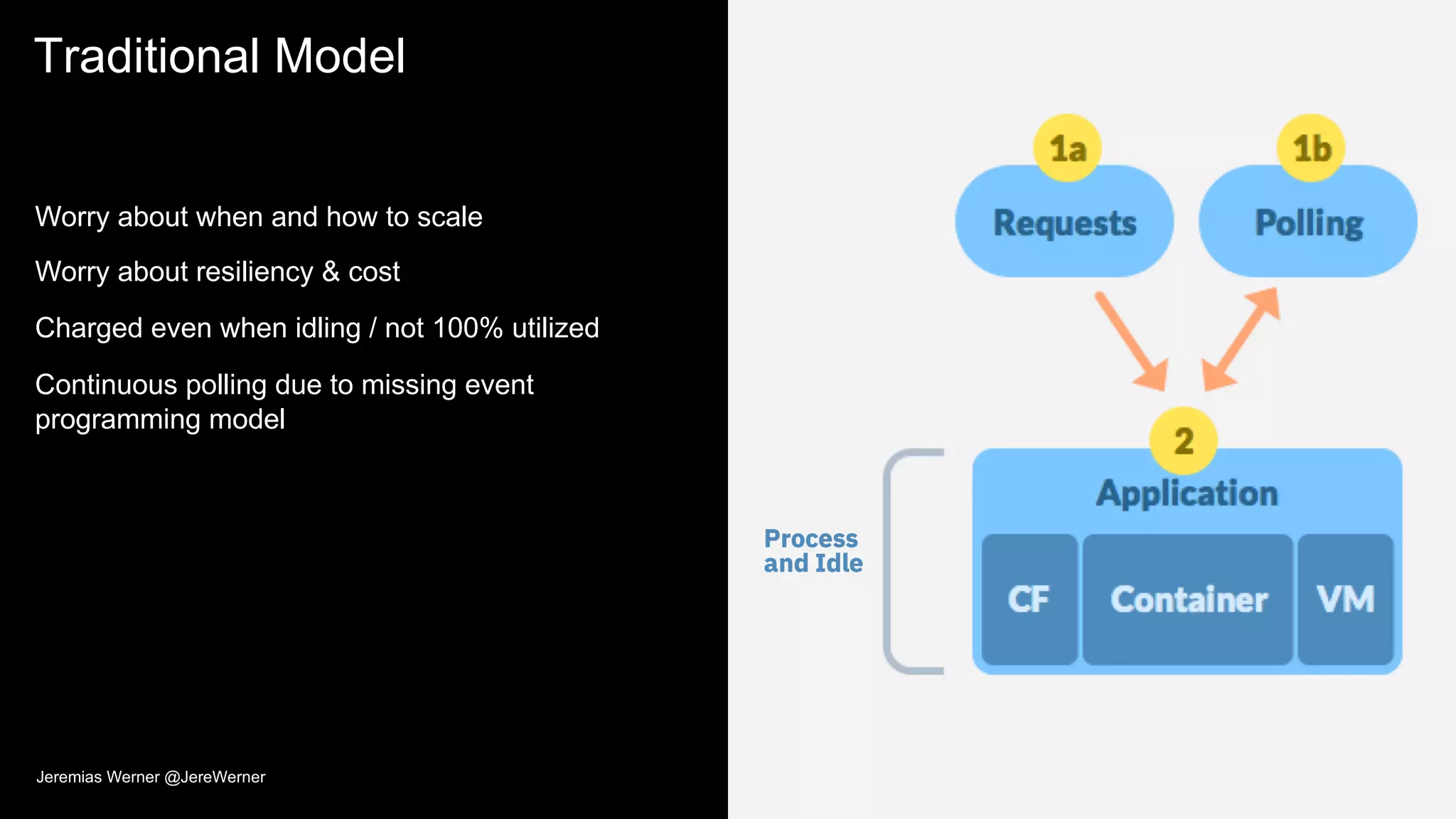

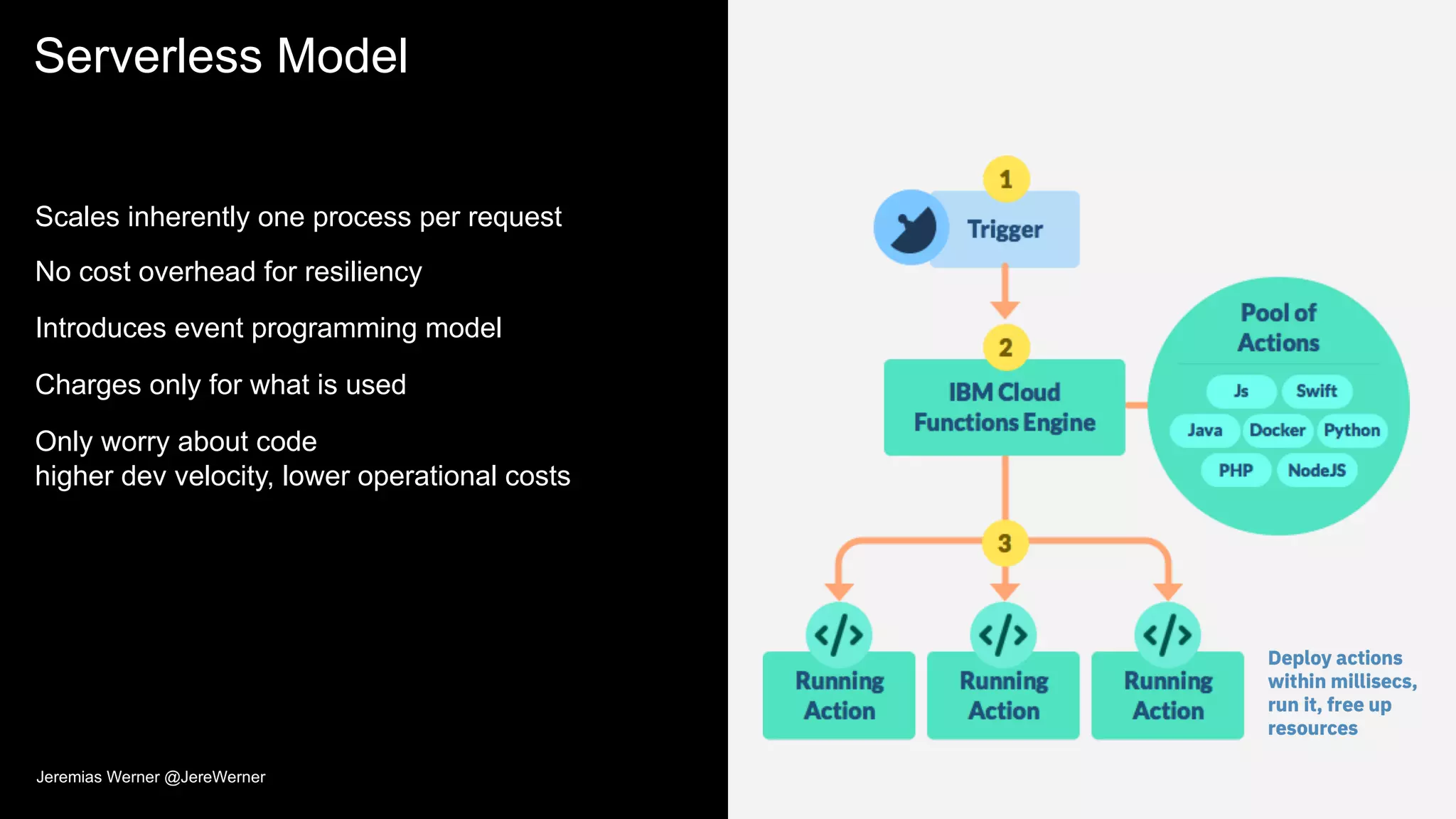

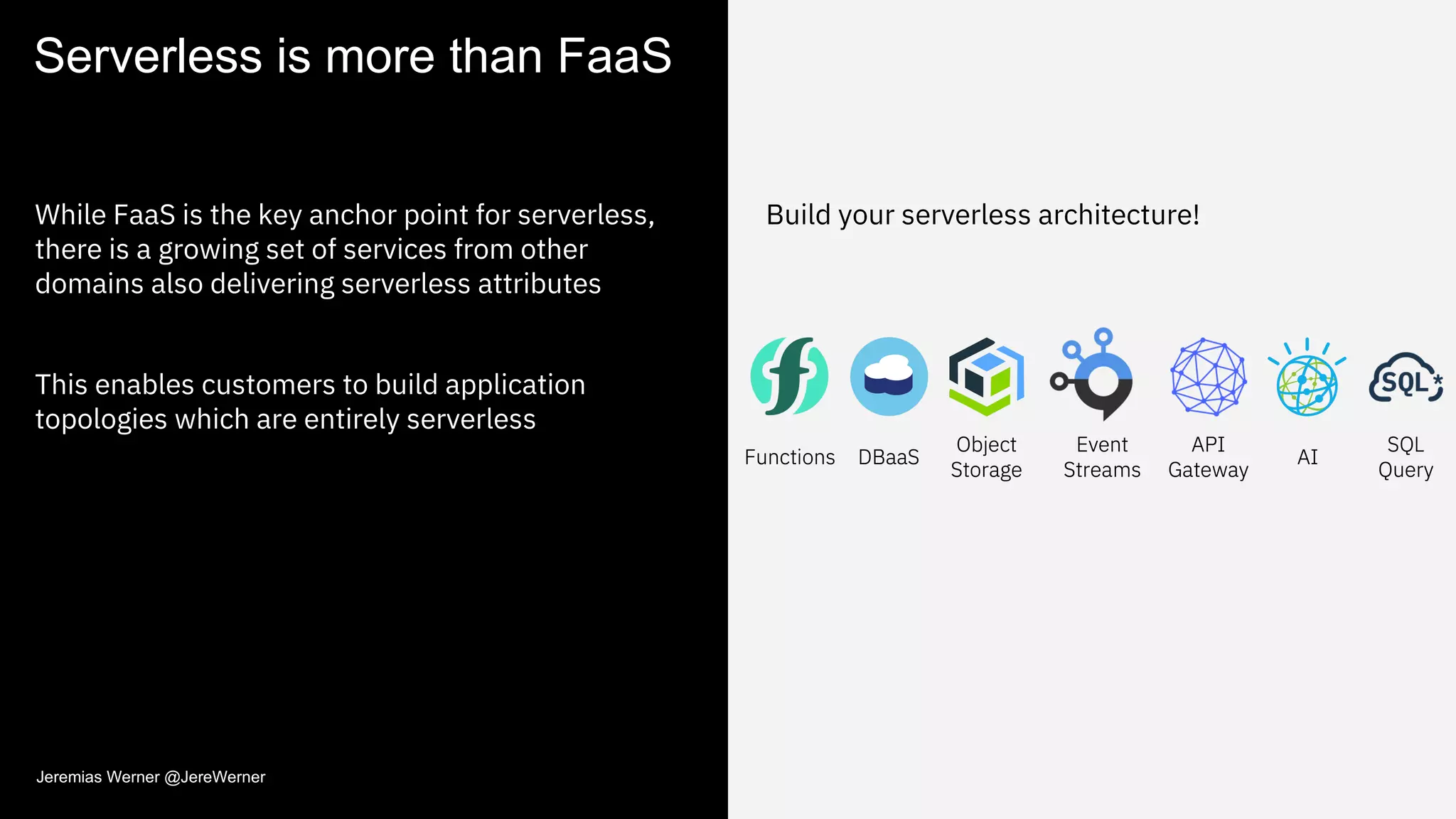

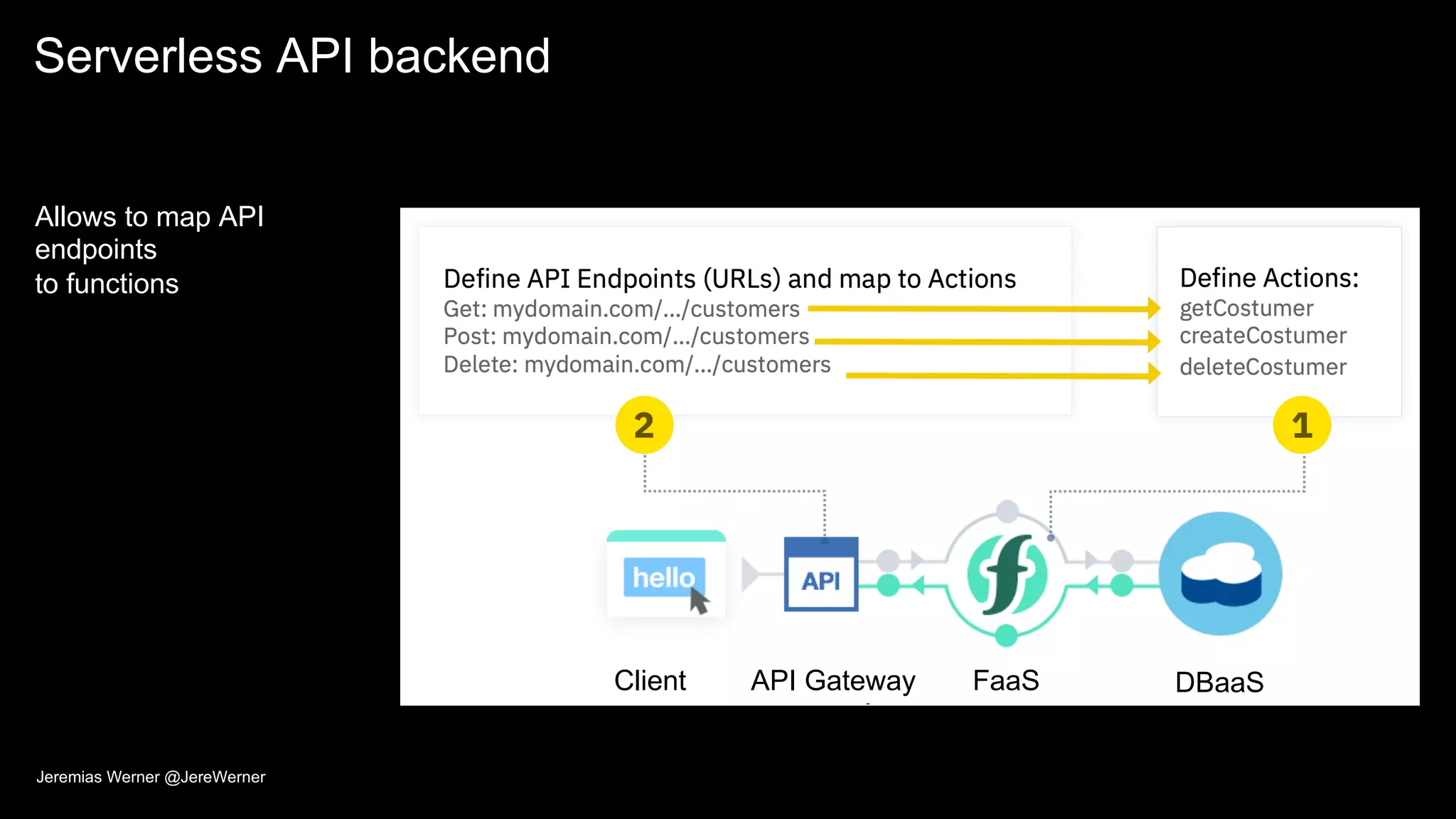

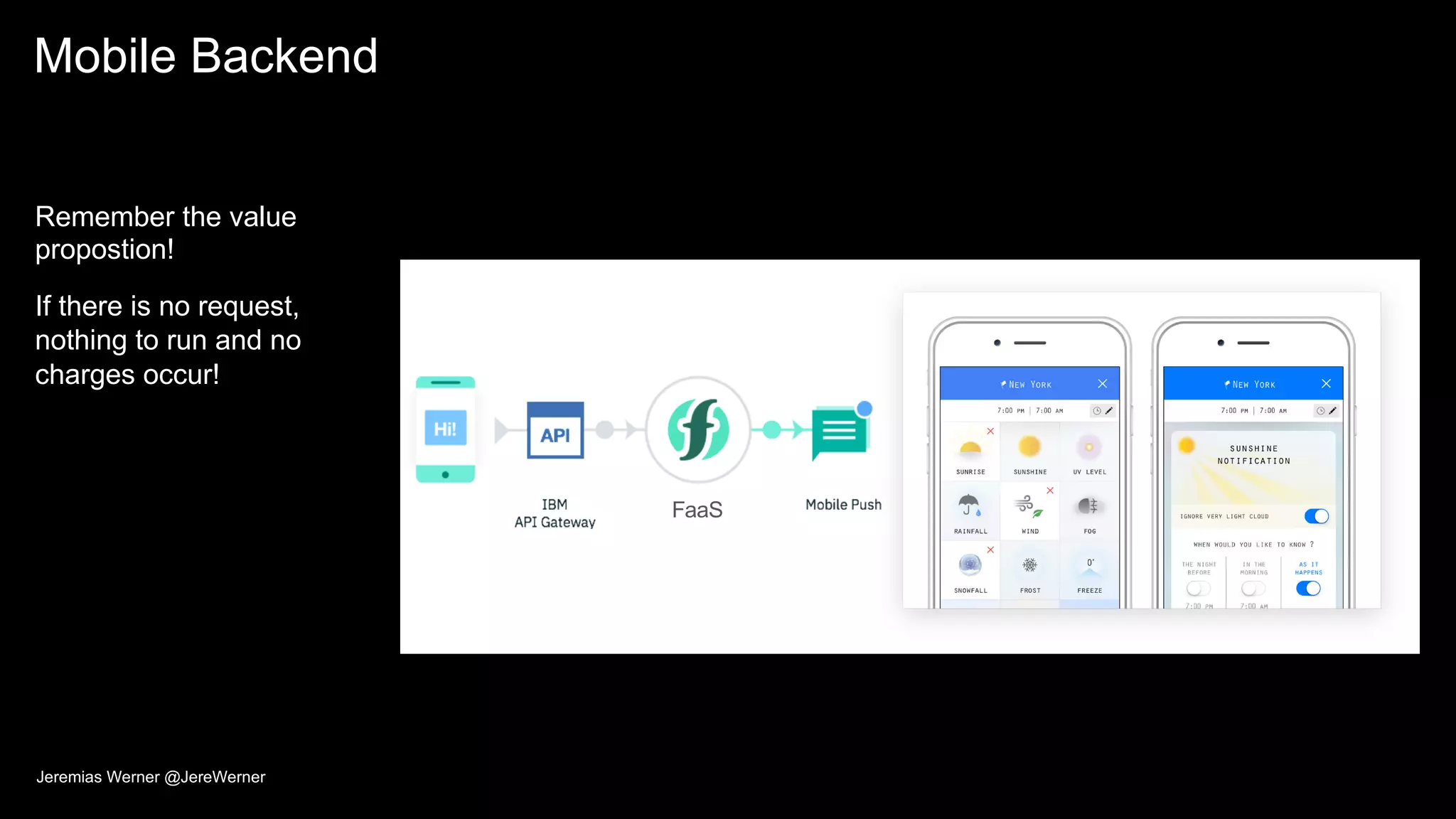

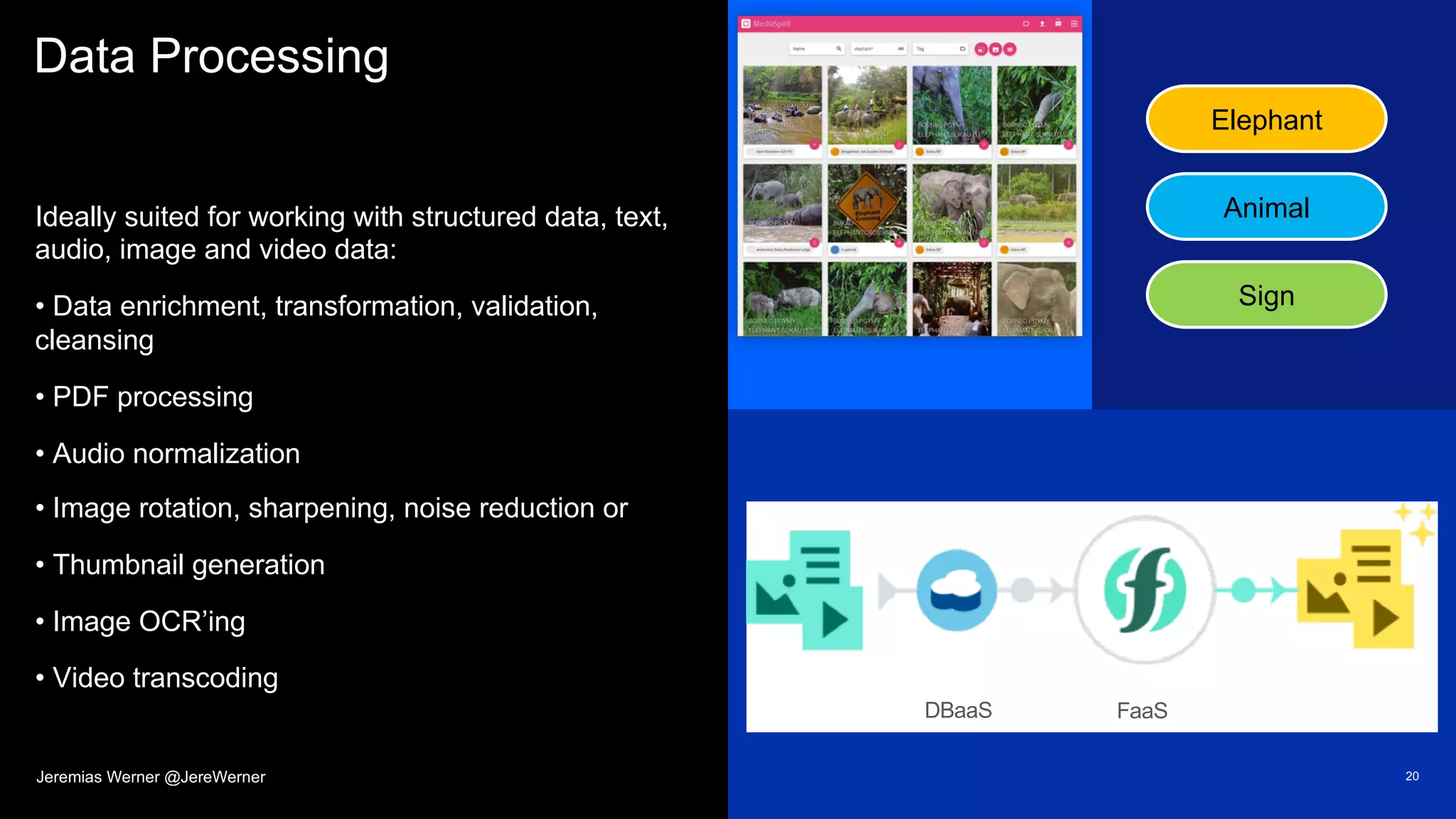

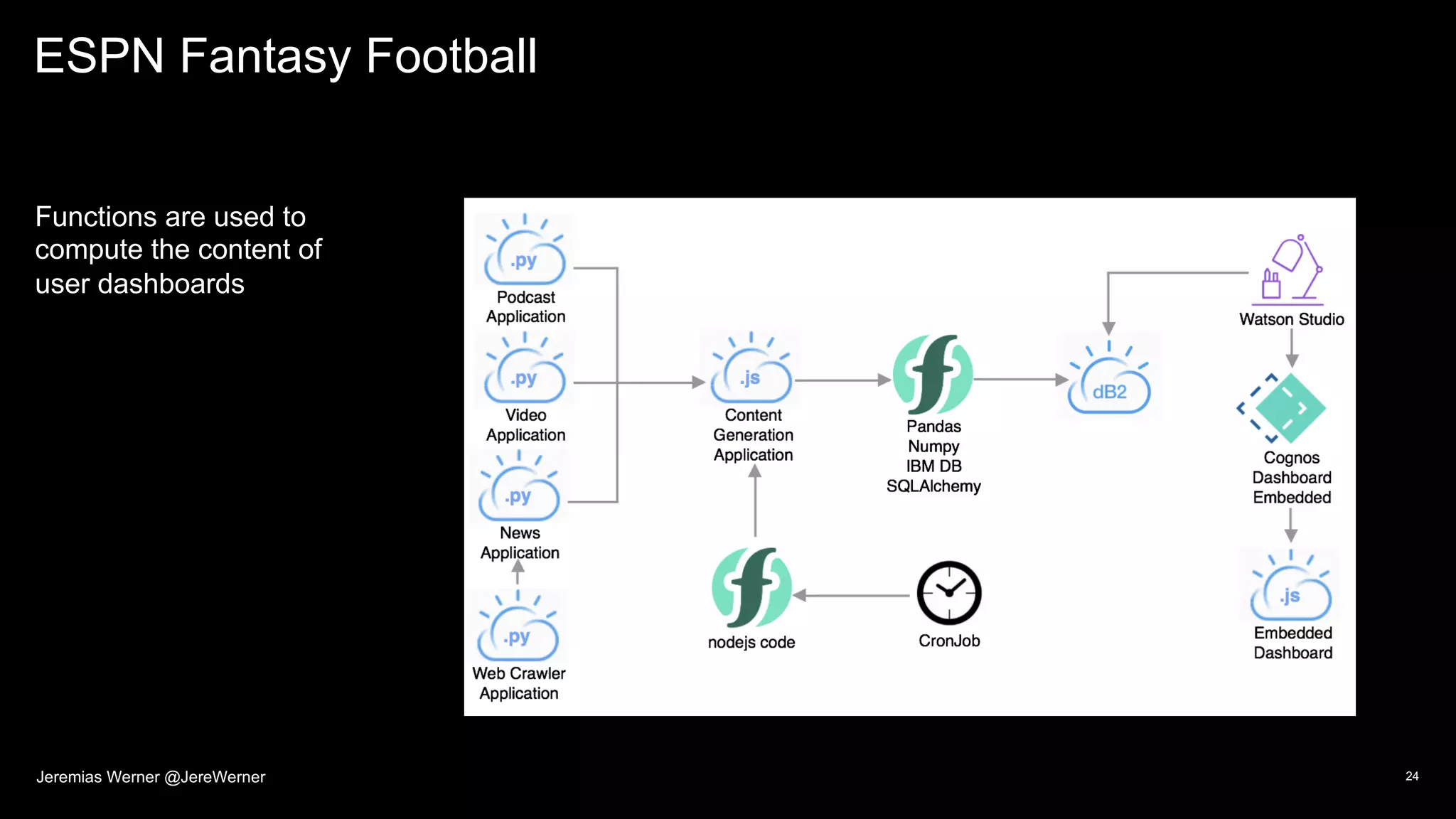

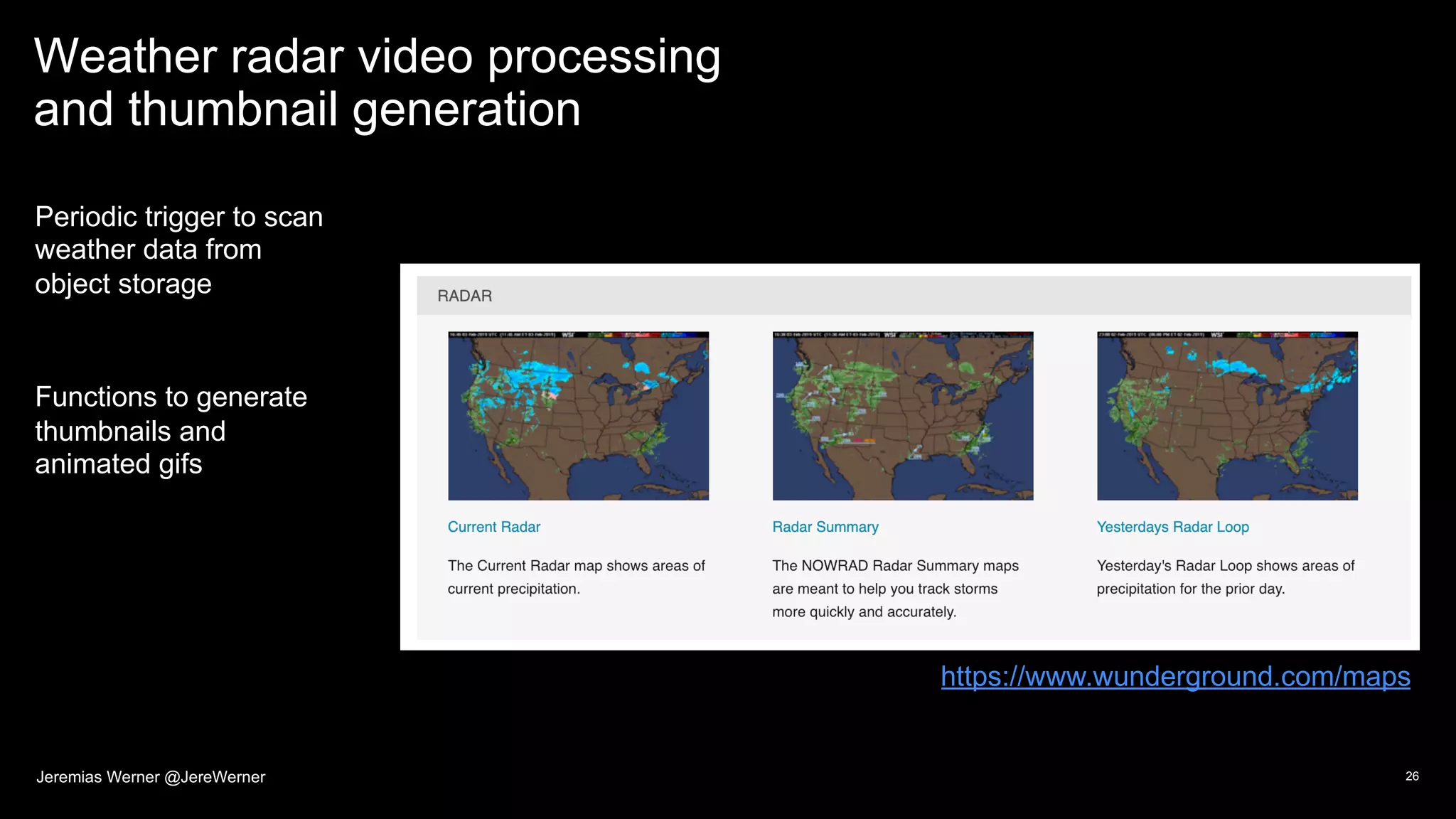

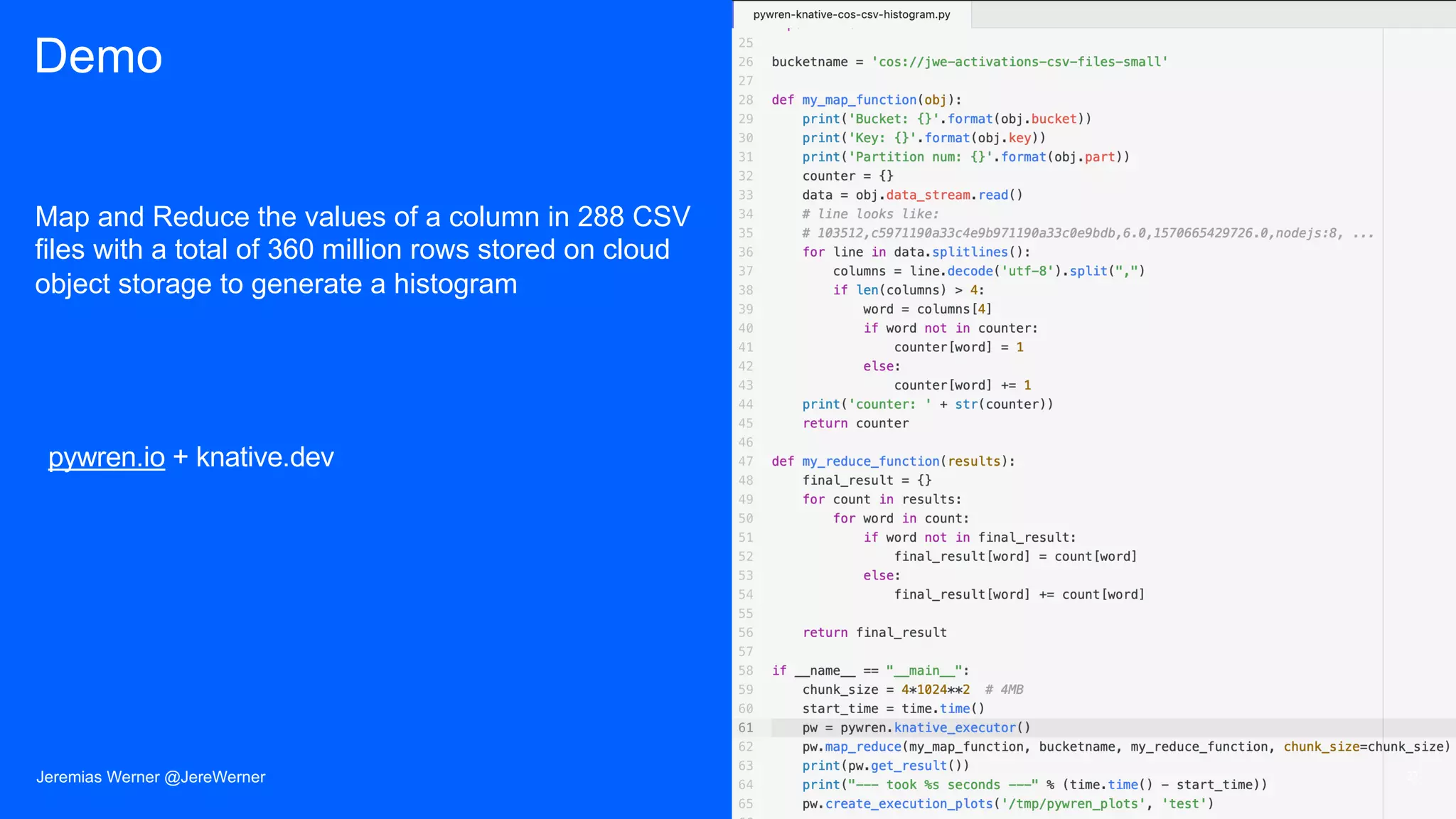

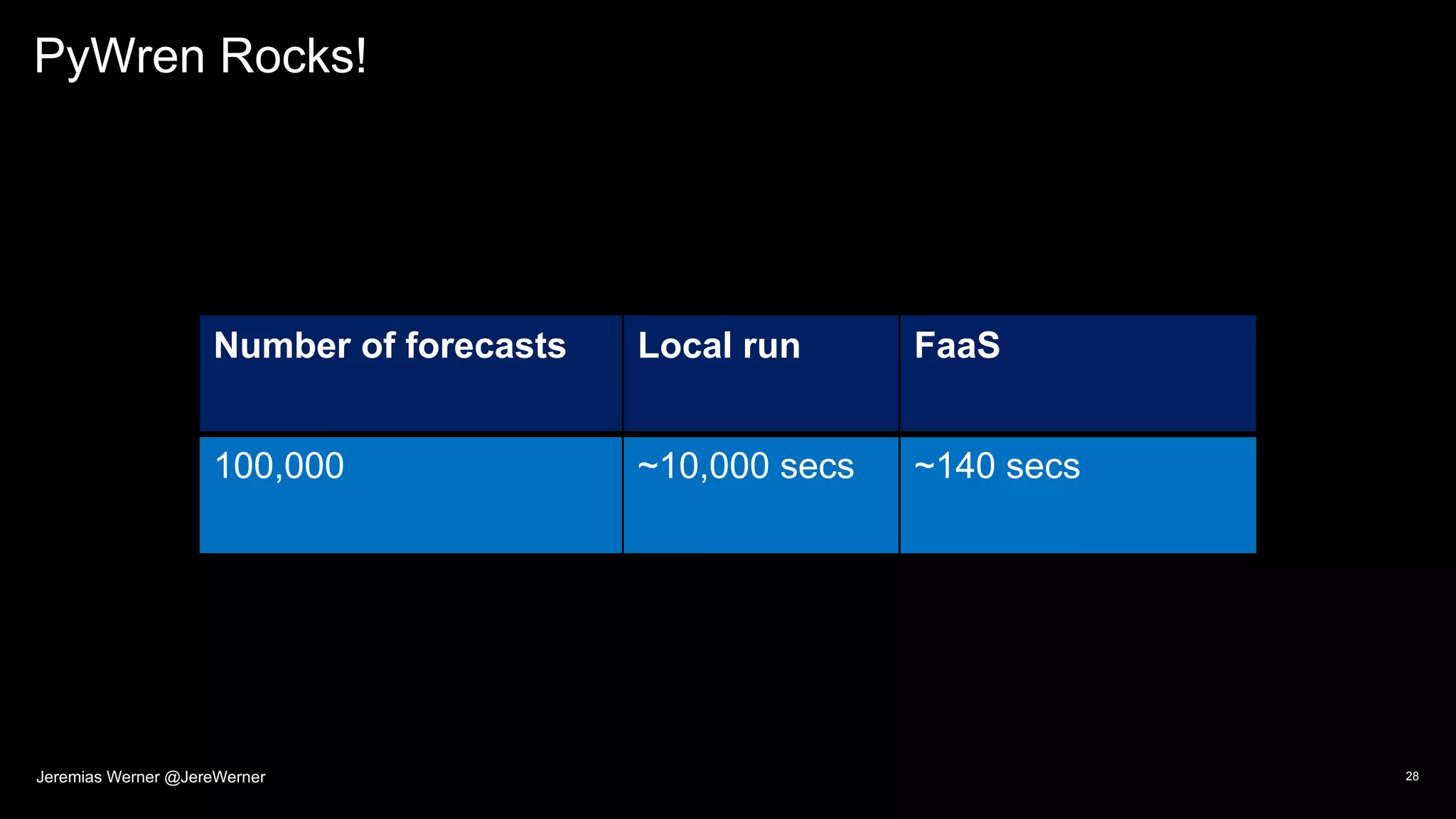

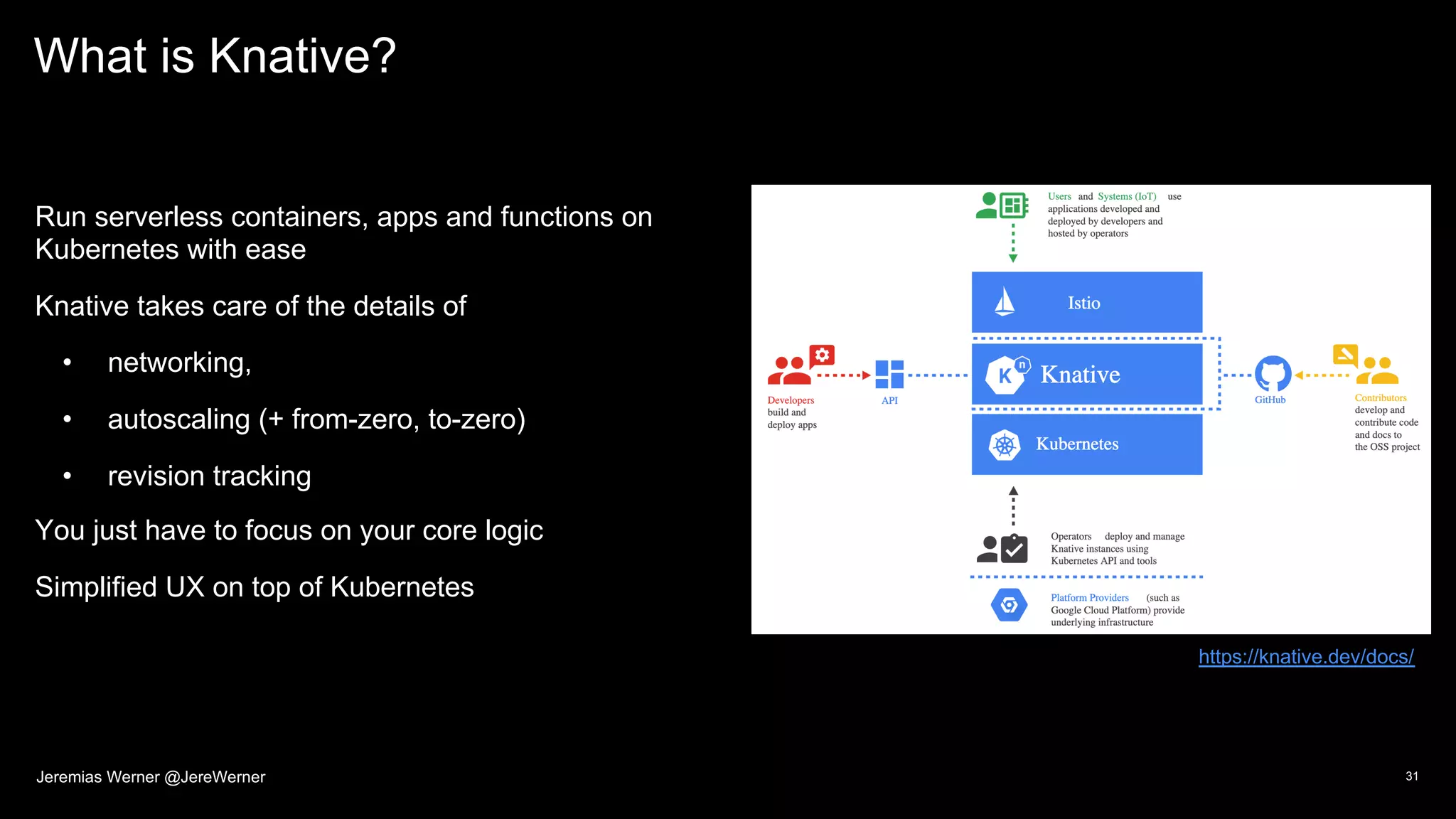

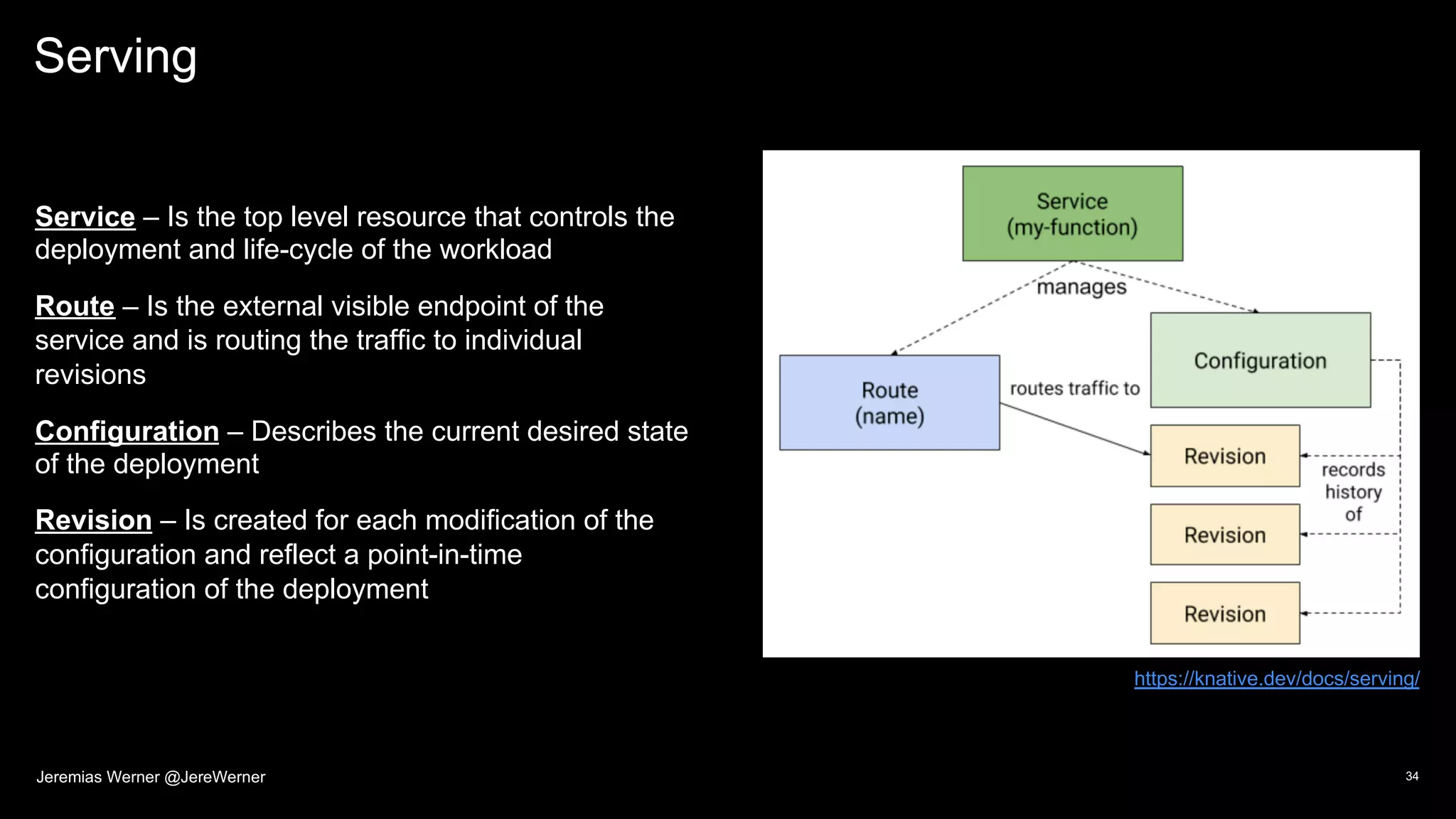

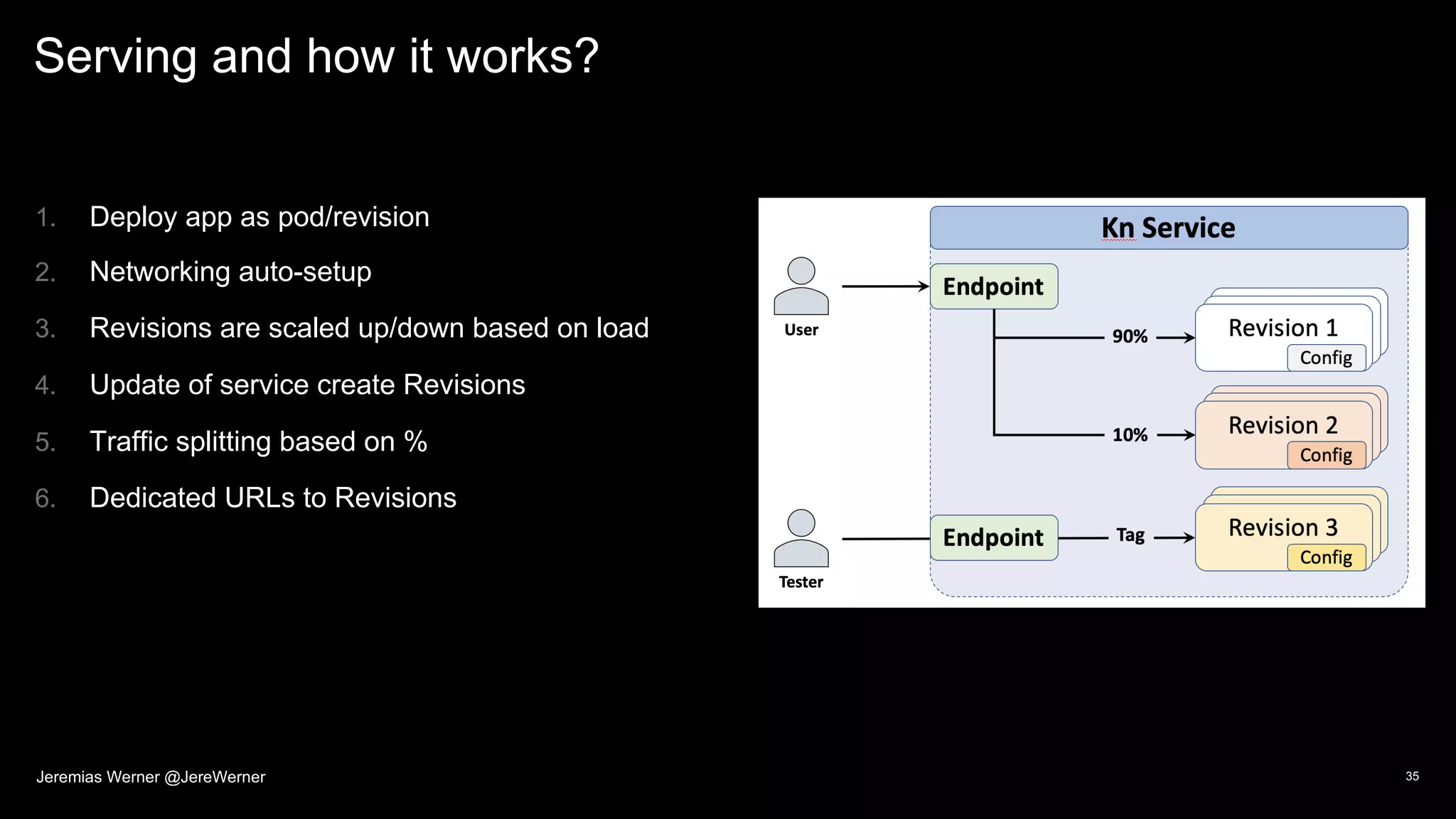

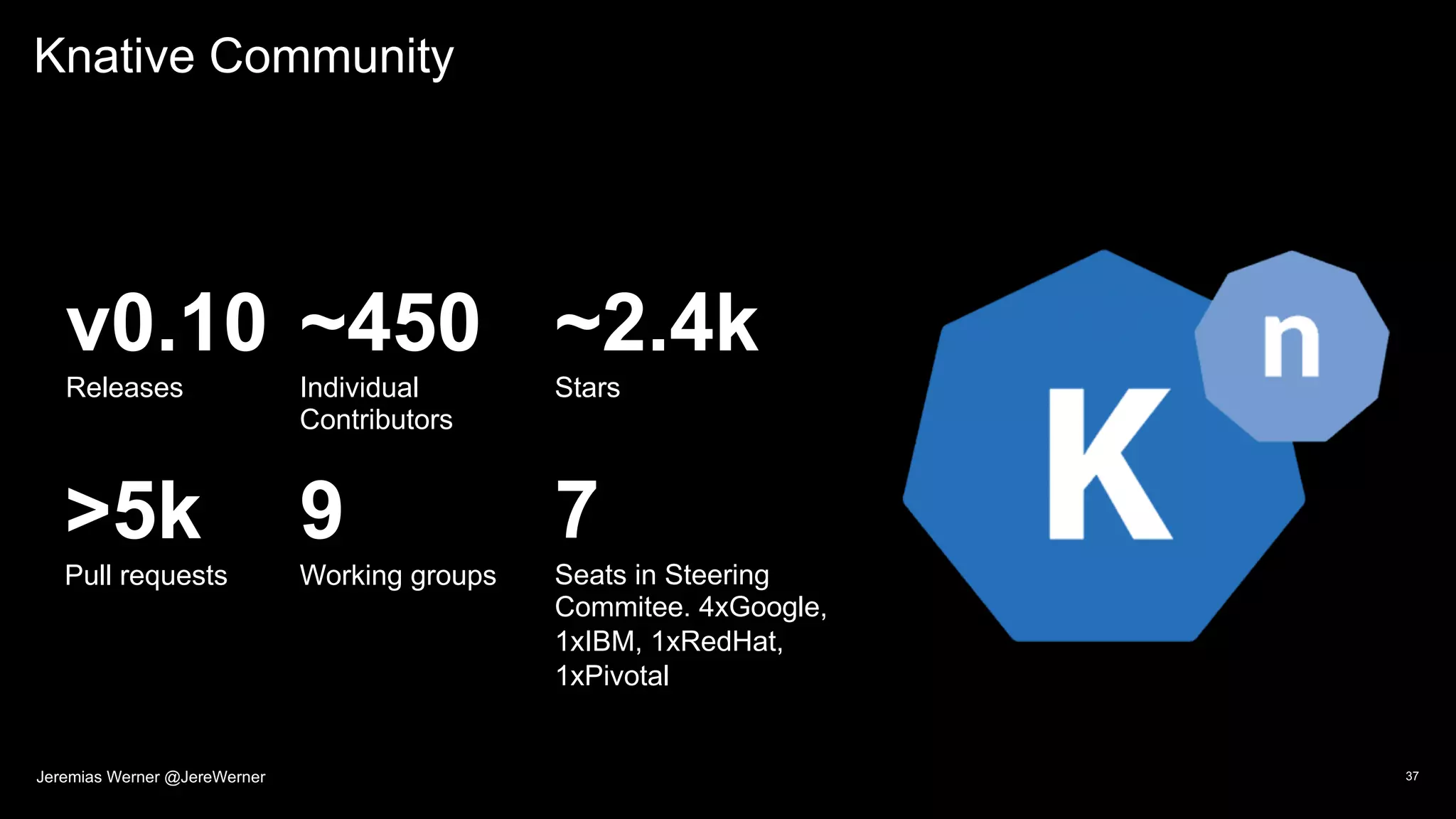

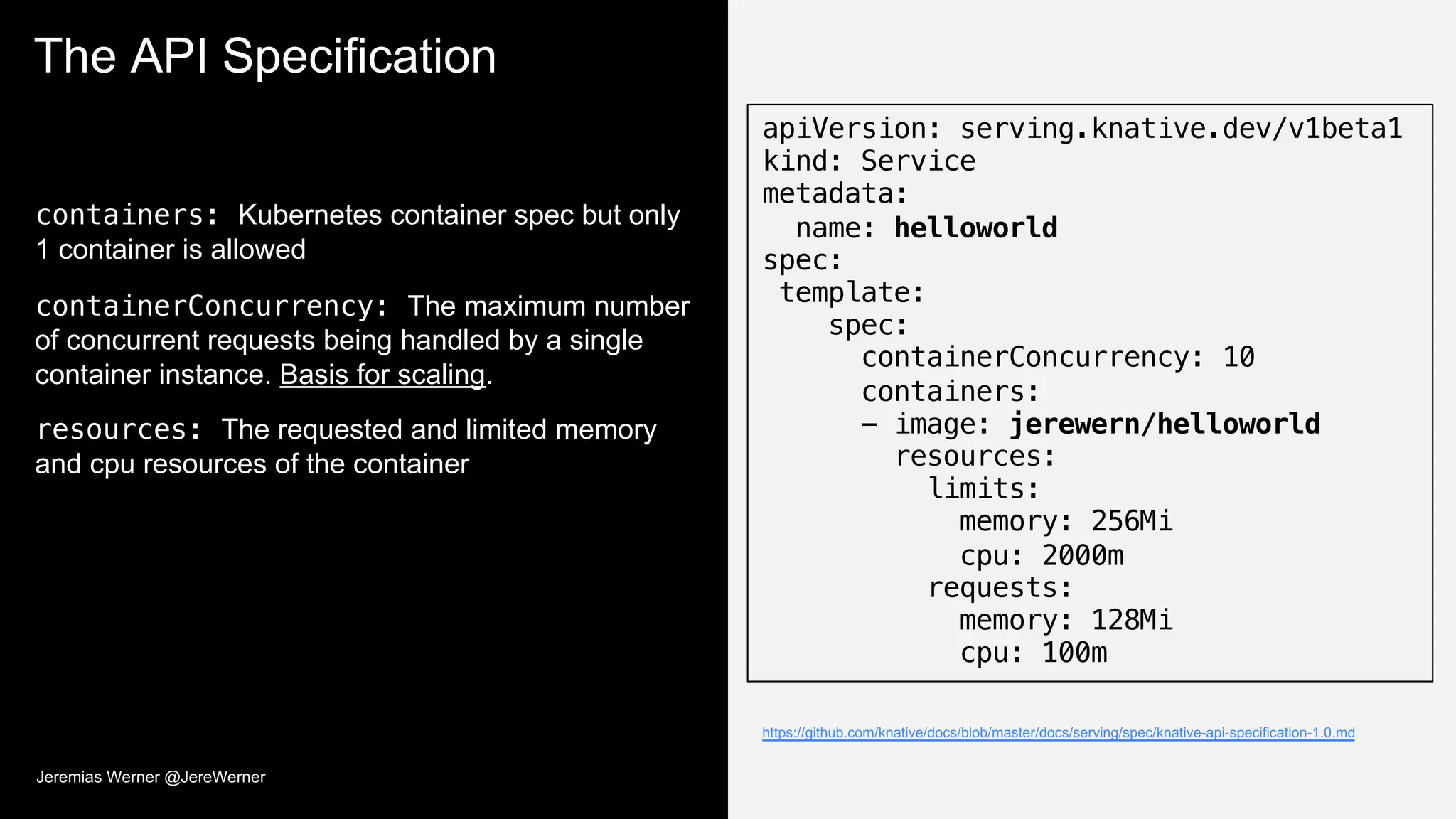

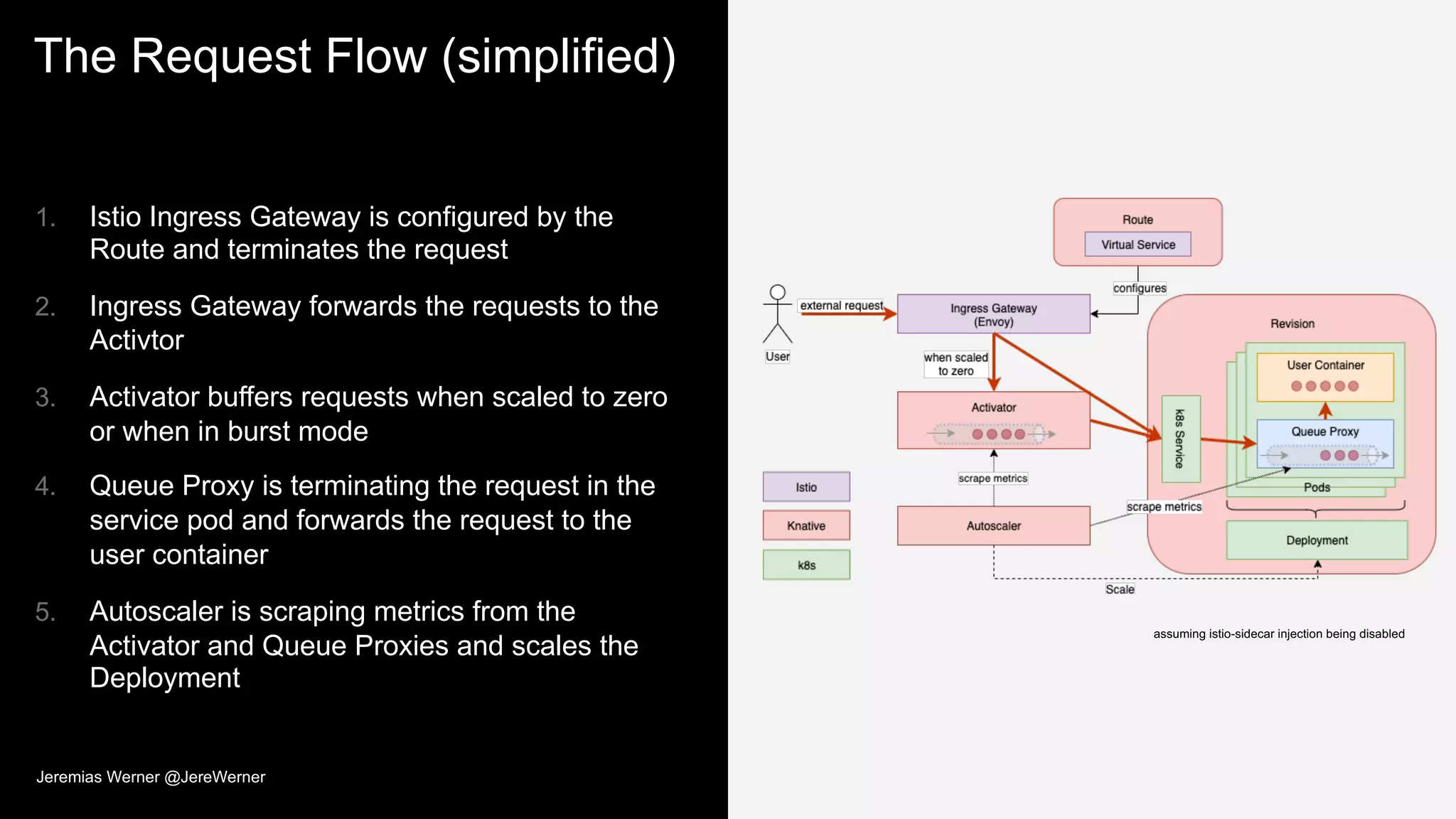

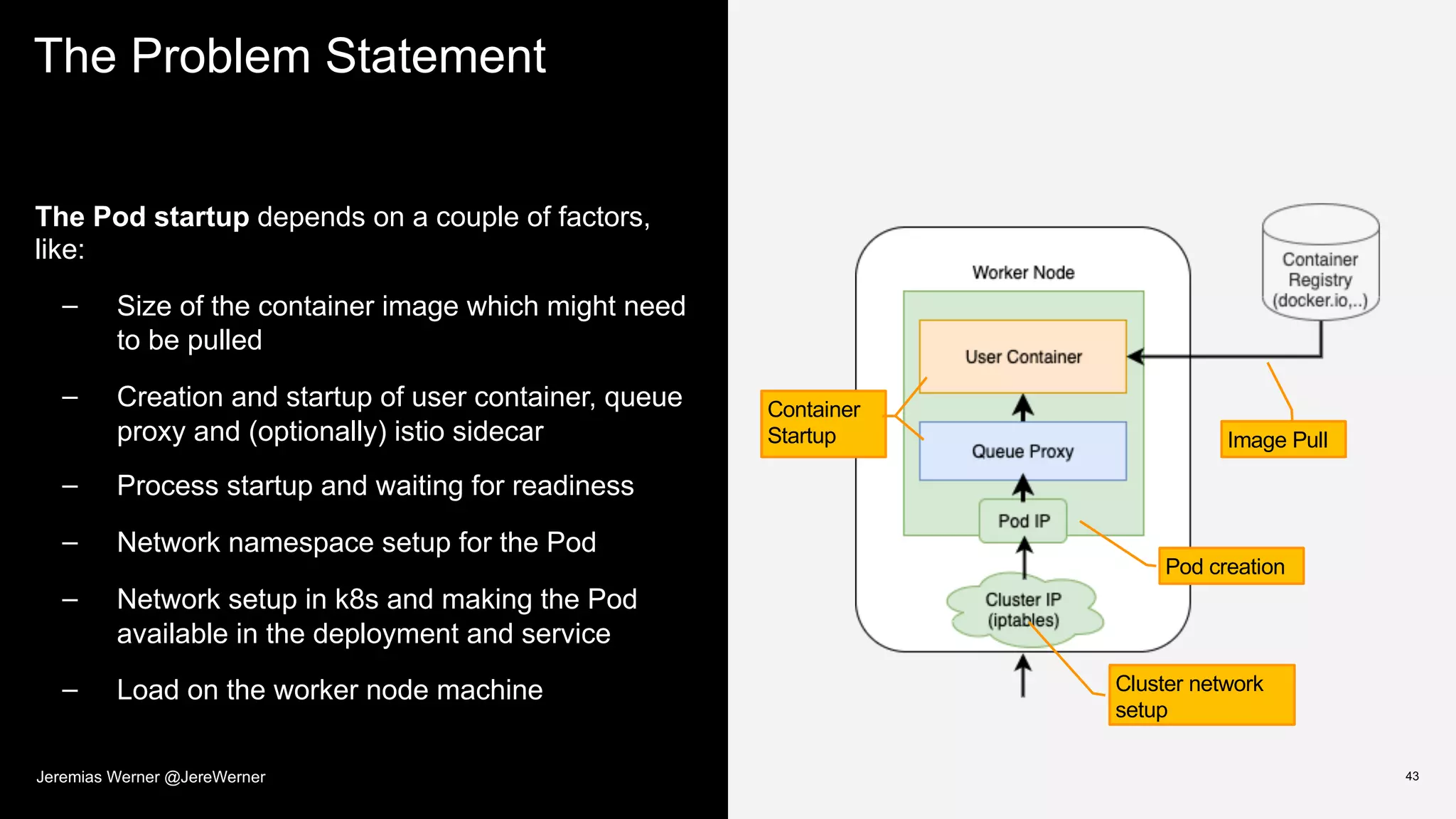

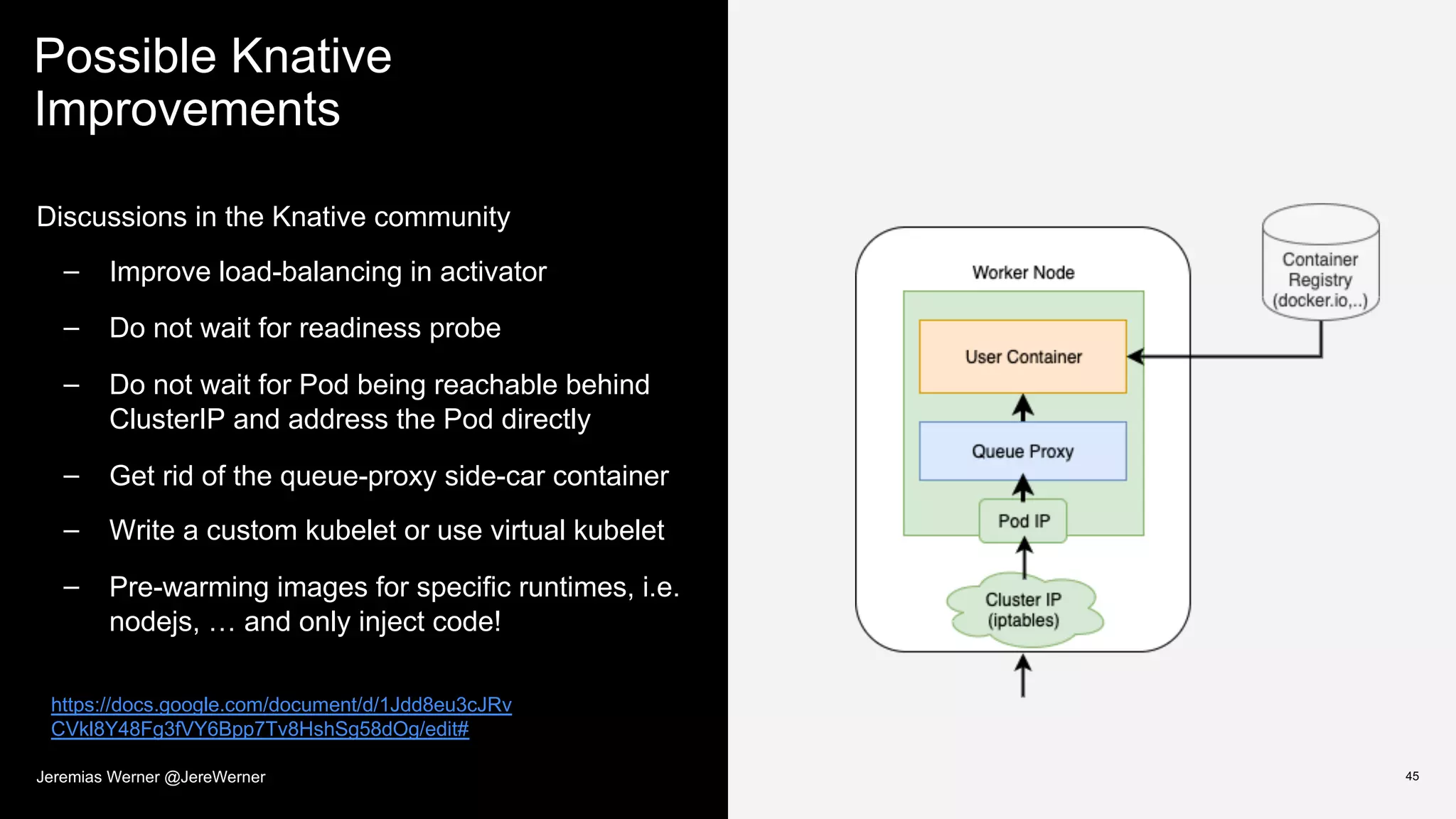

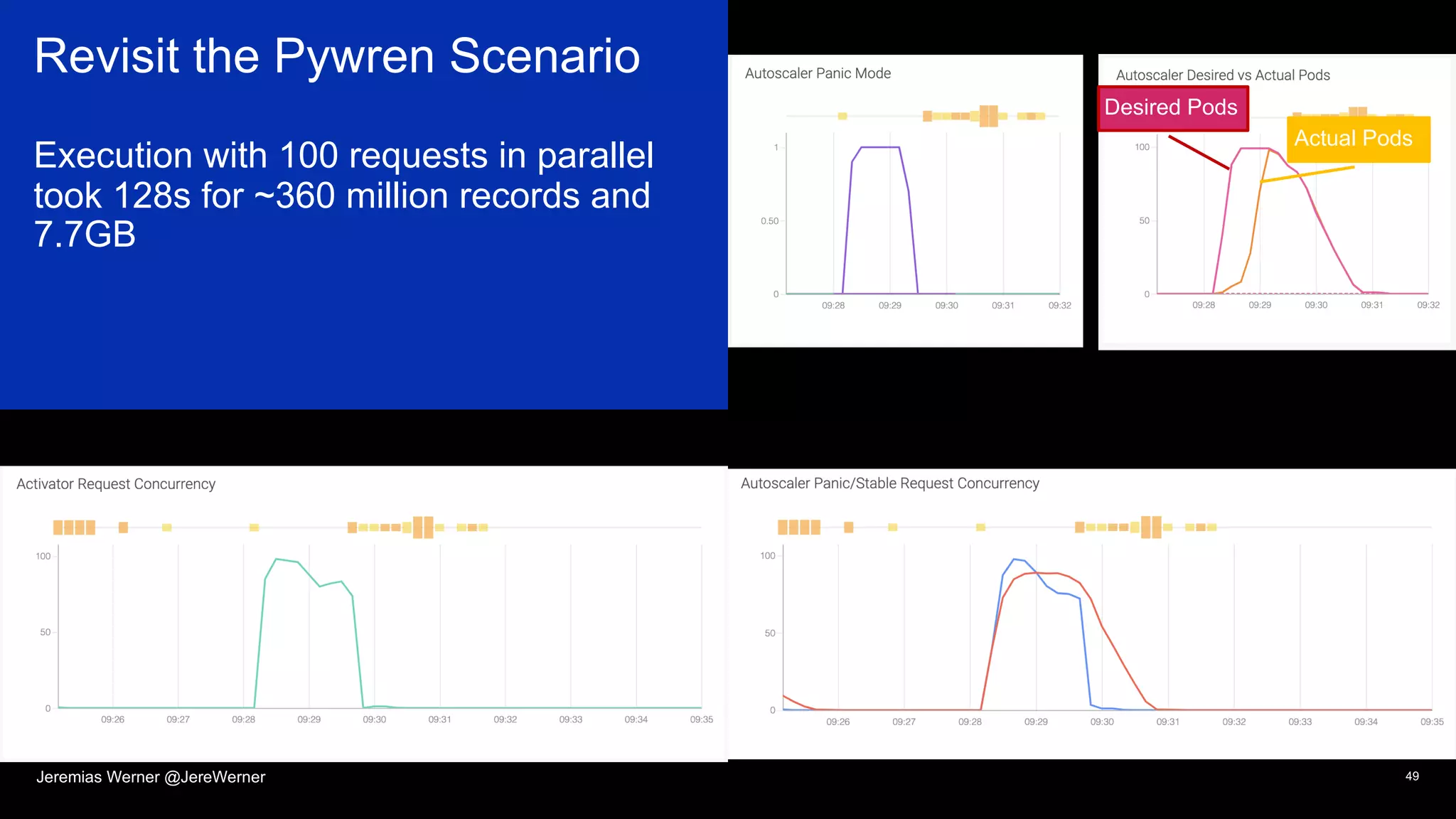

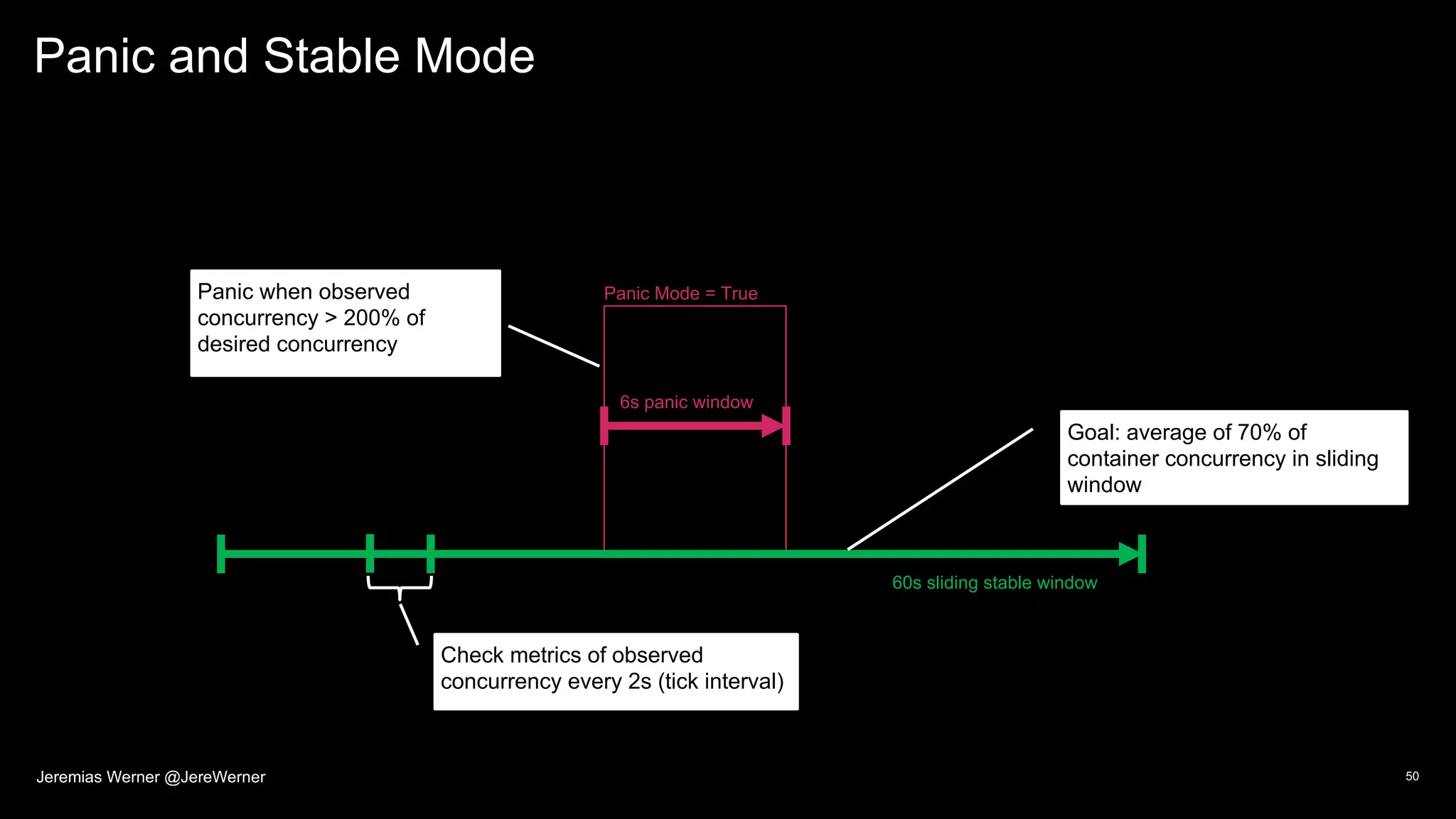

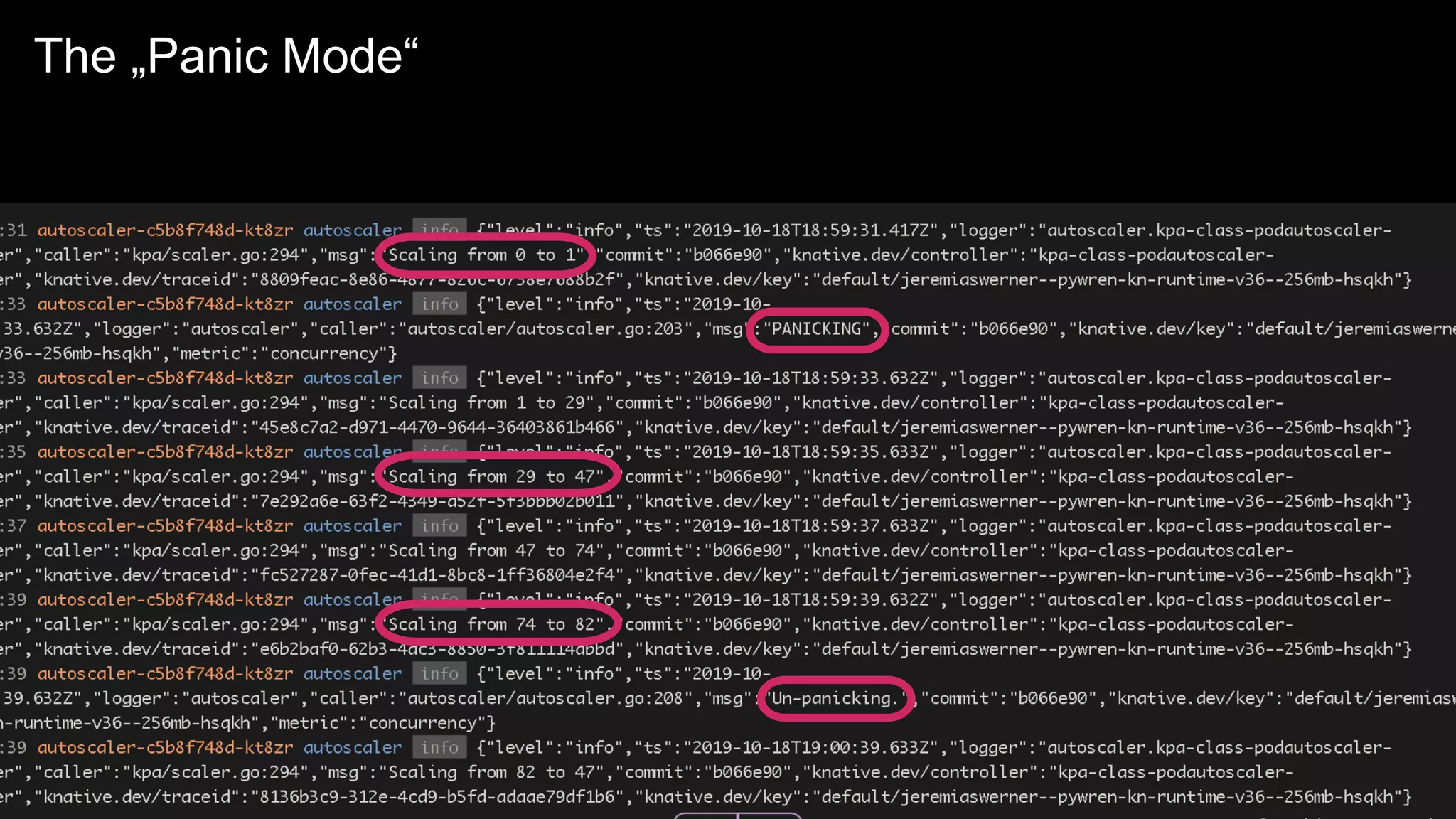

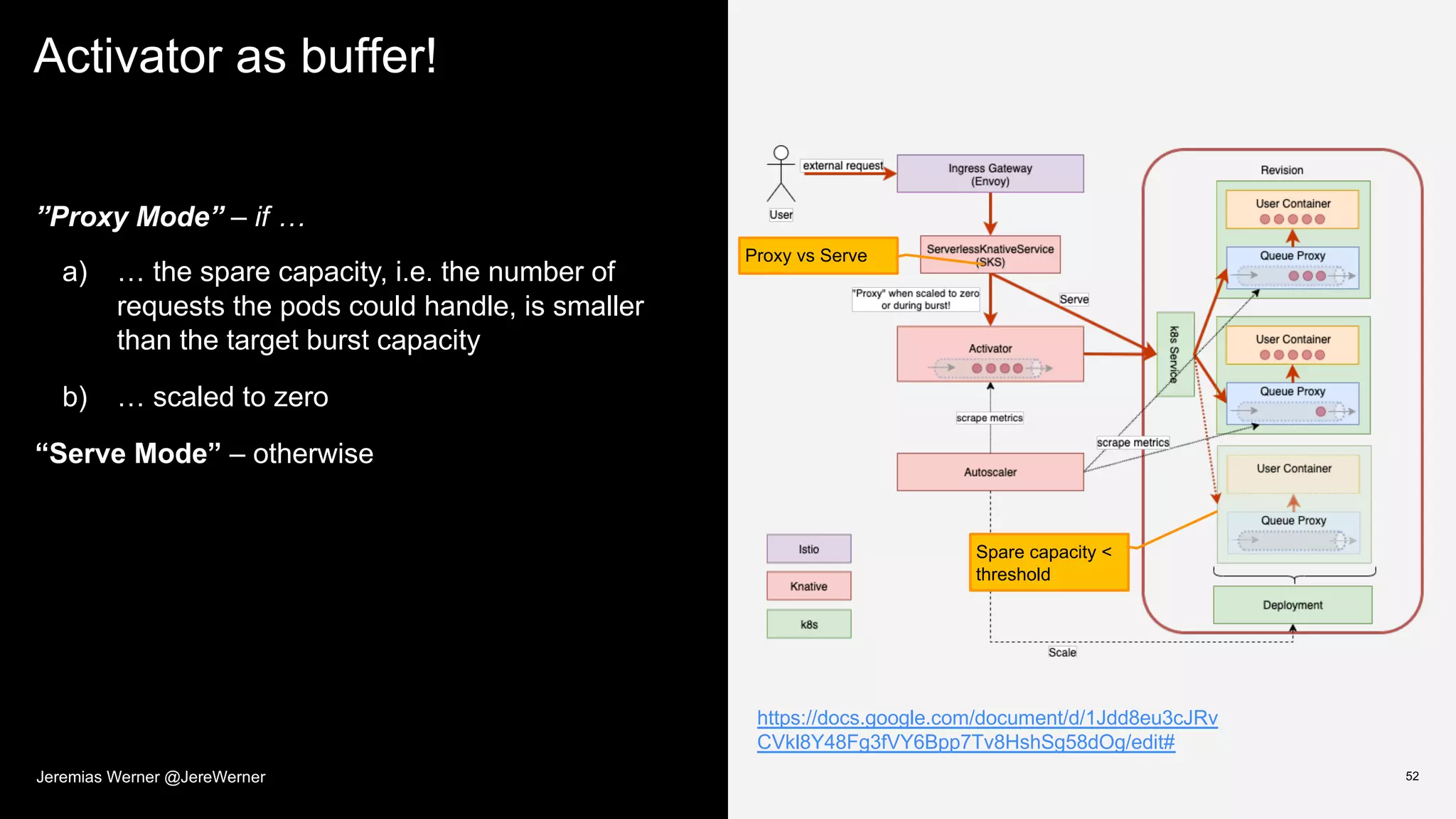

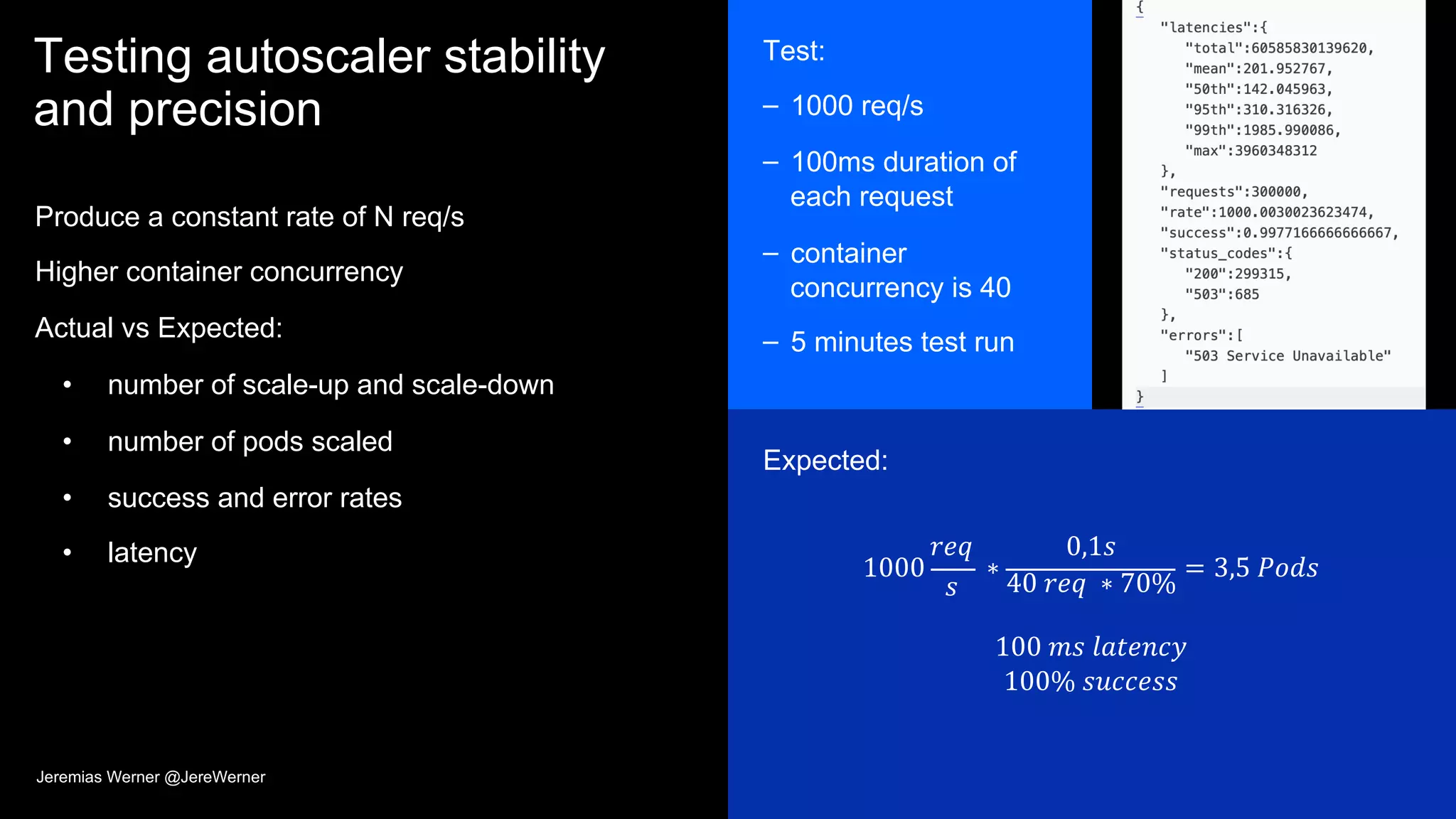

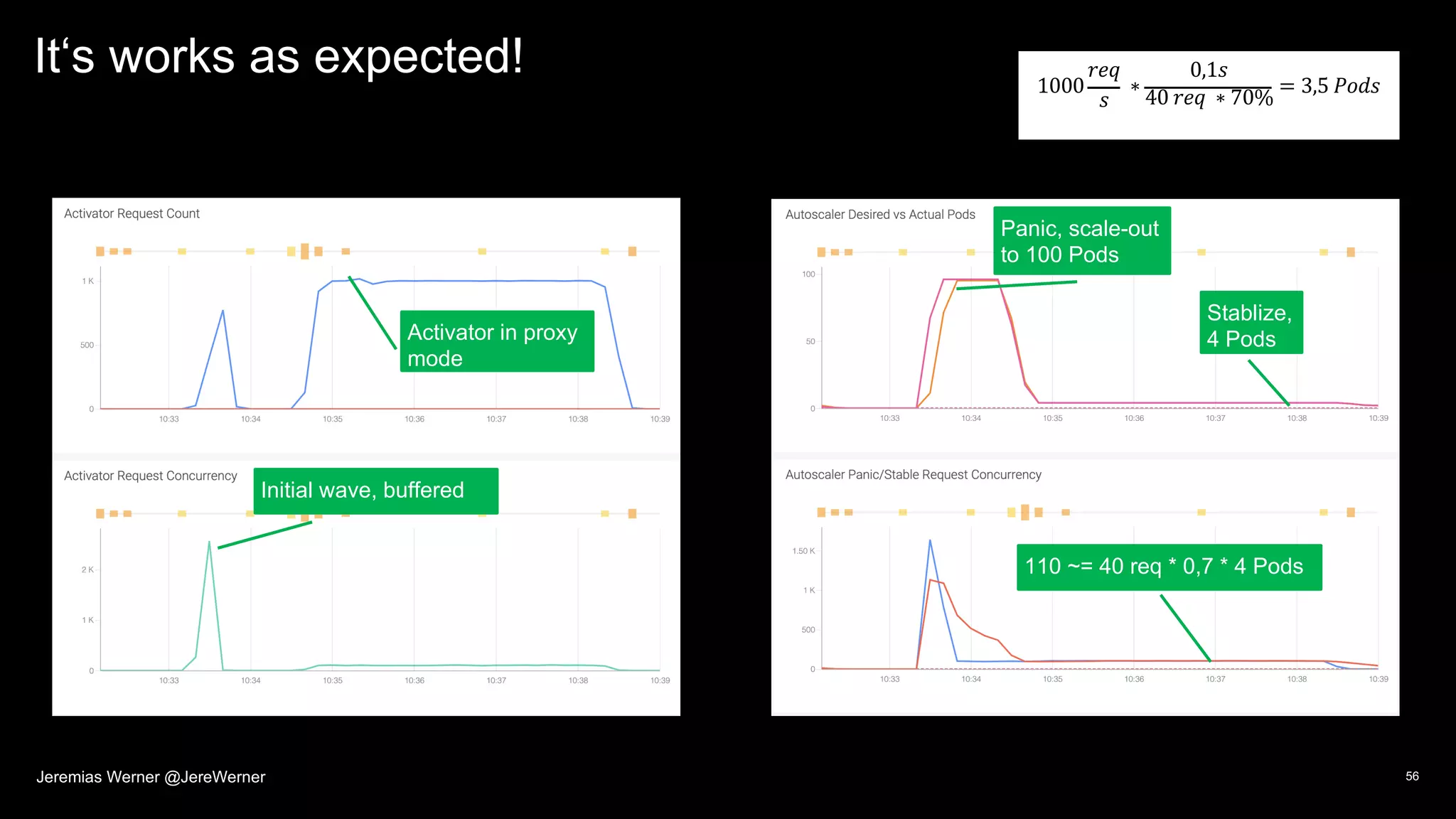

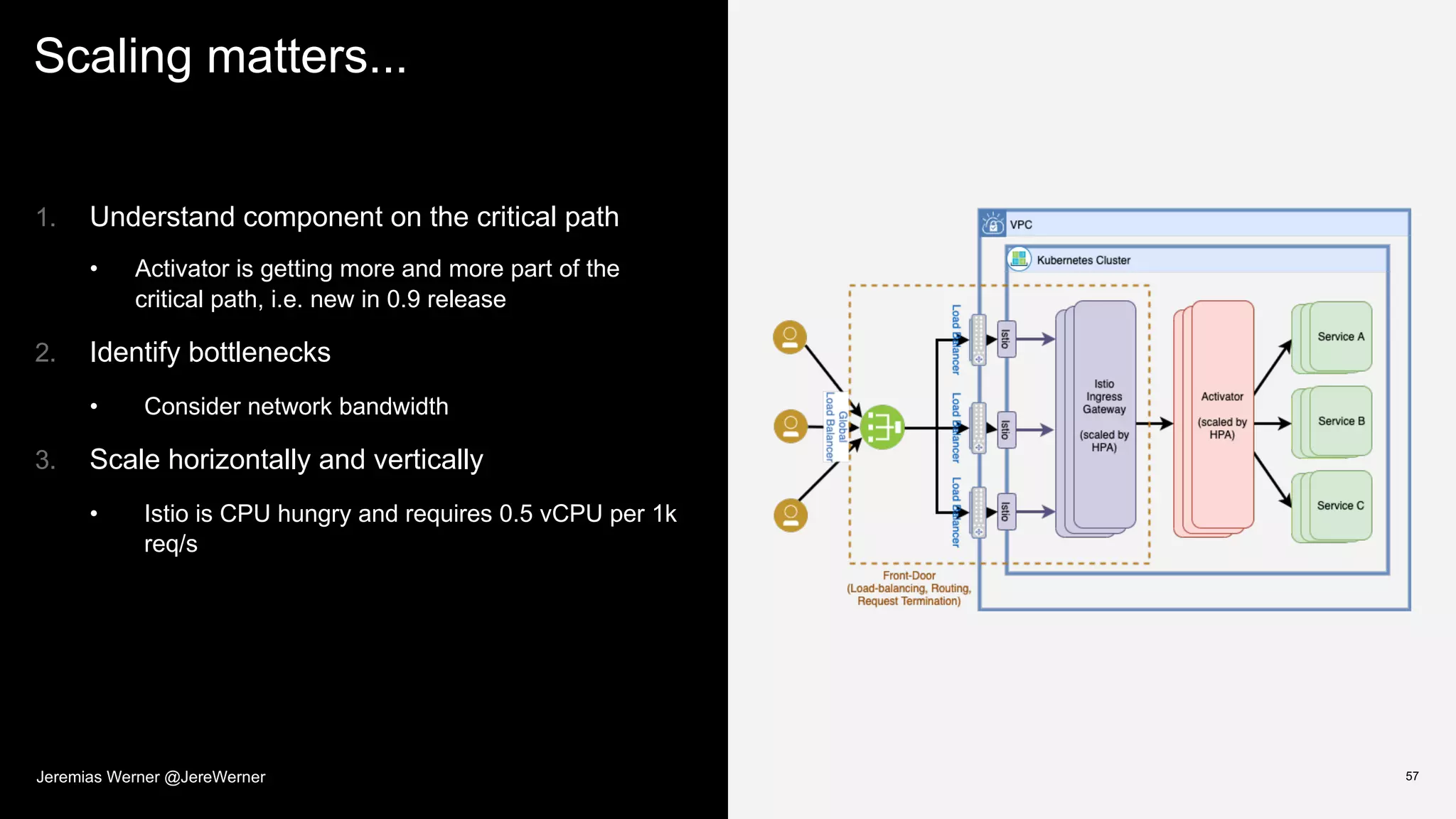

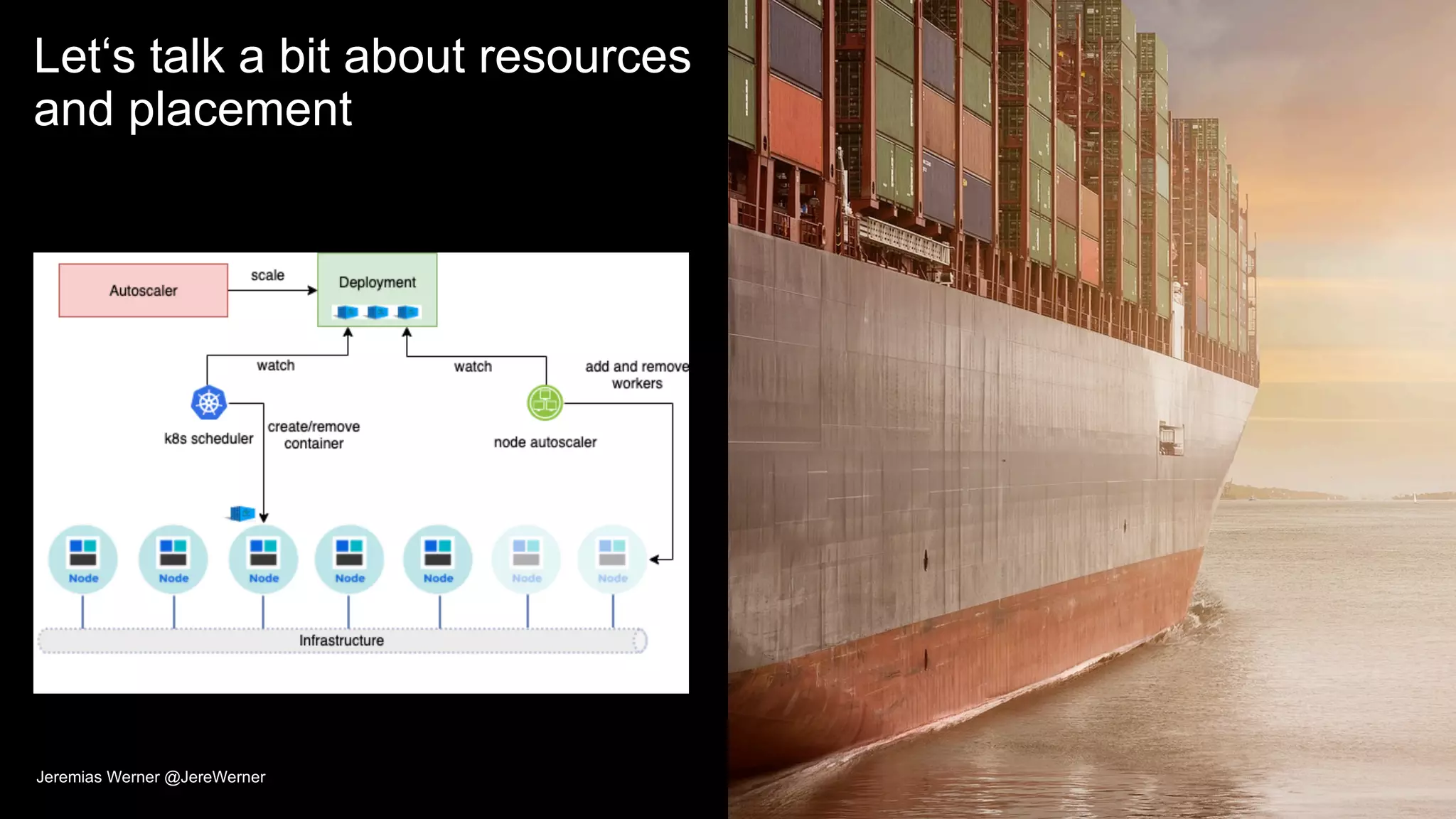

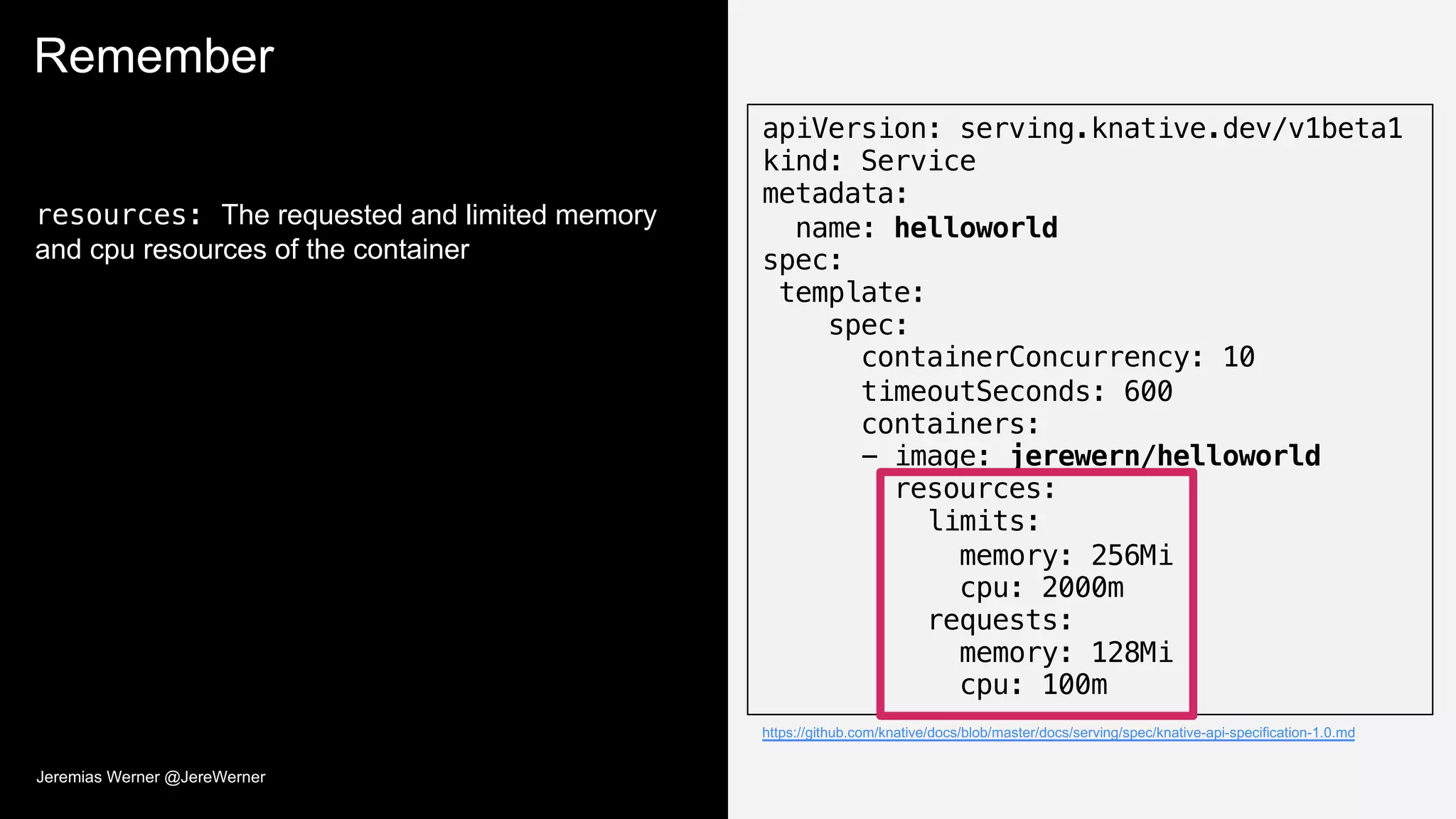

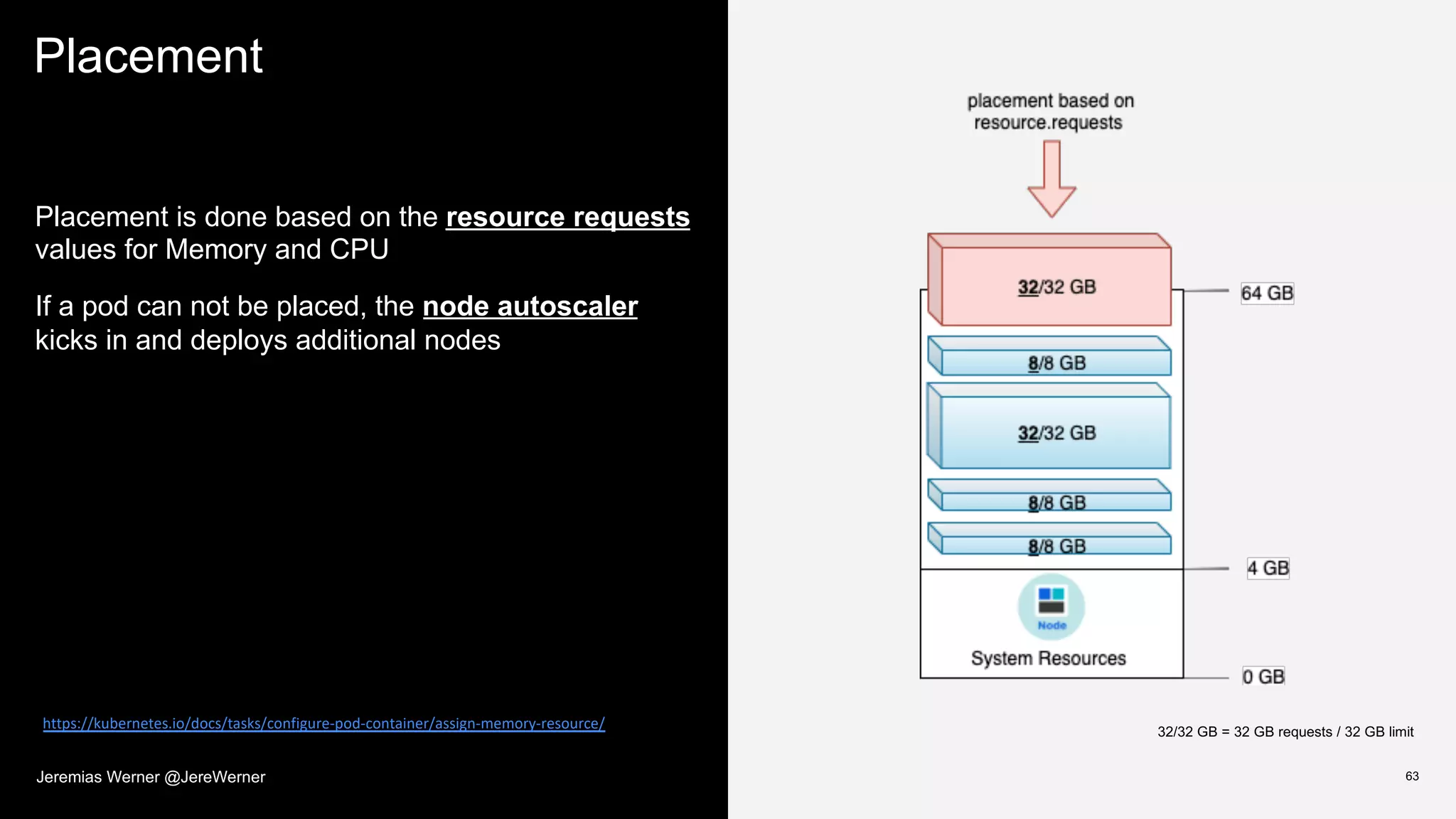

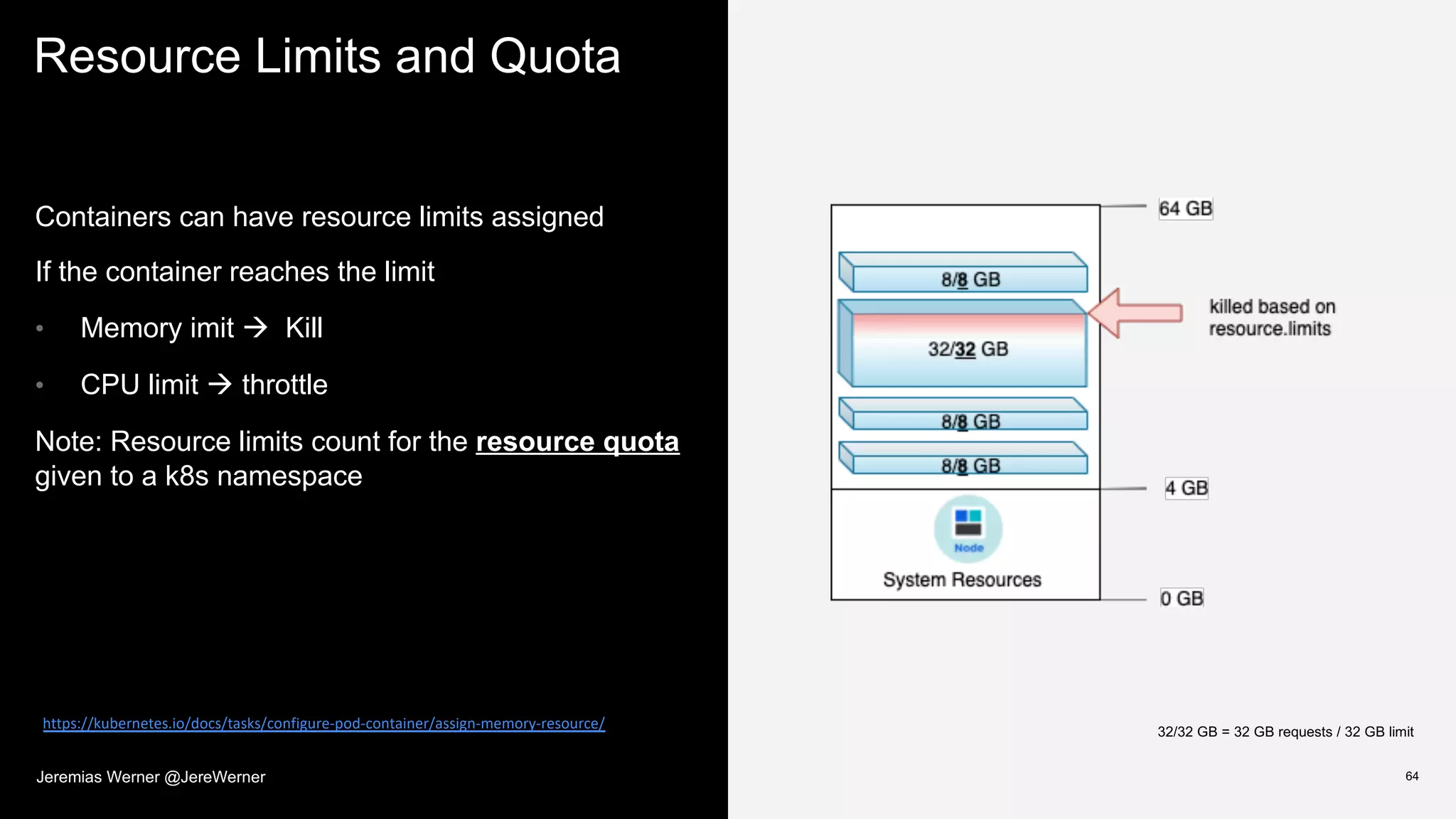

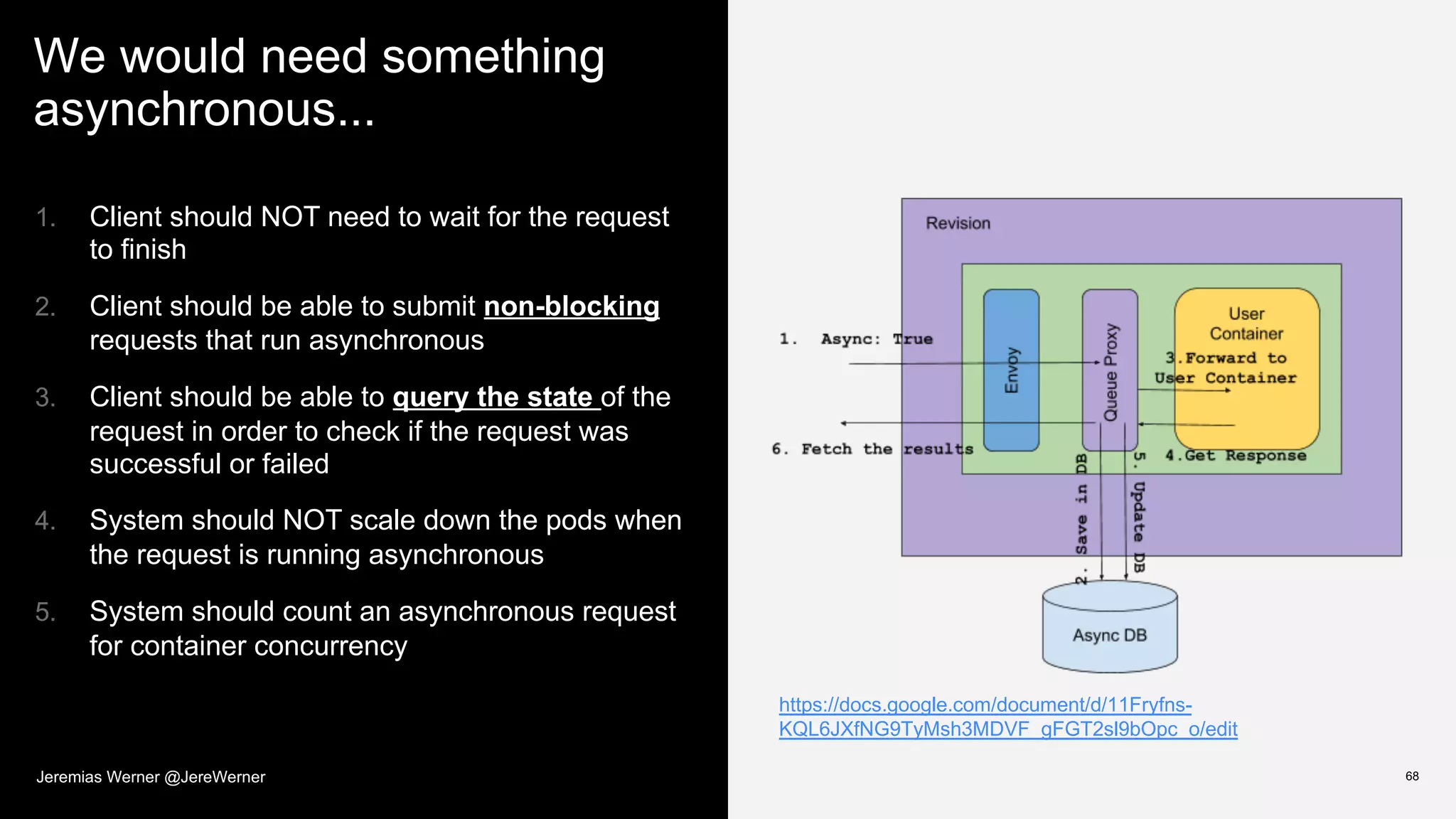

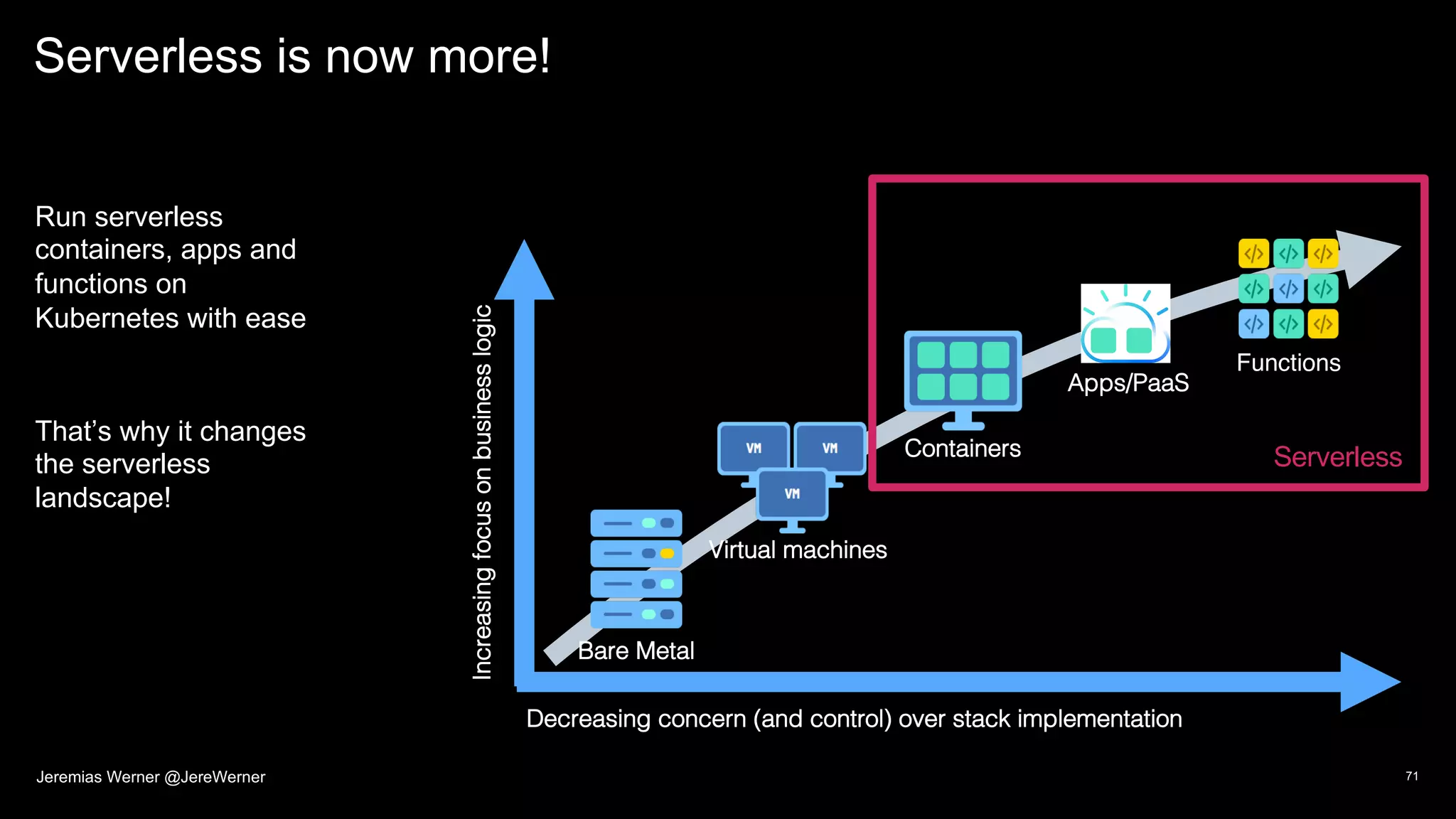

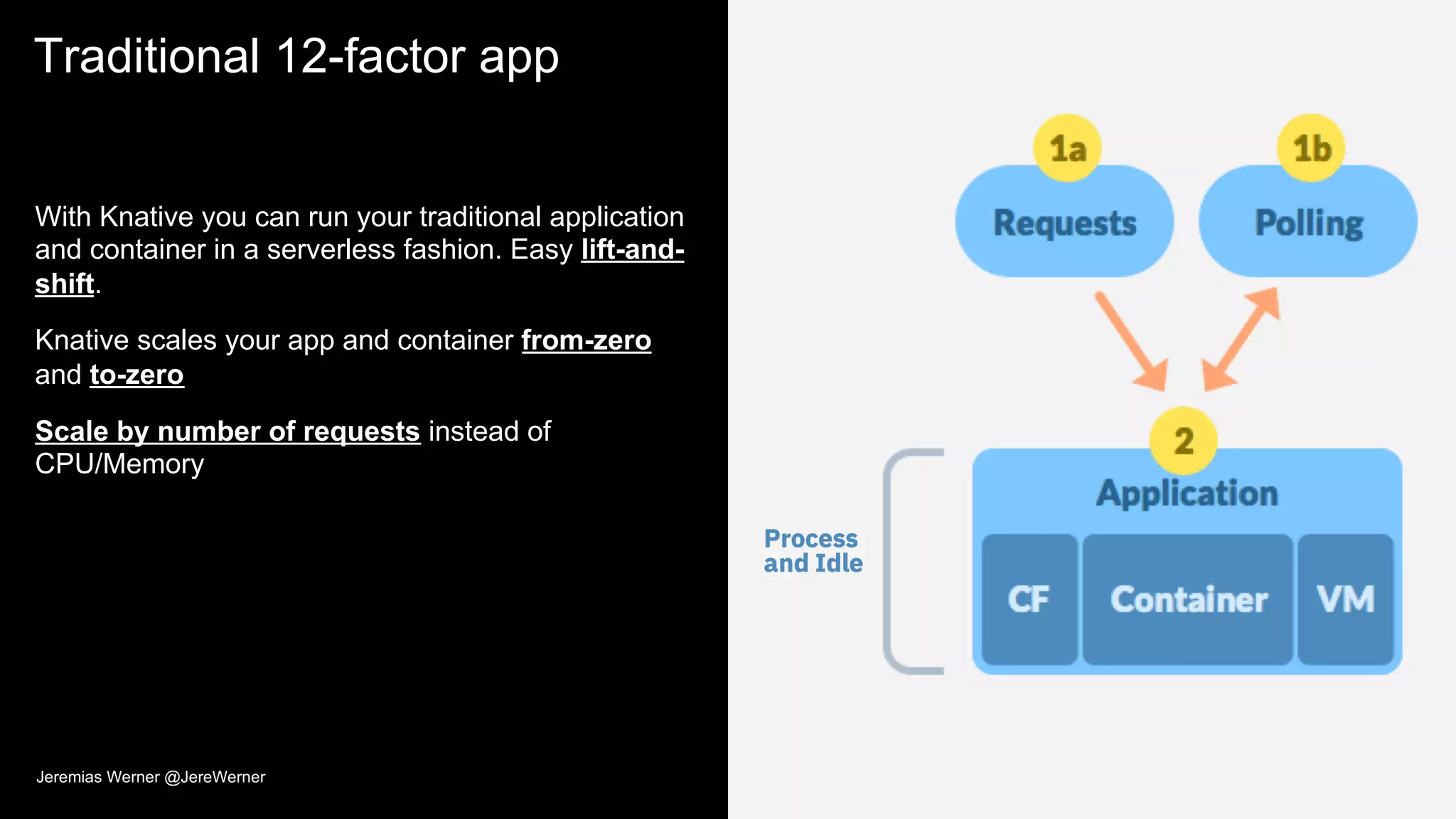

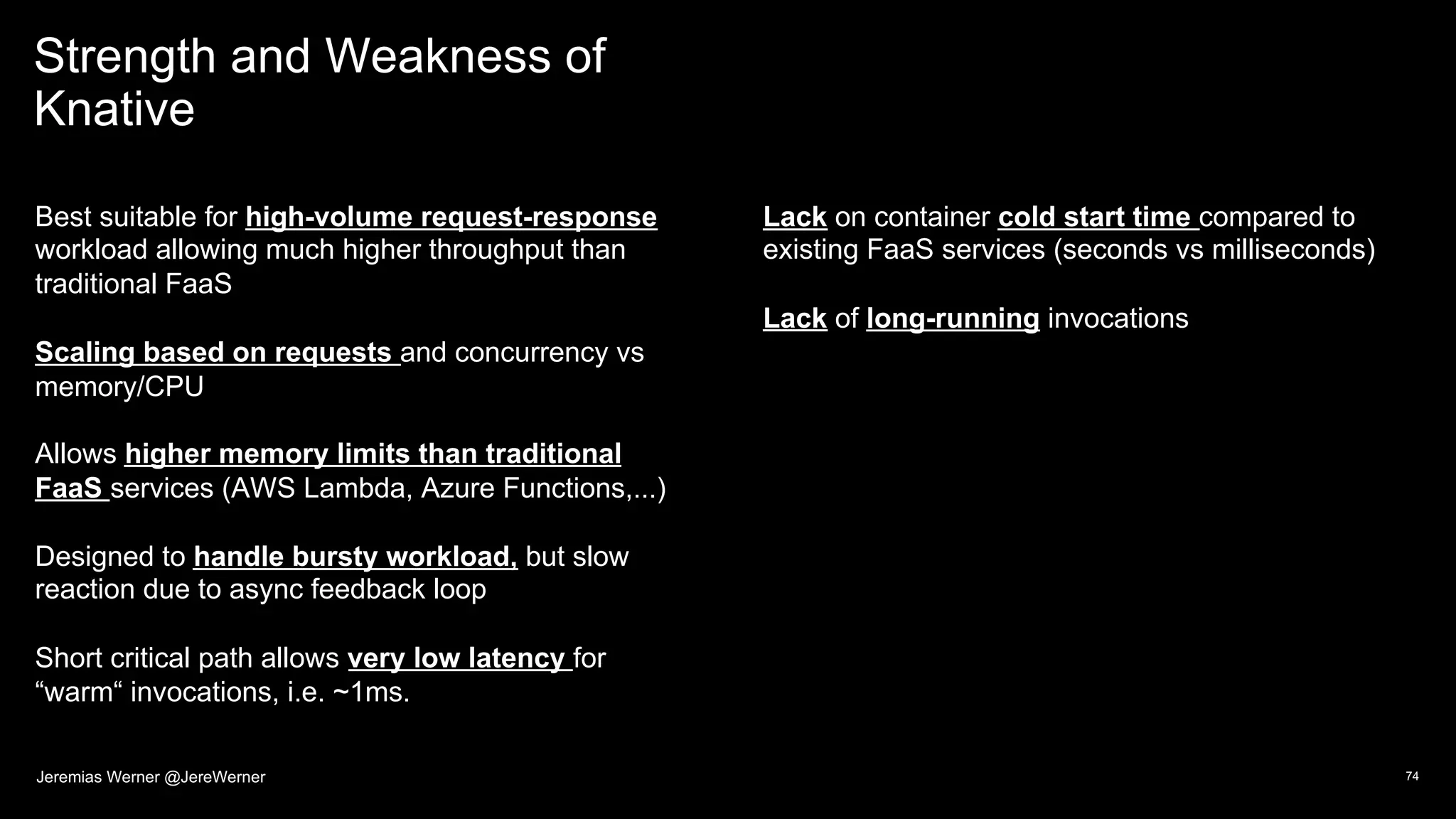

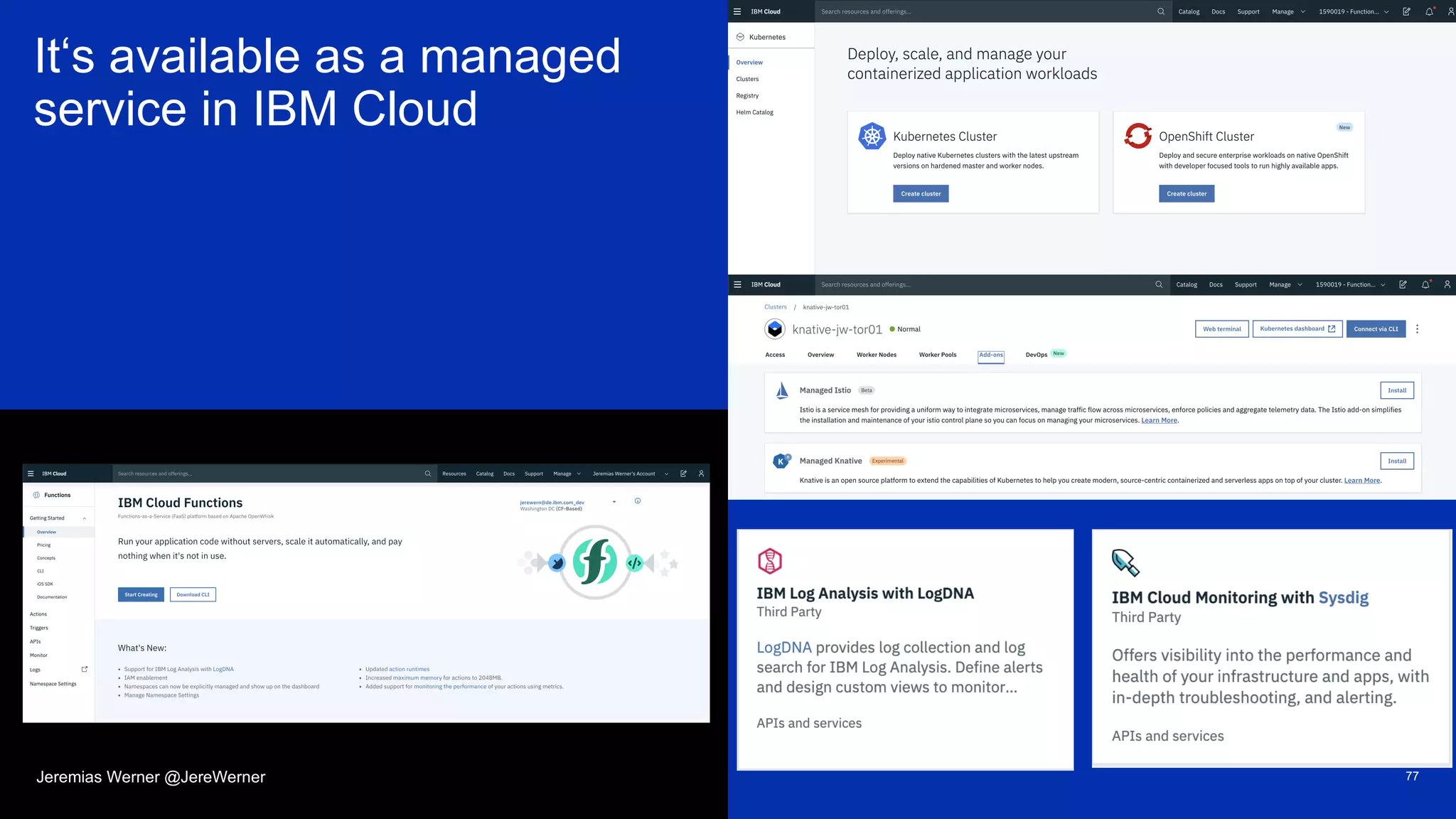

The document discusses serverless technology, focusing on Knative and how it simplifies deploying and scaling applications on Kubernetes. It covers the advantages and use cases of serverless architectures and compares traditional and serverless models in terms of cost and resource utilization. It also describes Knative's components and functionality and gives examples of customers that use it.