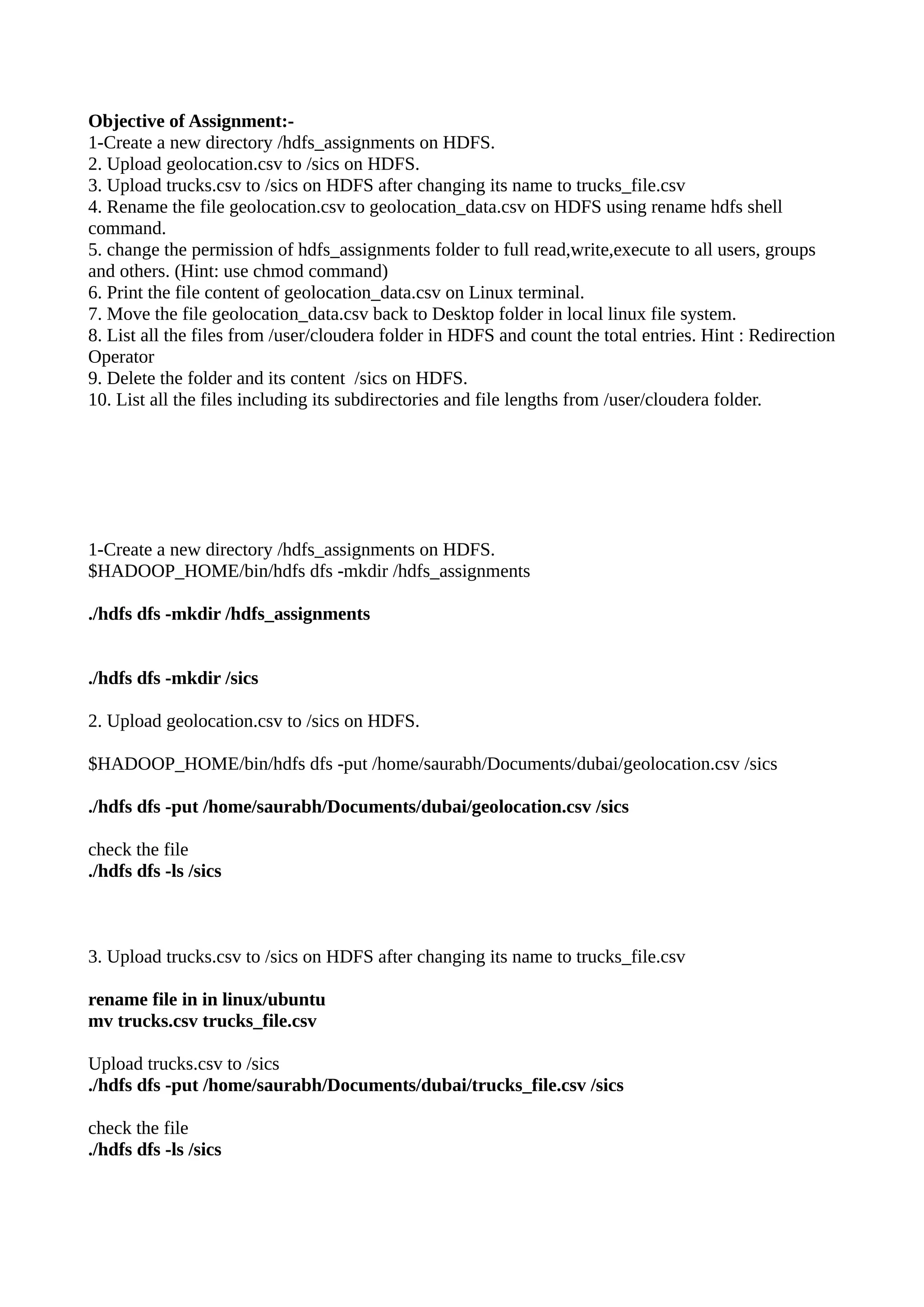

1. The document outlines how to manage files and directories in HDFS: creating a new directory, uploading and renaming files, changing permissions, printing file contents, moving files between HDFS and the local file system, listing files and counting entries, and deleting a folder.

2. It provides commands to create a new directory in HDFS, upload two CSV files to that directory (changing the name of one during the upload), rename a file, change permissions, print a file's contents, move files, list and count files, and delete the directory.

3. The objective is completed through a series of HDFS management tasks run through the `hdfs dfs` file system shell, using subcommands such as `-mkdir`, `-put`, `-mv`, and `-ls`.
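
The tasks summarized above could be sketched as the following shell script. It is only an illustration, not the original document's commands: the HDFS directory `/user/demo/lab`, the file names `sales.csv` and `customers.csv`, the `644` permission mode, and the `run` and `hdfs_walkthrough` helpers are all hypothetical. By default the script only prints each command (a dry run), so it can be read and tried without a Hadoop cluster; setting `DRY_RUN=0` would execute the commands for real.

```shell
#!/usr/bin/env bash
# Sketch of the HDFS tasks listed in the summary.
# All paths and file names are assumptions made for illustration.

HDFS_DIR="${HDFS_DIR:-/user/demo/lab}"   # hypothetical target directory in HDFS
DRY_RUN="${DRY_RUN:-1}"                  # 1 = print commands only, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

hdfs_walkthrough() {
  # Create the directory (with -p, missing parent directories are created too).
  run hdfs dfs -mkdir -p "$HDFS_DIR" &&
  # Upload two local CSV files; the second gets a new name on upload.
  run hdfs dfs -put sales.csv "$HDFS_DIR/" &&
  run hdfs dfs -put customers.csv "$HDFS_DIR/clients.csv" &&
  # Rename a file inside HDFS.
  run hdfs dfs -mv "$HDFS_DIR/sales.csv" "$HDFS_DIR/sales_2023.csv" &&
  # Change permissions: owner read/write, everyone else read-only.
  run hdfs dfs -chmod 644 "$HDFS_DIR/sales_2023.csv" &&
  # Print the file contents to standard output.
  run hdfs dfs -cat "$HDFS_DIR/sales_2023.csv" &&
  # Copy a file from HDFS to the current local directory.
  run hdfs dfs -get "$HDFS_DIR/clients.csv" . &&
  # List the directory, then count directories, files, and bytes under it.
  run hdfs dfs -ls "$HDFS_DIR" &&
  run hdfs dfs -count "$HDFS_DIR" &&
  # Delete the directory and everything in it.
  run hdfs dfs -rm -r "$HDFS_DIR"
}

hdfs_walkthrough
```

The steps are chained with `&&` so that a real run stops at the first failing command instead of continuing to the final `-rm -r`. For the local-to-HDFS direction, `hdfs dfs -moveFromLocal` can replace `-put` when the local copy should be removed after the upload.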