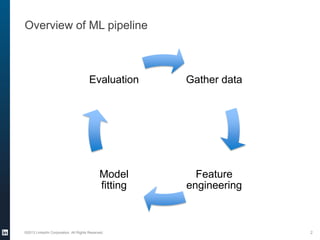

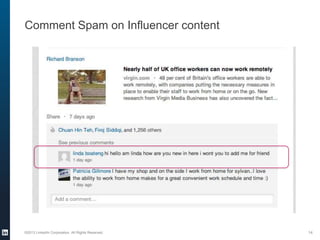

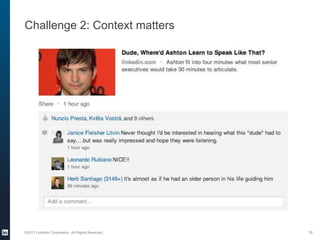

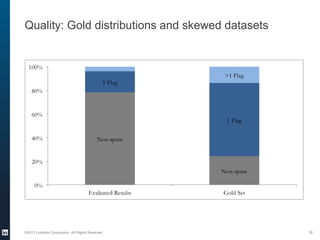

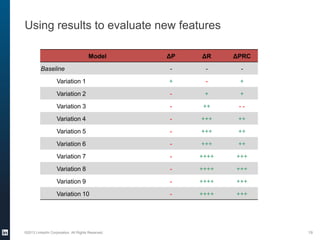

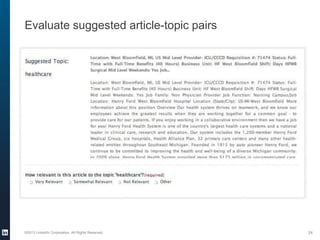

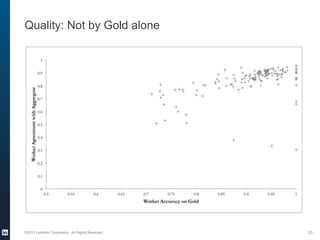

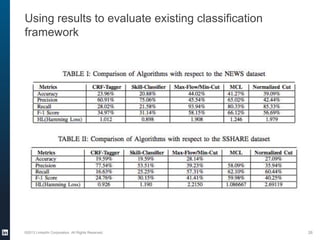

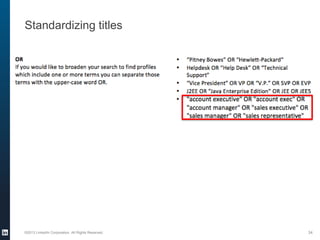

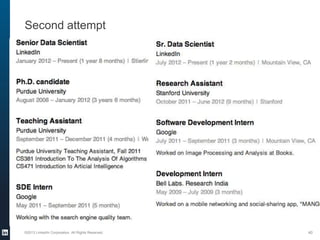

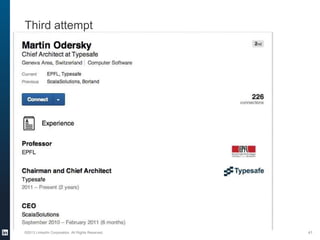

This document gives an overview of the machine learning pipeline, with emphasis on data gathering, feature engineering, and model evaluation. Key points include the importance of task context, the challenges of binary tasks, and the significance of high-quality data. It concludes with best practices for working with data and tips for improving classification models.