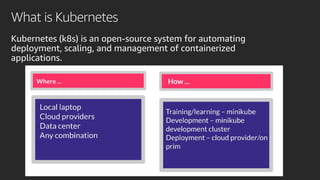

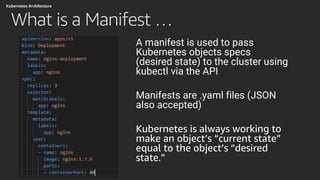

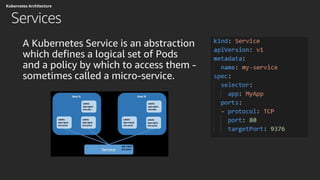

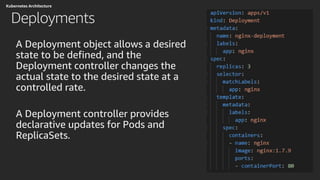

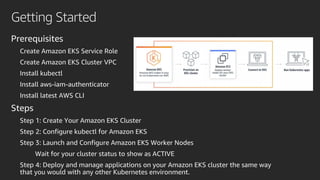

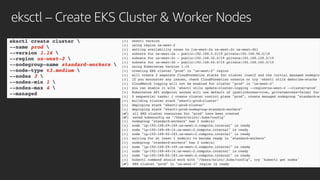

This document covers containers, Docker, Kubernetes, and Amazon EKS. It defines a container as a software package that bundles an application with all of its dependencies, and notes that Docker makes it easy to create and manage containerized applications. Kubernetes is introduced as an open-source system for automating the deployment and management of containerized applications. Amazon EKS is described as AWS's managed Kubernetes service: AWS runs the Kubernetes control plane, while customers manage the worker nodes. The document also gives an overview of key Kubernetes concepts such as pods, services, and deployments, and explains how EKS integrates with other AWS services.
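To make the relationship between pods, services, and deployments more concrete, here is a minimal sketch in Python that builds a Deployment and a Service manifest as plain dictionaries and prints them as JSON. It is an illustration only, not taken from the document: the helper names `make_deployment` and `make_service`, the application name `web`, and the image `nginx:1.25` are all assumptions chosen for the example. The Deployment's pod template describes the pods to run, and the Service selects those pods by label.

```python
import json


def make_deployment(name, image, replicas=2, port=80):
    """Build a Deployment manifest (hypothetical helper for illustration)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # number of pod copies to keep running
            "selector": {"matchLabels": labels},  # must match the template labels
            "template": {  # the pod template
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


def make_service(name, port=80):
    """Build a Service manifest that routes to pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # "web" and "nginx:1.25" are example values, not from the document.
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [make_deployment("web", "nginx:1.25"), make_service("web")],
    }
    print(json.dumps(manifest, indent=2))
```

Under these assumptions, the printed JSON could be saved to a file and passed to `kubectl apply -f` against a cluster such as one managed by EKS, since `kubectl` accepts JSON as well as YAML manifests.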