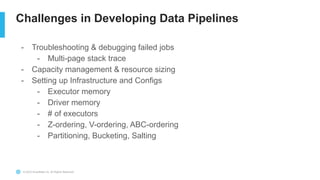

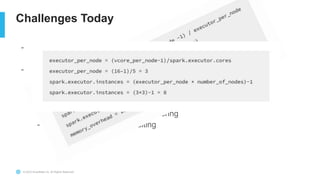

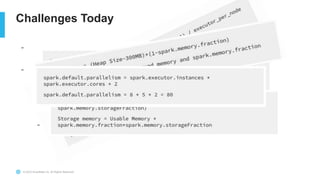

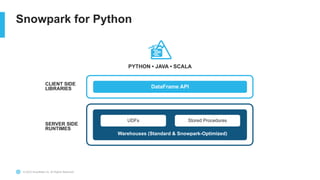

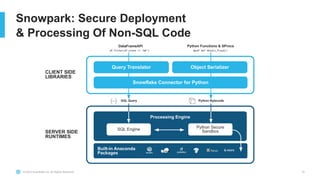

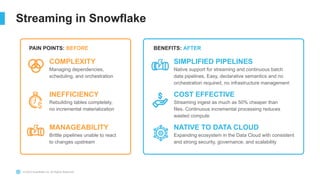

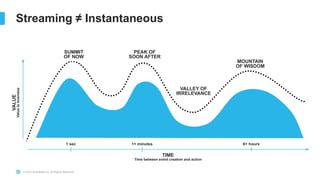

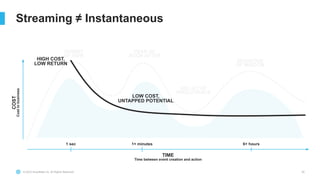

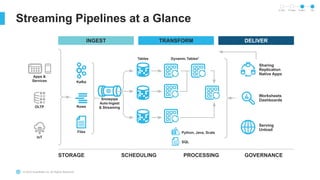

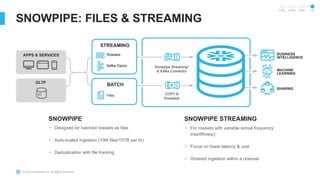

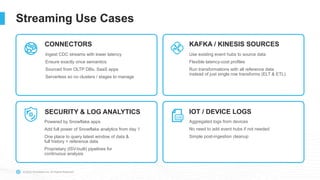

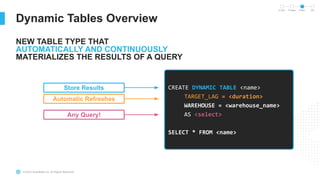

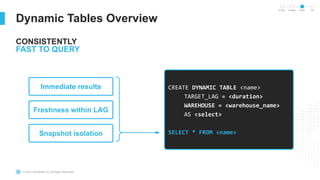

The document outlines the challenges of developing data pipelines, particularly troubleshooting and debugging failed jobs tied to Spark and infrastructure setup. It introduces Snowpark for Python, which simplifies deploying and running non-SQL code, and highlights the benefits of streaming data with dynamic tables in Snowflake. It also describes the available ingestion options and the use of dynamic tables for efficient, continuous data management.