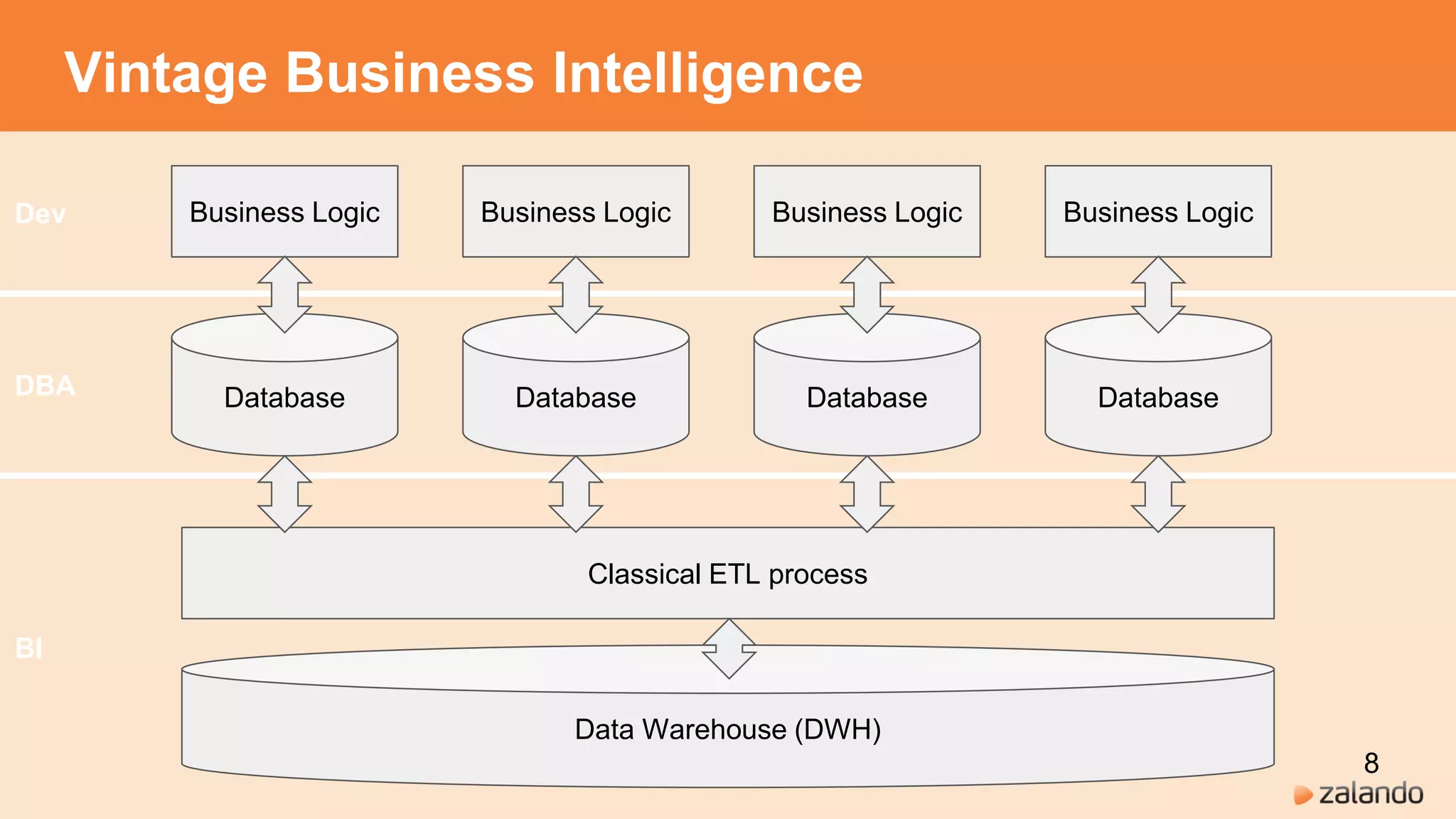

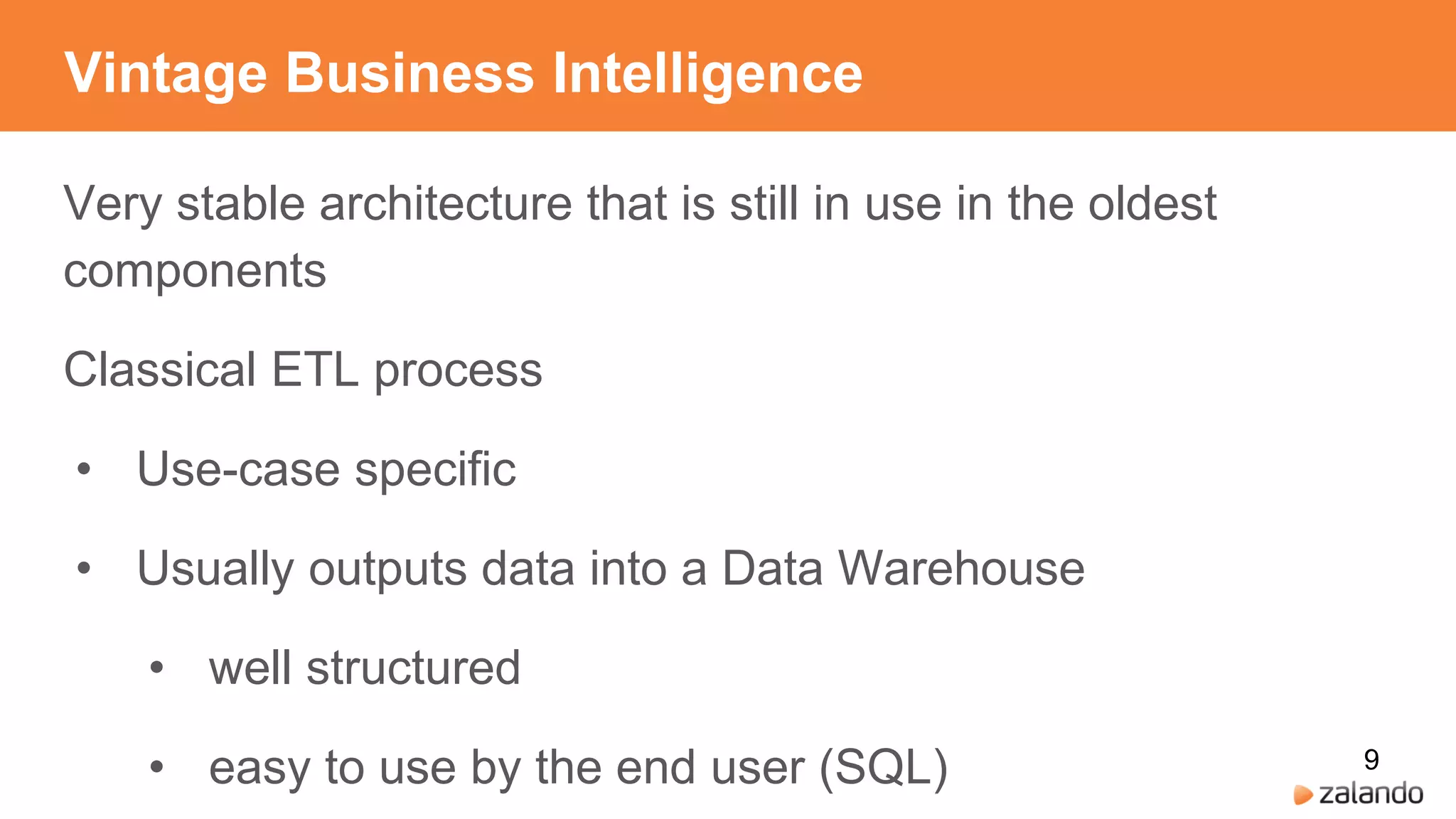

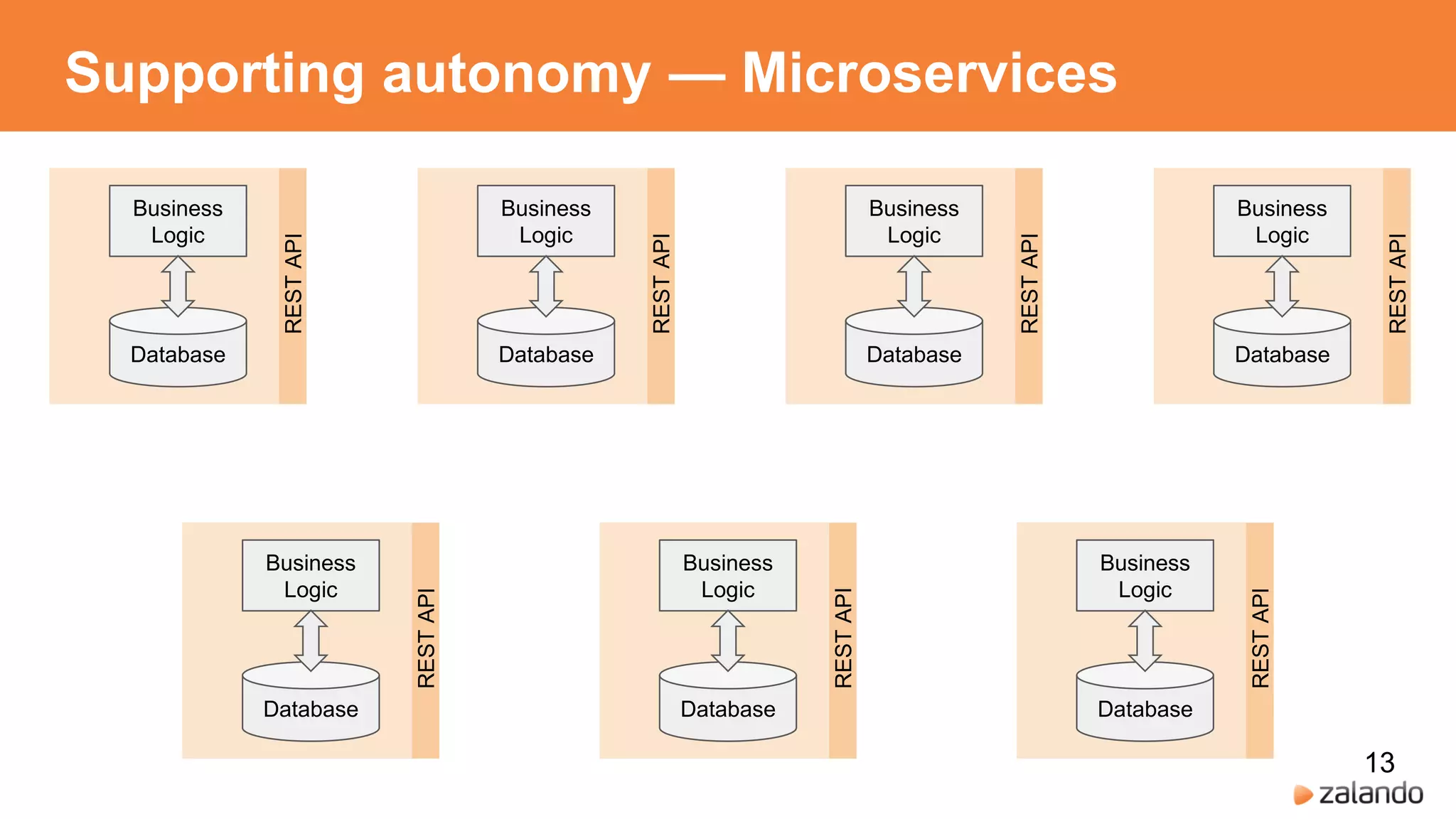

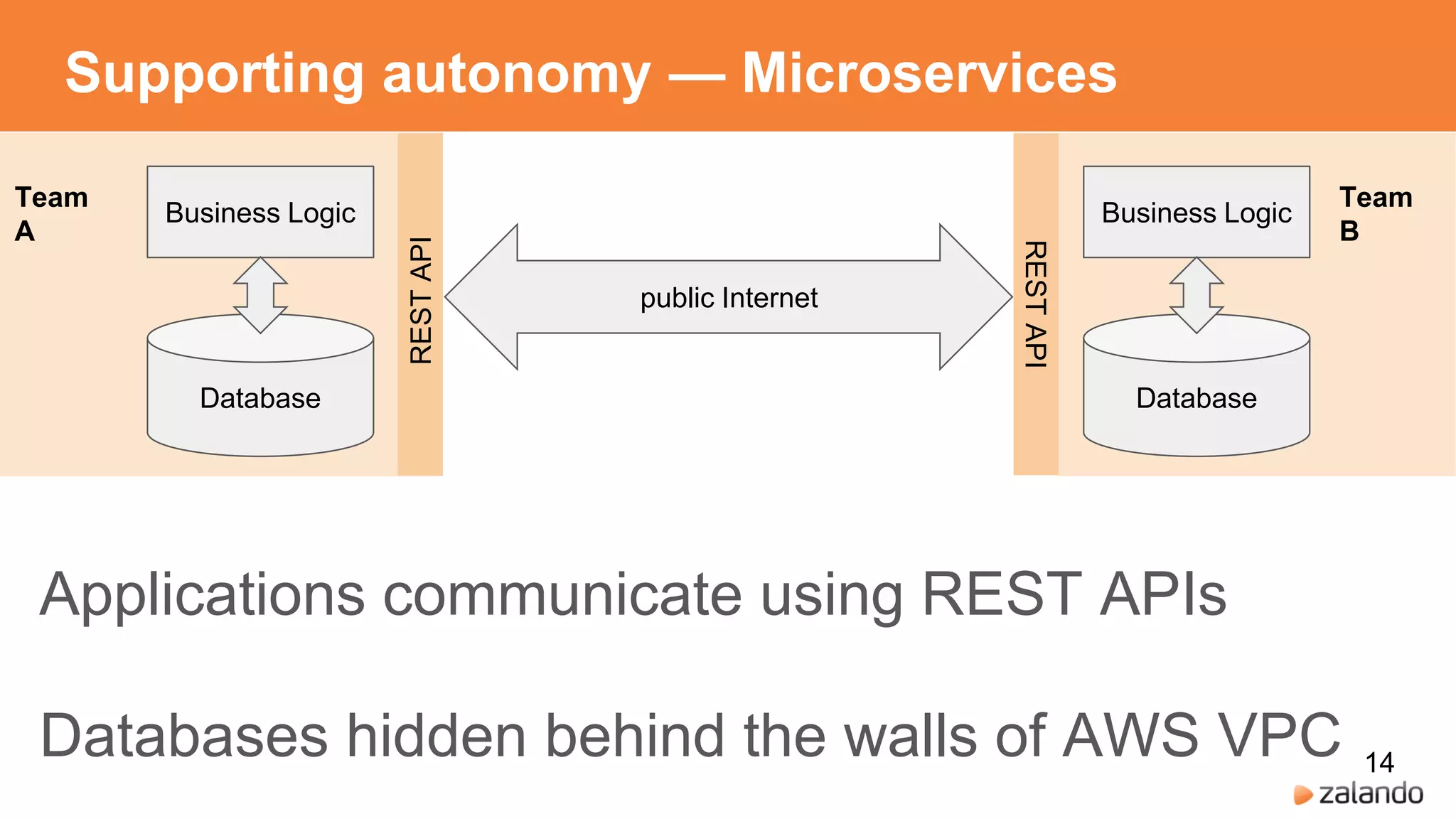

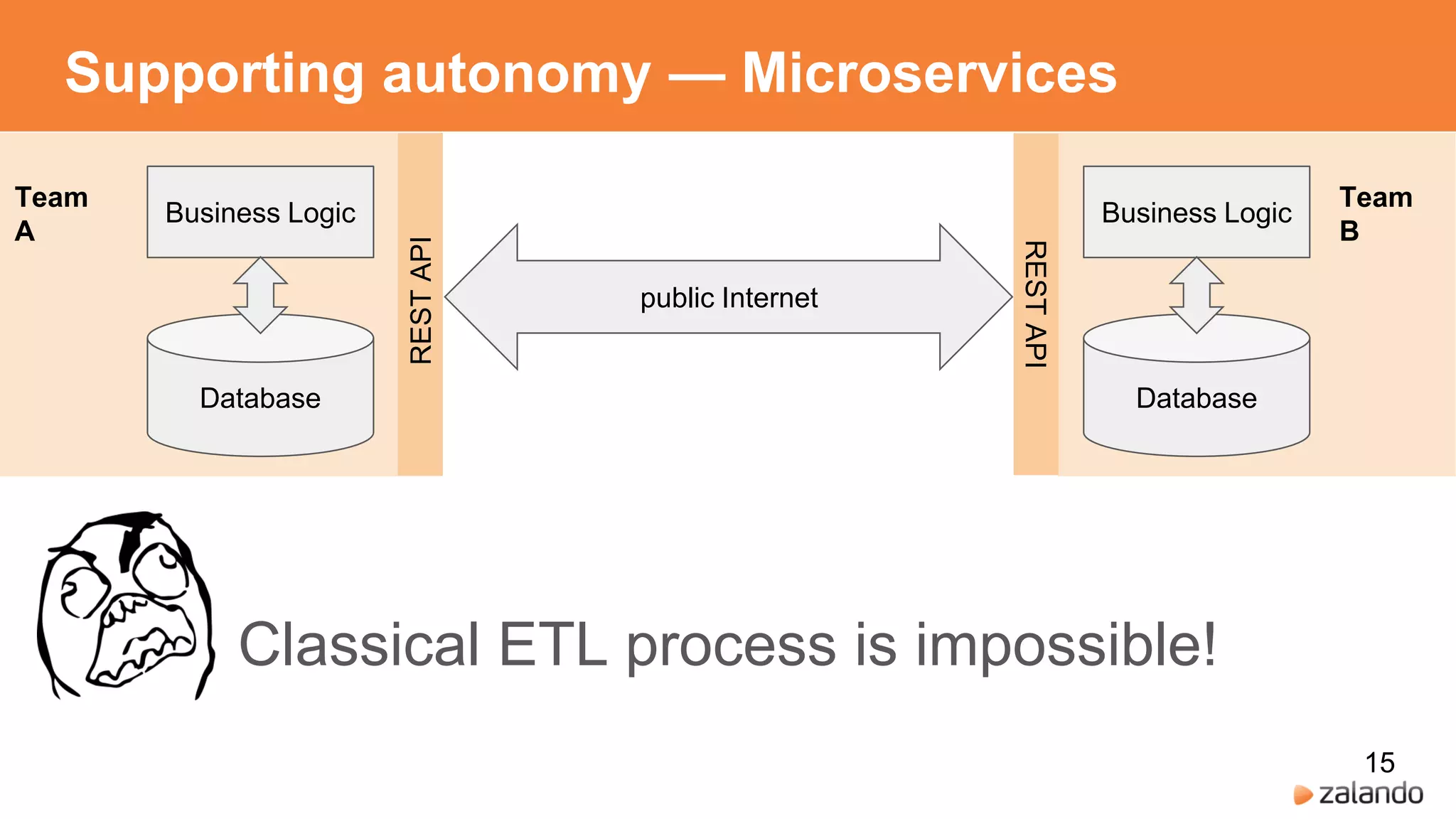

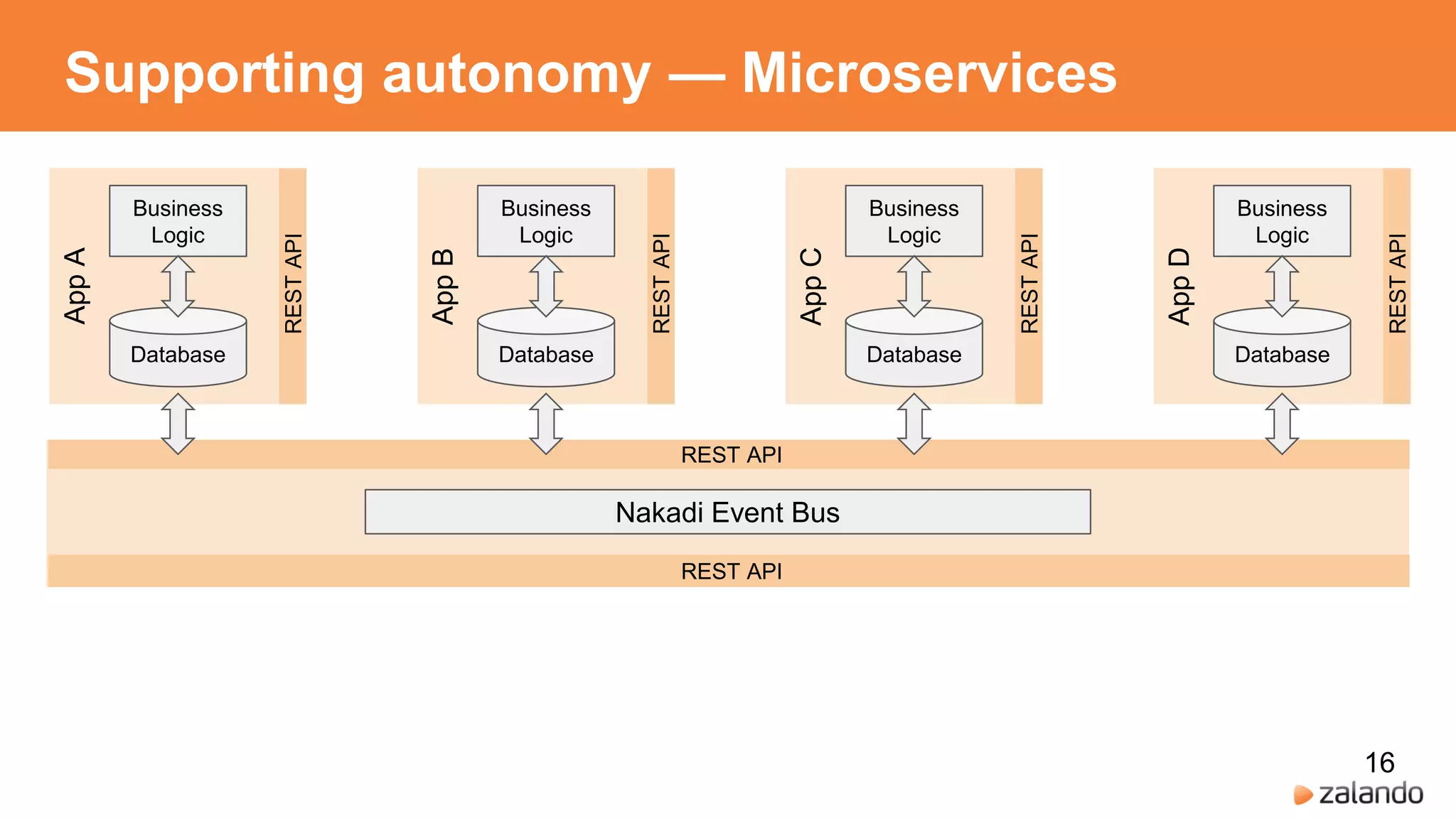

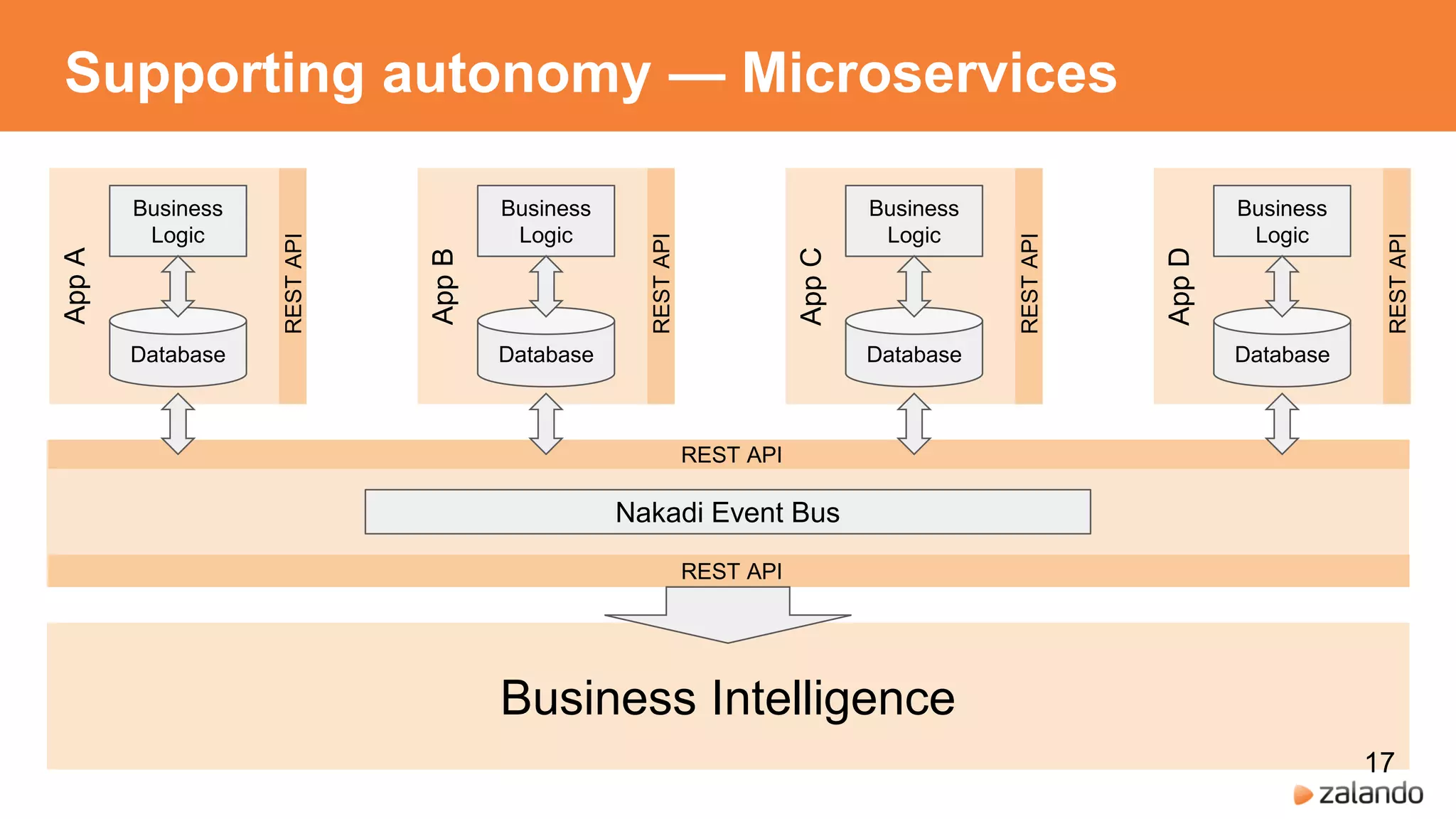

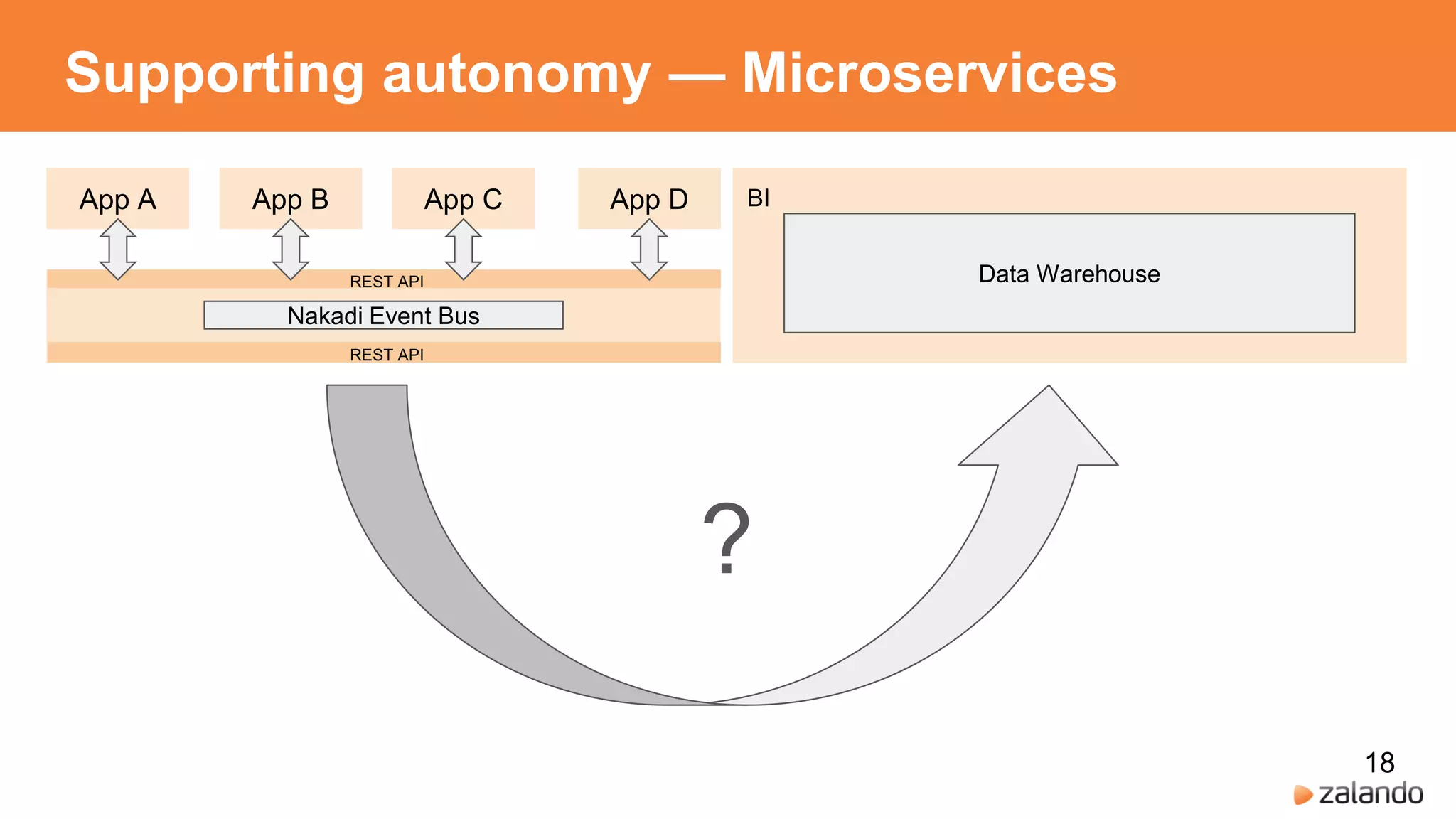

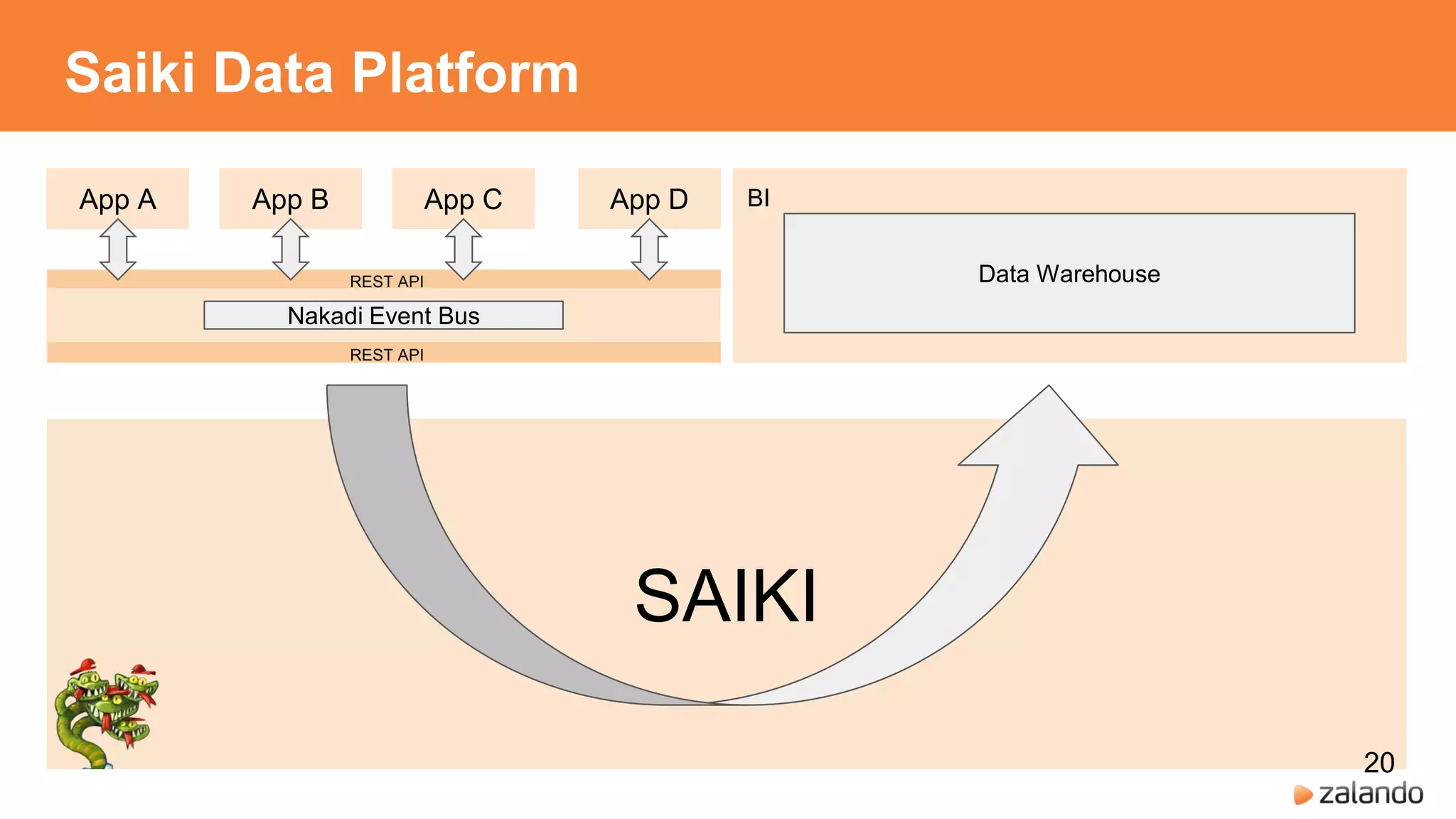

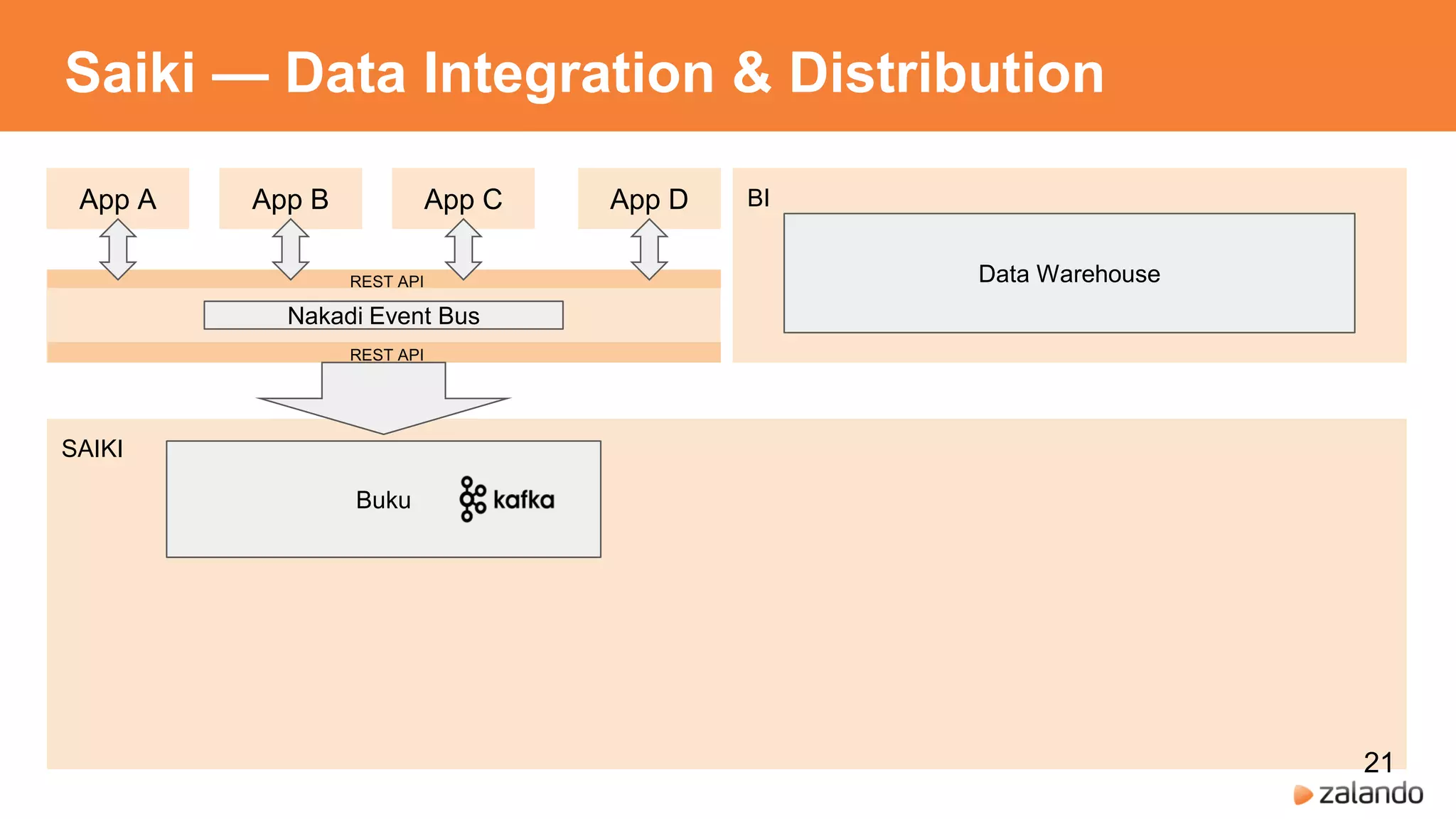

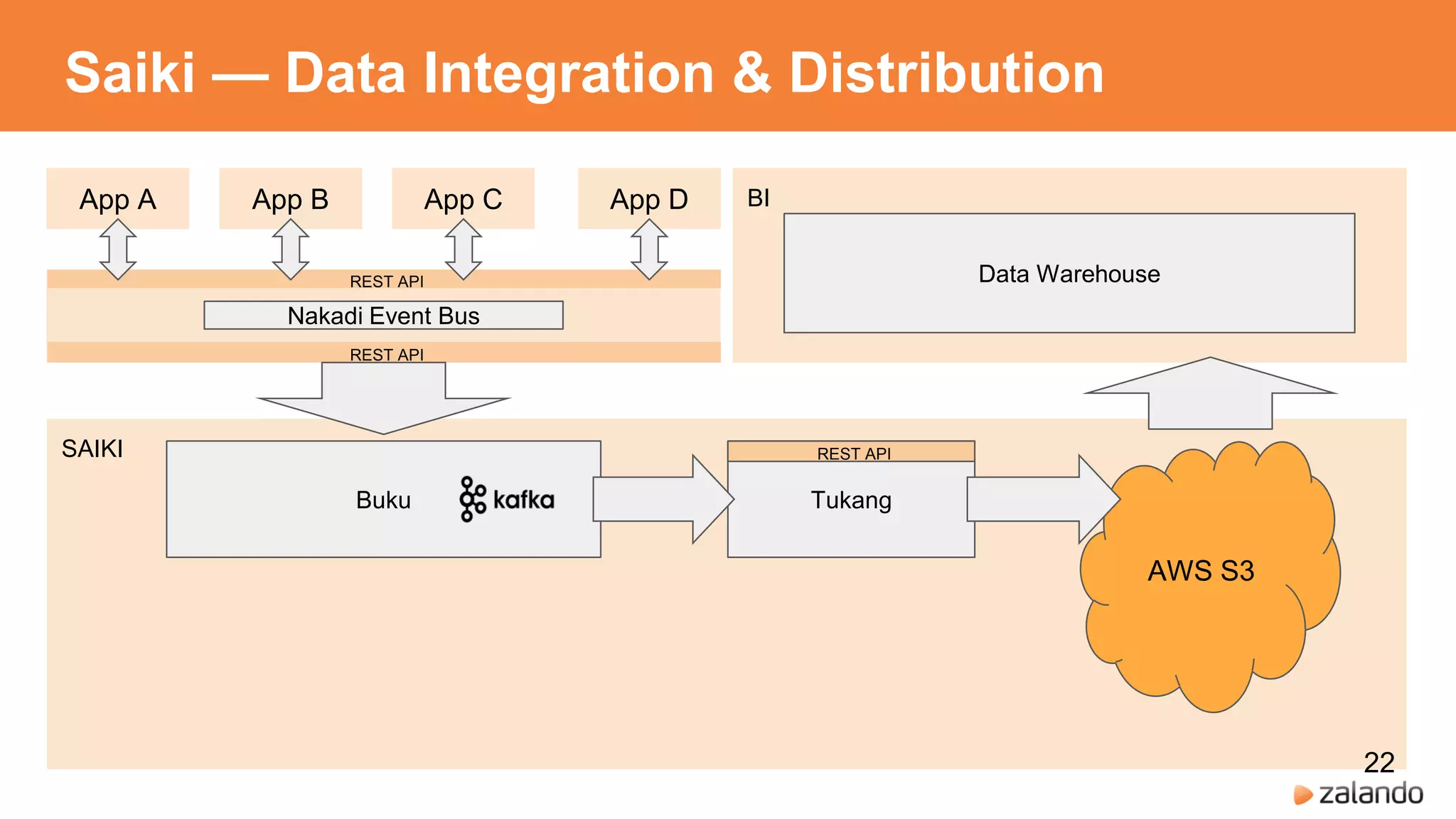

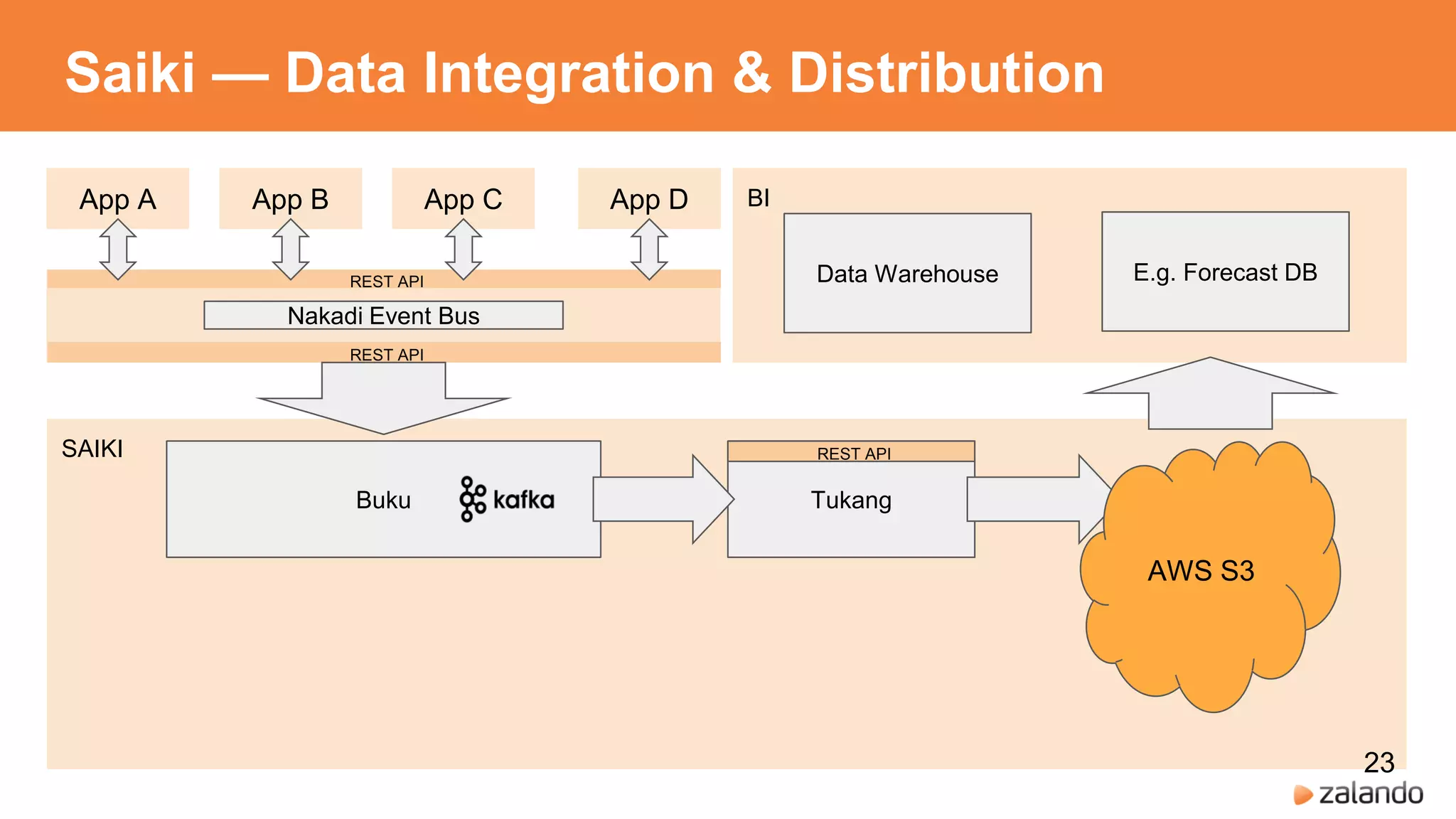

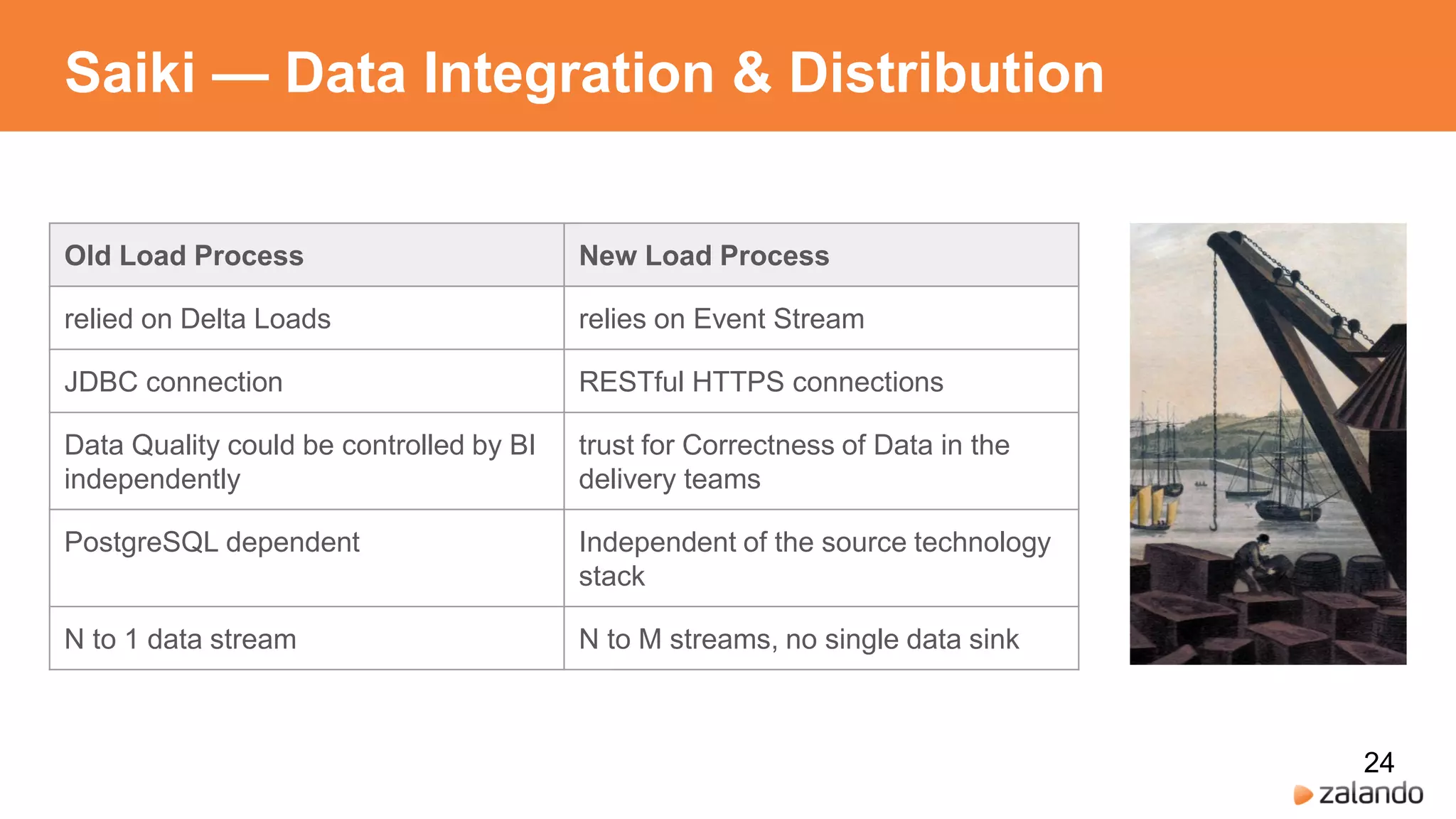

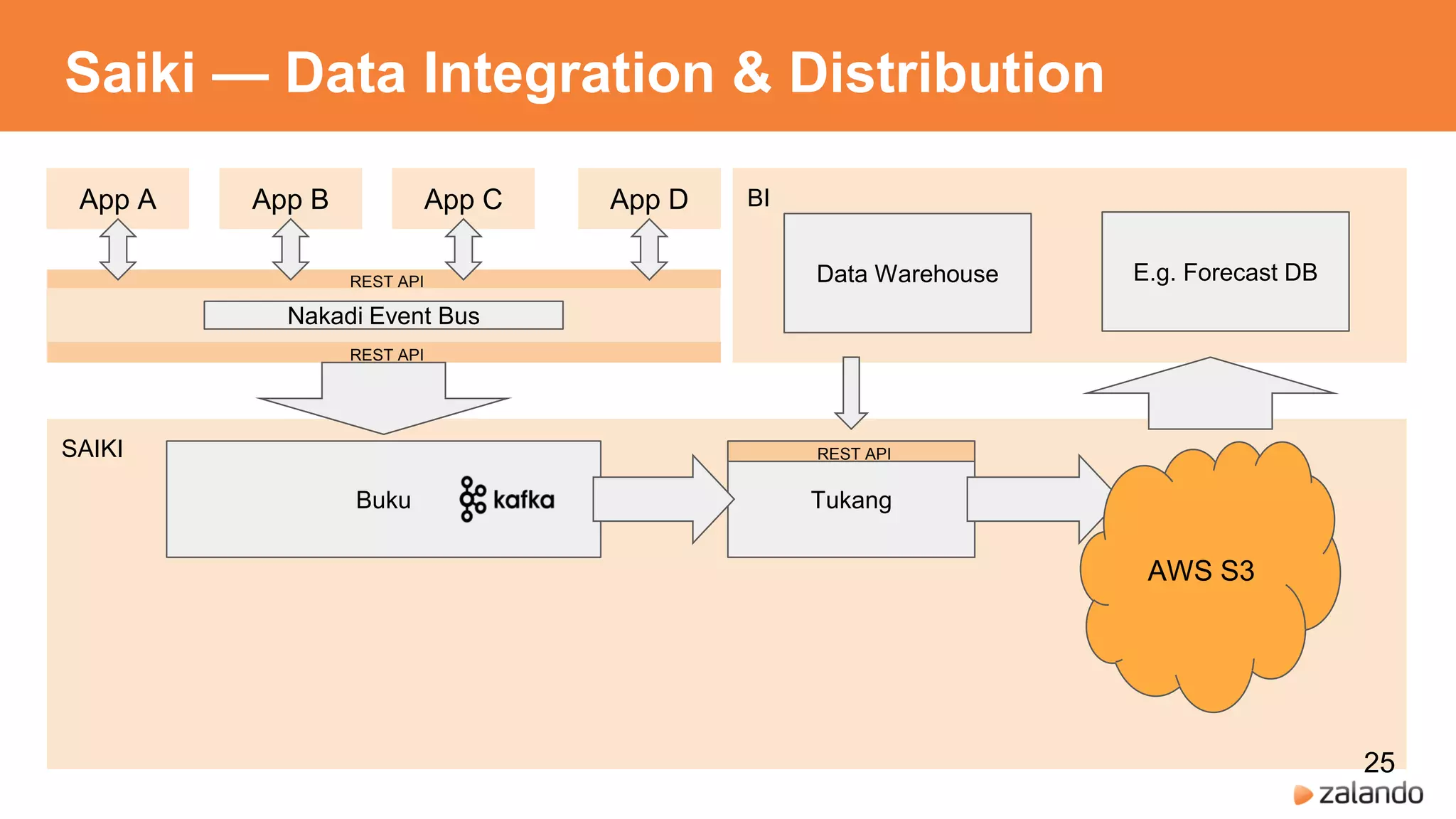

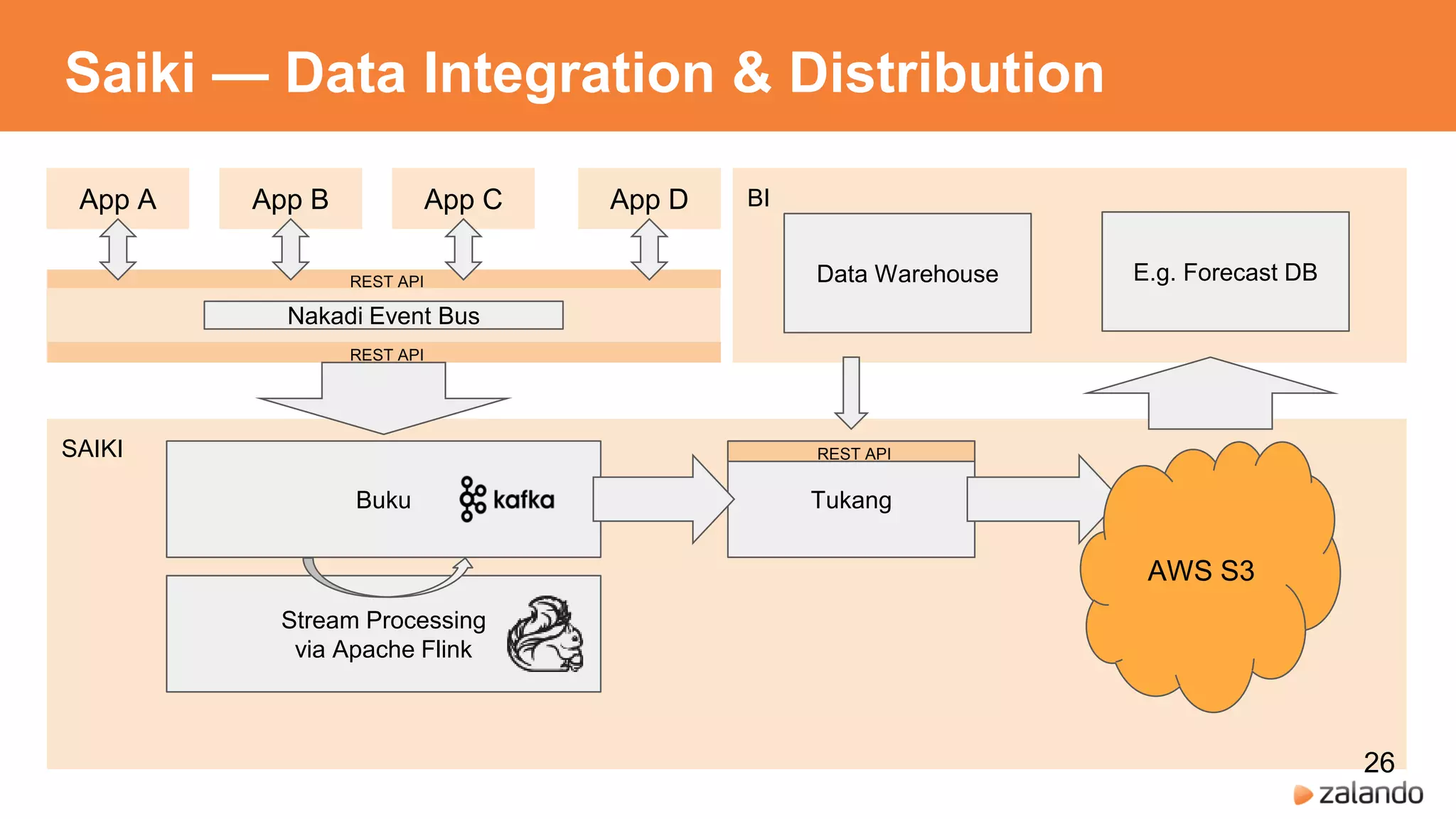

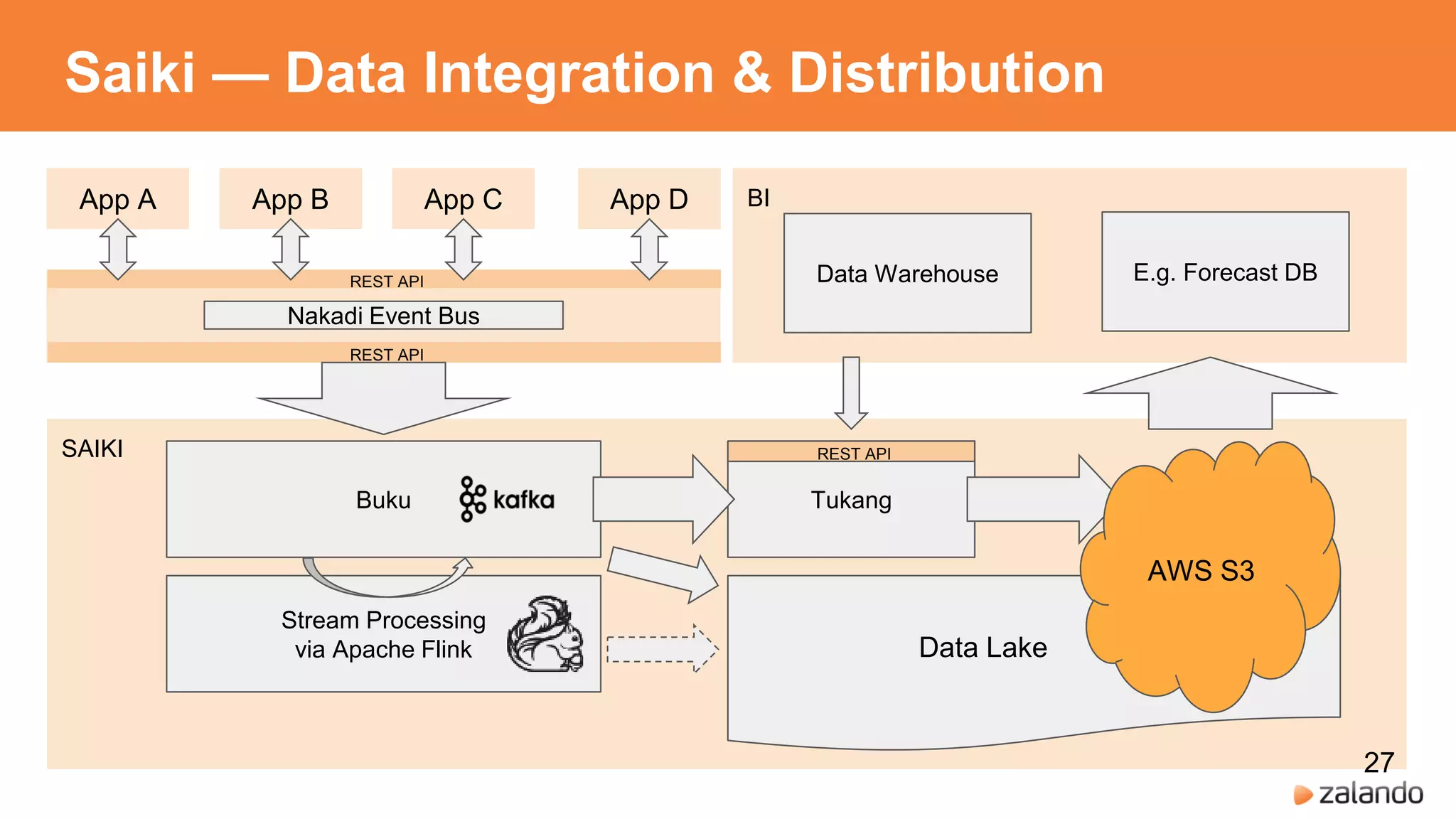

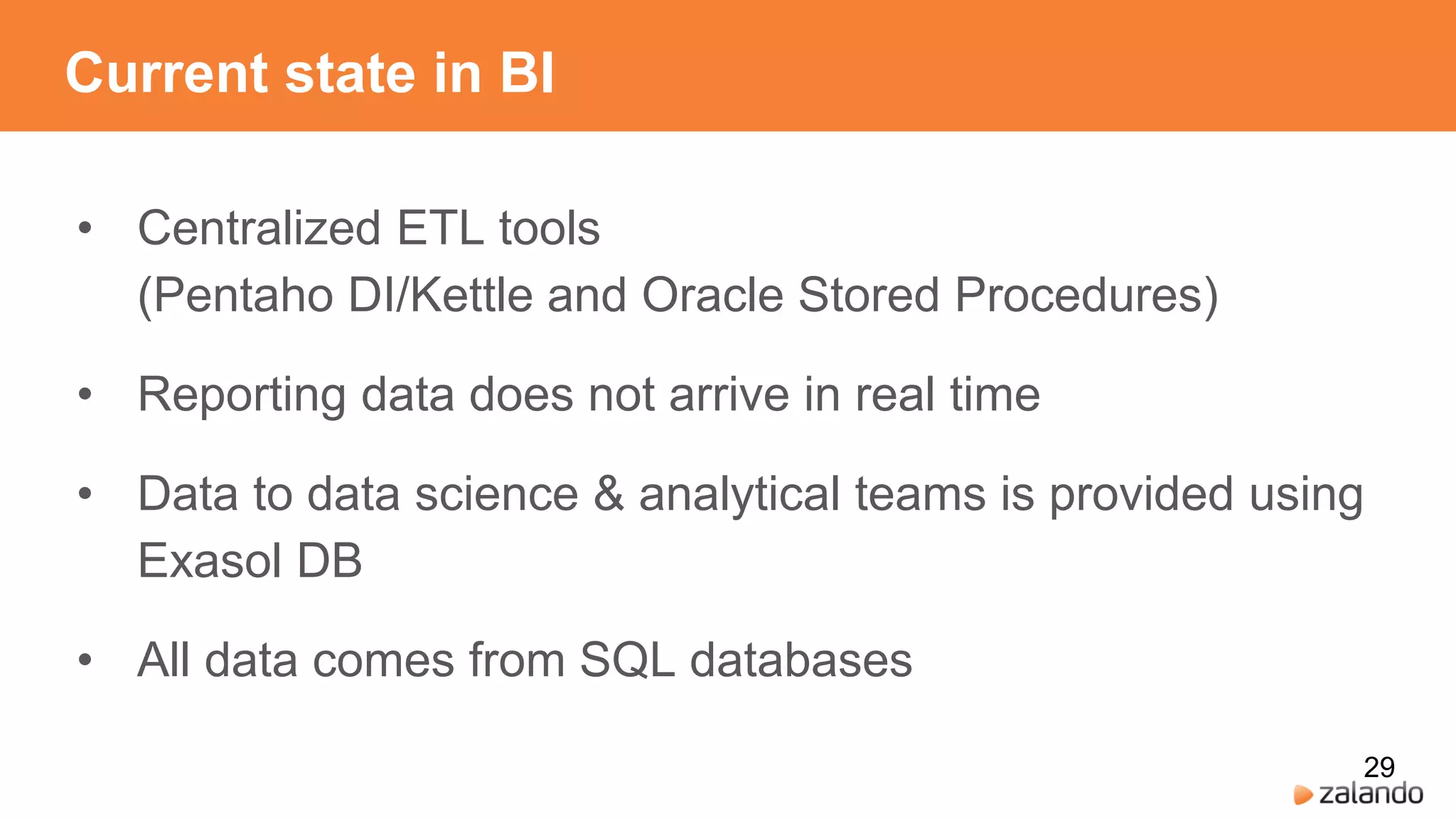

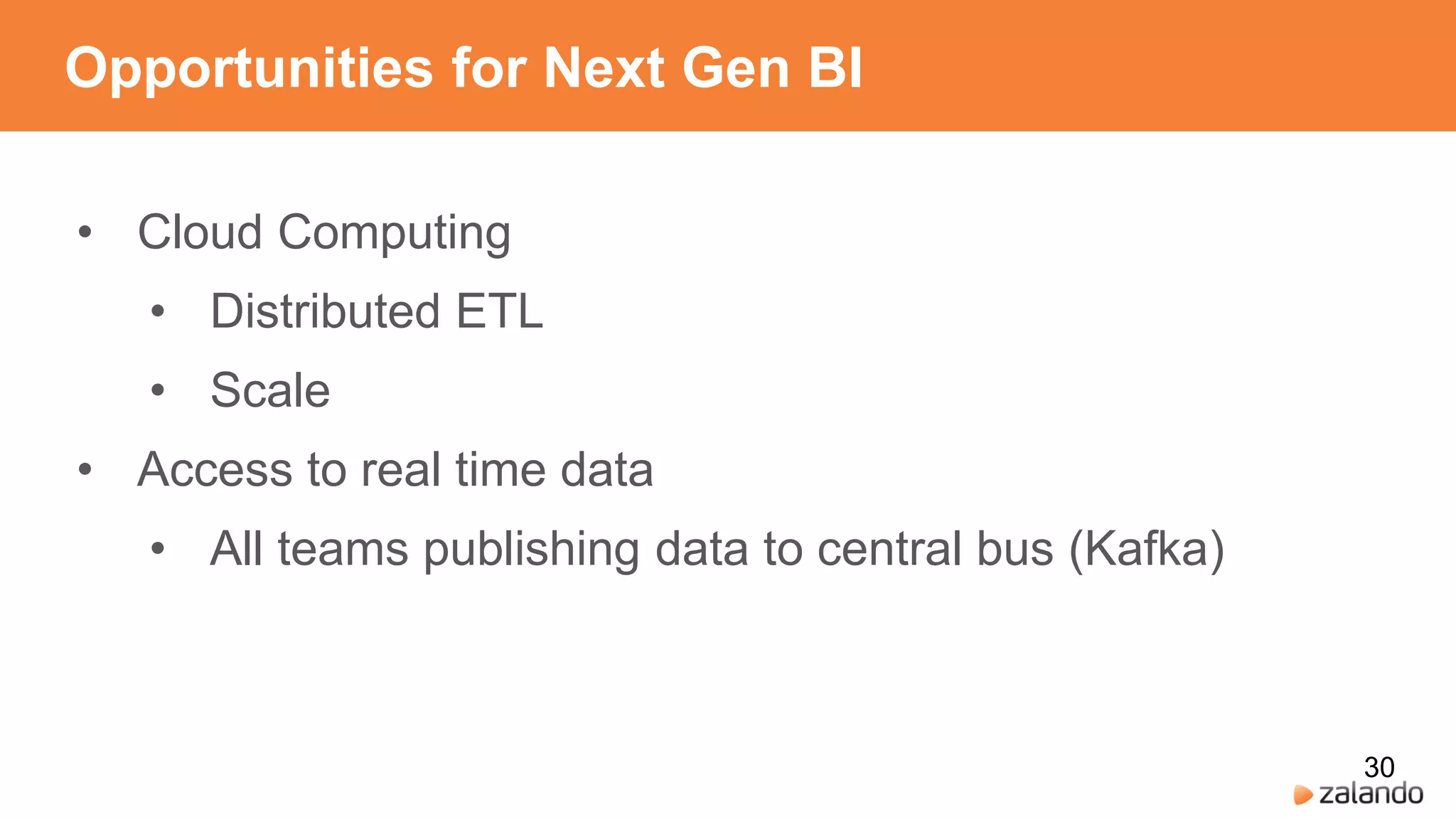

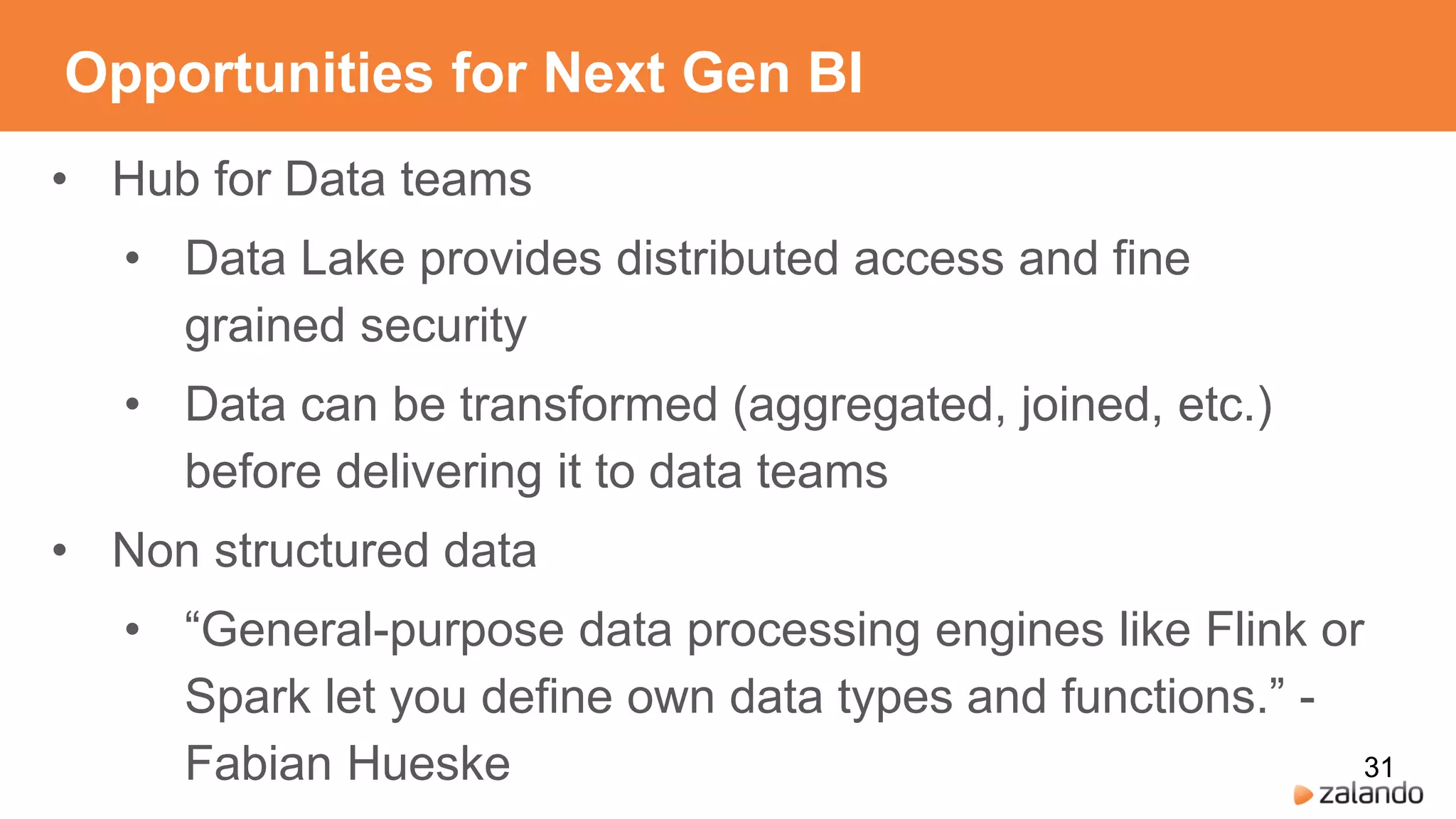

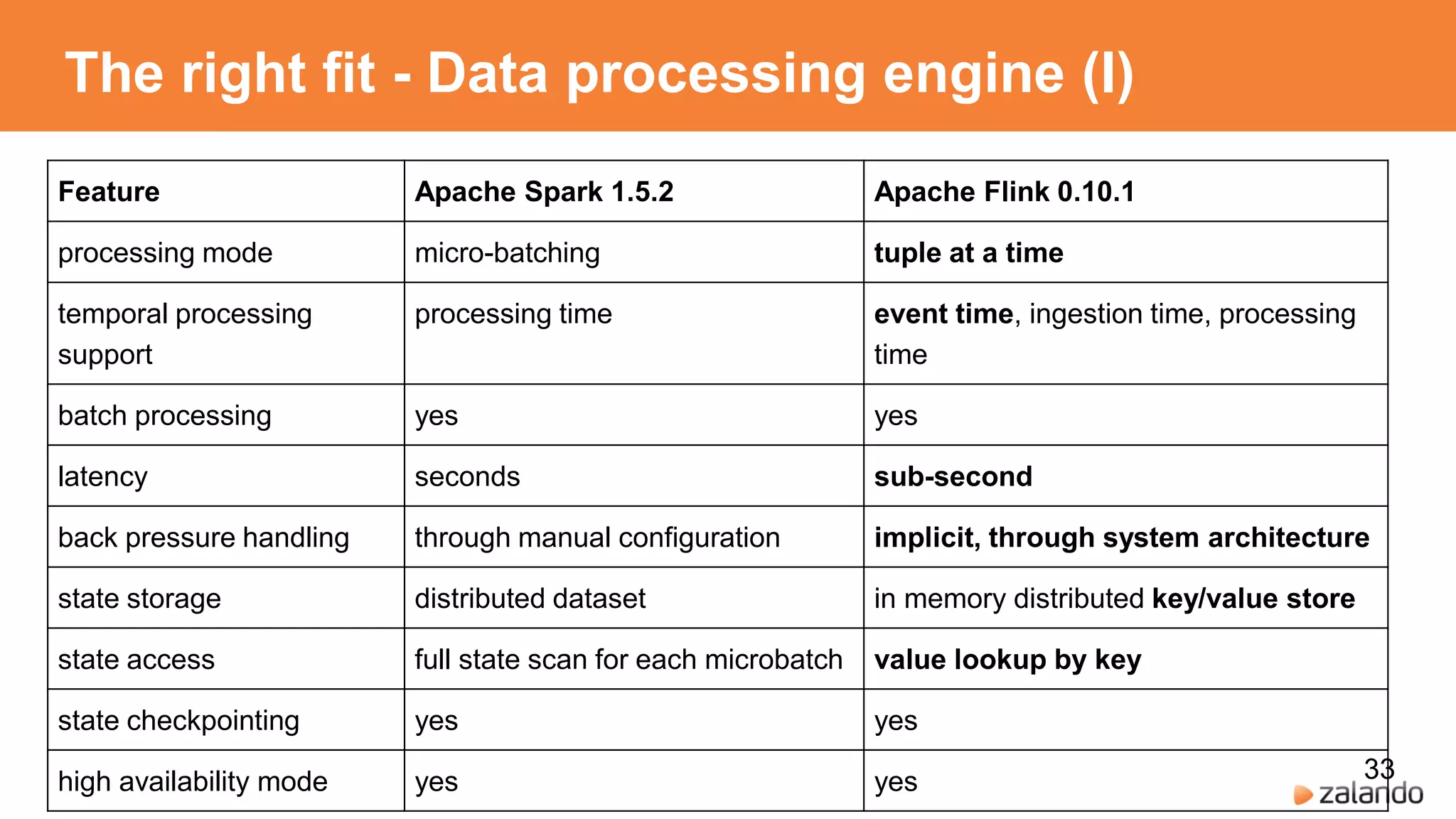

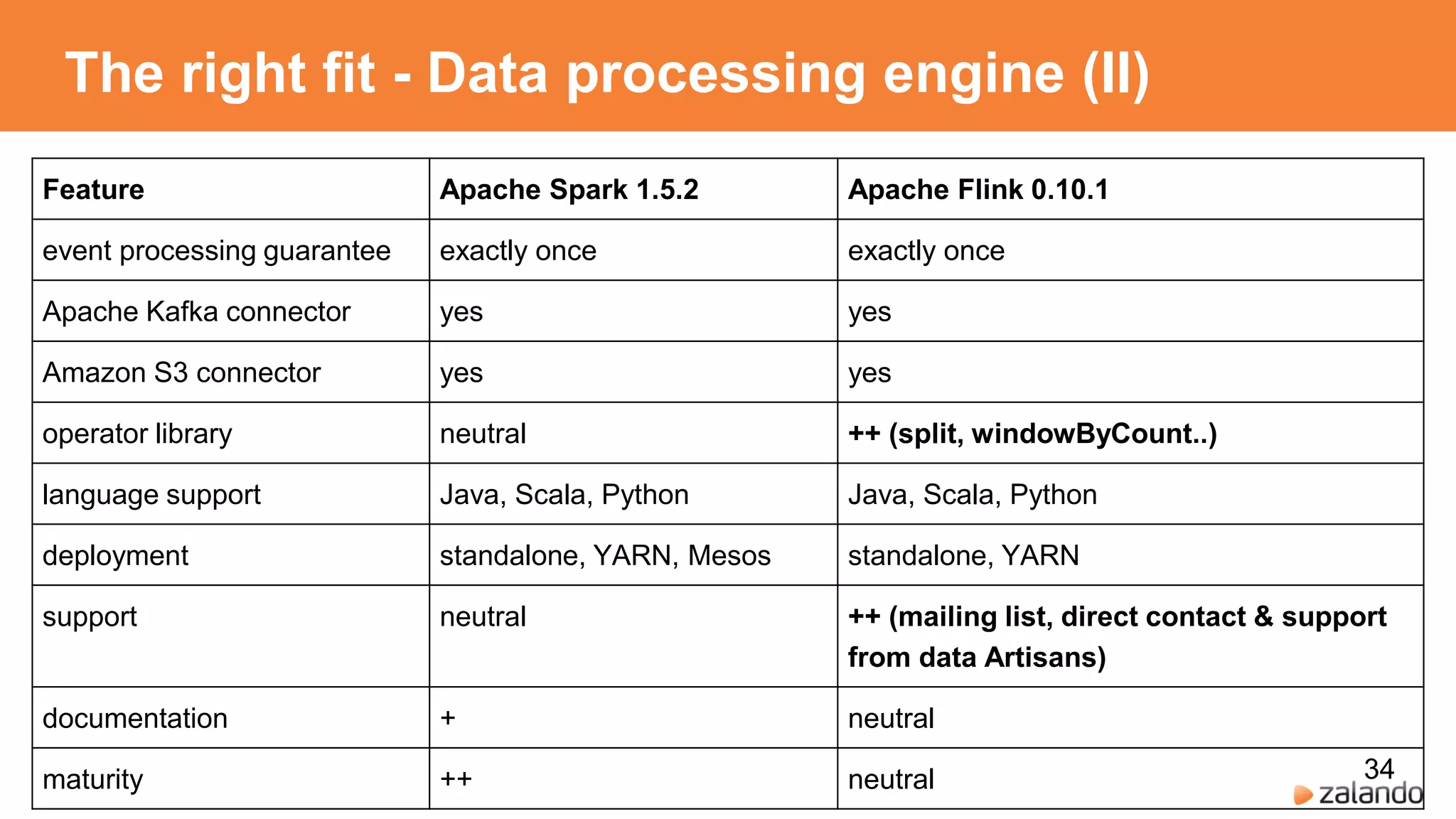

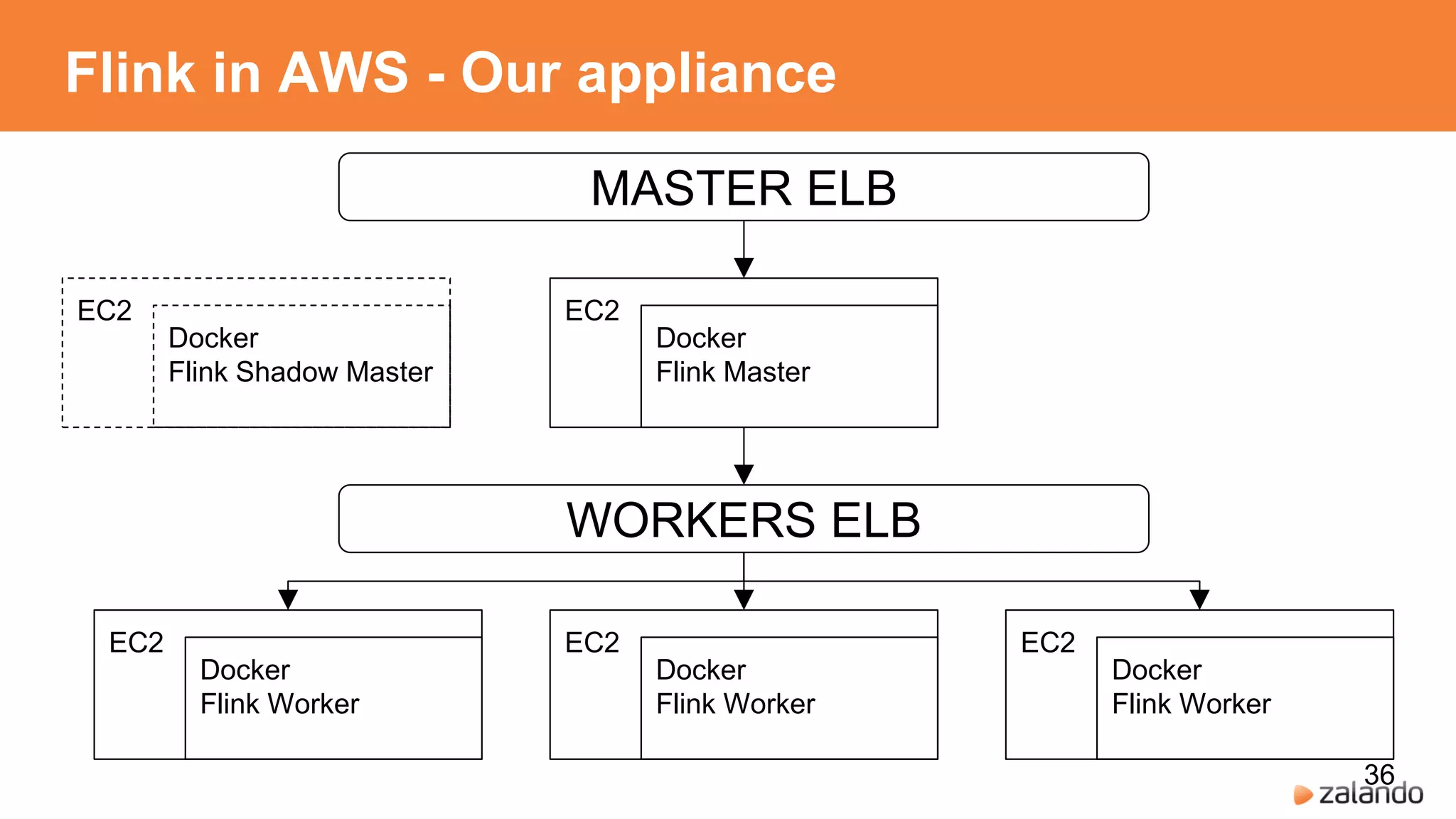

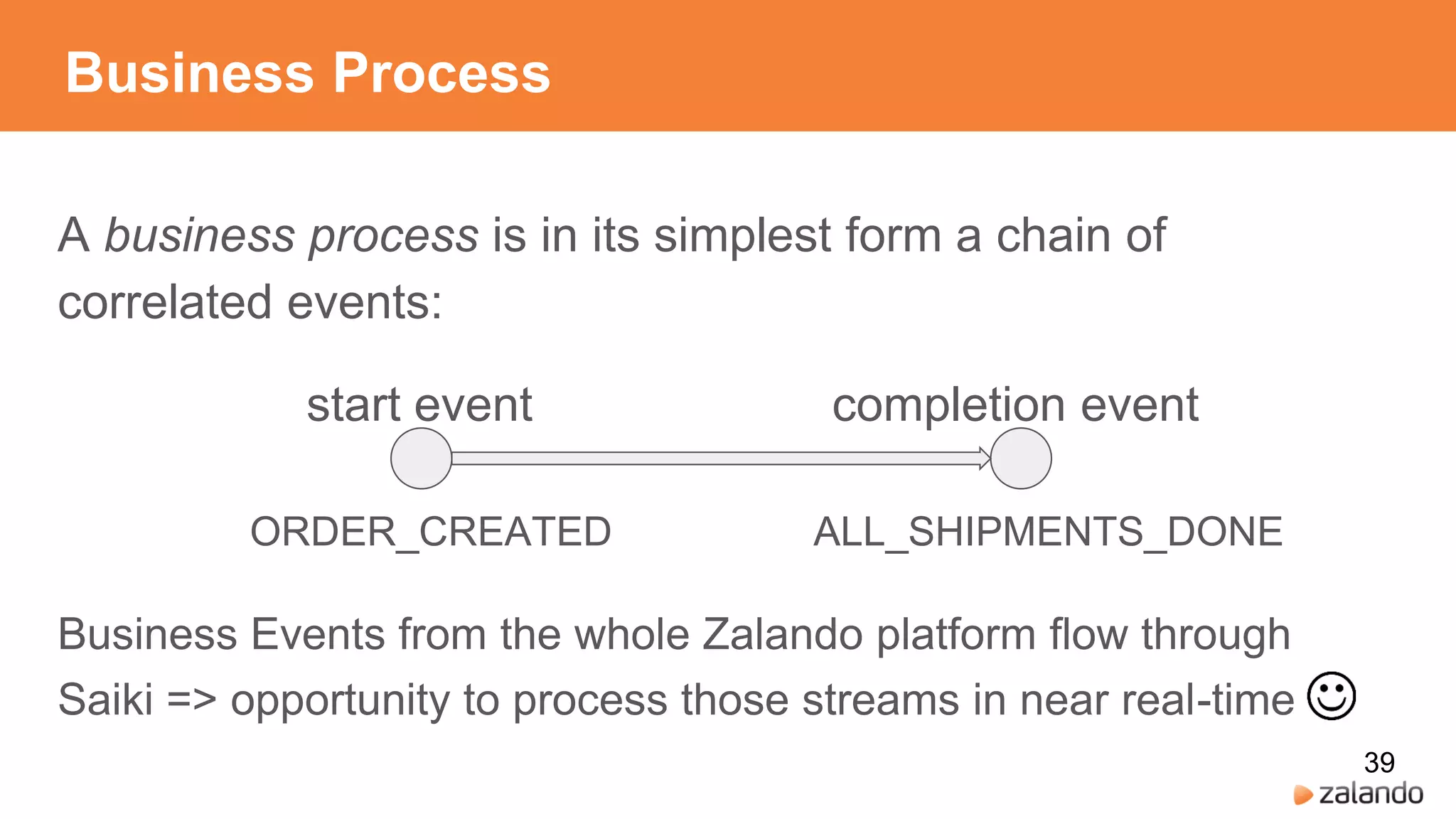

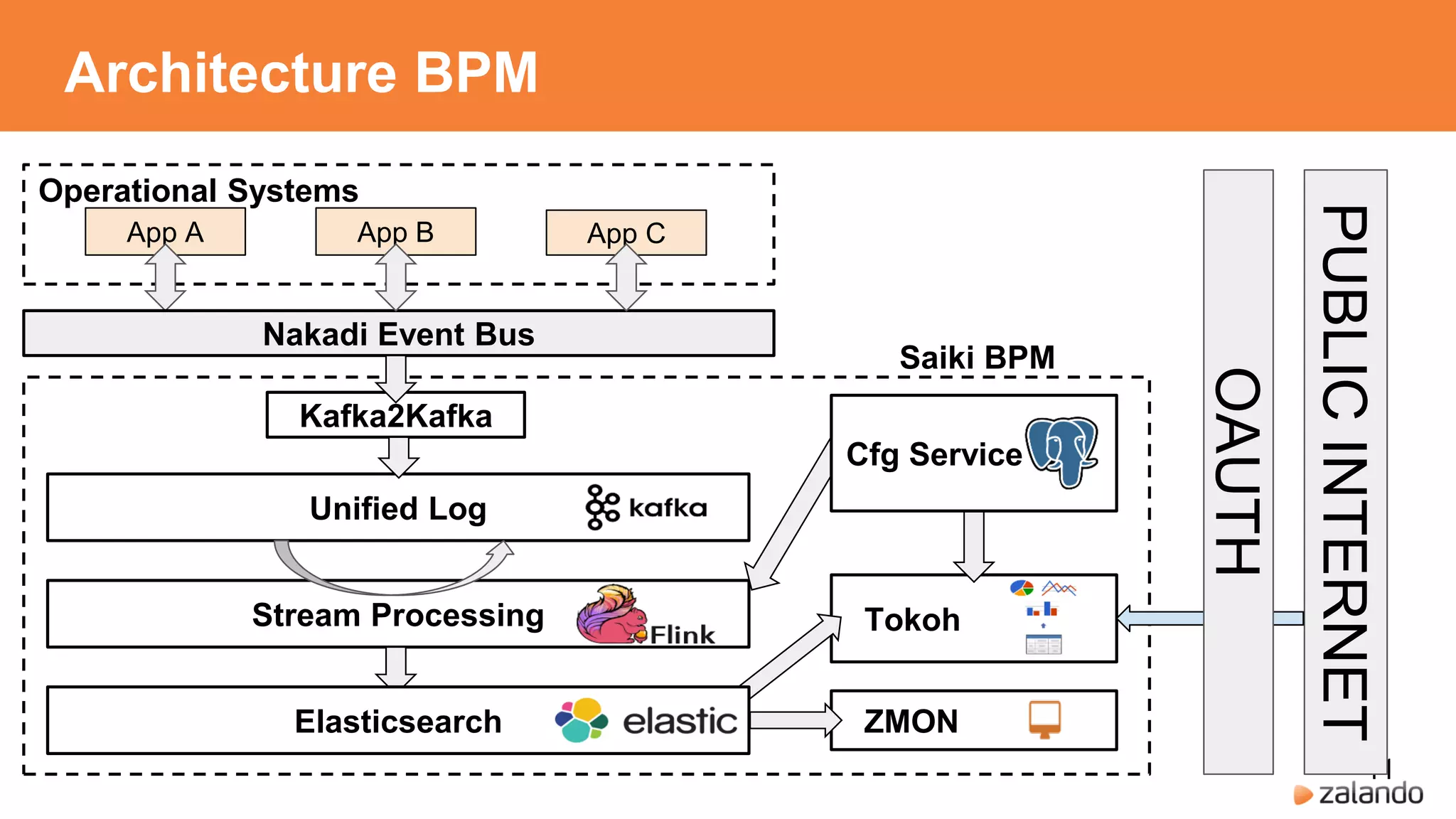

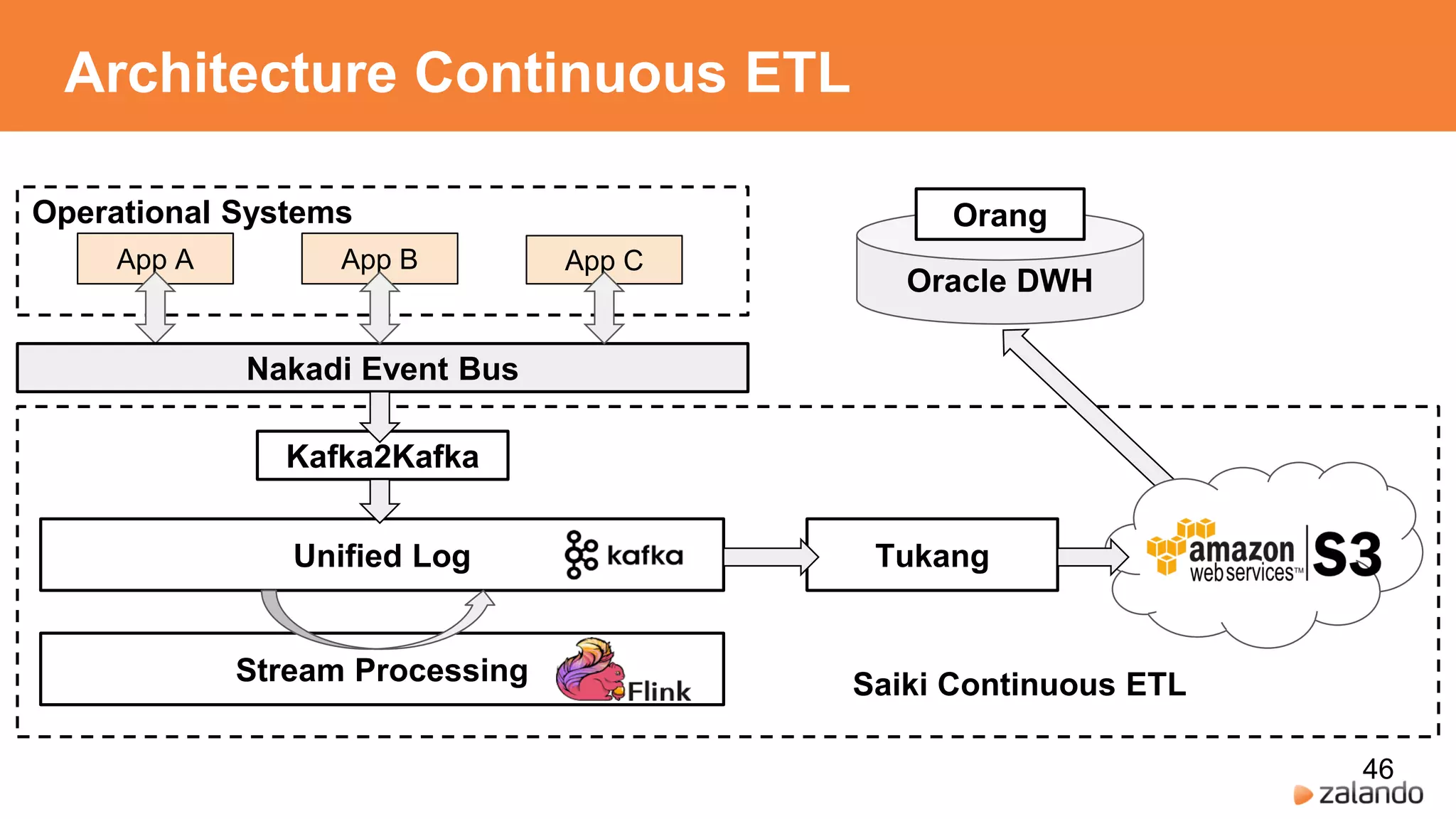

Zalando's microservices architecture uses Apache Flink for data integration and stream processing, which improves agility and provides real-time access to data. Moving from classical ETL to a distributed, event-driven model makes business process monitoring and continuous ETL efficient. Future work includes scaling complex event processing and integrating more robust solutions for data pipeline management.
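To make the idea of event-driven business process monitoring more concrete, here is a minimal sketch in plain Python. It is not Flink code and not Zalando's implementation. The event types (`ORDER_CREATED`, `PARCEL_SHIPPED`), the `Event` class, and the `monitor_orders` function are all hypothetical names chosen for illustration. The sketch assumes events arrive in timestamp order and flags orders that were not shipped within a configurable delay.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Event:
    order_id: str
    kind: str       # hypothetical event types: "ORDER_CREATED", "PARCEL_SHIPPED"
    timestamp: int  # event time in seconds


def monitor_orders(events: Iterable[Event], max_delay: int) -> Iterator[Tuple[str, int]]:
    """Yield (order_id, detected_at) for orders not shipped within max_delay seconds.

    Assumes events arrive in timestamp order. The timestamp of each incoming
    event acts as a simple clock for expiring orders that are still open.
    """
    open_orders: Dict[str, int] = {}  # order_id -> creation timestamp
    for event in events:
        # Flag every open order whose deadline has passed by this event's time.
        overdue = [oid for oid, created in open_orders.items()
                   if event.timestamp - created > max_delay]
        for oid in overdue:
            del open_orders[oid]
            yield oid, event.timestamp

        # Update state incrementally, one event at a time.
        if event.kind == "ORDER_CREATED":
            open_orders[event.order_id] = event.timestamp
        elif event.kind == "PARCEL_SHIPPED":
            open_orders.pop(event.order_id, None)


stream = [
    Event("a", "ORDER_CREATED", 0),
    Event("b", "ORDER_CREATED", 10),
    Event("a", "PARCEL_SHIPPED", 50),
    Event("c", "ORDER_CREATED", 200),
]
alerts = list(monitor_orders(stream, max_delay=100))
print(alerts)  # [('b', 200)]
```

State is updated per event instead of being recomputed from a full batch, which is the main contrast with a classical ETL job. A stream processor such as Flink would additionally handle out-of-order events, fault-tolerant state, and parallelism, none of which this sketch attempts.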