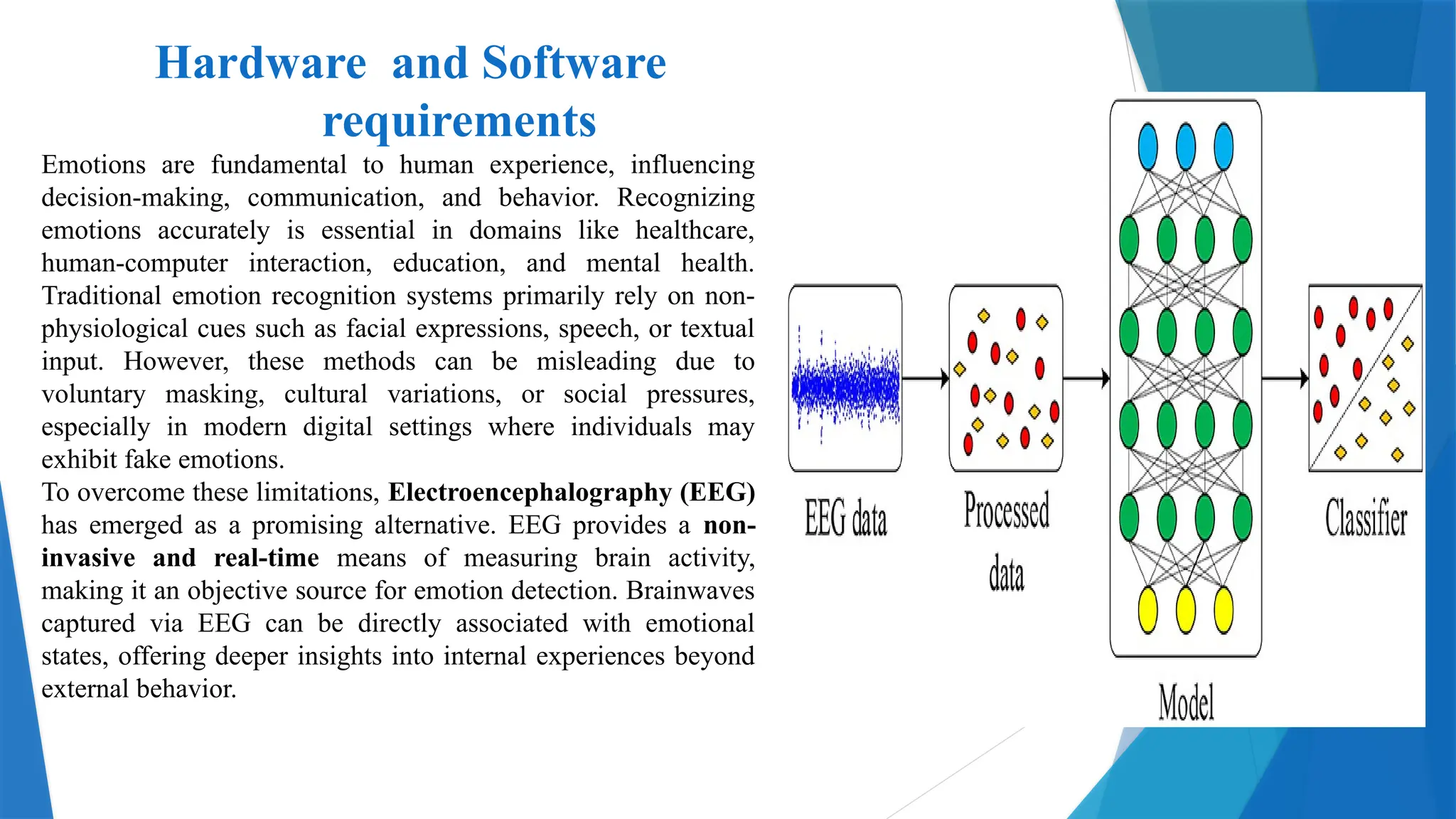

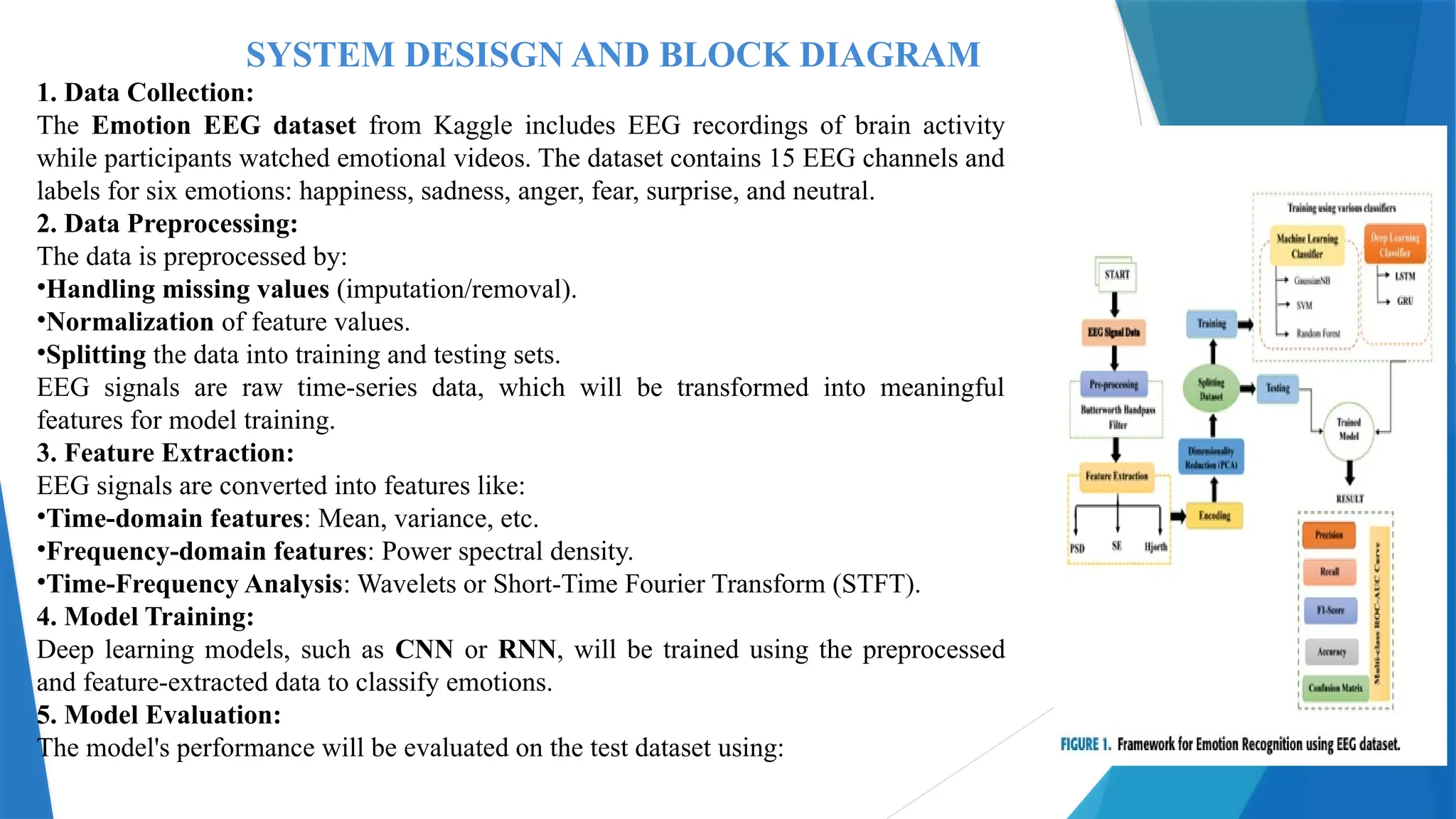

In this presentation, we explore the fundamentals and practical applications of deep learning, a subset of machine learning built on multi-layer artificial neural networks that are loosely inspired by the structure and function of the human brain. Our project examines how deep neural networks are designed, trained, and applied to solve complex problems across various domains.

We provide an overview of key architectures such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformers, and highlight their strengths and typical use cases: CNNs for grid-structured data such as images, RNNs for sequential data, and Transformers, which rely on self-attention, for sequence tasks such as language modeling and machine translation. The presentation includes a detailed case study of our implemented model, which addresses [insert your project focus – e.g., image classification, sentiment analysis, object detection, etc.], and walks through the workflow from data preprocessing to model evaluation.
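The workflow from data preprocessing to model evaluation can be illustrated in miniature. The sketch below is not the project's model. The dataset is a hypothetical eight-point toy set, and the network is a tiny fully connected network with one hidden layer (tanh units, sigmoid output) trained by full-batch gradient descent on binary cross-entropy. The helper names (`standardize`, `train_mlp`, `predict`, `accuracy`) and the hyperparameters are illustrative assumptions. It uses only the Python standard library, so every gradient step is visible.

```python
import math
import random

# Hypothetical toy dataset (an assumption, not the project's data):
# two well-separated 2-D clusters labelled 0 and 1.
X = [(-2.0, -1.0), (-1.0, -2.0), (-2.0, -2.0), (-1.5, -1.0),
     (2.0, 1.0), (1.0, 2.0), (2.0, 2.0), (1.5, 1.0)]
Y = [0, 0, 0, 0, 1, 1, 1, 1]


def standardize(rows):
    """Preprocessing: rescale each feature to zero mean and unit variance."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) or 1.0
            for j in range(d)]
    return [[(r[j] - means[j]) / stds[j] for j in range(d)] for r in rows]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_mlp(xs, ys, hidden=4, lr=0.5, epochs=2000, seed=0):
    """Training: one hidden layer (tanh) + sigmoid output, full-batch
    gradient descent on binary cross-entropy. Returns params and per-epoch loss."""
    rng = random.Random(seed)
    d, n = len(xs[0]), len(xs)
    W = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(hidden)]
    b = [0.0] * hidden
    v = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    c = 0.0
    losses = []
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(hidden)]
        gb, gv, gc, loss = [0.0] * hidden, [0.0] * hidden, 0.0, 0.0
        for x, y in zip(xs, ys):
            # Forward pass through the hidden layer and the output unit.
            h = [math.tanh(sum(W[k][j] * x[j] for j in range(d)) + b[k])
                 for k in range(hidden)]
            p = sigmoid(sum(v[k] * h[k] for k in range(hidden)) + c)
            loss -= y * math.log(p + 1e-12) + (1 - y) * math.log(1 - p + 1e-12)
            # Backward pass: for sigmoid + cross-entropy, dLoss/dlogit = p - y.
            err = p - y
            gc += err
            for k in range(hidden):
                gv[k] += err * h[k]
                dh = err * v[k] * (1 - h[k] ** 2)  # tanh'(a) = 1 - tanh(a)^2
                gb[k] += dh
                for j in range(d):
                    gW[k][j] += dh * x[j]
        losses.append(loss / n)
        # Gradient-descent update with batch-averaged gradients.
        c -= lr * gc / n
        for k in range(hidden):
            v[k] -= lr * gv[k] / n
            b[k] -= lr * gb[k] / n
            for j in range(d):
                W[k][j] -= lr * gW[k][j] / n
    return (W, b, v, c), losses


def predict(params, x):
    W, b, v, c = params
    h = [math.tanh(sum(w * xi for w, xi in zip(Wk, x)) + bk) for Wk, bk in zip(W, b)]
    return 1 if sigmoid(sum(vk * hk for vk, hk in zip(v, h)) + c) >= 0.5 else 0


def accuracy(params, xs, ys):
    """Evaluation: fraction of examples classified correctly."""
    return sum(predict(params, x) == y for x, y in zip(xs, ys)) / len(ys)


Xs = standardize(X)
params, losses = train_mlp(Xs, Y)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, "
      f"accuracy {accuracy(params, Xs, Y):.2f}")
```

For brevity, this toy example evaluates on the training data. A real pipeline would hold out a separate test split and would normally use a framework with automatic differentiation instead of hand-written gradients.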