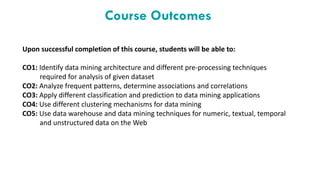

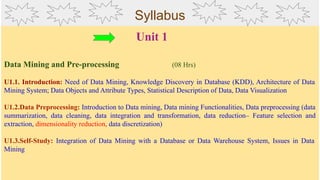

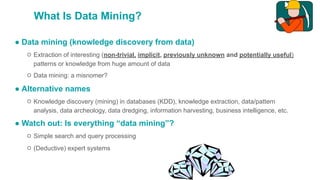

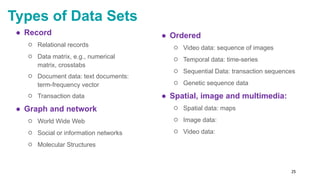

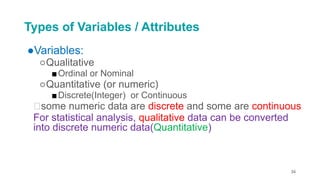

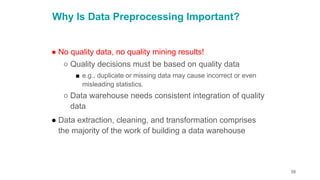

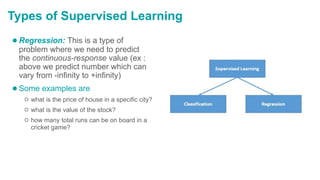

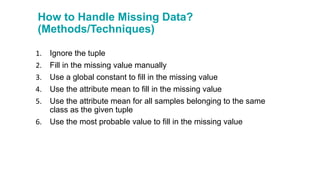

This document describes a course on data mining and data warehousing. It presents the vision and mission statements of the university and its computer science department, then outlines the program outcomes, program educational objectives, and course outcomes. It closes with a detailed syllabus covering data preprocessing, frequent pattern mining, classification, clustering, and data warehousing. The course aims to teach students to extract useful patterns from data using techniques such as association rule mining, classification, and clustering.

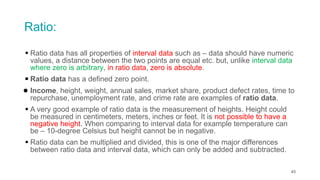

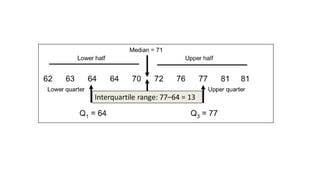

![Evolution of Data Mining](https://image.slidesharecdn.com/dmdwunit1-221012065903-d1d22c93/85/DMDW-Unit-1-pdf-12-320.jpg)

Evolution of Data Mining

- 1960s: Data Collection (computers, tape)
- 1970s: Data Access (relational database)
- 1980s: Application-oriented RDBMS, object-oriented model
- 1990s: Data Mining, Data Warehousing
- 2000s: Big Data Analytics, NoSQL

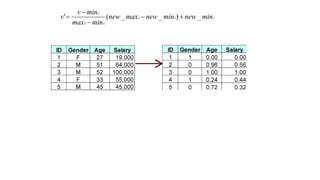

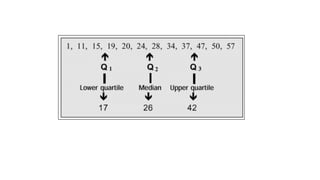

![Data Transformation: Normalization](https://image.slidesharecdn.com/dmdwunit1-221012065903-d1d22c93/85/DMDW-Unit-1-pdf-117-320.jpg)

Data Transformation: Normalization

- Min-max normalization: to [new_minA, new_maxA]
  - Ex. Let the income range $12,000 to $98,000 be normalized to [0.0, 1.0]. Then $73,600 is mapped to
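
The extracted slide text stops before the mapped value, and the formula itself is not in the text. As a minimal sketch, assuming the usual linear form of min-max normalization, v' = (v - minA) / (maxA - minA) * (new_maxA - new_minA) + new_minA, the income example can be worked through in Python. The function name `min_max_normalize` and its parameter names are illustrative choices, not taken from the slides.

```python
def min_max_normalize(v, min_a, max_a, new_min=0.0, new_max=1.0):
    """Linearly rescale v from [min_a, max_a] to [new_min, new_max].

    Assumes the standard min-max formula; the slide's own formula
    is not available in the extracted text.
    """
    if max_a == min_a:
        # A constant attribute has no range to rescale.
        raise ValueError("min_a and max_a must differ")
    return (v - min_a) / (max_a - min_a) * (new_max - new_min) + new_min


# Income example from the slide: range $12,000 to $98,000, target [0.0, 1.0].
mapped = min_max_normalize(73_600, 12_000, 98_000)
print(round(mapped, 3))  # (73600 - 12000) / (98000 - 12000) = 0.716...
```

Under that assumption, the endpoints $12,000 and $98,000 map to 0.0 and 1.0, and $73,600 lands at roughly 0.716.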