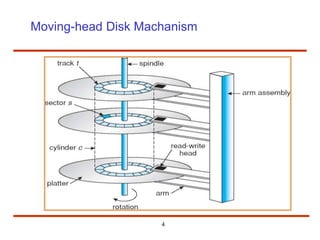

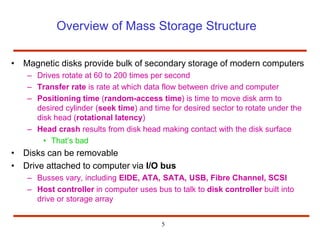

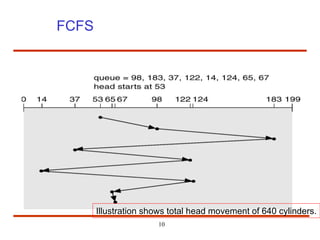

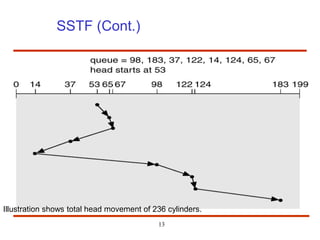

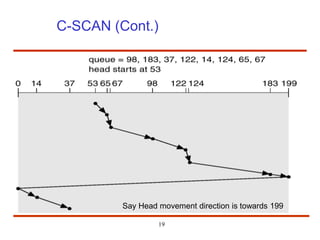

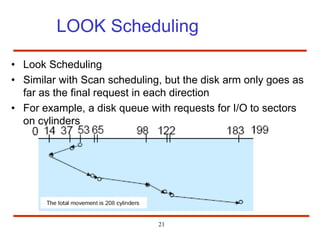

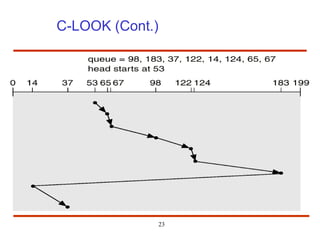

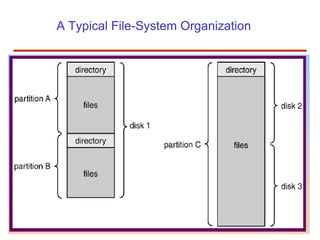

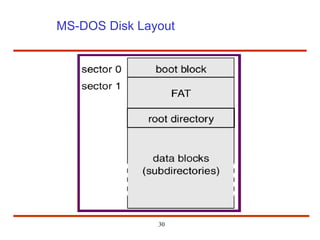

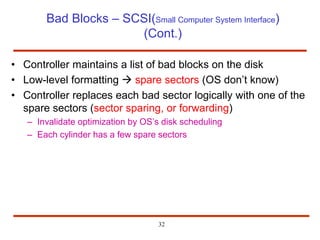

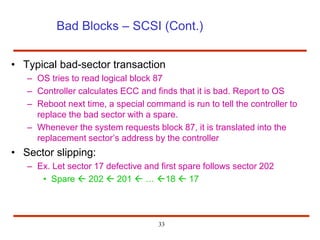

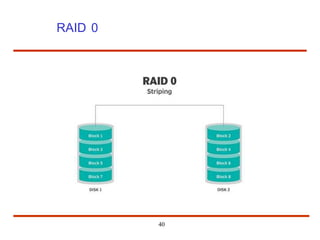

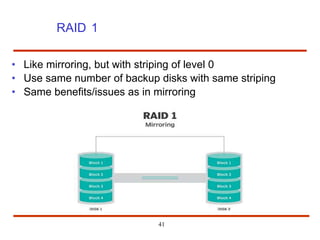

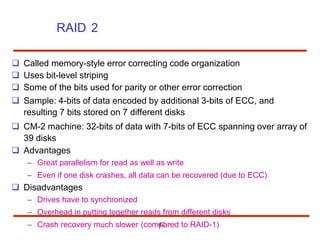

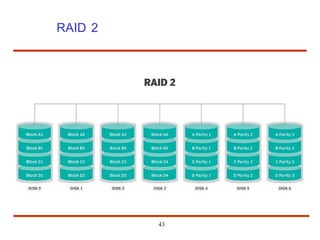

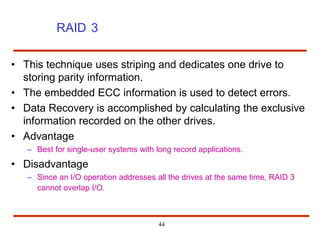

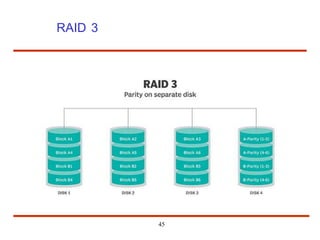

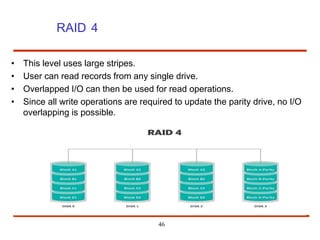

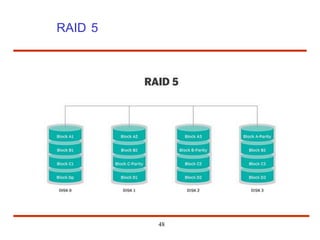

The document covers the structure and management of secondary storage in computer systems, emphasizing the role of disks in virtual memory and file storage. It outlines disk scheduling algorithms, disk formatting, partitioning, RAID systems, and the effect of bad blocks on disk reliability. It also examines swap-space management as part of memory management, comparing different approaches to allocating swap space.
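
The summary does not say which disk scheduling algorithms the slides cover. As a rough illustration of what such algorithms trade off, the sketch below compares two common ones, first-come first-served (FCFS) and shortest-seek-time-first (SSTF), by total head movement. The function names, the request queue, and the starting cylinder are all assumptions made for this example and are not taken from the document.

```python
def fcfs_seek_distance(start, requests):
    """Total head movement when requests are served in arrival order."""
    total, position = 0, start
    for cylinder in requests:
        total += abs(cylinder - position)
        position = cylinder
    return total


def sstf_seek_distance(start, requests):
    """Total head movement when the closest pending request is served next."""
    pending = list(requests)
    total, position = 0, start
    while pending:
        # Pick the pending cylinder nearest to the current head position.
        nearest = min(pending, key=lambda c: abs(c - position))
        total += abs(nearest - position)
        position = nearest
        pending.remove(nearest)
    return total


# Hypothetical request queue (cylinder numbers) and starting head position.
queue = [98, 183, 37, 122, 14, 124, 65, 67]
head = 53

print("FCFS:", fcfs_seek_distance(head, queue))  # FCFS: 640
print("SSTF:", sstf_seek_distance(head, queue))  # SSTF: 236
```

On this queue SSTF moves the head far less than FCFS, but because it always favors nearby requests it can starve distant ones under a steady load. That weakness is the usual motivation for sweep-based schedulers such as SCAN.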