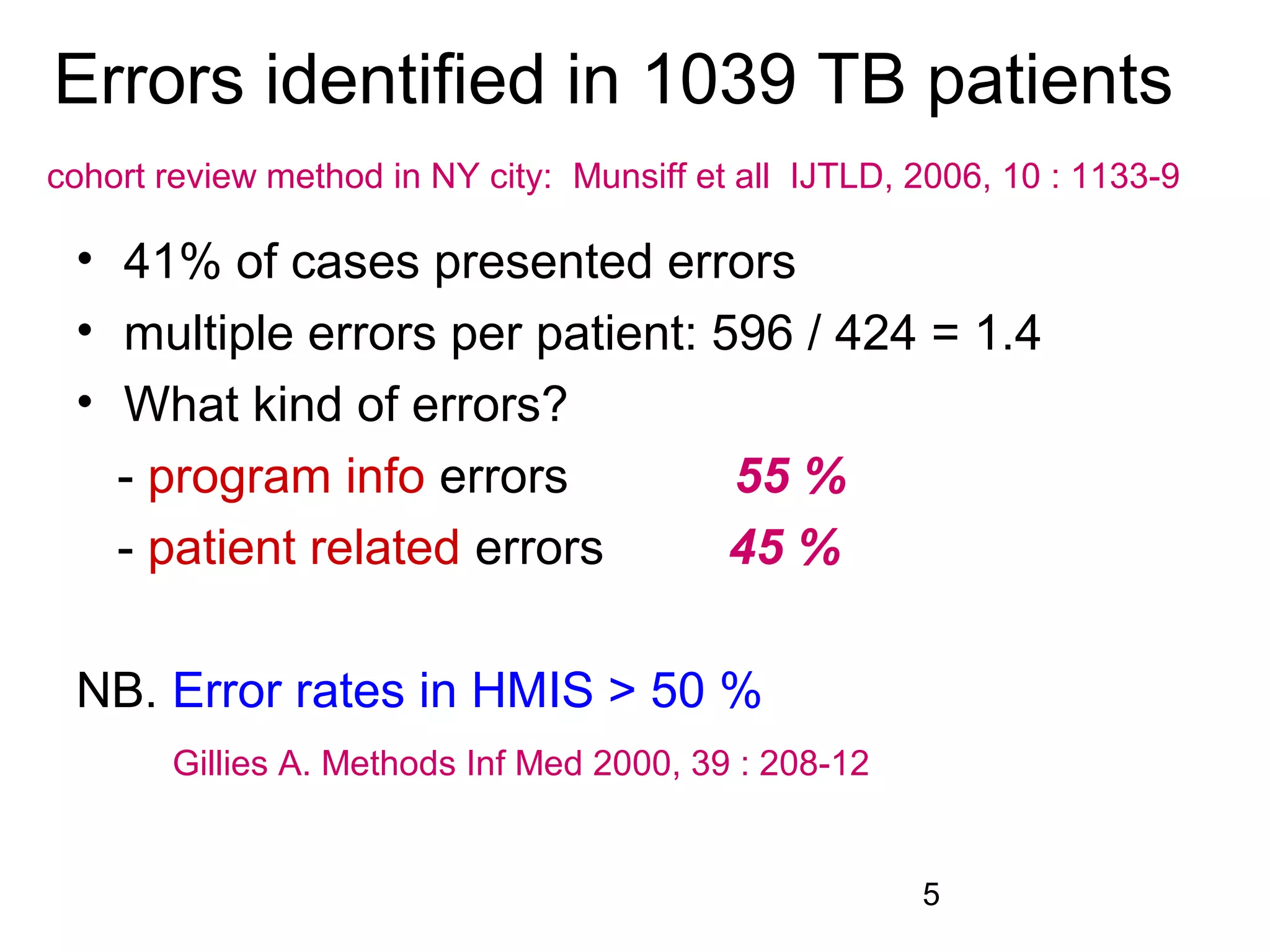

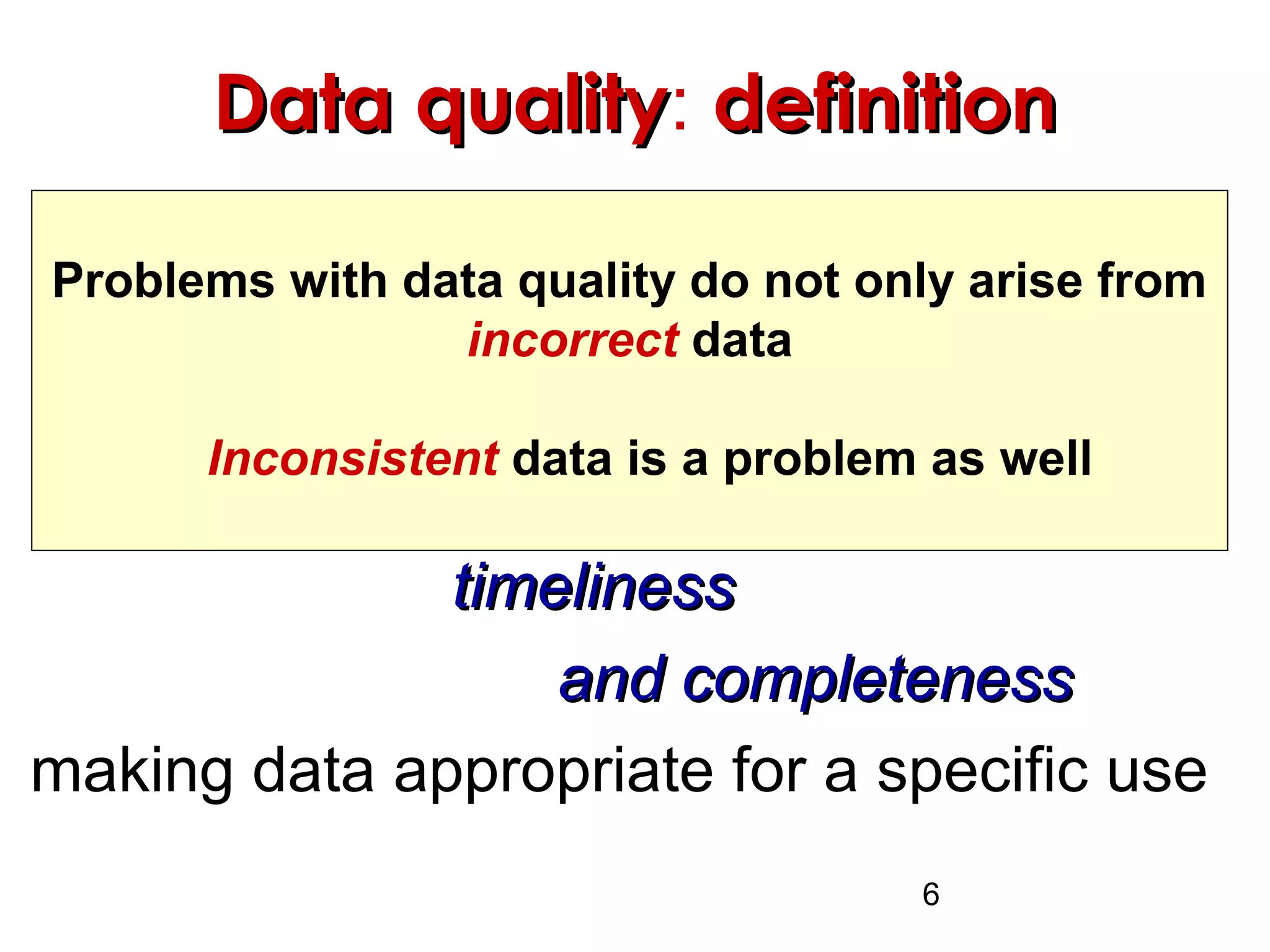

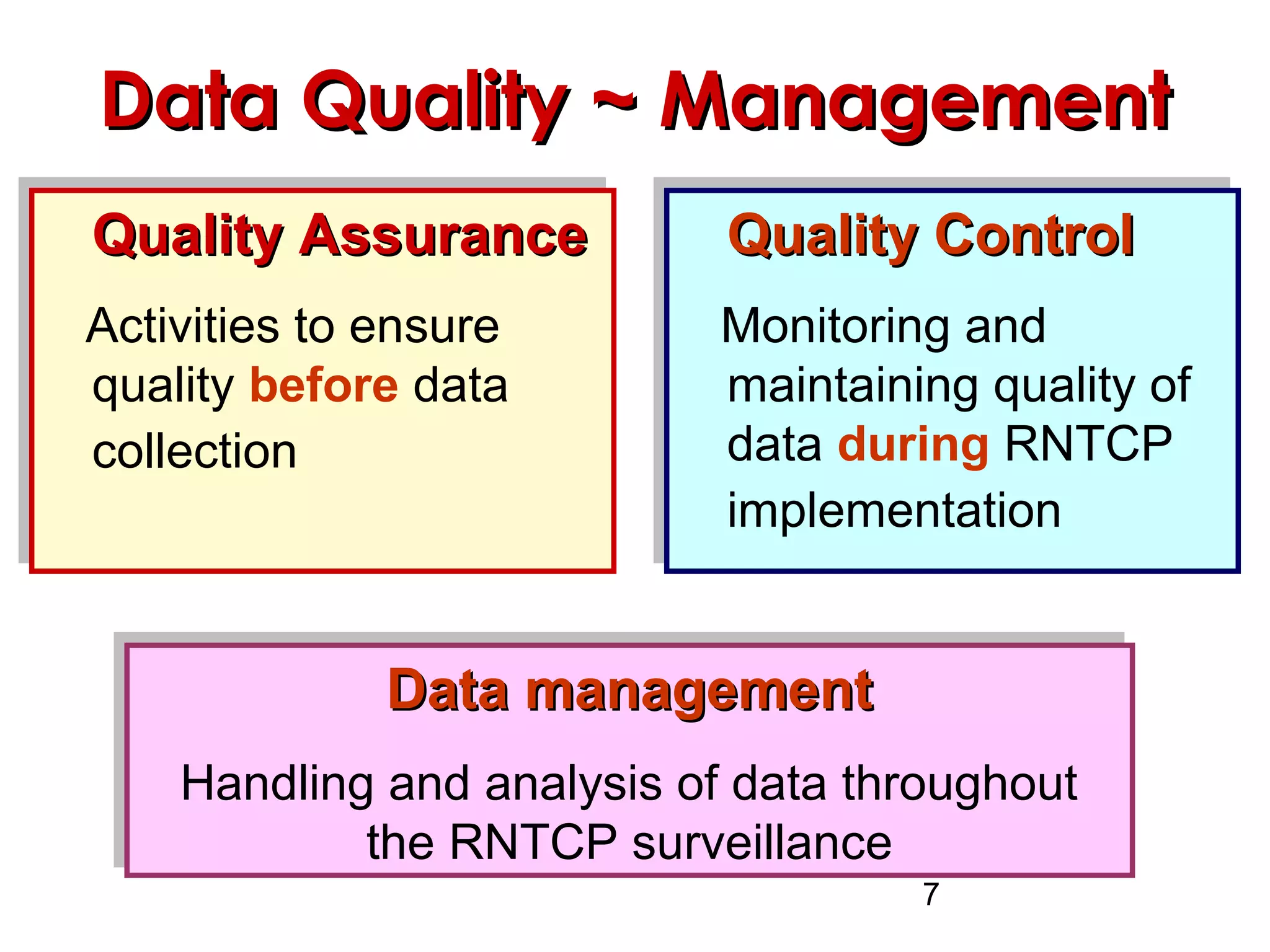

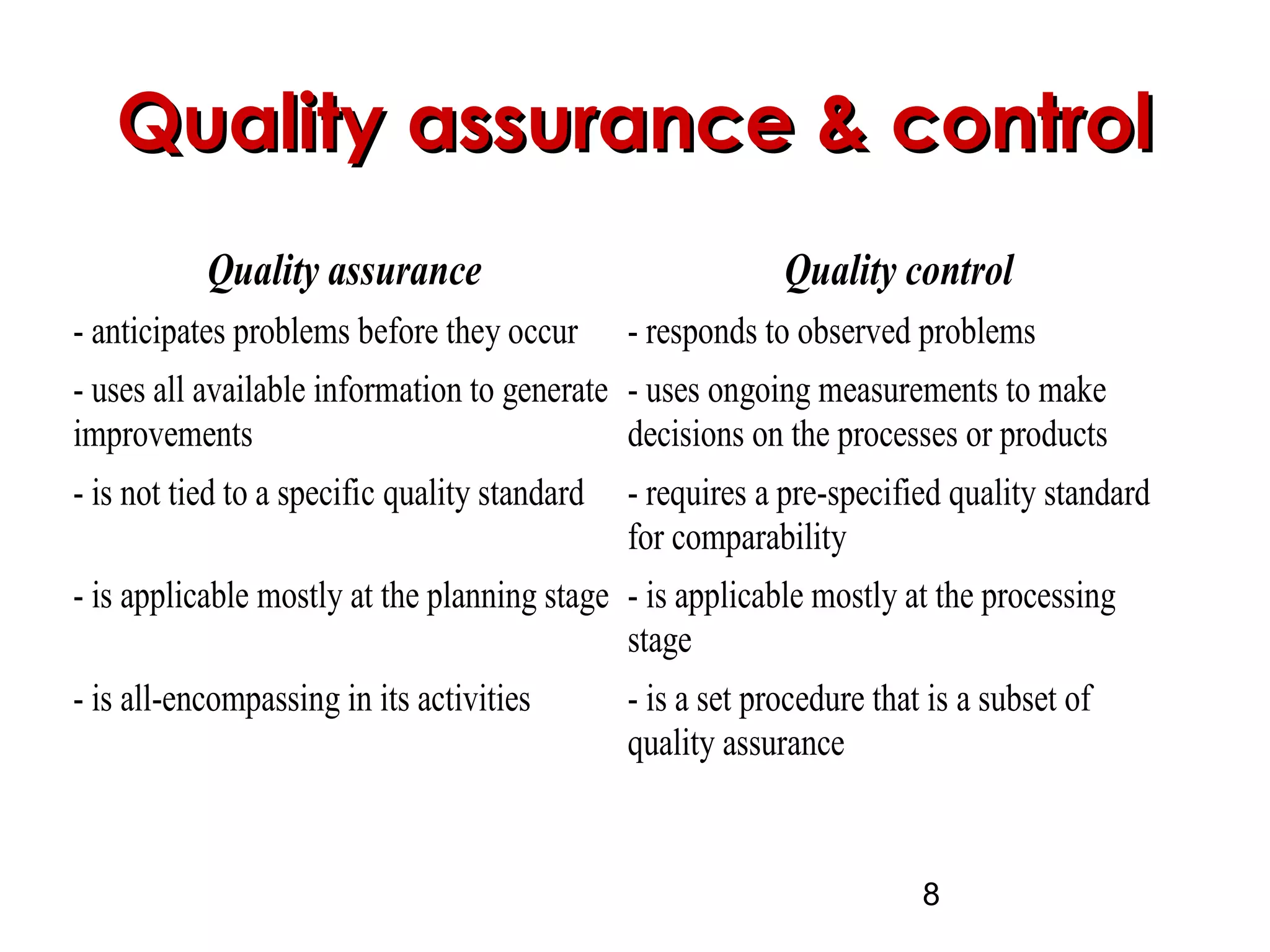

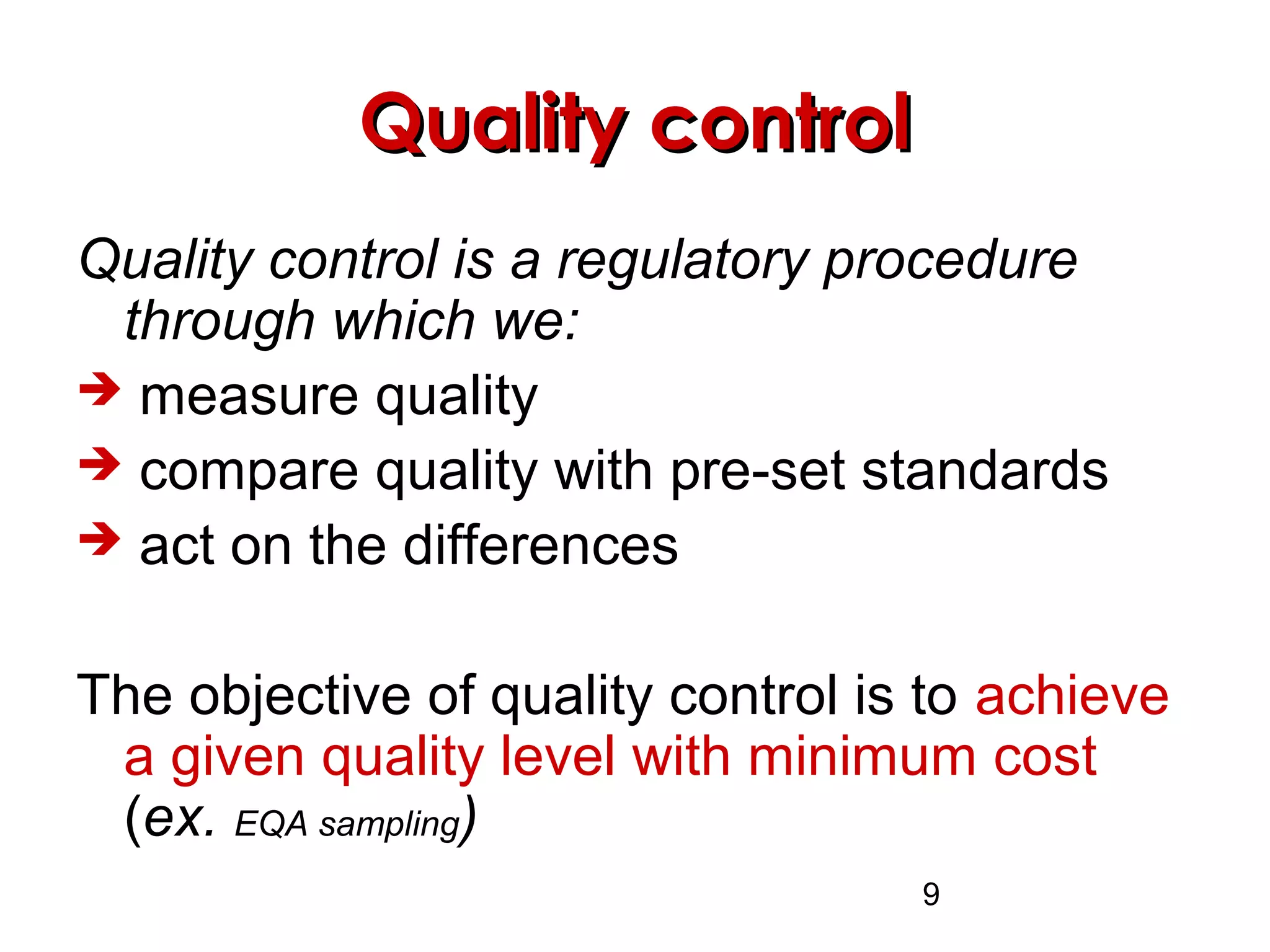

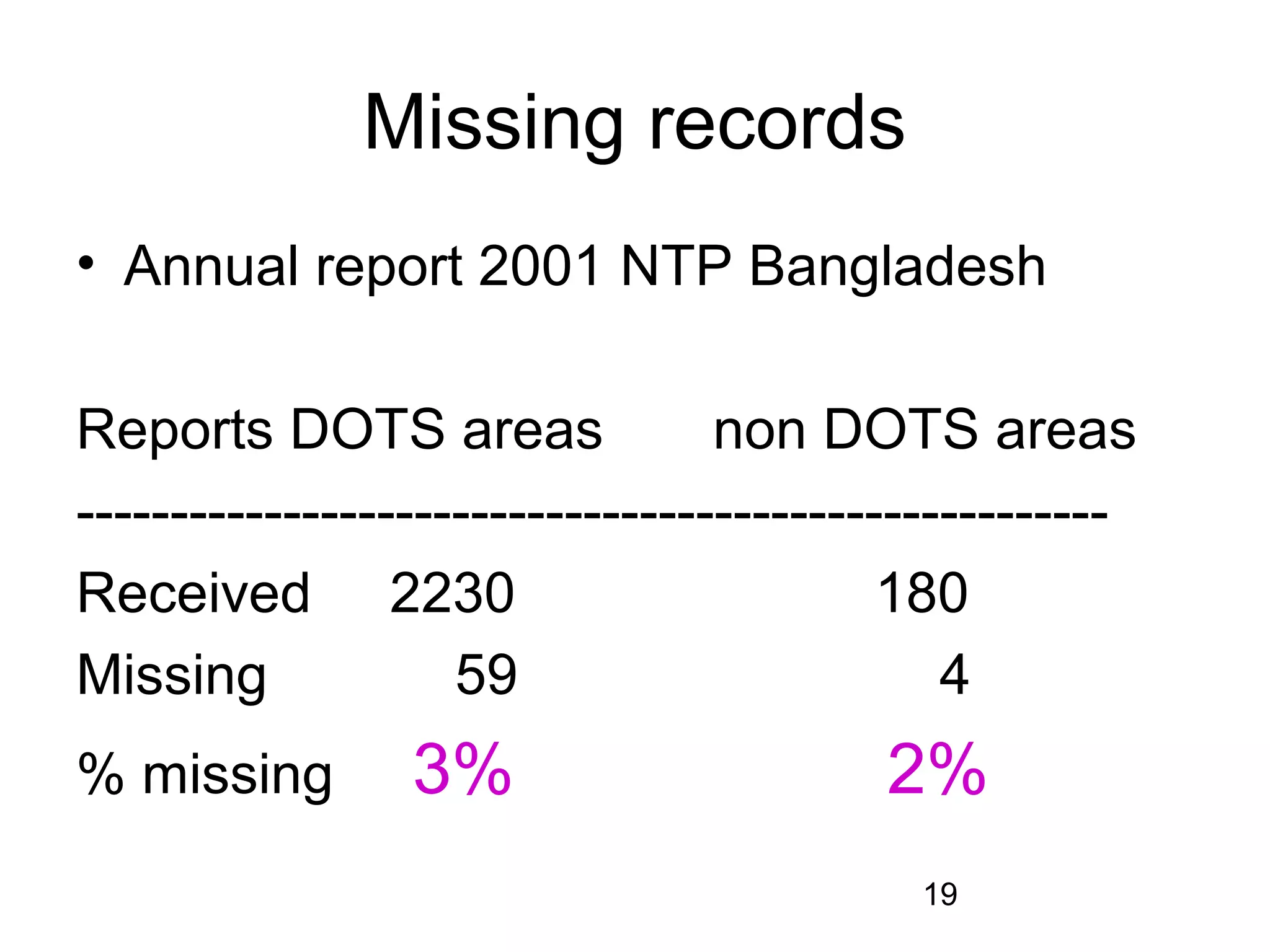

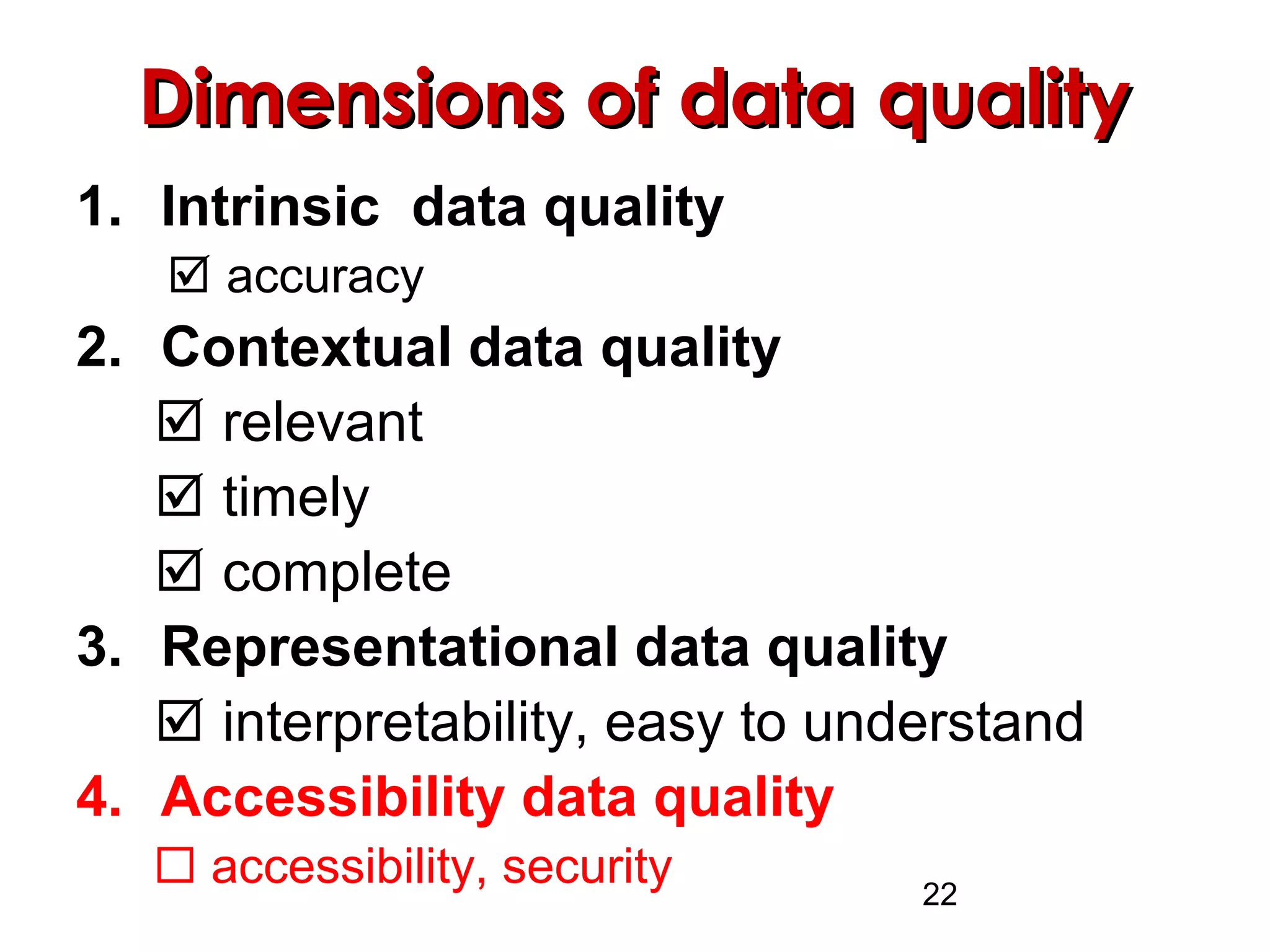

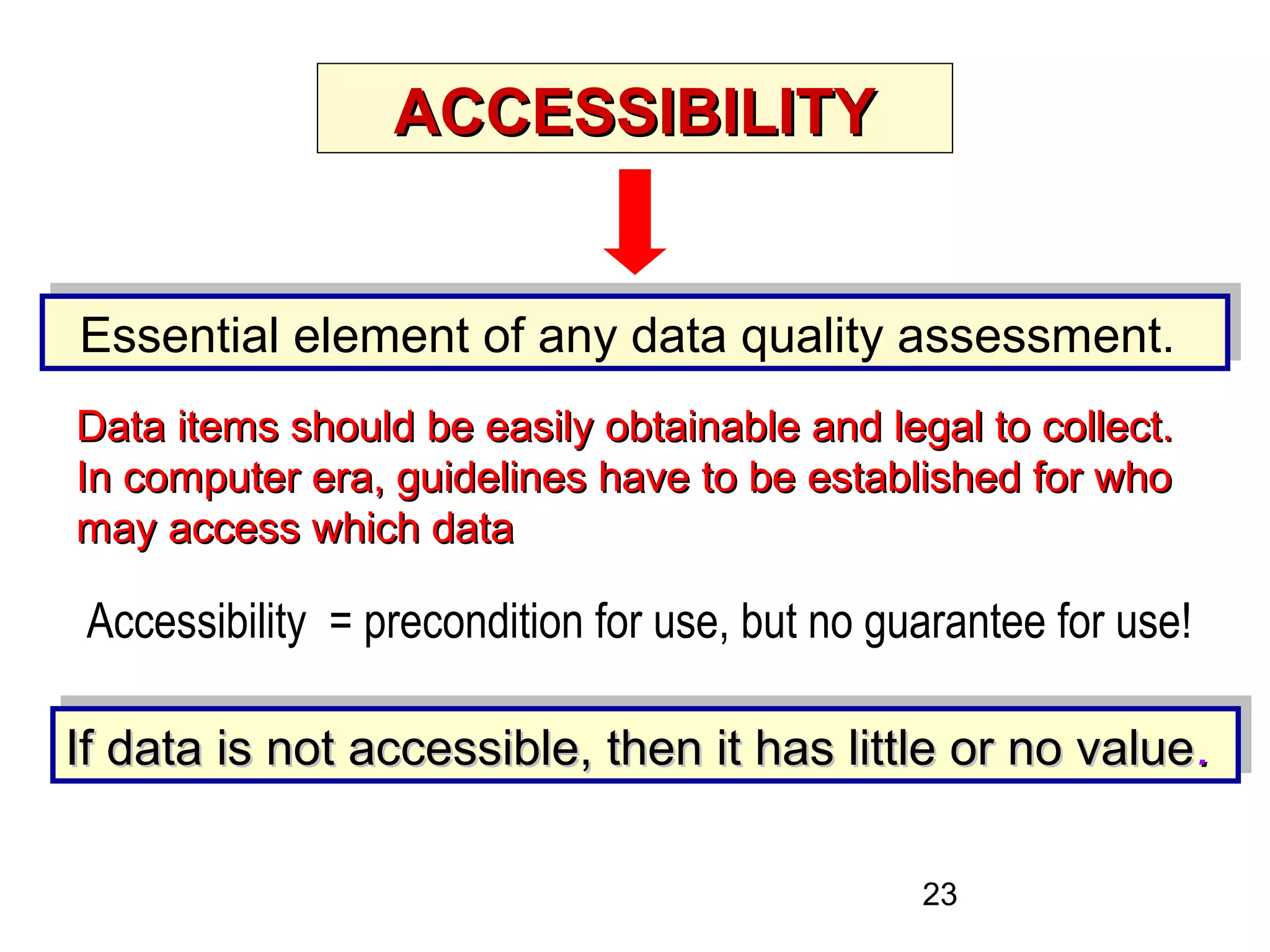

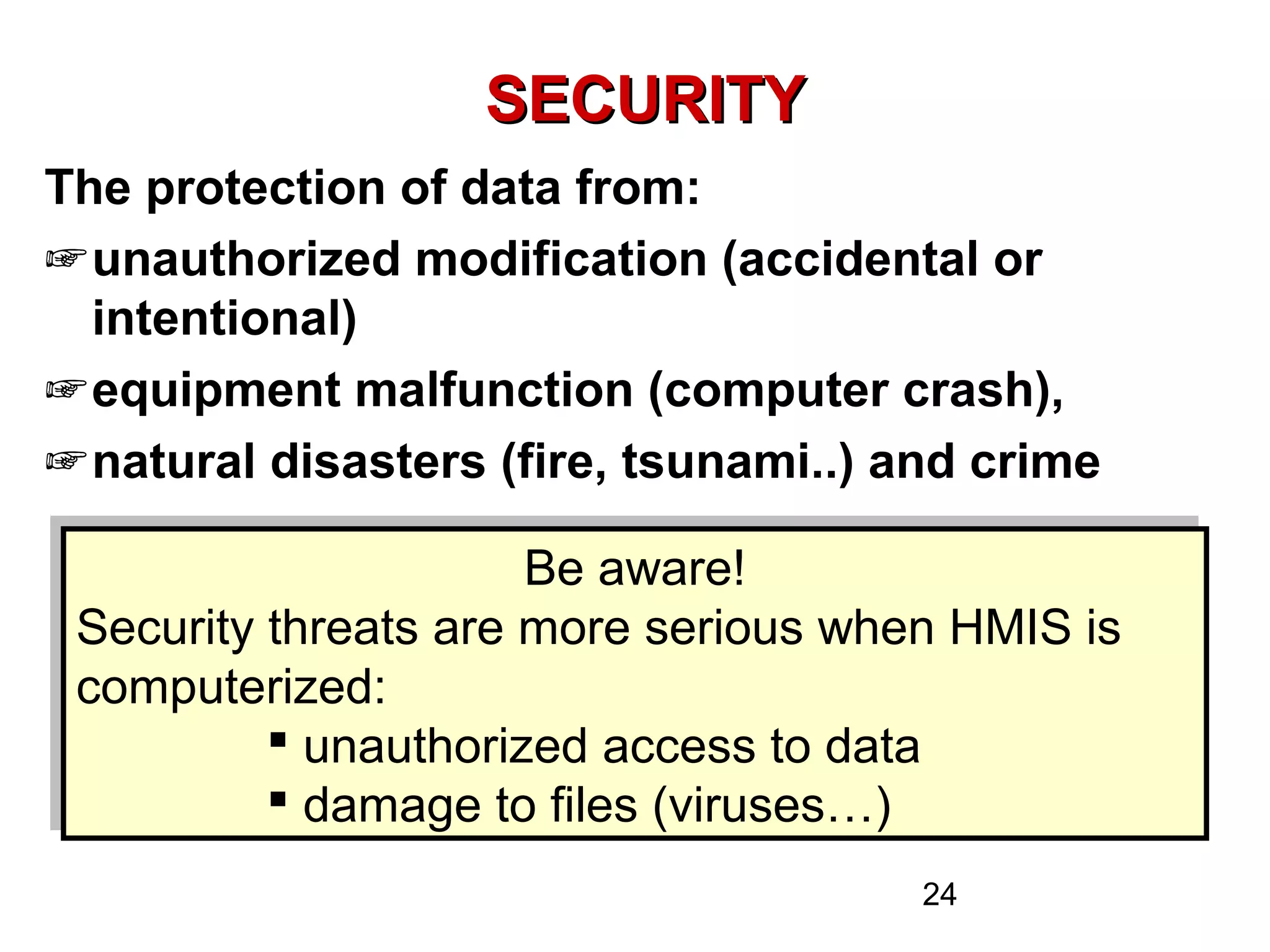

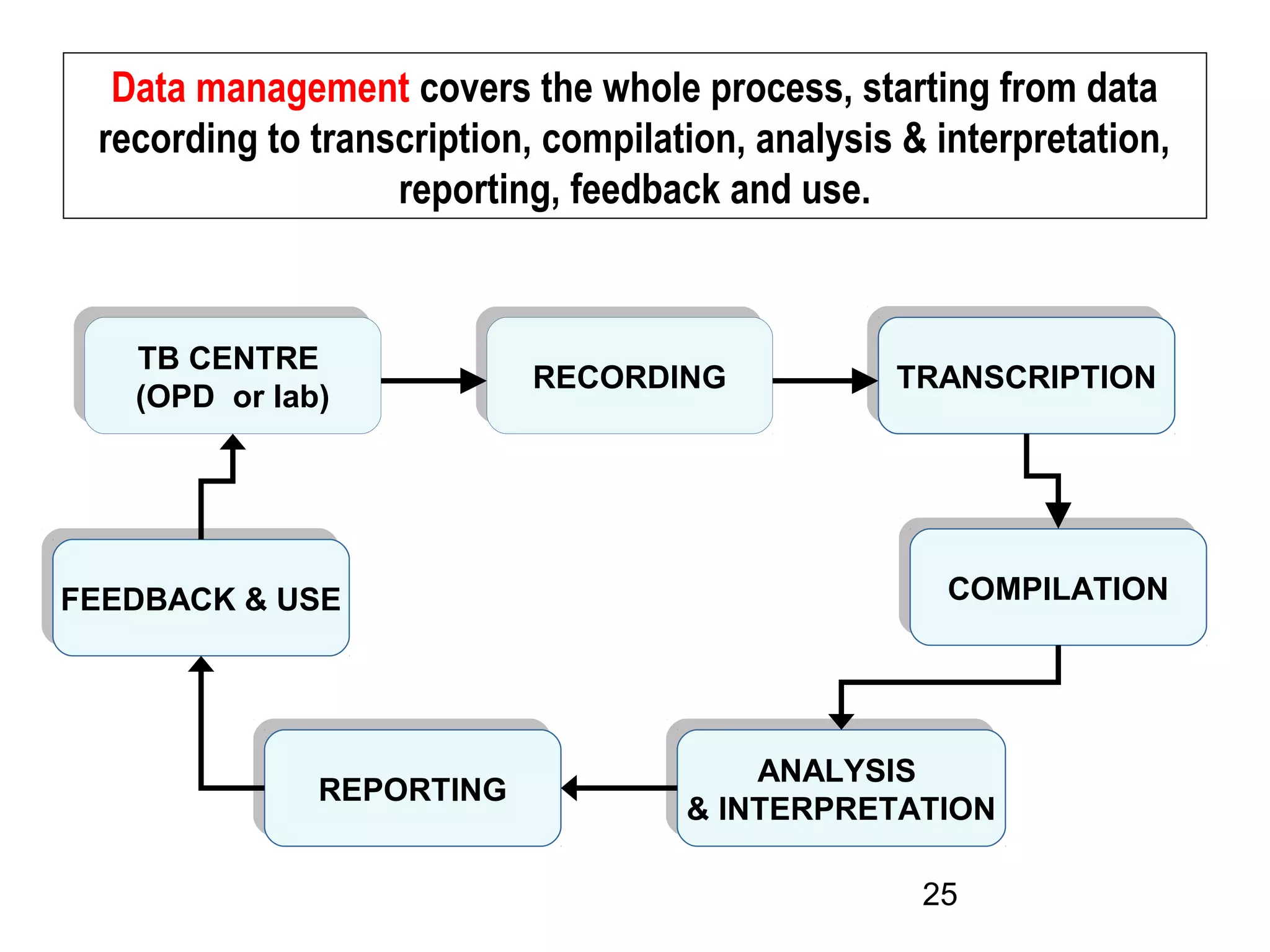

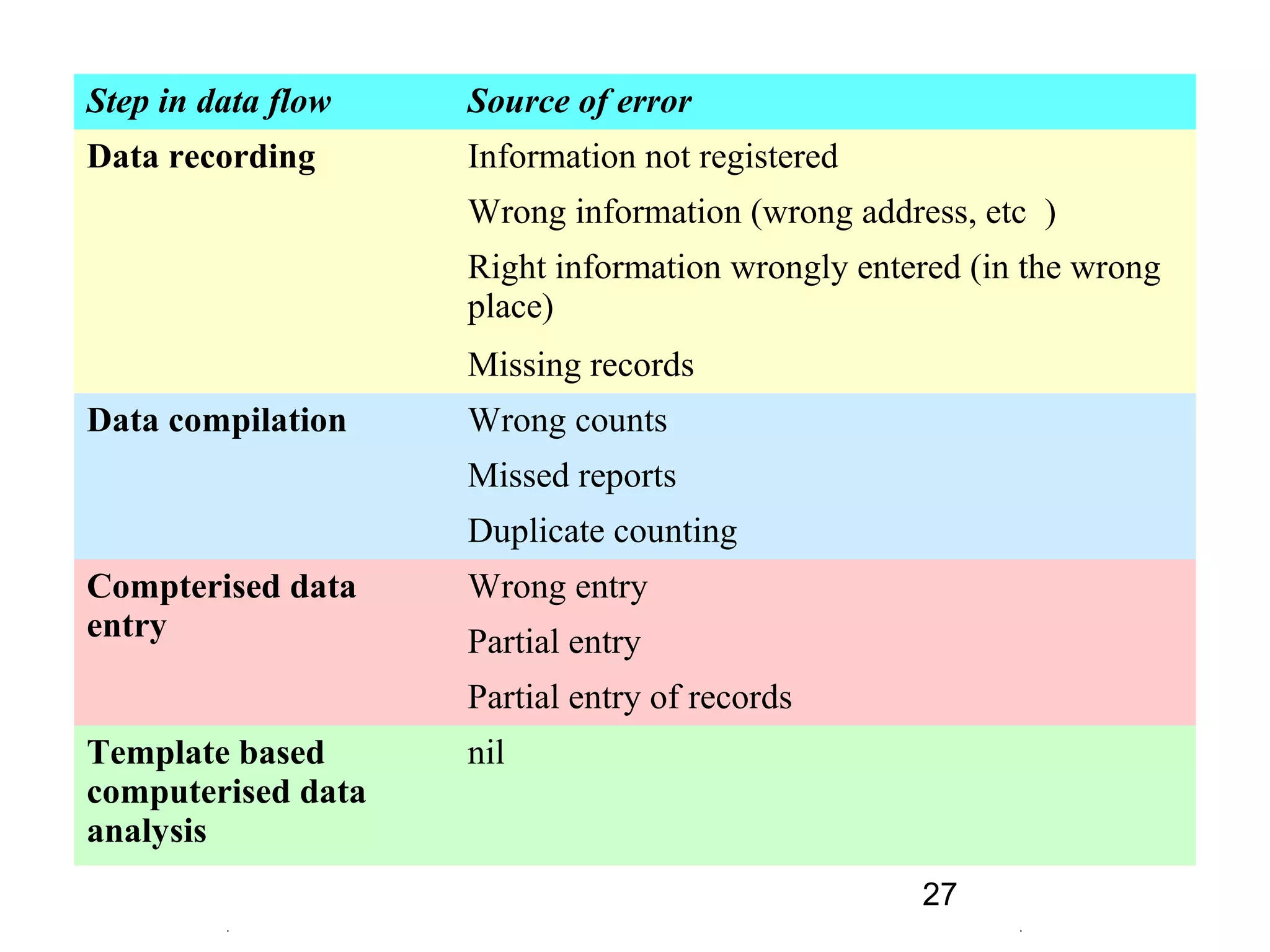

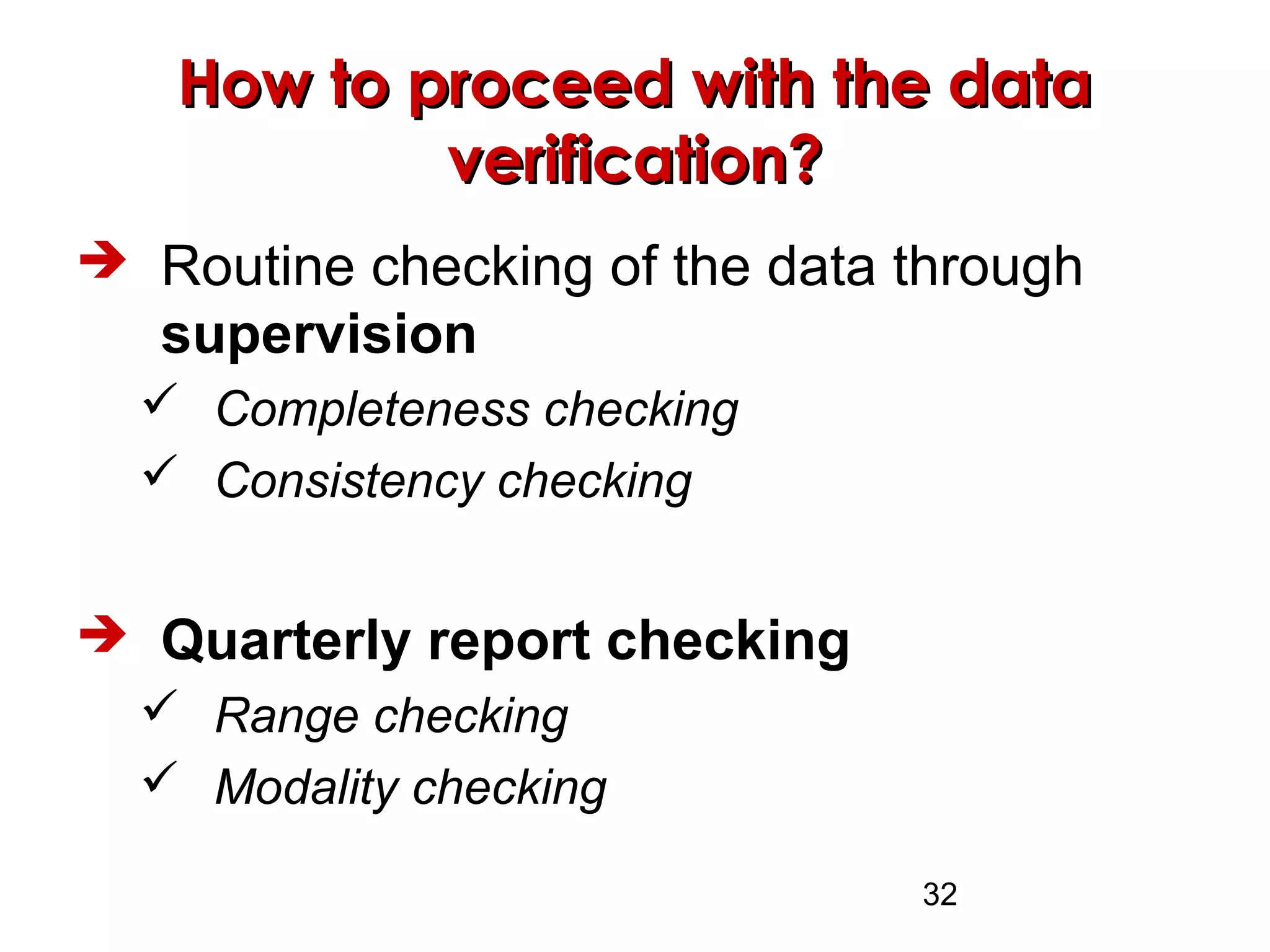

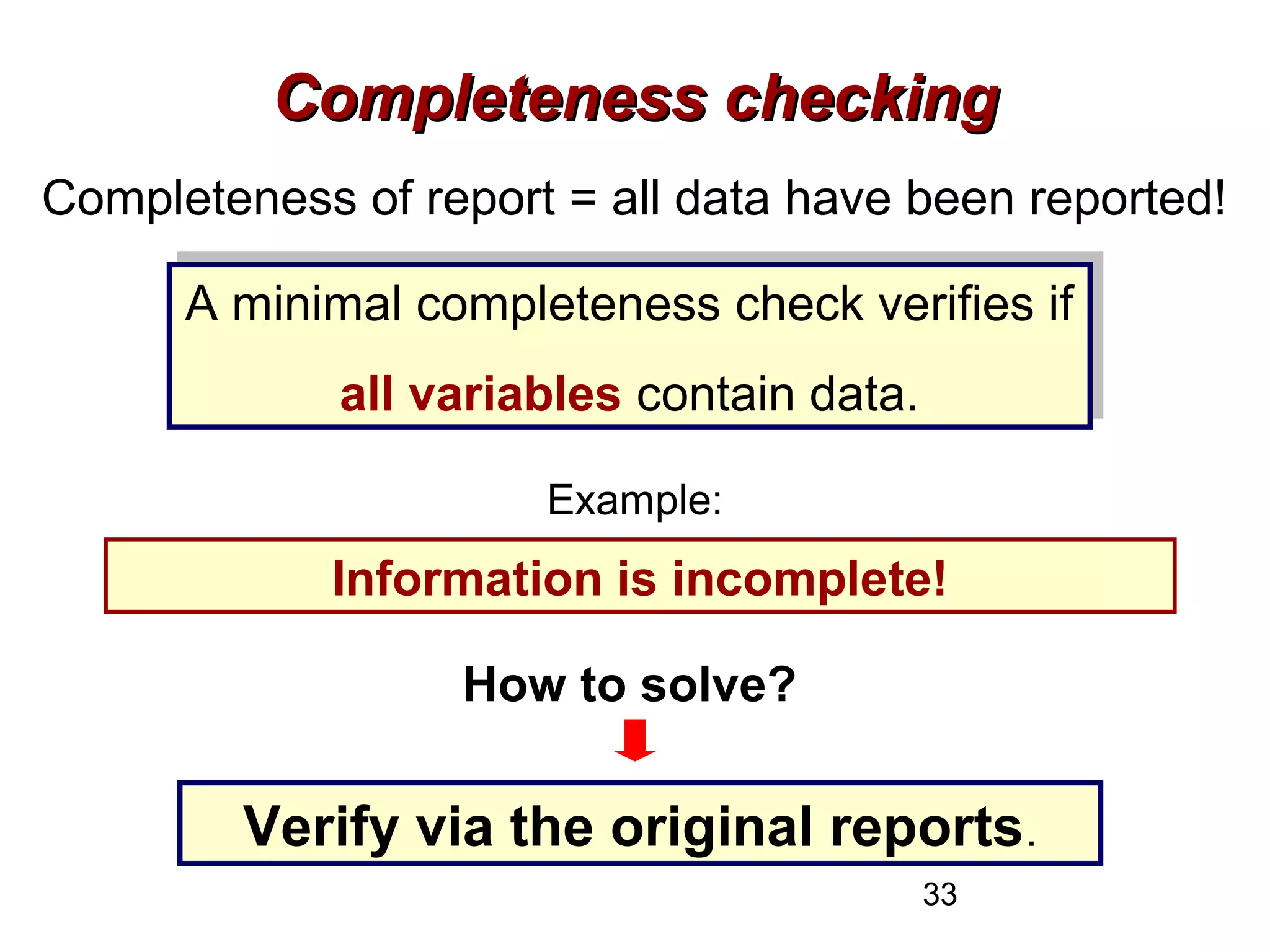

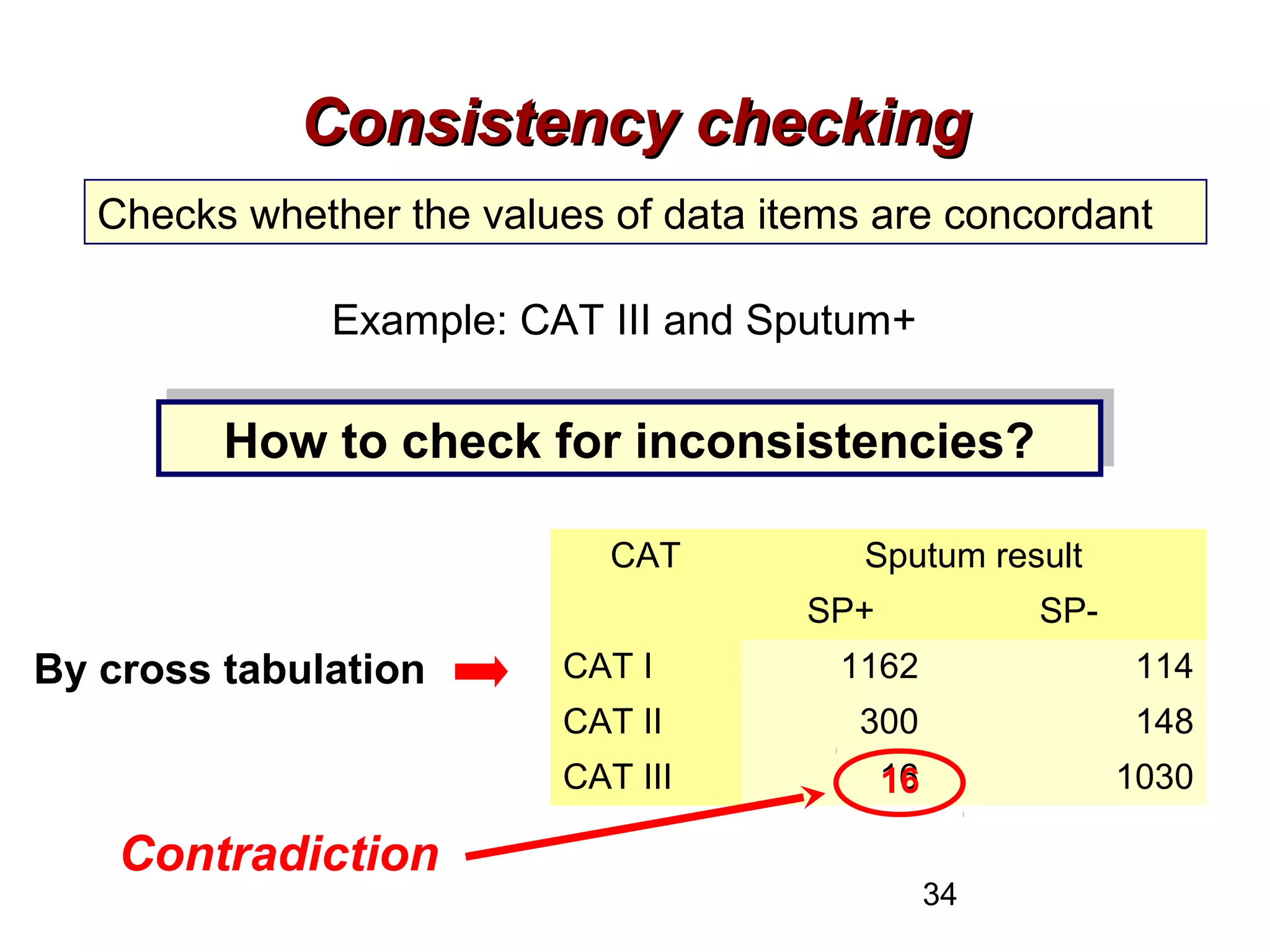

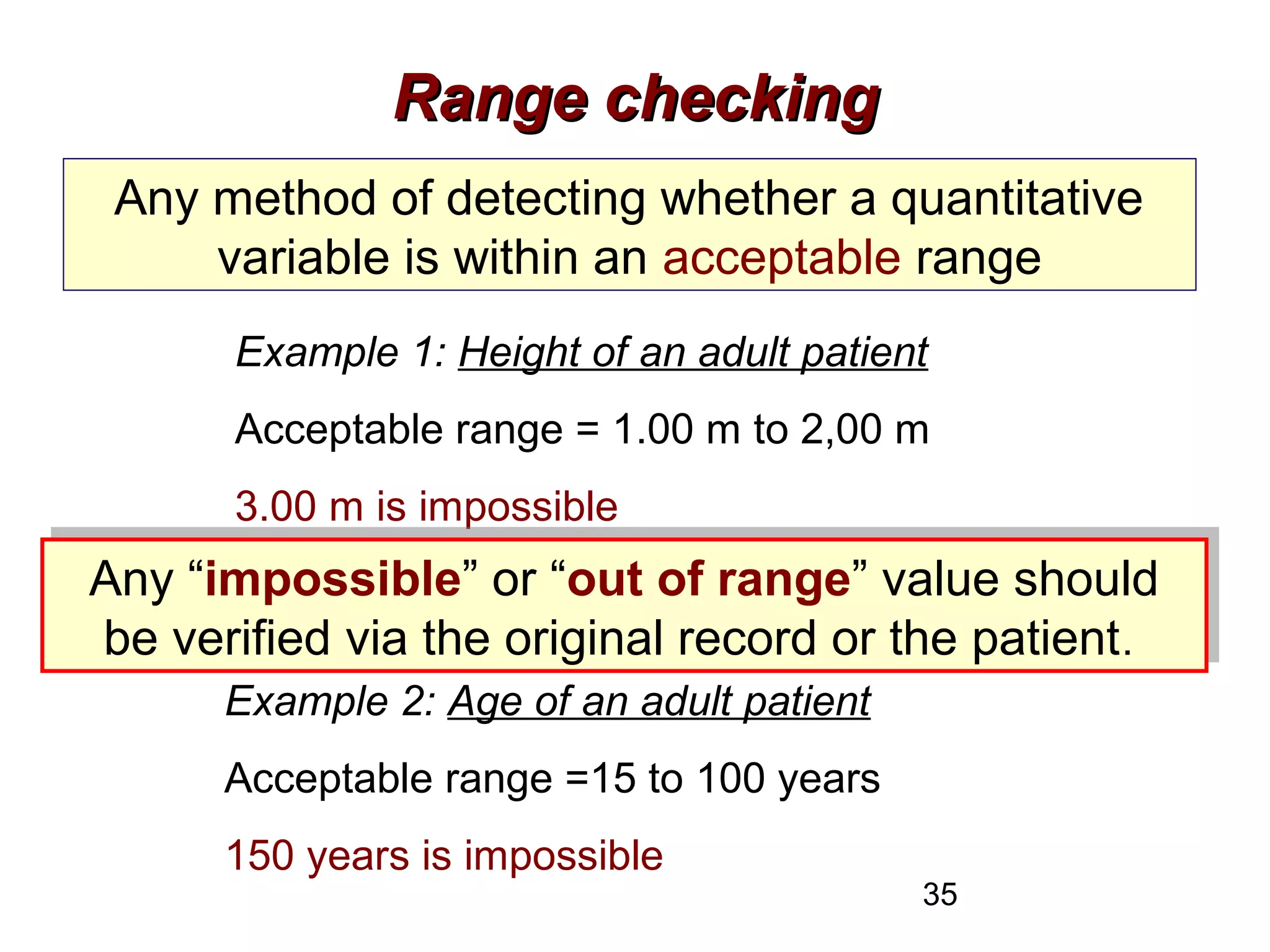

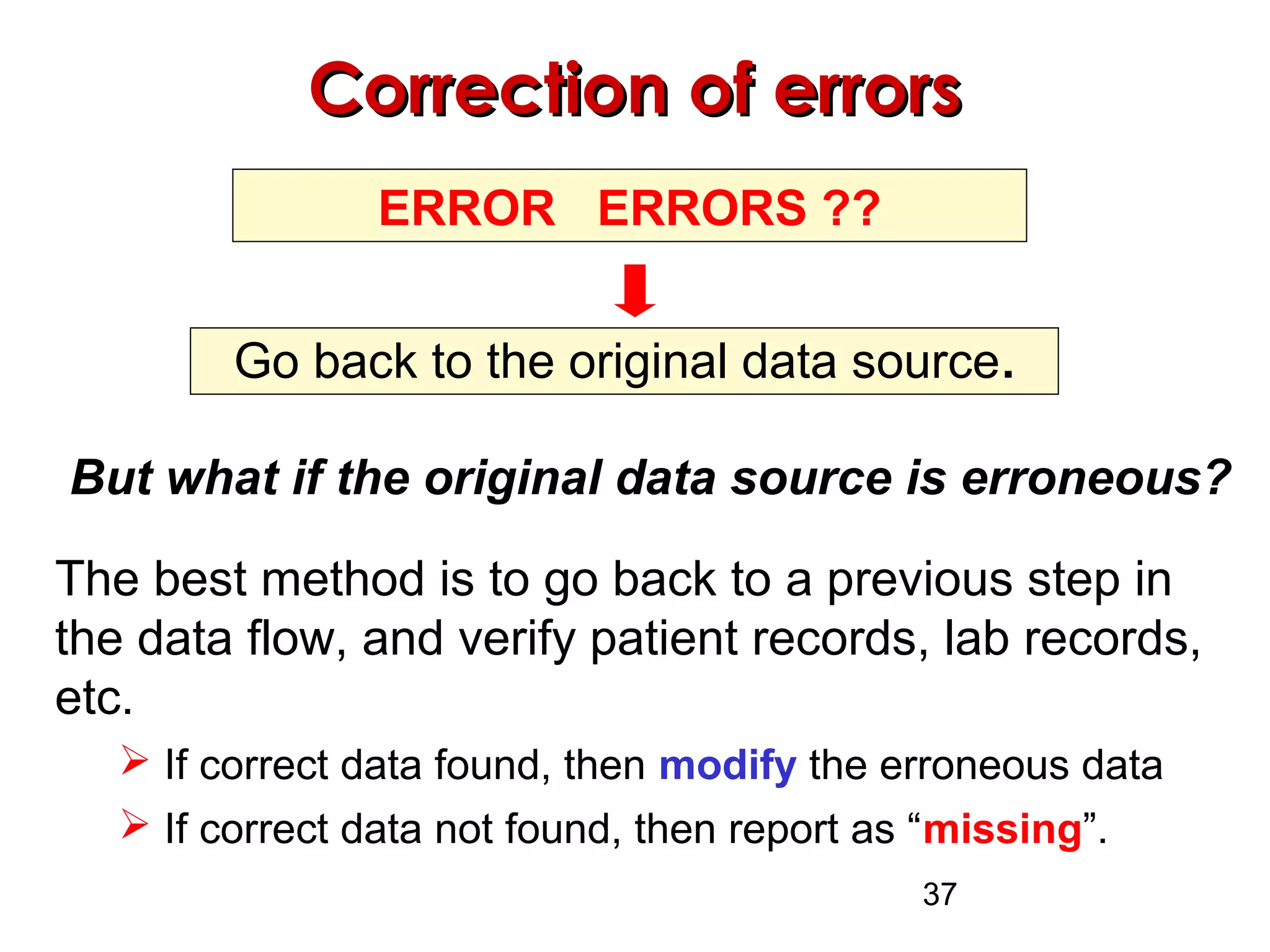

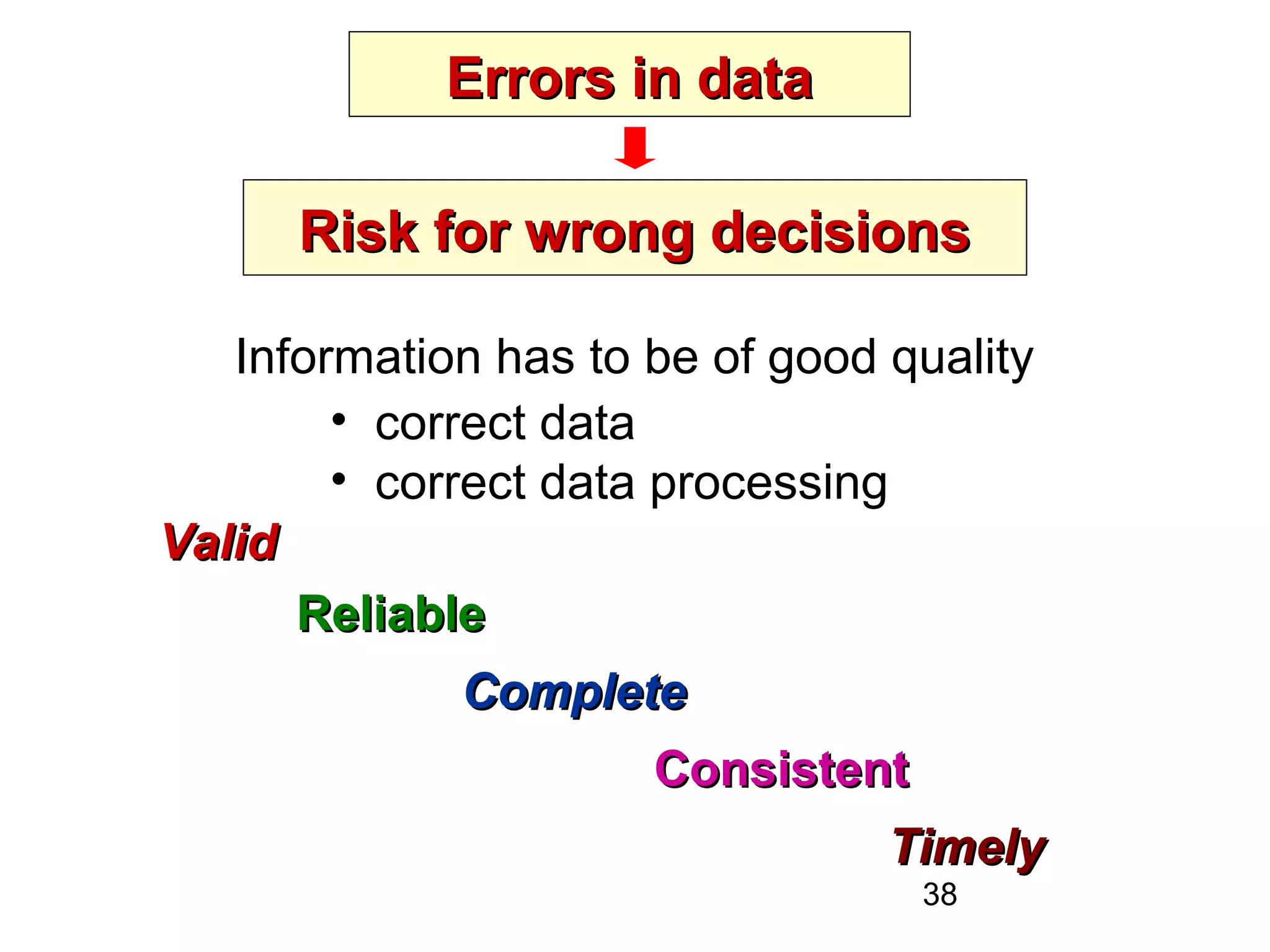

This document discusses the importance of data quality and identifies the types of errors that can occur in tuberculosis (TB) program data. It defines data quality and outlines its key dimensions: intrinsic (accuracy), contextual (relevance and timeliness), representational (interpretability), and accessibility. It identifies sources of error at each stage of data management, from recording to analysis. Strategies for ensuring data quality include training, supervision, computerization, and verification procedures such as routine data checking. Maintaining data quality is essential for accurate program management and performance assessment in TB control.
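Once records are computerized, part of routine data checking can be automated. The following Python sketch is a hypothetical illustration, not a procedure taken from this document. It assumes a `RegisterEntry` record with illustrative field names, an outcome list modelled on the WHO treatment outcome categories, and the function names `check_register` and `cohort_totals_match`. It flags duplicate case IDs, missing treatment start dates, unrecognized outcome codes, and outcome dates that precede the start of treatment. It also checks that a cohort's outcomes add up to the number of cases registered.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Assumed outcome codes, modelled on WHO treatment outcome categories.
ALLOWED_OUTCOMES = {
    "cured", "treatment completed", "treatment failed",
    "died", "lost to follow-up", "not evaluated",
}


@dataclass
class RegisterEntry:
    """One row of a hypothetical TB register (field names are illustrative)."""
    case_id: str
    treatment_start: Optional[date]
    outcome: Optional[str]        # None while the patient is still on treatment
    outcome_date: Optional[date]


def check_register(entries: list[RegisterEntry]) -> list[str]:
    """Return human-readable problems found in the register, in row order."""
    problems: list[str] = []
    seen: set[str] = set()
    for e in entries:
        # Duplicate check: each case should be registered only once.
        if e.case_id in seen:
            problems.append(f"{e.case_id}: duplicate case ID")
        seen.add(e.case_id)
        # Completeness check: the treatment start date must be recorded.
        if e.treatment_start is None:
            problems.append(f"{e.case_id}: missing treatment start date")
        # Validity check: a recorded outcome must be a known code.
        if e.outcome is not None and e.outcome not in ALLOWED_OUTCOMES:
            problems.append(f"{e.case_id}: unrecognized outcome {e.outcome!r}")
        # Consistency check: an outcome cannot be dated before treatment began.
        if (e.treatment_start is not None and e.outcome_date is not None
                and e.outcome_date < e.treatment_start):
            problems.append(f"{e.case_id}: outcome date precedes treatment start")
    return problems


def cohort_totals_match(registered: int, outcome_counts: dict[str, int]) -> bool:
    """Cohort check: every registered case should be assigned exactly one outcome."""
    return sum(outcome_counts.values()) == registered
```

For example, a register containing a mistyped outcome such as `"curd"`, a repeated case ID, or an outcome dated before treatment started would each produce one line in the list returned by `check_register`. Flagged records still need to be verified against the source documents (treatment cards, laboratory registers) before they are corrected.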