The document covers lesser-known Splunk commands and techniques for data analysis, including timechart evaluations, dynamic evaluations, and the contingency, map, and xyseries commands. It gives syntax, usage examples, and applications in web analytics, security, and resource monitoring. It also cautions that it contains forward-looking statements and that the product roadmap is subject to change. The author is an infrastructure analyst at The Hershey Company.

![Dynamic Eval: Subsearch](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-8-320.jpg)

- Not a search command
- NOTE: It's a Splunk hack, so it might not work in the future

`<your_search> | eval subsearch = if(host==[ <subsearch> | head 1 | rename host as query | fields query | eval query="\"".query."\"" ],"setting_1","setting_2")`

![contingency](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-9-320.jpg)

- Web analytics: browsers with versions
- Demographics: ages with locations or genders
- Security: usernames with proxy categories
- Compare categorical fields

`contingency [<contingency-option>]* <field> <field>`

Builds a contingency table for two fields.

A contingency table is a table showing the distribution (count) of one variable in rows and another in columns, and is used to study the association between the two variables.
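To make the definition of a contingency table concrete, here is a minimal Python sketch of the counting involved. It is not how Splunk implements `contingency`, and it ignores the command's options; the `browser` and `version` field names and the sample events are hypothetical.

```python
from collections import Counter

def contingency(events, row_field, col_field):
    """Count how often each (row value, column value) pair occurs."""
    counts = Counter((e[row_field], e[col_field]) for e in events)
    rows = sorted({r for r, _ in counts})
    cols = sorted({c for _, c in counts})
    # Counter returns 0 for pairs that never occurred together.
    return {r: {c: counts[(r, c)] for c in cols} for r in rows}

# Hypothetical web-analytics events: browsers with versions.
events = [
    {"browser": "Firefox", "version": "31"},
    {"browser": "Firefox", "version": "31"},
    {"browser": "Firefox", "version": "32"},
    {"browser": "Chrome", "version": "37"},
]
table = contingency(events, "browser", "version")
for browser, row in table.items():
    print(browser, row)
```

Each row of the result is one value of the first field, and each column is one value of the second, which is the layout the slide describes.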

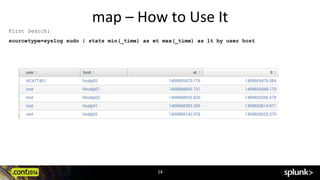

![map](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-13-320.jpg)

- Uses "tokens" ($field$) to pass values into the search
- Best with a very small input set and/or a very specific search; can take a long amount of time
- Map is a type of subsearch
- Is "time agnostic": time is not necessarily linear, and can be based off of the master search

`map (<searchoption>|<savedsplunkoption>) [maxsearches=int]`

Looping operator, performs a search over each search result.
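The looping and token substitution described for `map` can be pictured with a short Python sketch. This only builds the per-result search strings; it does not run anything against Splunk. The `user` field, the sample results, and the search template are hypothetical, and the default of 10 for `maxsearches` is an assumption about the command's default.

```python
import re

TOKEN = re.compile(r"\$(\w+)\$")

def map_searches(results, template, maxsearches=10):
    """Build one search string per input result by filling $field$ tokens."""
    searches = []
    for row in results[:maxsearches]:  # stop after maxsearches results
        filled = TOKEN.sub(lambda m: str(row[m.group(1)]), template)
        searches.append(filled)
    return searches

# Hypothetical results from the outer ("master") search.
results = [{"user": "alice"}, {"user": "bob"}, {"user": "carol"}]
template = 'search index=proxy user="$user$" | stats count by category'

for search in map_searches(results, template, maxsearches=2):
    print(search)
```

Because one search runs per input row, the cost grows with the size of the input set, which is why the slide recommends a very small input set or a very specific search.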

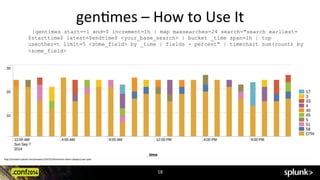

![gentimes](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-16-320.jpg)

- Useful for generating time buckets not present due to lack of events within those time buckets
- Must be the first command of a search (useful with map)
- "Supporting Search": no real use case for basic searching
- Can be used to show different "top" fields over a timechart!

`| gentimes start=<timestamp> [end=<timestamp>] [<increment>]`

Generates time range results. This command is useful in conjunction with the map command.
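The idea of generating time buckets that are missing because no events fell into them can be sketched in Python as follows. This is an illustration of the concept, not the behavior of `gentimes` itself; the function names, the one-hour increment, and the sample counts are hypothetical.

```python
from datetime import datetime, timedelta

def gentimes(start, end, increment):
    """Return the start time of every bucket in [start, end)."""
    buckets = []
    current = start
    while current < end:
        buckets.append(current)
        current += increment
    return buckets

def fill_gaps(counts, start, end, increment):
    """Pair every generated bucket with its count, using 0 for empty buckets."""
    return [(t, counts.get(t, 0)) for t in gentimes(start, end, increment)]

start = datetime(2014, 10, 1, 0)
end = datetime(2014, 10, 1, 4)
# Hypothetical hourly counts: only the 01:00 bucket saw any events.
counts = {datetime(2014, 10, 1, 1): 5}

for bucket, count in fill_gaps(counts, start, end, timedelta(hours=1)):
    print(bucket.isoformat(), count)
```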

![xyseries](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-23-320.jpg)

- Email flow [ `xyseries email_domain email_direction count` ]
- One to many relationships [ example Weather Icons ]
- Any data that has values INDEPENDENT of the field name
  - `host=myhost domain=splunk.com metric=kbps metric_value=100`
  - `xyseries domain metric metric_value`
- Works great for categorical field comparison

`xyseries [grouped=<bool>] <x-field> <y-name-field> <y-data-field>... [sep=<string>] [format=<string>]`

Converts results into a format suitable for graphing.
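The reshaping that `xyseries` performs can be pictured as a pivot from "long" rows to one "wide" row per x value. The Python sketch below illustrates that pivot for the email flow case; it is a simplified model that handles a single data field and ignores the command's options, and the sample rows are hypothetical.

```python
def xyseries(rows, x_field, y_name_field, y_data_field):
    """Pivot rows so each x value gets one column per y-name value."""
    table = {}
    for row in rows:
        series = table.setdefault(row[x_field], {})
        series[row[y_name_field]] = row[y_data_field]
    return table

# Hypothetical output of a "stats count by email_domain email_direction".
rows = [
    {"email_domain": "splunk.com", "email_direction": "inbound", "count": 12},
    {"email_domain": "splunk.com", "email_direction": "outbound", "count": 7},
    {"email_domain": "example.com", "email_direction": "inbound", "count": 3},
]
pivoted = xyseries(rows, "email_domain", "email_direction", "count")
for domain, series in pivoted.items():
    print(domain, series)
```

The values of the y-name field (`inbound`, `outbound`) become column names, which is what the slide means by values that are independent of the field name.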

![foreach](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-25-320.jpg)

- Rapidly perform evaluations and other commands on a series of fields
- Can help calculate Z scores (statistical inference comparison)
- Reduces the number of evals required

`foreach <wc-field> [fieldstr=<string>] [matchstr=<string>] [matchseg1=<string>] [matchseg2=<string>] [matchseg3=<string>] <subsearch>`

Runs a templated streaming subsearch for each field in a wildcarded field list.

Example. Equivalent to `... | eval foo="foo" | eval bar="bar" | eval baz="baz"`:

`... | foreach foo bar baz [eval <<FIELD>> = "<<FIELD>>"]`

![foreach: How to Use It](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-26-320.jpg)

`` `per60m_firewall_actions` ``

`| timechart span=60m sum(countaction) by action`

`| streamstats window=720 mean(*) as MEAN* stdev(*) as STDEV*`

`| foreach * [eval Z_<<FIELD>> = ((<<FIELD>>-MEAN<<MATCHSTR>>) / STDEV<<MATCHSTR>>)] | fields _time Z*`

![foreach: How to Use It](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-27-320.jpg)

`` `per60m_firewall_actions` ``

`| timechart span=60m sum(countaction) by action`

`| streamstats window=720 mean(*) as MEAN* stdev(*) as STDEV*`

`| foreach * [eval Z_<<FIELD>> = ((<<FIELD>>-MEAN<<MATCHSTR>>) / STDEV<<MATCHSTR>>)] | eval Z_PROB=3.2 | fields _time Z*`
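The search on these slides computes, for every field, a Z score against a rolling mean and standard deviation. A minimal Python sketch of that arithmetic follows. It assumes the rolling window includes the current value and that the standard deviation is the sample standard deviation; the `blocked` series is hypothetical, and the window is shortened from 720 to 4 to keep the example small.

```python
from statistics import mean, stdev

def z_scores(series_by_field, window=720):
    """For each field, compute (value - rolling mean) / rolling stdev."""
    result = {}
    for field, values in series_by_field.items():
        scores = []
        for i, value in enumerate(values):
            recent = values[max(0, i - window + 1): i + 1]
            # A Z score is undefined with fewer than two points or no spread.
            if len(recent) < 2 or stdev(recent) == 0:
                scores.append(None)
            else:
                scores.append((value - mean(recent)) / stdev(recent))
        result["Z_" + field] = scores  # one output field per input field
    return result

# Hypothetical hourly counts for one firewall action.
scores = z_scores({"blocked": [10, 10, 10, 40]}, window=4)
print(scores)
```

The loop over `series_by_field` plays the role of `foreach *`: one formula is written once and applied to every field, instead of one `eval` per field.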

![cluster](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-28-320.jpg)

- Find common and/or rare events
- Great for "WAG" searching
- Finds anomalies (outliers) in your web logs, security logs, etc. by checking for cluster_counts
- Find common errors in event logs

`cluster [slc-option]*`

Cluster similar events together.

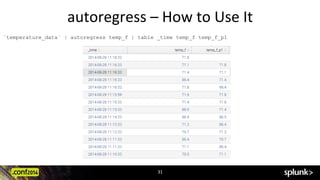

![autoregress](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-30-320.jpg)

- Allows advanced statistical calculations based on previous values
- Moving averages of numerical fields
  - Network bandwidth trending: kbps, latency, duration of connections
  - Web analytics trending: number of visits, duration of visits, average download size
  - Malicious traffic trending: excessive connection failures

`autoregress field [AS <newfield>] [p=<p_start>[-<p_end>]]`

Sets up data for calculating the moving average.

A Moving Average is a succession of averages calculated from successive events (typically of constant size and overlapping) of a series of values.
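As an illustration of how lagged values set up a moving average, here is a small Python sketch. It copies the previous values of a series into extra fields, then averages the current and lagged values. The `_p1`, `_p2` field naming follows the pattern I understand `autoregress` to use by default, and the `value` field and sample readings are hypothetical.

```python
def autoregress(values, p_start, p_end):
    """Attach the values from p_start..p_end events back to each event."""
    rows = []
    for i, value in enumerate(values):
        row = {"value": value}
        for lag in range(p_start, p_end + 1):
            # None marks events that have no value that far back.
            row[f"value_p{lag}"] = values[i - lag] if i >= lag else None
        rows.append(row)
    return rows

def moving_average(row):
    """Average the current and lagged values; None until the window is full."""
    fields = list(row.values())
    if any(v is None for v in fields):
        return None
    return sum(fields) / len(fields)

# Hypothetical kbps readings, oldest first.
rows = autoregress([100, 200, 300, 400], p_start=1, p_end=2)
for row in rows:
    print(row, moving_average(row))
```

Each average covers a window of constant size (three values here) that overlaps the previous window, matching the definition on the slide.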

![CLI Commands](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-34-320.jpg)

- `$SPLUNK_HOME/bin/splunk cmd pcregextest`
  - Useful for testing modular regular expressions for extractions

`splunk cmd pcregextest mregex="[[ip:src_]] [[ip:dst_]]" ip="(?<ip>\d+[[dotnum]]{3})" dotnum="\.\d+" test_str="1.1.1.1 2.2.2.2"`

Original Pattern: `'[[ip:src_]] [[ip:dst_]]'`

Expanded Pattern: `'(?<src_ip>\d+(?:\.\d+){3}) (?<dst_ip>\d+(?:\.\d+){3})'`

Regex compiled successfully. Capture group count = 2. Named capturing groups = 2.

SUCCESS - match against: '1.1.1.1 2.2.2.2'

`#### Capturing group data #####`

| Group | Name | Value |
|-------|--------|---------|
| 1 | src_ip | 1.1.1.1 |
| 2 | dst_ip | 2.2.2.2 |
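The expansion step that pcregextest reports can be approximated in Python to show how a modular regular expression is assembled from named fragments. This is a rough sketch, not Splunk's implementation: the rule for when to wrap a fragment in a non-capturing group is a heuristic chosen to reproduce the expanded pattern above, and the conversion to `(?P<name>...)` is only needed because Python's `re` module uses that syntax for named groups.

```python
import re

REFERENCE = re.compile(r"\[\[(\w+)(?::(\w*))?\]\]")  # [[name]] or [[name:prefix]]
NAMED_GROUP = re.compile(r"\(\?<(\w+)>")              # (?<name>

def expand(pattern, fragments):
    """Recursively replace [[name:prefix]] references with their regex."""
    def substitute(match):
        name, prefix = match.group(1), match.group(2) or ""
        body = expand(fragments[name], fragments)
        # Prefix every named group inside the referenced fragment.
        body = NAMED_GROUP.sub(lambda g: "(?<" + prefix + g.group(1) + ">", body)
        # Heuristic (assumption): wrap fragments that are not already a group.
        if not (body.startswith("(") and body.endswith(")")):
            body = "(?:" + body + ")"
        return body
    return REFERENCE.sub(substitute, pattern)

fragments = {"ip": r"(?<ip>\d+[[dotnum]]{3})", "dotnum": r"\.\d+"}
expanded = expand("[[ip:src_]] [[ip:dst_]]", fragments)
print(expanded)

# Convert to Python's named-group syntax before matching.
python_pattern = NAMED_GROUP.sub(r"(?P<\1>", expanded)
groups = re.match(python_pattern, "1.1.1.1 2.2.2.2").groupdict()
print(groups)
```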

![CLI Commands](https://image.slidesharecdn.com/conf2014kylesmiththehersheycompanyusingtrack-150522171150-lva1-app6892/85/Splunk-conf2014-Lesser-Known-Commands-in-Splunk-Search-Processing-Language-SPL-35-320.jpg)

- `$SPLUNK_HOME/bin/splunk cmd btool`
  - Btool allows you to inspect configurations and what is actually being applied to your sourcetypes
- `splunk cmd btool --debug props list wunderground | grep -v "system/default"`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf [wunderground]`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf KV_MODE = json`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf MAX_EVENTS = 100000`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf MAX_TIMESTAMP_LOOKAHEAD = 30`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf REPORT-extjson = wunder_ext_json`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf SHOULD_LINEMERGE = true`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf TIME_PREFIX = observation_epoch`

`/opt/splunk/etc/apps/TA-wunderground/default/props.conf TRUNCATE = 1000000`