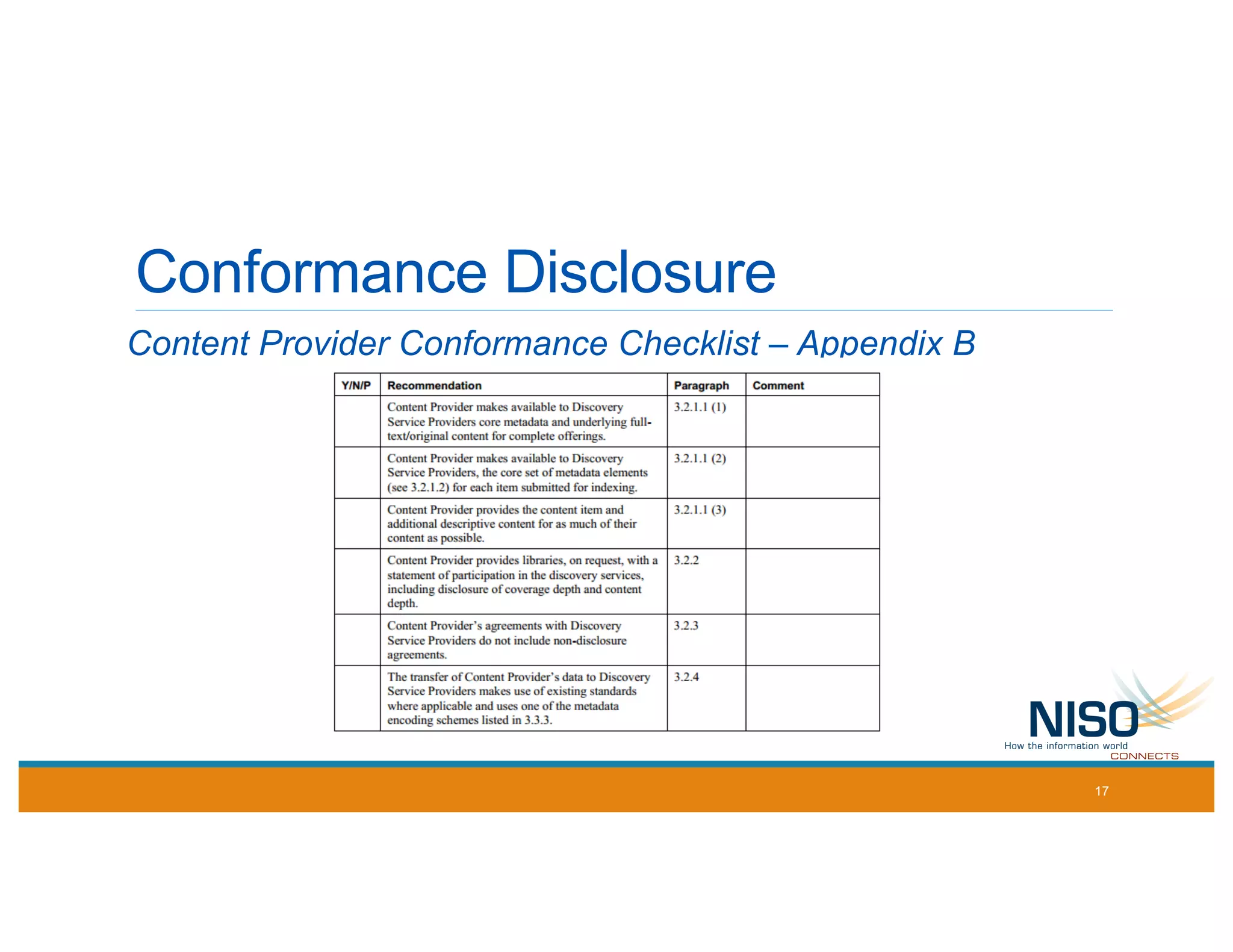

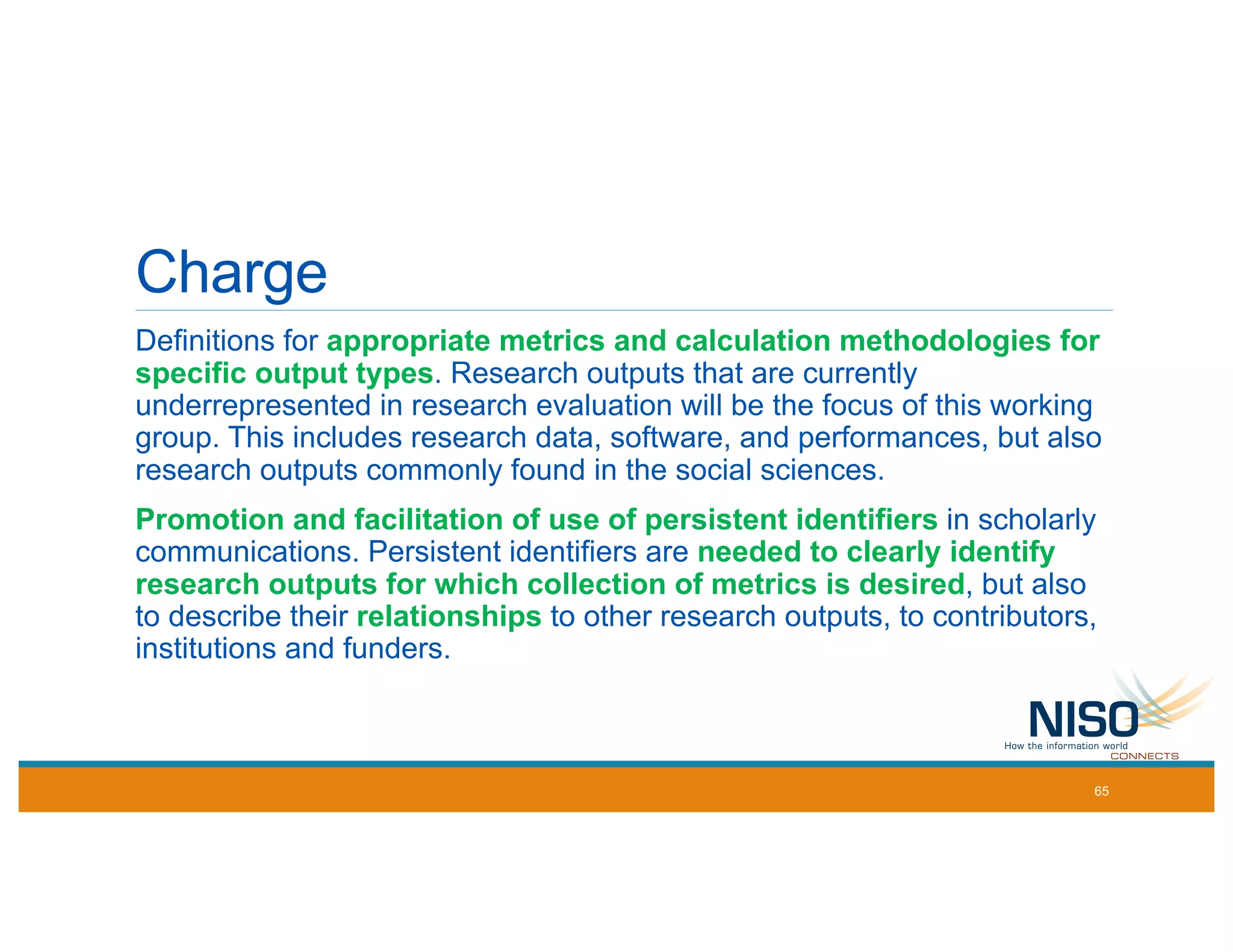

The document outlines the mission and activities of the National Information Standards Organization (NISO), which develops and maintains standards for information, documentation, and media. It covers NISO's topic committees, working groups, and their projects. It emphasizes compliance and the role of alternative assessment metrics (altmetrics) in scholarly communication. It also highlights the challenges stakeholders face, the barriers to adopting standards, and the need for collaboration between content providers and library services.

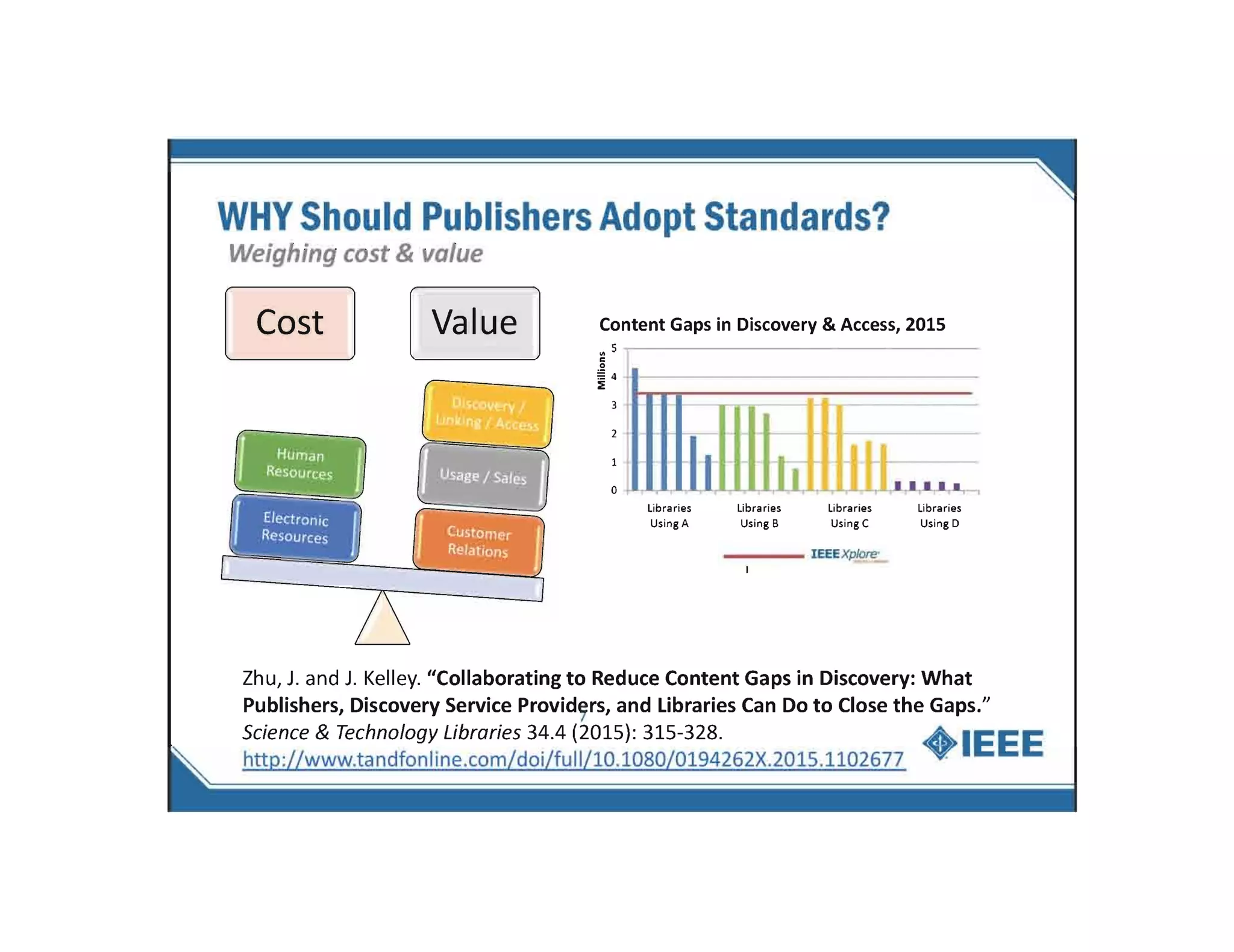

![Costs for Publishers/Vendors

NISO publications are free – but other barriers exist

Time to understand

Time to talk to others in the company

Time to code – or find/evaluate a vendor

Time to update documentation

VERSUS…

Lower costs of exchange with other vendors/libraries

Prevent customers from being confused

More easily/quickly allow end users to access data/information [increasing usage]

Minimize support/questions/issues – more self-sufficiency](https://image.slidesharecdn.com/lagaceermbworkshop2018-03-20-180326140439/75/Communicating-About-Standards-Creating-A-Technical-Infrastructure-That-Everyone-Can-Use-10-2048.jpg)

![What About NISO Compliance?

• NISO does not formally measure compliance or create scores – all standards and recommended practices are voluntary [not regulatory]

• Some NISO Standing Committees maintain registries: ODI, KBART, SUSHI

• Other compliance-related activities: circulate surveys; education sessions; include adherence to standards in RFPs; Good Citizen](https://image.slidesharecdn.com/lagaceermbworkshop2018-03-20-180326140439/75/Communicating-About-Standards-Creating-A-Technical-Infrastructure-That-Everyone-Can-Use-13-2048.jpg)