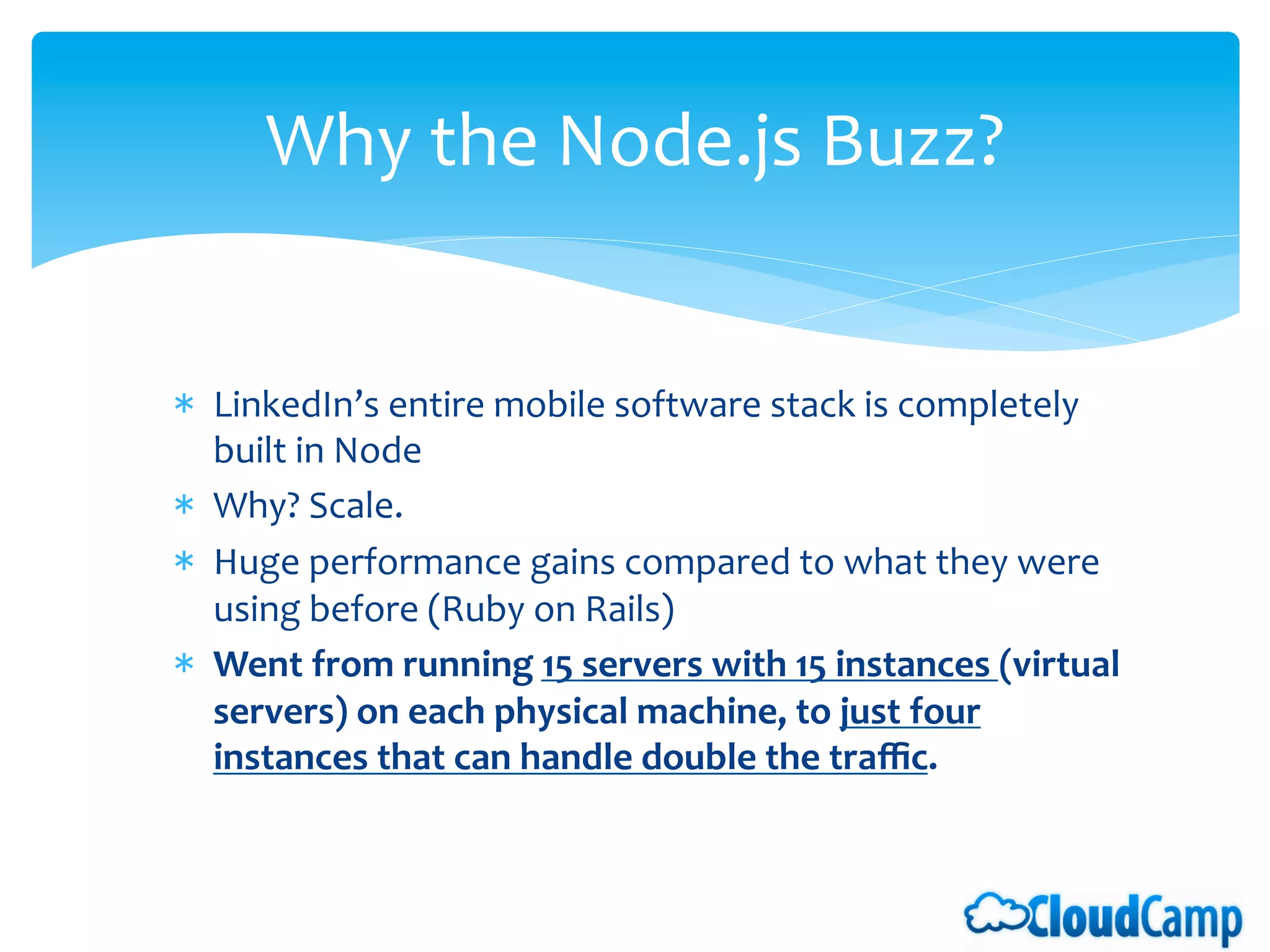

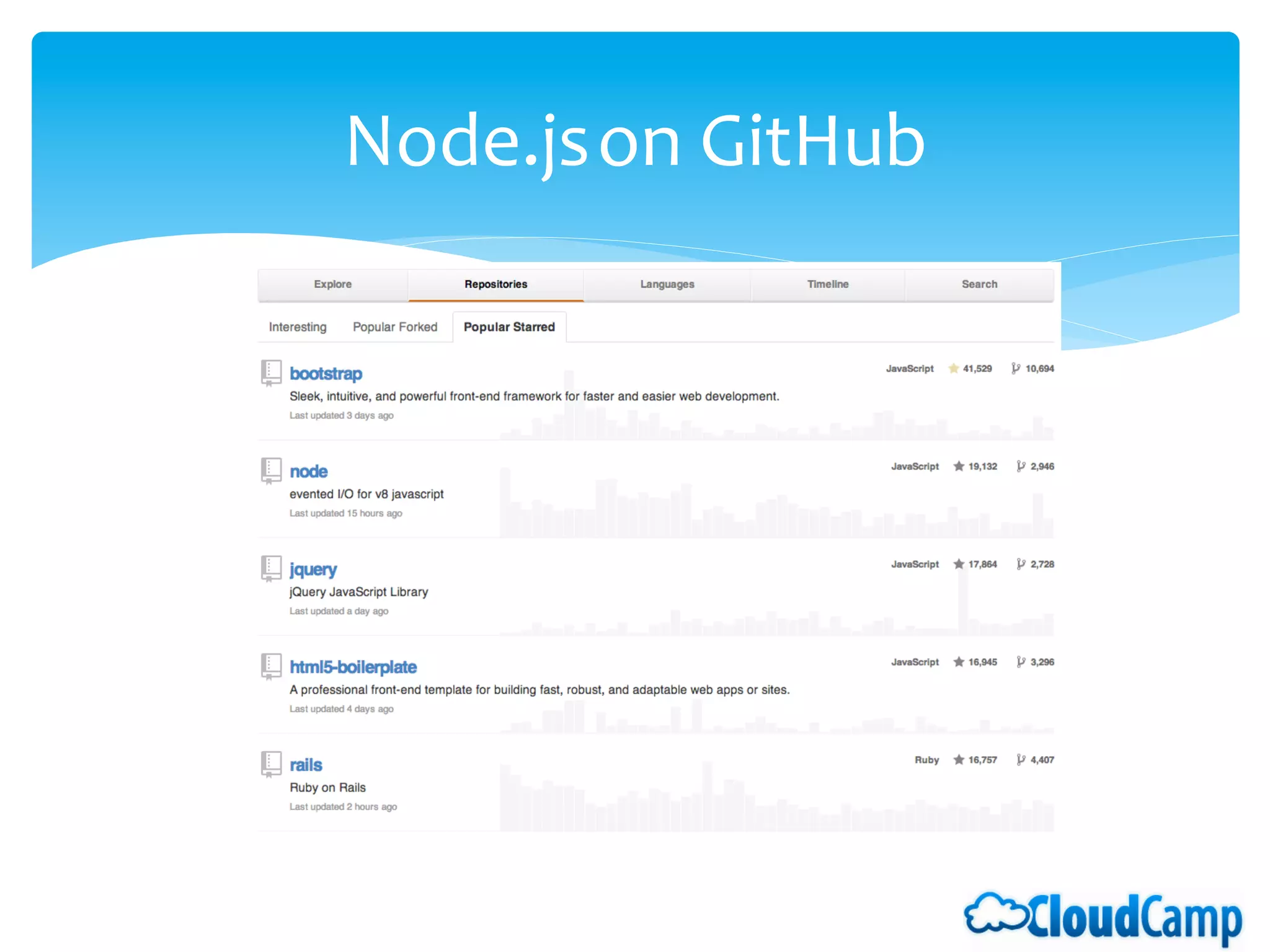

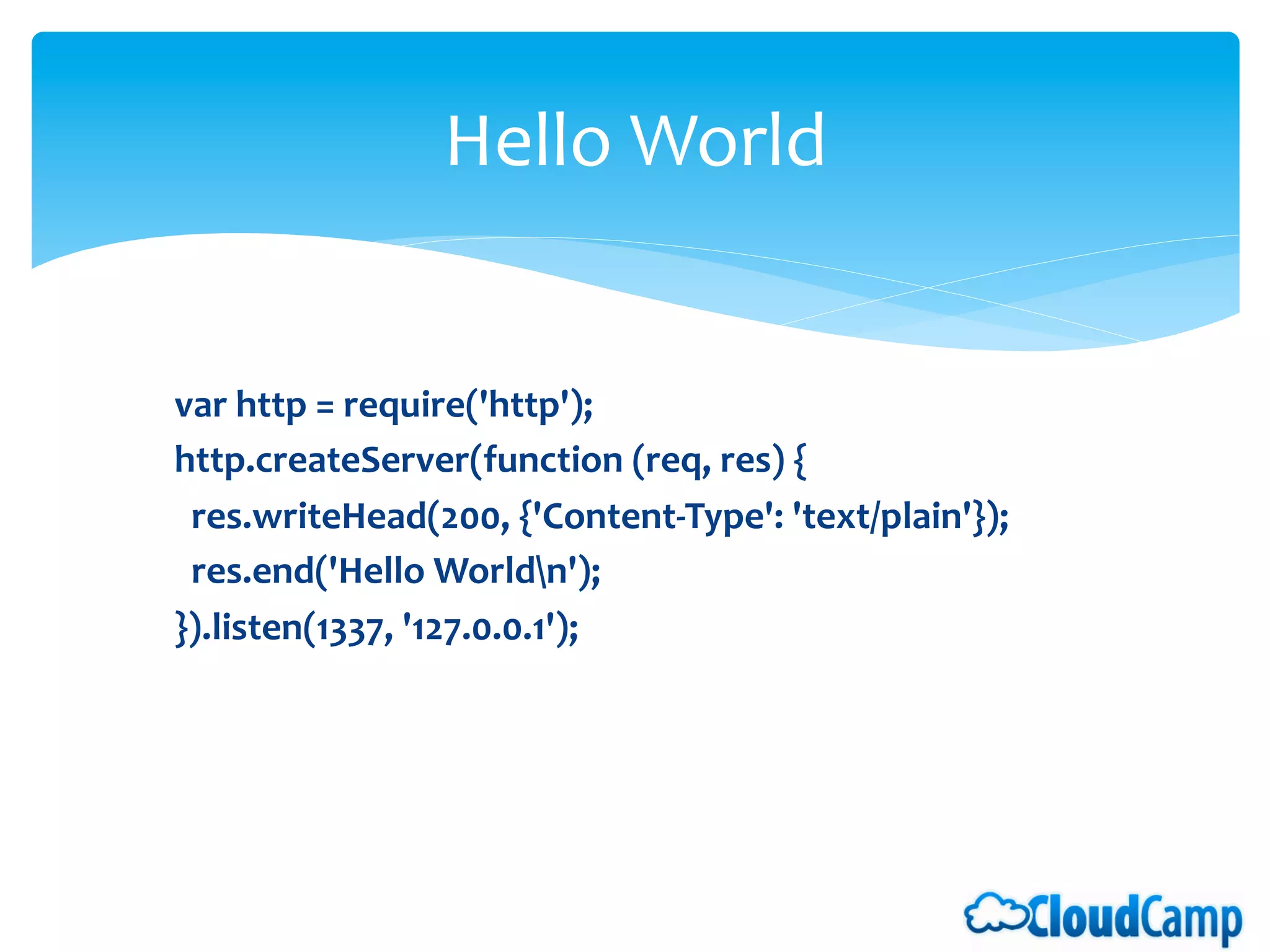

This document outlines the agenda for CloudCamp Chicago, held on December 13, 2012. Speakers covered Node.js on AWS, APIs in cloud environments, big data with Hadoop, and managing private clouds. Each speaker highlighted the benefits and challenges of their subject, advocating tools such as Node.js and Hadoop for scalable applications and data analysis. An unpanel discussion then focused on cloud security and on visibility concerns related to control over cloud services.