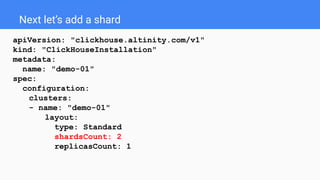

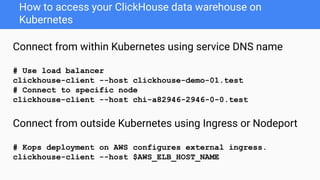

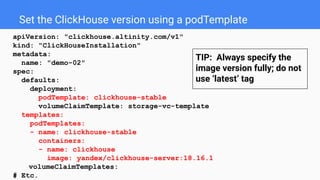

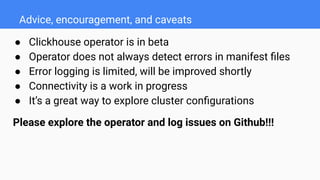

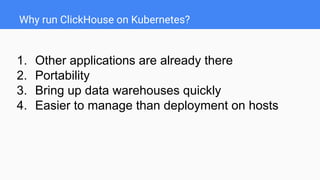

This document gives an overview of running ClickHouse on Kubernetes with the ClickHouse operator developed by Altinity. It walks through setup, including the configuration files for single-node and multi-node clusters, and explains how to manage resources such as storage and ZooKeeper. It also highlights the benefits of Kubernetes for ClickHouse deployments and invites users to try the operator and report issues on GitHub.

![Installing and removing the ClickHouse operator](https://image.slidesharecdn.com/unahfclatwa4vb5cbfwb-signature-53488a6077ab305bbbe5bbae9a78684a9a901f75eafa4c878f6695d2e4ddd9ba-poli-190416180030/85/ClickHouse-on-Kubernetes-By-Robert-Hodges-Altinity-CEO-8-320.jpg)

[Optional] Get sample files from the GitHub repo: `git clone https://github.com/Altinity/clickhouse-operator`

Install the operator: `kubectl apply -f clickhouse-operator-install.yaml`

Remove the operator: `kubectl delete -f clickhouse-operator-install.yaml`
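The install and remove commands from the slide can be wrapped in two small shell functions so the manifest name lives in one place. This is only a sketch: the function names `install_operator` and `remove_operator` and the `KUBECTL` and `MANIFEST` variables are hypothetical conveniences, not part of the operator. The slide does not say where `clickhouse-operator-install.yaml` sits inside the cloned repository, so `MANIFEST` is assumed to point at wherever your copy of that file lives.

```shell
#!/bin/sh
# Sketch only: KUBECTL and MANIFEST are hypothetical overrides, not operator settings.
# KUBECTL lets you substitute another binary (or `echo` for a dry run).
KUBECTL="${KUBECTL:-kubectl}"
# Assumed to be a path to your copy of the manifest named on the slide.
MANIFEST="${MANIFEST:-clickhouse-operator-install.yaml}"

# Install the operator by applying the manifest.
install_operator() {
    $KUBECTL apply -f "$MANIFEST"
}

# Remove the operator by deleting the same resources.
remove_operator() {
    $KUBECTL delete -f "$MANIFEST"
}

# Usage (uncomment to run against your current kubectl context):
# install_operator
# remove_operator
```

Setting `KUBECTL=echo` before calling either function prints the command arguments instead of contacting a cluster, which is a cheap way to check the manifest path first.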