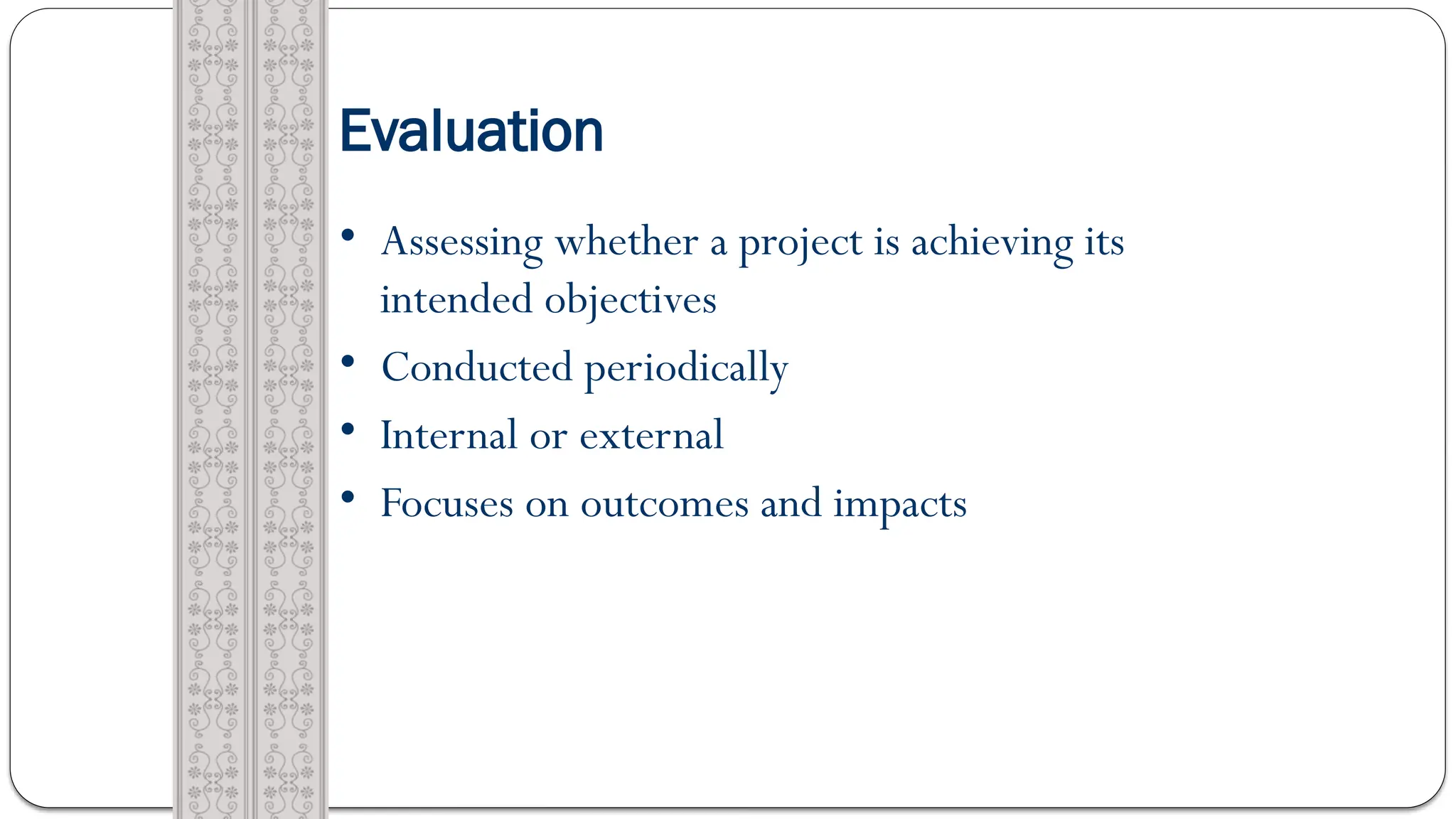

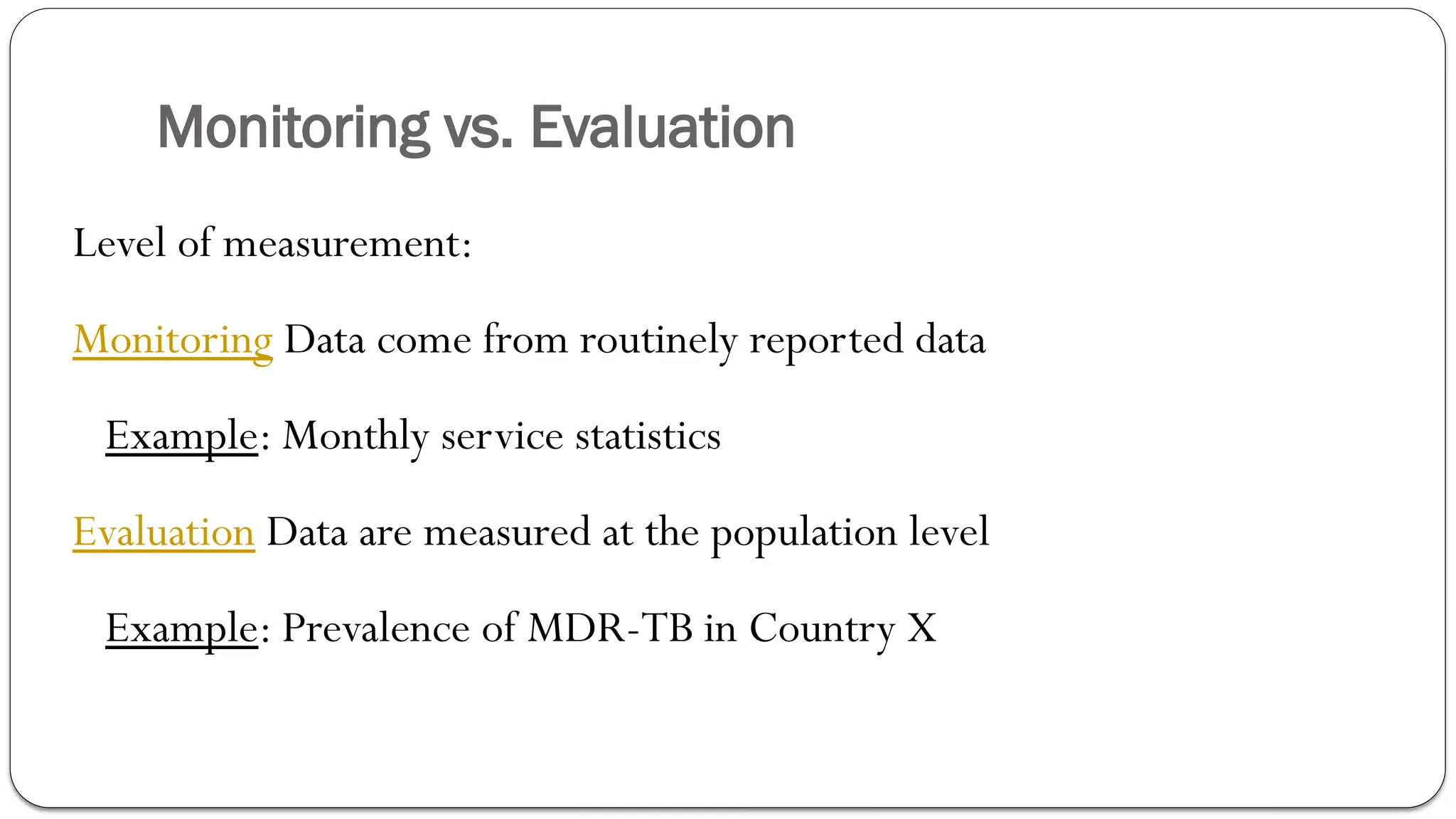

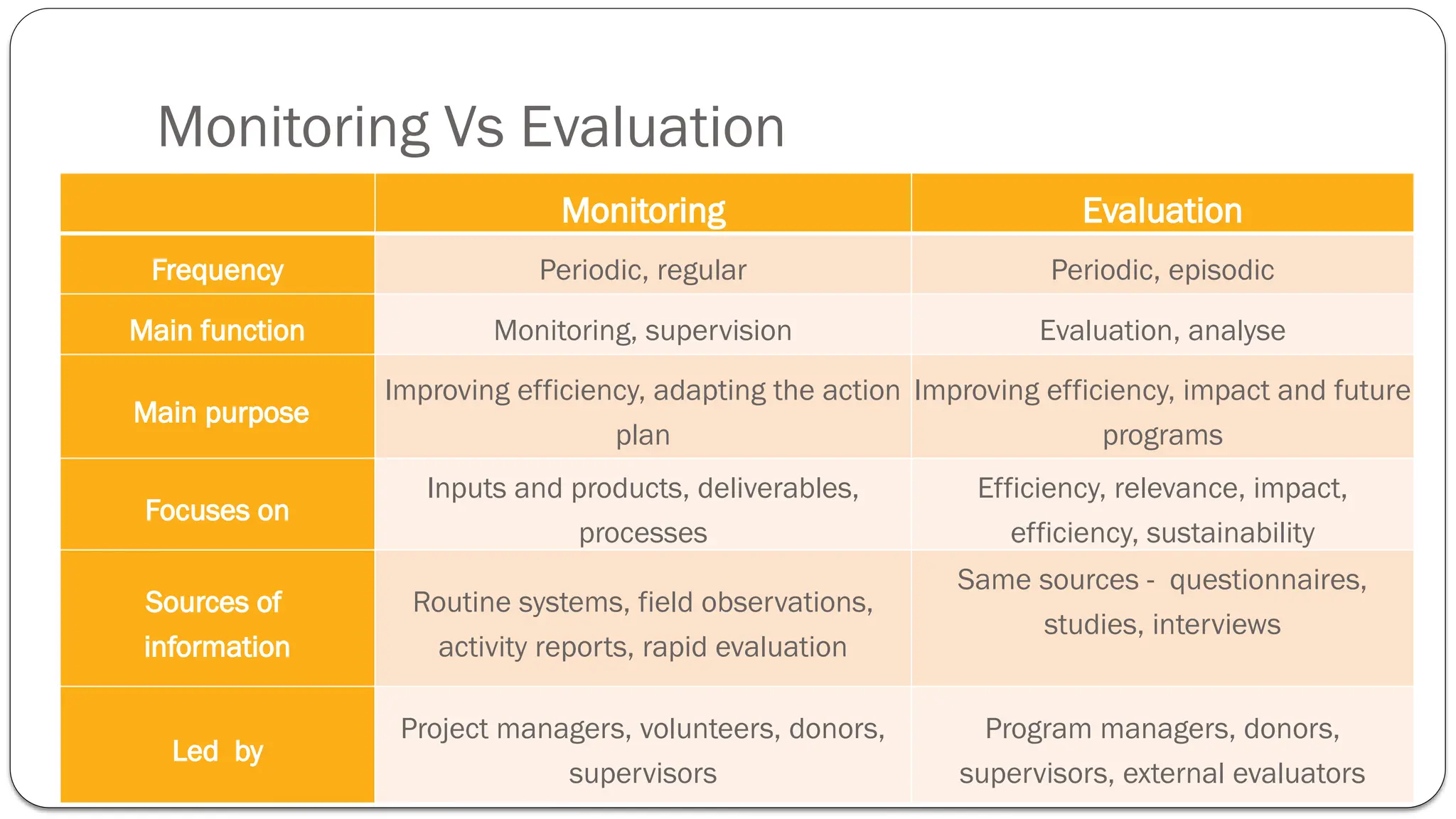

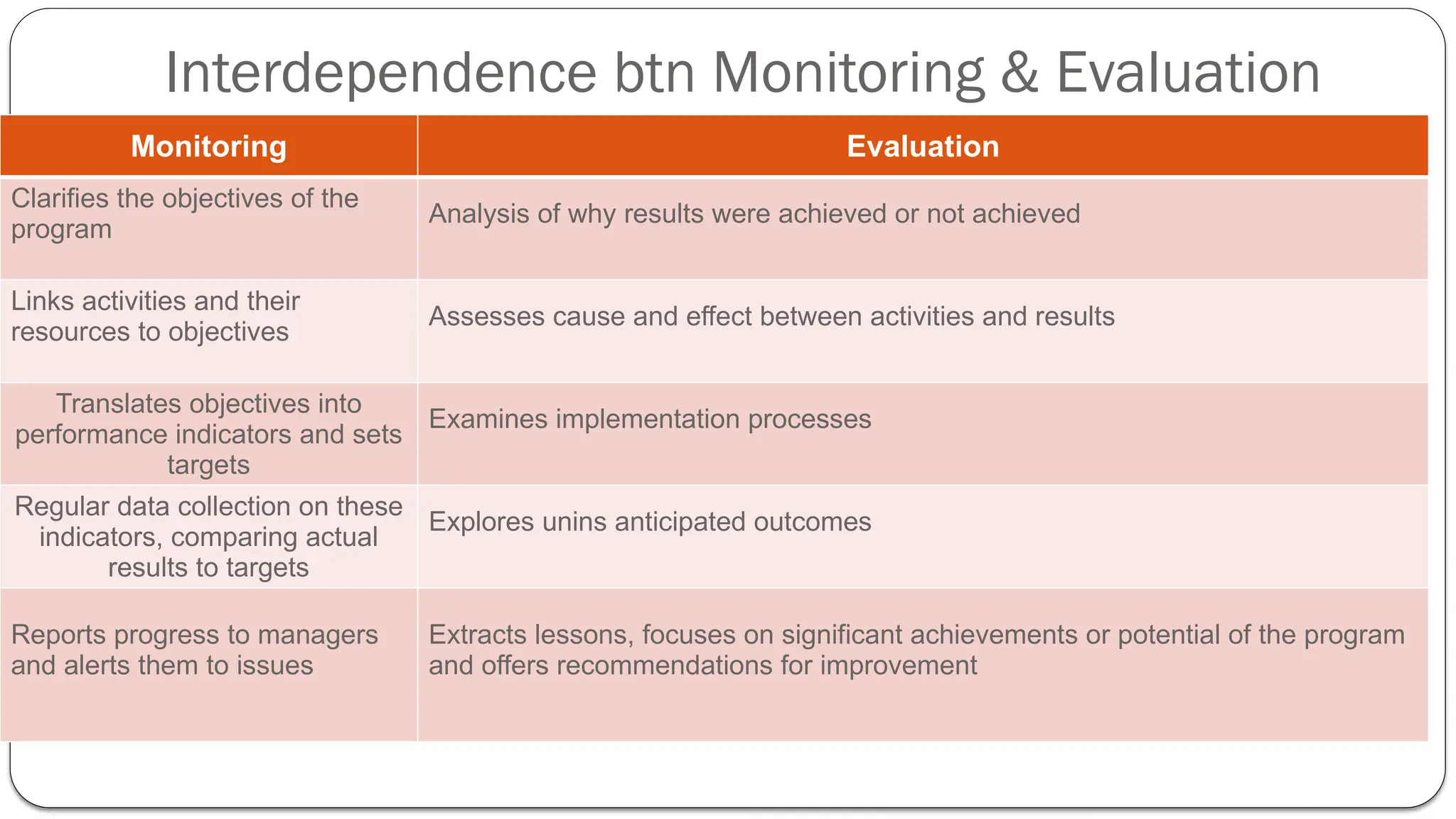

The document outlines the concepts and significance of monitoring and evaluation (M&E) in project management, emphasizing their role in assessing project performance and impact. It details the M&E process: defining objectives, identifying indicators, collecting and analyzing data, and using the findings to guide decisions and improvements. The document also stresses the importance of stakeholder engagement and of the continuous learning that M&E supports as ways to enhance project effectiveness.