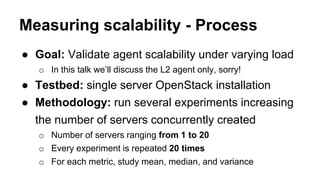

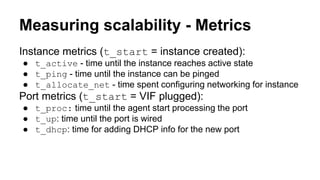

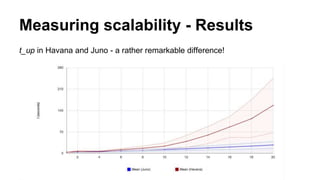

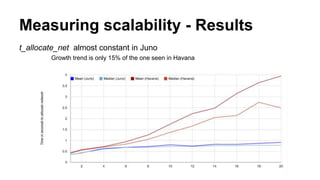

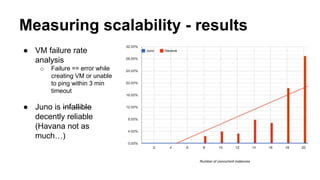

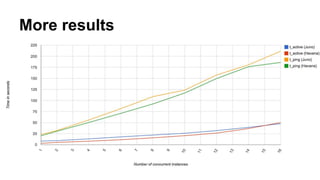

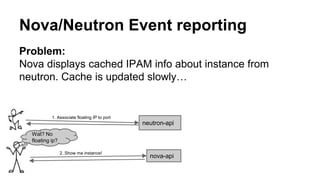

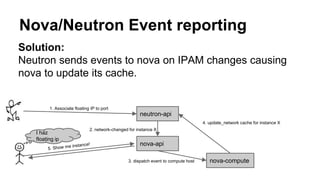

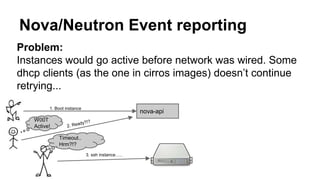

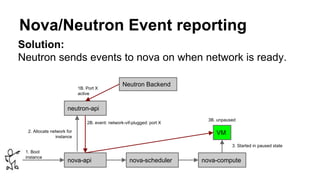

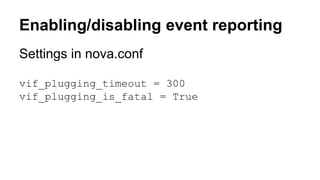

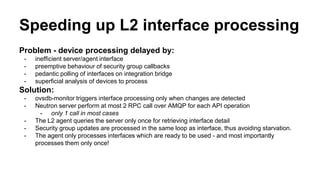

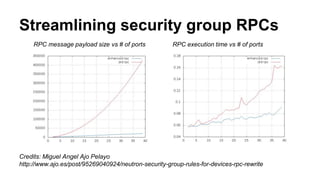

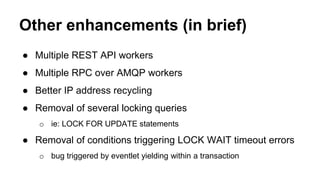

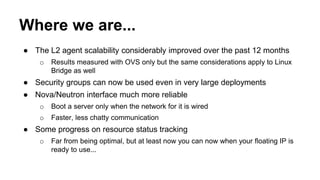

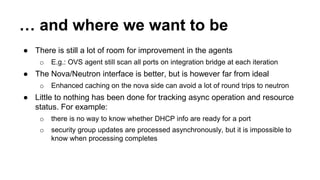

The document discusses improvements in the scalability and reliability of OpenStack's Neutron service from the Havana release to the Juno release, a span of 12 months and 1,672 commits. Key enhancements include better handling of instance creation, reduced failure rates, and more efficient processing of network interfaces and security groups. Despite notable progress, including a zero failure rate in Juno, challenges remain in tracking asynchronous operations and in optimizing overall scalability.