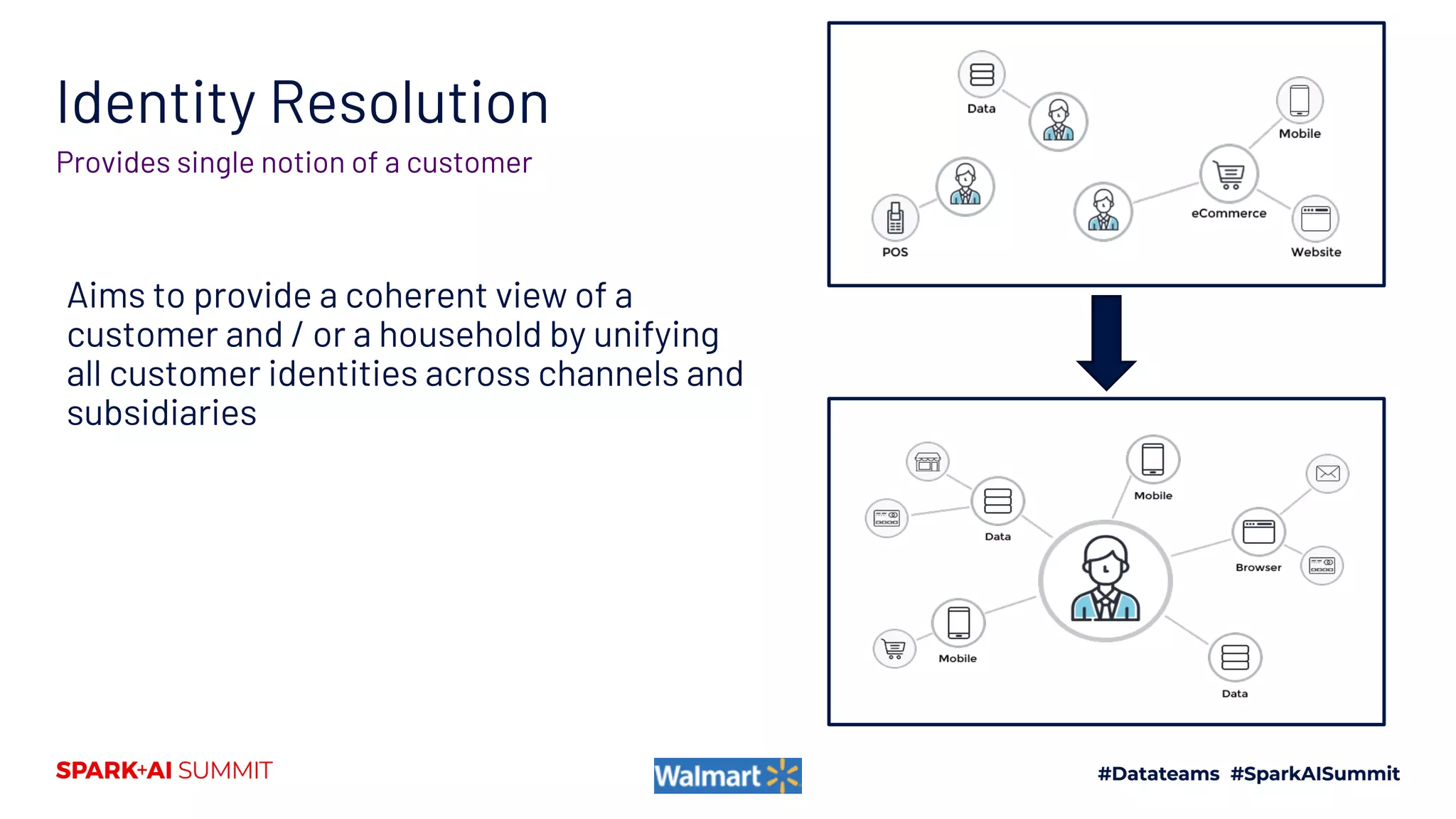

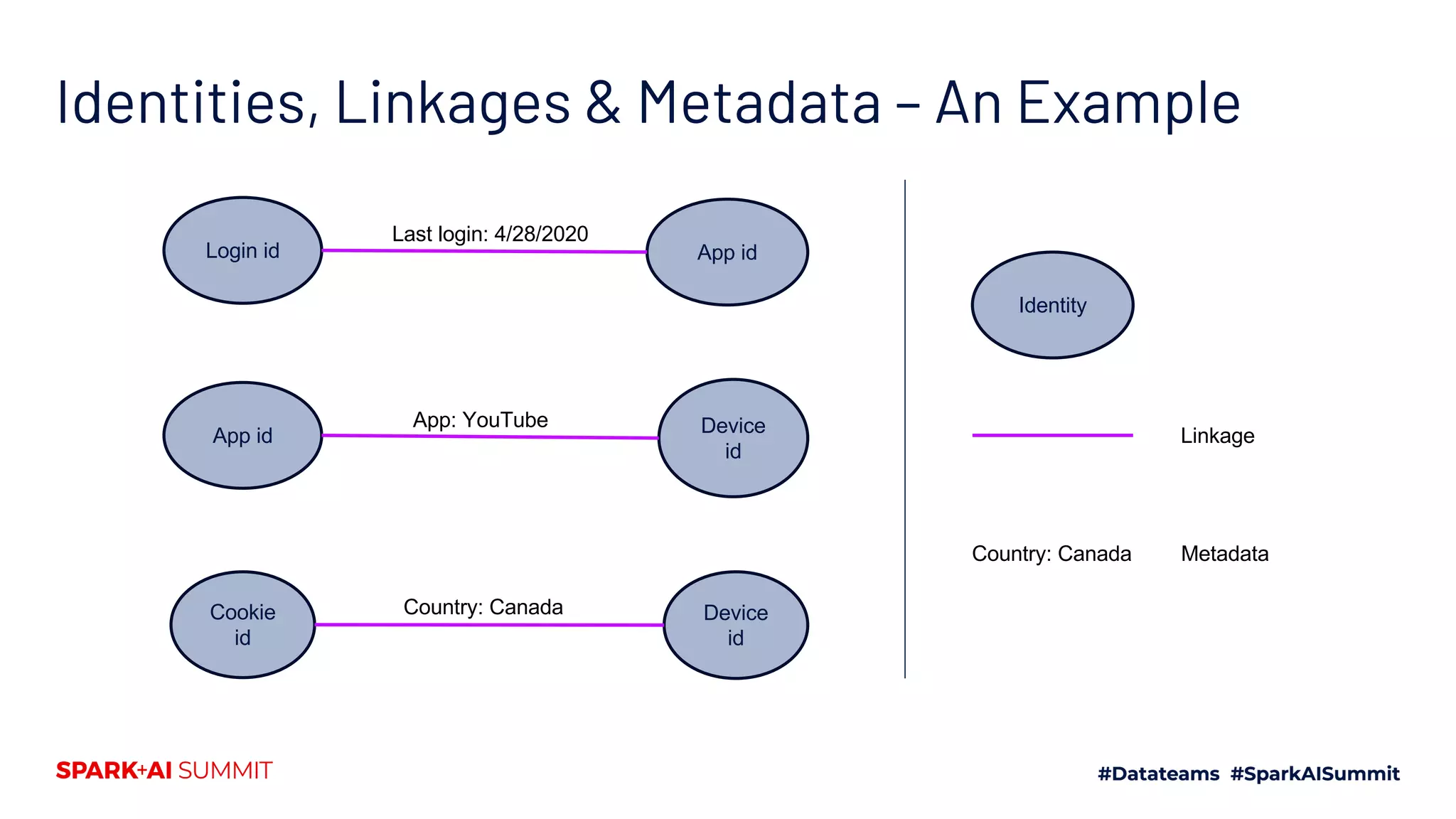

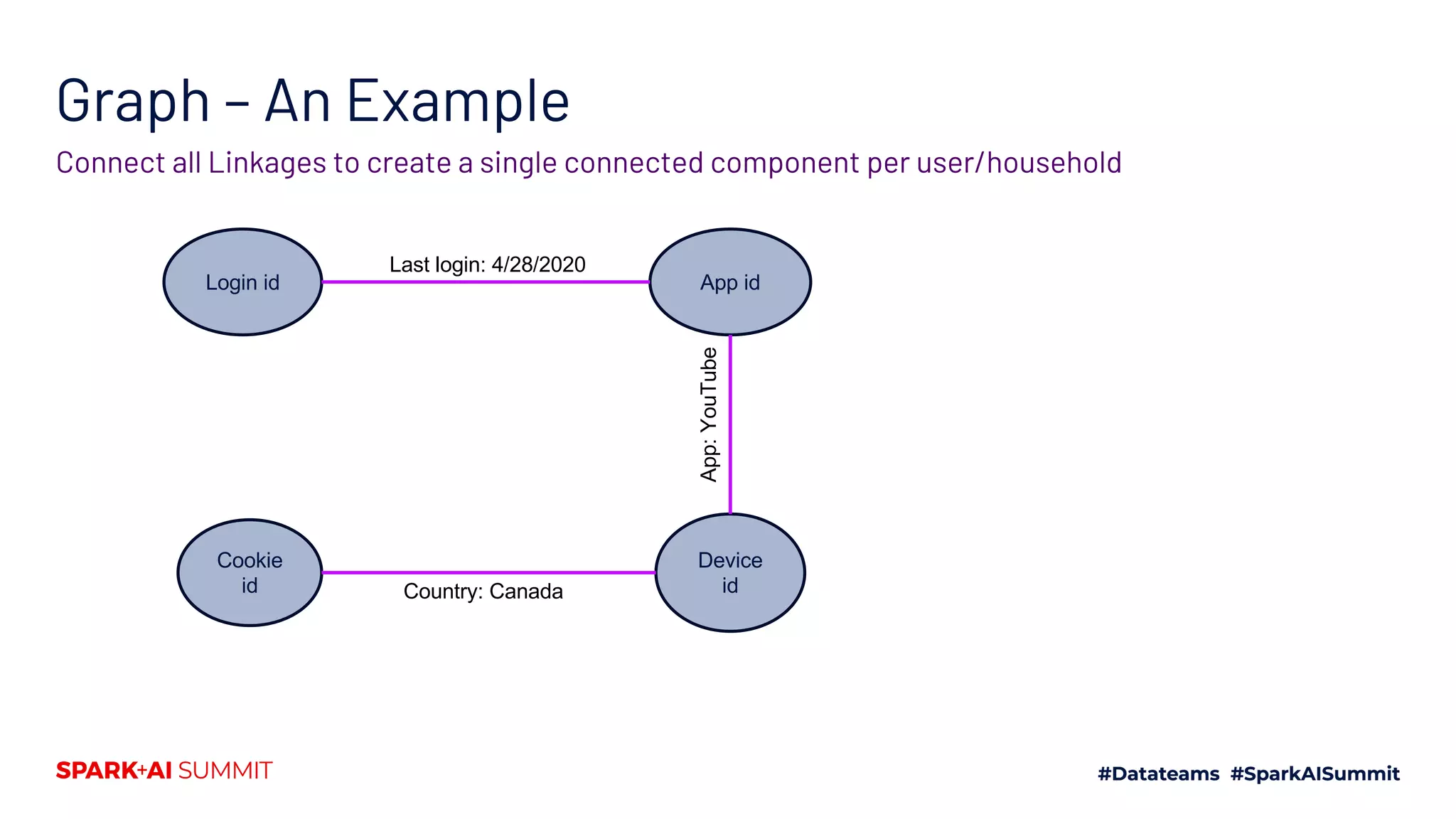

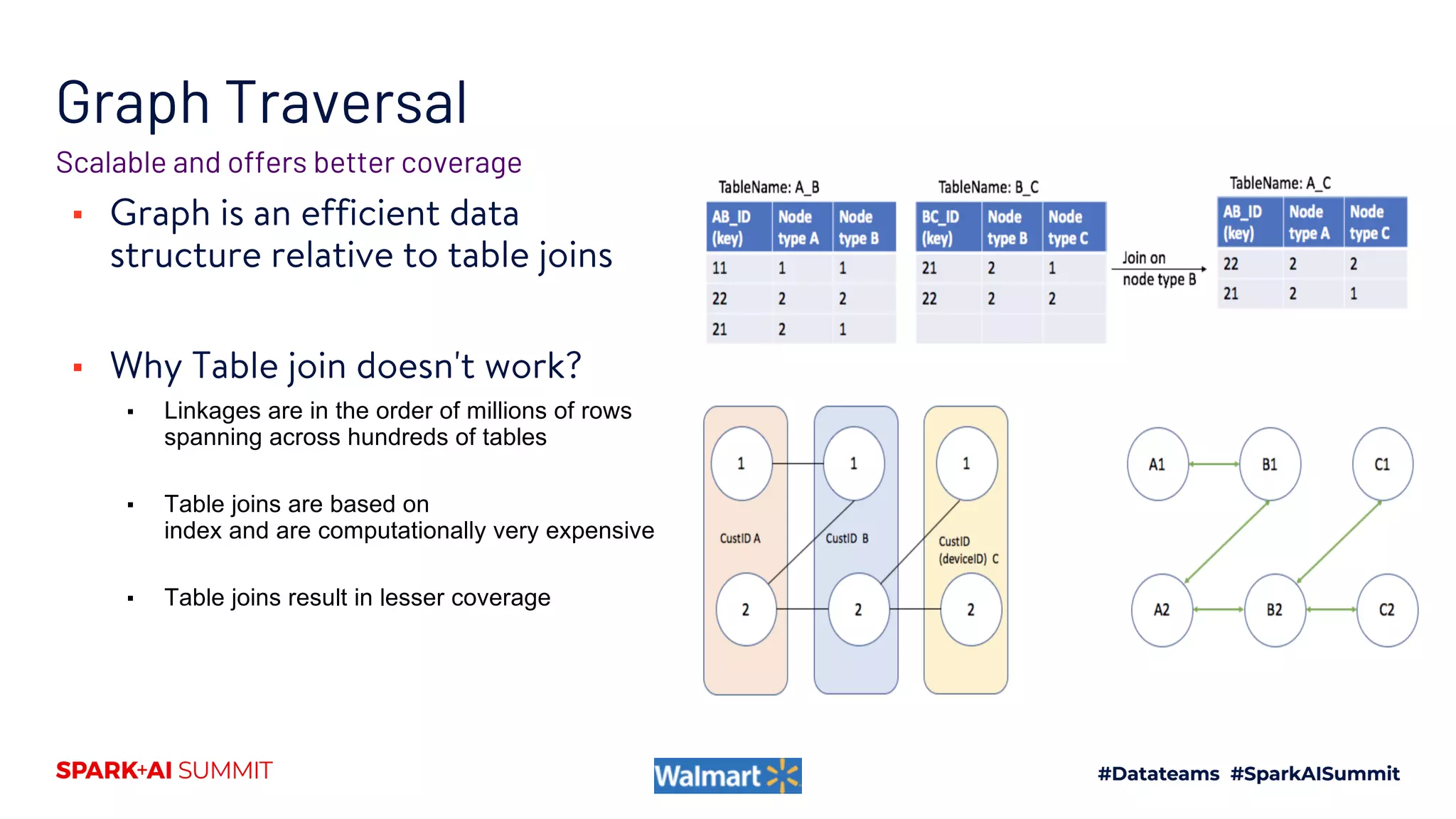

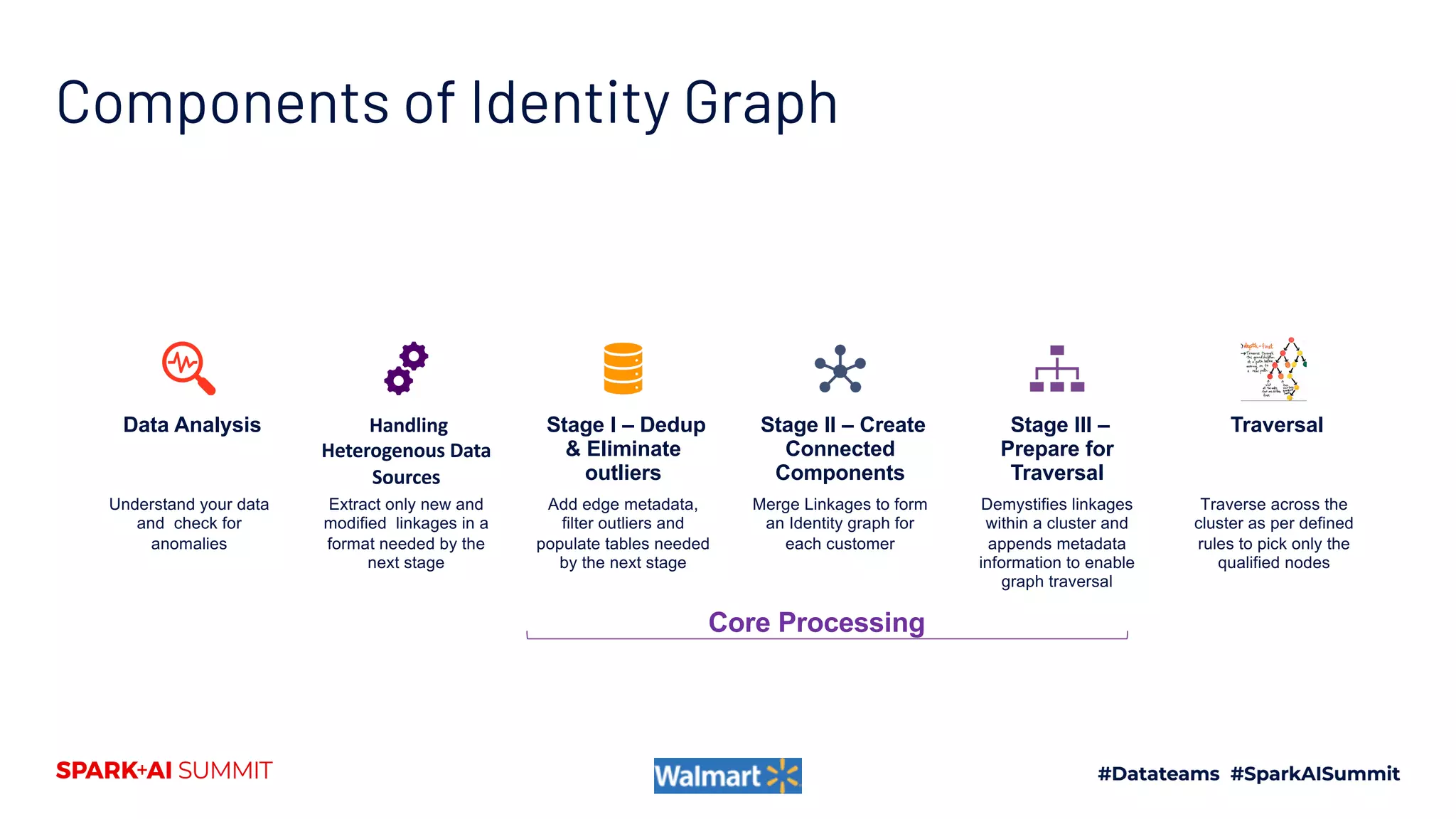

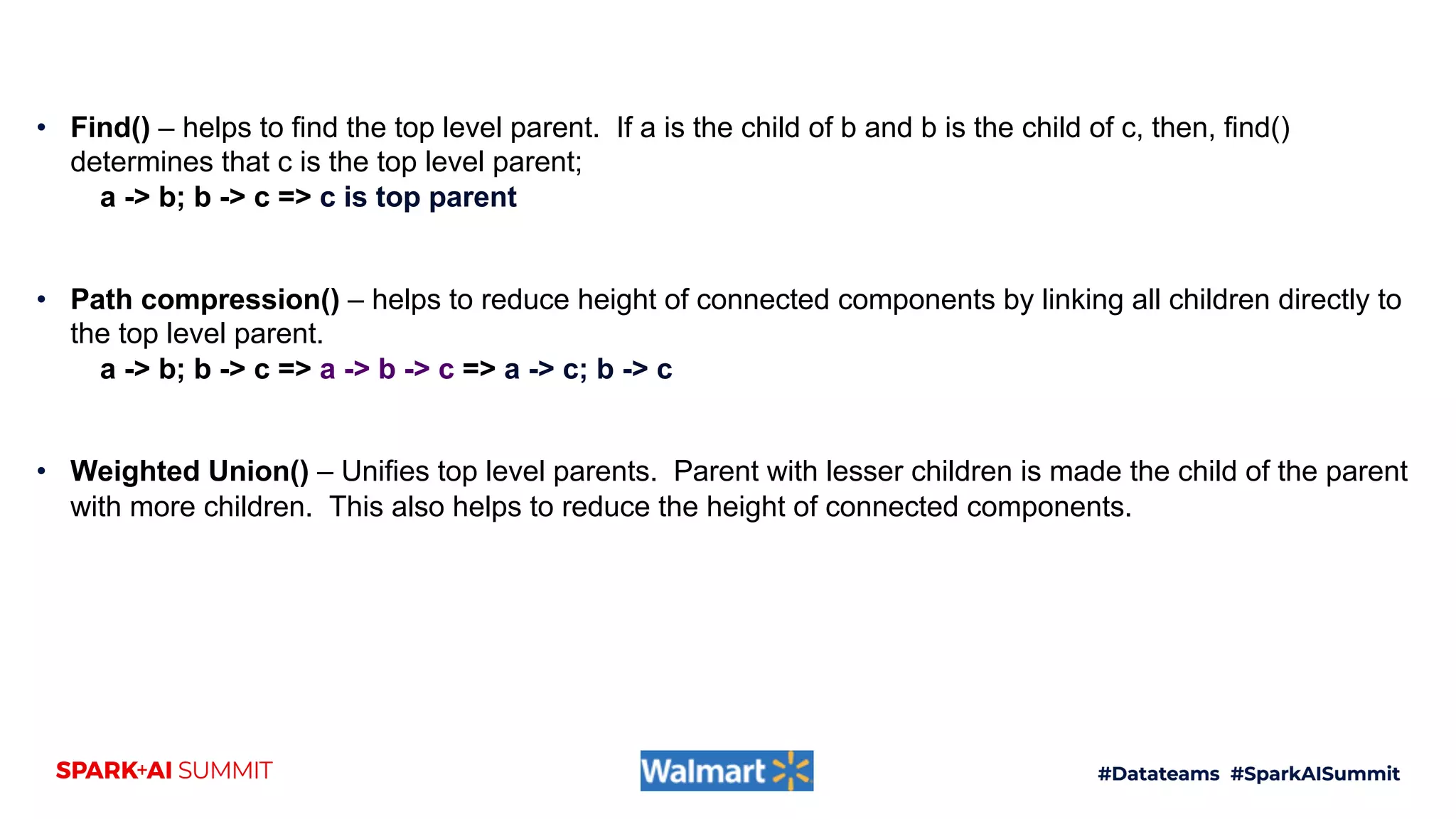

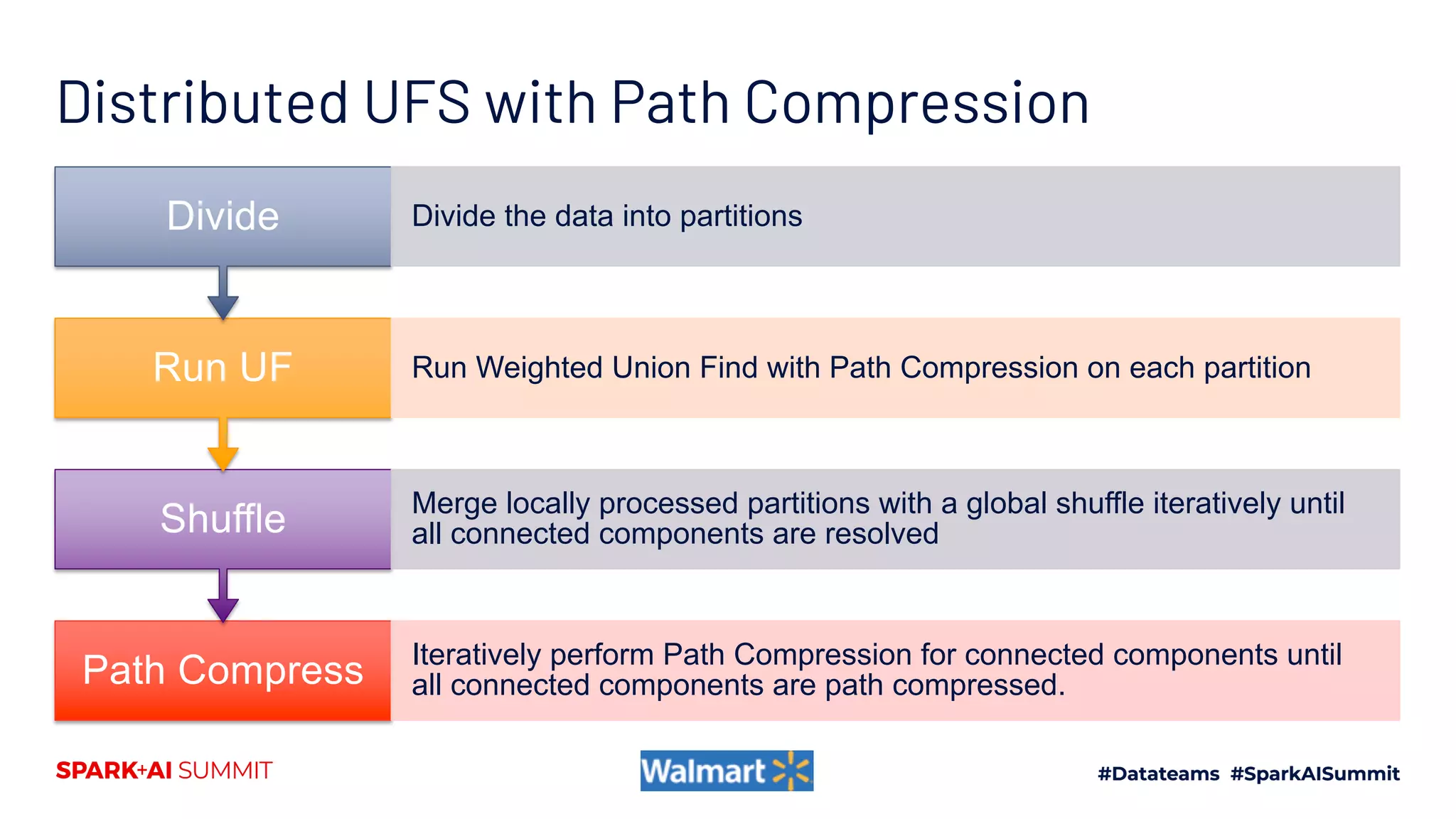

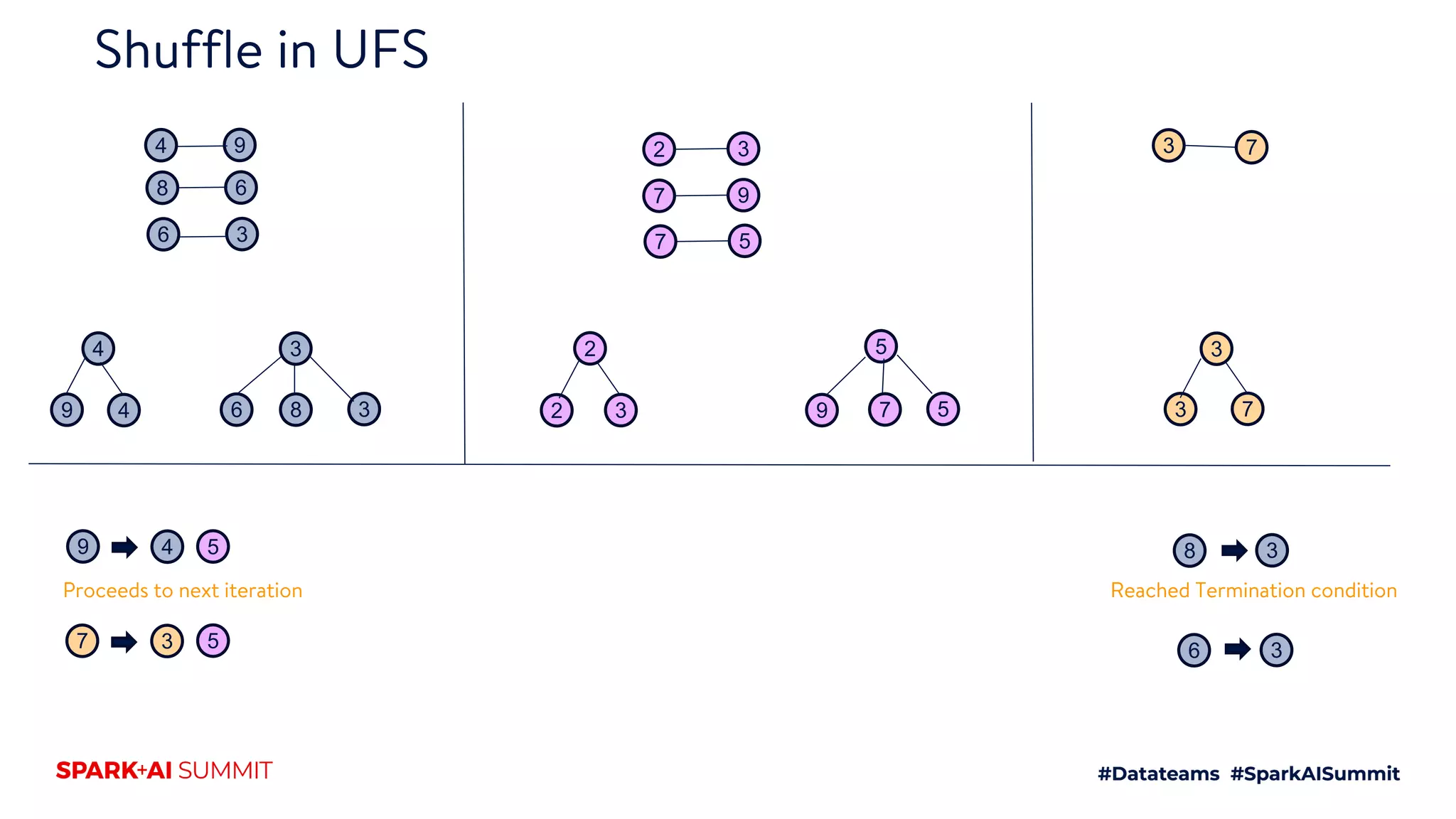

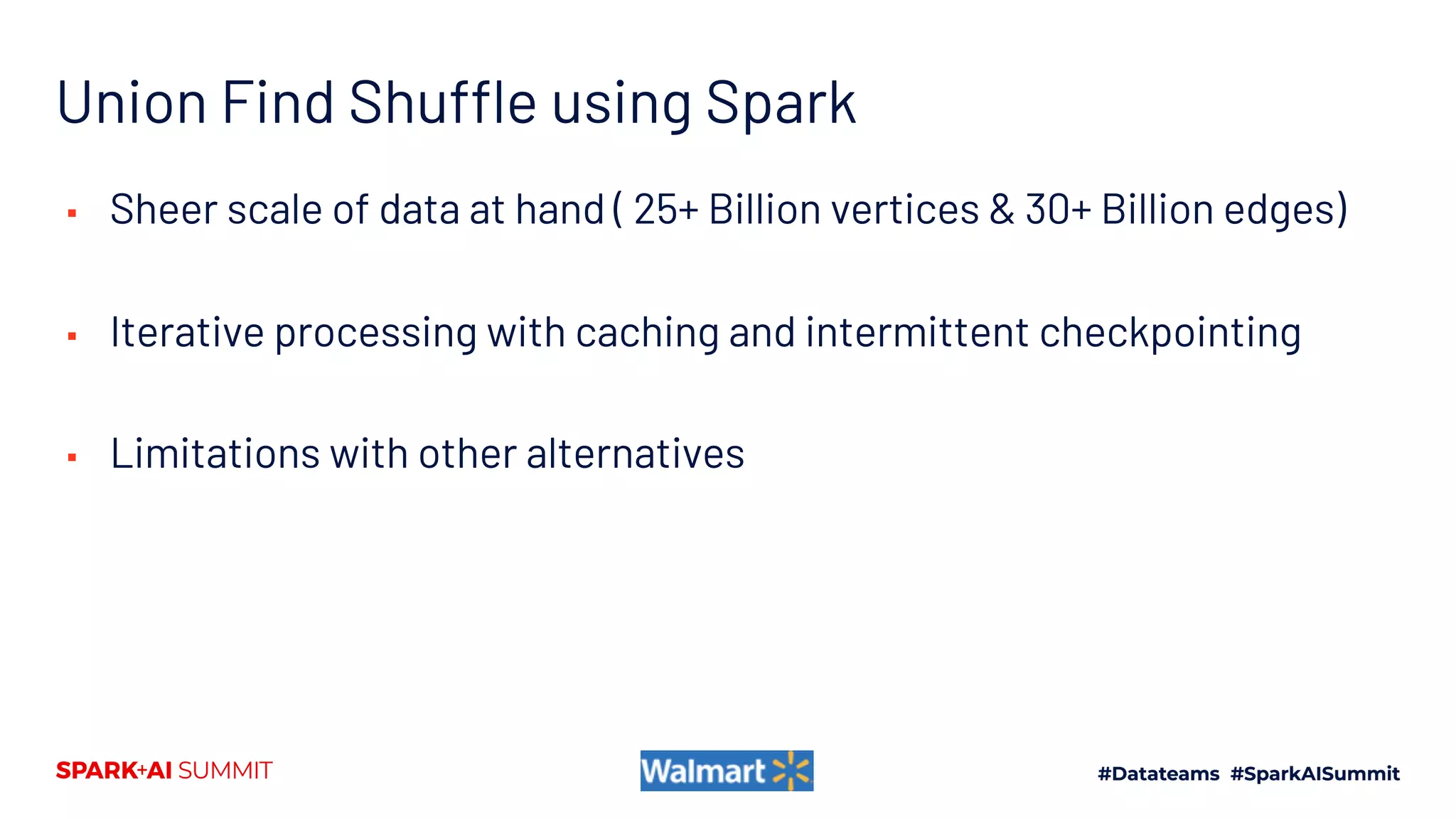

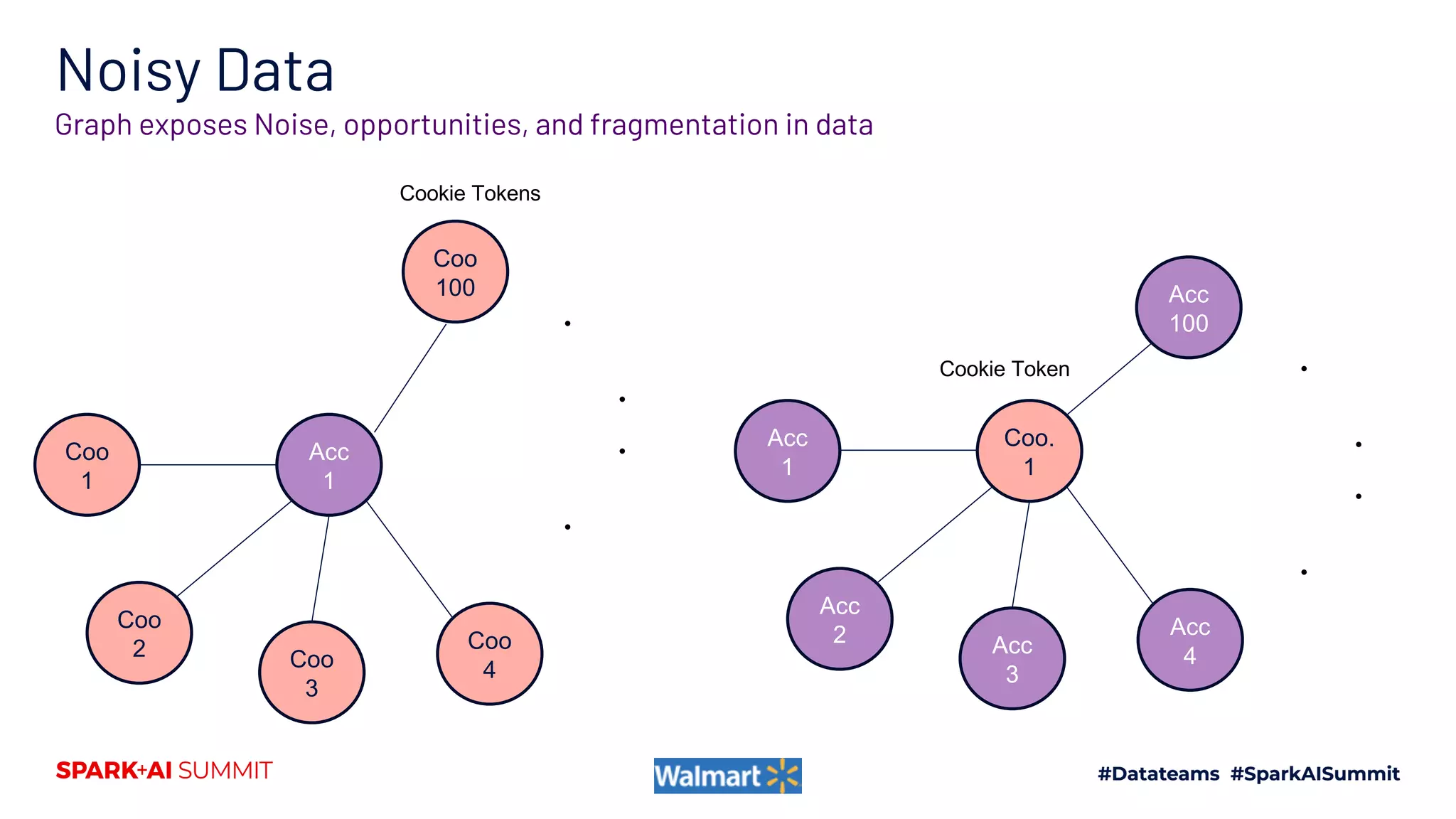

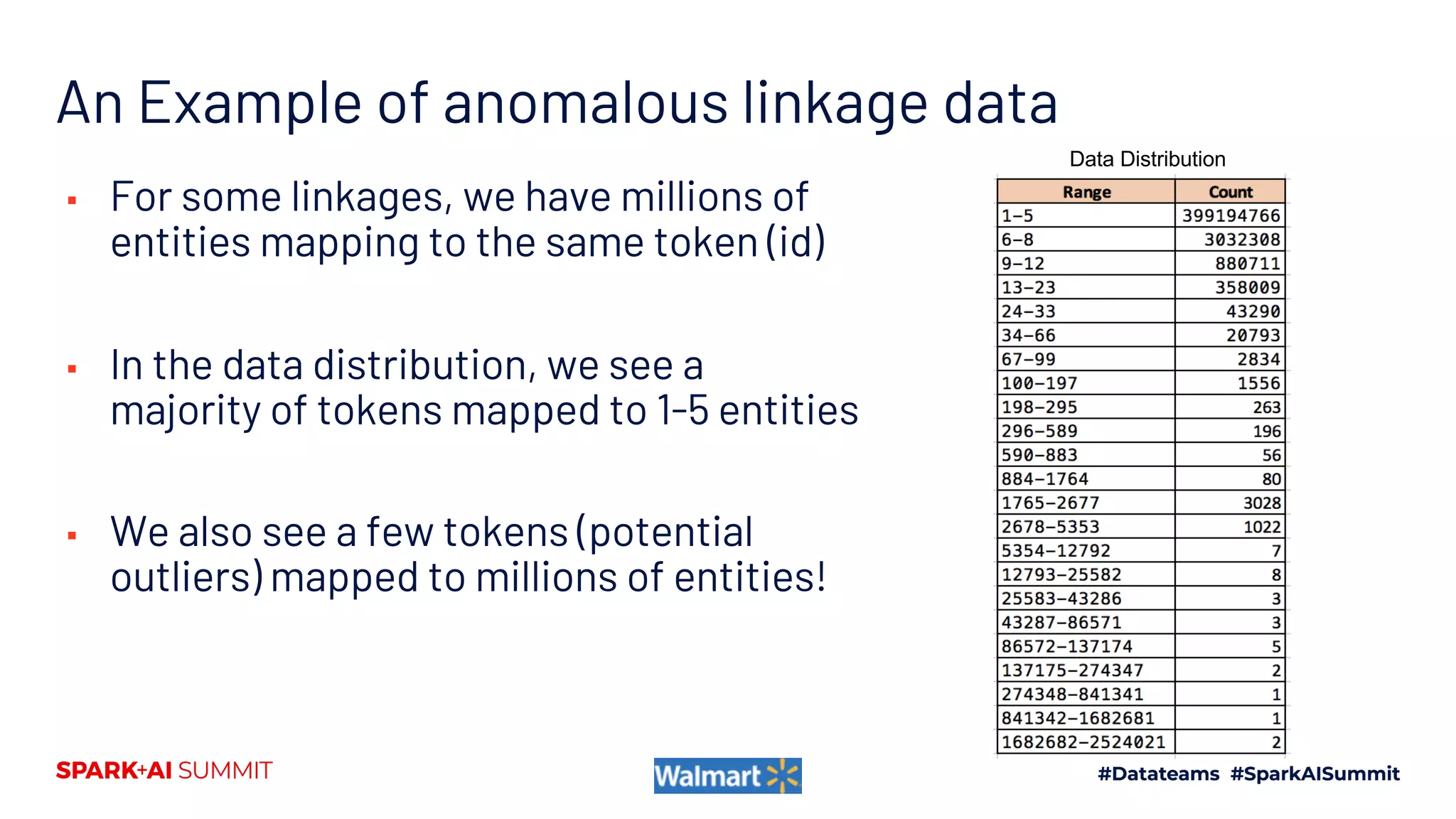

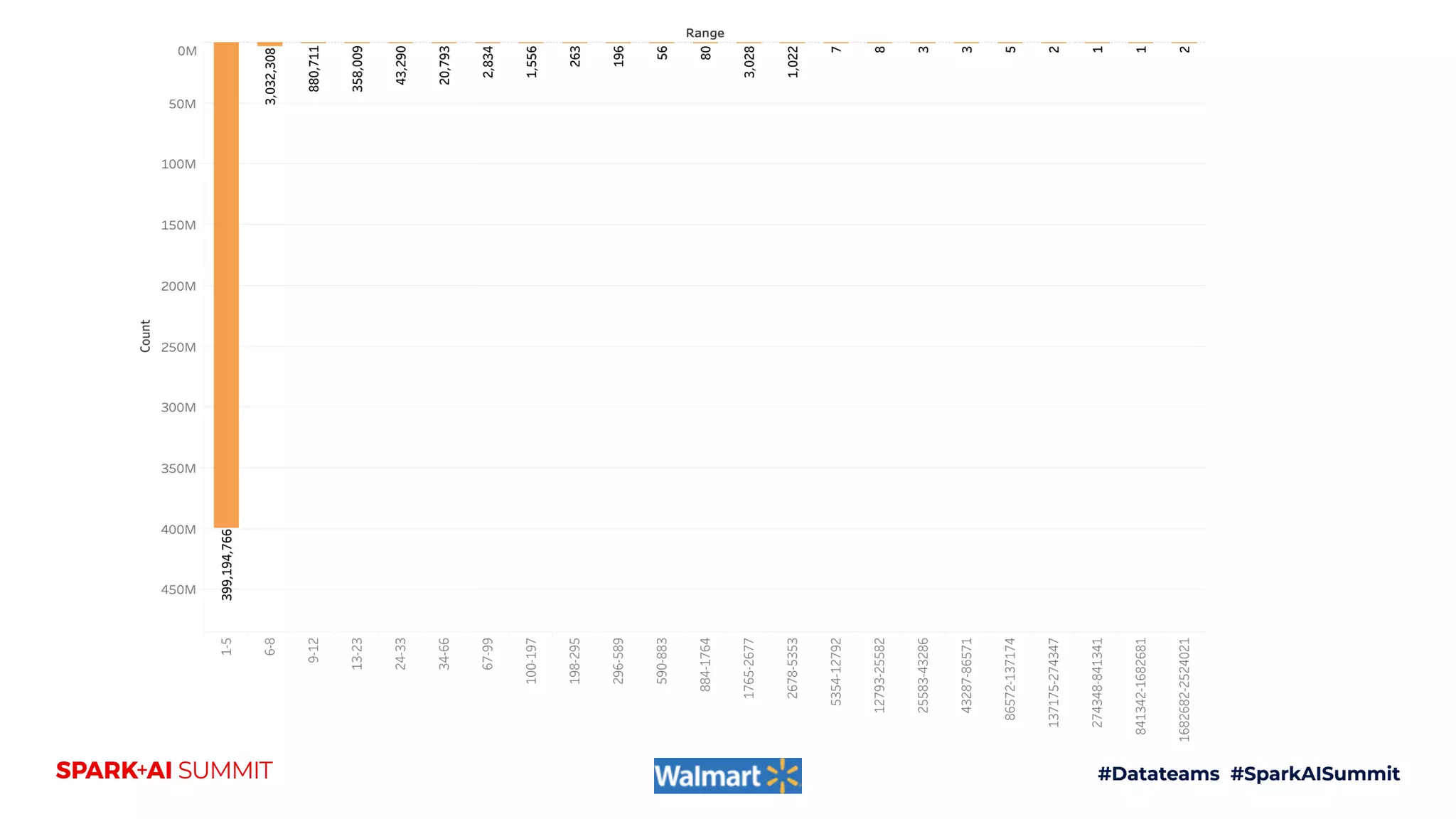

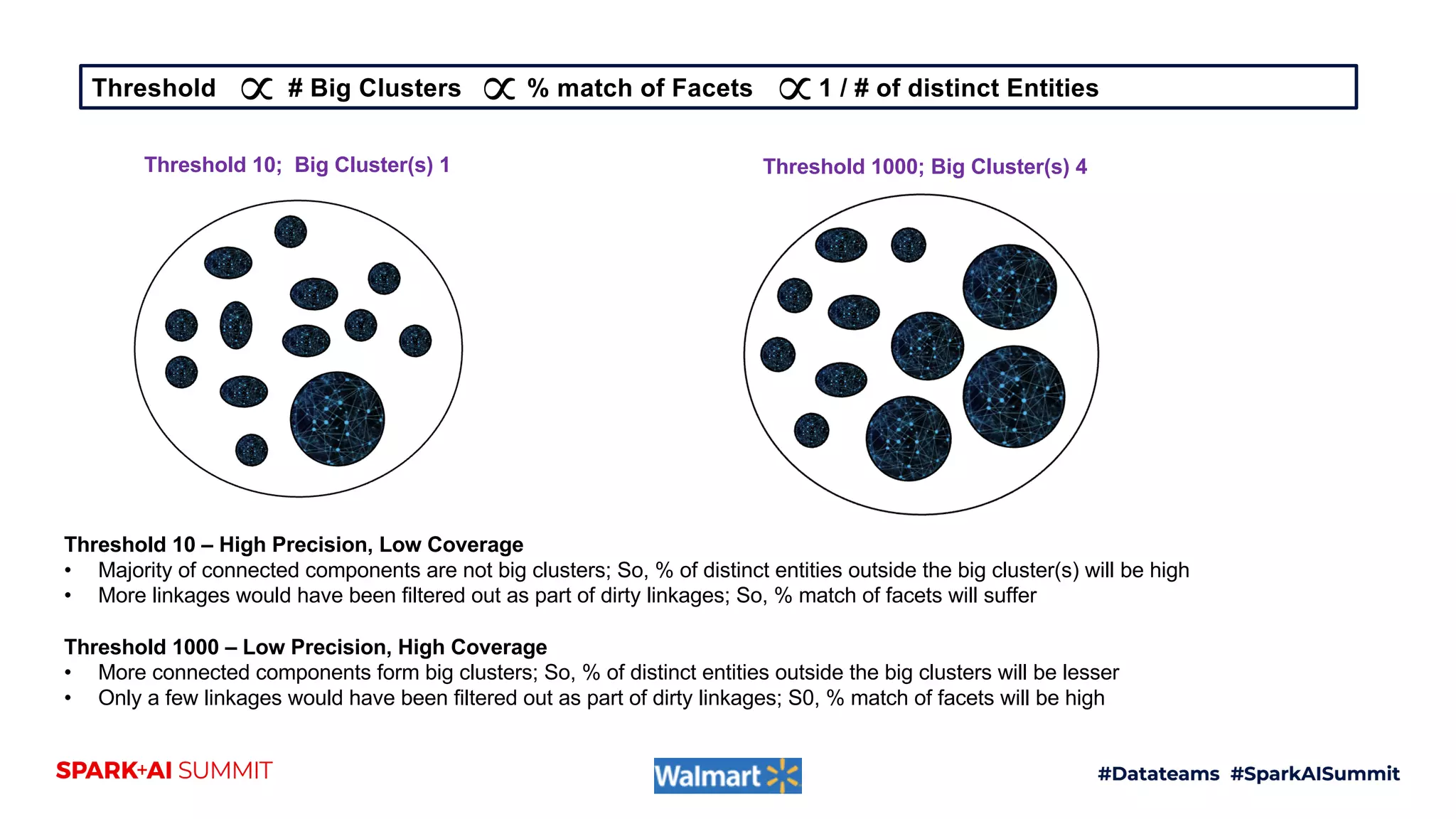

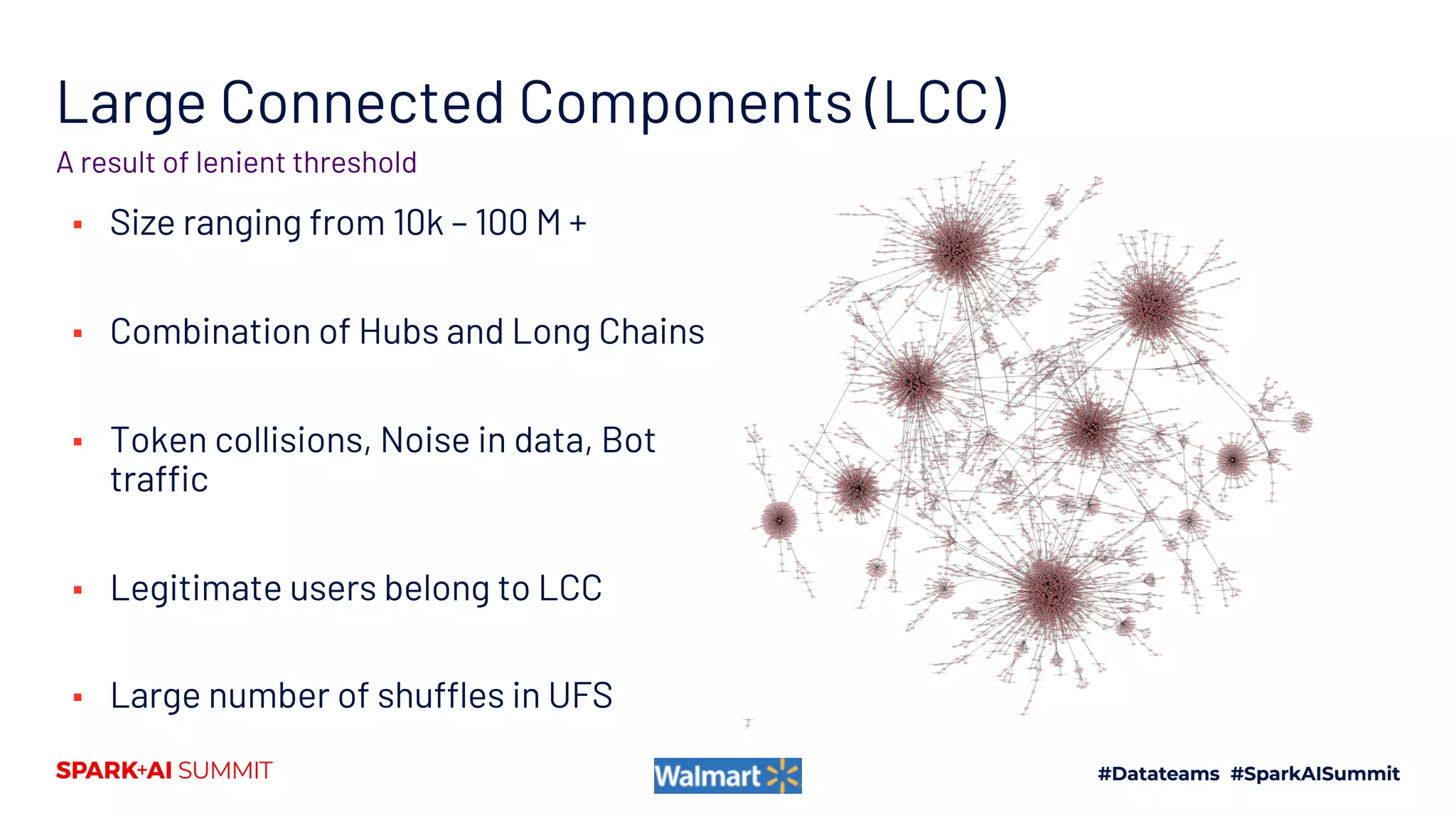

The document discusses how to build and scale identity graphs over heterogeneous data so that a customer resolves to one coherent identity across multiple channels. It outlines the benefits of graph structures over traditional table joins, details the stages of building and processing identity linkages, and describes the challenges involved, such as data quality issues and the need for real-time updates. It also emphasizes handling very large volumes of data efficiently while maintaining both precision and coverage in identity resolution.
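To make the contrast with table joins concrete, here is a minimal sketch of grouping pairwise linkages into connected components with union-find, which is one common way to give every linked id a single group id. It is an illustration under stated assumptions, not the authors' implementation: the function names (`find`, `union`, `assign_groups`) are hypothetical, the sample linkages reuse the small A1/B1/C1 example from the pipeline slide, and the choice of the smallest root as group representative is arbitrary.

```python
from collections import defaultdict


def find(parent, x):
    """Return the root of x, halving the path on the way up."""
    parent.setdefault(x, x)  # ids are registered lazily on first sight
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def union(parent, a, b):
    """Merge the components that contain a and b."""
    root_a, root_b = find(parent, a), find(parent, b)
    if root_a == root_b:
        return
    # Assumption for this sketch: the lexicographically smaller root wins,
    # which keeps the result deterministic.
    if root_b < root_a:
        root_a, root_b = root_b, root_a
    parent[root_b] = root_a


def assign_groups(linkages):
    """Map each component root to the sorted ids in that component."""
    parent = {}
    for src, dst, _metadata in linkages:
        union(parent, src, dst)
    groups = defaultdict(list)
    for node in list(parent):
        groups[find(parent, node)].append(node)
    return {root: sorted(members) for root, members in groups.items()}


# (source id, destination id, linkage metadata)
linkages = [("A1", "C1", "m1"), ("A1", "B1", "m2"), ("A2", "B2", "m2")]
groups = assign_groups(linkages)
print(groups)
```

With joins, finding every id connected to A1 takes one self-join per hop. Here a single pass over the linkages resolves all hops at once, and each component root can then serve as the group id.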

![tid1 tid2 Linkage

metadata

a b tid1 tid

2

c

a b tid1 tid

2

c

a b tid1 tid

2

c

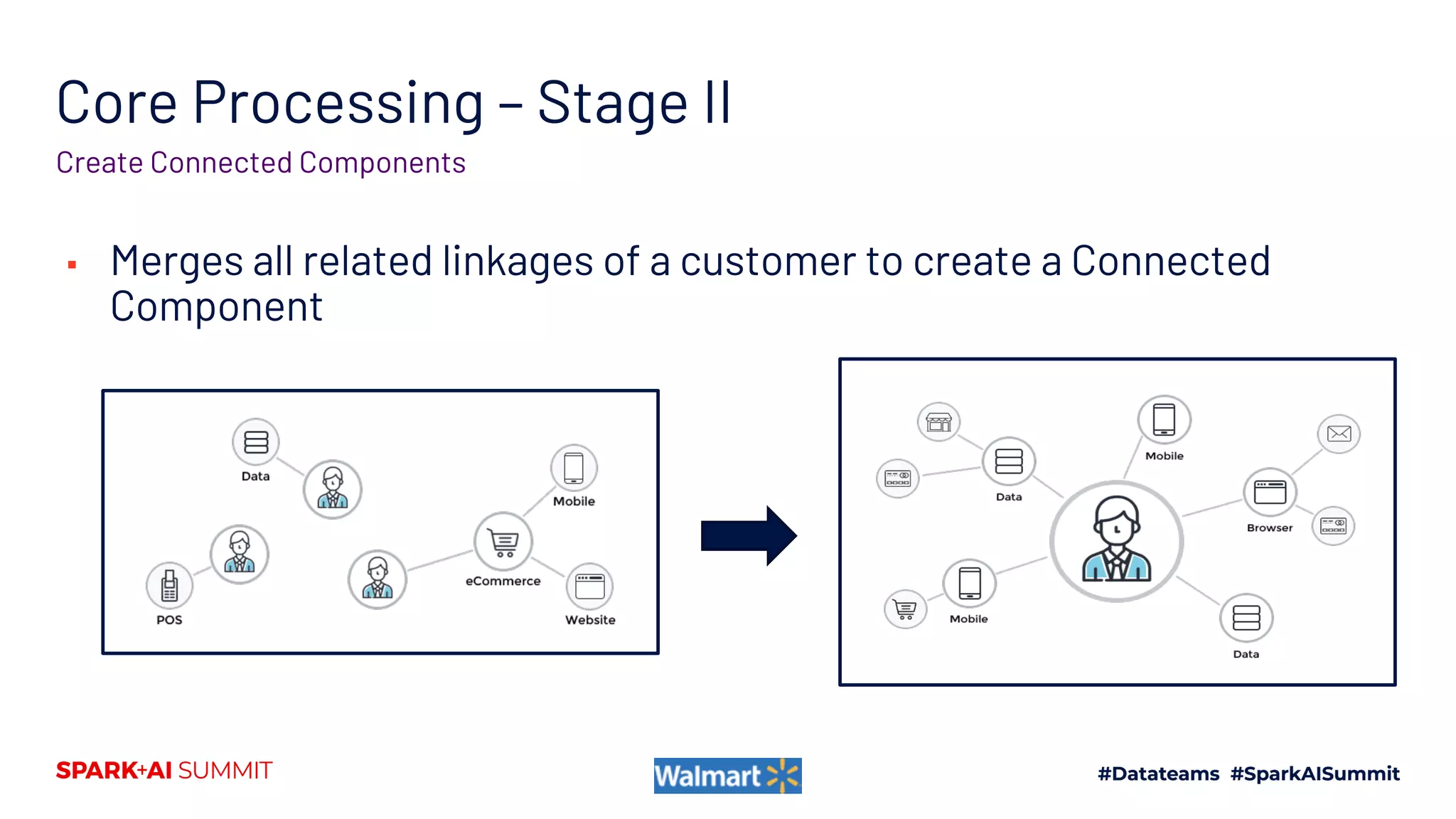

Graph Pipeline – Powered by

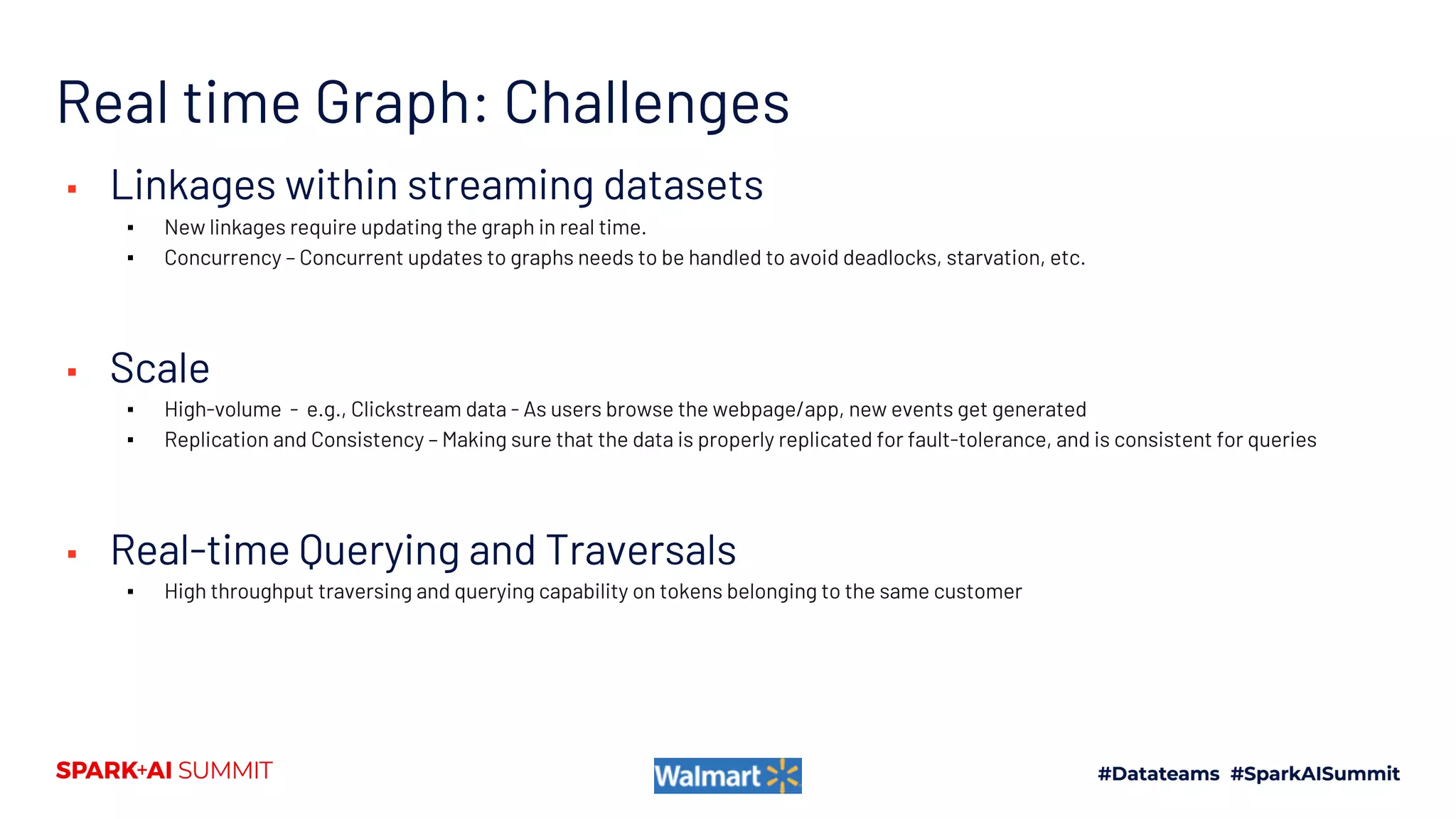

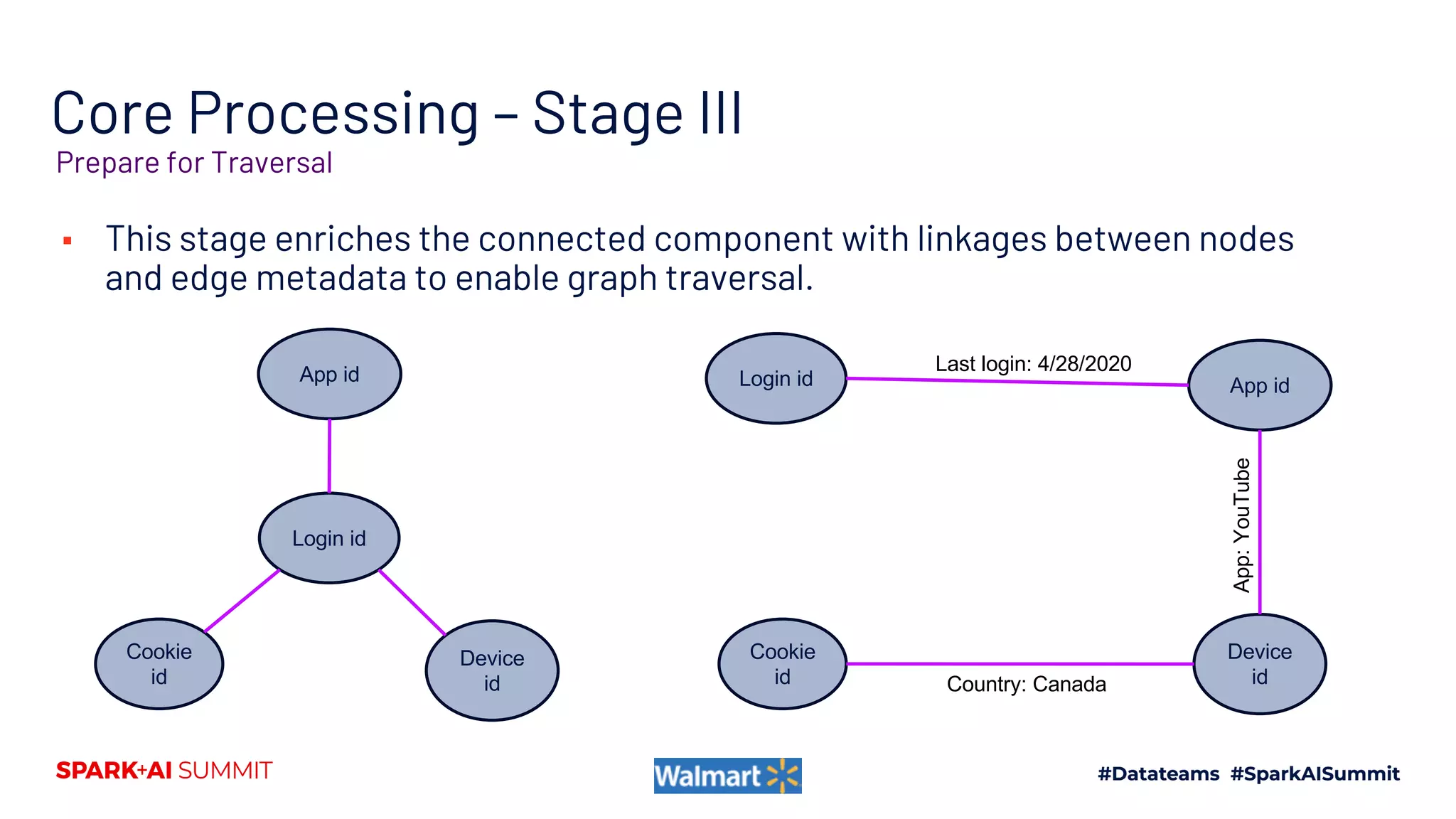

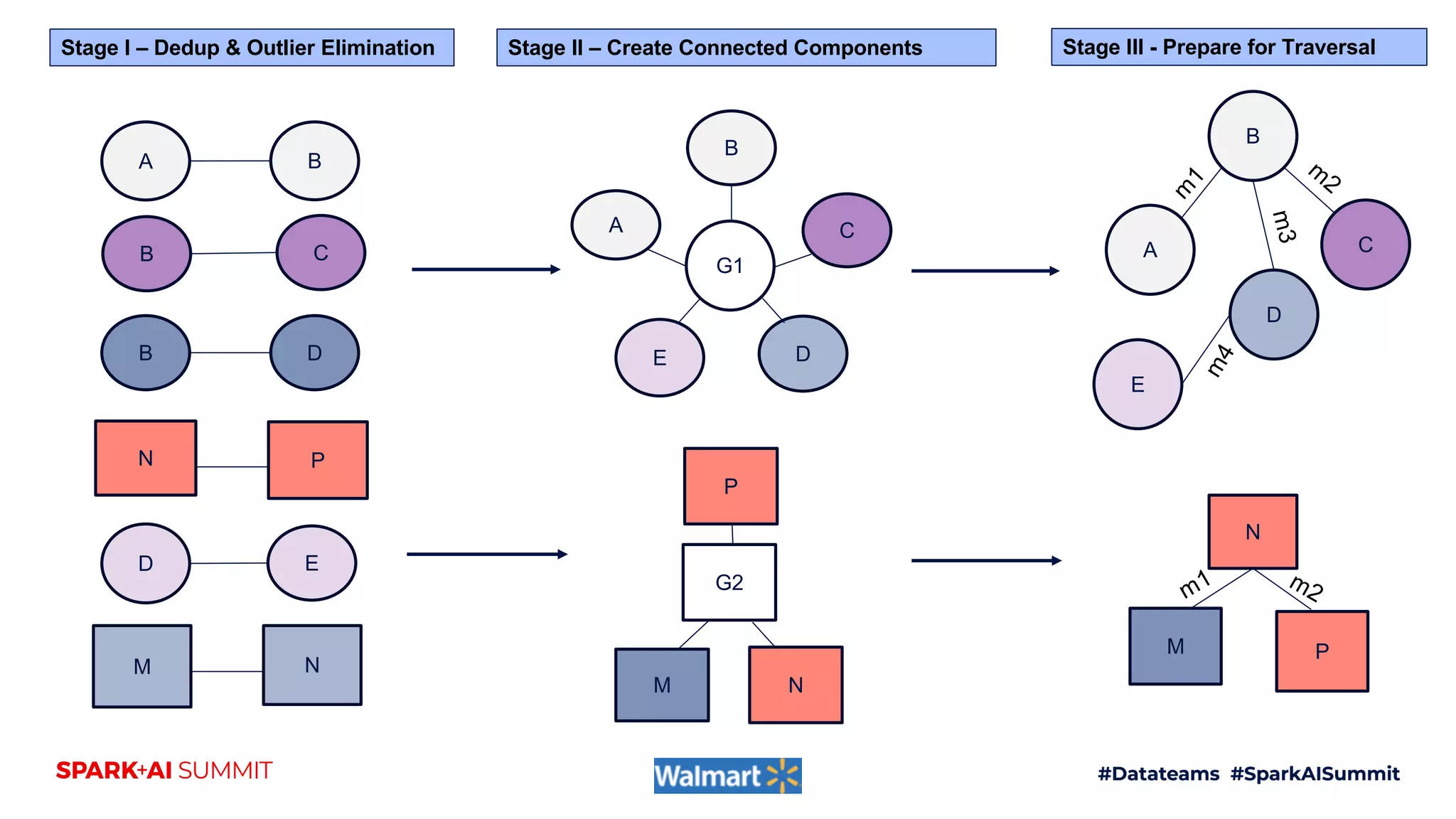

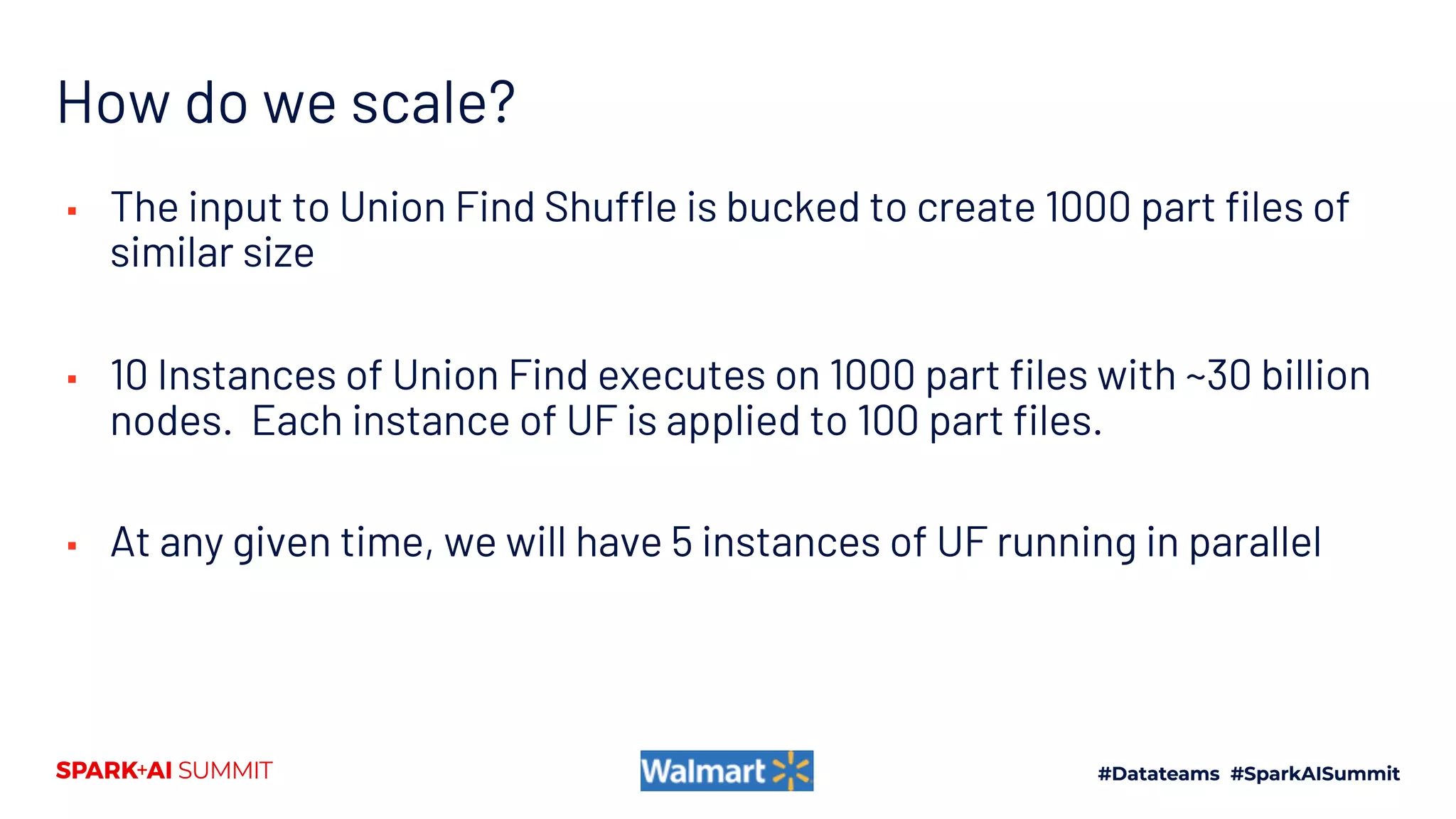

Handling Heterogenous Linkages Stage I Stage II

Stage III - LCC

Stage III - SCC

a b tid1 tid

2

c

a b tid1 tid2 c

15 upstream tables

p q tid6 tid9 r

25B+ Raw Linkages &

30B+ Nodes

tid1 tid2 Linkage

metadata

tid1 tid2 Linkage

metadata

tid1_long tid2_long Linkage

metadata

tid6_long tgid120

tid1_long tgid1

tgid Linkages with Metadata

tgid1 {tgid: 1, tid: [aid,bid],

edges:[srcid,

destid,metadata],[] }

1-2

hrs

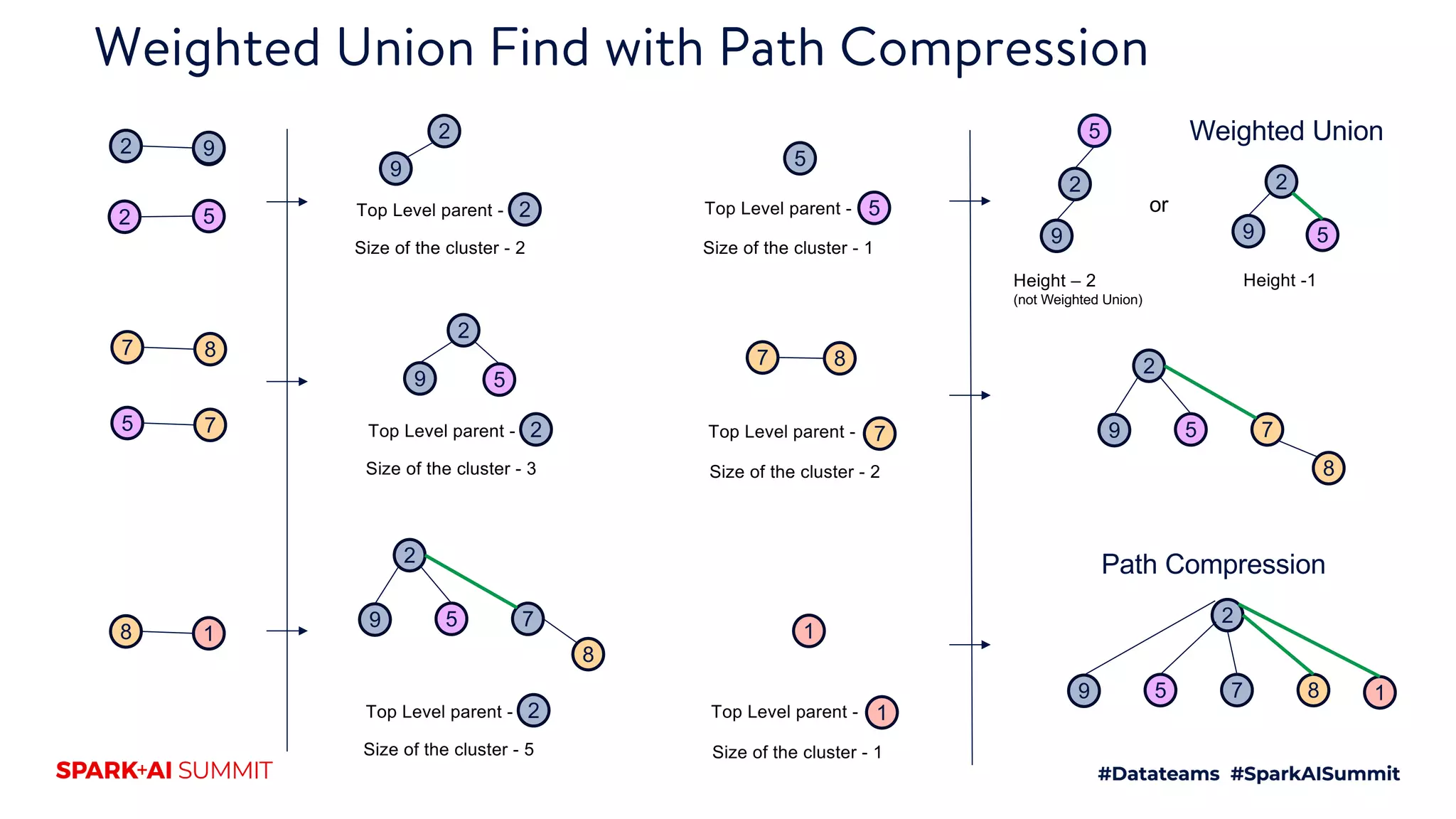

UnionFindShuffle

8-10hrs

1-2

hrs

Subgraphcreation

4-5hrs

tgid tid Linkages

(adj_list)

3 A1 [C1:m1, B1:m2, B2:m3]

2 C1 [A1:m1]

2 A2 B2:m2

3 B1 [A1:m2]

3 B2 [A1:m3]

tid Linkages

A1 [C1:m1, B1:m2]

A2 [B2:m2]

C1 [A1:m1]

B1 [A1:m2]

Give all aid-bid linkages which go via

cid

Traversal request

Give all A– B linkages where

criteria= m1,m2

Traversal request on LCC

Filter tids on

m1,m2

Select

count(*)

by tgid

MR on

filtered

tgid

partitions

Dump

LCC table

> 5k

CC

startnode=A, endnode=B, criteria=m1,m2

tid Linkage

A1 B1

A2 B2

For each tid do

a bfs

(unidirected/bidi

rected)

Map

Map

Map

1 map per tgid

traversal

tid1 tid1_long

tid6 tid6_long

tid6 tgid120

tid1 tgid1

Tableextraction&

transformation30mins

20-30

mins

2.5

hrs

30

mins

20-30

mins](https://image.slidesharecdn.com/129sudhaviswanathansaigopalthota-200707194704/75/Building-Identity-Graphs-over-Heterogeneous-Data-38-2048.jpg)
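The traversal request on the slide (startnode=A, endnode=B, criteria=m1,m2, with a BFS per tid) can be sketched as follows. This is a hedged, single-machine illustration only. The slide describes a distributed job with one map per tgid, and nothing here reproduces that. The names `ADJ`, `node_type` and `traverse` are hypothetical, and reading a node's type from the first letter of its id is an assumption made purely for this example.

```python
from collections import deque

# Adjacency list copied from the slide's "tid Linkages" example:
# tid -> {neighbor tid: linkage metadata}
ADJ = {
    "A1": {"C1": "m1", "B1": "m2"},
    "A2": {"B2": "m2"},
    "C1": {"A1": "m1"},
    "B1": {"A1": "m2"},
}


def node_type(tid):
    """Assumption for this sketch: the first character encodes the id type."""
    return tid[0]


def traverse(adj, start_type, end_type, criteria):
    """BFS from every start-type node over edges whose metadata is allowed.

    Returns (start, end) pairs for every end-type node reachable that way.
    """
    pairs = []
    for start in sorted(adj):
        if node_type(start) != start_type:
            continue
        seen = {start}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            for neighbor, metadata in adj.get(current, {}).items():
                if metadata not in criteria or neighbor in seen:
                    continue
                seen.add(neighbor)
                if node_type(neighbor) == end_type:
                    pairs.append((start, neighbor))
                queue.append(neighbor)
    return pairs


result = traverse(ADJ, "A", "B", {"m1", "m2"})
print(result)
```

On this sample the sketch returns the same two linkages the slide lists as the result (A1 B1 and A2 B2). Narrowing `criteria` to `{"m1"}` returns no pairs, because both A-B edges carry m2.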