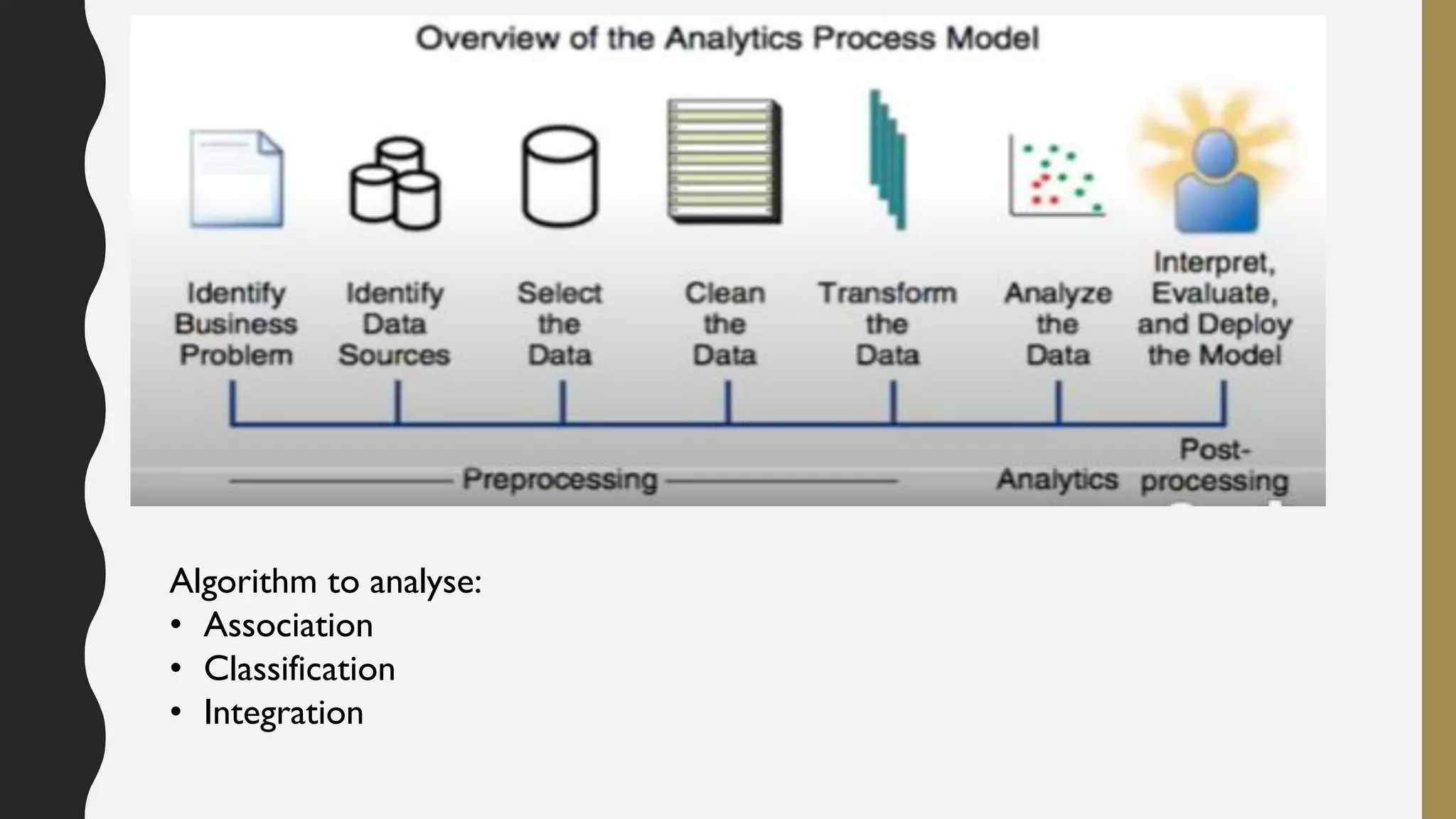

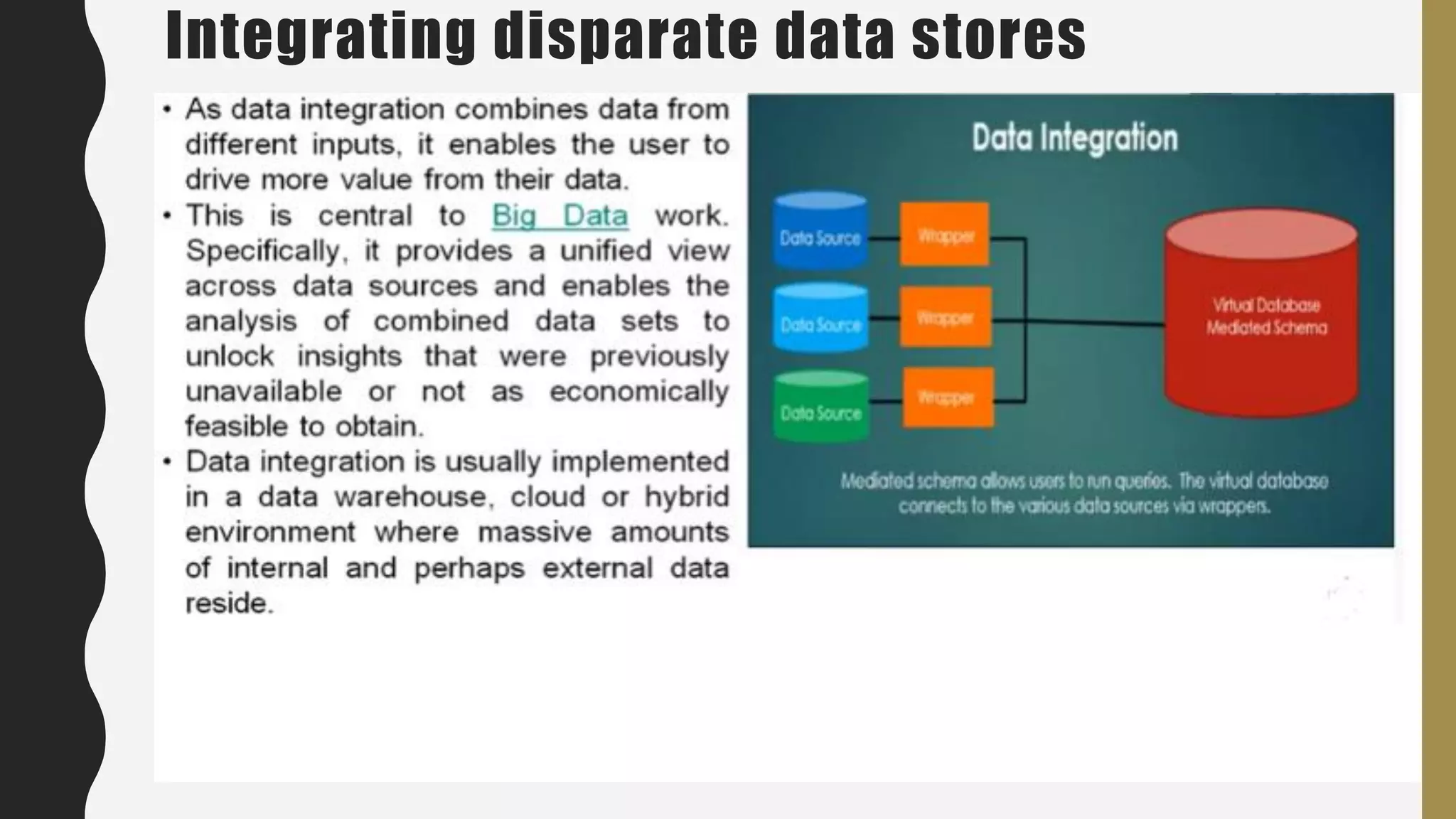

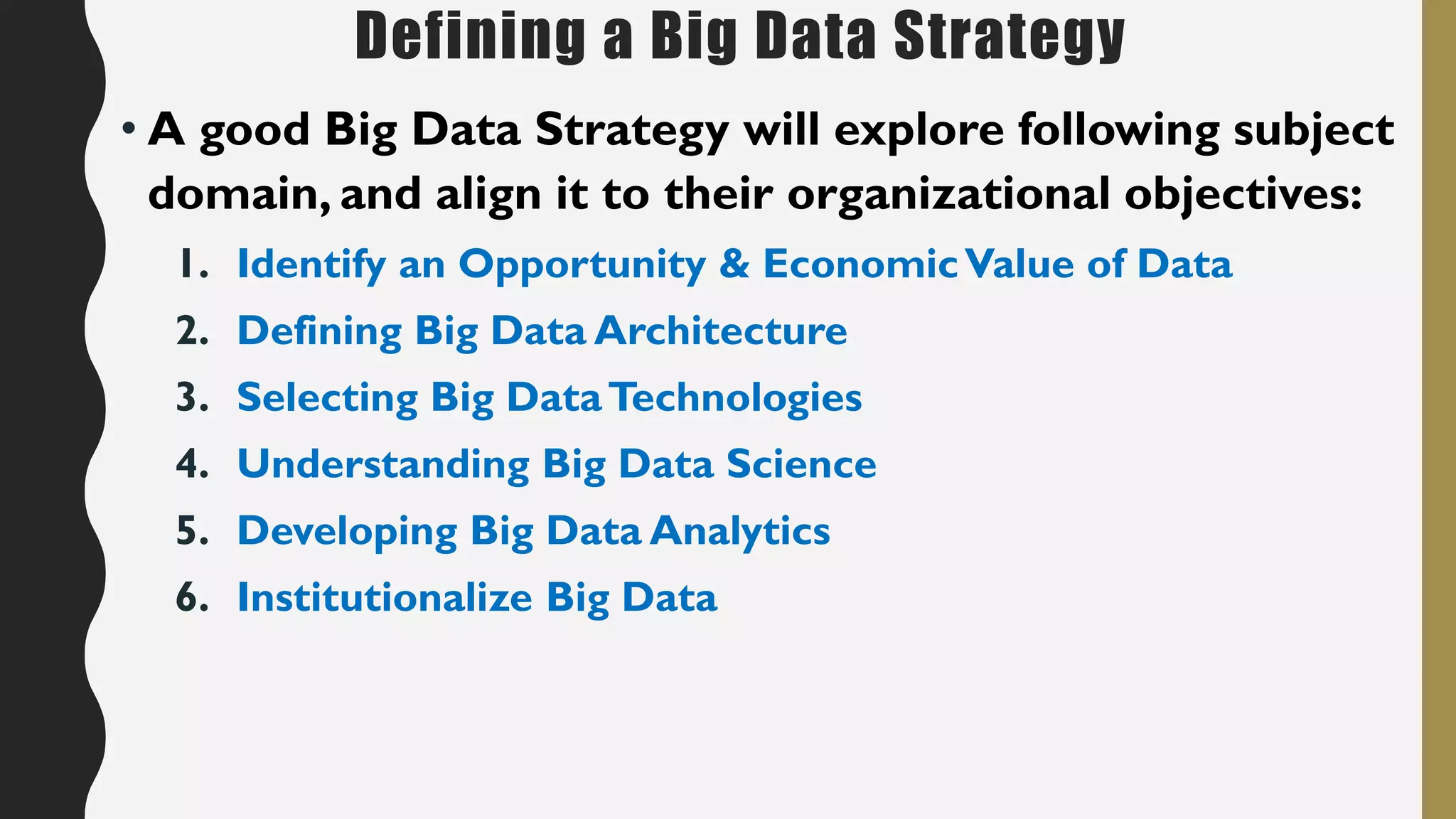

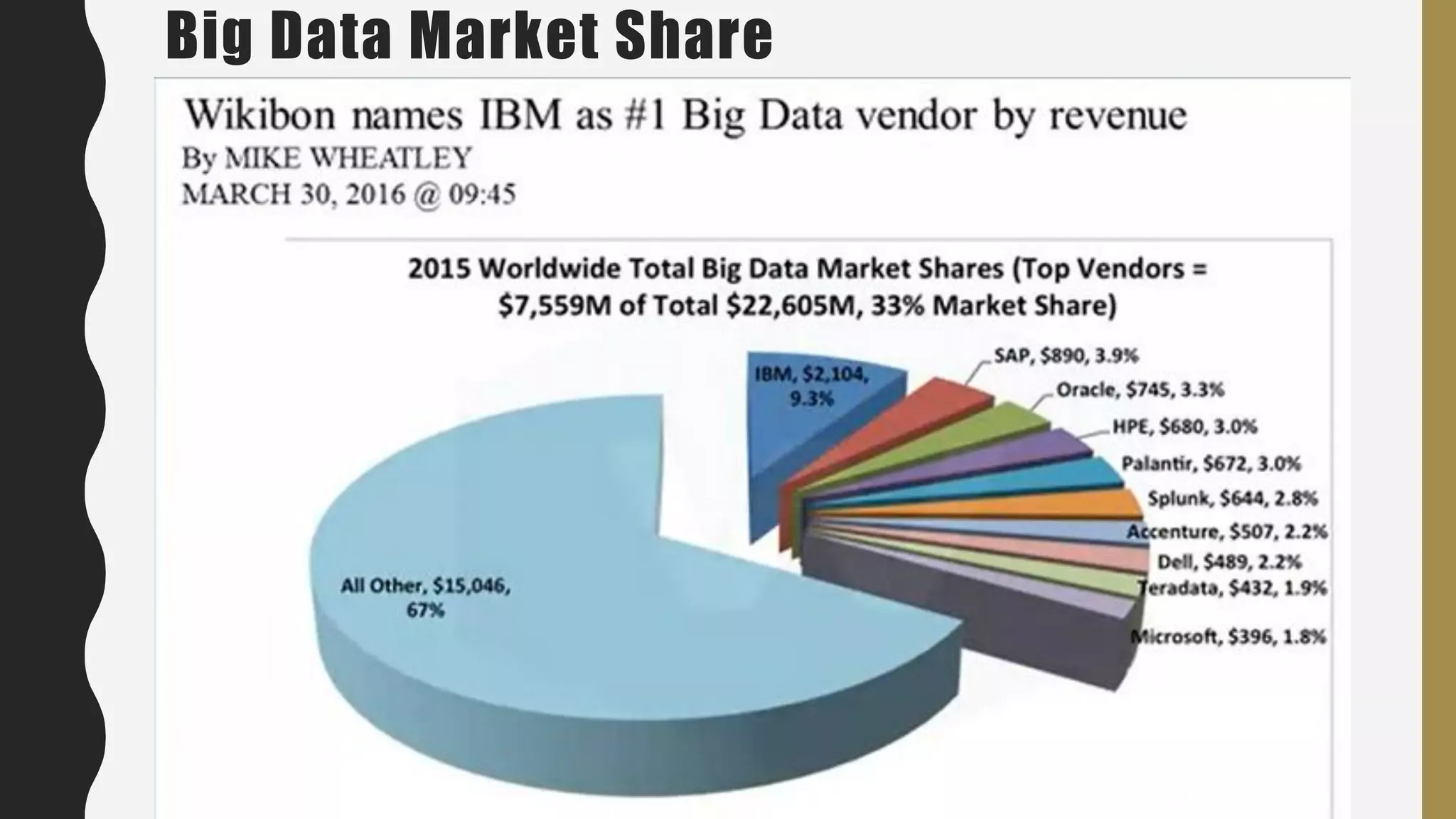

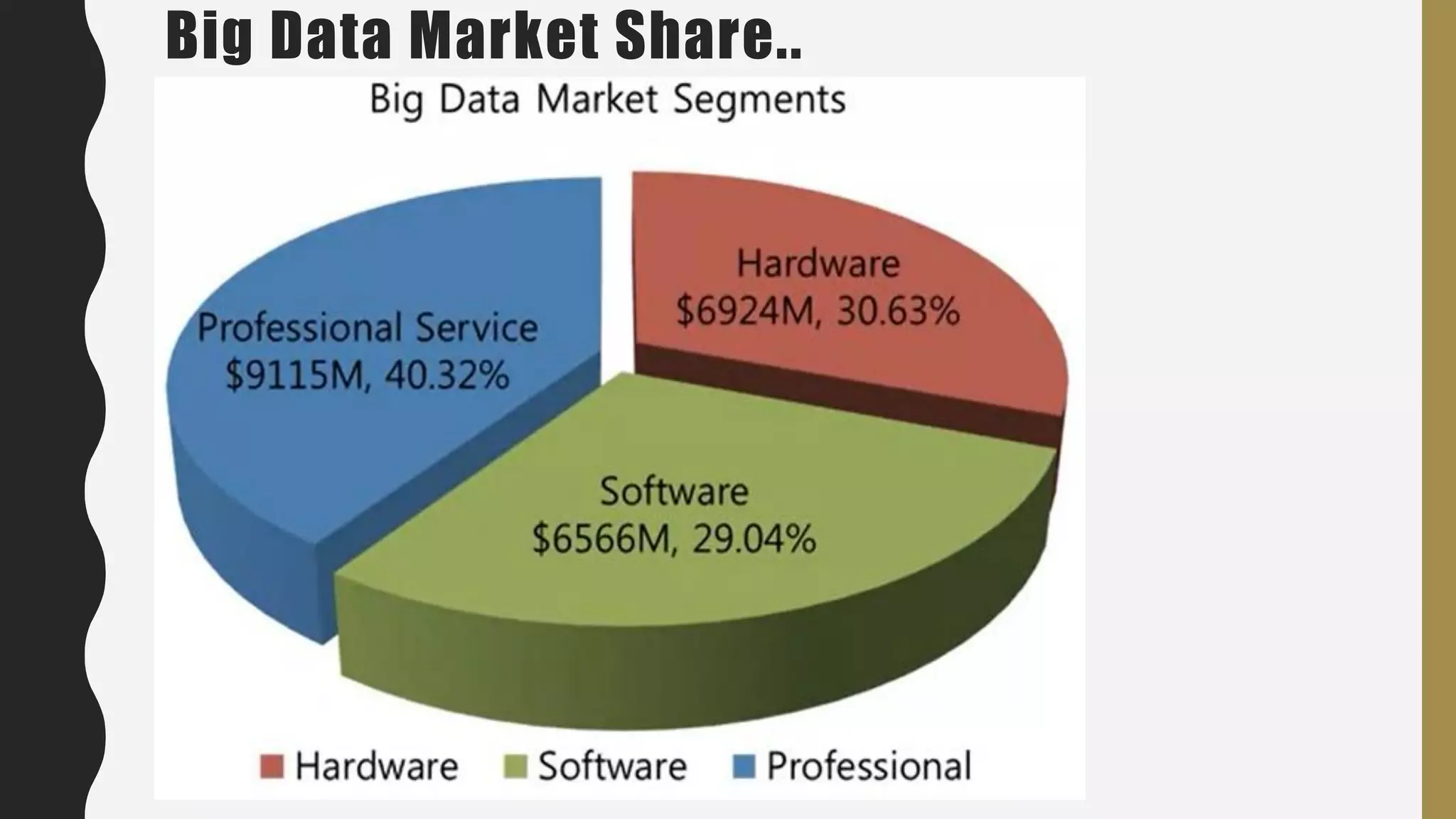

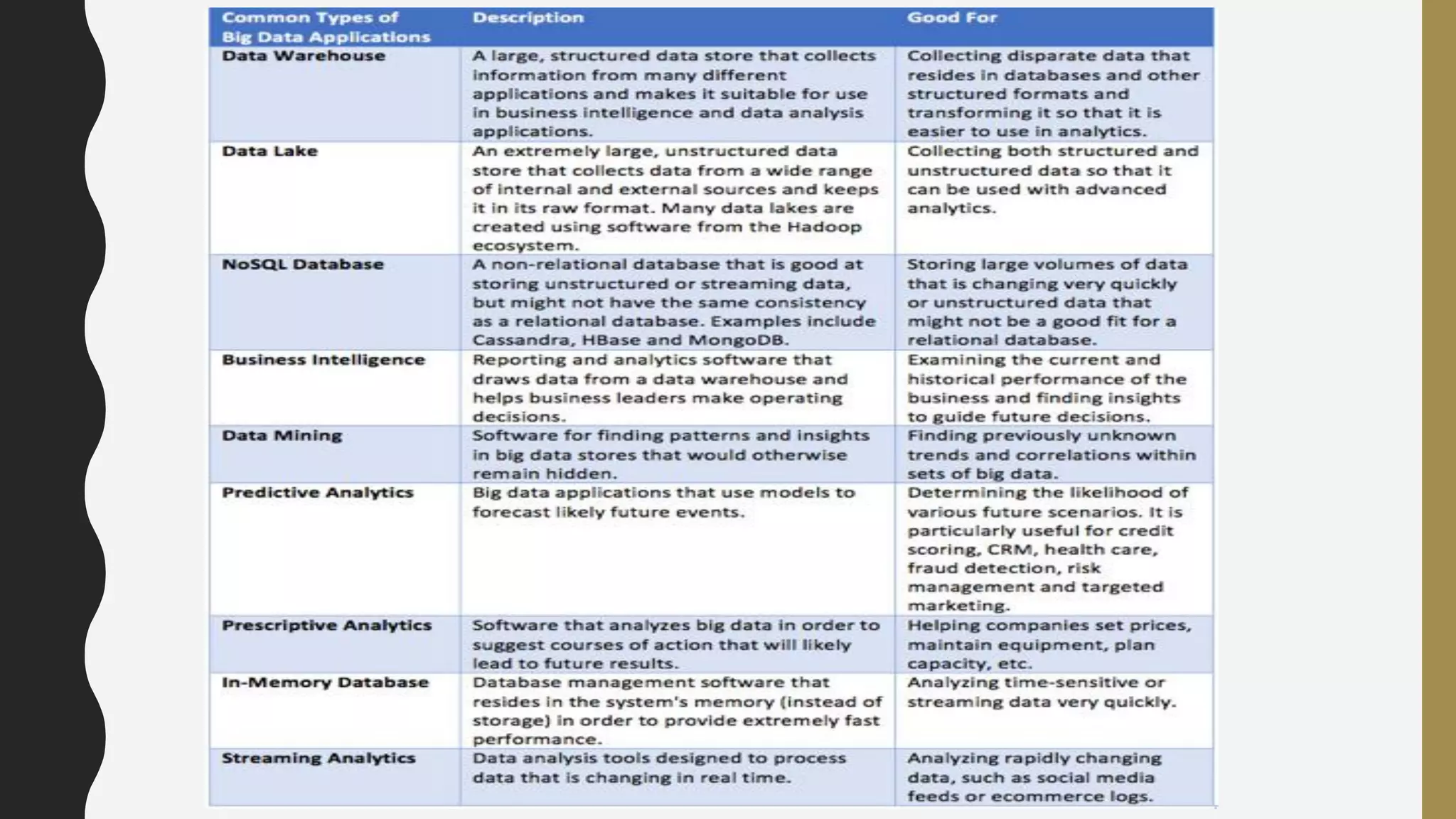

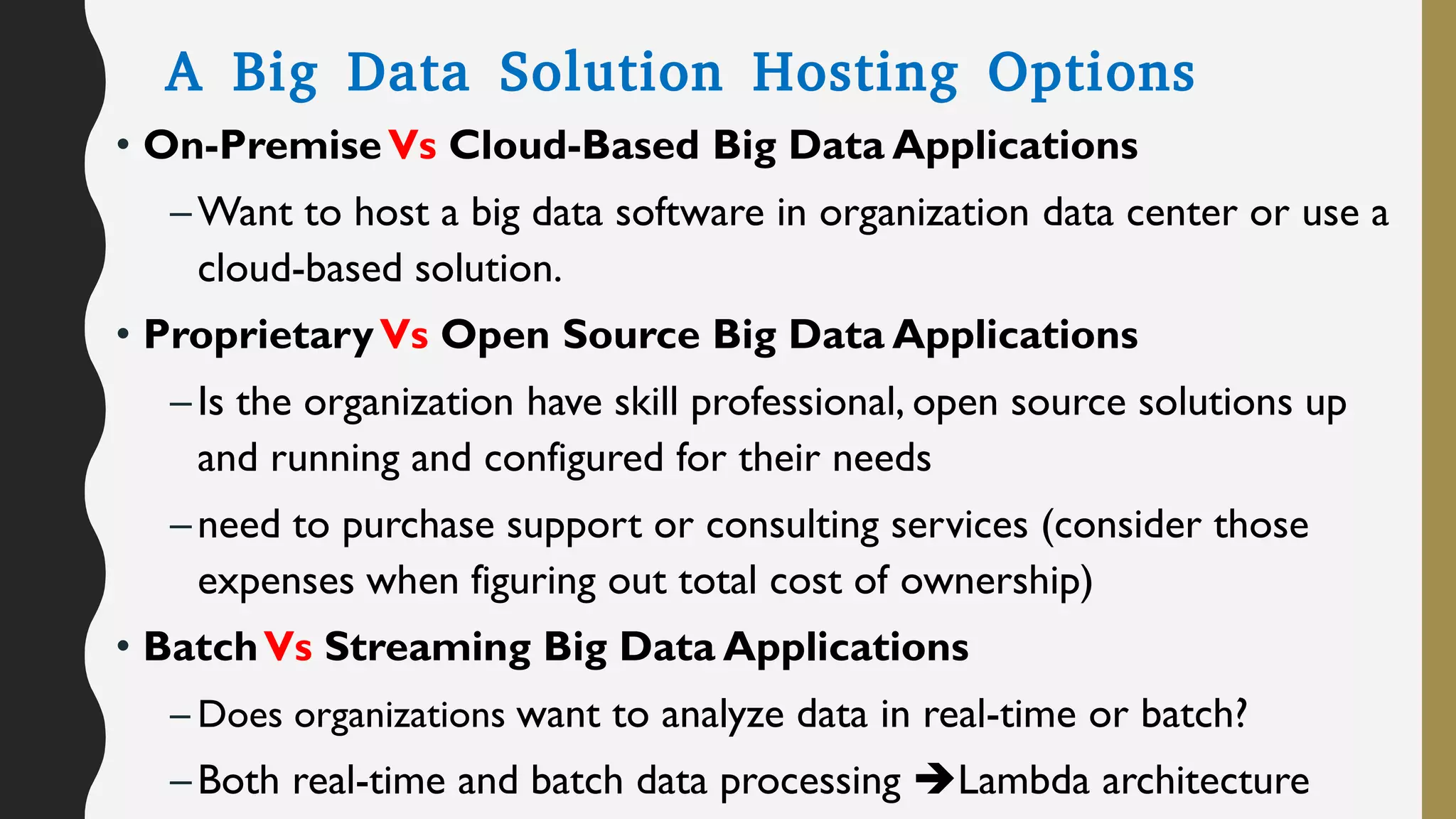

The document provides an overview of developing a big data strategy. It frames the strategy around several subject domains: identifying opportunities and the economic value of data, defining a big data architecture, selecting technologies, understanding data science, developing analytics, and institutionalizing big data. A good strategy explores these domains and aligns them with organizational objectives in order to realize a data-driven vision and give the organization direction.