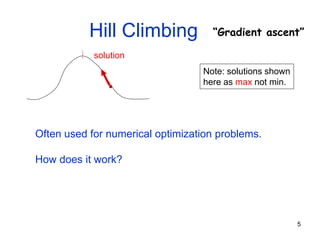

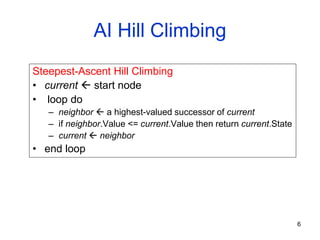

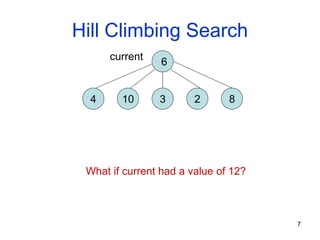

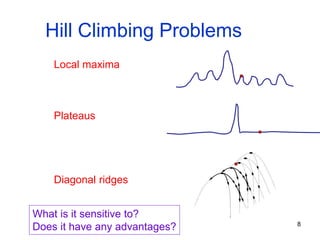

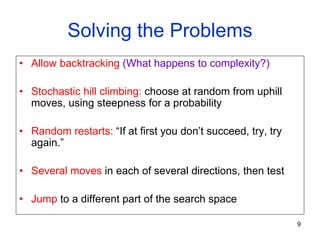

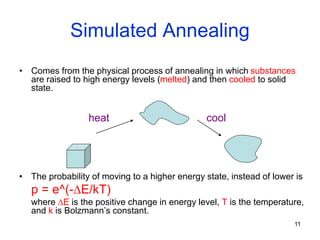

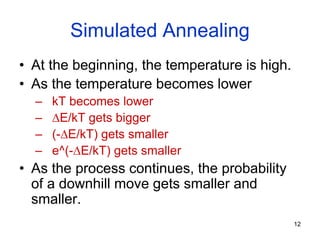

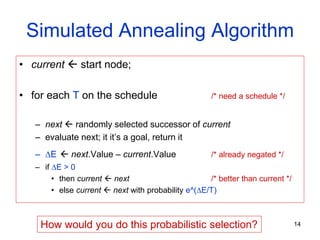

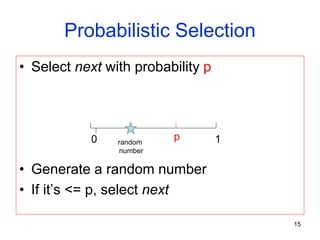

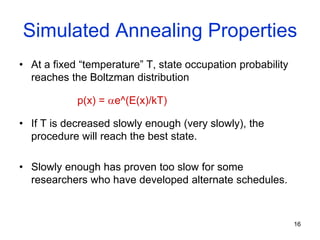

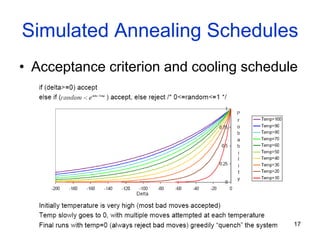

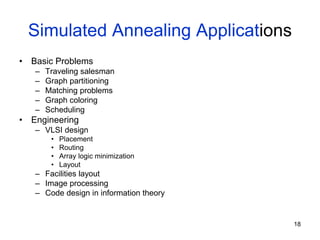

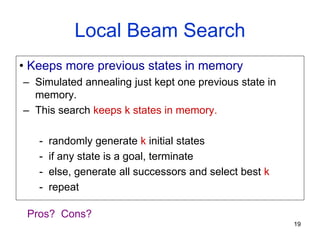

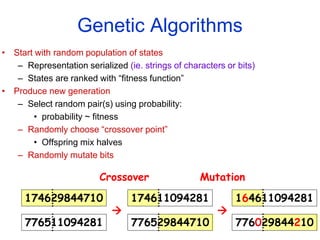

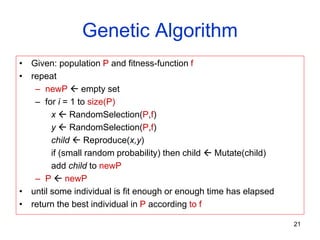

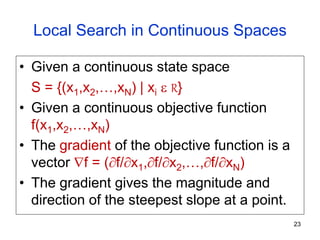

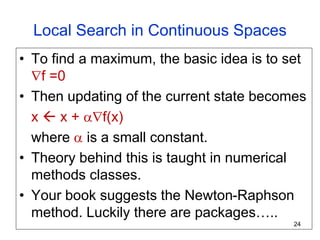

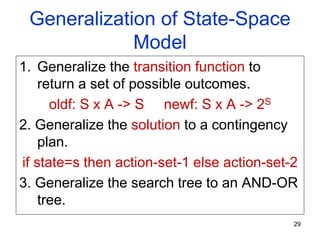

The document discusses several search methods, including the local search techniques hill climbing, simulated annealing, beam search, and genetic search. It also covers search in continuous state spaces, search with nondeterministic actions, and online search, in which the agent must perform actions and acquire sensor data as it goes. Local search methods start from an initial state and repeatedly try to improve the current state until they reach the goal or can improve no further. Simulated annealing also accepts occasional downhill moves, which lets it escape local maxima and explore more of the search space.
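To make the contrast between hill climbing and simulated annealing concrete, here is a minimal Python sketch. It is not taken from the slides: the toy `landscape`, the `neighbors` and `value` helpers, and the parameters `t0`, `cooling`, and `steps` are all illustrative assumptions, and the geometric cooling schedule is just one common choice.

```python
import math
import random

# Hypothetical 1-D landscape: index = state, entry = value to maximize.
# It has a local maximum at index 2 and the global maximum at index 9.
landscape = [1, 3, 5, 4, 2, 1, 2, 4, 7, 9, 8, 6]

def value(i):
    return landscape[i]

def neighbors(i):
    # A state's neighbors are the adjacent indices that exist.
    return [j for j in (i - 1, i + 1) if 0 <= j < len(landscape)]

def hill_climb(start, neighbors, value):
    """Steepest-ascent hill climbing: stop when no neighbor is better."""
    current = start
    while True:
        best = max(neighbors(current), key=value)
        if value(best) <= value(current):
            return current  # local maximum (possibly not the global one)
        current = best

def simulated_annealing(start, neighbors, value,
                        t0=10.0, cooling=0.95, steps=2000, rng=None):
    """Accept every uphill move; accept a downhill move with
    probability exp(delta / t), where t shrinks over time."""
    rng = rng or random.Random(0)
    current = best = start
    t = t0
    for _ in range(steps):
        candidate = rng.choice(neighbors(current))
        delta = value(candidate) - value(current)
        if delta > 0 or rng.random() < math.exp(delta / t):
            current = candidate
            if value(current) > value(best):
                best = current  # remember the best state seen so far
        t = max(t * cooling, 1e-9)  # assumed geometric cooling schedule
    return best

print(hill_climb(0, neighbors, value))           # stuck at the local maximum
print(hill_climb(6, neighbors, value))           # reaches the global maximum
print(simulated_annealing(0, neighbors, value))  # depends on the random seed
```

Starting from state 0, hill climbing stops at index 2 because both of its neighbors are worse. Simulated annealing can step down through the valley while the temperature is still high, so it has a chance of reaching index 9, although a single run does not guarantee it.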