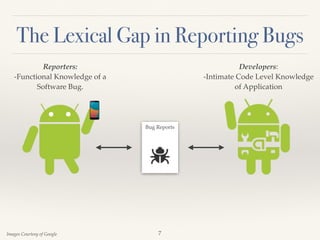

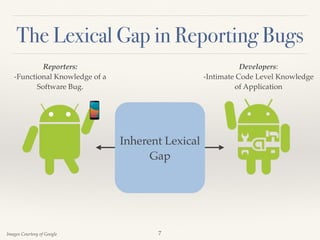

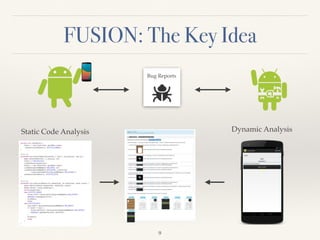

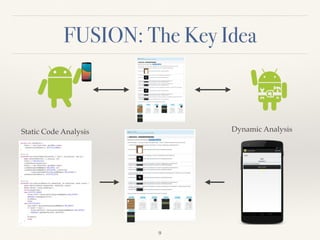

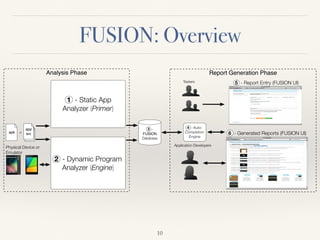

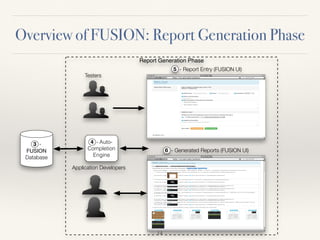

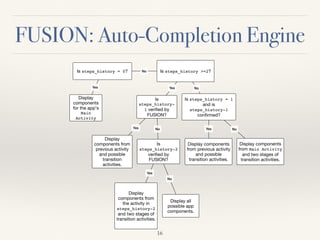

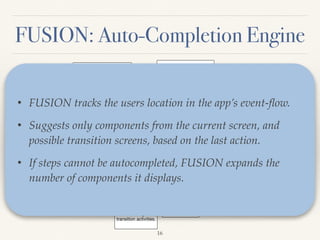

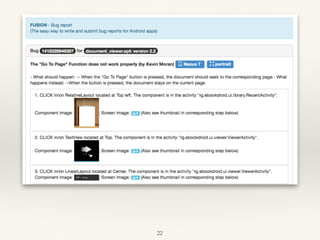

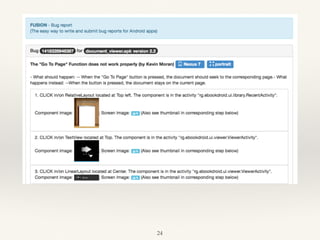

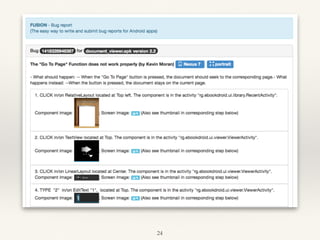

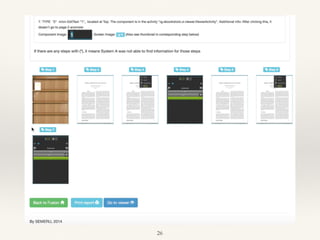

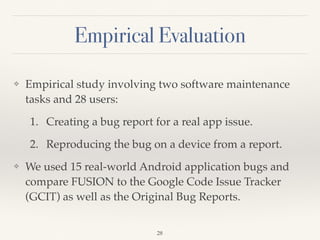

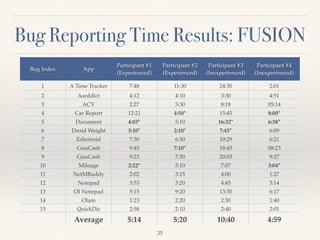

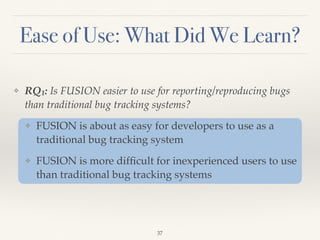

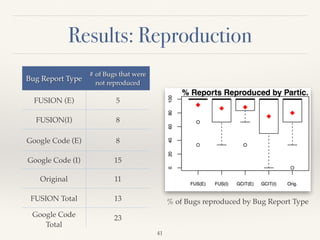

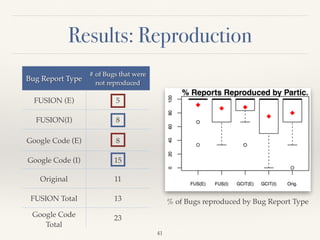

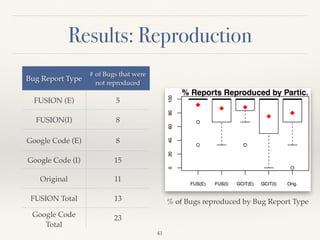

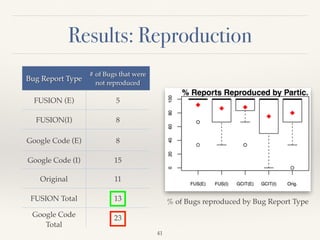

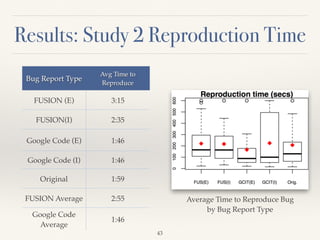

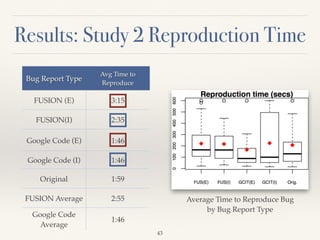

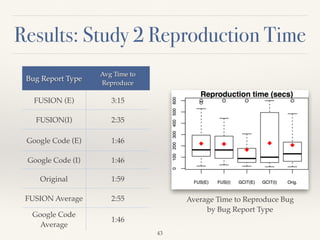

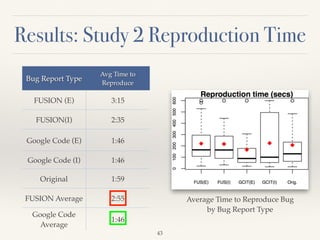

This presentation describes a method for auto-completing bug reports for Android applications, with the aim of easing software maintenance and improving user experience. It highlights how the lexical gap between users and developers makes bugs hard to report, and introduces a system named Fusion that uses static and dynamic analysis to support bug report generation. An empirical evaluation indicates that the system can improve the speed and quality of bug reporting compared to existing issue trackers.

![In-Field Failure Reproduction](https://image.slidesharecdn.com/fusionfse15presentationfinal-171009043656/85/Auto-completing-Bug-Reports-for-Android-Applications-9-320.jpg)

In-Field Failure Reproduction

❖ Allows for in-house debugging of field failures [1].

Diagram labels: Application, Instrumenter, Instrumented Application, Field Users, Debugging Information about Program Failure, Application Developers.

[1] Wei Jin and Alessandro Orso. 2012. BugRedux: reproducing field failures for in-house debugging. In Proceedings of the 34th International Conference on Software Engineering (ICSE '12).

![Results: Reproduction UP Responses](https://image.slidesharecdn.com/fusionfse15presentationfinal-171009043656/85/Auto-completing-Bug-Reports-for-Android-Applications-83-320.jpg)

Results: Reproduction UP Responses

❖ What information from this type of bug report did you find useful for reproducing the bug?

❖ “The detail[ed] steps to find where the next steps [were] was really useful and speeded up things.”

❖ What elements did you like least from this type of bug report?

❖ “Sometimes the steps were too overly specific/detailed.”