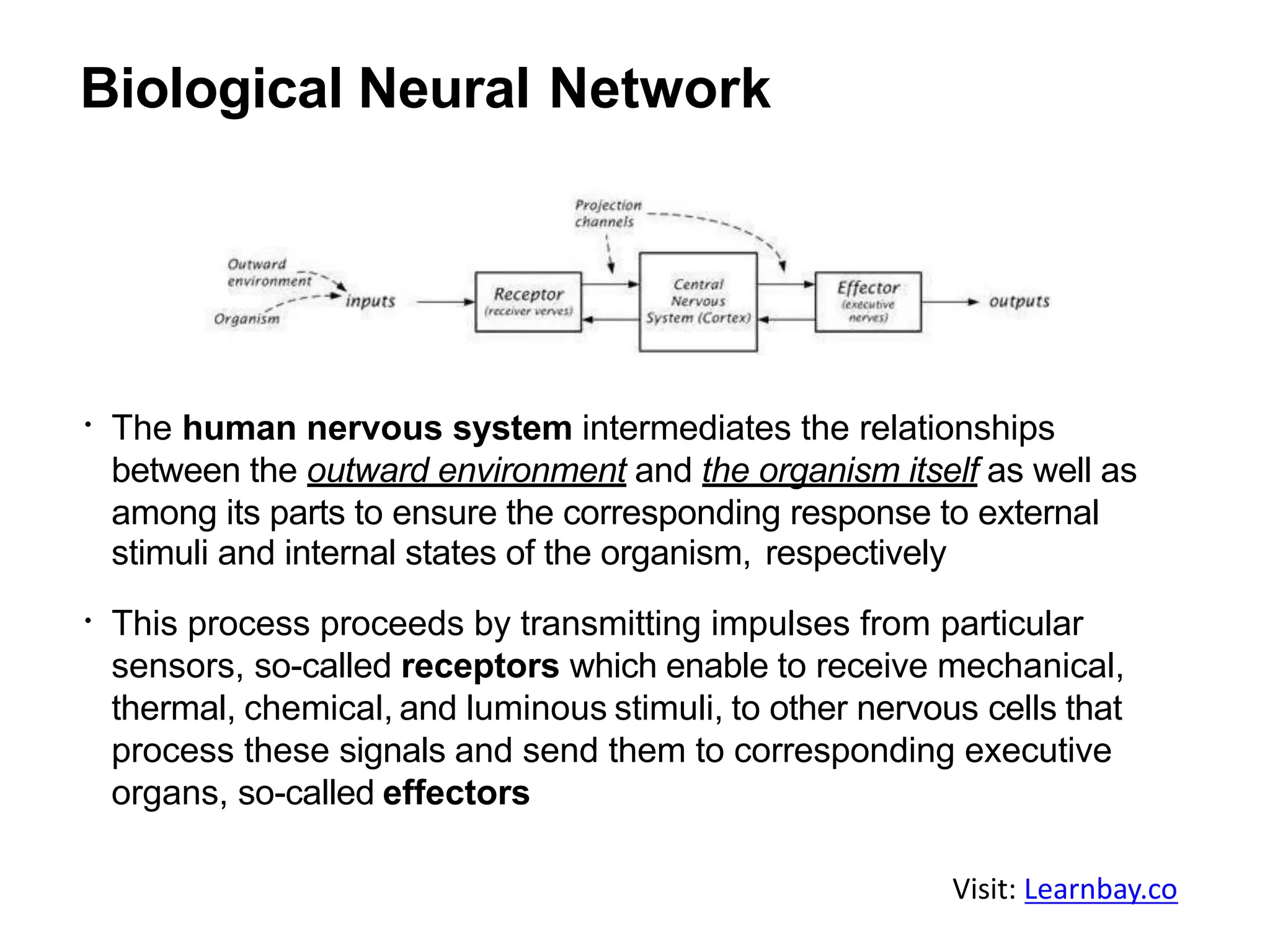

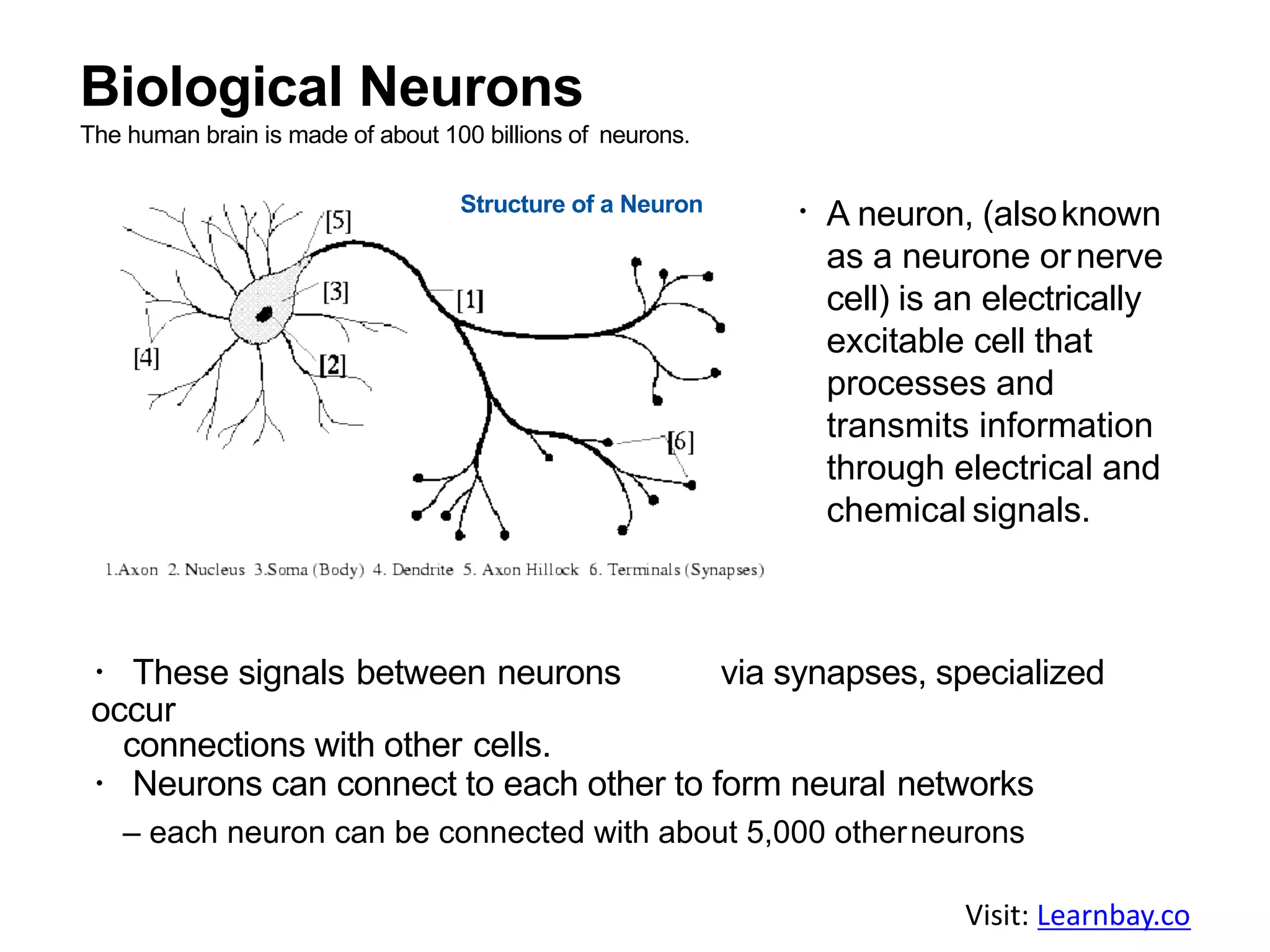

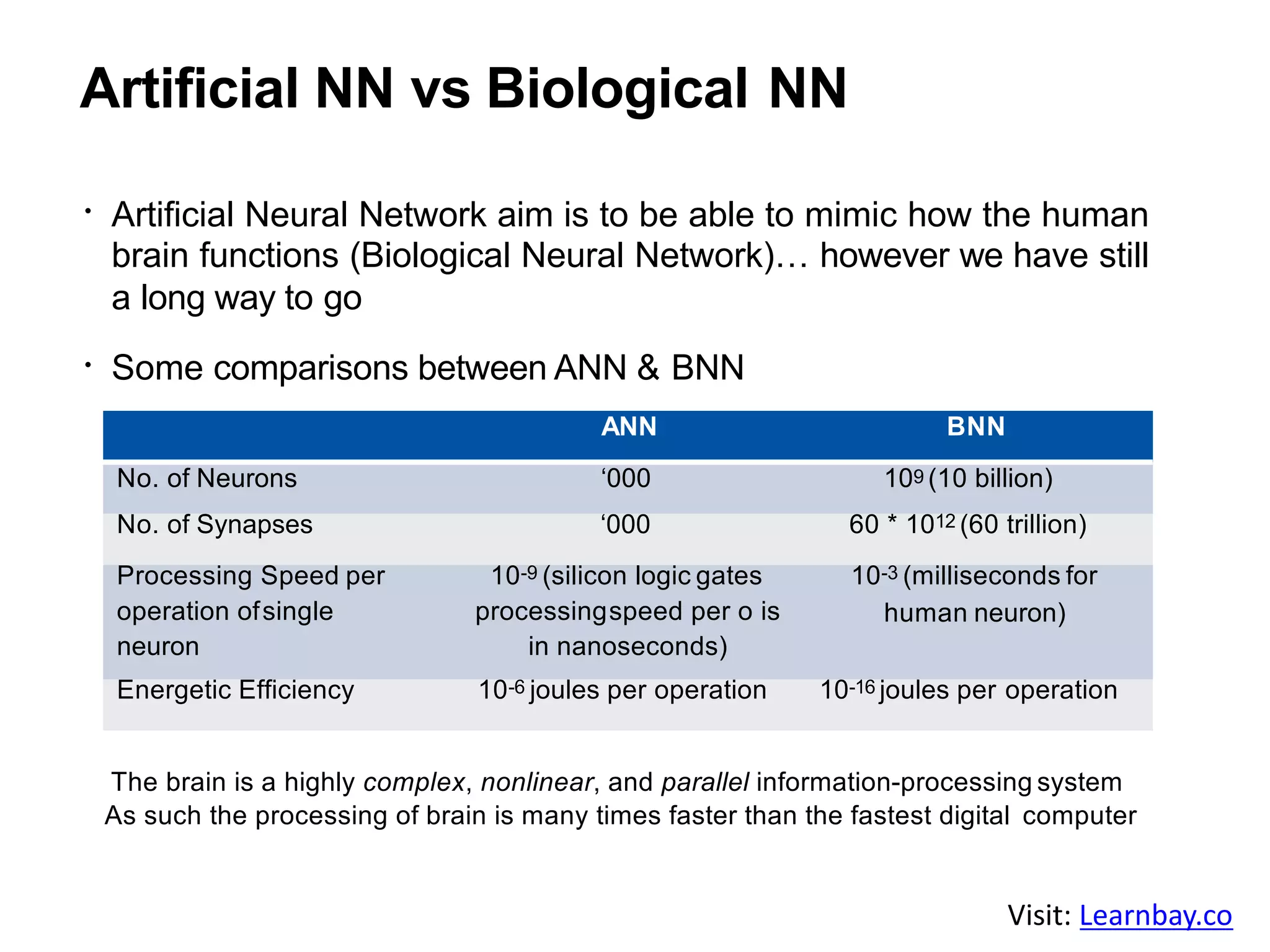

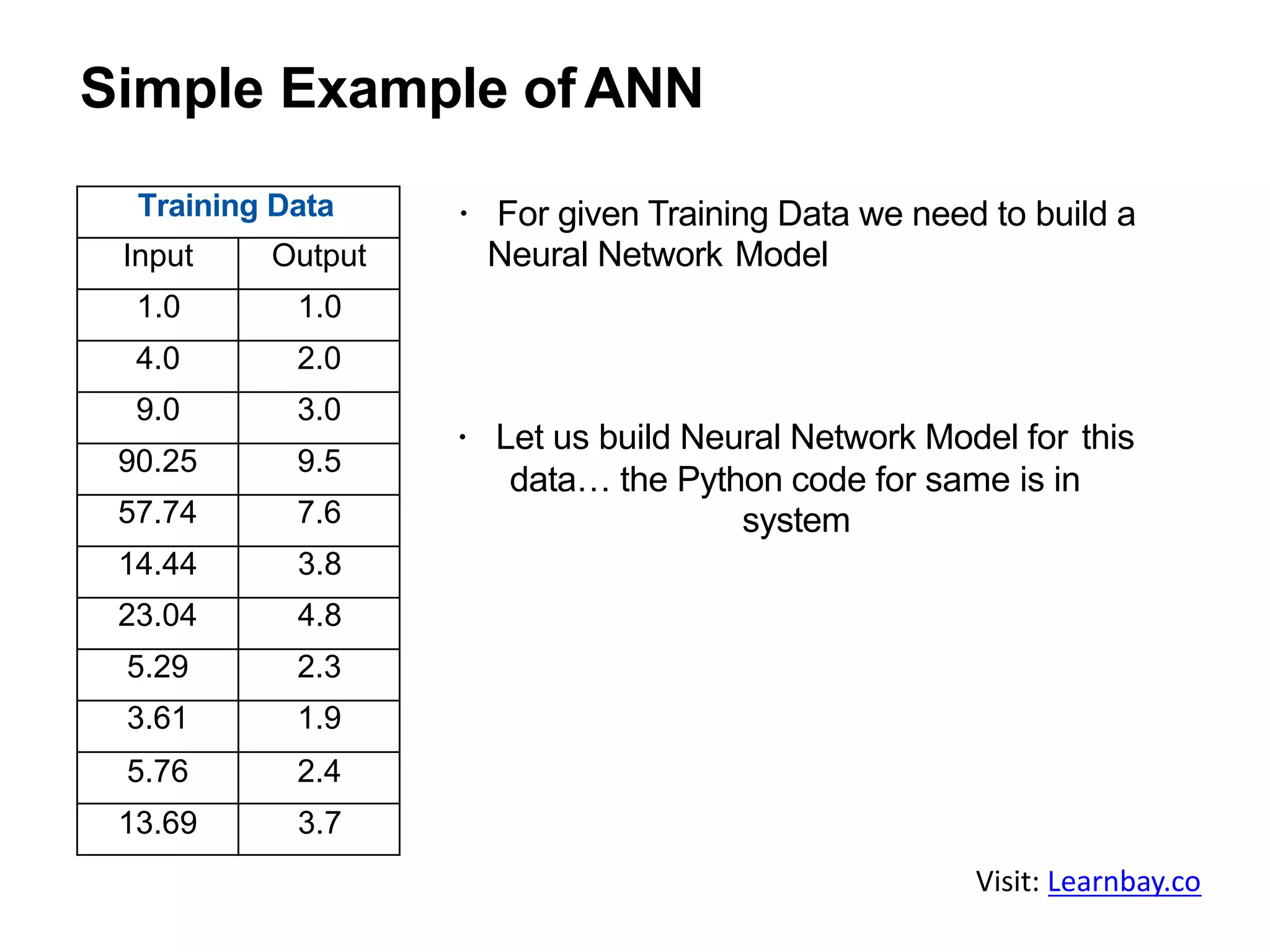

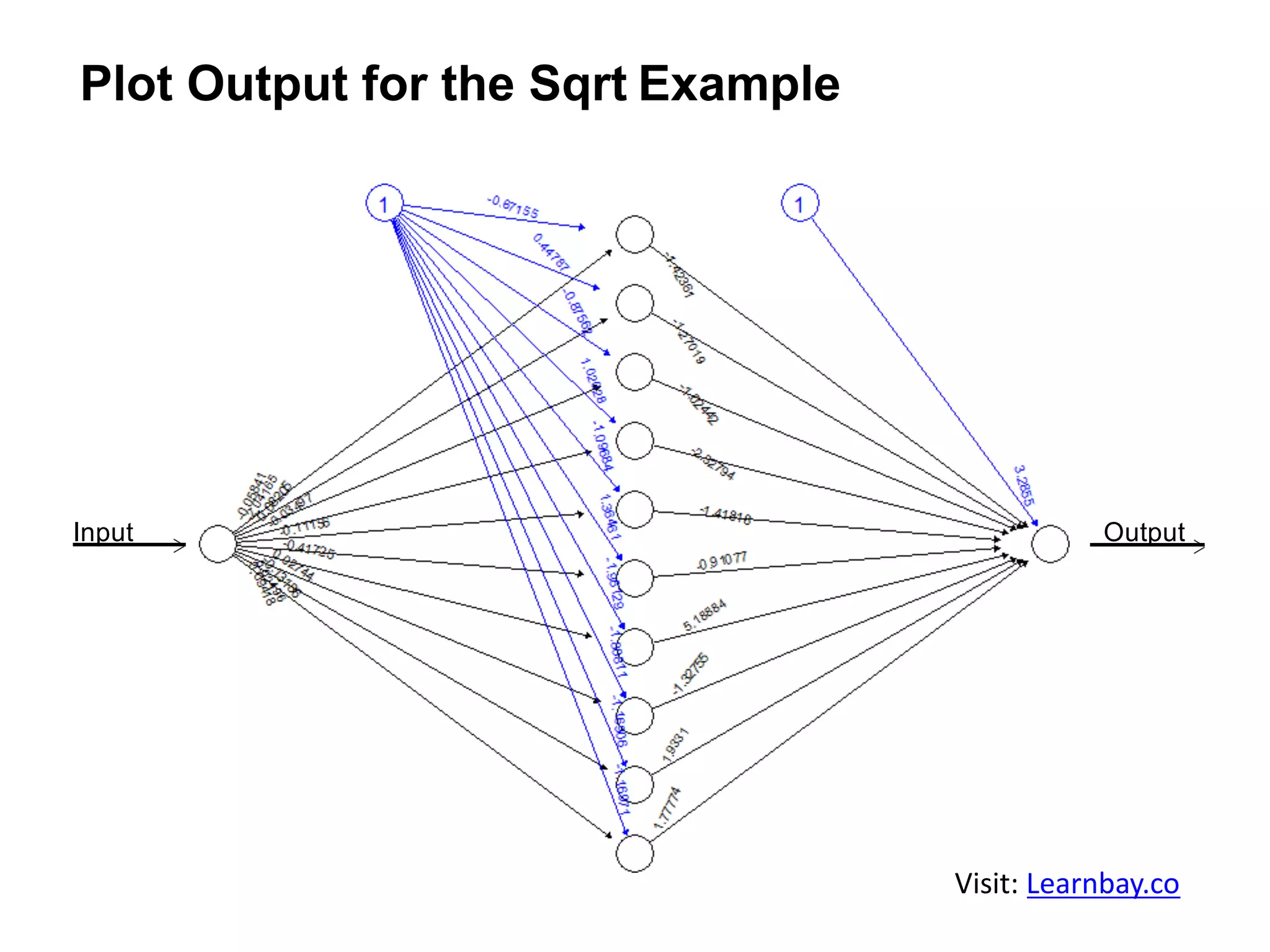

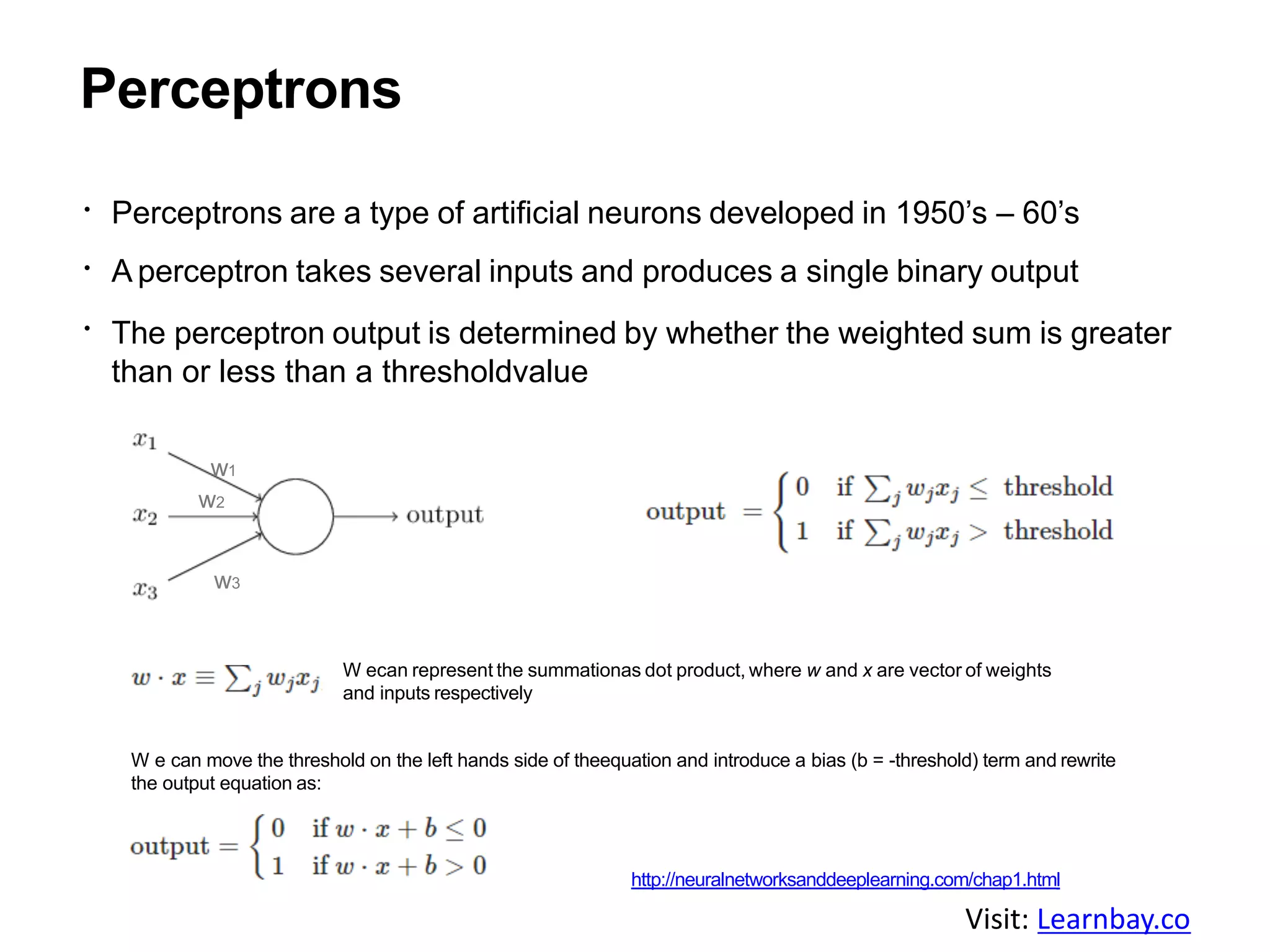

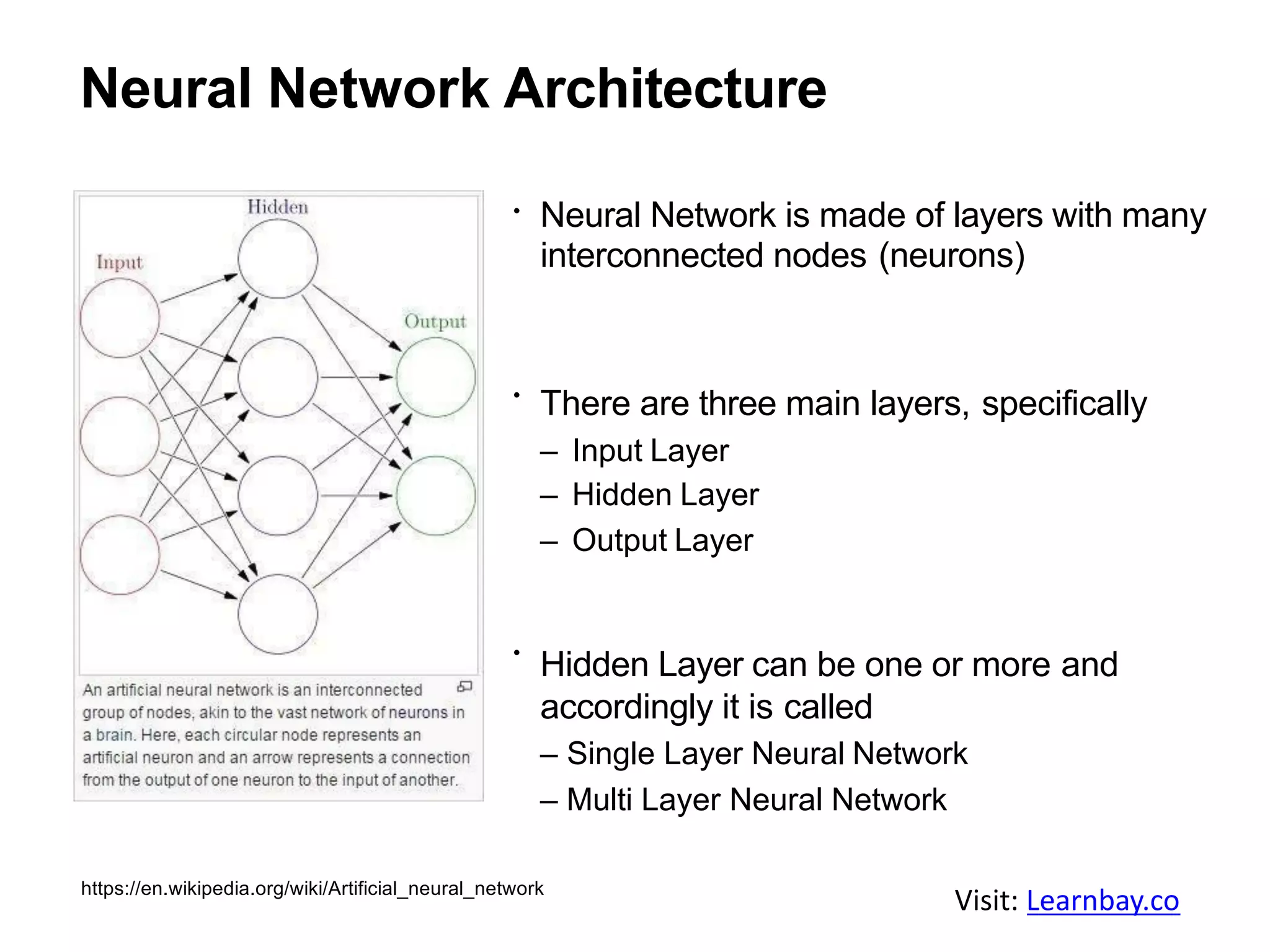

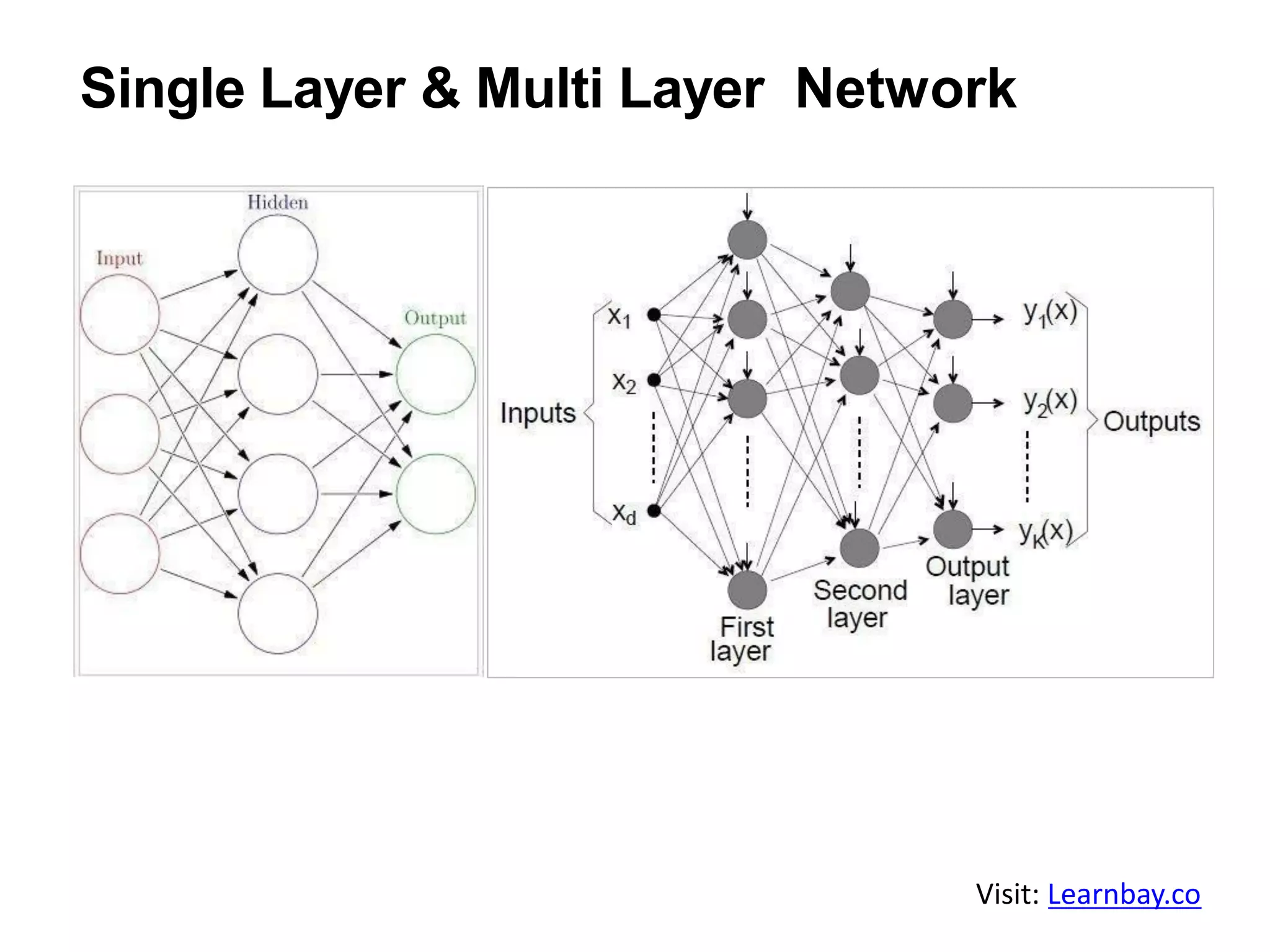

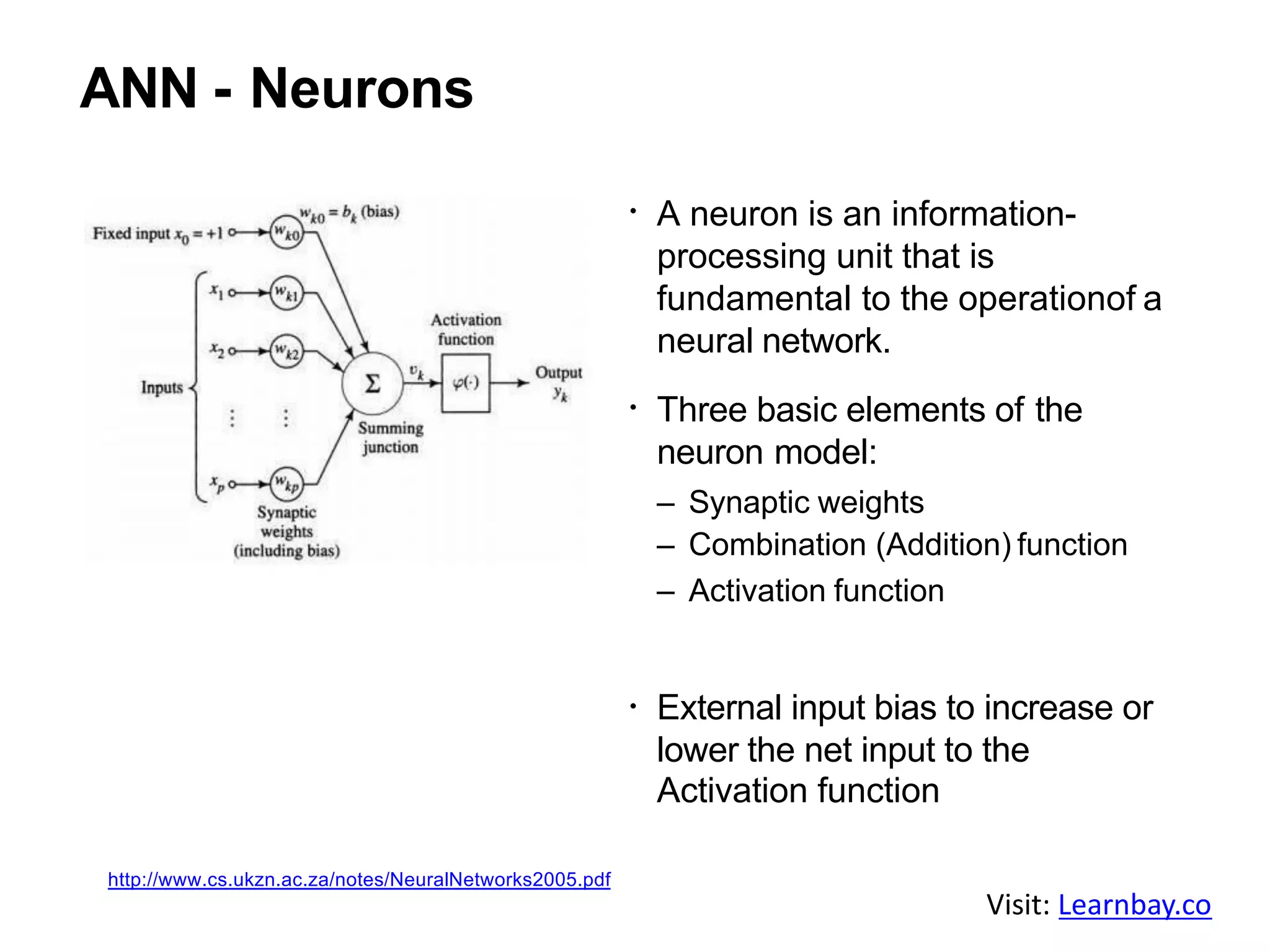

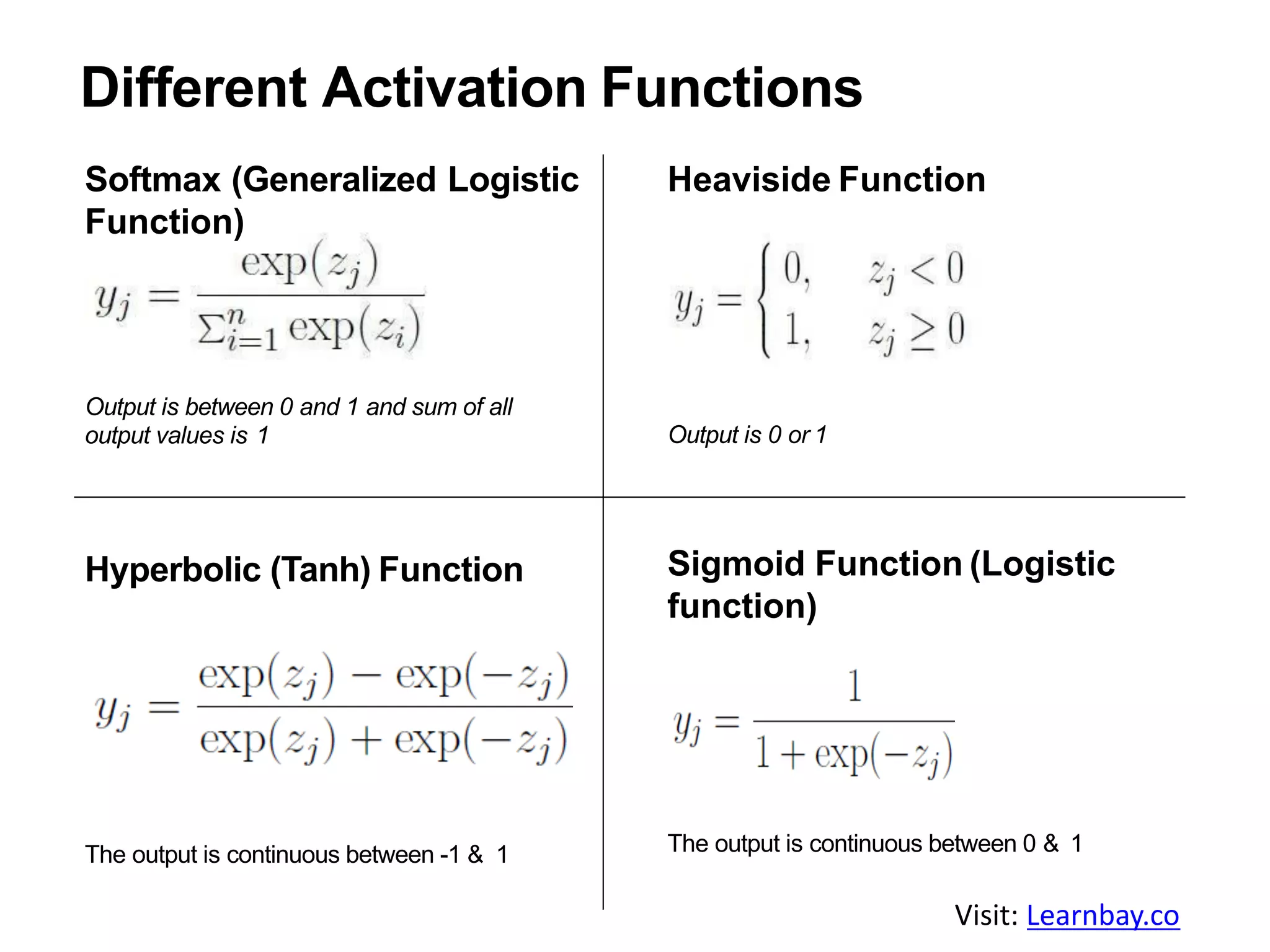

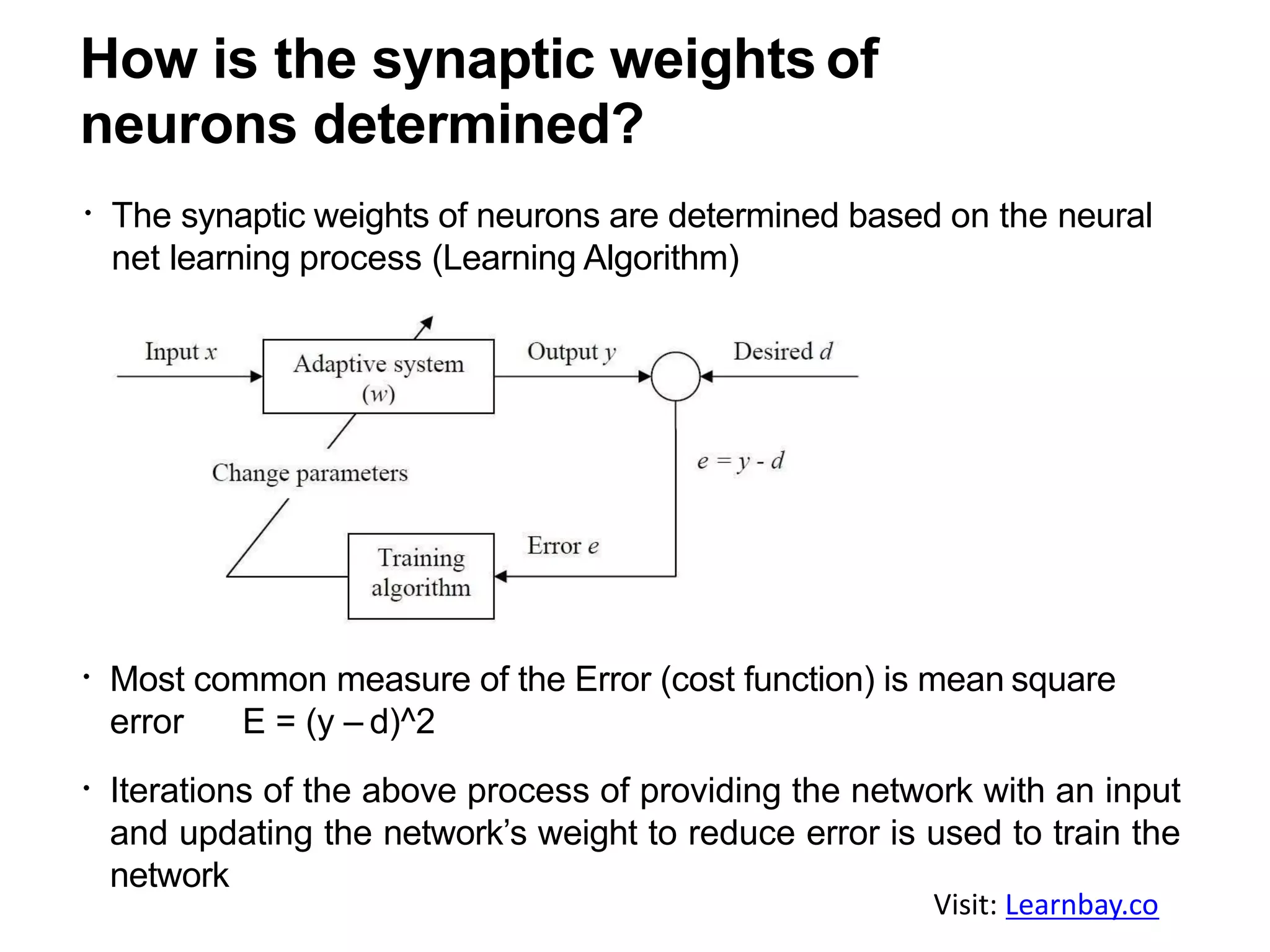

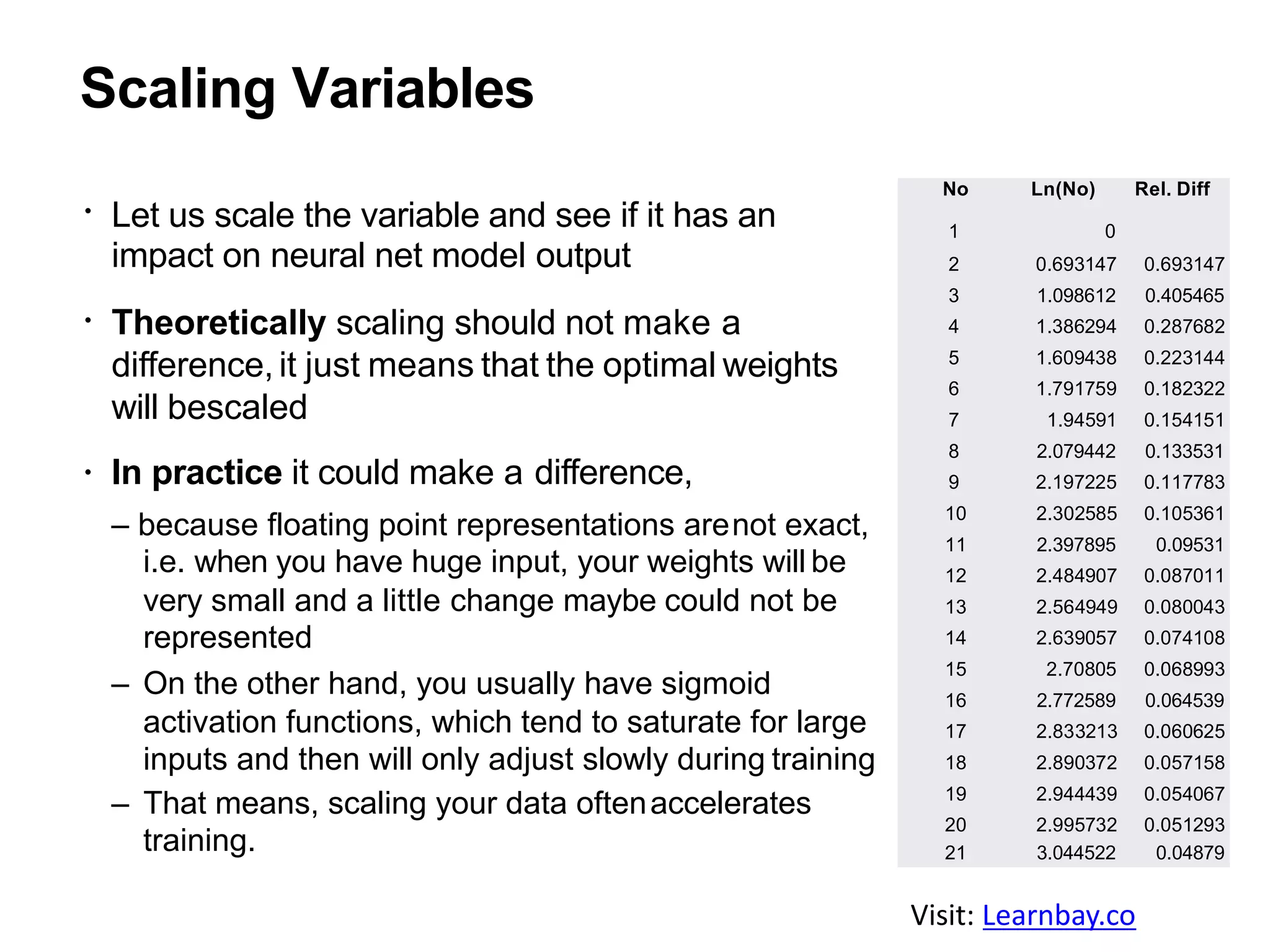

The document provides an overview of artificial neural networks (ANNs), explaining their structure and functionality and comparing them with biological neural networks (BNNs). It describes the characteristics of both systems, surveys applications of neural networks in fields such as AI and business analytics, and covers technical aspects such as training methods, activation functions, and overfitting. The text emphasizes the ability of neural networks to learn, generalize, and adapt to new conditions, while also outlining the challenges encountered in their development.