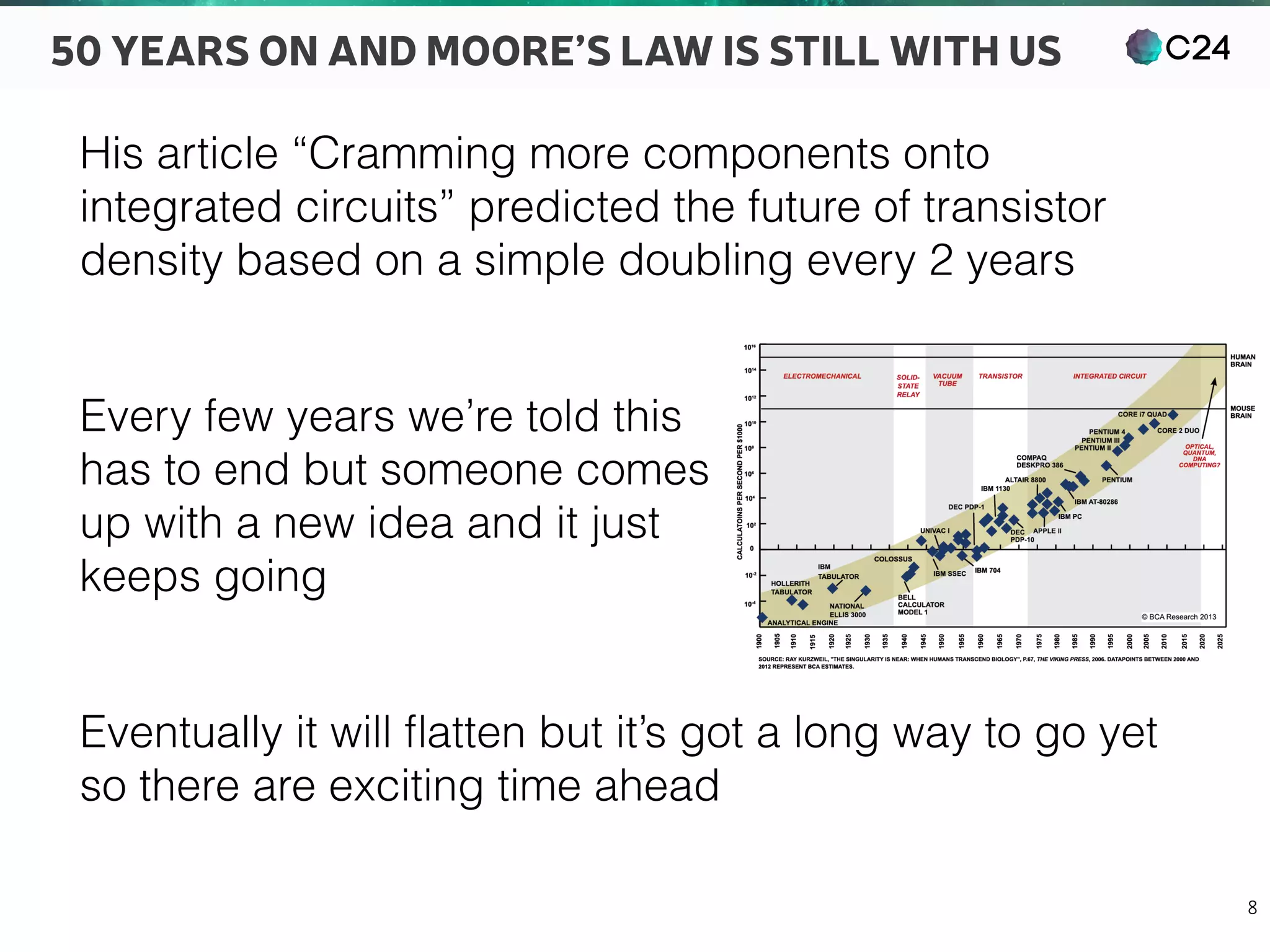

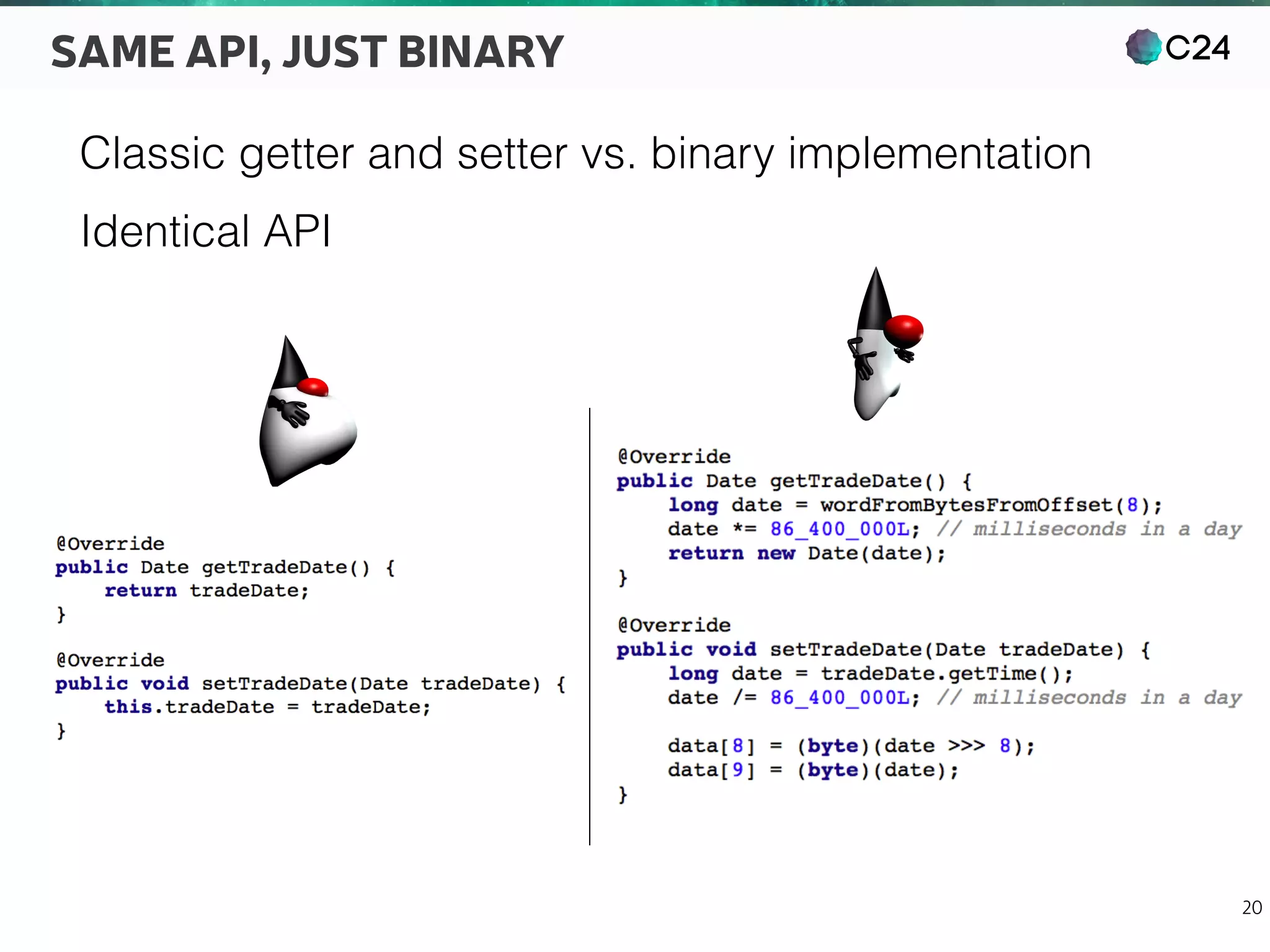

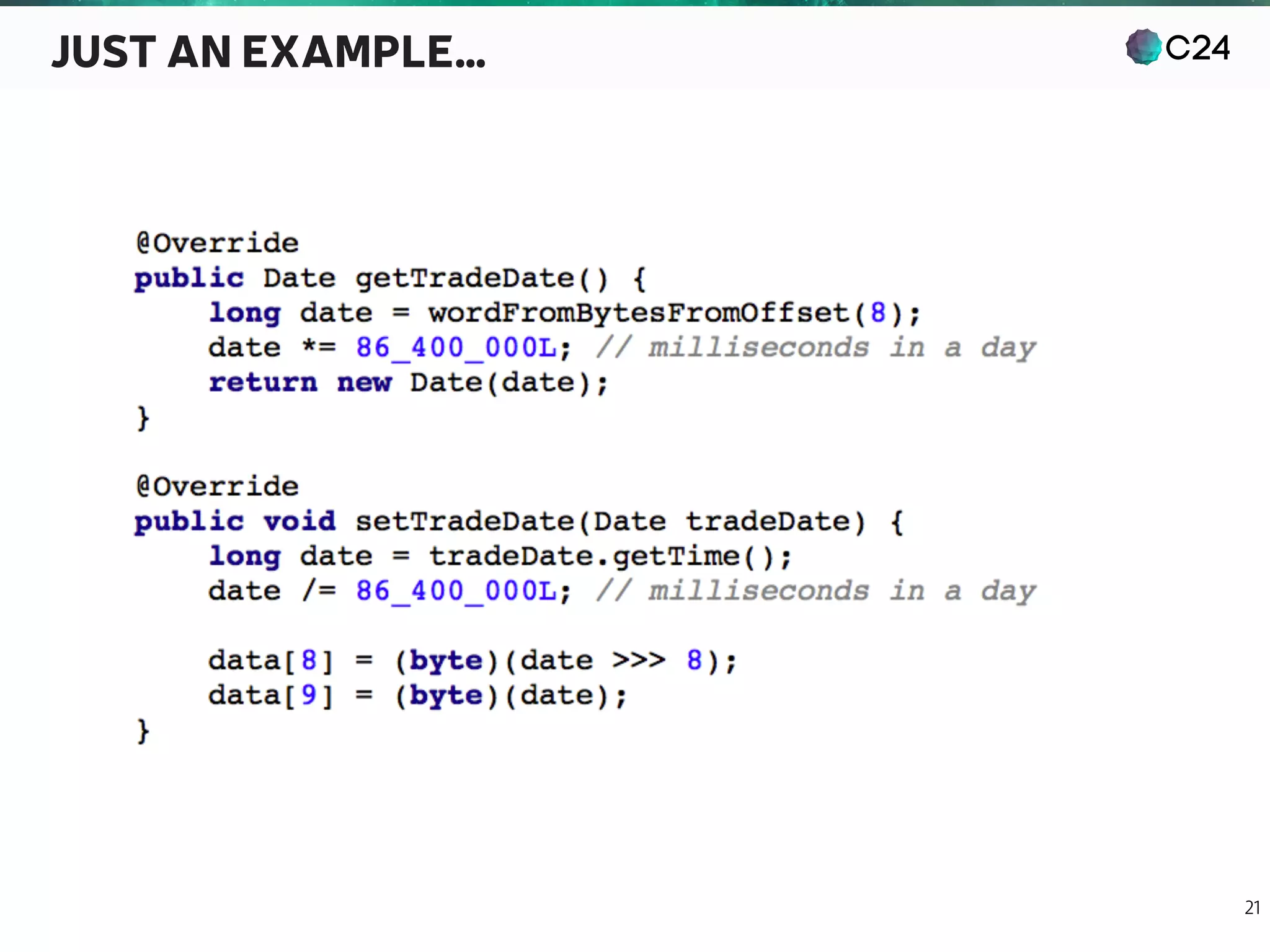

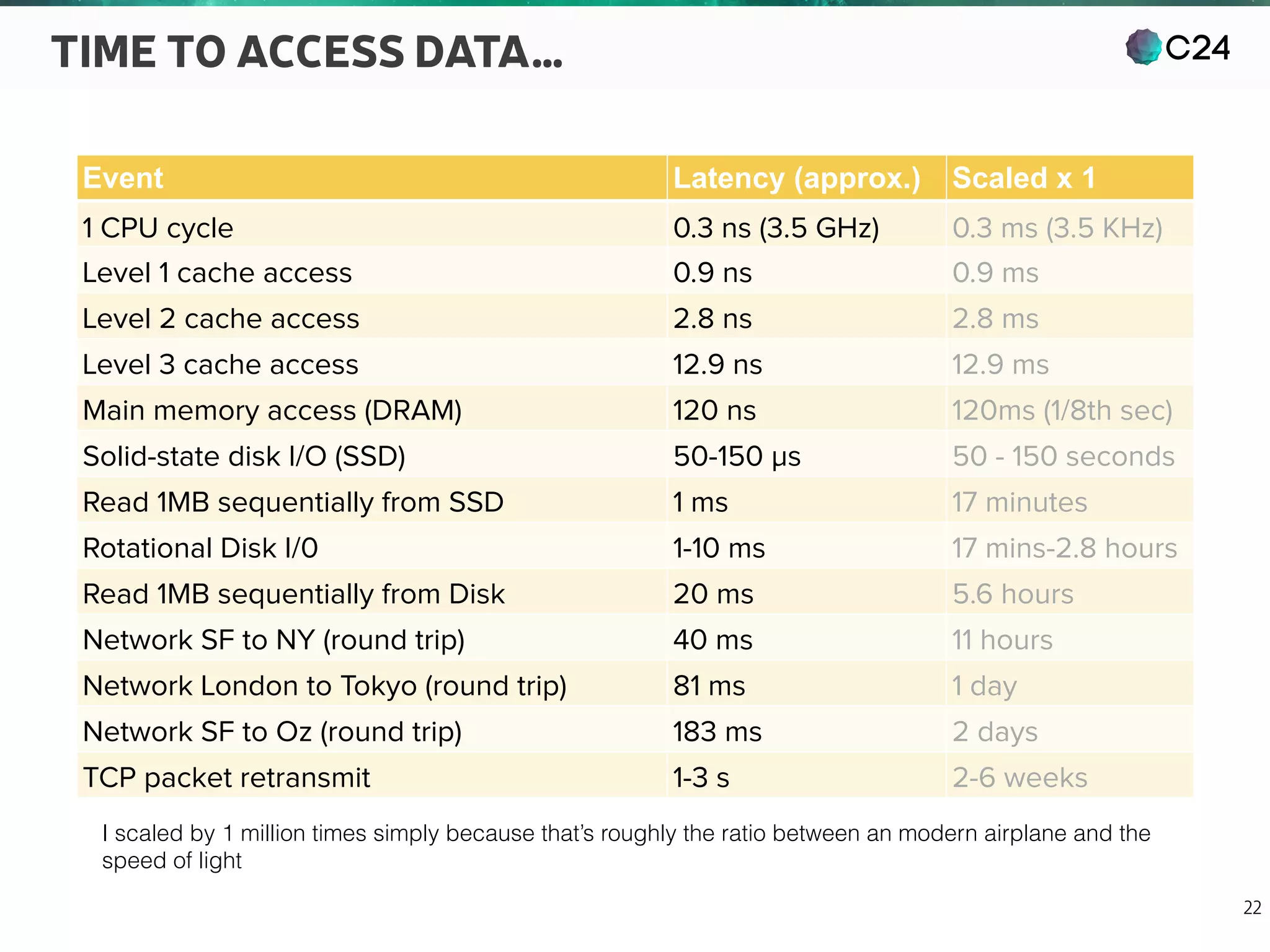

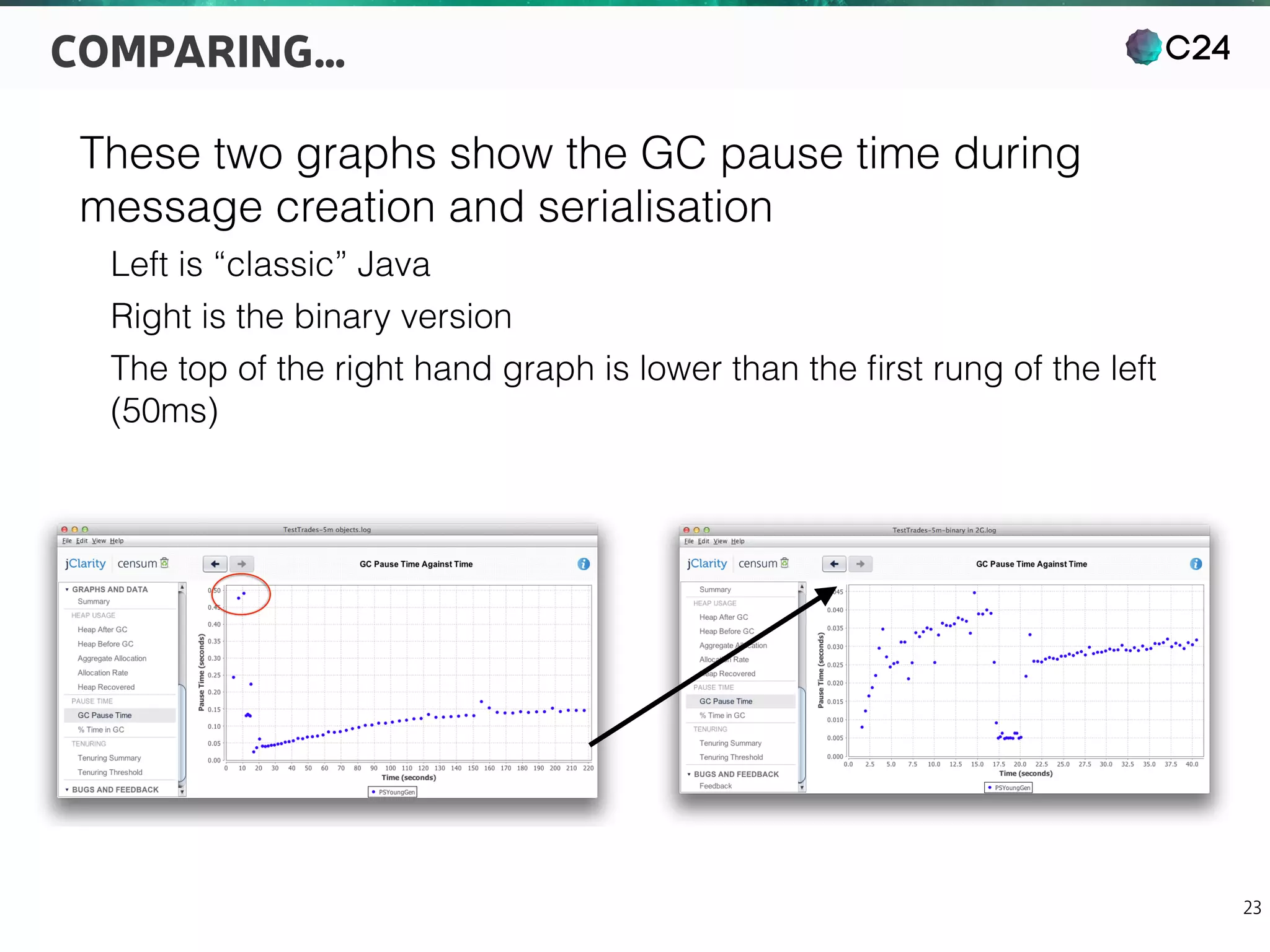

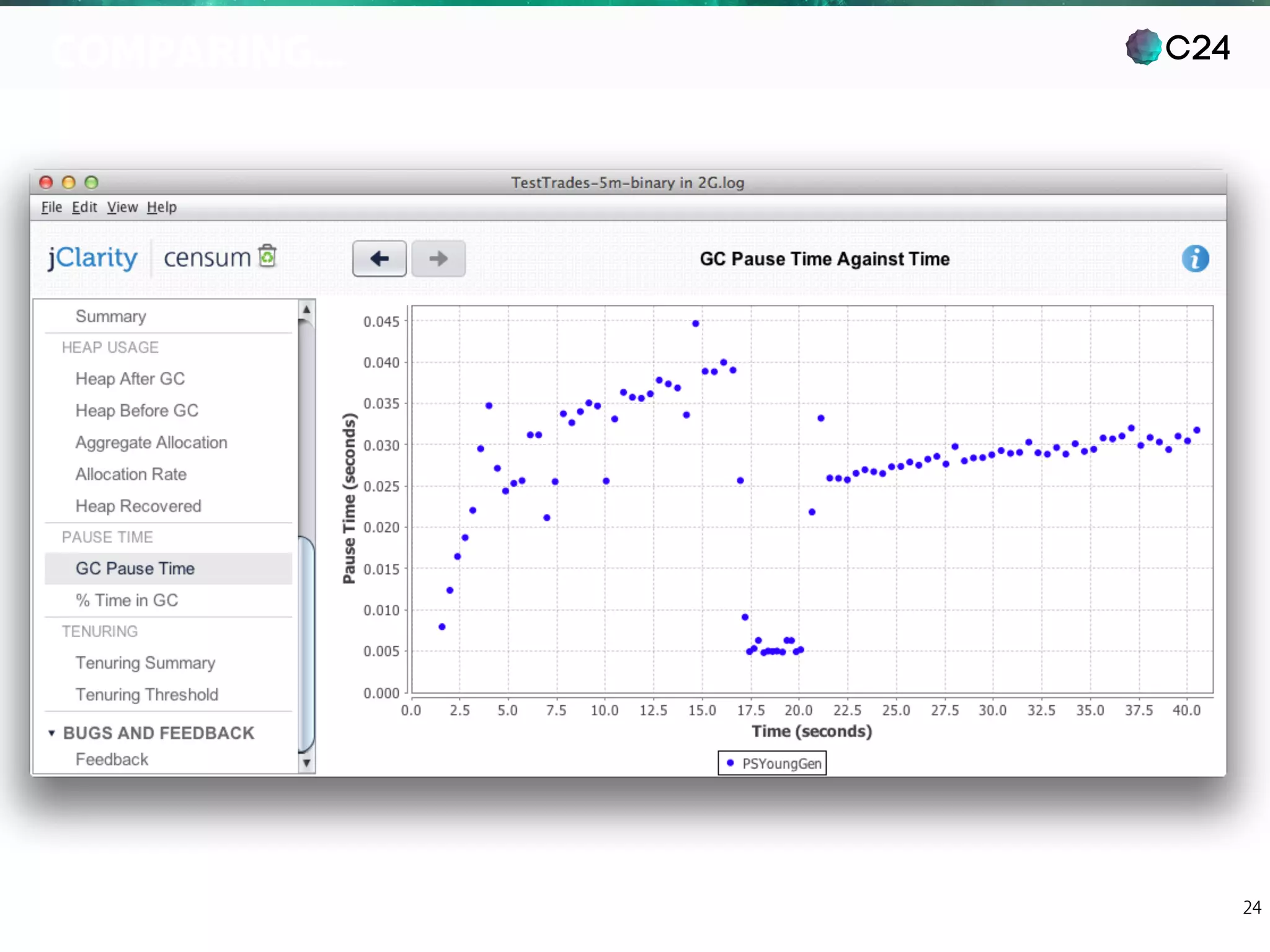

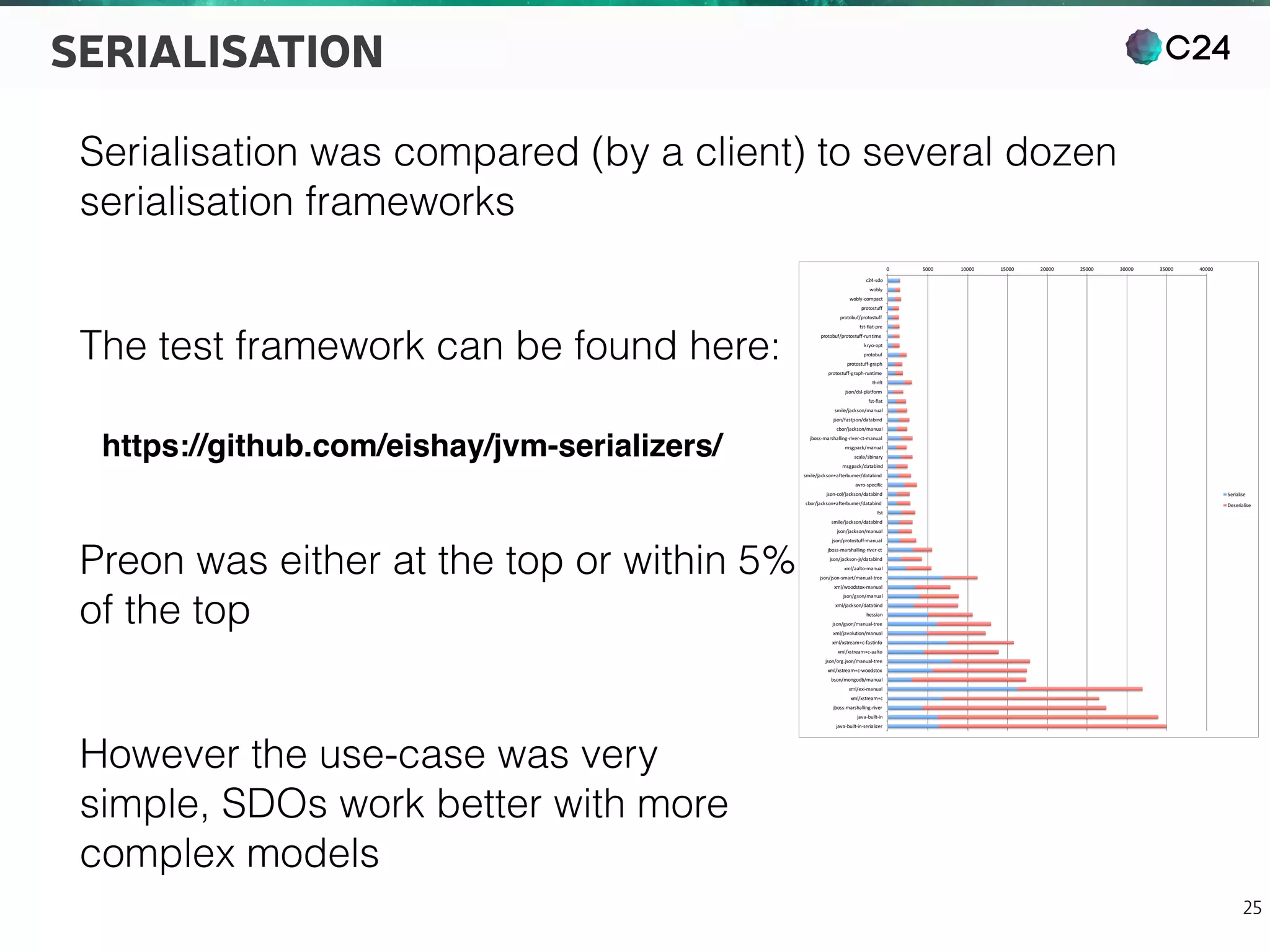

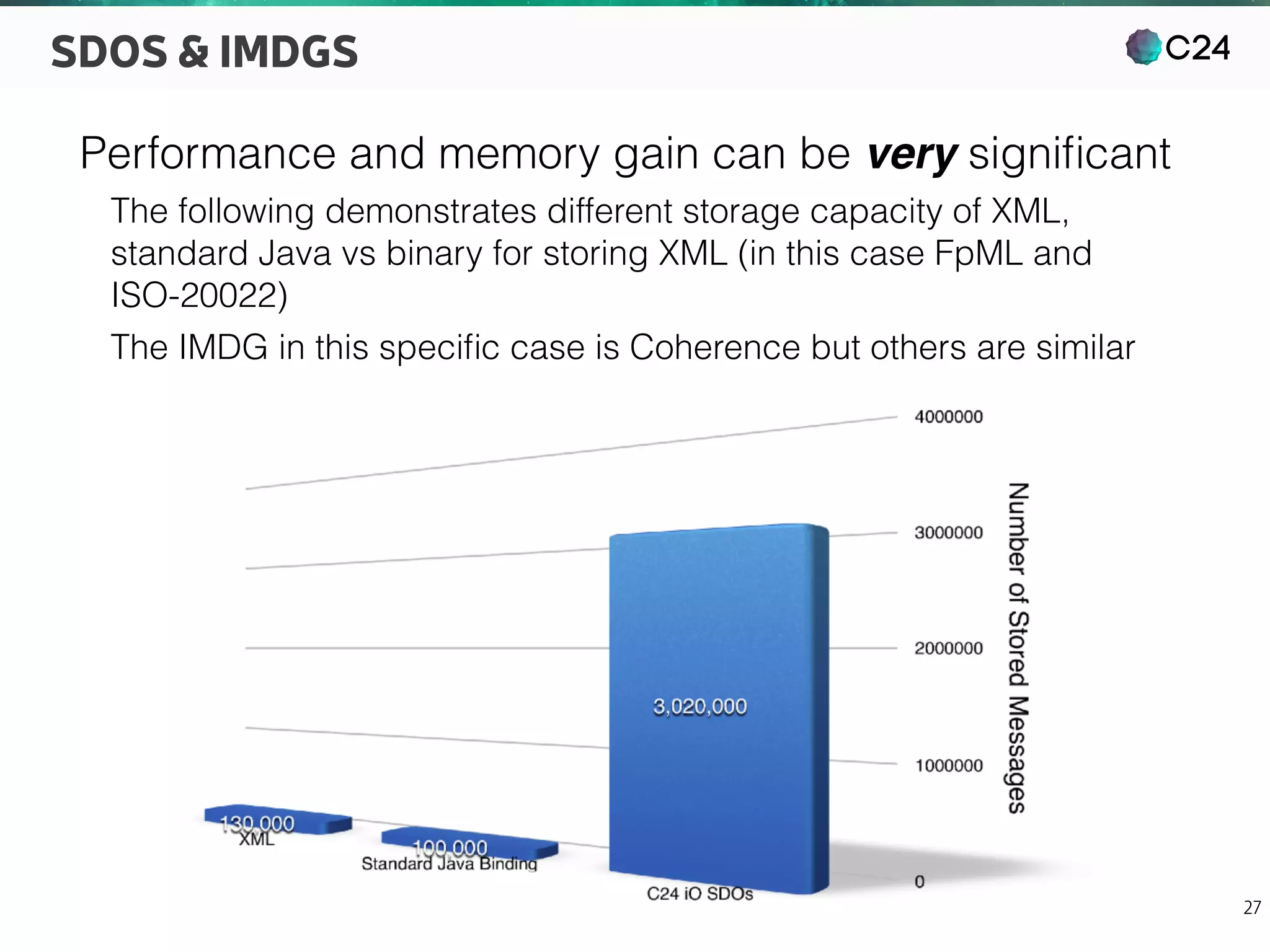

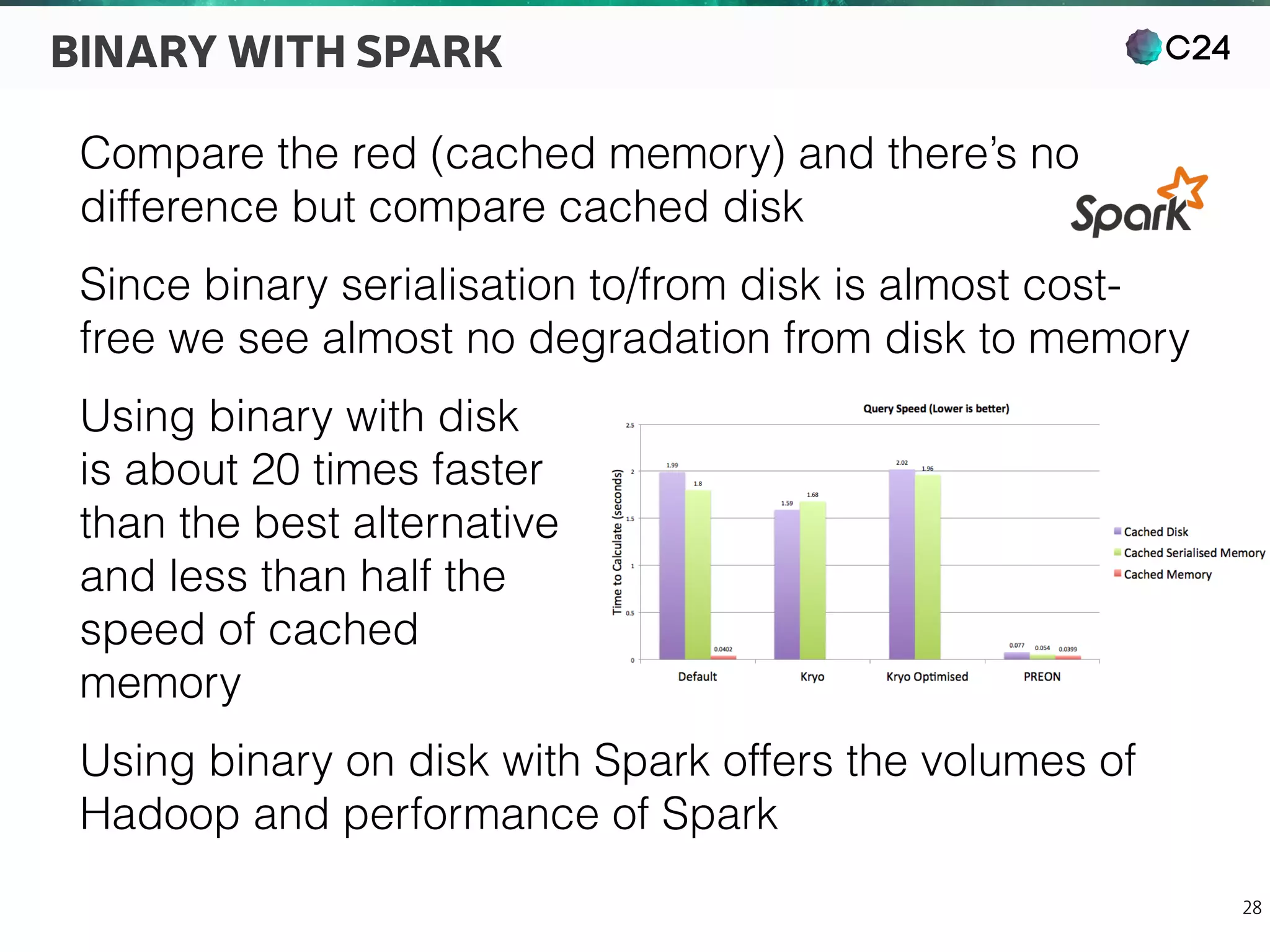

This document discusses challenges with scaling applications and analyzing large volumes of data. It describes how problems have remained the same over 30 years, such as parsing, filtering, and analyzing large amounts of data, despite hardware advances. The document advocates using binary representations and serialization instead of standard Java objects to improve performance for tasks like data processing, distributed computing and analytics. It provides examples showing how this approach can significantly reduce latency and improve throughput.