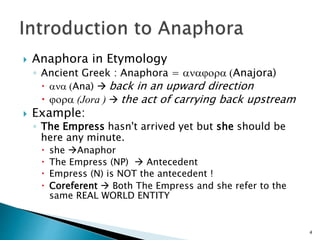

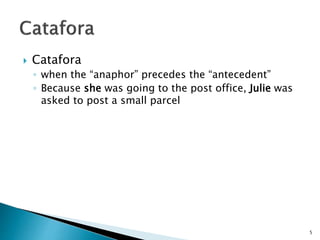

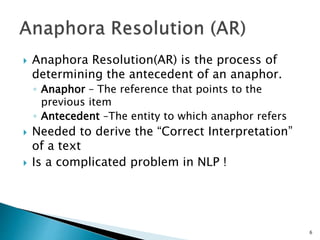

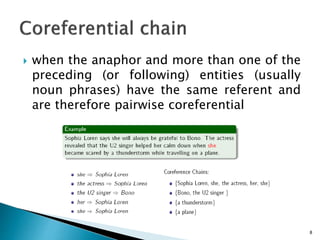

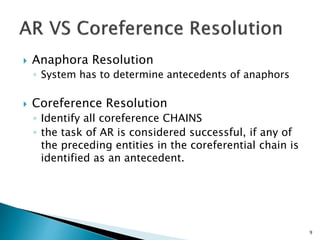

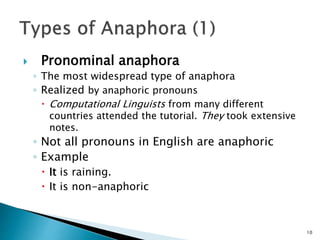

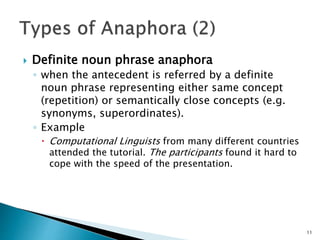

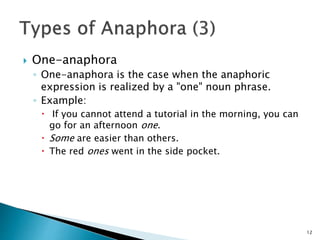

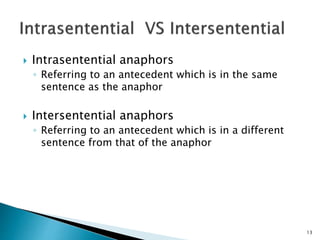

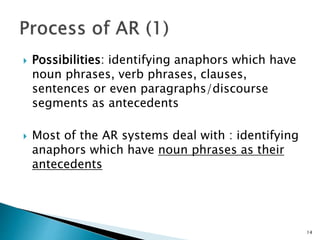

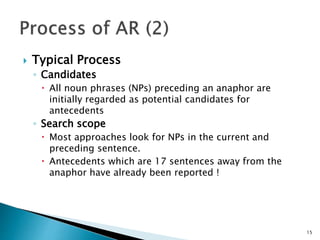

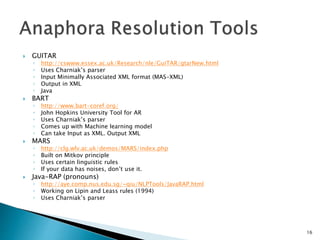

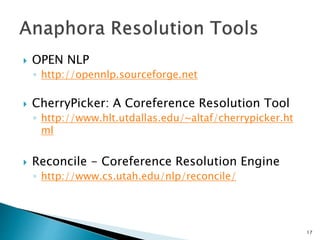

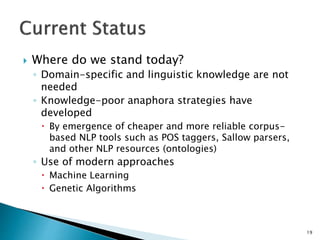

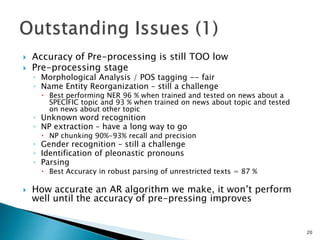

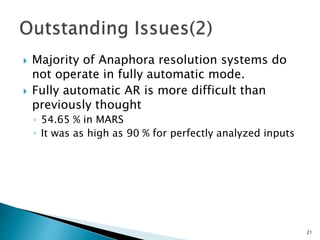

This document discusses anaphora resolution: the types of anaphora, the resolution process, available tools, and open challenges. It defines anaphora resolution as determining the antecedent of an anaphor, and covers pronominal anaphora, definite noun phrase anaphora, and one-anaphora. The tools discussed are GUITAR, BART, MARS, and Java-RAP. The issues addressed include the accuracy of pre-processing, fully automatic resolution, the need for annotated corpora, and the difficulty of evaluation.
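
To make the definition concrete, here is a minimal sketch of pronominal anaphora resolution in Python. It is not the method used by any of the tools named above. It assumes a simple heuristic: pick the most recent preceding noun phrase that agrees with the pronoun in gender and number. The `Mention` class, the `PRONOUNS` lexicon, and the `resolve` function are hypothetical names introduced only for illustration, and the candidate mentions are annotated by hand rather than produced by a real pre-processing pipeline.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Mention:
    """A candidate antecedent (hypothetical structure for this sketch)."""
    text: str
    index: int    # token position in the text
    gender: str   # "m", "f", or "n"
    number: str   # "sg" or "pl"


# Toy pronoun lexicon (an assumption): pronoun -> (gender, number).
# A gender of None means the pronoun places no gender constraint.
PRONOUNS = {
    "he": ("m", "sg"), "him": ("m", "sg"),
    "she": ("f", "sg"), "her": ("f", "sg"),
    "it": ("n", "sg"),
    "they": (None, "pl"), "them": (None, "pl"),
}


def resolve(pronoun: str, pronoun_index: int,
            candidates: List[Mention]) -> Optional[Mention]:
    """Return the nearest preceding mention that agrees with the pronoun."""
    gender, number = PRONOUNS[pronoun.lower()]
    # Only mentions that occur before the pronoun can be antecedents here.
    preceding = [m for m in candidates if m.index < pronoun_index]
    # Scan from the closest mention backwards (recency preference).
    for mention in sorted(preceding, key=lambda m: m.index, reverse=True):
        if mention.number == number and (gender is None or mention.gender == gender):
            return mention
    return None


# "Mary met John at the library . She gave him a book"
# Token positions: Mary=0, John=2, library=5, She=7, him=9
candidates = [
    Mention("Mary", 0, "f", "sg"),
    Mention("John", 2, "m", "sg"),
    Mention("library", 5, "n", "sg"),
]

she = resolve("She", 7, candidates)
him = resolve("him", 9, candidates)
print(she.text if she else None)
print(him.text if him else None)
```

Even this toy version depends on correct gender and number annotations for every candidate, which is why the accuracy of pre-processing appears among the issues listed above.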