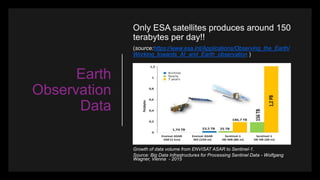

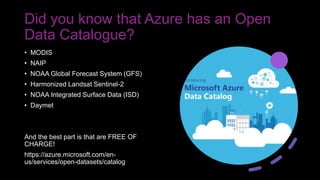

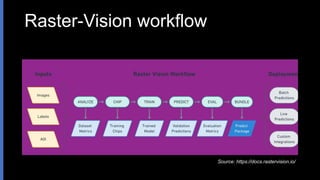

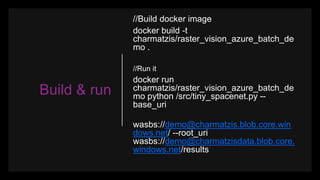

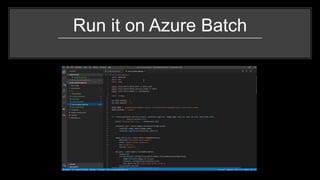

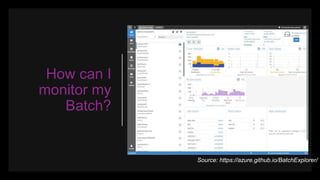

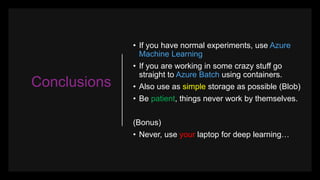

This presentation covers using AI with Earth observation data on Microsoft Azure, focusing on project steps, data wrangling, and practical tools such as Docker and Raster Vision. It emphasizes choosing appropriate systems and frameworks, recommending Azure Machine Learning for standard experiments and Azure Batch for more complex tasks. Key takeaways include the need to handle spatial data effectively and the advantage of cloud resources over local machines for deep learning projects.