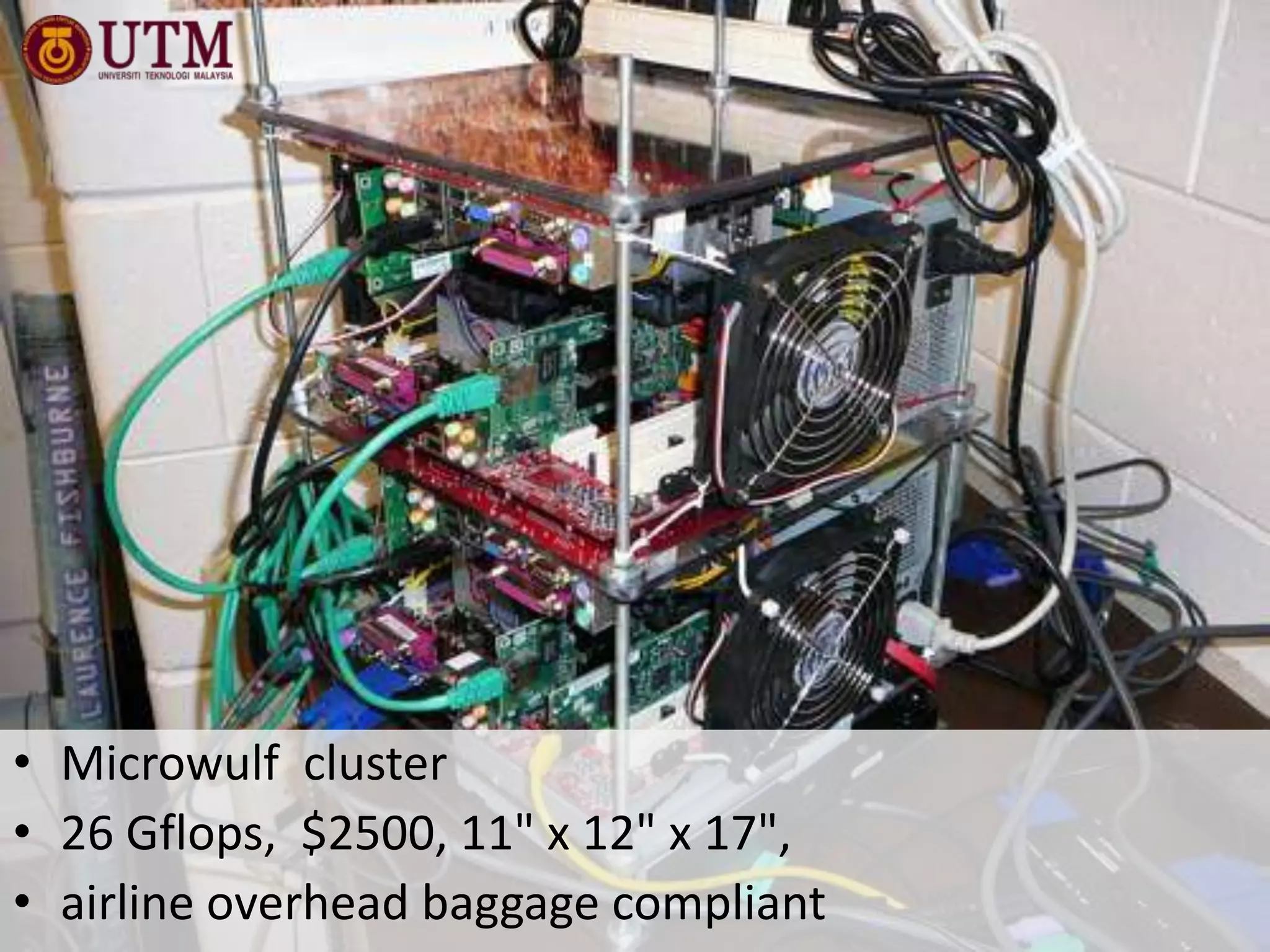

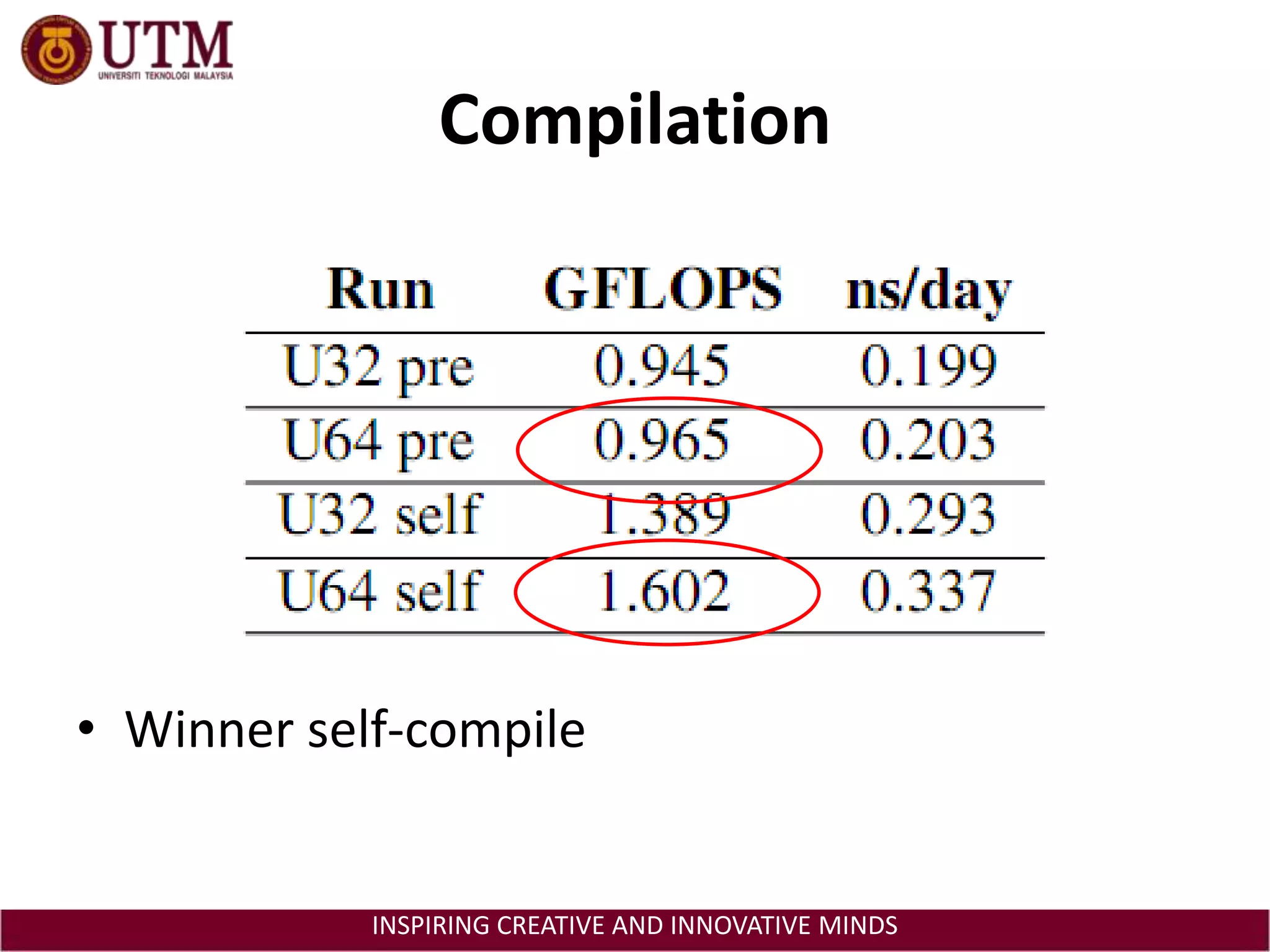

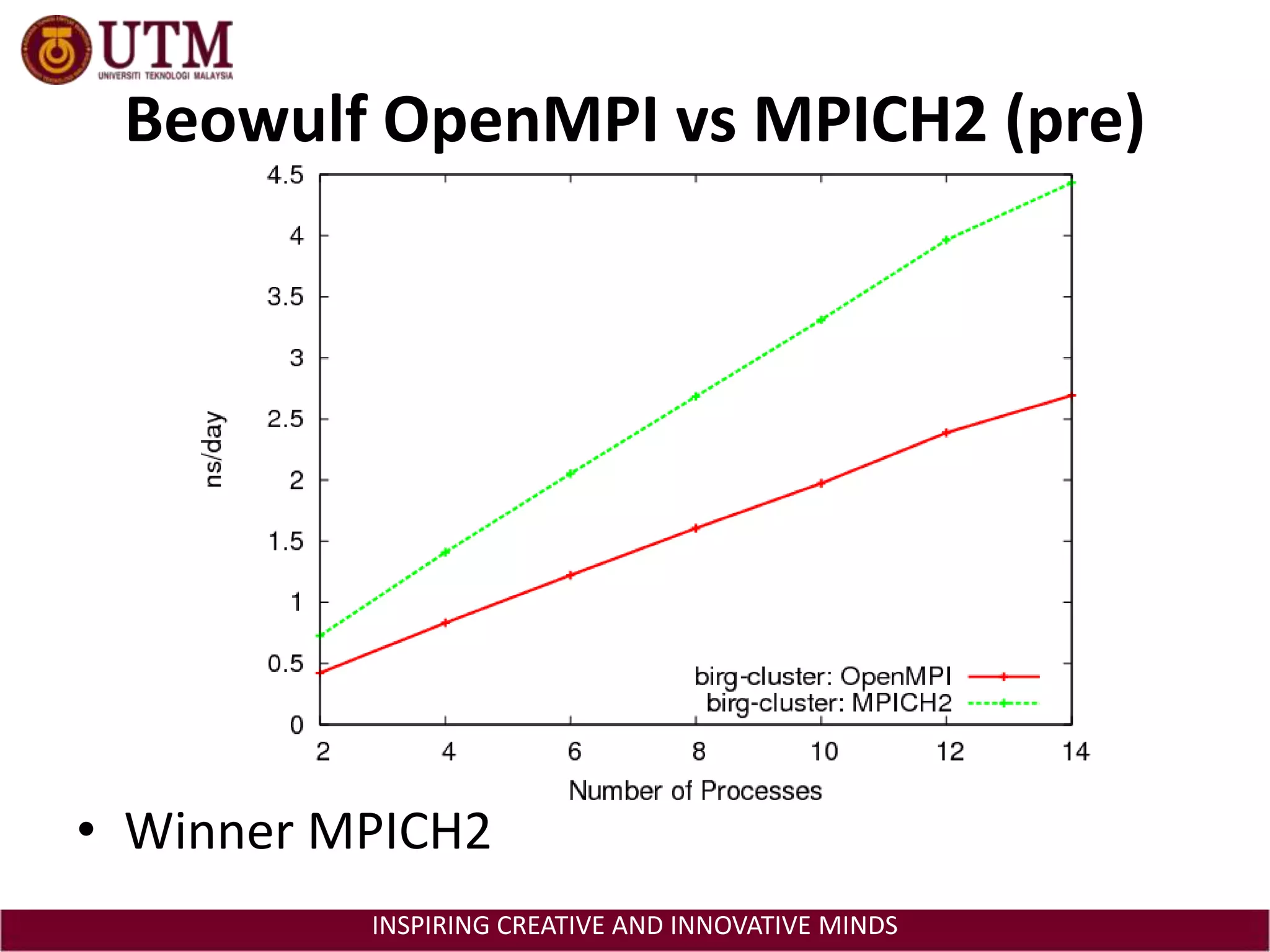

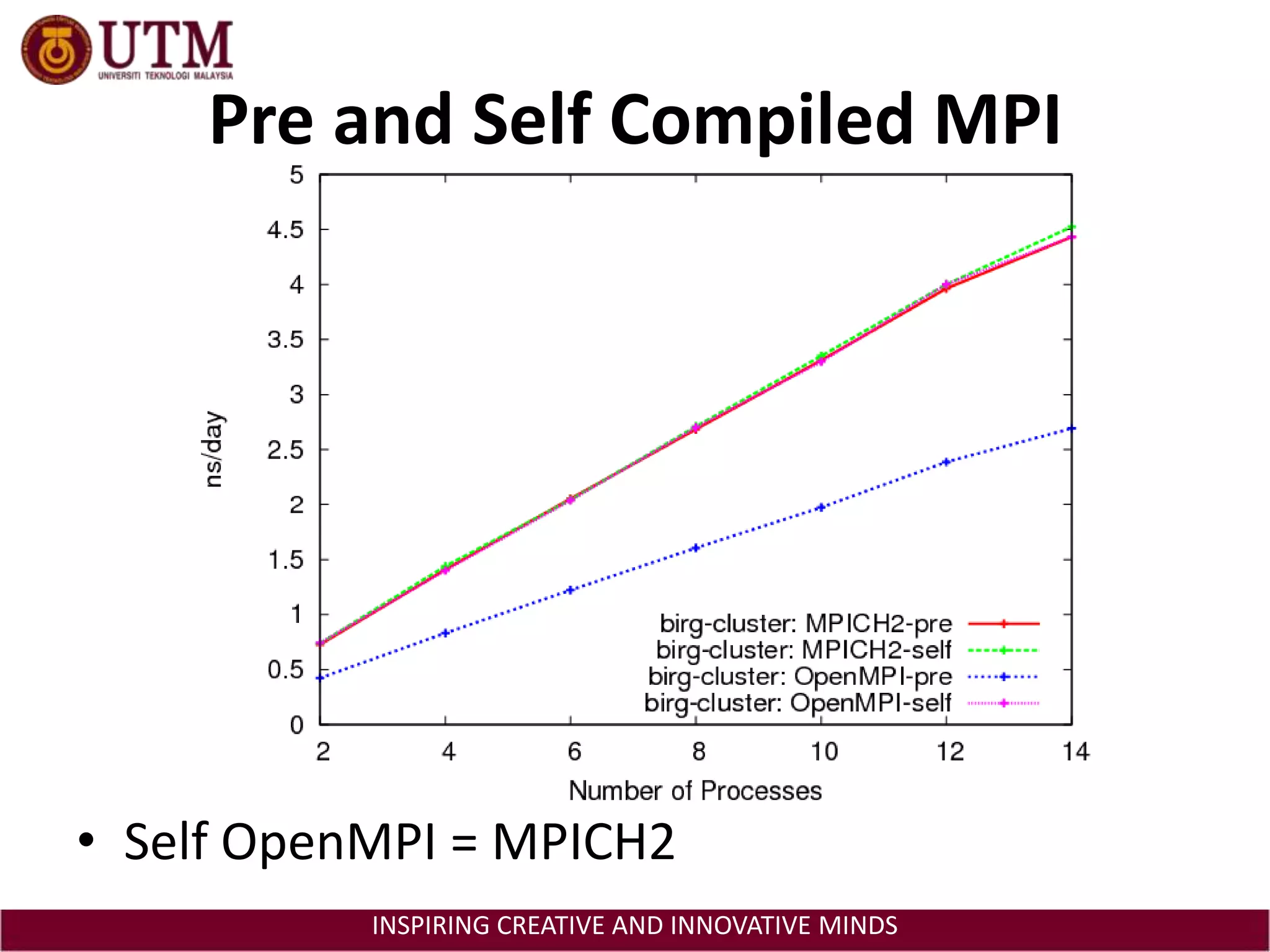

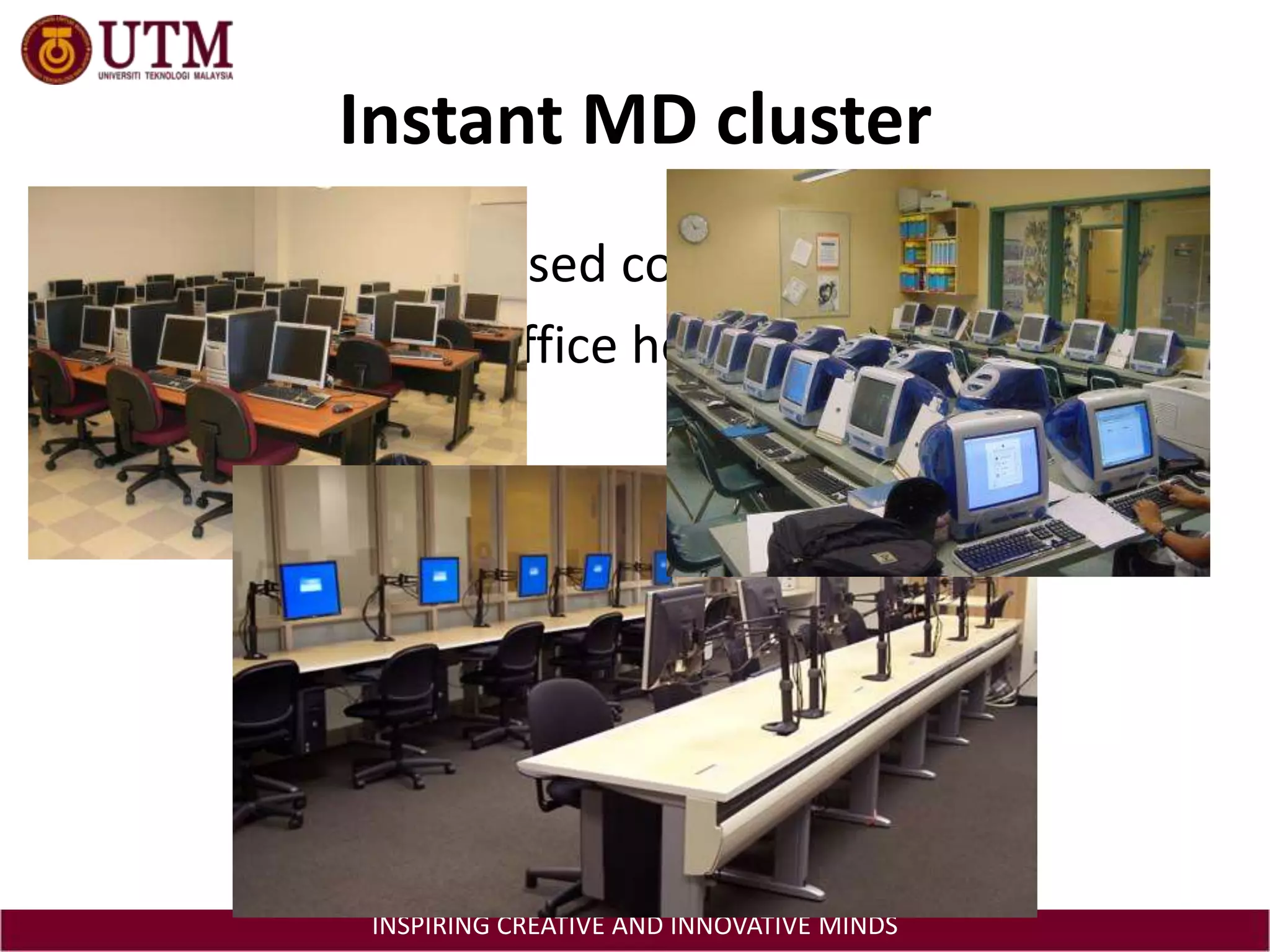

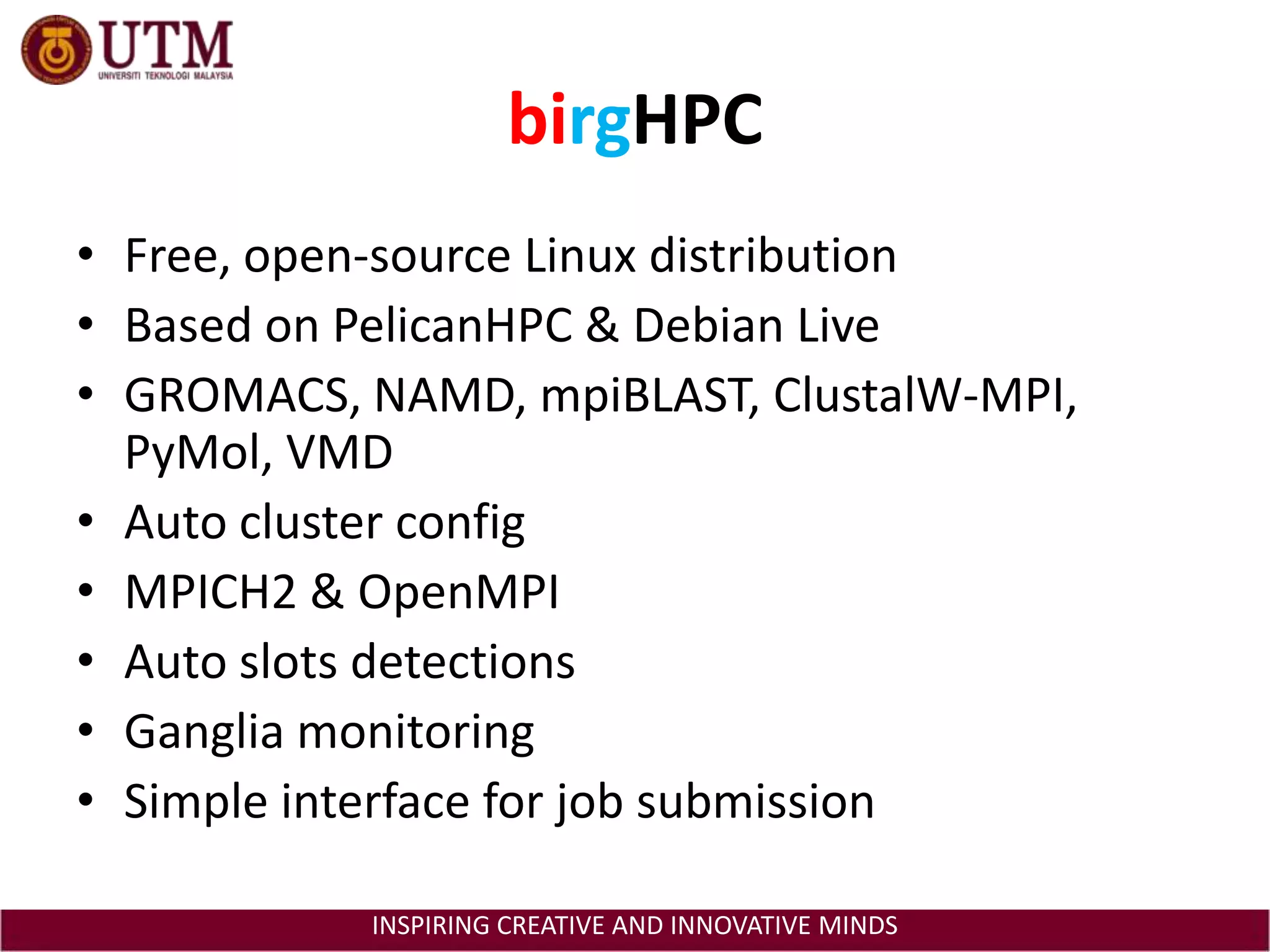

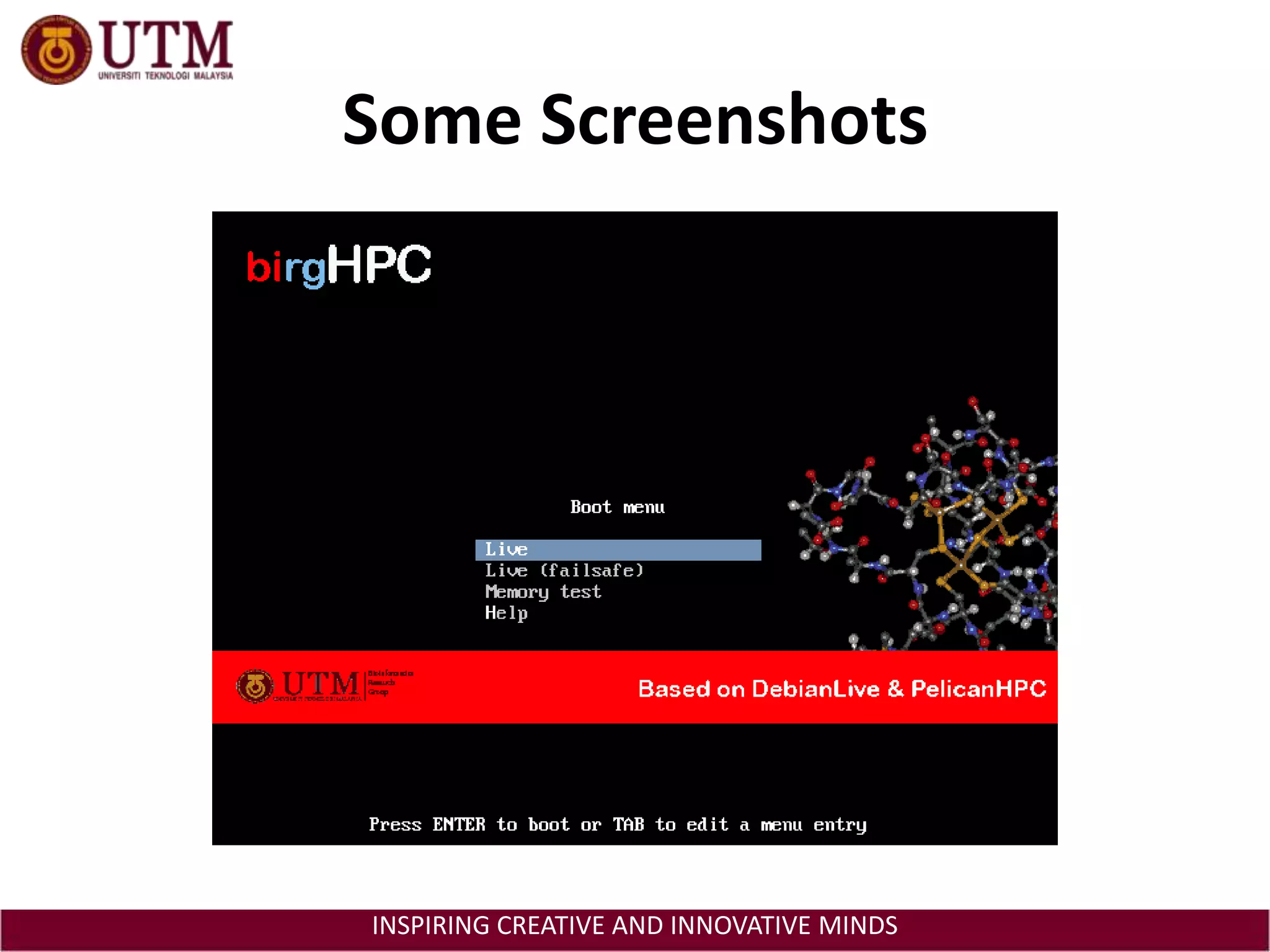

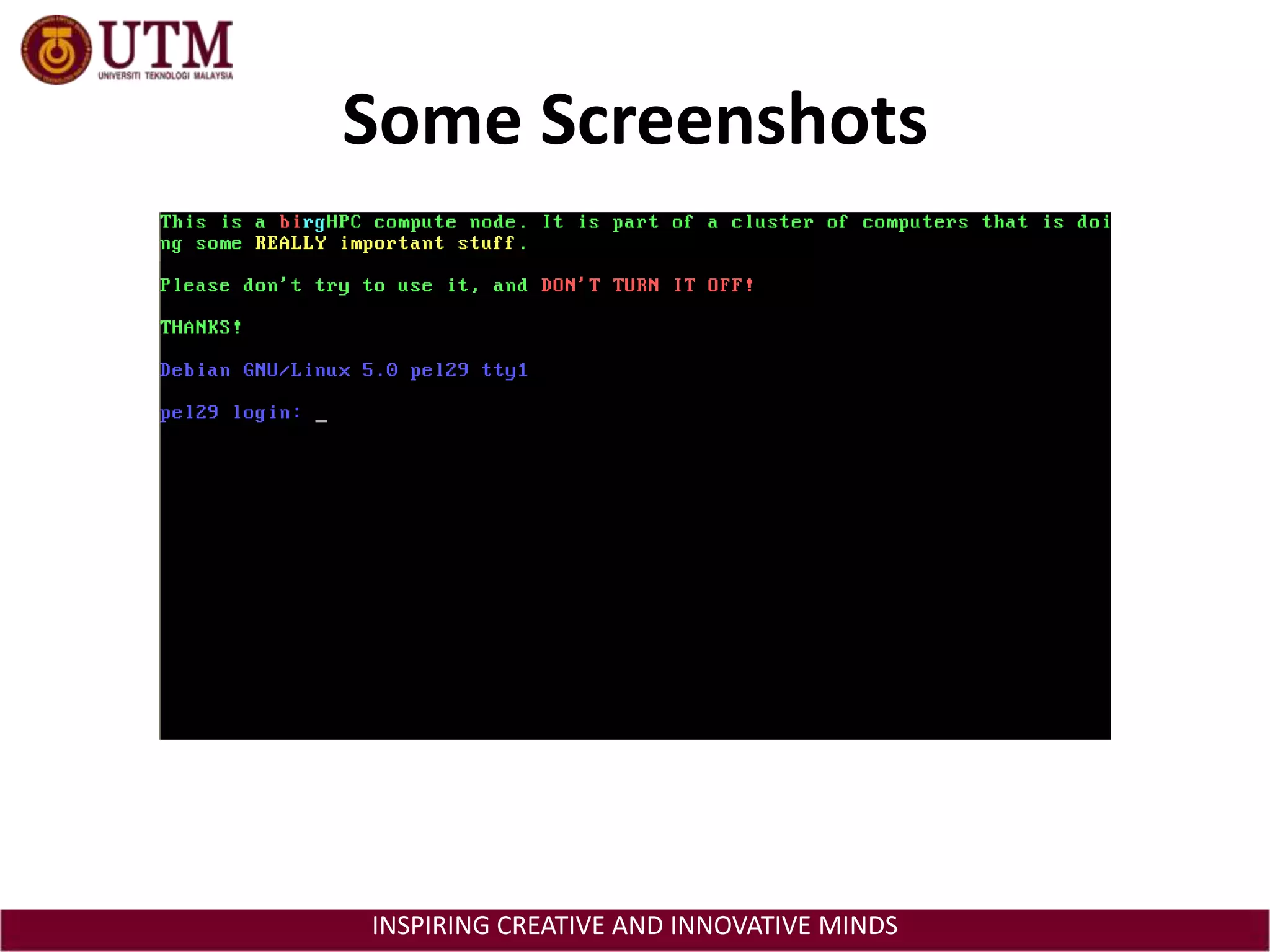

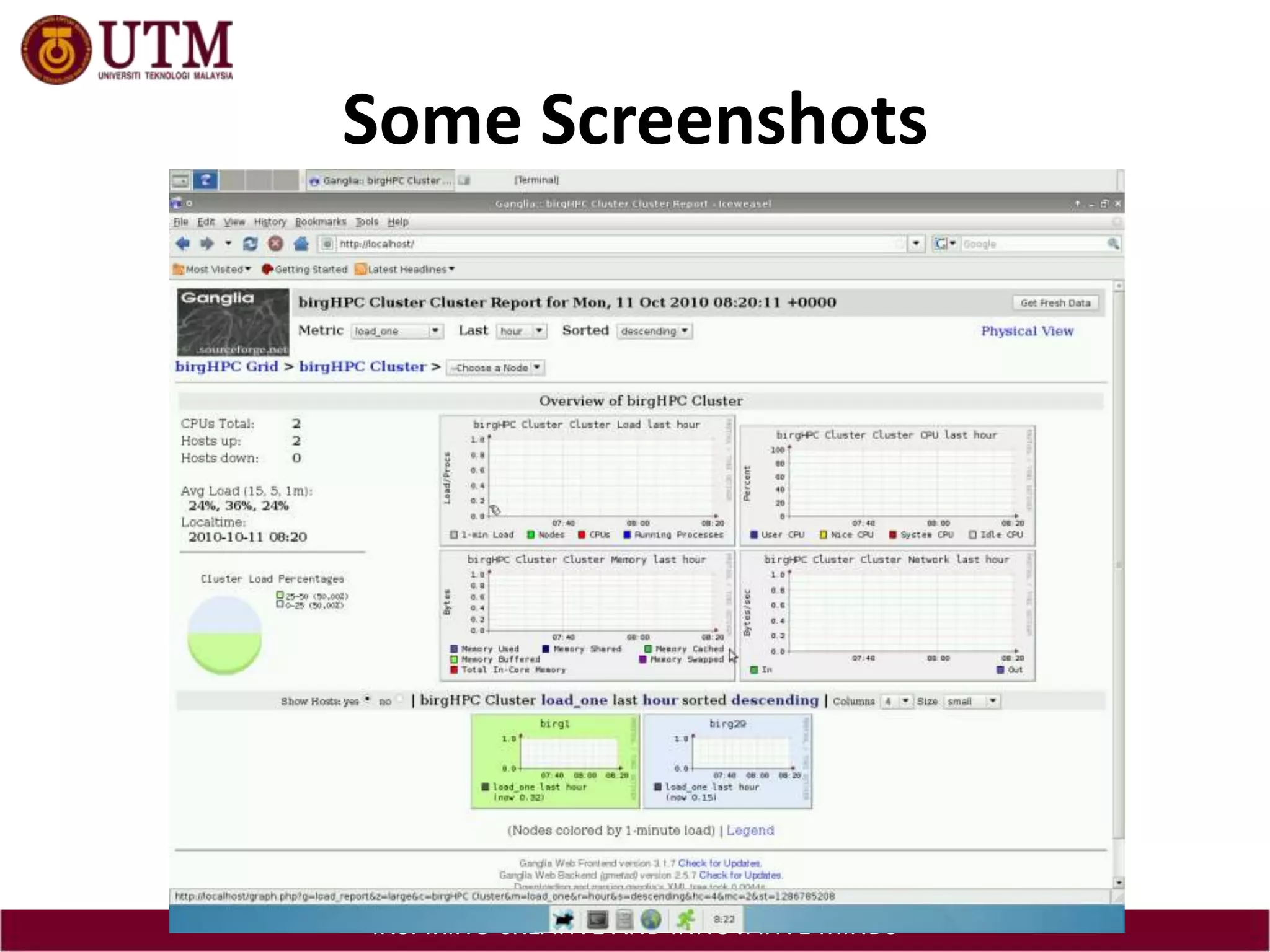

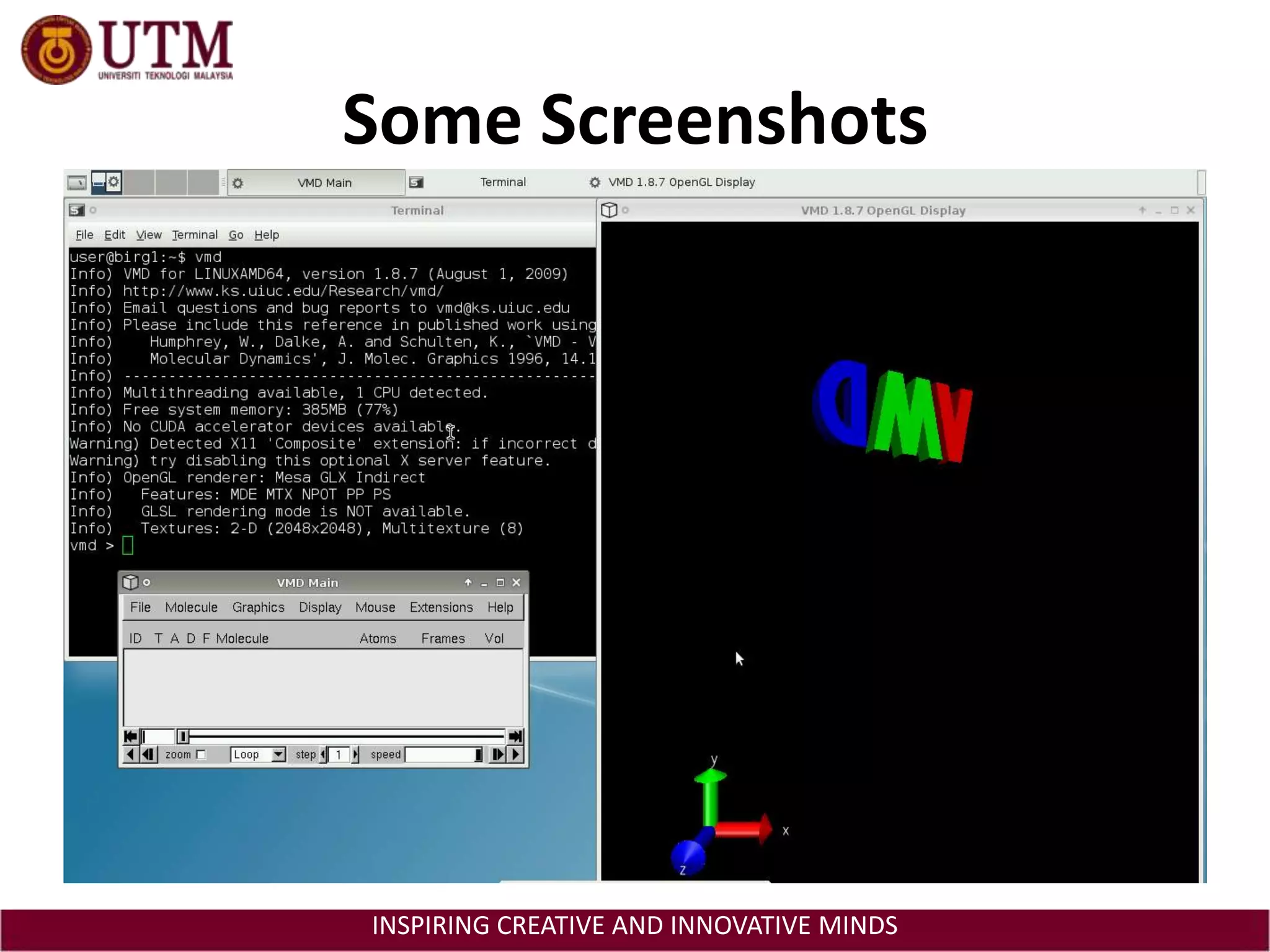

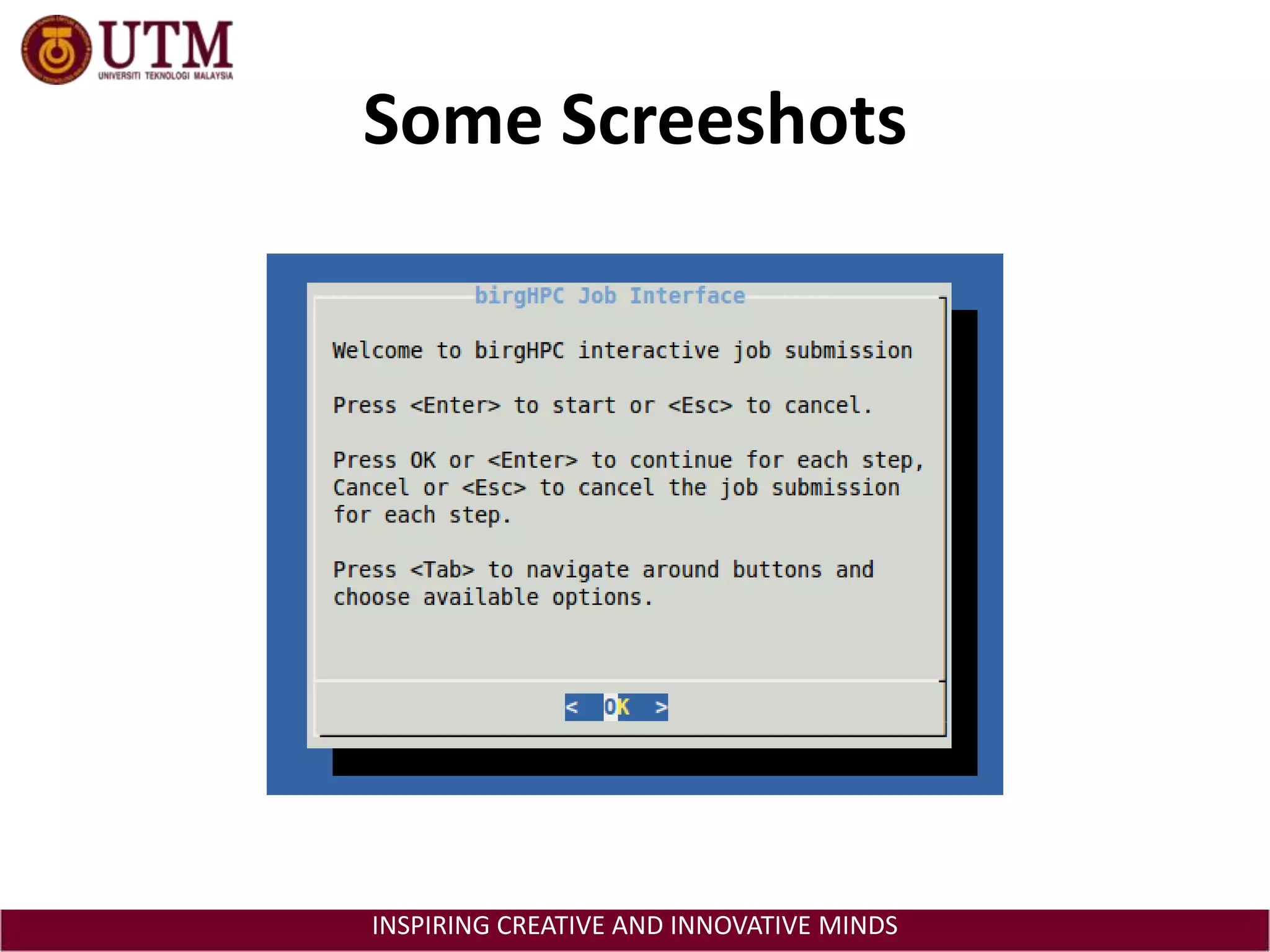

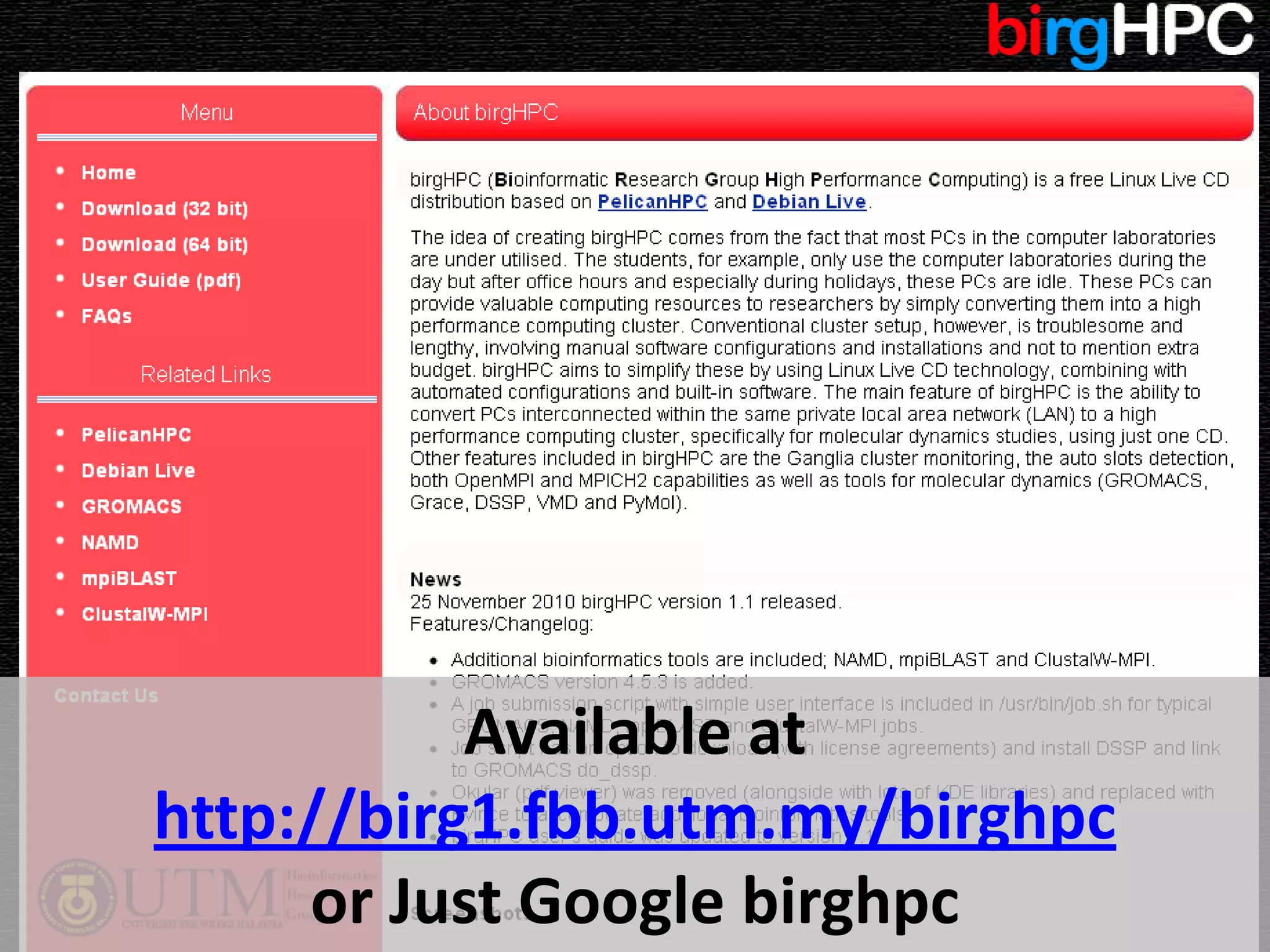

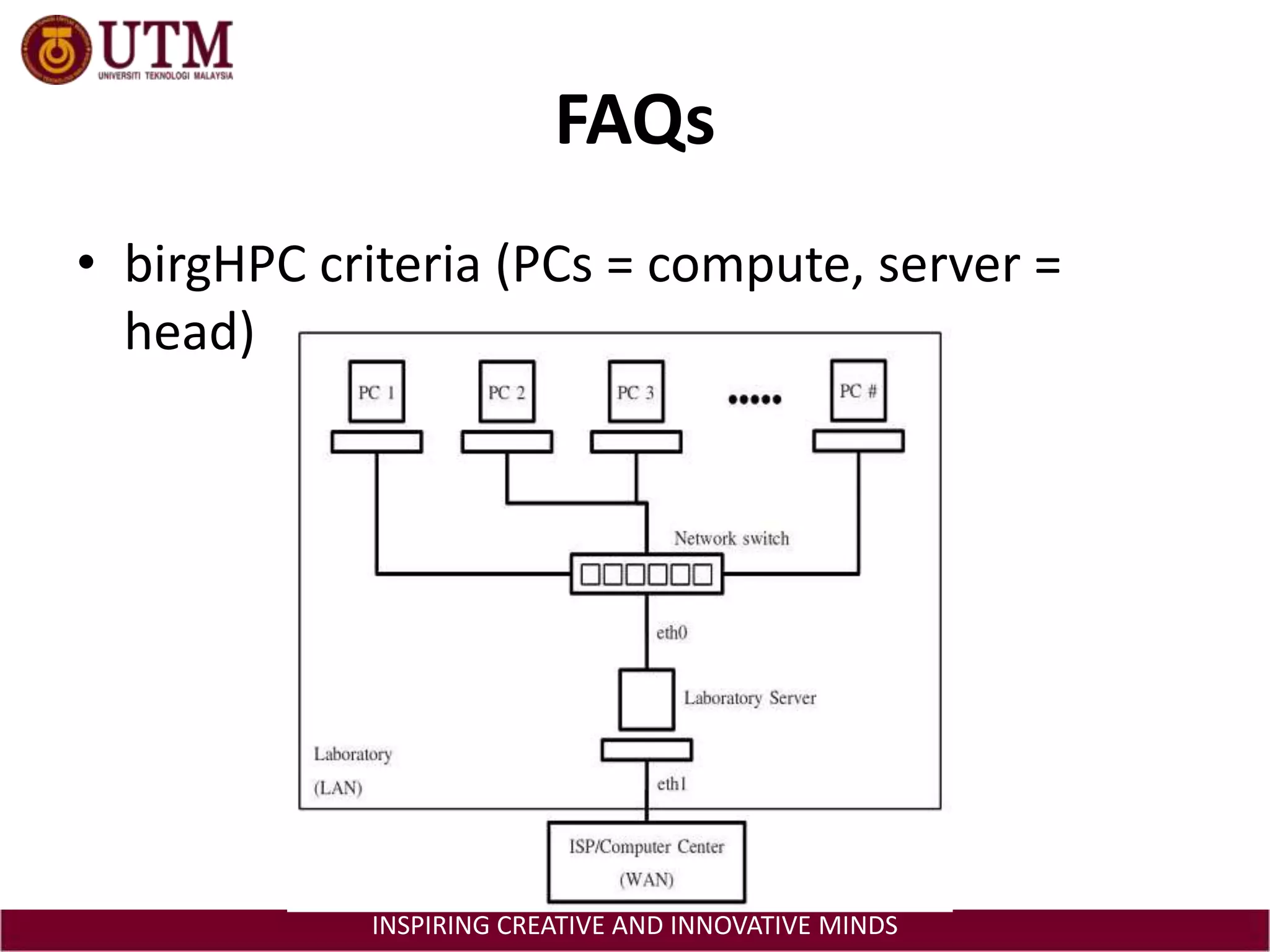

This document discusses birgHPC, a Linux distribution that turns existing idle computers into an instant computing cluster for molecular dynamics (MD) simulations. birgHPC automatically configures computers connected via a LAN as a computing cluster and bundles tools for MD, bioinformatics and cluster monitoring. Simple instructions and screenshots show how to set up birgHPC and use it to run MD simulations on idle computers at no additional cost.