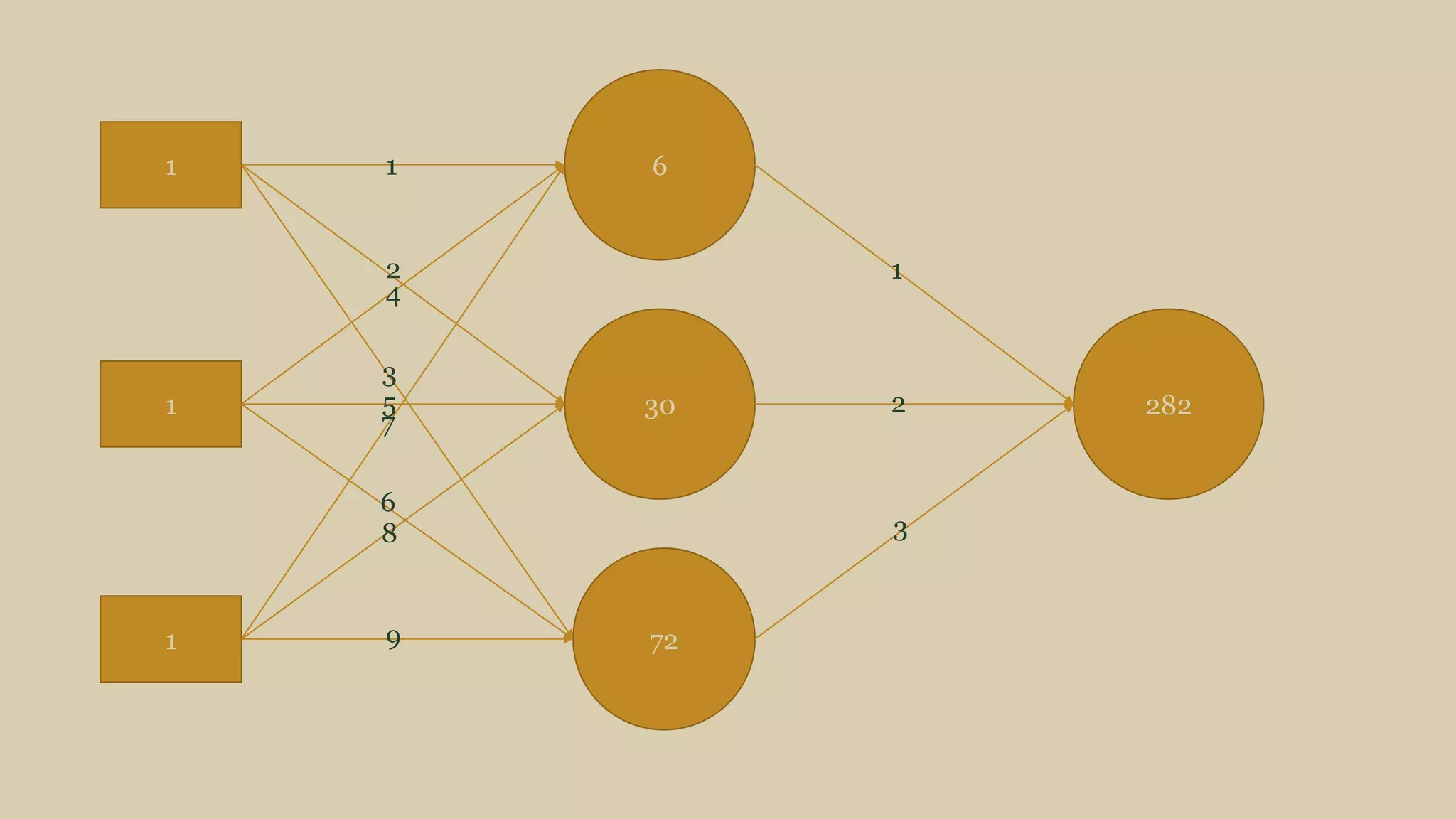

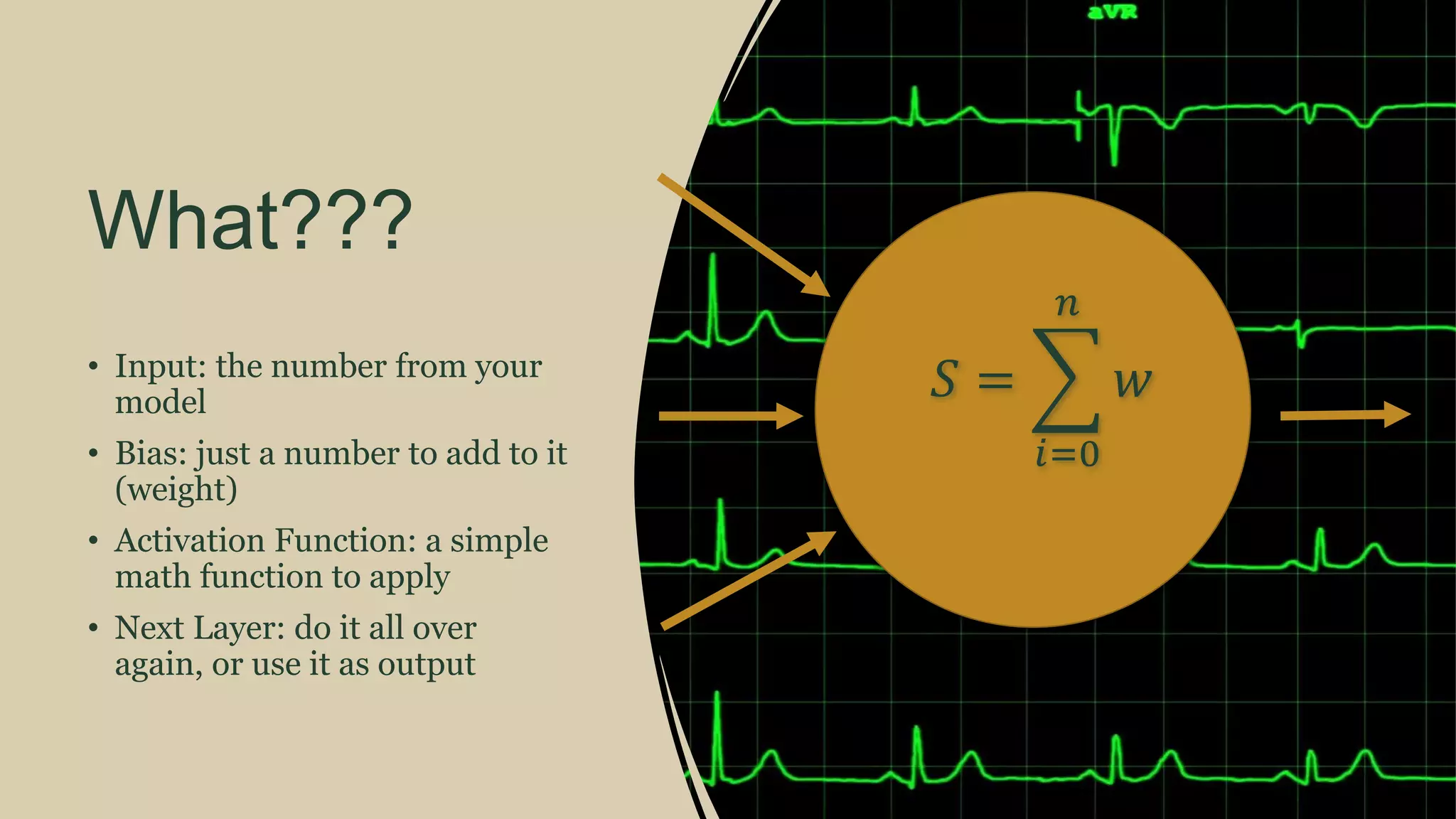

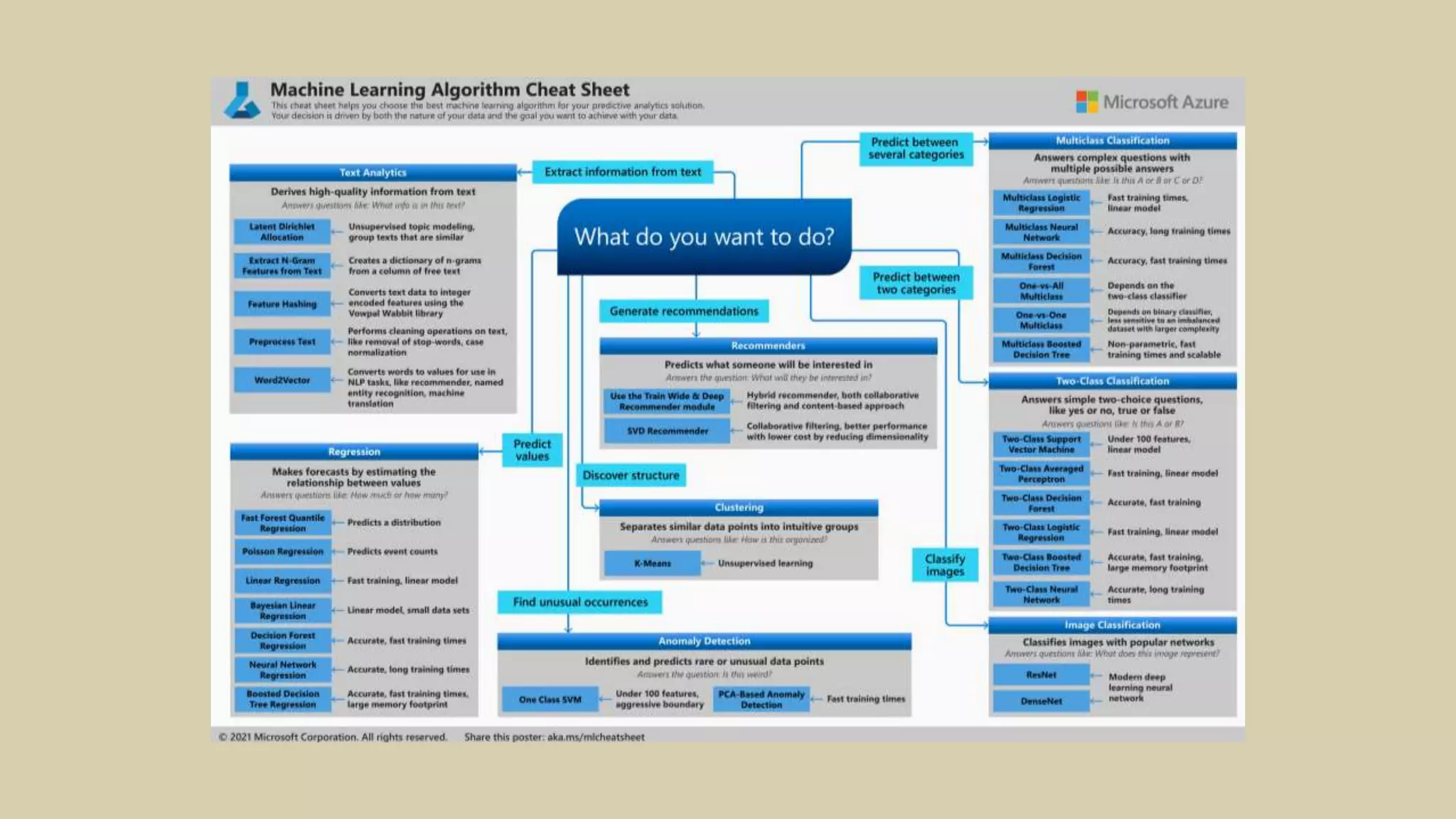

The document discusses the historical development and current impact of artificial intelligence (AI) and machine learning (ML), emphasizing the rapid growth of these technologies and a projected market value exceeding $60 billion by 2025. It highlights the potential of AI in applications such as traffic safety, cancer detection, and workplace automation, alongside concerns about job displacement. The author also outlines the foundational concepts of neural networks and provides insights into machine learning workflows and generative AI models.

![Transformative. The model. Lots of jargon about dual layer, each with 2 sub-layers, that feed on each other… If you really, really want to know: [1706.03762] Attention Is All You Need (arxiv.org)](https://image.slidesharecdn.com/2023-04-20aidotned-230601130025-3d02bc48/75/A-leap-around-AI-56-2048.jpg)