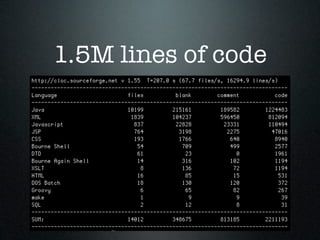

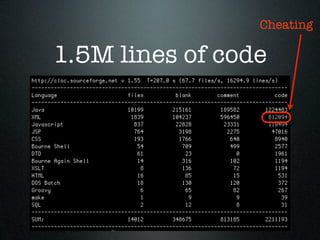

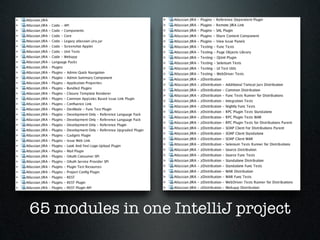

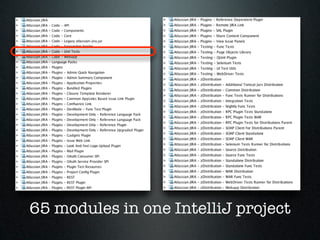

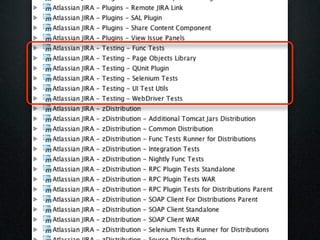

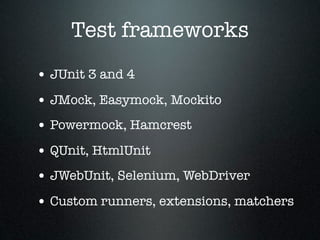

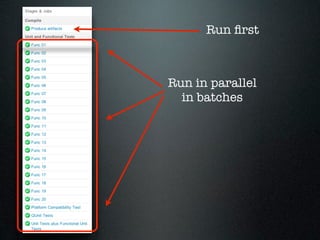

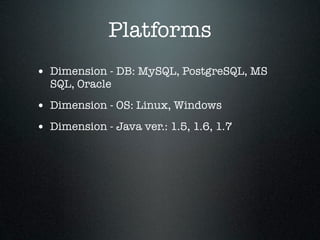

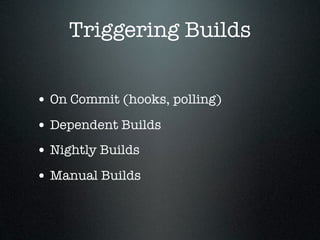

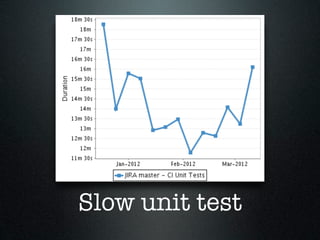

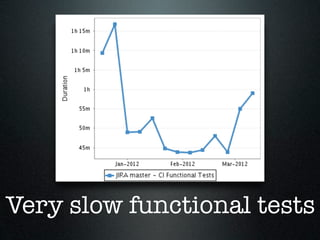

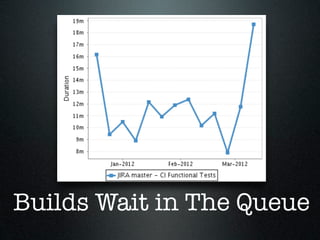

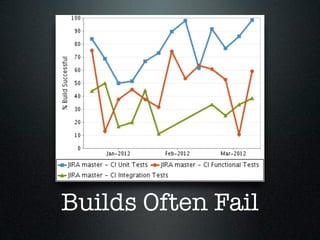

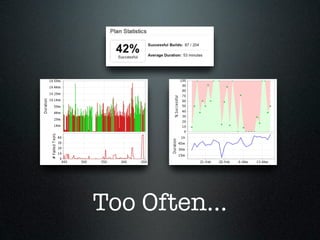

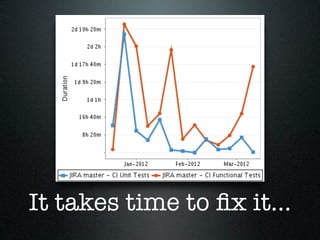

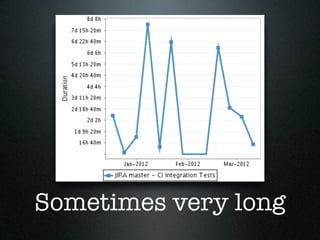

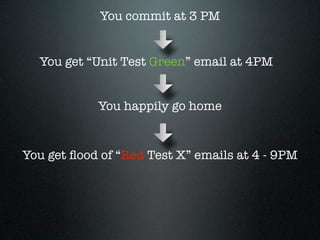

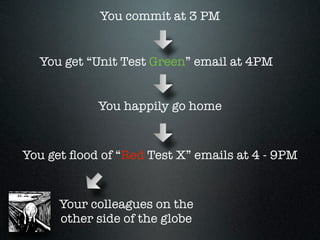

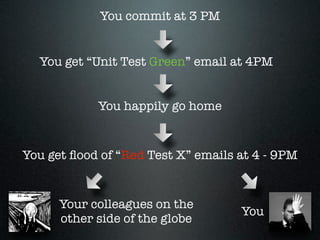

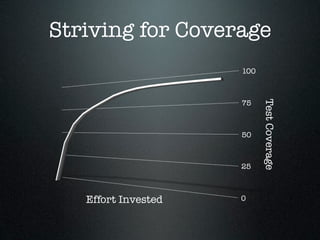

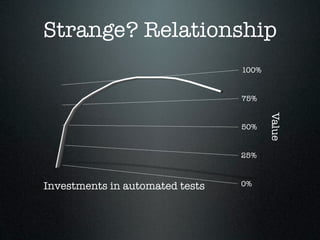

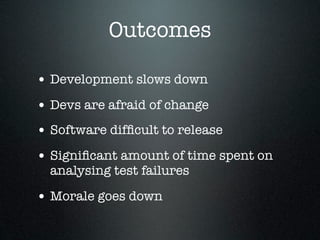

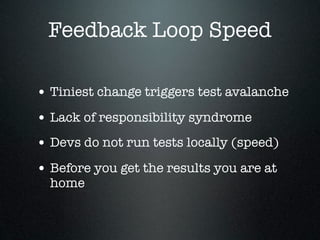

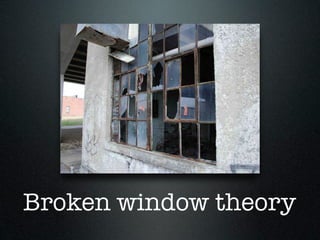

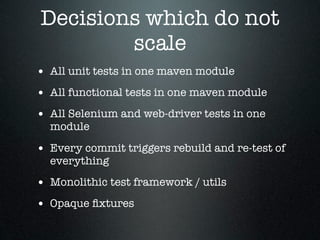

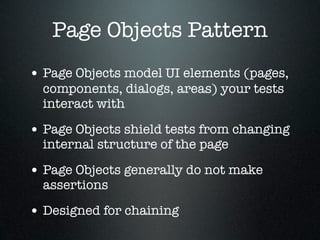

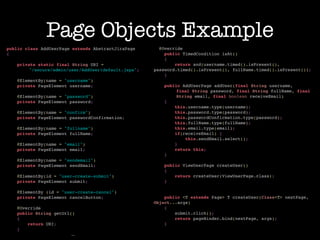

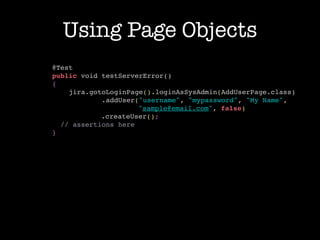

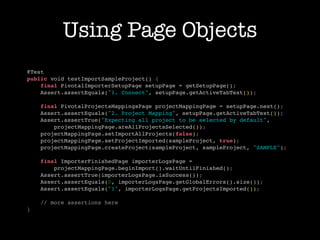

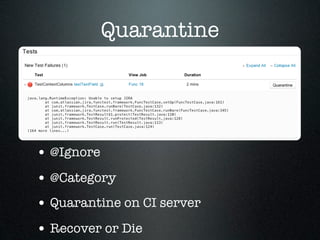

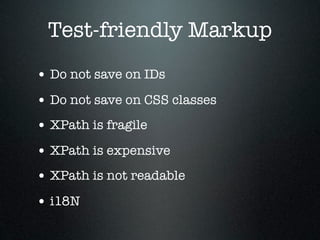

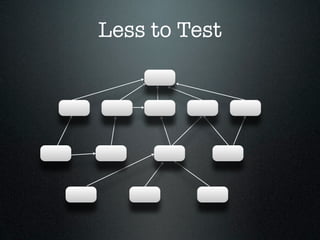

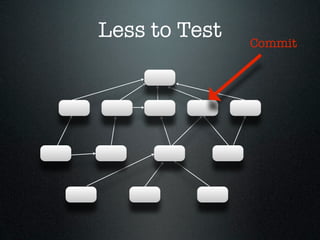

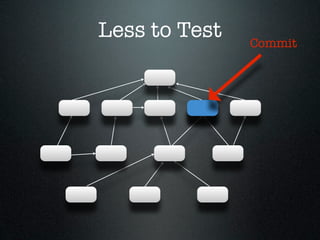

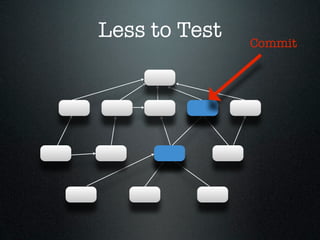

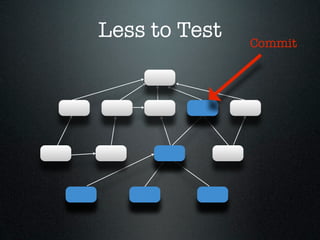

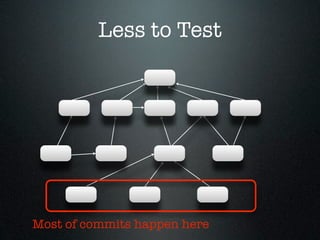

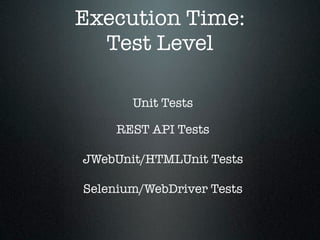

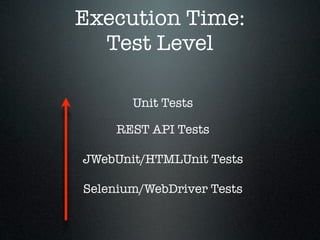

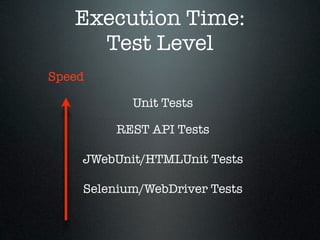

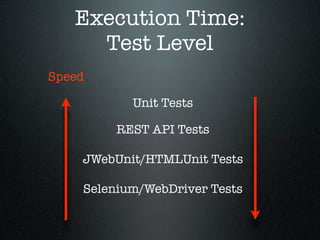

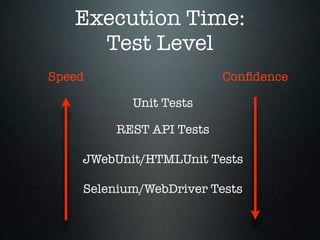

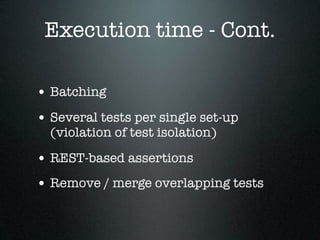

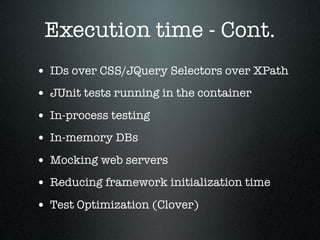

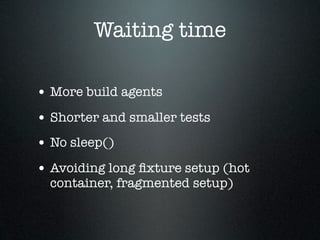

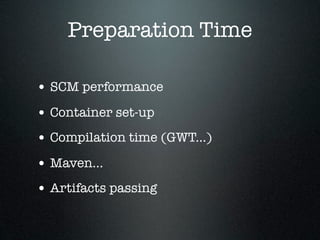

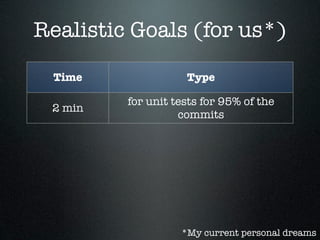

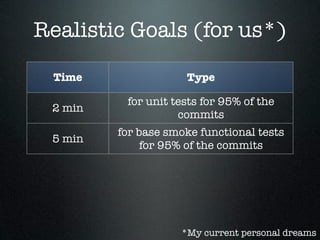

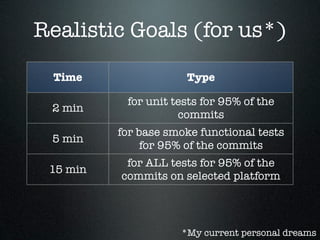

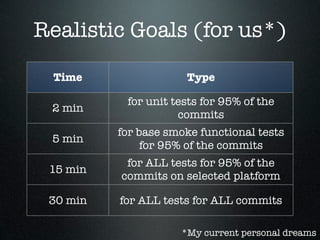

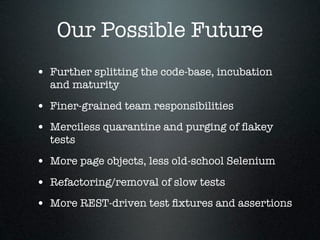

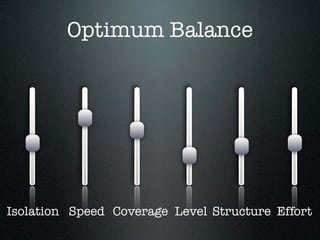

The document discusses the challenges of automated testing at scale in a large codebase with many dependencies, technologies, and test types. It describes how the sheer number of tests led to slow builds, failures that blocked changes, and reduced developer productivity. The specific issues it names are monolithic test organization, slow unit and functional tests, non-deterministic failures caused by race conditions and timeouts, and re-running every test on every commit. It advocates strategies such as separating tests by type, running them in parallel, and using page objects to isolate tests from UI changes.
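
To make the page object idea concrete, here is a minimal sketch in Python. It is not taken from the slides: `FakeBrowser`, `LoginPage`, the selectors, and the test are all hypothetical names chosen for illustration, and `FakeBrowser` stands in for a real browser driver so the example runs without one.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBrowser:
    """Hypothetical stand-in for a real browser driver."""
    fields: dict = field(default_factory=dict)   # selector -> typed text
    clicked: list = field(default_factory=list)  # selectors clicked, in order

    def type_into(self, selector: str, text: str) -> None:
        self.fields[selector] = text

    def click(self, selector: str) -> None:
        self.clicked.append(selector)


class LoginPage:
    """Page object: the only place that knows the login page's selectors."""
    # Assumed selectors; if the UI changes, only these constants change.
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"

    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def log_in(self, user: str, password: str) -> None:
        self.browser.type_into(self.USERNAME, user)
        self.browser.type_into(self.PASSWORD, password)
        self.browser.click(self.SUBMIT)

    def entered_username(self) -> str:
        return self.browser.fields.get(self.USERNAME, "")

    def was_submitted(self) -> bool:
        return self.SUBMIT in self.browser.clicked


def test_login_submits_credentials() -> None:
    # The test speaks in terms of user actions, never raw selectors.
    page = LoginPage(FakeBrowser())
    page.log_in("alice", "s3cret")
    assert page.entered_username() == "alice"
    assert page.was_submitted()
```

Because the test only calls methods on `LoginPage`, renaming a field or restructuring the form should require a change in one class rather than in every test that logs in.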