The document discusses Transformers and sequence-to-sequence learning. It provides an overview of:

- The encoder-decoder architecture of sequence-to-sequence models including self-attention.

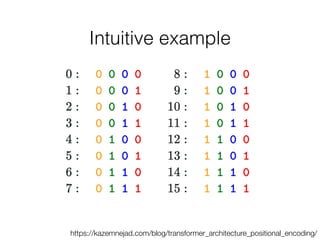

- Key components of the Transformer model including multi-head self-attention, positional encodings, and residual connections.

- Training procedures such as label smoothing, and techniques used to improve Transformer performance, such as adding feed-forward layers and stacking multiple Transformer blocks.
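Label smoothing is only named in the summary, so the following is a minimal sketch rather than the deck's own formulation. It assumes the common variant in which a fraction `epsilon` of the probability mass is spread uniformly over all classes; the function names, the value `epsilon=0.1`, and the example logits are illustrative assumptions.

```python
import math

def smooth_labels(target_index, num_classes, epsilon=0.1):
    """Smoothed target: (1 - epsilon) * one_hot + epsilon / num_classes (assumed variant)."""
    uniform = epsilon / num_classes
    dist = [uniform] * num_classes
    dist[target_index] += 1.0 - epsilon
    return dist

def cross_entropy(target_dist, logits):
    """Cross-entropy between a target distribution and softmax(logits)."""
    # Subtract the max logit before exponentiating for numerical stability.
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return -sum(t * (x - log_z) for t, x in zip(target_dist, logits))

# Hypothetical example: 4 output classes, the correct class is index 2.
targets = smooth_labels(target_index=2, num_classes=4, epsilon=0.1)
loss = cross_entropy(targets, logits=[0.5, -1.0, 3.0, 0.0])
```

With smoothing, the model is no longer rewarded for pushing the correct class's probability all the way to 1, which is the usual motivation for the technique.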

![Slide 15: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V. [Vaswani et al. 2017] Slides by Emma Strubell.](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-15-320.jpg)

![Slide 16: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-16-320.jpg)

![Slide 17: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V; the tokens are listed again along a second axis. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-17-320.jpg)

![Slide 18: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V and the matrix A over token pairs. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-18-320.jpg)

![Slide 19: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V and the matrix A over token pairs. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-19-320.jpg)

![Slide 20: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V and the matrix A over token pairs. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-20-320.jpg)

![Slide 21: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V, the matrix A over token pairs, and the output M. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-21-320.jpg)

![Slide 22: Self-attention (in encoder). Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V, the matrix A over token pairs, and the output M. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-22-320.jpg)
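The slide sequence above builds up single-head self-attention from Q, K, V through A to M. A minimal sketch of that computation follows, assuming the scaled dot-product form of Vaswani et al. 2017, with A taken to be the softmax attention weights and M the weighted sum of values. The projection matrices `w_q`, `w_k`, `w_v`, the dimensions, and the random inputs are illustrative assumptions, not values from the slides.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax_rows(scores):
    """Apply a numerically stable softmax to each row."""
    result = []
    for row in scores:
        peak = max(row)
        exps = [math.exp(s - peak) for s in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result

def self_attention(x, w_q, w_k, w_v):
    """Return (A, M): attention weights and attended token representations."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    # Score every token against every other token, scaled by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    a = softmax_rows(scores)   # each row is a distribution over tokens
    m = matmul(a, v)           # weighted sum of value vectors per token
    return a, m

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
tokens = ["Nobel", "committee", "awards", "Strickland", "who", "advanced", "optics"]
d_model, d_k = 8, 4                     # assumed sizes for illustration
x = rand_matrix(len(tokens), d_model)   # stand-in for the layer p representations
w_q, w_k, w_v = (rand_matrix(d_model, d_k) for _ in range(3))
A, M = self_attention(x, w_q, w_k, w_v)
```

Here `A` has one row per token and each row sums to 1, while `M` has one `d_k`-dimensional vector per token.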

![Slide 23: Multi-head self-attention. Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V, the matrix A, and per-head outputs M1 through MH. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-23-320.jpg)

![Slide 24: Multi-head self-attention. Example sentence "Nobel committee awards Strickland who advanced optics" at layer p, with Q, K, V, the matrix A, and per-head outputs M1 through MH. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-24-320.jpg)

![Slide 25: Multi-head self-attention. Per-head outputs M1 through MH for "Nobel committee awards Strickland who advanced optics" pass through a feed-forward block applied to each of the seven tokens, producing layer p+1. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-25-320.jpg)

![Slide 26: Multi-head self-attention. Example sentence "Nobel committee awards Strickland who advanced optics" at layer p+1. [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-26-320.jpg)
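The multi-head slides show per-head outputs M1 through MH being combined and passed through a per-token feed-forward block to produce layer p+1. The sketch below is one way to write that step, assuming the formulation of Vaswani et al. 2017: concatenate the heads, apply an output projection, then a two-layer ReLU feed-forward network. Biases, residual connections, and layer normalization are omitted for brevity, and all weight names and sizes are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    peak = max(row)
    exps = [math.exp(s - peak) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(x, w_q, w_k, w_v):
    """One head: scaled dot-product self-attention, returning M_h."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]
    a = [softmax([s / scale for s in row]) for row in matmul(q, k_t)]
    return matmul(a, v)

def multi_head(x, heads, w_o):
    """Run every head, concatenate M_1..M_H per token, then project with w_o."""
    outputs = [attention_head(x, *weights) for weights in heads]
    concat = [sum((m[t] for m in outputs), []) for t in range(len(x))]
    return matmul(concat, w_o)

def feed_forward(x, w_1, w_2):
    """Position-wise feed-forward: the same ReLU network applied to each token."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w_1)]
    return matmul(hidden, w_2)

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
n_tokens, d_model, n_heads = 7, 8, 2   # assumed sizes for illustration
d_head = d_model // n_heads
x = rand_matrix(n_tokens, d_model)     # stand-in for layer p
heads = [tuple(rand_matrix(d_model, d_head) for _ in range(3)) for _ in range(n_heads)]
w_o = rand_matrix(n_heads * d_head, d_model)
w_1, w_2 = rand_matrix(d_model, 4 * d_model), rand_matrix(4 * d_model, d_model)

attended = multi_head(x, heads, w_o)
next_layer = feed_forward(attended, w_1, w_2)   # stand-in for layer p+1
```

Because the output keeps the same shape as the input (one `d_model`-dimensional vector per token), the result can be fed directly into another layer of the same kind.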

![Slide 27: Multi-head self-attention. A stack of "multi-head self-attention + feed forward" blocks, labelled layer 1, layer p, and layer J, over the example sentence "Nobel committee awards Strickland who advanced optics". [Vaswani et al. 2017]](https://image.slidesharecdn.com/05-transformers-230711084049-7e2dd85a/85/05-transformers-pdf-27-320.jpg)
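The final slide stacks J identical blocks, and the summary lists residual connections as a key component. A minimal sketch of how the two fit together follows, assuming each sublayer's output is added back to its input. The sublayers `mean_mix` and `halve` are hypothetical toy stand-ins for attention and feed-forward, chosen only so the example runs; layer normalization, which the original Transformer also applies, is left out.

```python
def add_residual(x, sublayer_out):
    """Residual connection: element-wise sum of a sublayer's input and output."""
    return [[a + b for a, b in zip(row_in, row_out)]
            for row_in, row_out in zip(x, sublayer_out)]

def encoder_stack(x, layers):
    """Apply each (attention, feed_forward) pair in order, with residuals."""
    for attend, feed_forward in layers:
        x = add_residual(x, attend(x))
        x = add_residual(x, feed_forward(x))
    return x

def mean_mix(x):
    """Toy stand-in for attention: every token receives the mean token vector."""
    mean = [sum(col) / len(x) for col in zip(*x)]
    return [mean[:] for _ in x]

def halve(x):
    """Toy stand-in for the per-token feed-forward block."""
    return [[0.5 * value for value in row] for row in x]

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three tokens, two features (assumed)
one_layer = encoder_stack(x, layers=[(mean_mix, halve)])
out = encoder_stack(x, layers=[(mean_mix, halve)] * 3)   # J = 3 stacked layers
```

In a real model each layer would have its own parameters; reusing the same pair of functions here only keeps the sketch short.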