The document discusses the semantics of propositional logic, including:

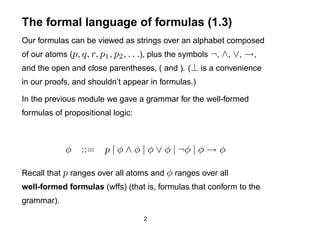

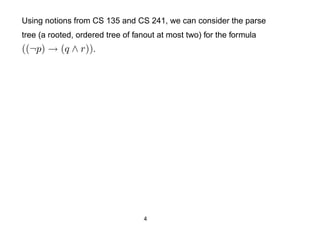

1) Defining logical formulas using a formal language and grammar;

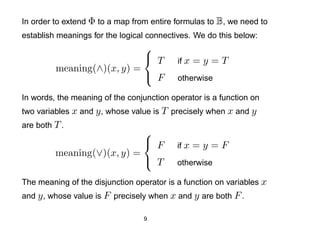

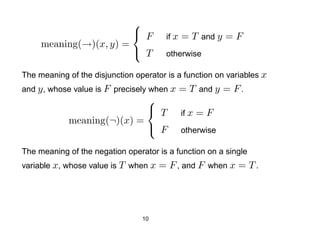

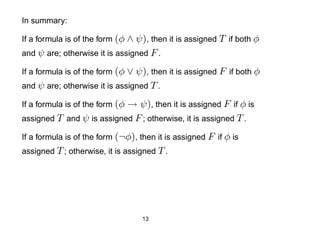

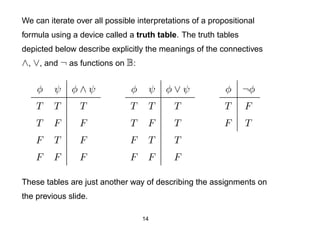

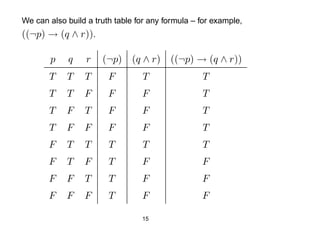

2) Describing the meaning of logical connectives like conjunction and negation through truth tables;

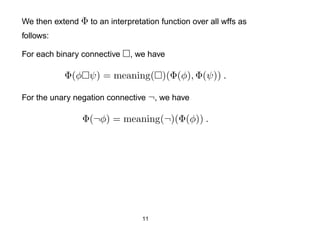

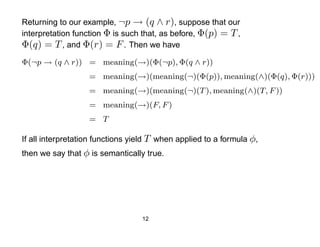

3) Explaining how an interpretation, which assigns a truth value to each atomic proposition, determines the truth value of every formula from the truth values of its components.
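
The three points above can be illustrated with a minimal Python sketch. It is not taken from the document itself: the class names `Atom`, `Not`, and `And`, and the helpers `evaluate`, `atoms`, and `truth_table`, are hypothetical names chosen for illustration, and only the two connectives mentioned above (negation and conjunction) are modelled. The grammar is encoded as a small recursive data type, each connective's truth table is encoded by the corresponding Boolean operation, and an interpretation is a dictionary from atom names to truth values.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Set, Tuple, Union


# Grammar (assumed for illustration): F ::= atom | not F | F and F
@dataclass(frozen=True)
class Atom:
    name: str


@dataclass(frozen=True)
class Not:
    operand: "Formula"


@dataclass(frozen=True)
class And:
    left: "Formula"
    right: "Formula"


Formula = Union[Atom, Not, And]
Interpretation = Dict[str, bool]


def evaluate(formula: Formula, interpretation: Interpretation) -> bool:
    """Compute the truth value of a formula from those of its components."""
    if isinstance(formula, Atom):
        # Atoms get their value directly from the interpretation.
        return interpretation[formula.name]
    if isinstance(formula, Not):
        # Negation flips the truth value of its operand.
        return not evaluate(formula.operand, interpretation)
    if isinstance(formula, And):
        # Conjunction is true only when both operands are true.
        return evaluate(formula.left, interpretation) and evaluate(
            formula.right, interpretation
        )
    raise TypeError(f"not a formula: {formula!r}")


def atoms(formula: Formula) -> Set[str]:
    """Collect the names of all atomic propositions in a formula."""
    if isinstance(formula, Atom):
        return {formula.name}
    if isinstance(formula, Not):
        return atoms(formula.operand)
    if isinstance(formula, And):
        return atoms(formula.left) | atoms(formula.right)
    raise TypeError(f"not a formula: {formula!r}")


def truth_table(formula: Formula) -> List[Tuple[Interpretation, bool]]:
    """Evaluate a formula under every interpretation of its atoms."""
    names = sorted(atoms(formula))
    rows = []
    for values in product([True, False], repeat=len(names)):
        interpretation = dict(zip(names, values))
        rows.append((interpretation, evaluate(formula, interpretation)))
    return rows


if __name__ == "__main__":
    # The formula "p and (not q)".
    example = And(Atom("p"), Not(Atom("q")))
    for interpretation, value in truth_table(example):
        print(interpretation, value)
```

A formula with n distinct atoms has 2^n interpretations, so `truth_table` is only practical for small formulas. Other connectives such as disjunction or implication could be added as further dataclasses with their own case in `evaluate`.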