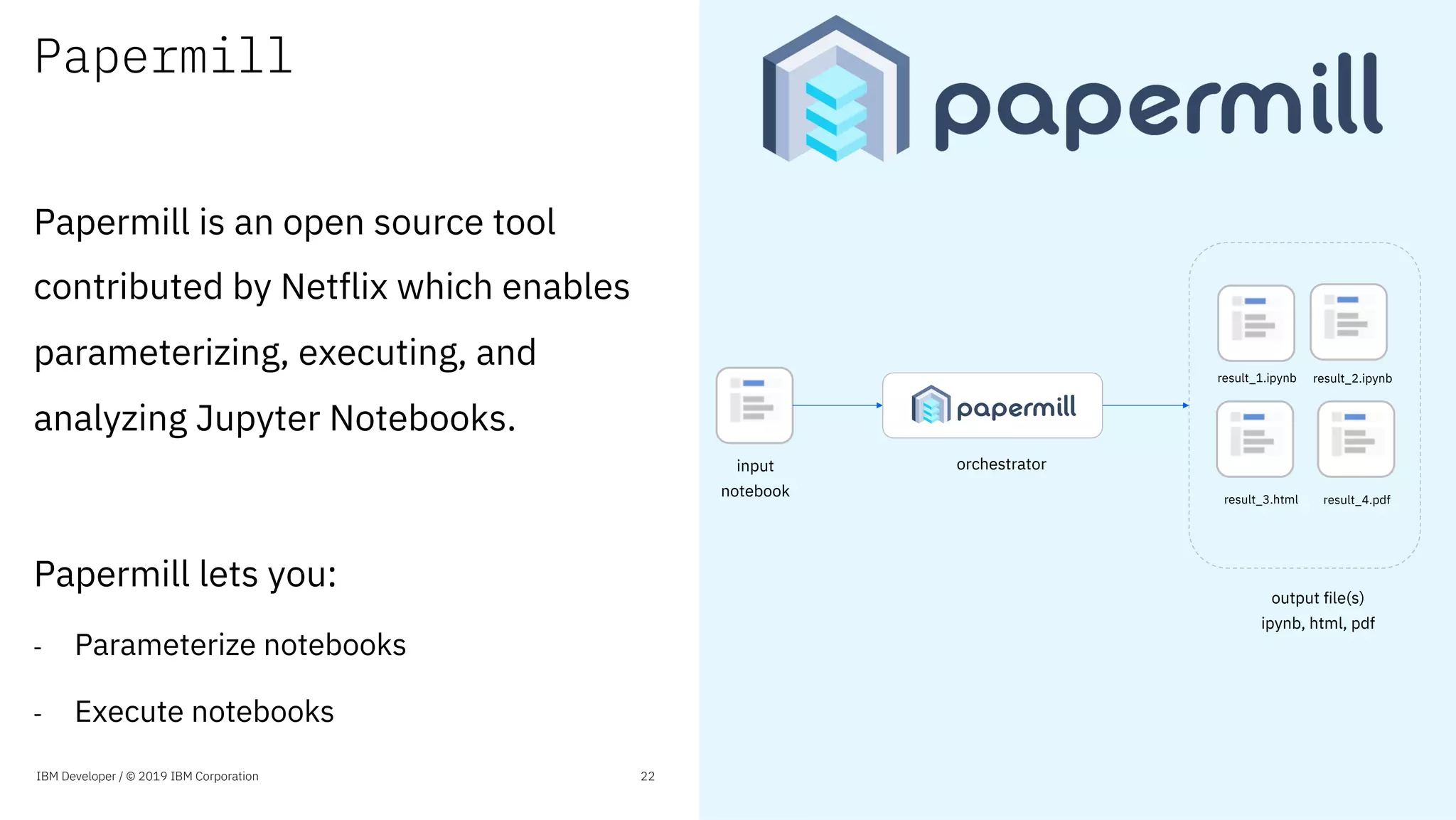

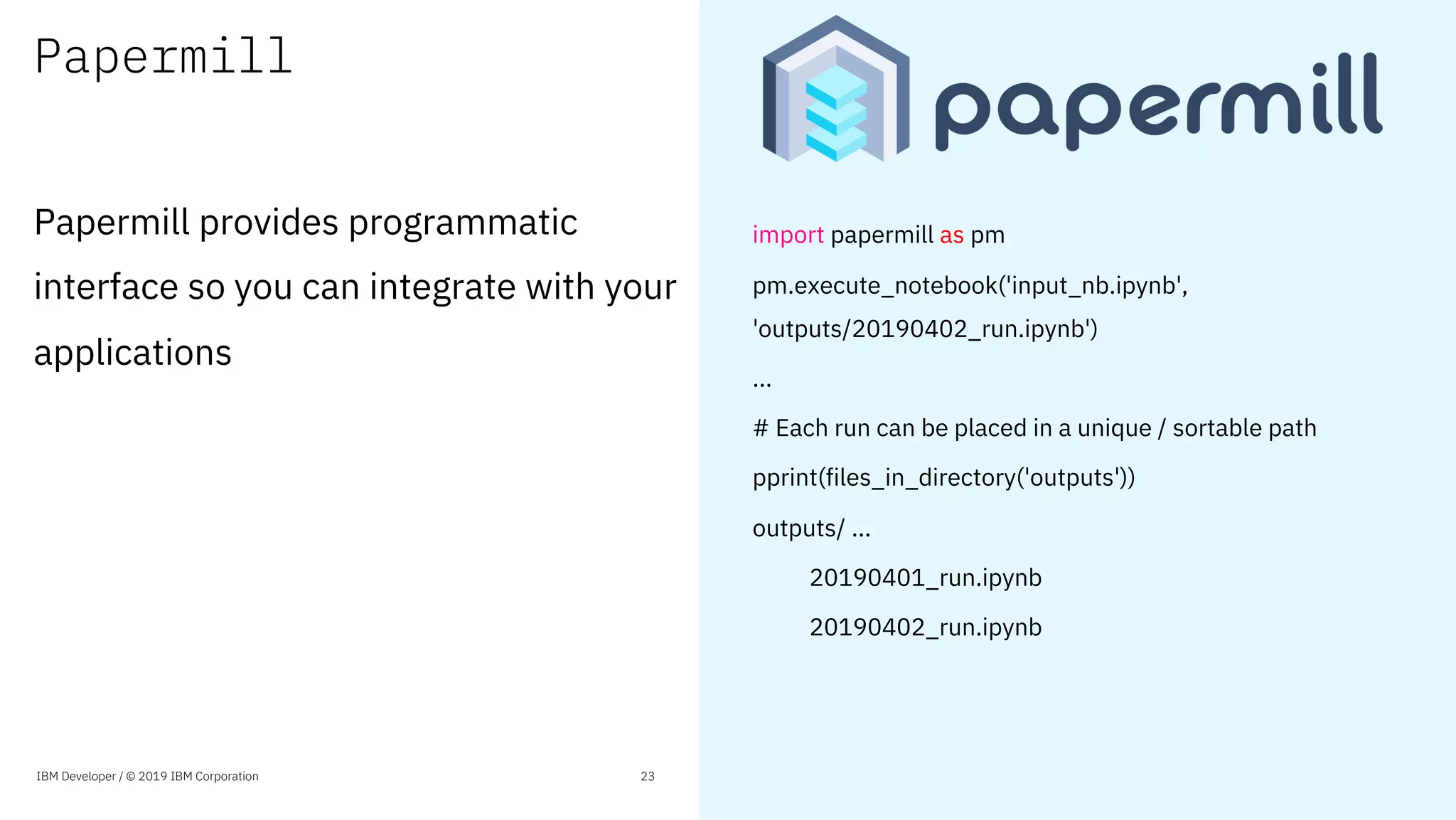

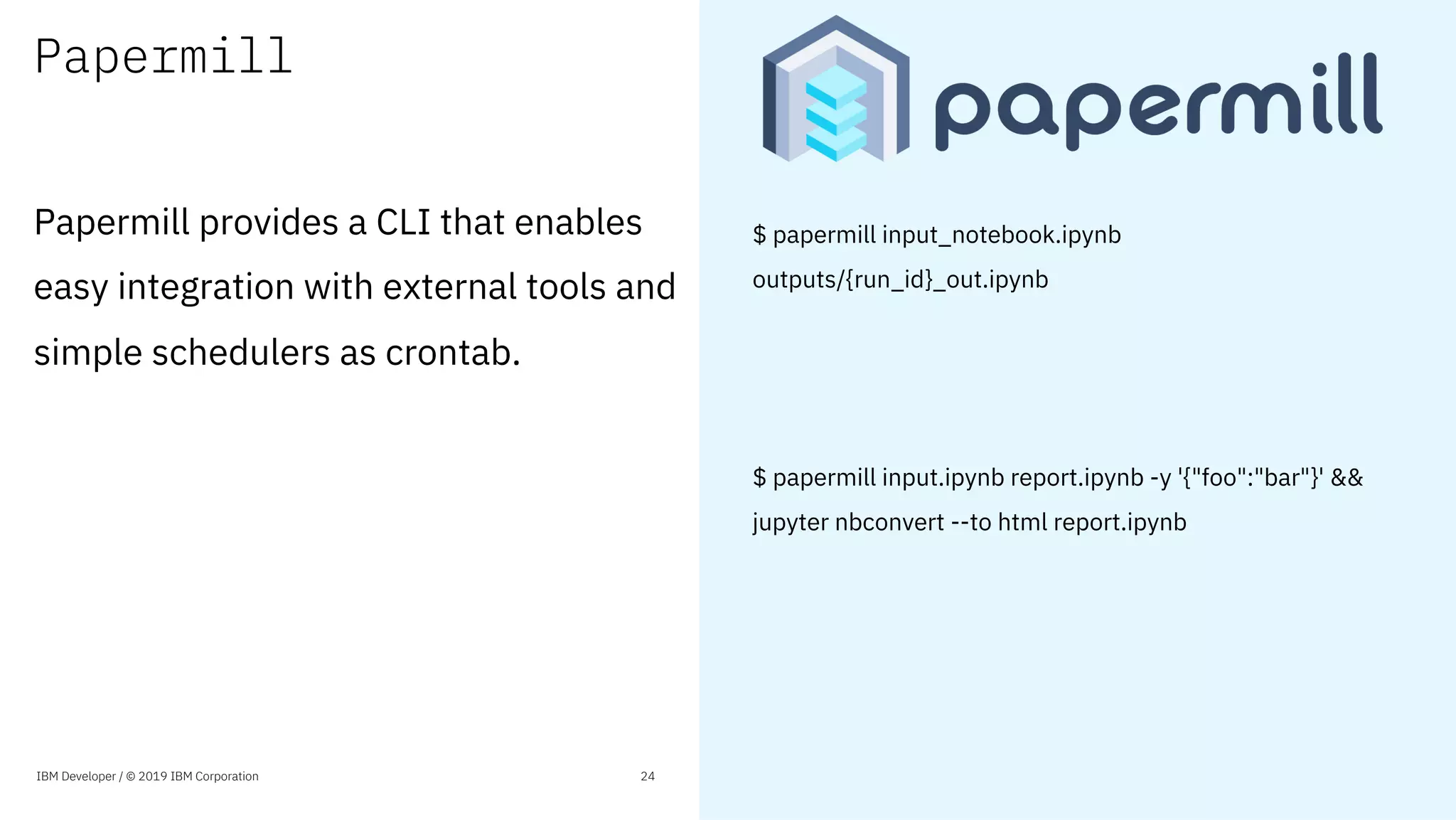

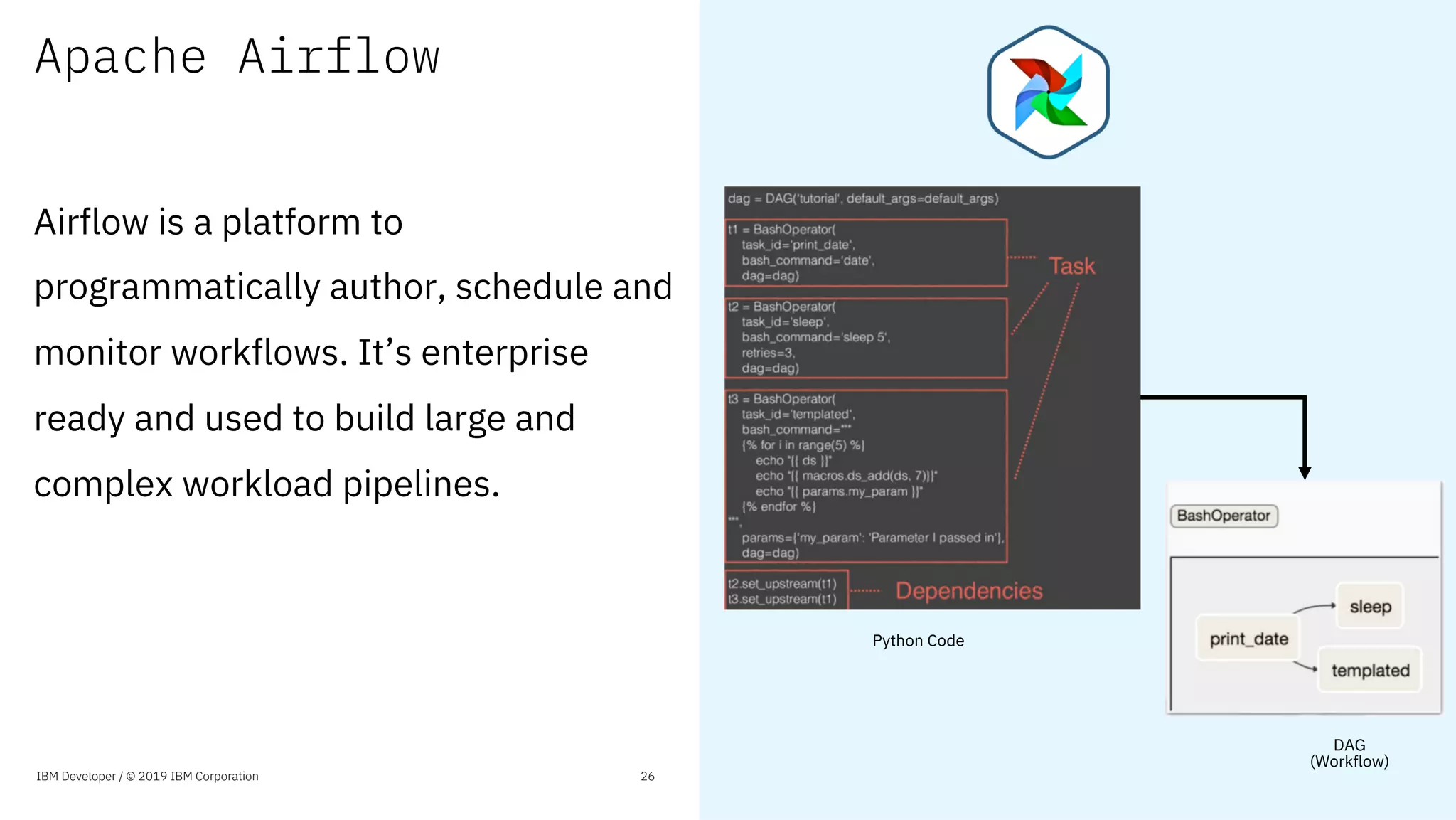

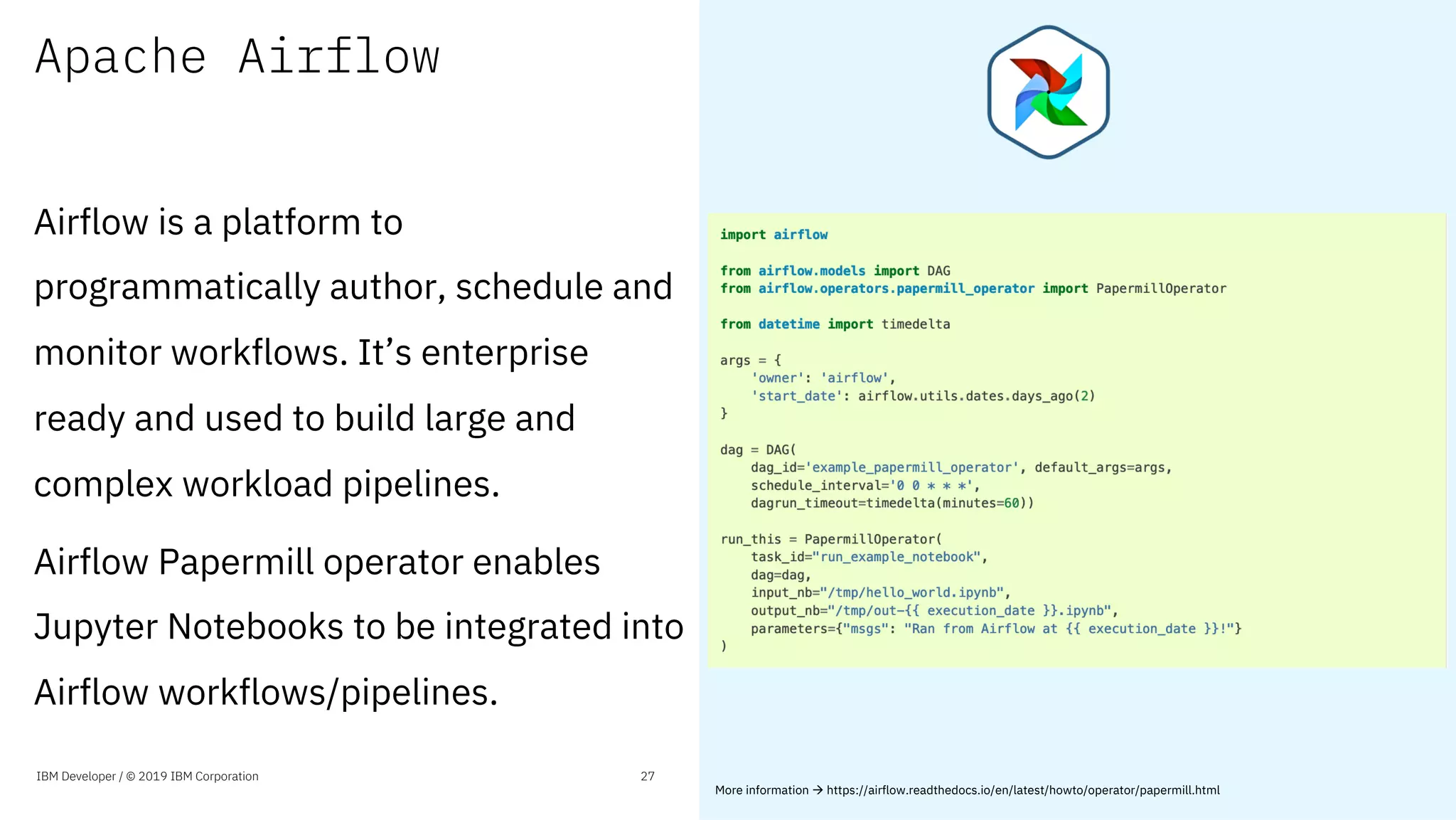

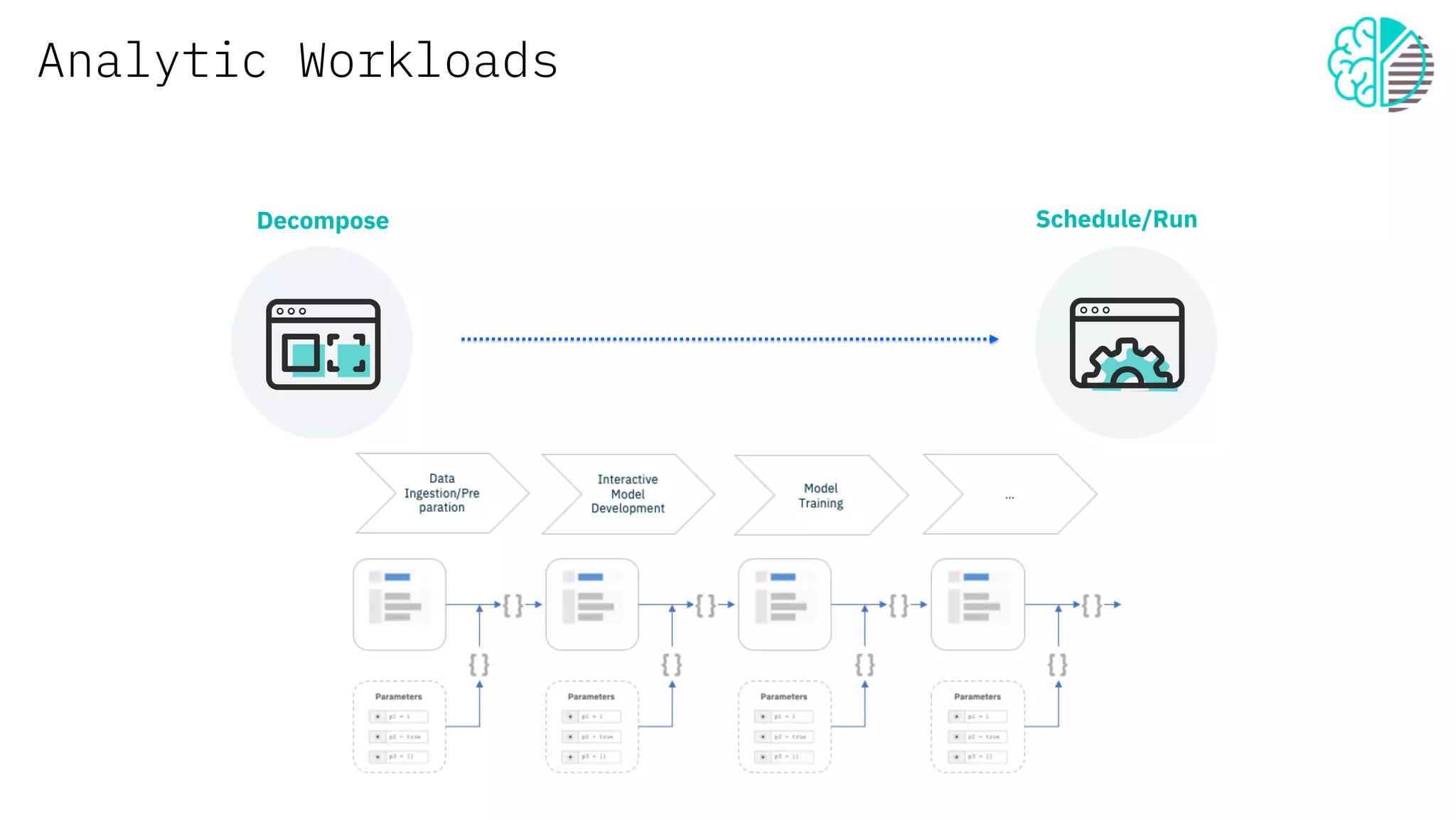

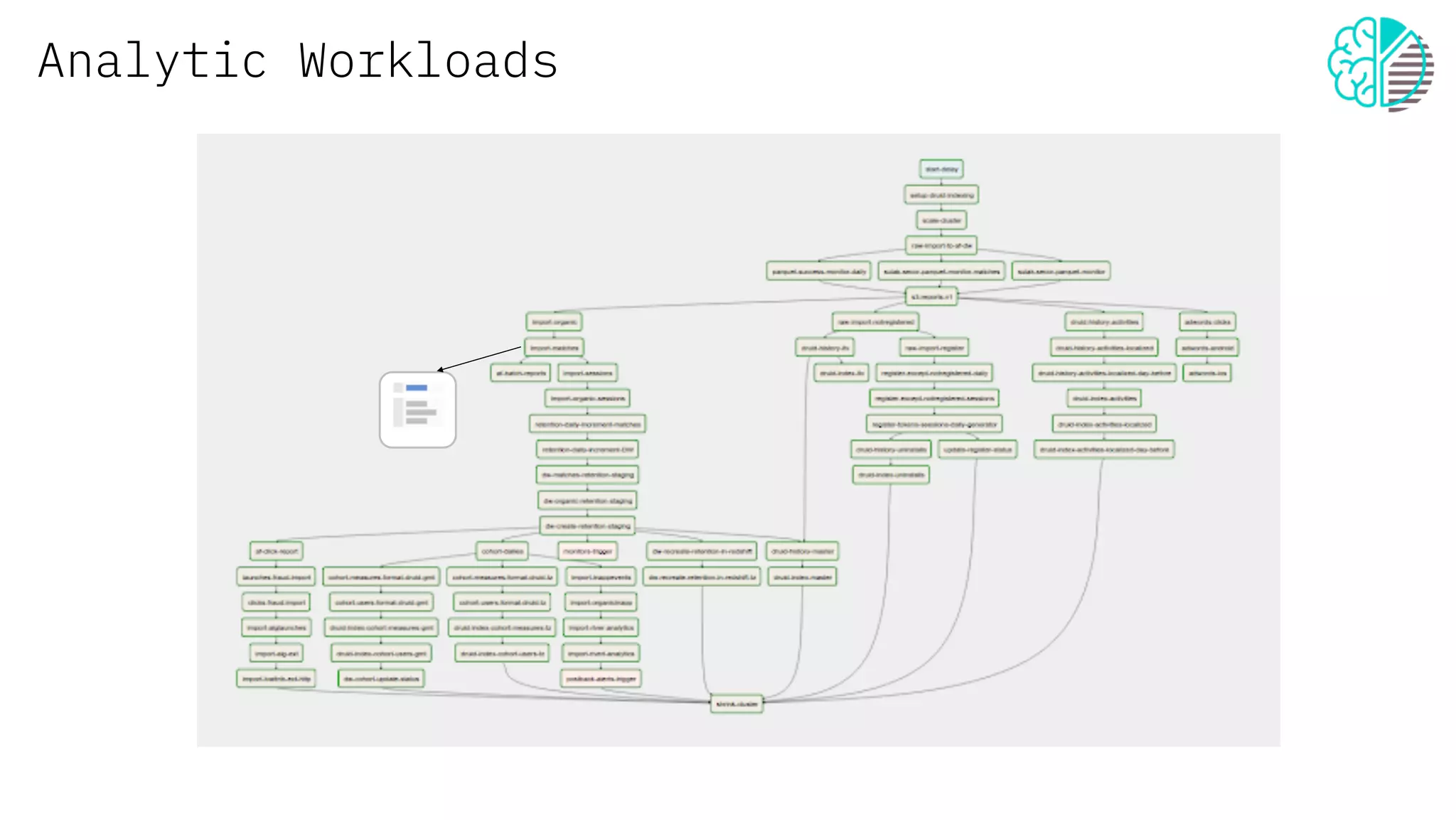

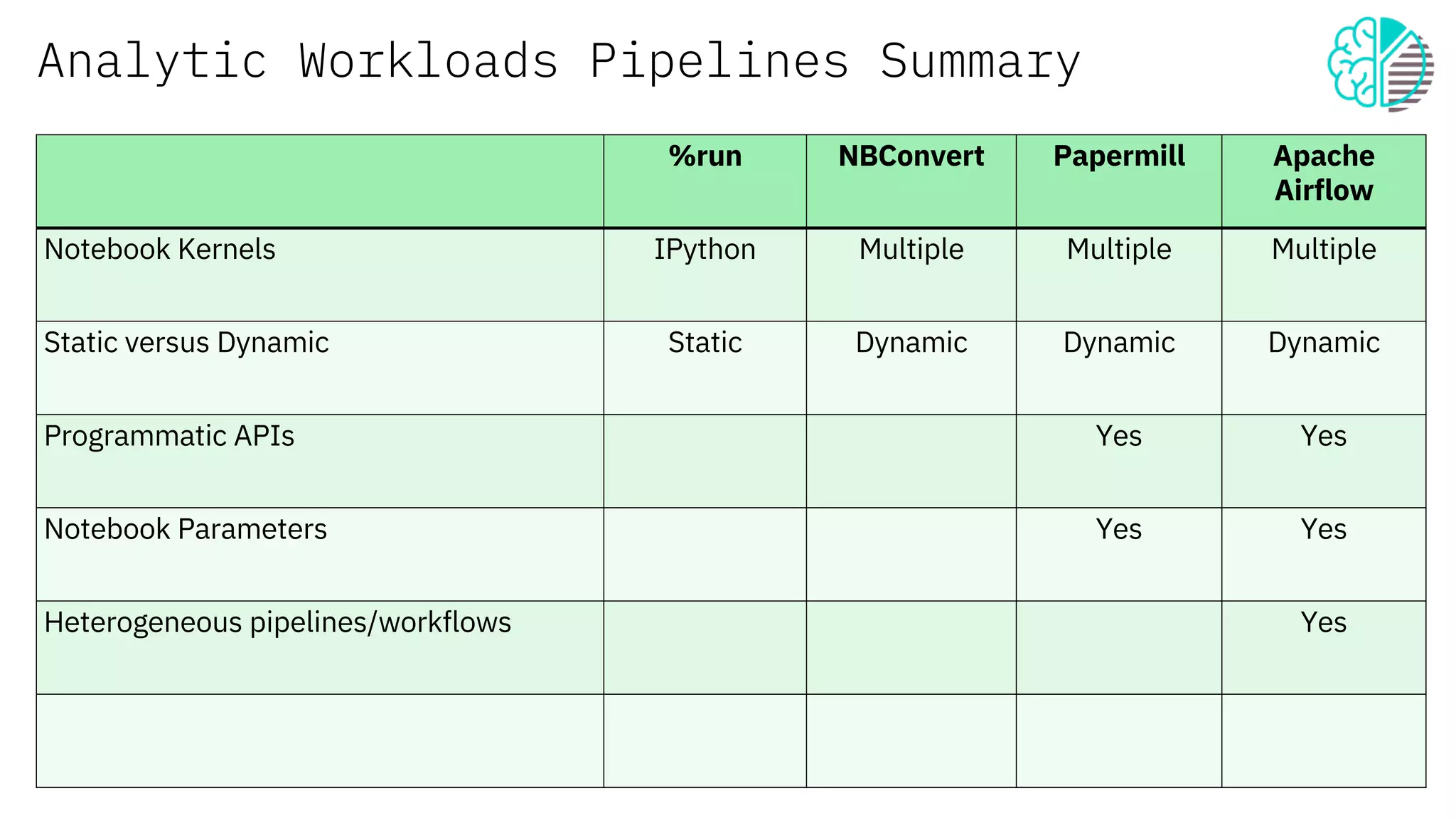

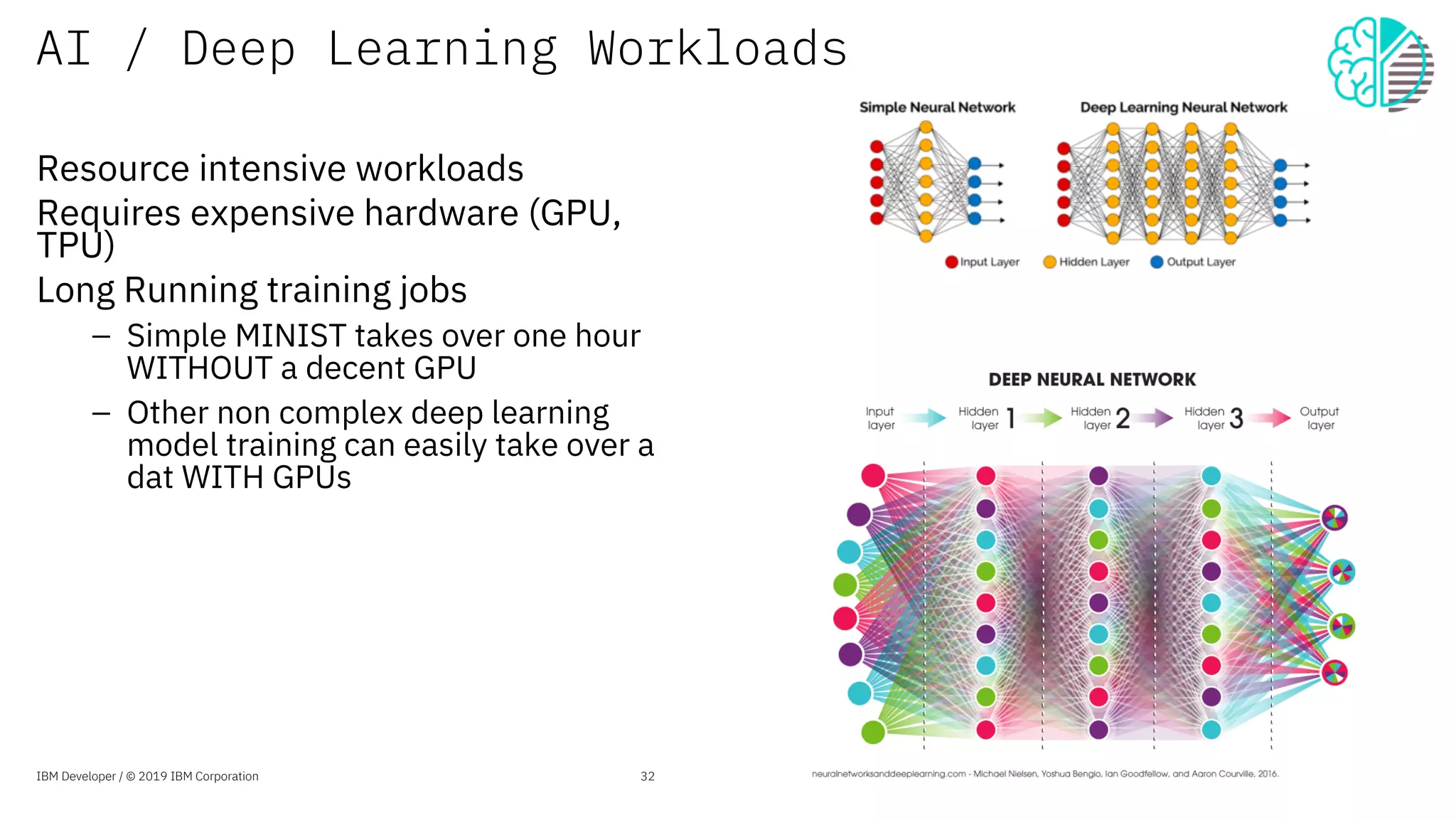

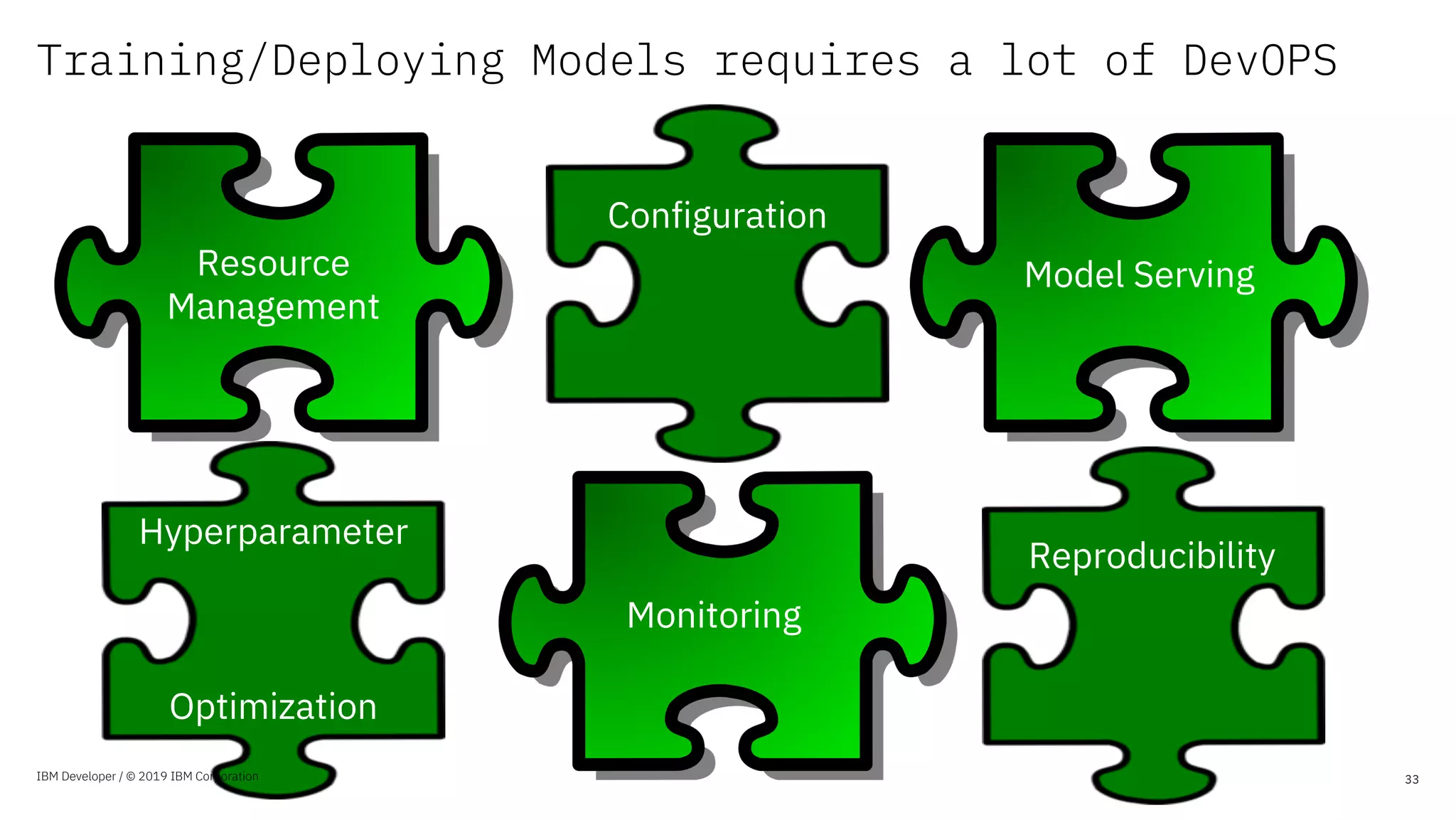

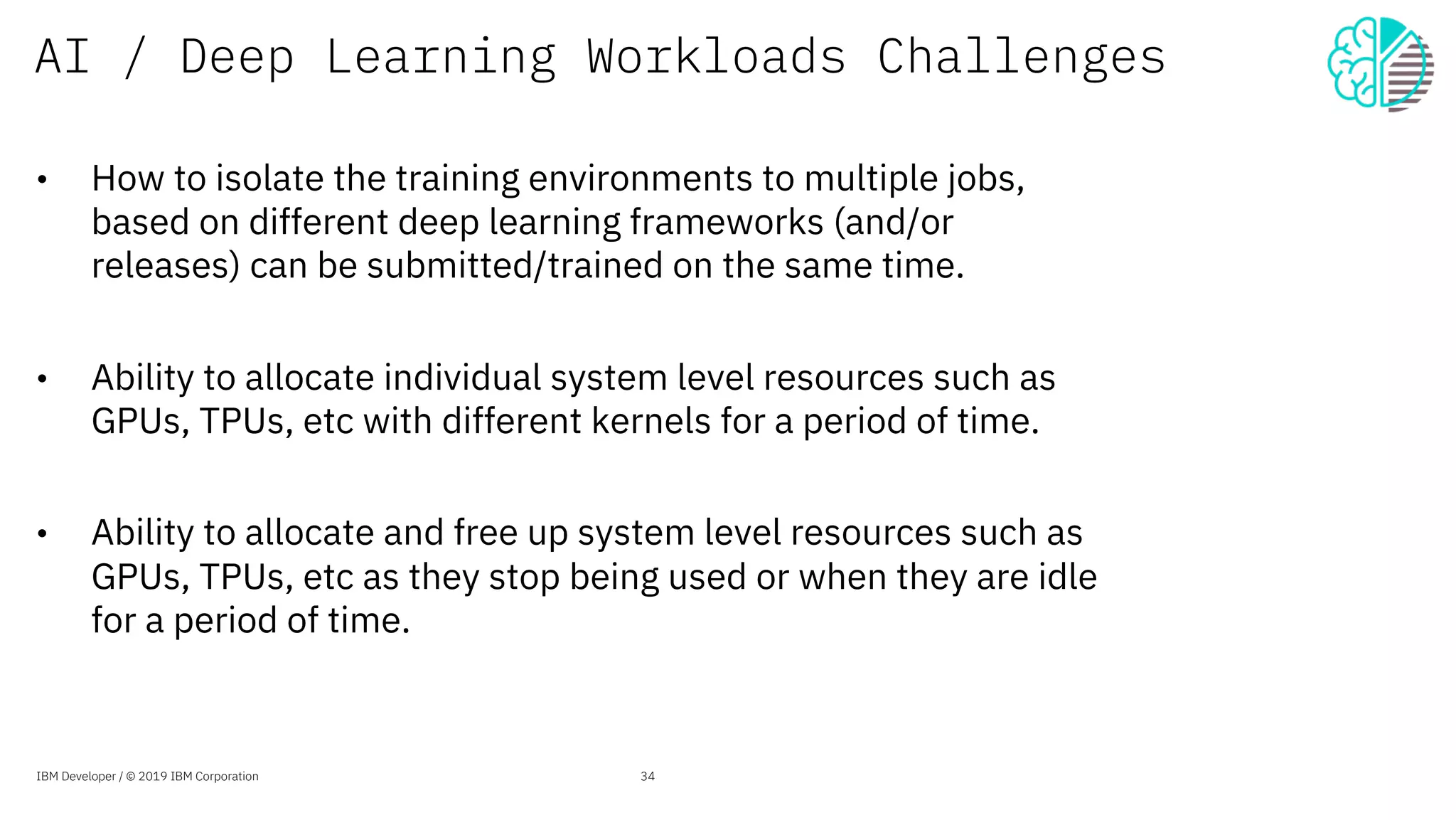

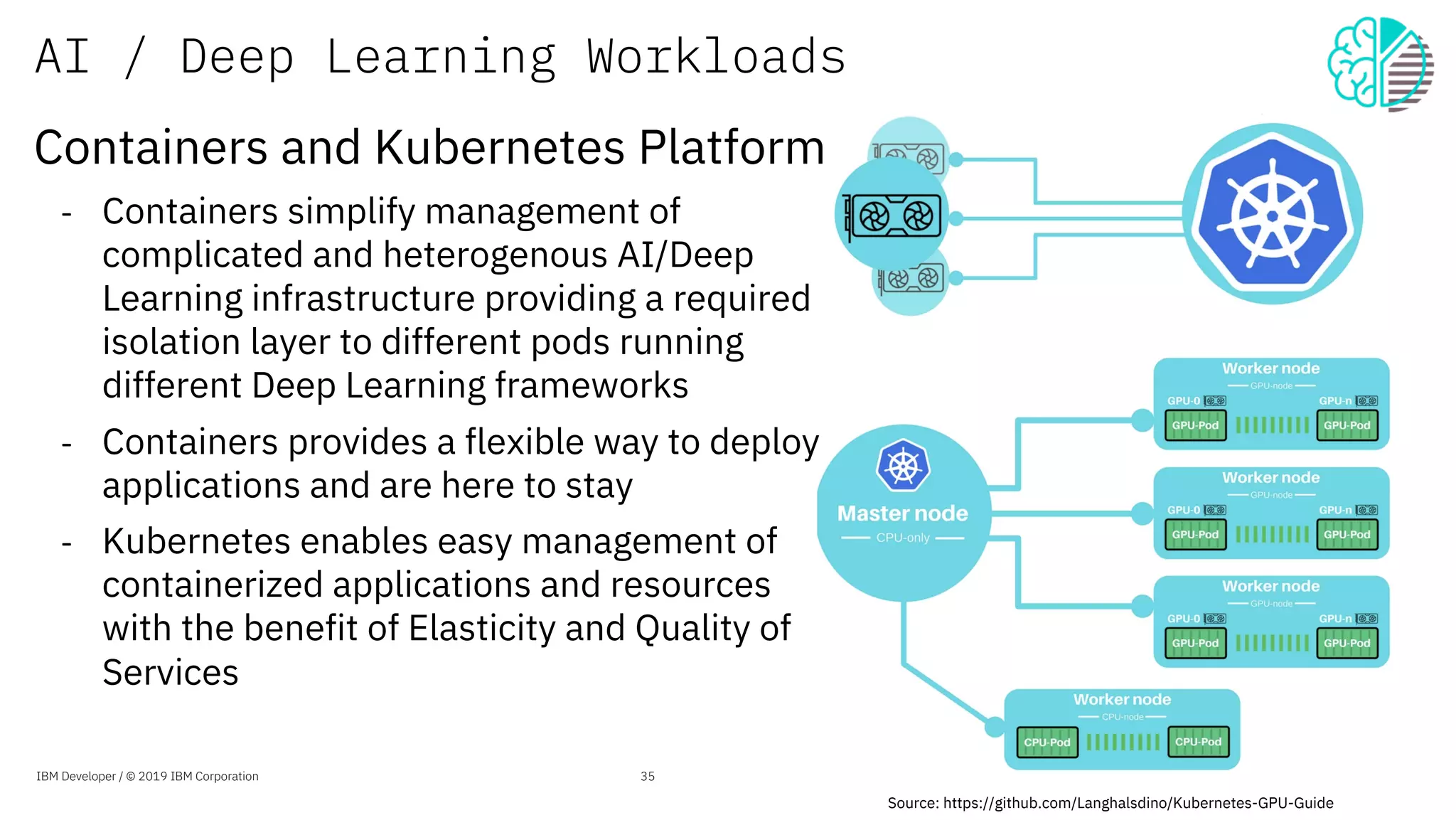

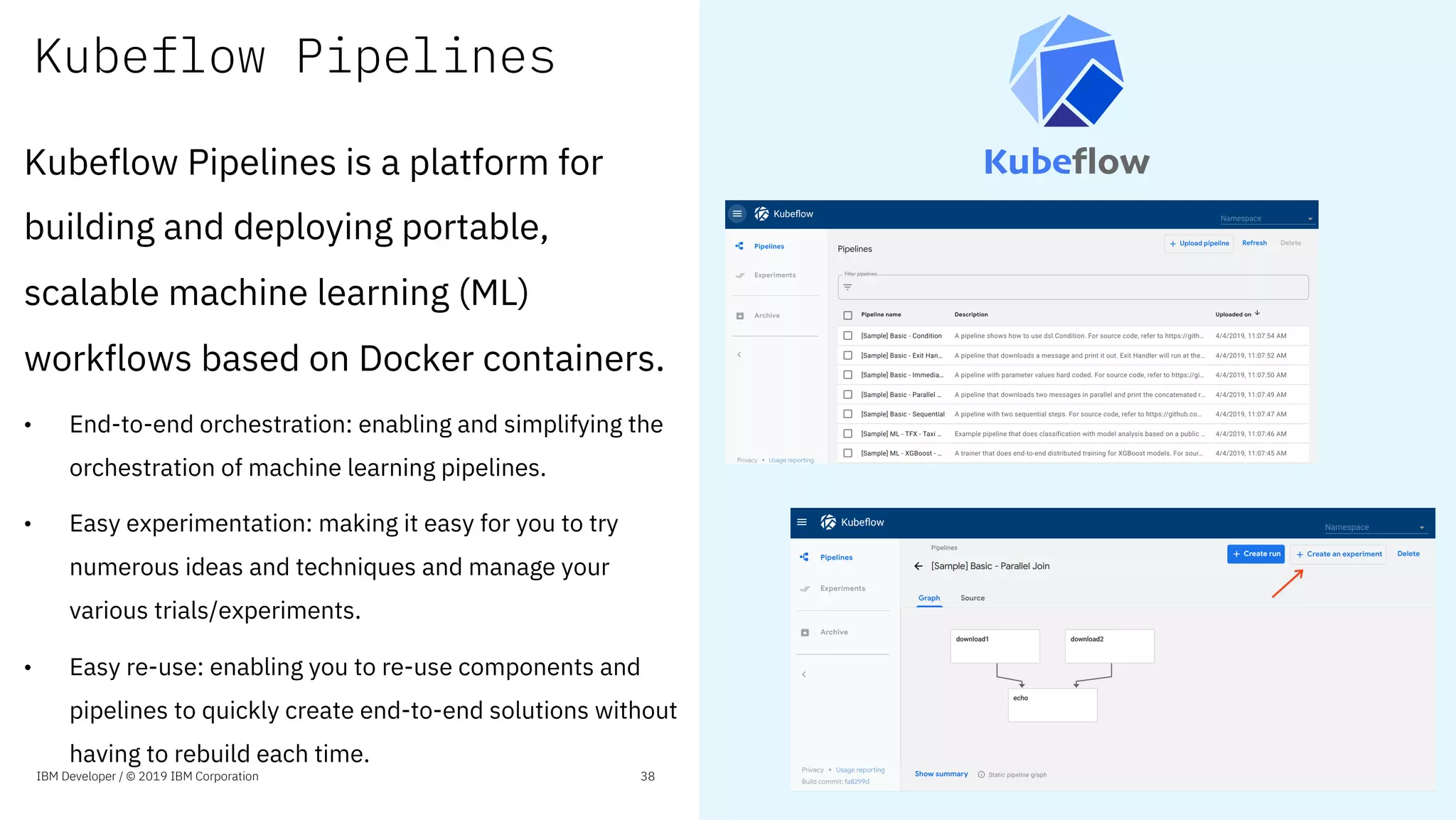

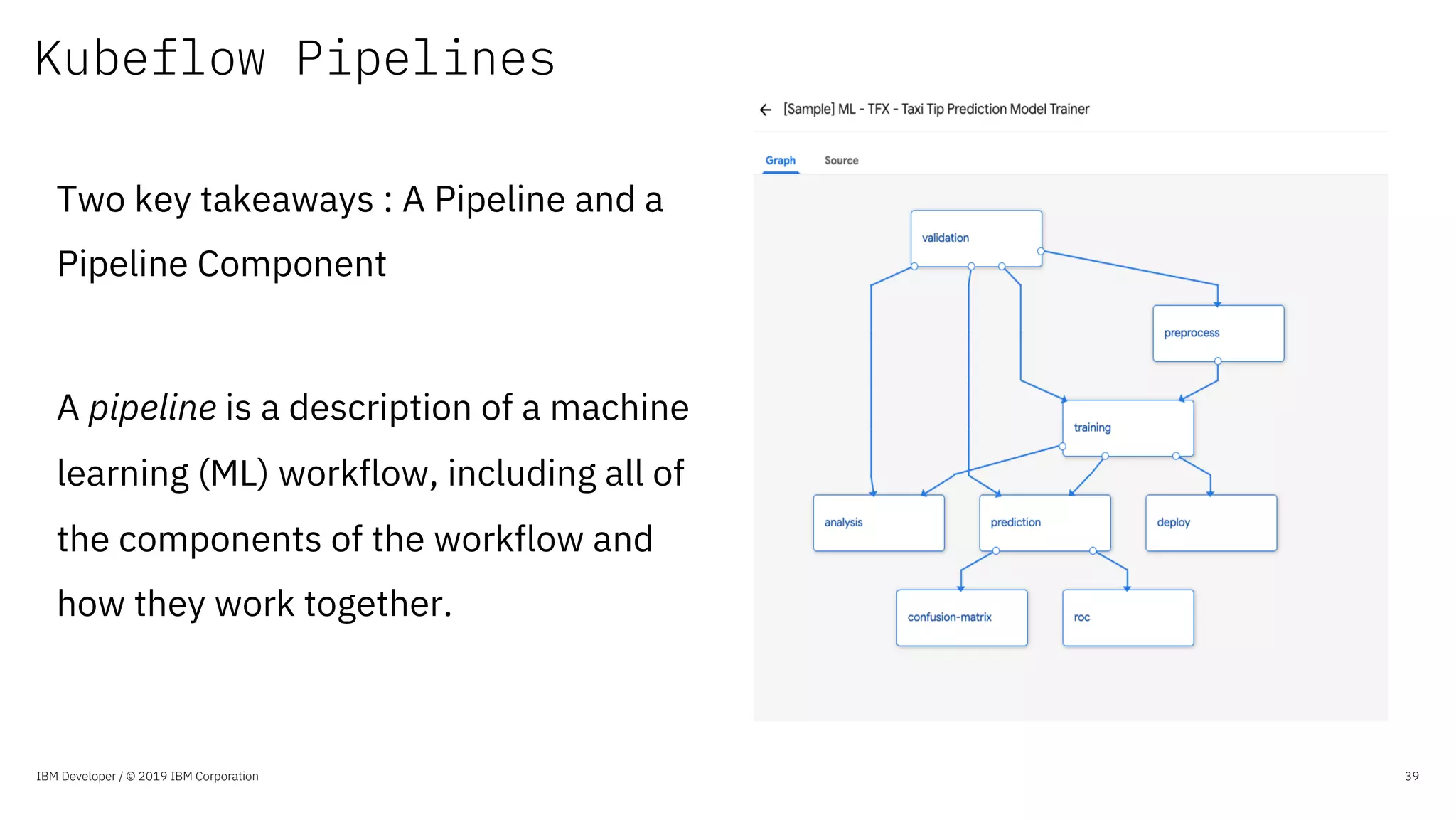

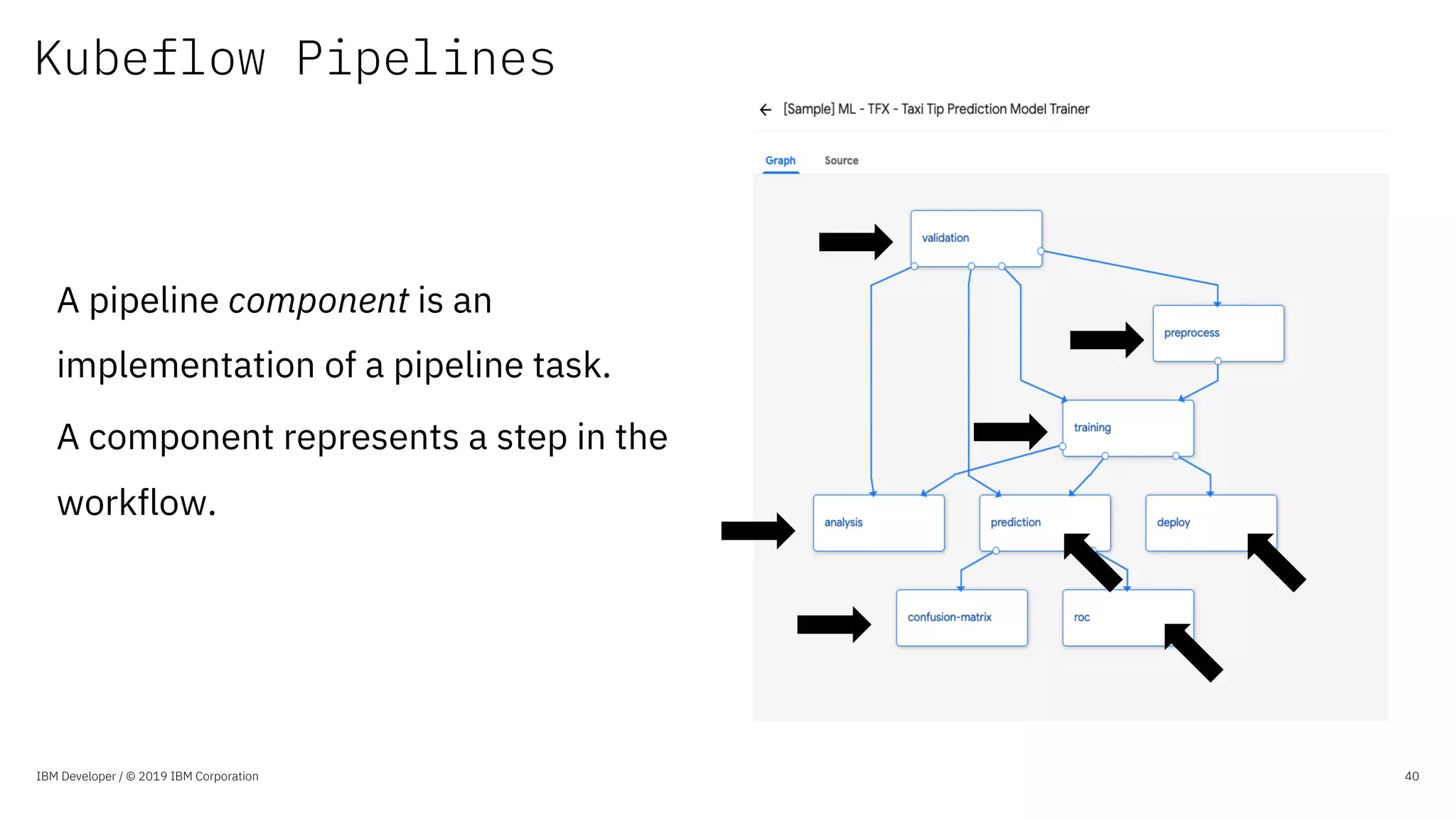

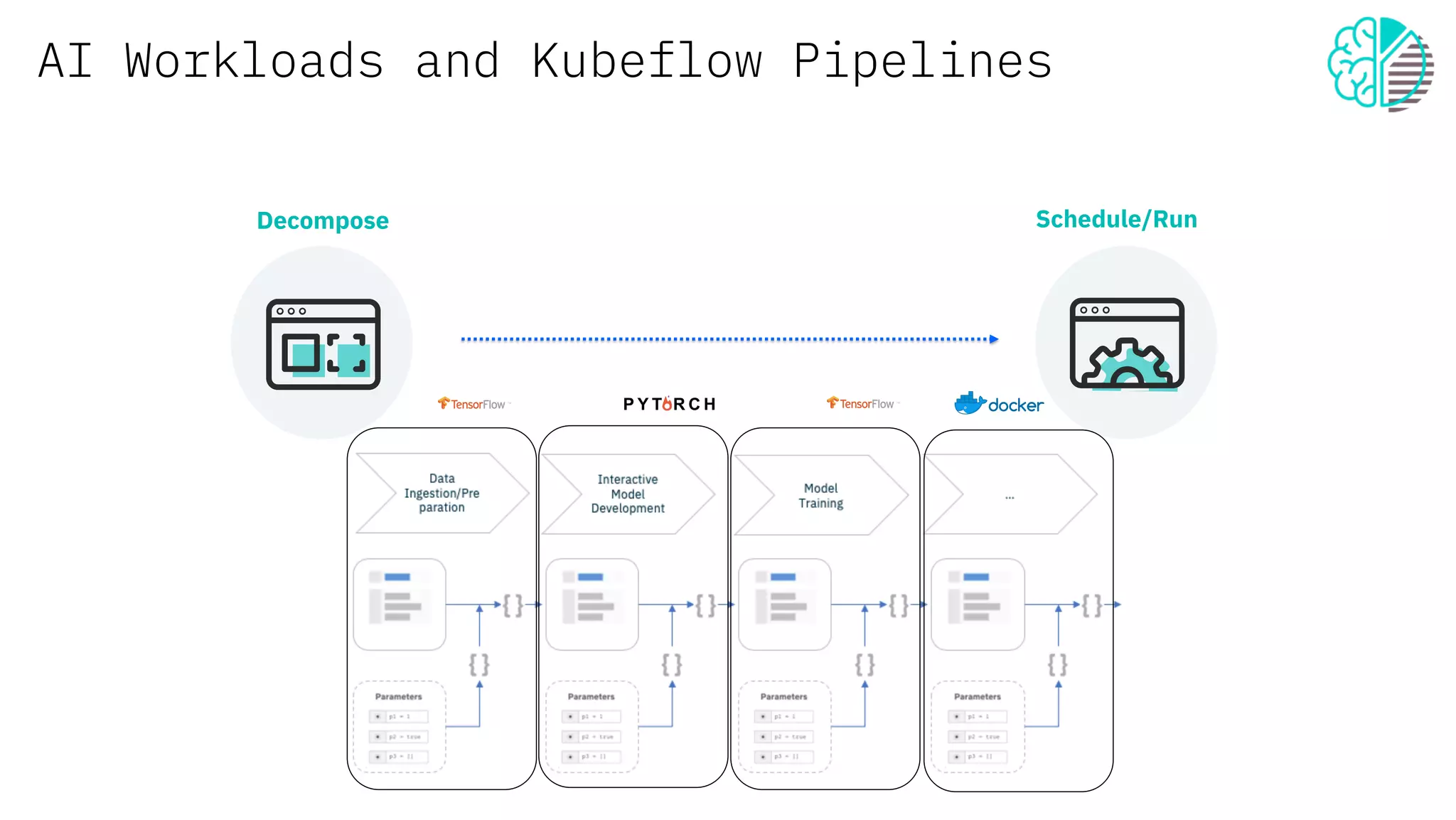

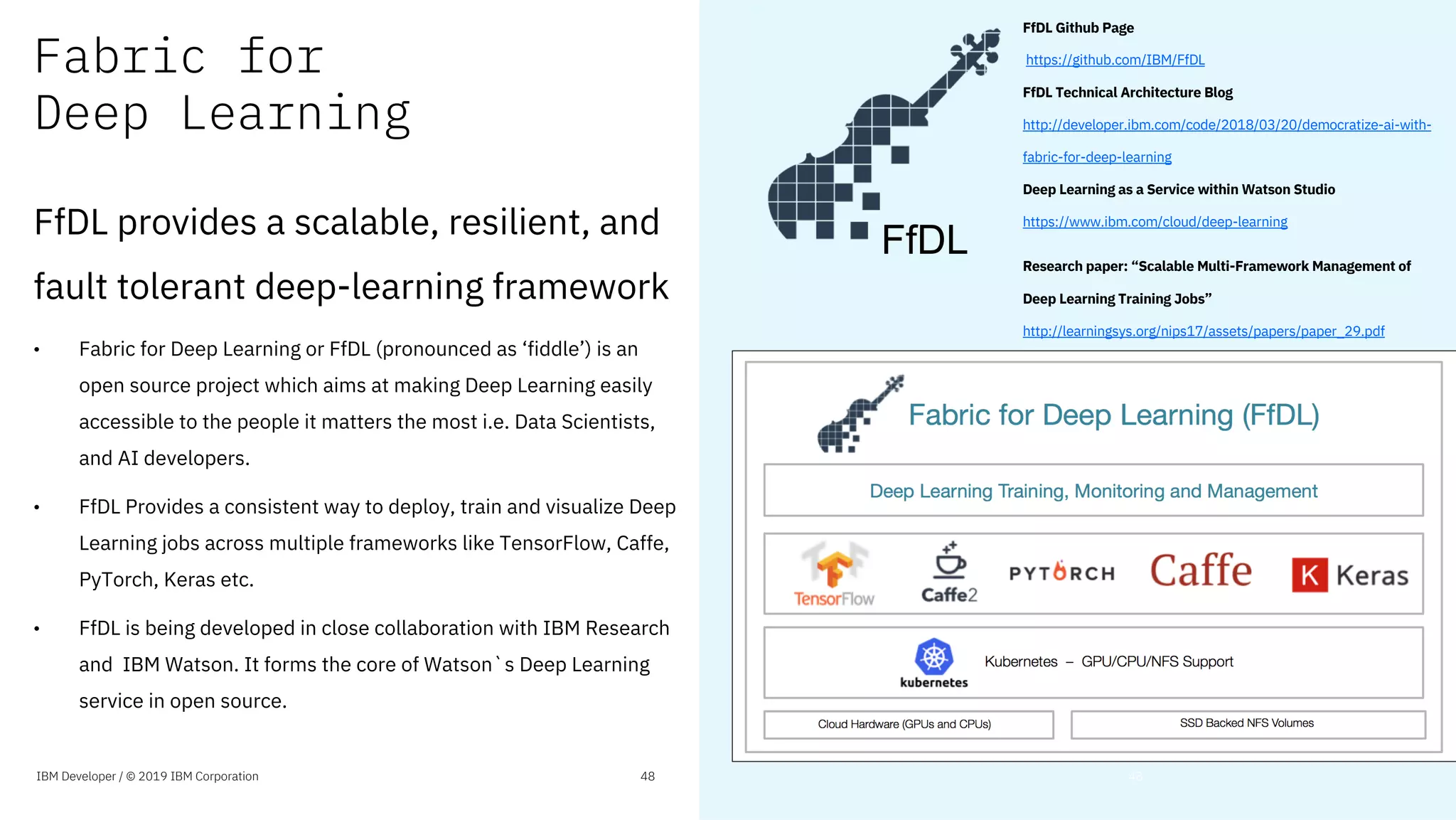

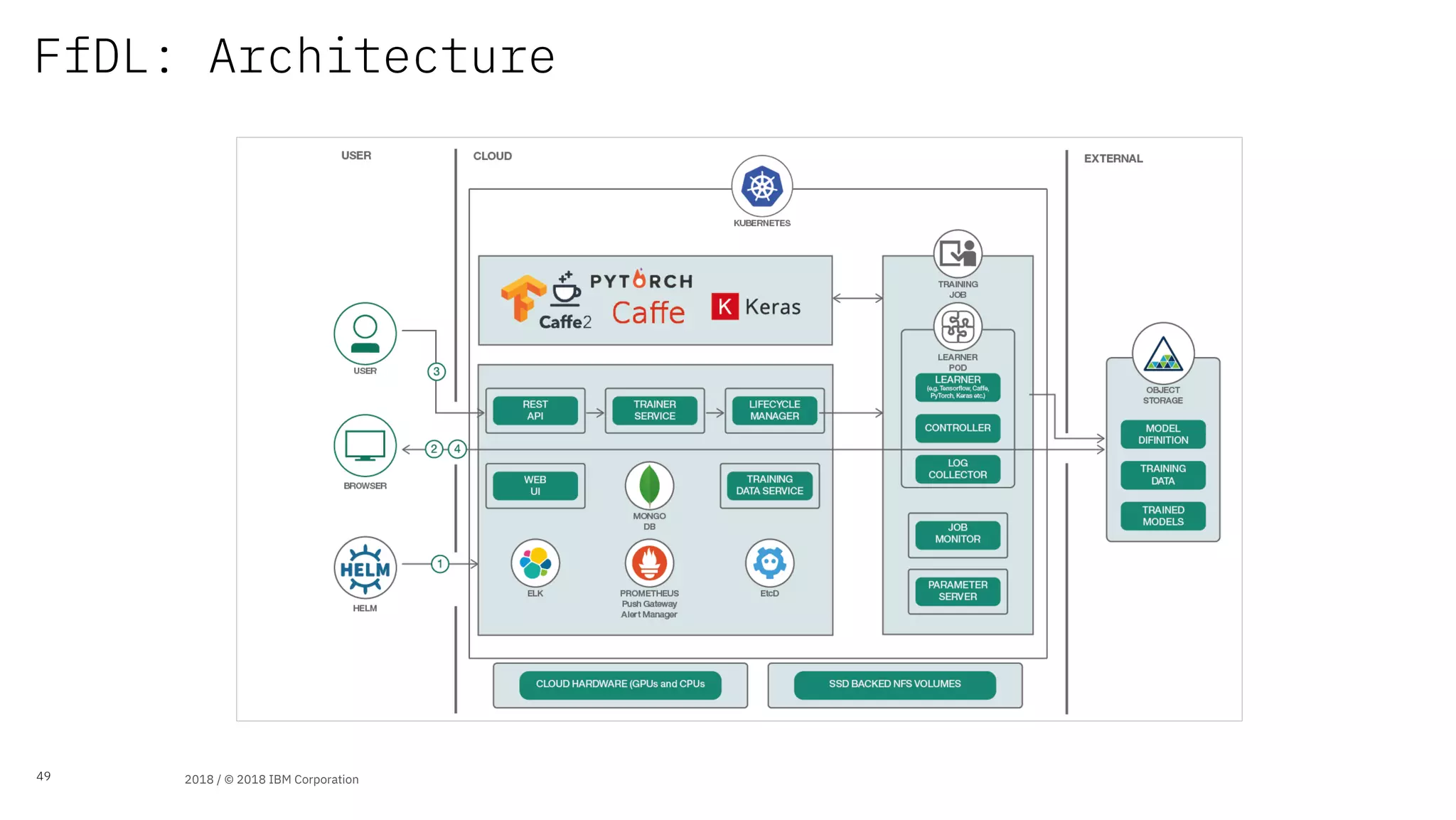

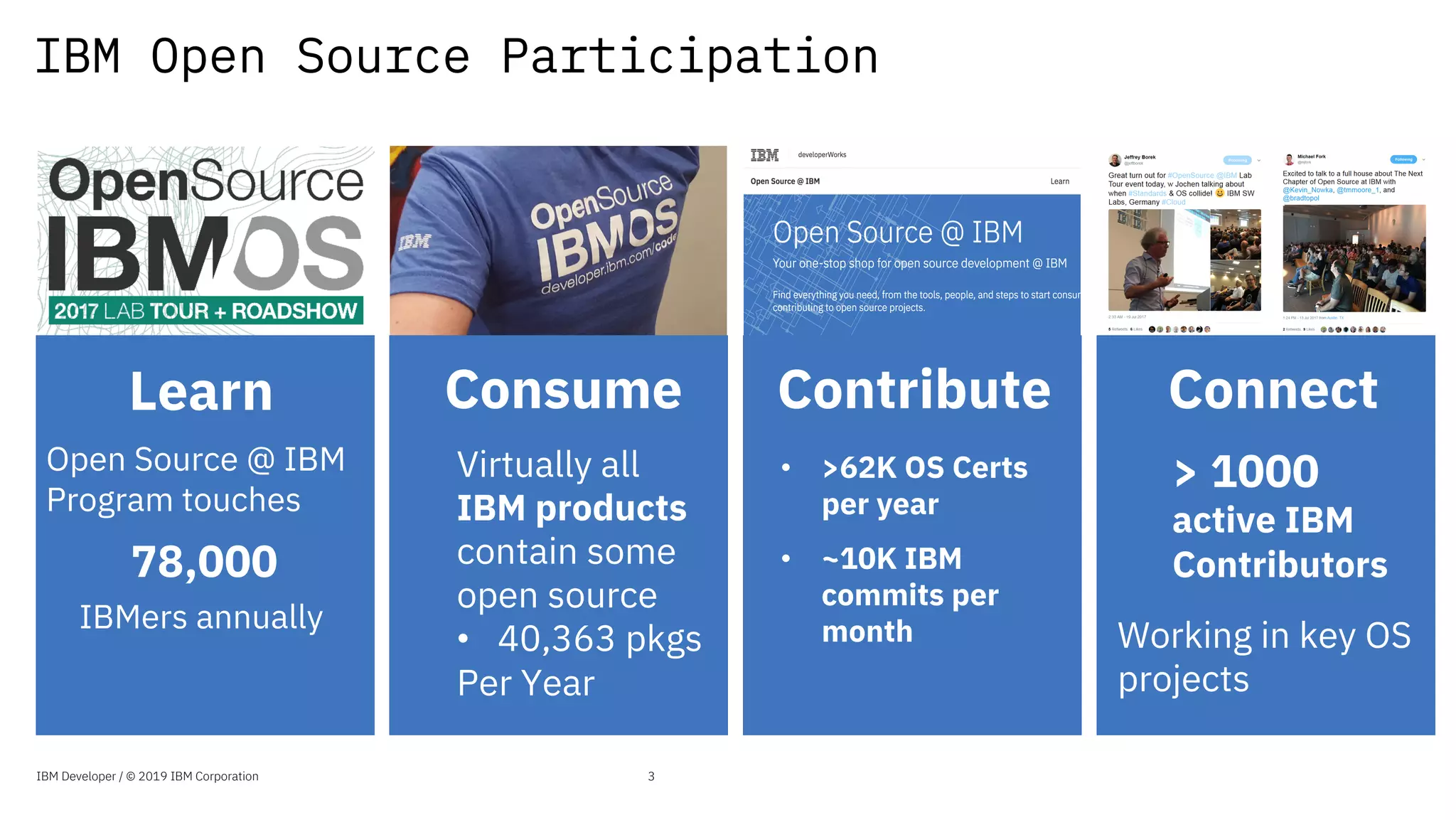

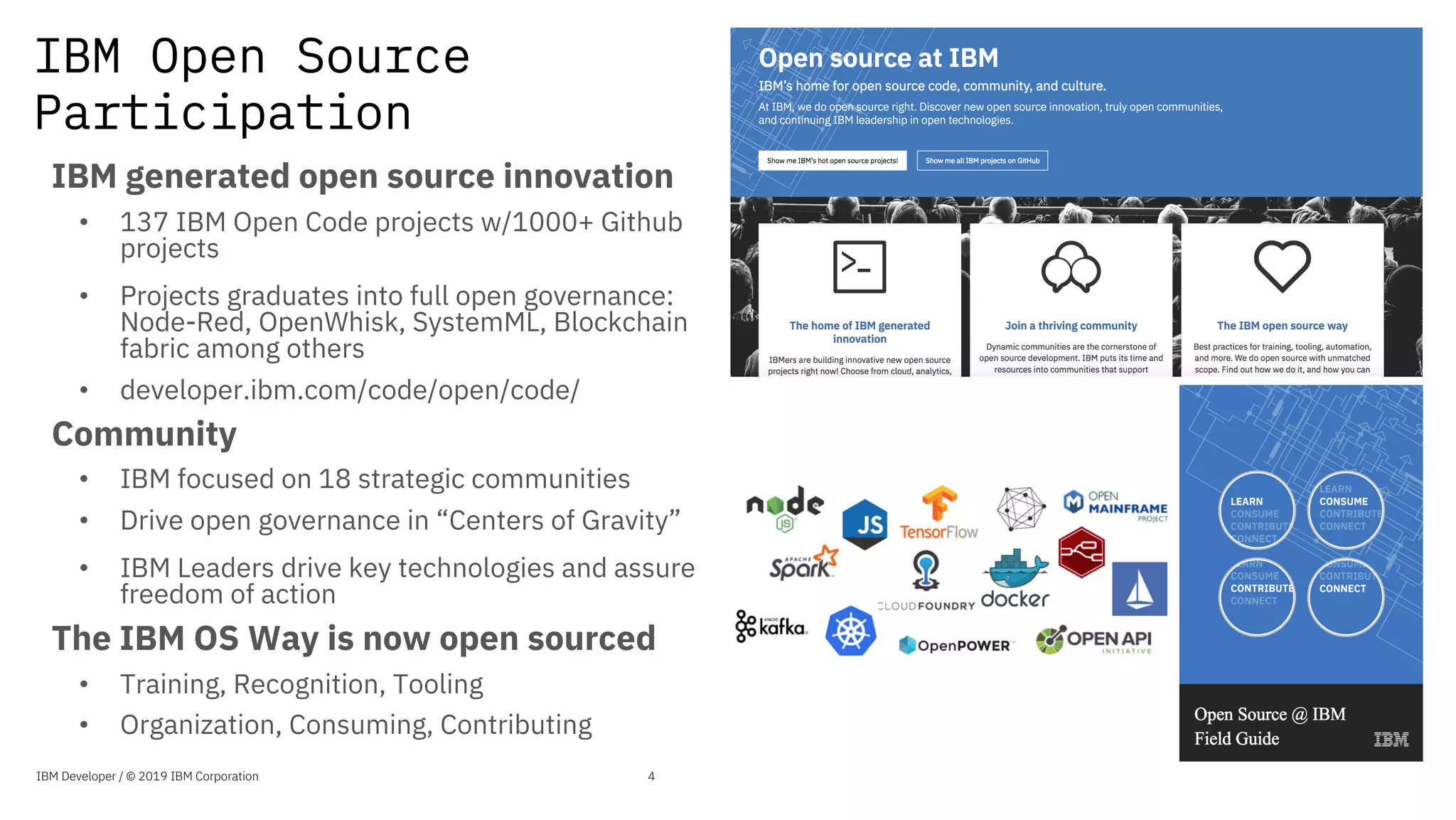

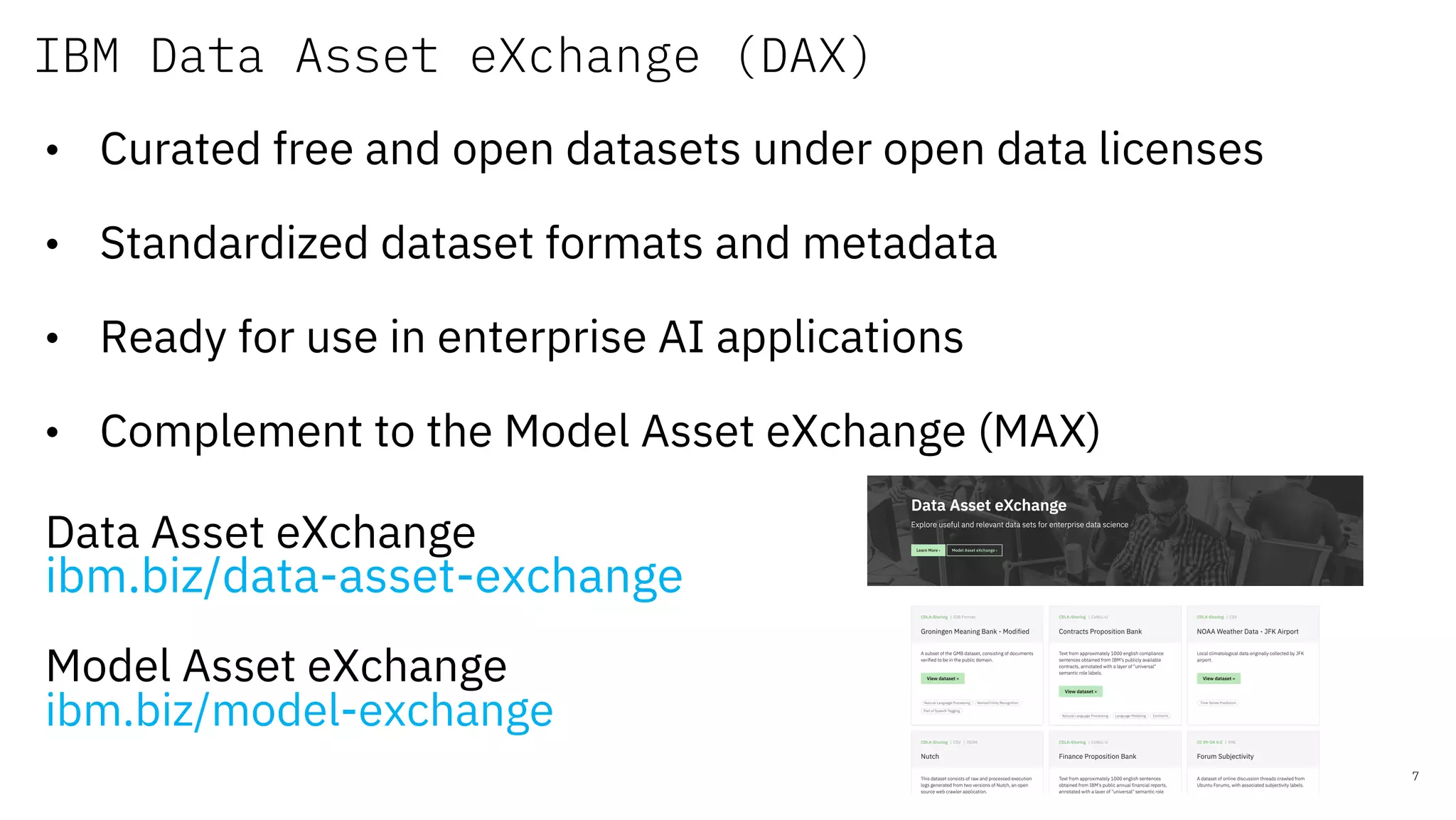

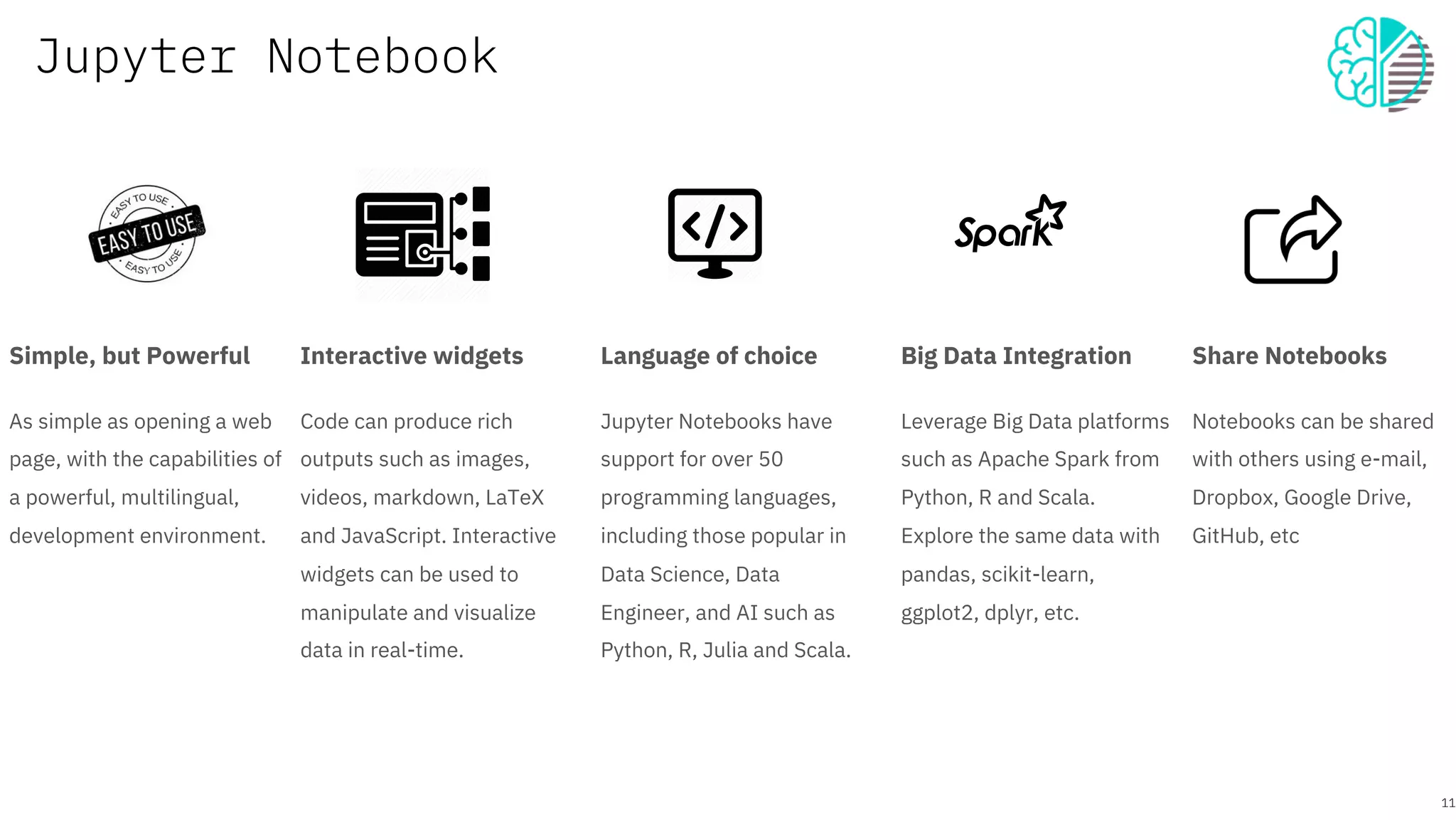

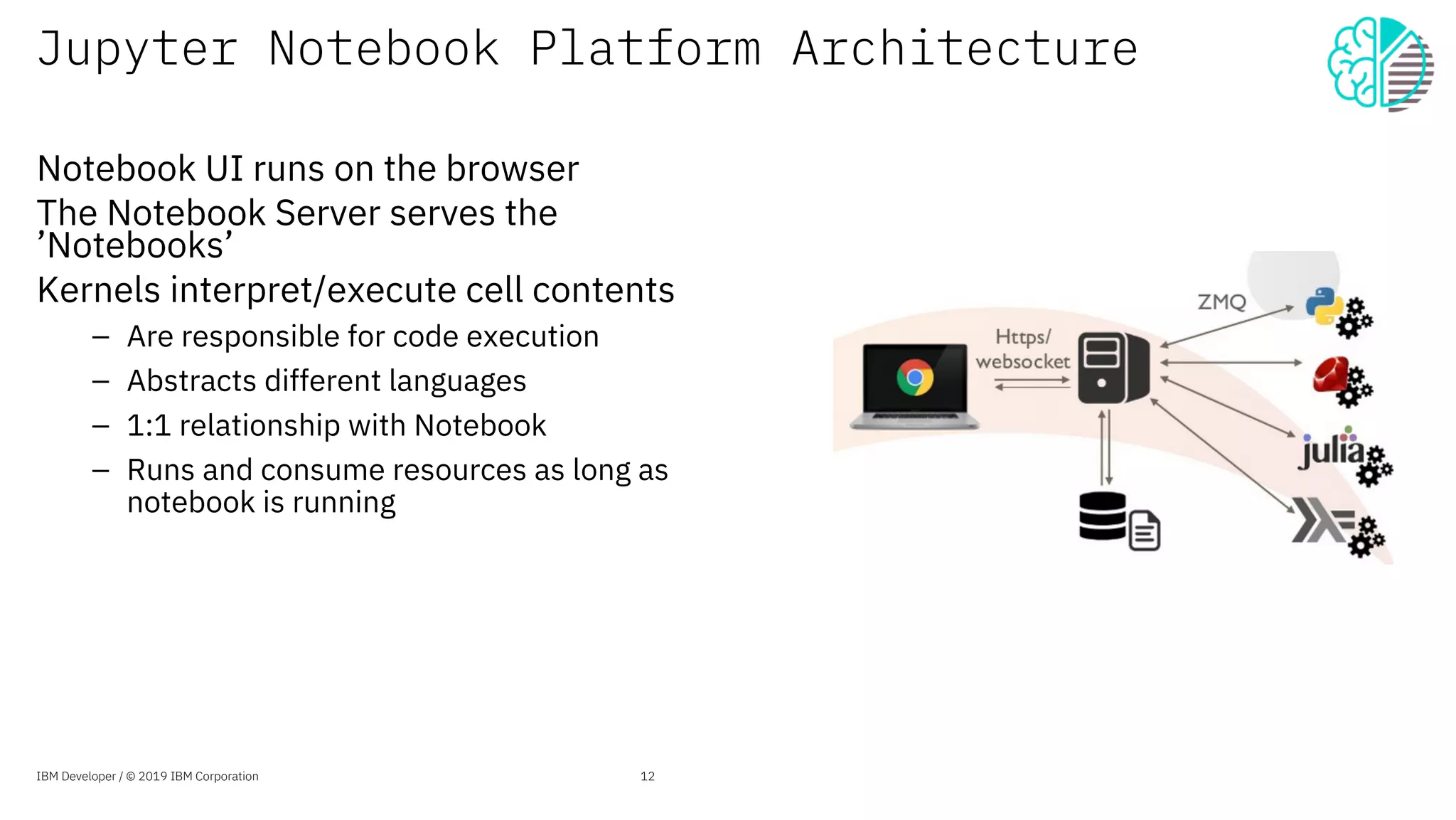

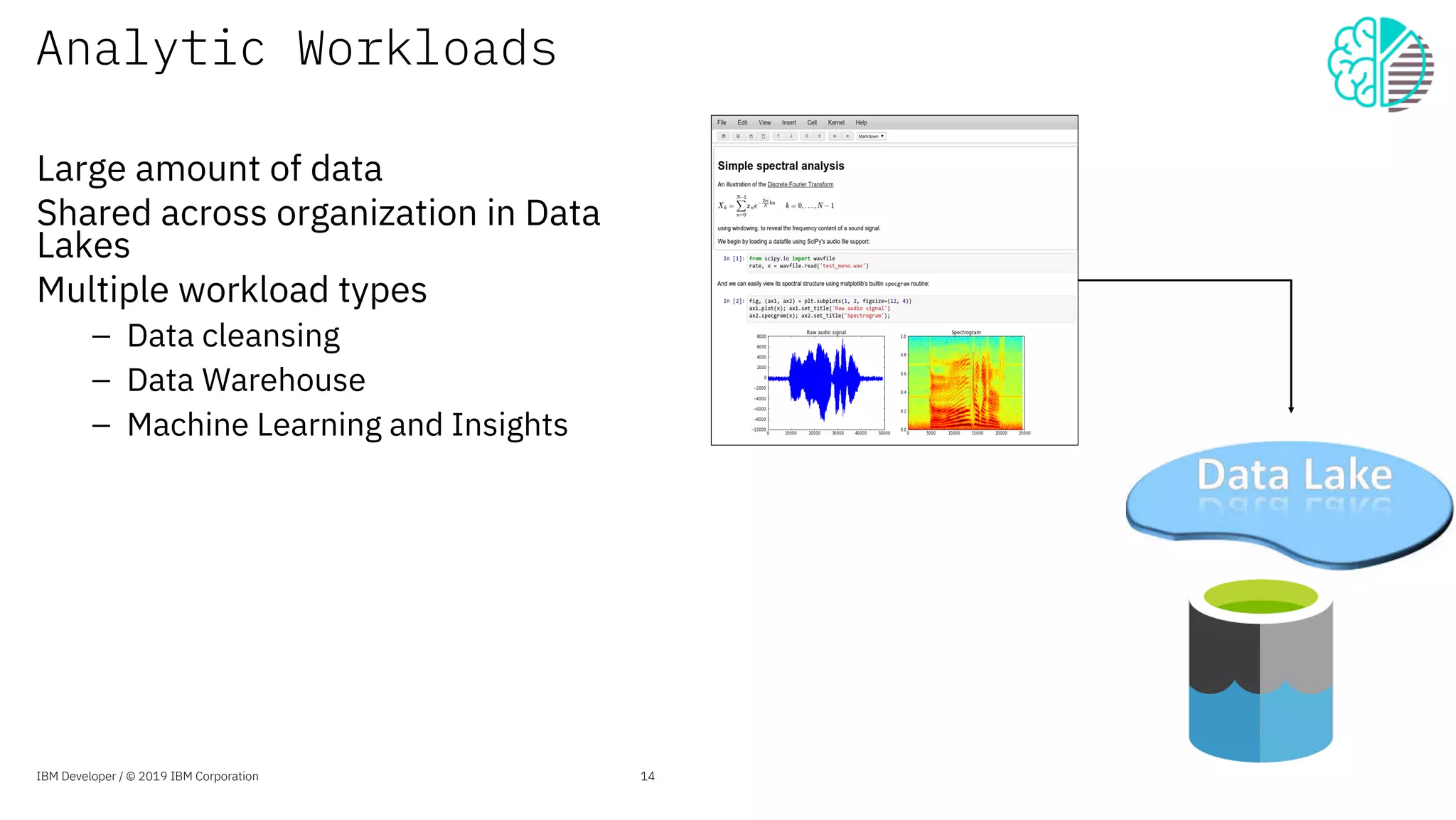

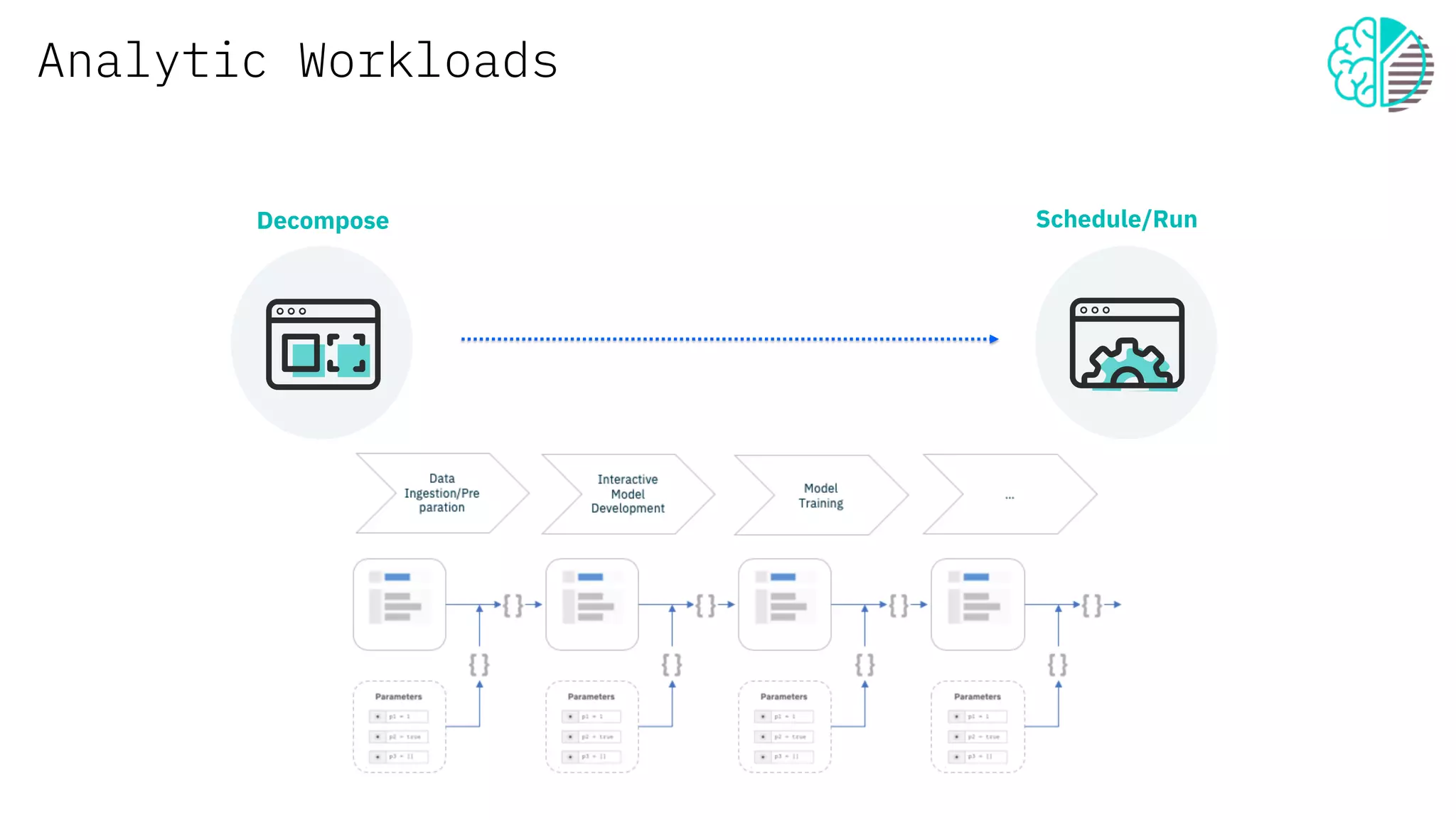

The document outlines the role of Jupyter Notebooks in AI pipelines, emphasizing their interactive capabilities for coding, data visualization, and multi-language support. It discusses IBM's contributions to open source initiatives, the management of AI workloads using platforms like Kubeflow and Apache Airflow, and tools like Papermill for notebook parameterization. Additionally, it highlights the importance of frameworks such as Fabric for Deep Learning in enabling scalable and accessible deep learning solutions.
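Papermill parameterizes a notebook by injecting a new code cell right after the cell tagged `parameters`, so the injected assignments override the defaults defined there. The sketch below shows that idea on a plain nbformat-4 notebook dict using only the standard library. It is not Papermill's API: the helper name `inject_parameters` is hypothetical, it assumes parameter values are simple literals that `repr()` round-trips, and it falls back to the top of the notebook when no `parameters` cell exists.

```python
import copy


def inject_parameters(nb: dict, params: dict) -> dict:
    """Return a copy of an nbformat-4 notebook dict with a parameter cell injected.

    Hypothetical helper sketching the Papermill convention: the new cell is
    placed right after the first cell tagged "parameters".
    """
    out = copy.deepcopy(nb)  # leave the caller's notebook untouched
    # One assignment per parameter; repr() only round-trips simple literals
    # (numbers, strings, lists, dicts), an assumption of this sketch.
    source = "".join(f"{name} = {value!r}\n" for name, value in params.items())
    new_cell = {
        "cell_type": "code",
        "execution_count": None,
        "metadata": {"tags": ["injected-parameters"]},
        "outputs": [],
        "source": source,
    }
    cells = out["cells"]
    # Insert after the first "parameters" cell; otherwise at the very top.
    index = next(
        (
            i + 1
            for i, cell in enumerate(cells)
            if "parameters" in cell.get("metadata", {}).get("tags", [])
        ),
        0,
    )
    cells.insert(index, new_cell)
    return out
```

With Papermill itself, the equivalent step (which also executes the result) is a call such as `papermill.execute_notebook("input.ipynb", "output.ipynb", parameters={"alpha": 0.6})`.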

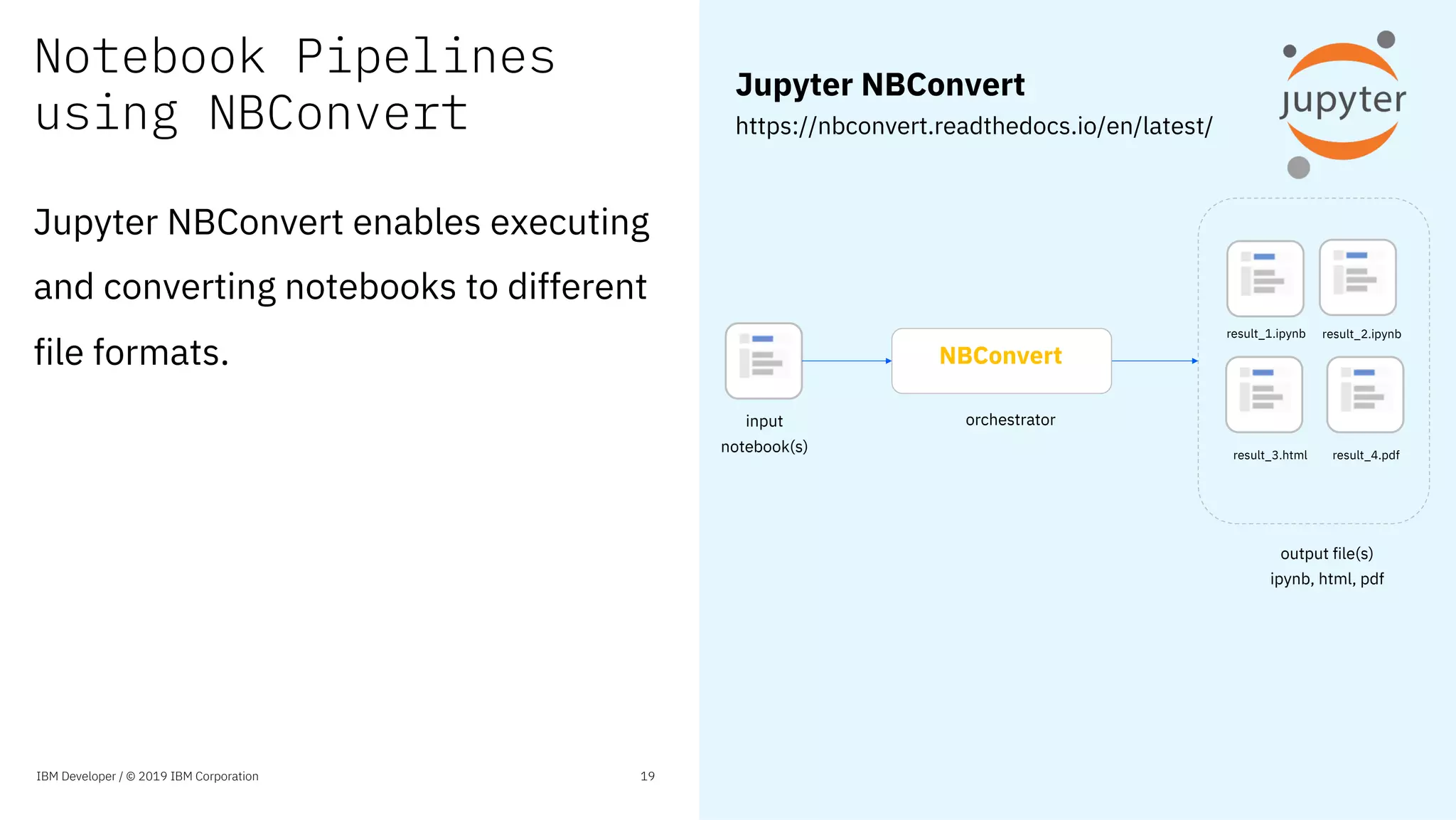

![Notebook Pipelines using NBConvert](https://image.slidesharecdn.com/aipipelinespoweredbyjupyternotebooks-190717043349/75/Ai-pipelines-powered-by-jupyter-notebooks-20-2048.jpg)

Notebook Pipelines using NBConvert

Jupyter NBConvert (https://nbconvert.readthedocs.io/en/latest/) executes notebooks and converts them to other file formats. The following session installs NBConvert, executes `overview_with_run.ipynb` with the `python3` kernel, and writes the results to `overview_with_run.html`:

`$ pip install nbconvert`

`$ jupyter nbconvert --to html --execute overview_with_run.ipynb`

`[NbConvertApp] Converting notebook overview_with_run.ipynb to html`

`[NbConvertApp] Executing notebook with kernel: python3`

`[NbConvertApp] Writing 300558 bytes to overview_with_run.html`

`$ open overview_with_run.html`

Advantages:

- Supports notebook chaining
- Converts results to immutable formats

Limitations:

- No support for parameters

IBM Developer / © 2019 IBM Corporation
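NBConvert can also be driven from Python rather than the CLI. The sketch below uses nbconvert's `ExecutePreprocessor` and `HTMLExporter` classes to do roughly what `jupyter nbconvert --to html --execute overview_with_run.ipynb` does, and illustrates notebook chaining by running several notebooks strictly in order. The helper names `html_path_for`, `execute_to_html`, and `run_chain` are hypothetical; the 600-second cell timeout, the `python3` kernel name, and writing each HTML file next to its notebook are assumptions.

```python
from pathlib import Path


def html_path_for(notebook_path: str) -> str:
    """Name the output <name>.html, placed next to <name>.ipynb (an assumption)."""
    return str(Path(notebook_path).with_suffix(".html"))


def execute_to_html(notebook_path: str, timeout: int = 600) -> str:
    """Execute a notebook with the python3 kernel and export the result to HTML."""
    # Imported lazily so this module still loads where nbconvert is not installed.
    import nbformat
    from nbconvert import HTMLExporter
    from nbconvert.preprocessors import ExecutePreprocessor

    nb = nbformat.read(notebook_path, as_version=4)
    # Run every code cell in order, using the notebook's folder as the working
    # directory; a failing cell raises an exception.
    ExecutePreprocessor(timeout=timeout, kernel_name="python3").preprocess(
        nb, {"metadata": {"path": str(Path(notebook_path).parent)}}
    )
    # Export the executed notebook (code plus outputs) as a static HTML page.
    body, _resources = HTMLExporter().from_notebook_node(nb)
    out_path = html_path_for(notebook_path)
    Path(out_path).write_text(body, encoding="utf-8")
    return out_path


def run_chain(notebook_paths: list[str]) -> list[str]:
    """Chain notebooks: execute them in order, stopping at the first failure."""
    return [execute_to_html(path) for path in notebook_paths]
```

A call such as `run_chain(["prepare.ipynb", "train.ipynb"])` (hypothetical file names) executes the second notebook only if the first succeeds. Because nothing here passes values into a notebook, the sketch shares the limitation listed above: there is no support for parameters.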