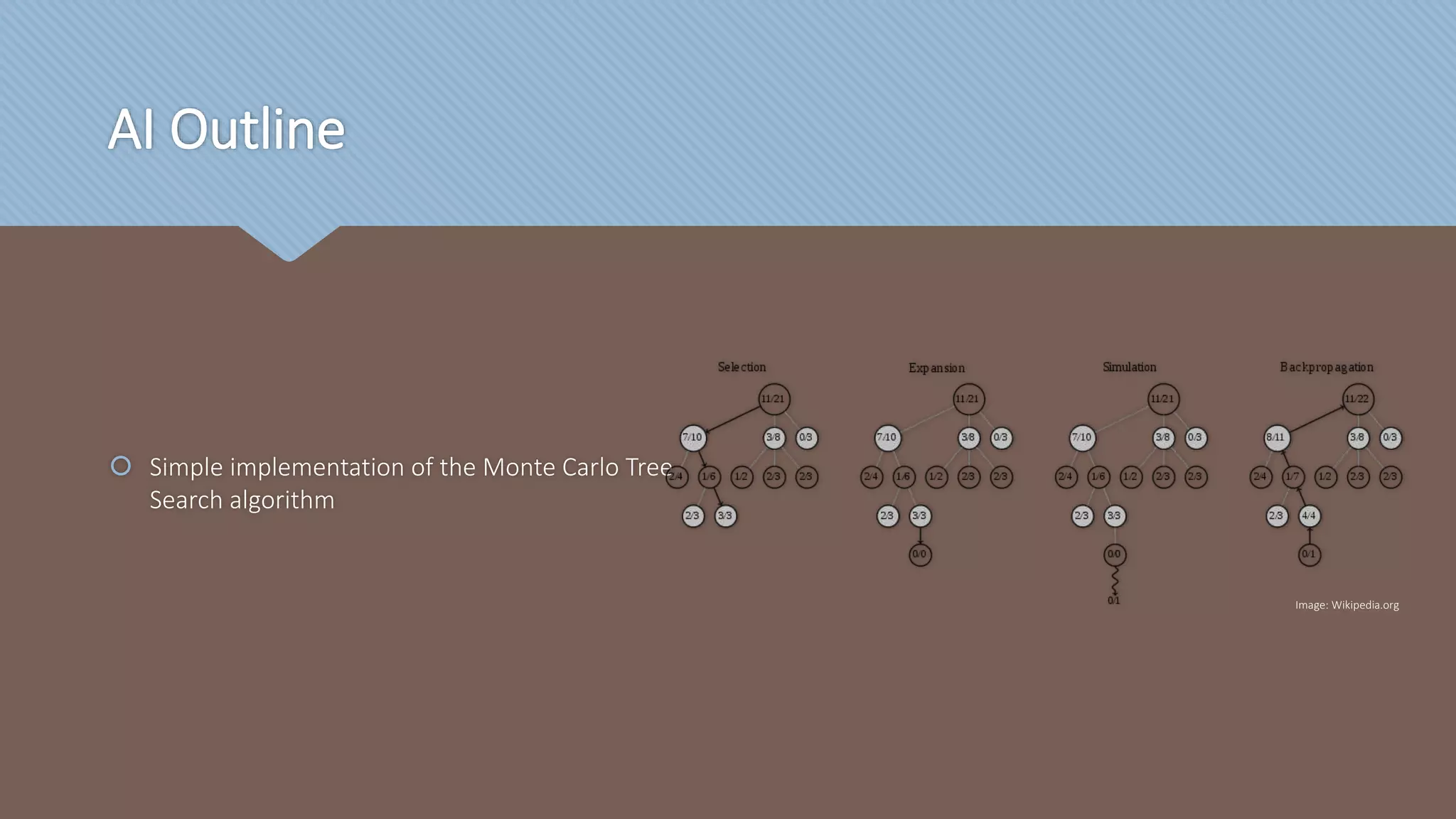

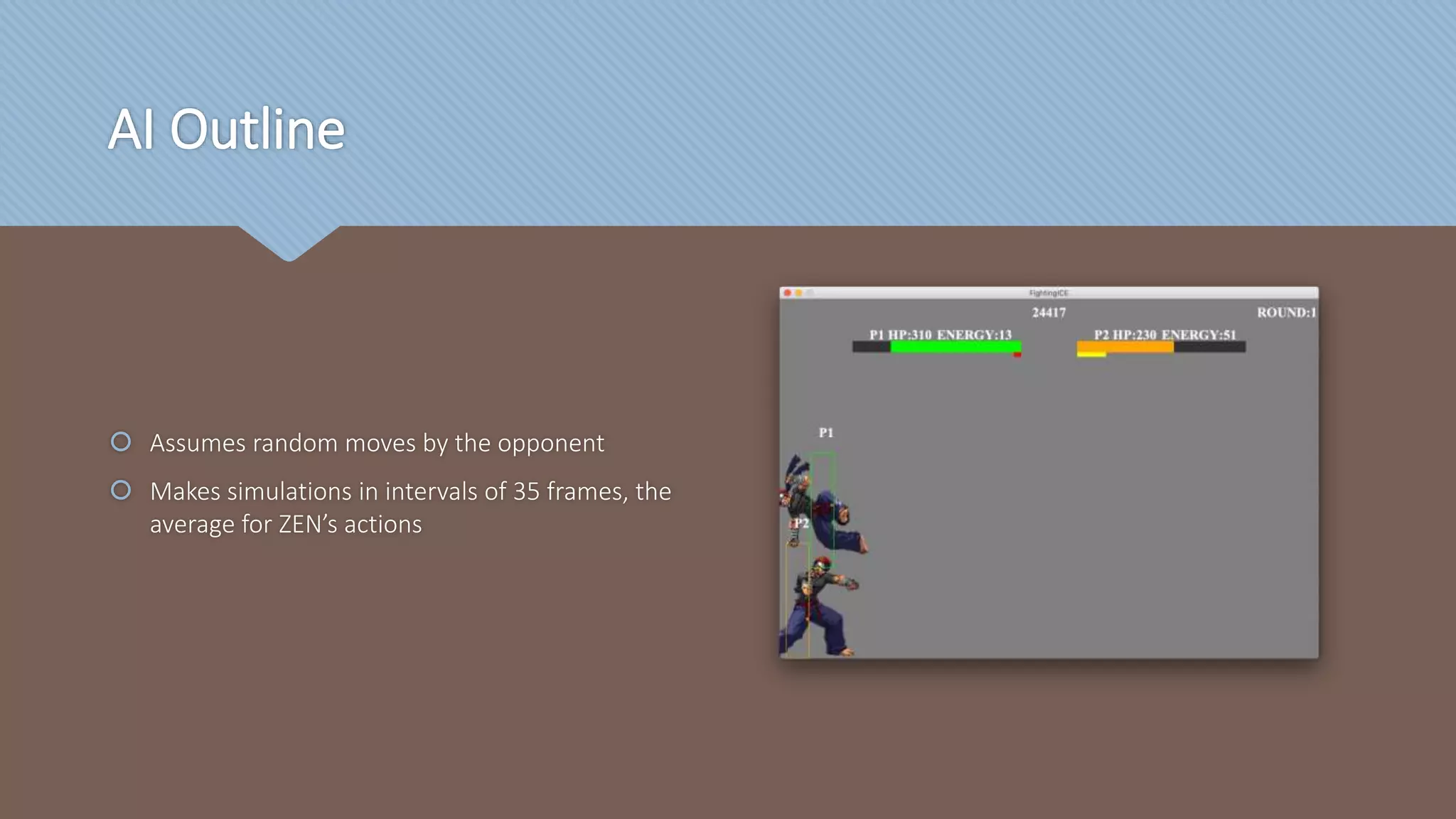

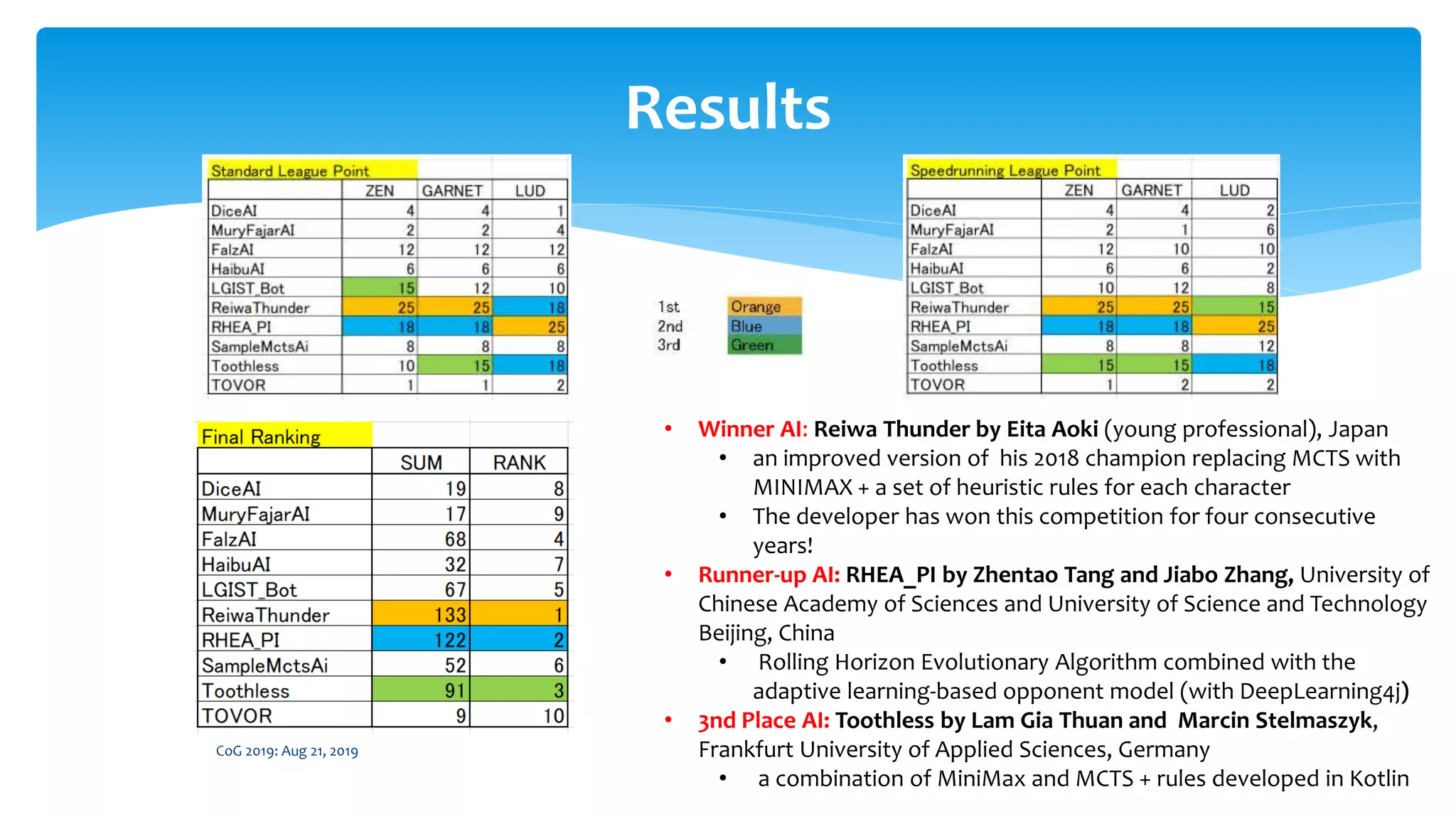

In the 2019 Fighting Game AI Competition, teams developed AI agents to compete in fighting-game matches, using techniques such as Monte Carlo Tree Search and heuristic rules. The winning AI, 'Reiwa Thunder', combined minimax with heuristics, giving its developer a fourth consecutive victory. The event highlighted ongoing research, tournament results, and advances in AI performance against unseen opponents.

![Reference

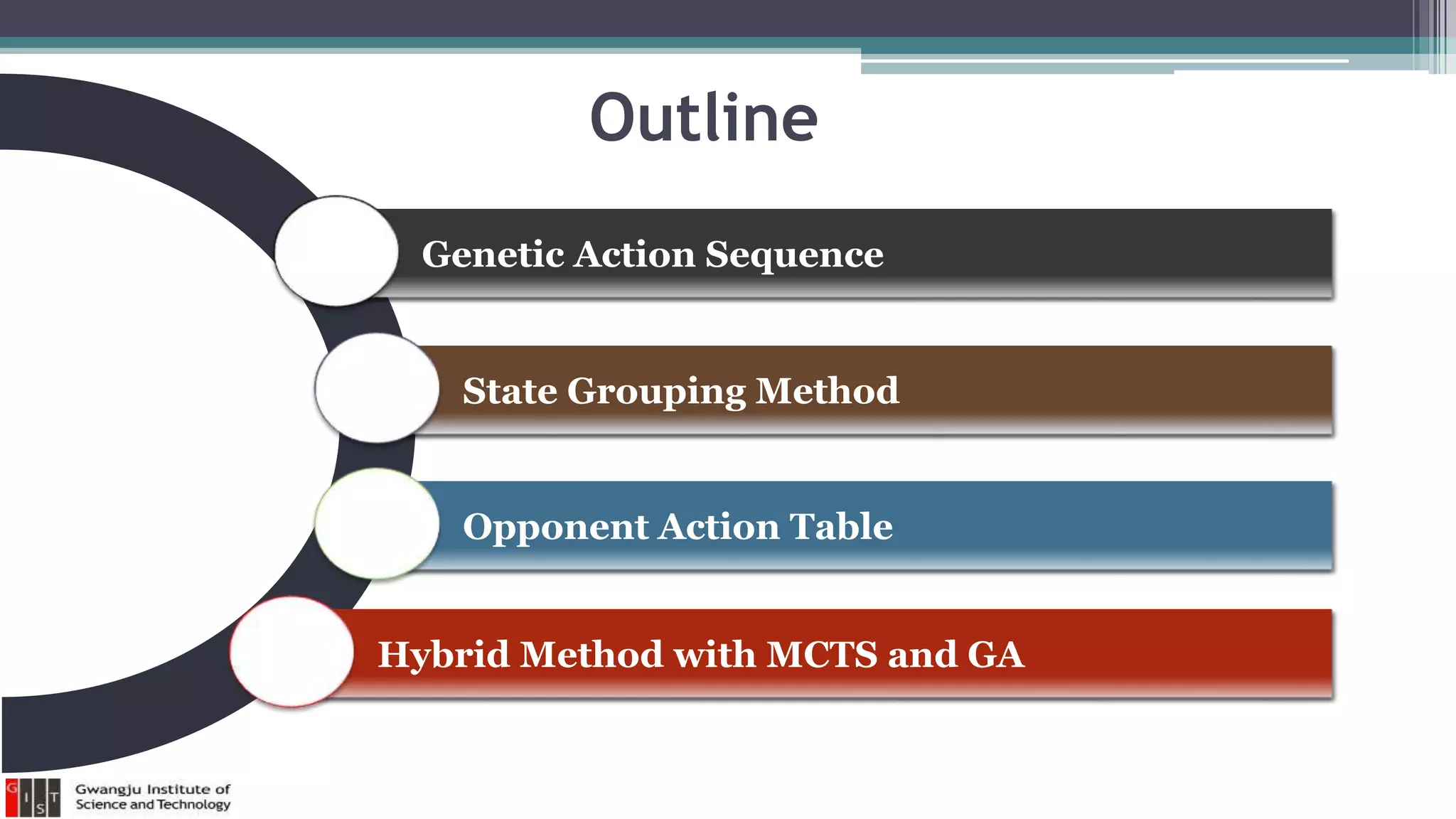

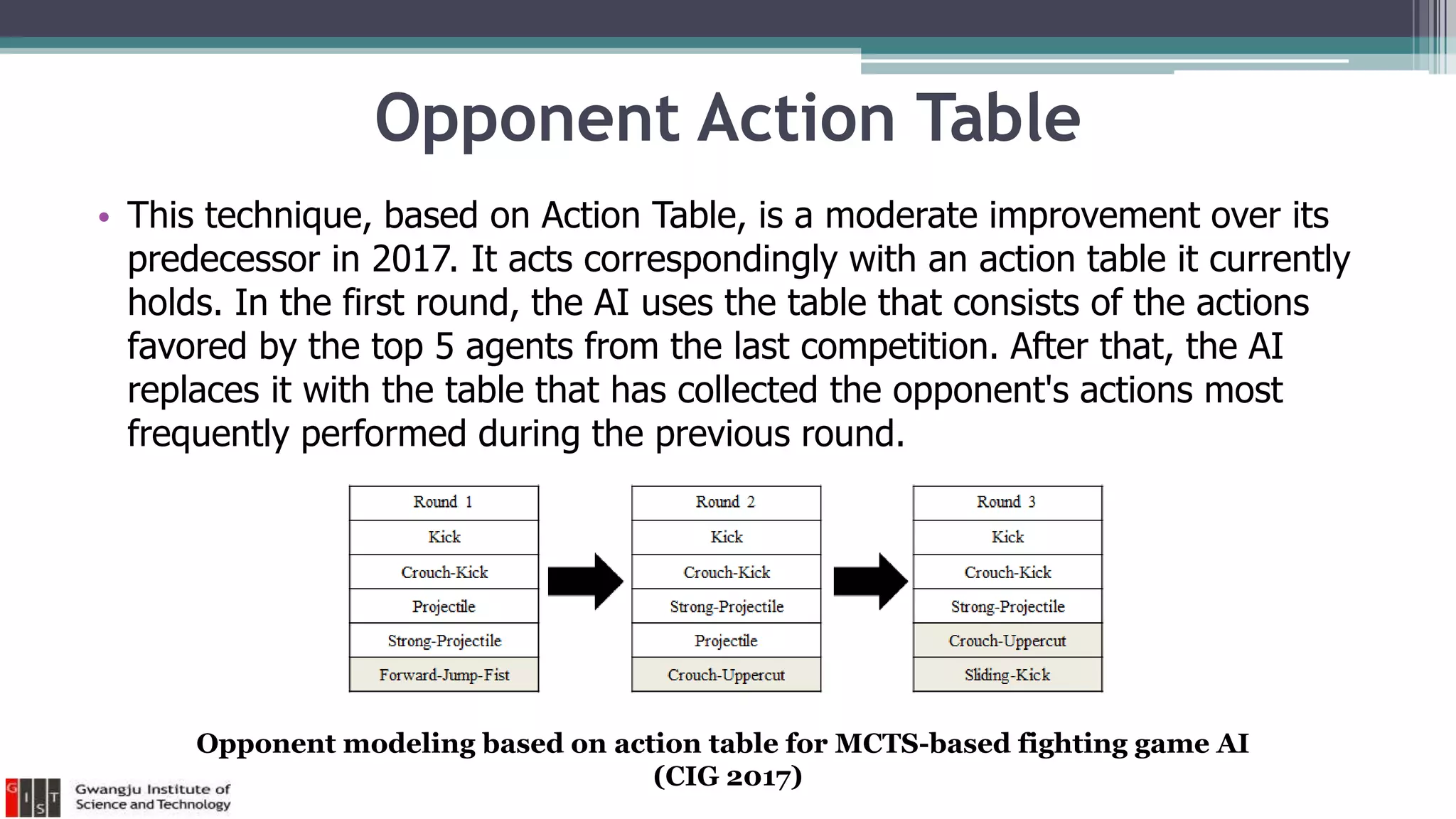

[1] Opponent modeling based on action table for MCTS-based

fighting game AI, CIG 2017 - (Link)

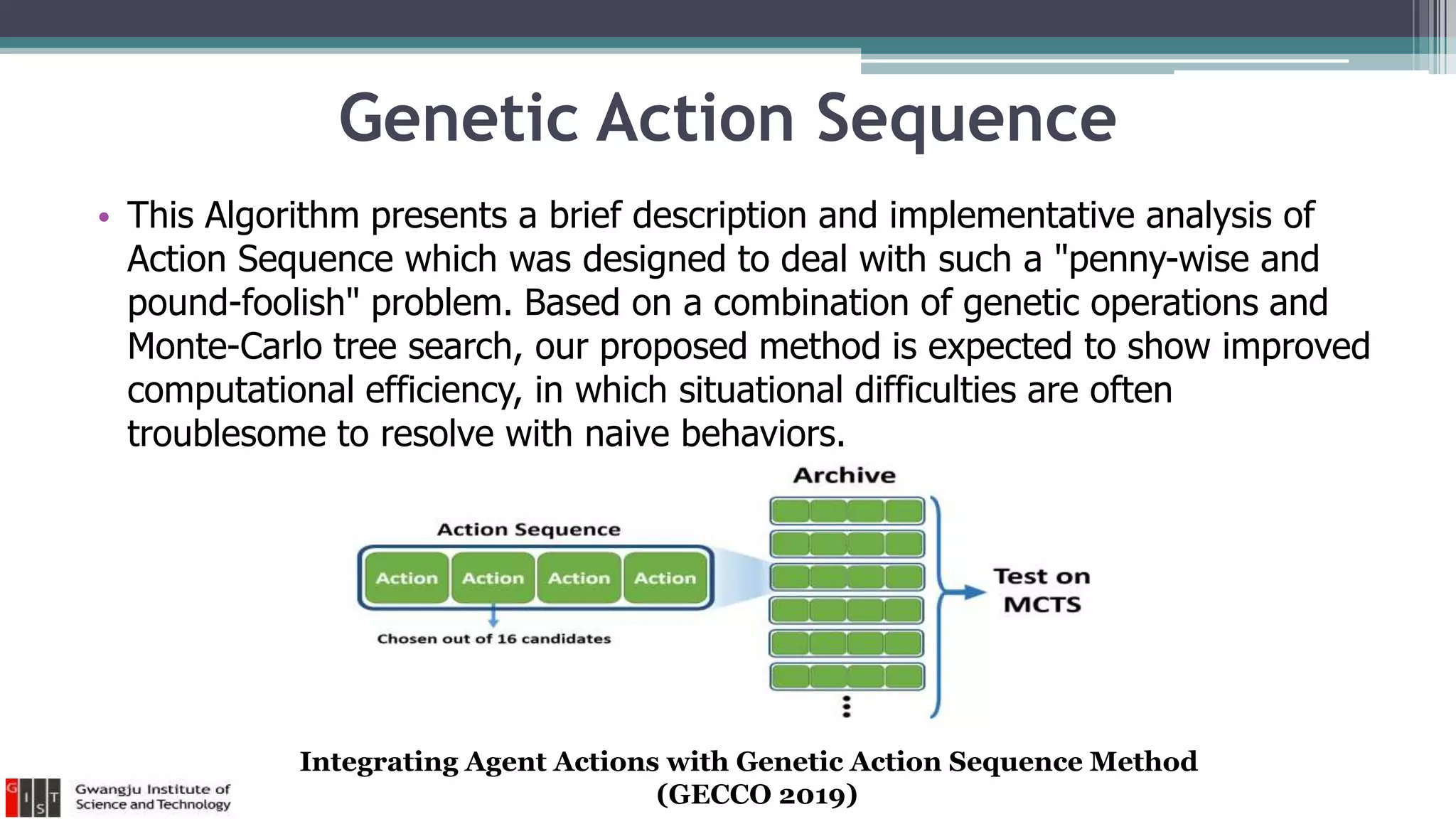

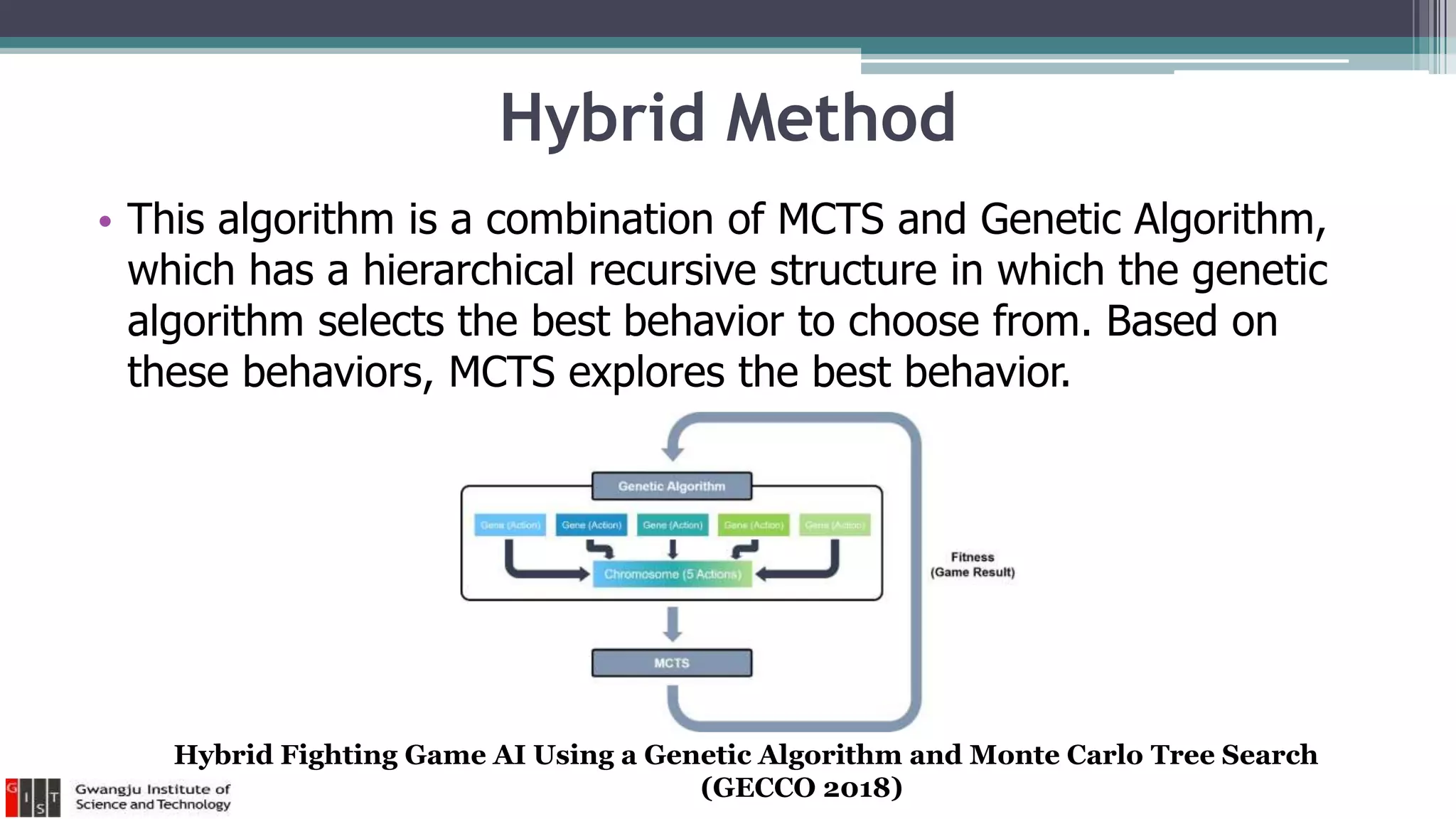

[2] Hybrid fighting game AI using a genetic algorithm and Monte

Carlo tree search, GECCO 2018 – (Link)

[3] Integrating agent actions with genetic action sequence method,

GECCO 2019 – (Link)](https://image.slidesharecdn.com/2019-fighting-game-artificial-intelligence-competitionforupload-190908082601/75/2019-Fighting-Game-AI-Competition-33-2048.jpg)