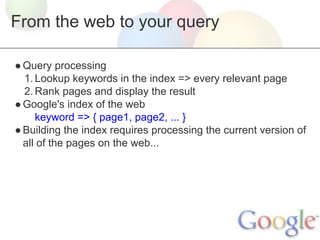

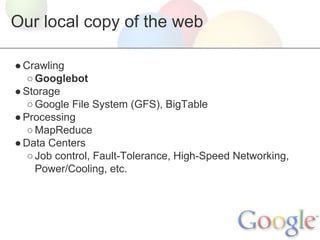

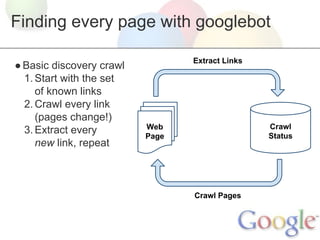

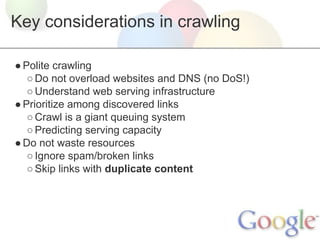

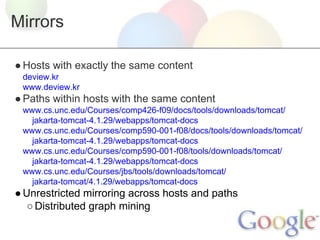

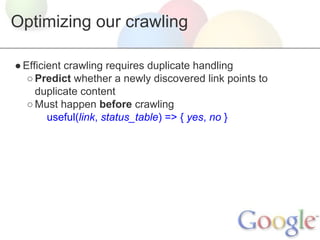

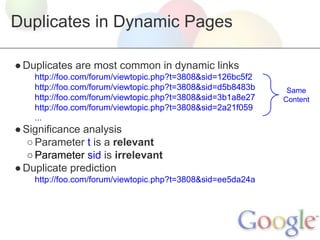

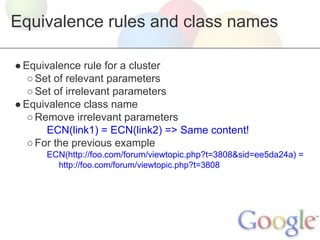

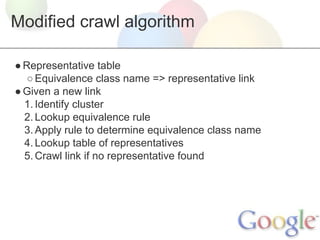

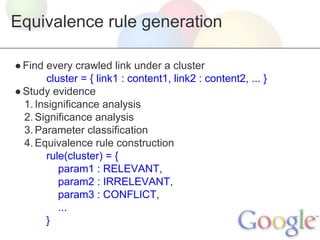

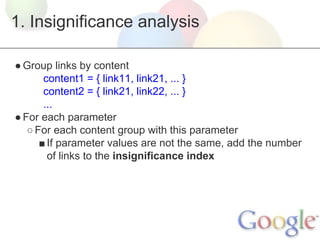

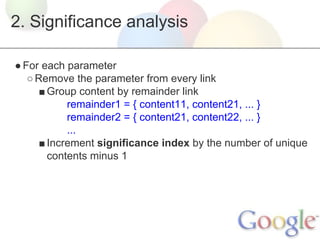

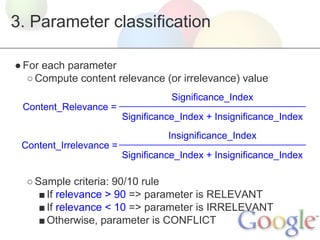

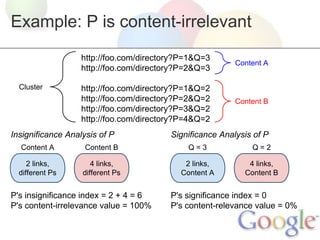

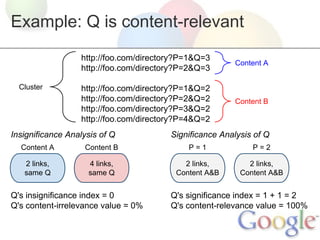

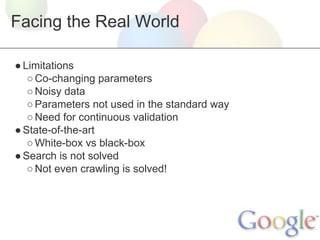

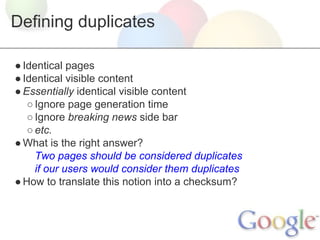

Google uses Googlebot to crawl the web and build an index of web pages. Googlebot crawls billions of pages to build a copy of the web, extracting the links from each page and prioritizing which of them to crawl next. To crawl at this scale efficiently, Google developed techniques for predicting duplicate content, so that Googlebot does not waste resources fetching the same content repeatedly under different URLs. One such technique analyzes the parameters in URLs to determine which ones change a page's content and which are irrelevant to it. URLs that differ only in irrelevant parameters likely lead to the same content, so Googlebot can flag them as probable duplicates without crawling them.
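The paragraph does not spell out how the parameter analysis works. What follows is a minimal sketch of one way to do it, not Google's actual method. It assumes that an exact content hash is a good enough duplicate signal. It treats a parameter as irrelevant when URLs that differ only in that parameter's value always return the same content in an already-crawled sample. It then canonicalizes new URLs by dropping those parameters. The function names (`learn_irrelevant_params`, `canonicalize`, `is_likely_duplicate`) and the sample URLs are hypothetical.

```python
from collections import defaultdict
from hashlib import sha256
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def fingerprint(content: str) -> str:
    """Hash page content; equal hashes are treated as duplicate pages (assumption)."""
    return sha256(content.encode("utf-8")).hexdigest()


def learn_irrelevant_params(crawled: dict[str, str]) -> set[str]:
    """Return parameter names that never changed content in the crawled sample.

    For each parameter, URLs are grouped by everything *except* that parameter.
    A parameter counts as irrelevant only if some group shows it taking several
    values (evidence) and no group shows those values yielding different content.
    """
    # parameter name -> (scheme, host, path, other params) -> [(value, fingerprint)]
    groups = defaultdict(lambda: defaultdict(list))
    for url, content in crawled.items():
        parts = urlsplit(url)
        params = parse_qsl(parts.query, keep_blank_values=True)
        fp = fingerprint(content)
        for name, value in params:
            rest = tuple(sorted((k, v) for k, v in params if k != name))
            key = (parts.scheme, parts.netloc, parts.path, rest)
            groups[name][key].append((value, fp))

    irrelevant = set()
    for name, by_key in groups.items():
        varied = False            # saw this parameter take more than one value
        changes_content = False   # ...and the content differed between values
        for entries in by_key.values():
            values = {v for v, _ in entries}
            fps = {f for _, f in entries}
            if len(values) > 1:
                varied = True
                if len(fps) > 1:
                    changes_content = True
        if varied and not changes_content:
            irrelevant.add(name)
    return irrelevant


def canonicalize(url: str, irrelevant: set[str]) -> str:
    """Drop irrelevant parameters and sort the rest; the fragment is dropped too,
    since it is never sent to the server."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k not in irrelevant
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def is_likely_duplicate(url: str, seen: set[str], irrelevant: set[str]) -> bool:
    """Predict a duplicate, without fetching, if the canonical form was already crawled."""
    return canonicalize(url, irrelevant) in seen


if __name__ == "__main__":
    # Hypothetical sample: "sessionid" varies without changing content,
    # while "page" selects different content.
    sample = {
        "https://example.com/list?page=1&sessionid=aaa": "items 1-10",
        "https://example.com/list?page=1&sessionid=bbb": "items 1-10",
        "https://example.com/list?page=2&sessionid=aaa": "items 11-20",
    }
    irrelevant = learn_irrelevant_params(sample)
    print(sorted(irrelevant))  # ['sessionid']
    seen = {canonicalize(u, irrelevant) for u in sample}
    print(is_likely_duplicate("https://example.com/list?sessionid=zzz&page=2", seen, irrelevant))  # True
    print(is_likely_duplicate("https://example.com/list?page=3&sessionid=aaa", seen, irrelevant))  # False
```

The evidence requirement keeps the sketch conservative. A parameter seen with only one value is never marked irrelevant. As a result, the crawler skips a URL only when the sample actually supports the prediction.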