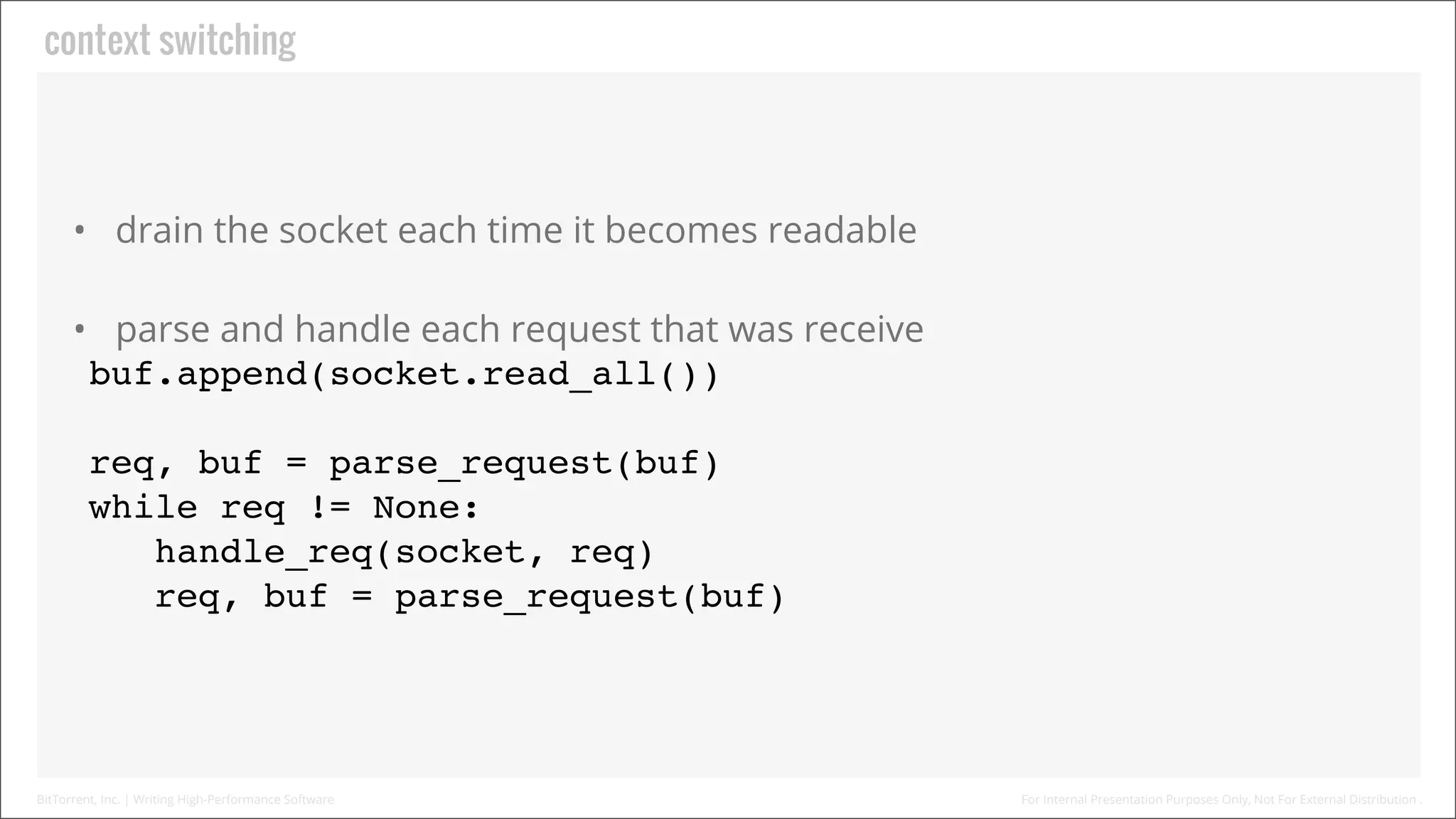

The document discusses techniques for writing high-performance software, focusing on optimizing memory access and reducing context switching. It covers CPU memory hierarchies, data structures, and socket programming. Some key points include organizing data sequentially in memory to improve cache hits, batching work to amortize context switching costs, and using asynchronous I/O to avoid blocking threads on disk or network operations.

![Data Structures

Padding

struct A [24 Bytes]

0: [int : 4] a

--- 4 Bytes padding --8: [void* : 8] b

16: [int : 4] c

--- 4 Bytes padding ---

struct A {

! int a;

! void* b;

! int c;

};

struct

0:

8:

12:

struct B {

! void* b;

! int a;

! int c;

};

B [16 Bytes]

[void* : 8] b

[int : 4] a

[int : 4] c

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-24-2048.jpg)

![Socket Programming

kevent ev[100];

int events = kevent(queue, nullptr

, 0, ev, 100, nullptr);

for (int i = 0; i < events; ++i) {

int size = read(ev[i].ident, buffer

, buffer_size);

/* ... */

}

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-43-2048.jpg)

![Socket Programming

Wait for the socket to

become readable

kevent ev[100];

int events = kevent(queue, nullptr

, 0, ev, 100, nullptr);

for (int i = 0; i < events; ++i) {

int size = read(ev[i].ident, buffer

, buffer_size);

/* ... */

}

Copy data from kernel

space to user space

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-44-2048.jpg)

![Socket Programming

WSABUF b = { buffer_size, buffer };

DWORD transferred = 0, flags = 0;

WSAOVERLAPPED ov; // [ initialization ]

int ret = WSARecv(s, &b, 1, &transferred

, &flags, &ov, nullptr);

WSAOVERLAPPED* ol;

ULONG_PTR* key;

BOOL r = GetQueuedCompletionStatus(port

, &transferred, &key, &ol, INFINITE);

ret = WSAGetOverlappedResult(s, &ov

, &transferred, false, &flags);

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-45-2048.jpg)

![Socket Programming

Initiate async. read into

buffer

WSABUF b = { buffer_size, buffer };

DWORD transferred = 0, flags = 0;

WSAOVERLAPPED ov; // [ initialization ]

int ret = WSARecv(s, &b, 1, &transferred

, &flags, &ov, nullptr);

WSAOVERLAPPED* ol;

Wait

ULONG_PTR* key;

BOOL r = GetQueuedCompletionStatus(port

, &transferred, &key, &ol, INFINITE);

ret = WSAGetOverlappedResult(s, &ov

, &transferred, false, &flags);

BitTorrent, Inc. | Writing High-Performance Software

for operations to

complete

Query status

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-46-2048.jpg)

![Message Queues

• Events on message queues may come in batches

• Example: we receive one message per 16 kiB block read from disk.

void conn::on_disk_read(buffer const& buf) {

m_socket.write(&buf[0], buf.size());

}

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-52-2048.jpg)

![Message Queues

void conn::on_disk_read(buffer const& buf) {

m_buf.insert(m_buf.end(), buf);

if (m_has_flush_msg) return;

m_has_flush_msg = true;

m_queue.post(std::bind(&conn::flush

, this));

}

void conn::flush() {

m_socket.write(&m_buf[0], m_buf.size());

}

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-54-2048.jpg)

![Message Queues

Instead of writing to the socket,

accumulate the buffers

void conn::on_disk_read(buffer const& buf) {

m_buf.insert(m_buf.end(), buf);

if (m_has_flush_msg) return;

m_has_flush_msg = true;

m_queue.post(std::bind(&conn::flush

, this));

}

If there is no outstanding flush

message, post one

void conn::flush() {

m_socket.write(&m_buf[0], m_buf.size());

}

Flush all buffers when all messages have been handled

BitTorrent, Inc. | Writing High-Performance Software

For Internal Presentation Purposes Only, Not For External Distribution .](https://image.slidesharecdn.com/performancesoftware-140203140032-phpapp02/75/Writing-High-Performance-Software-by-Arvid-Norberg-55-2048.jpg)