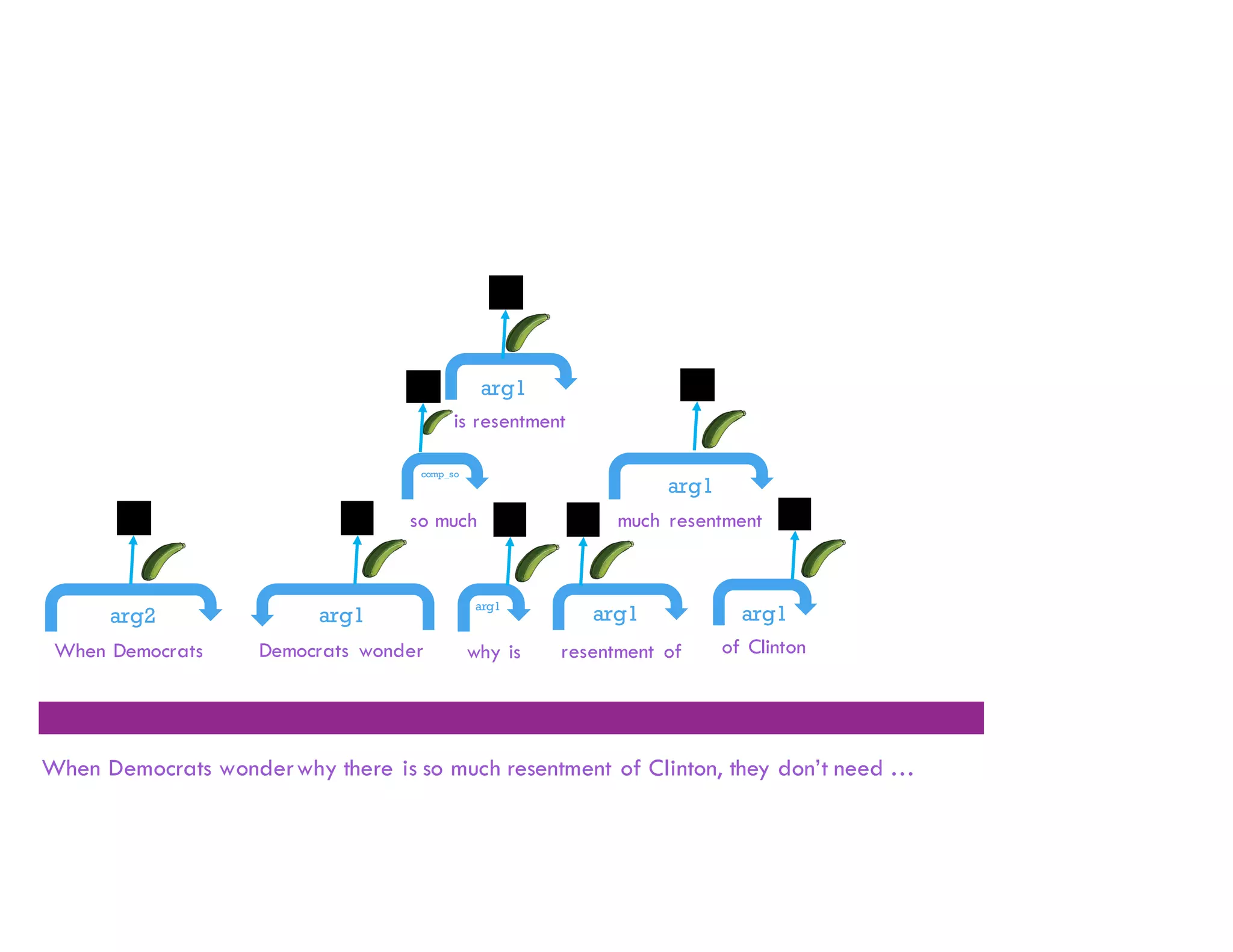

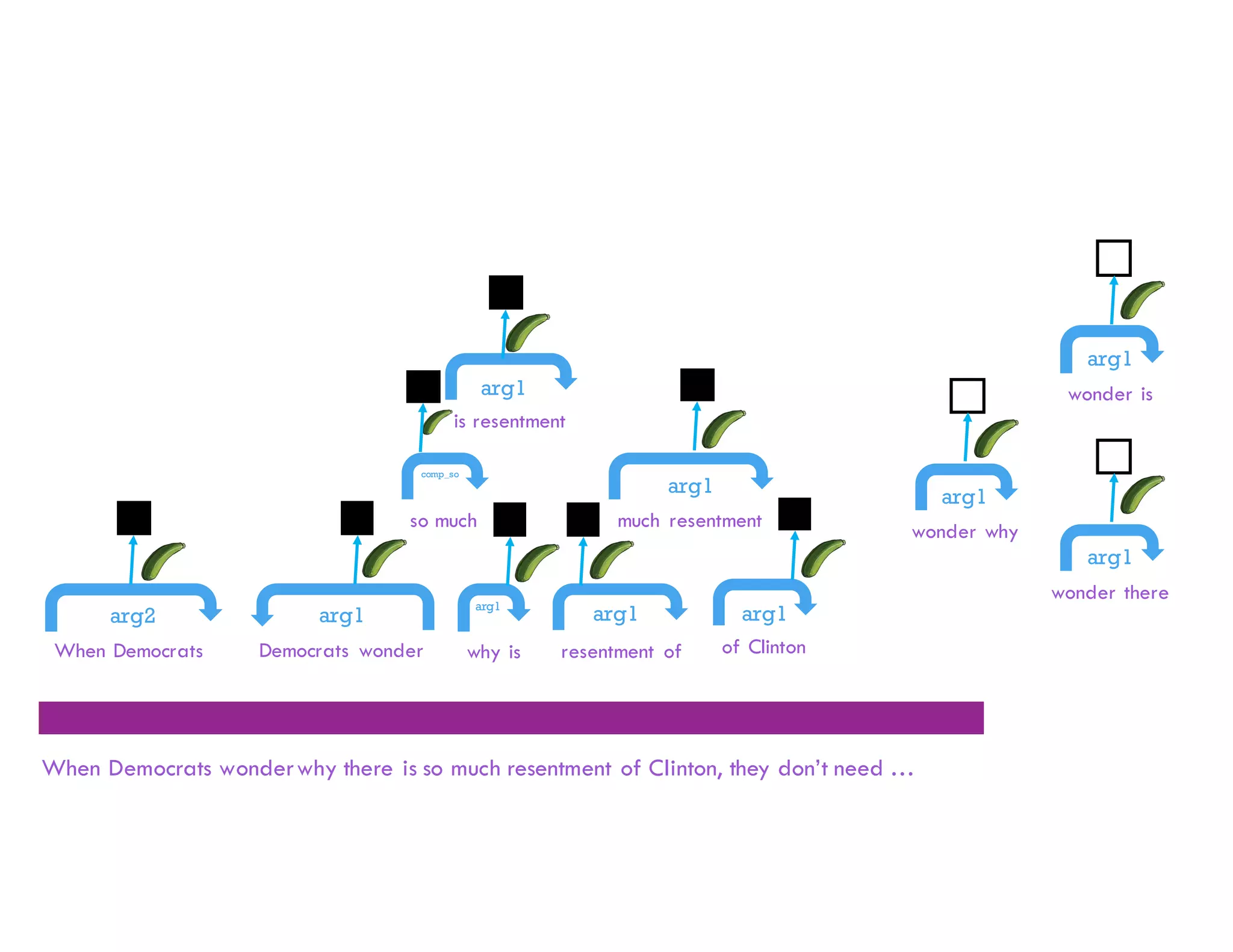

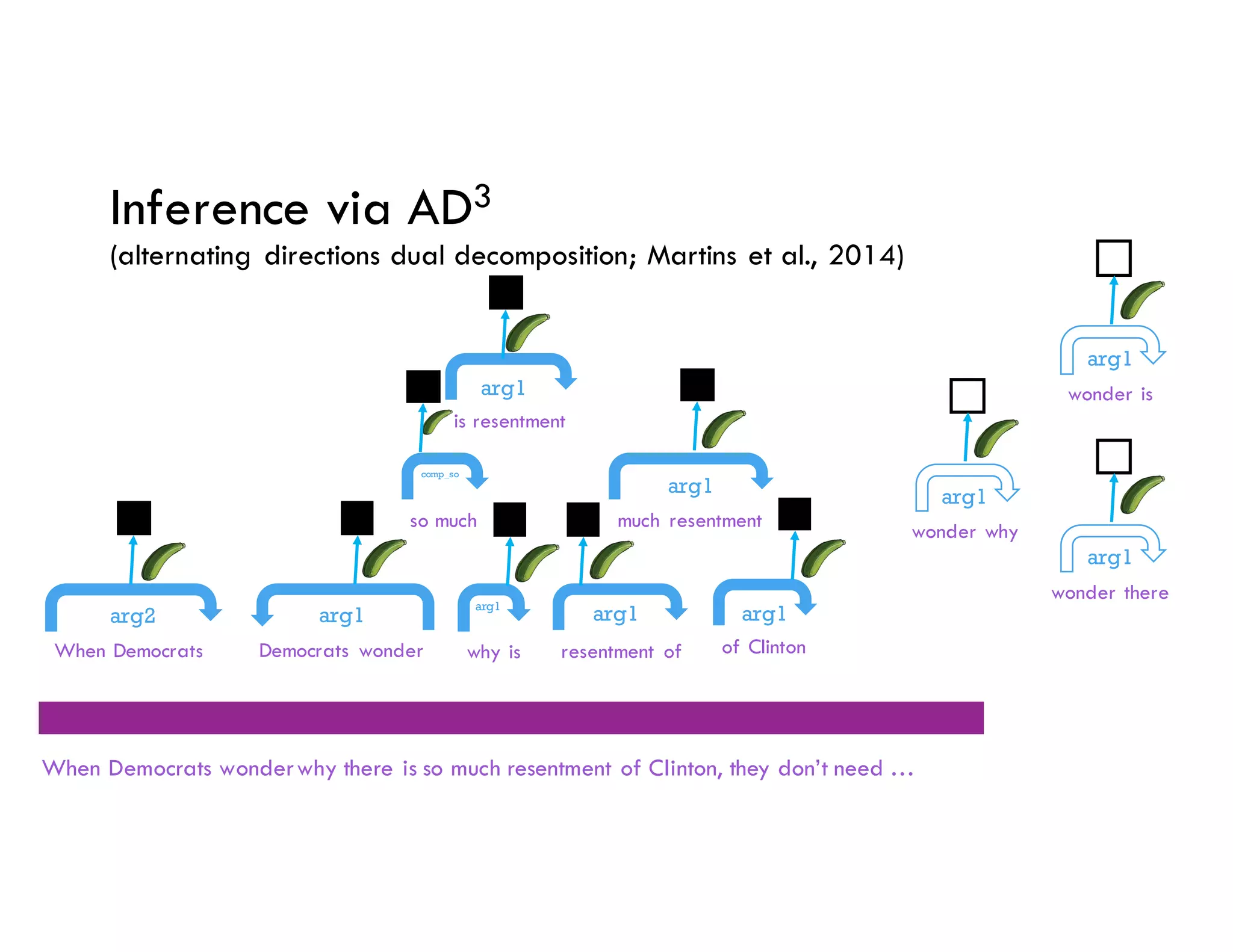

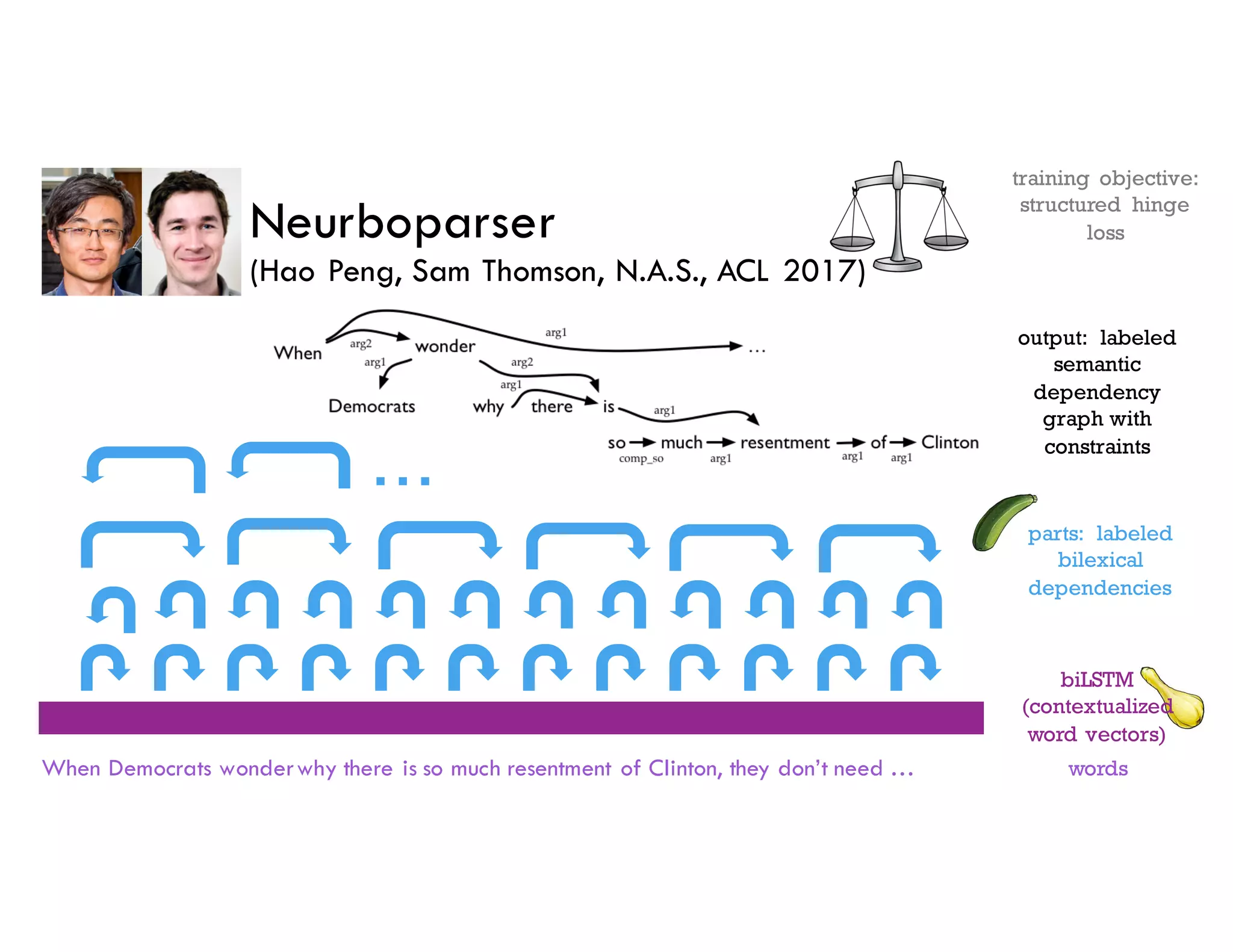

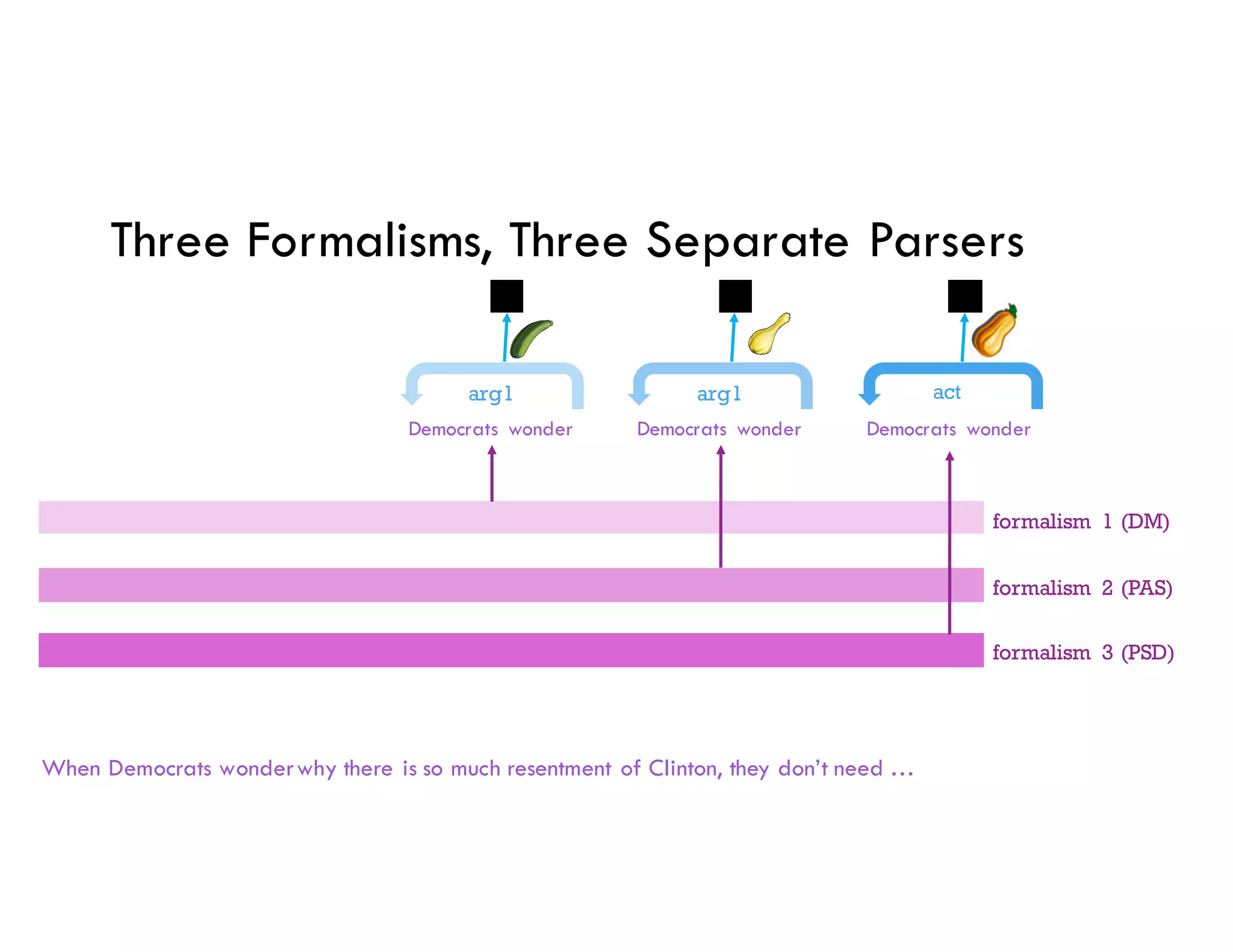

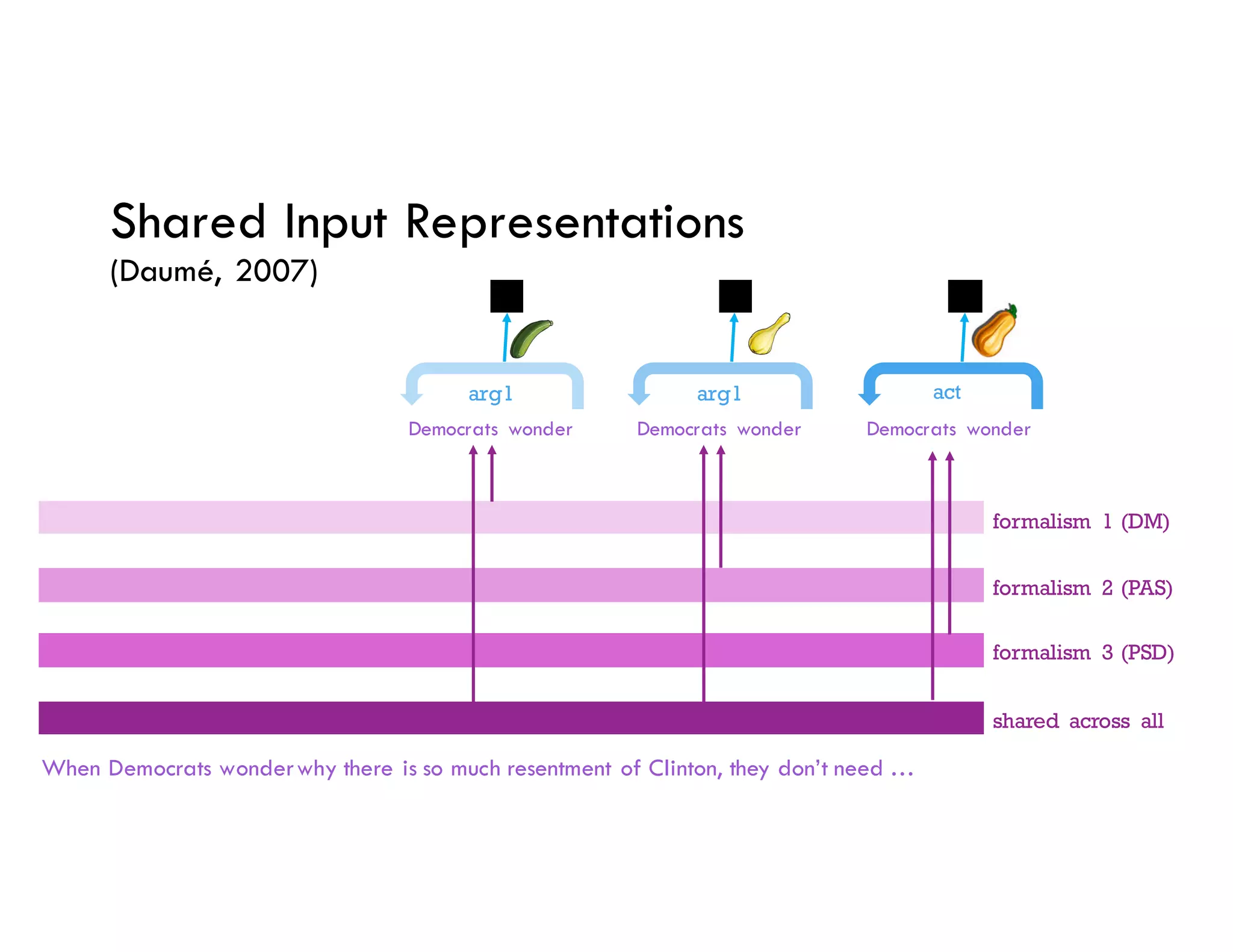

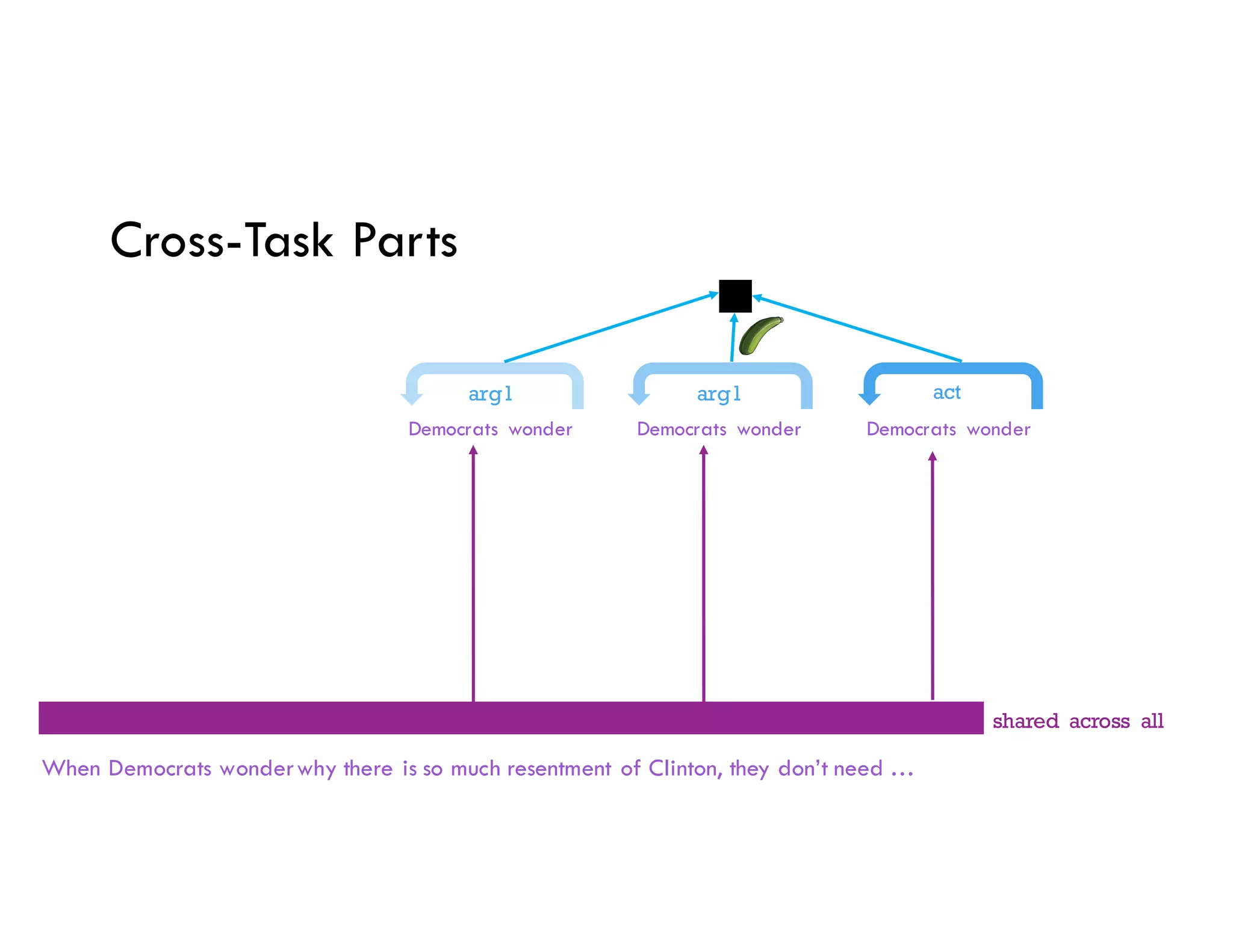

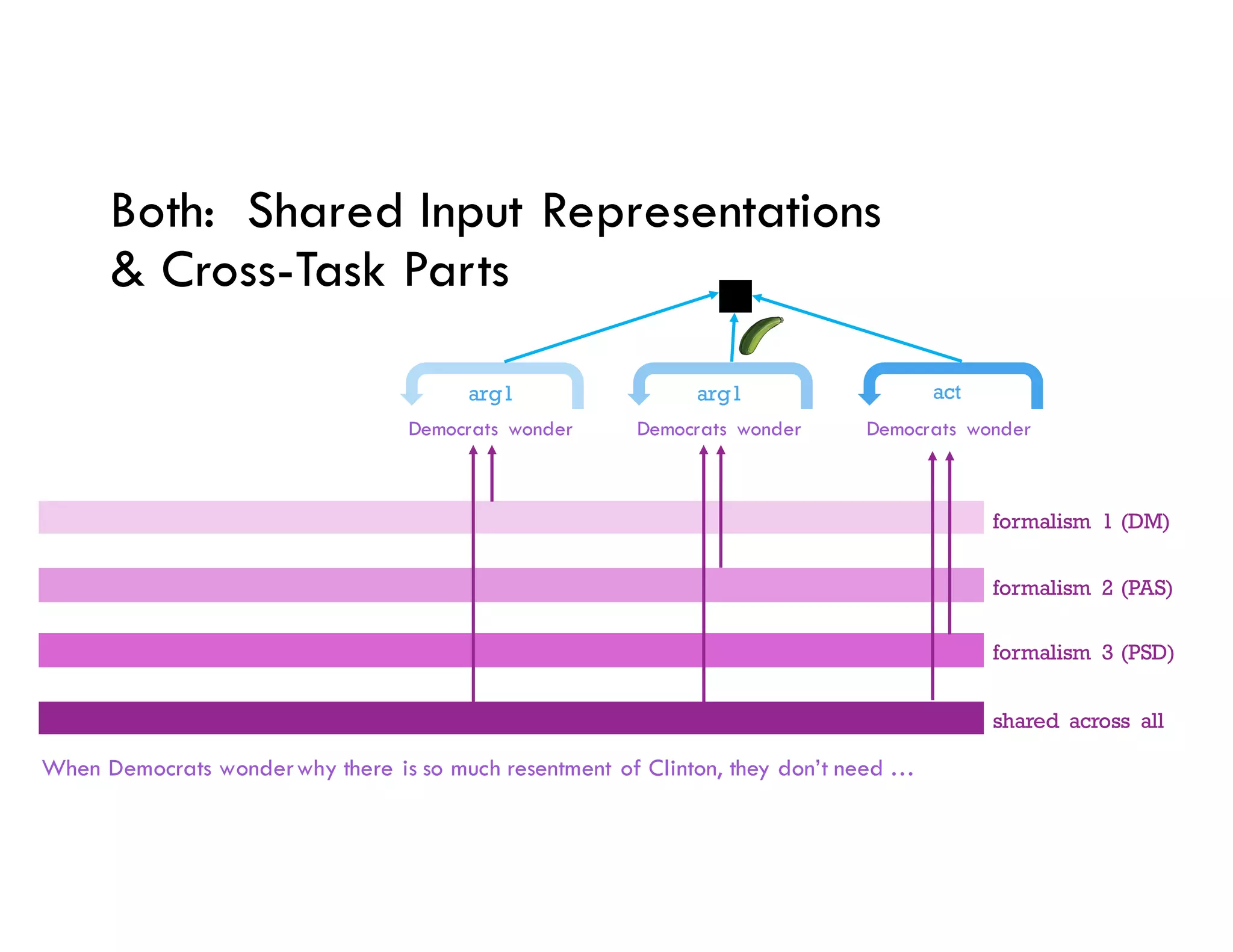

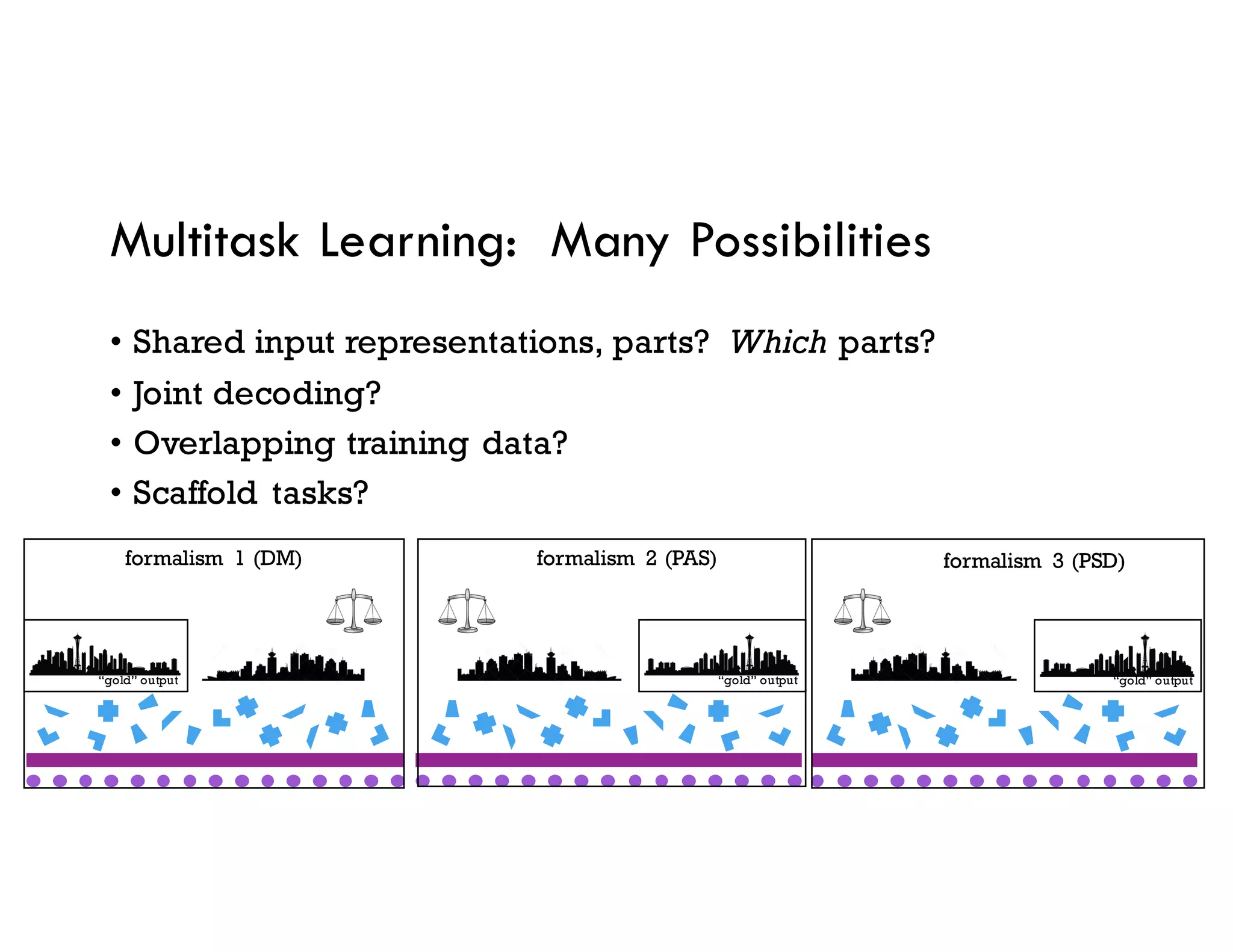

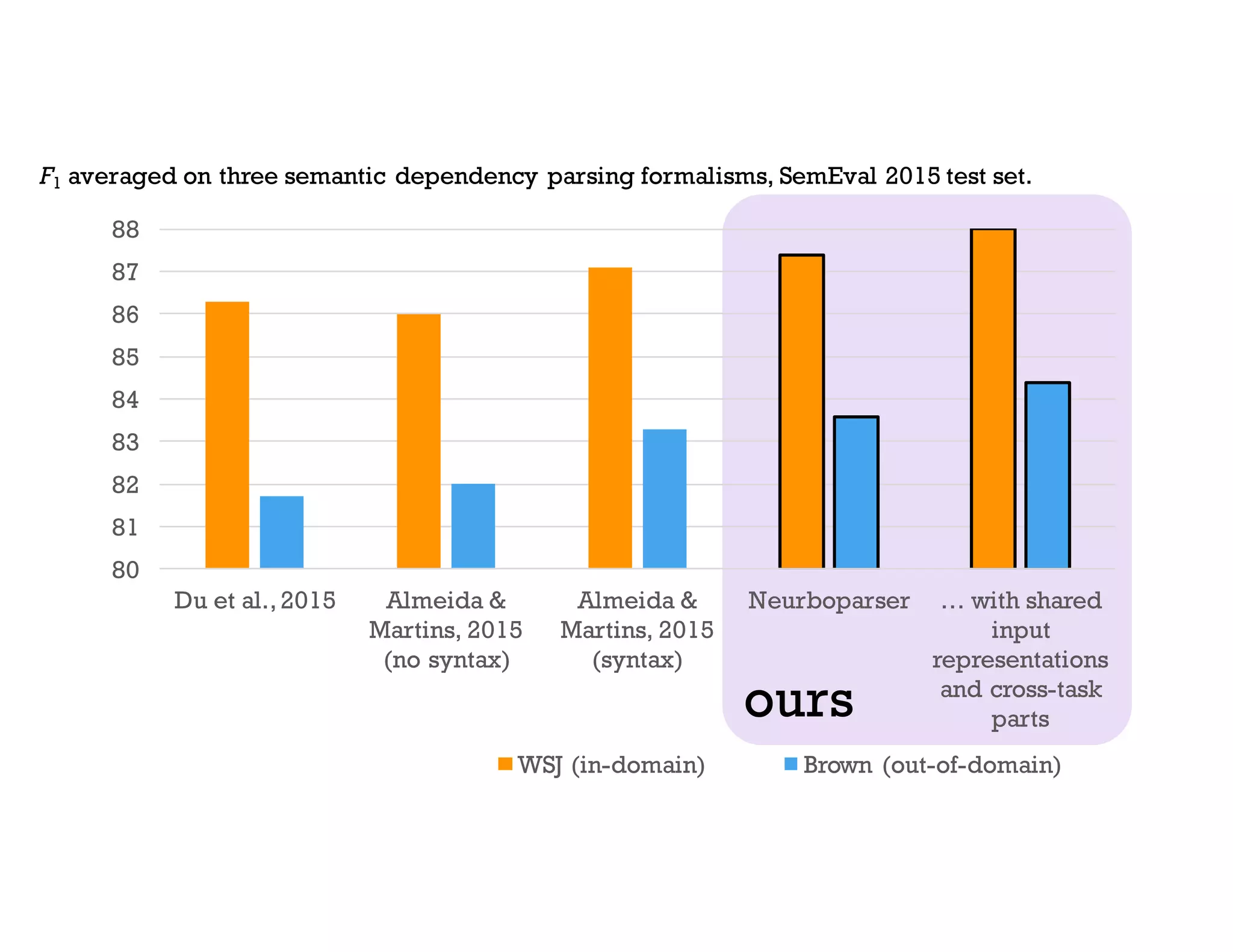

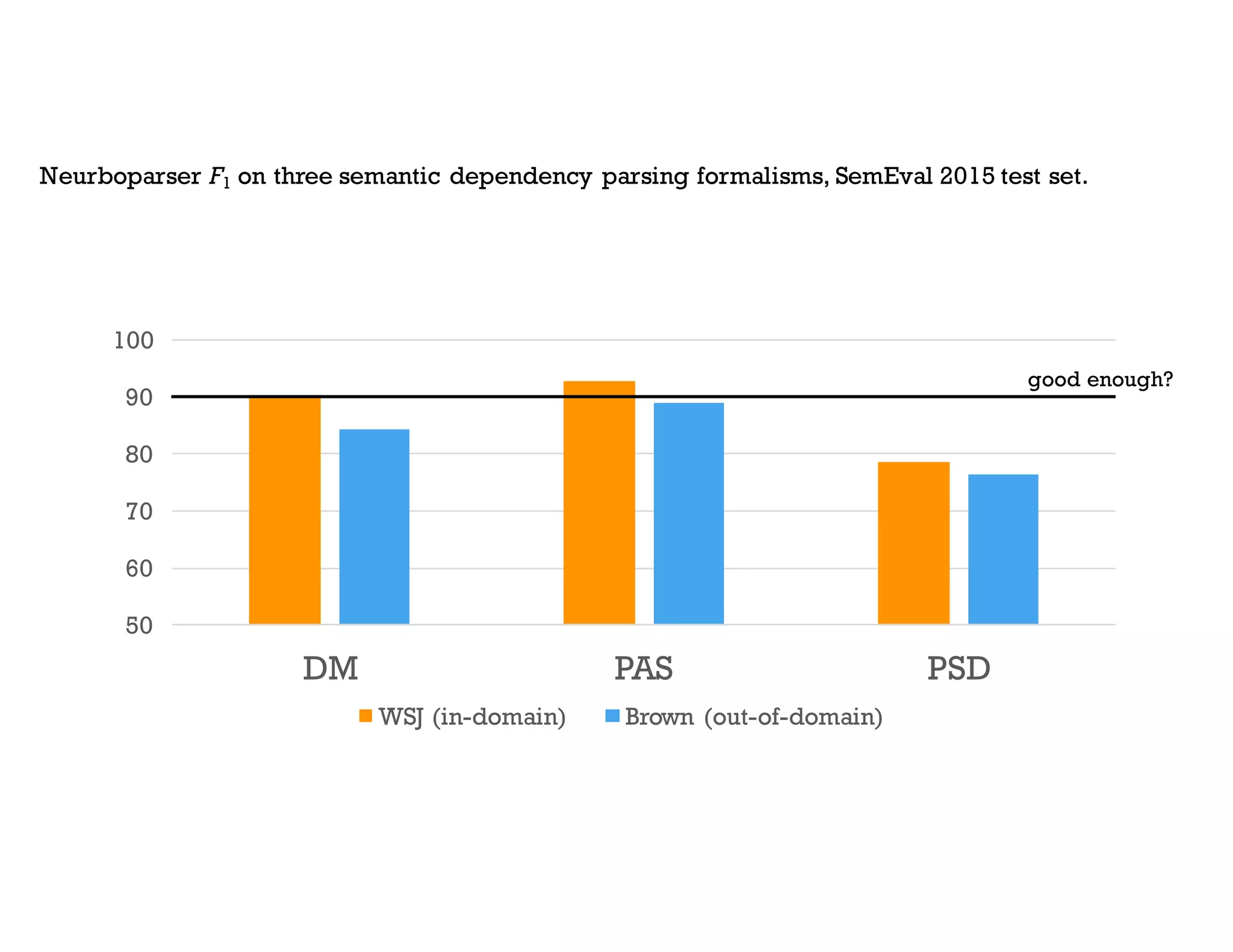

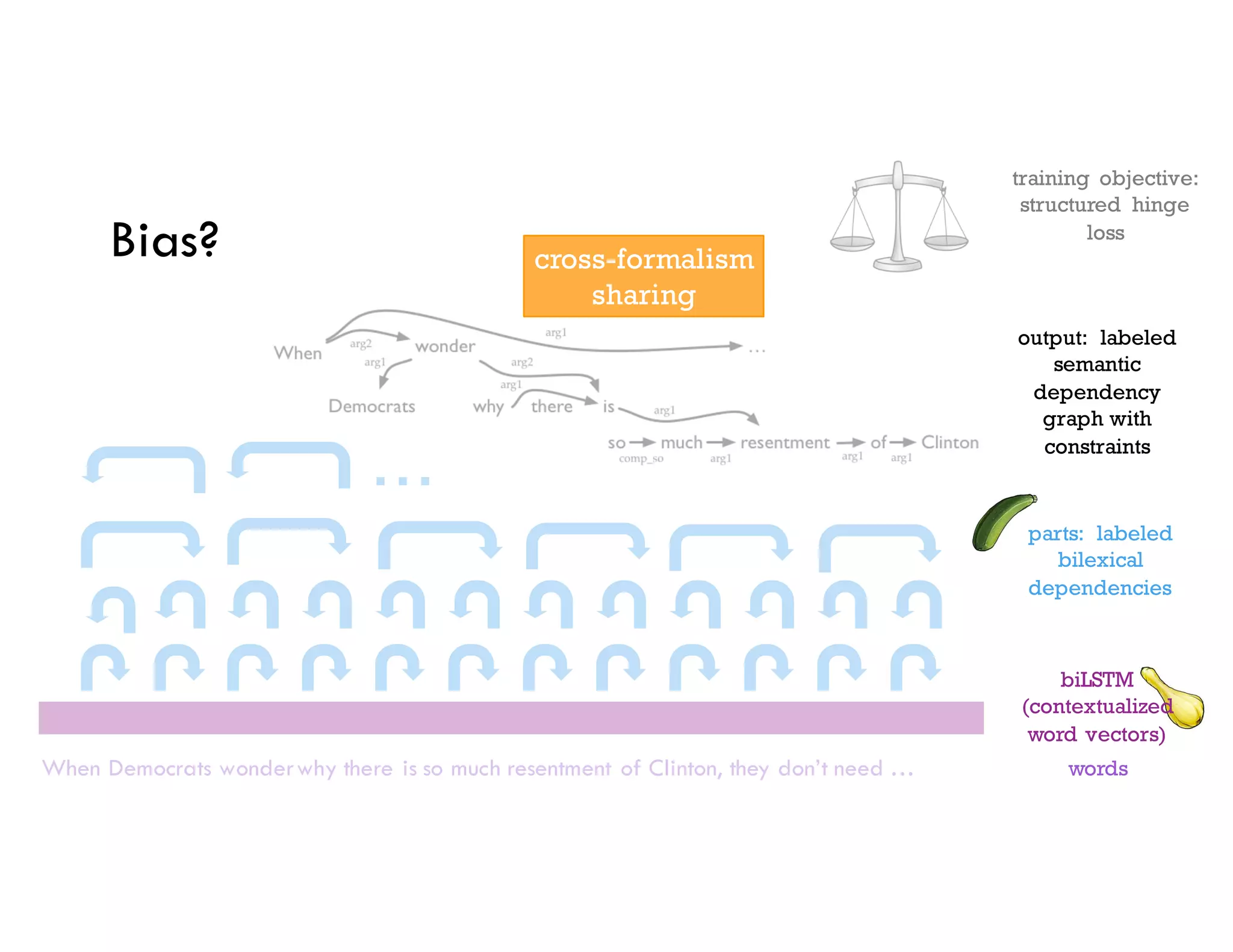

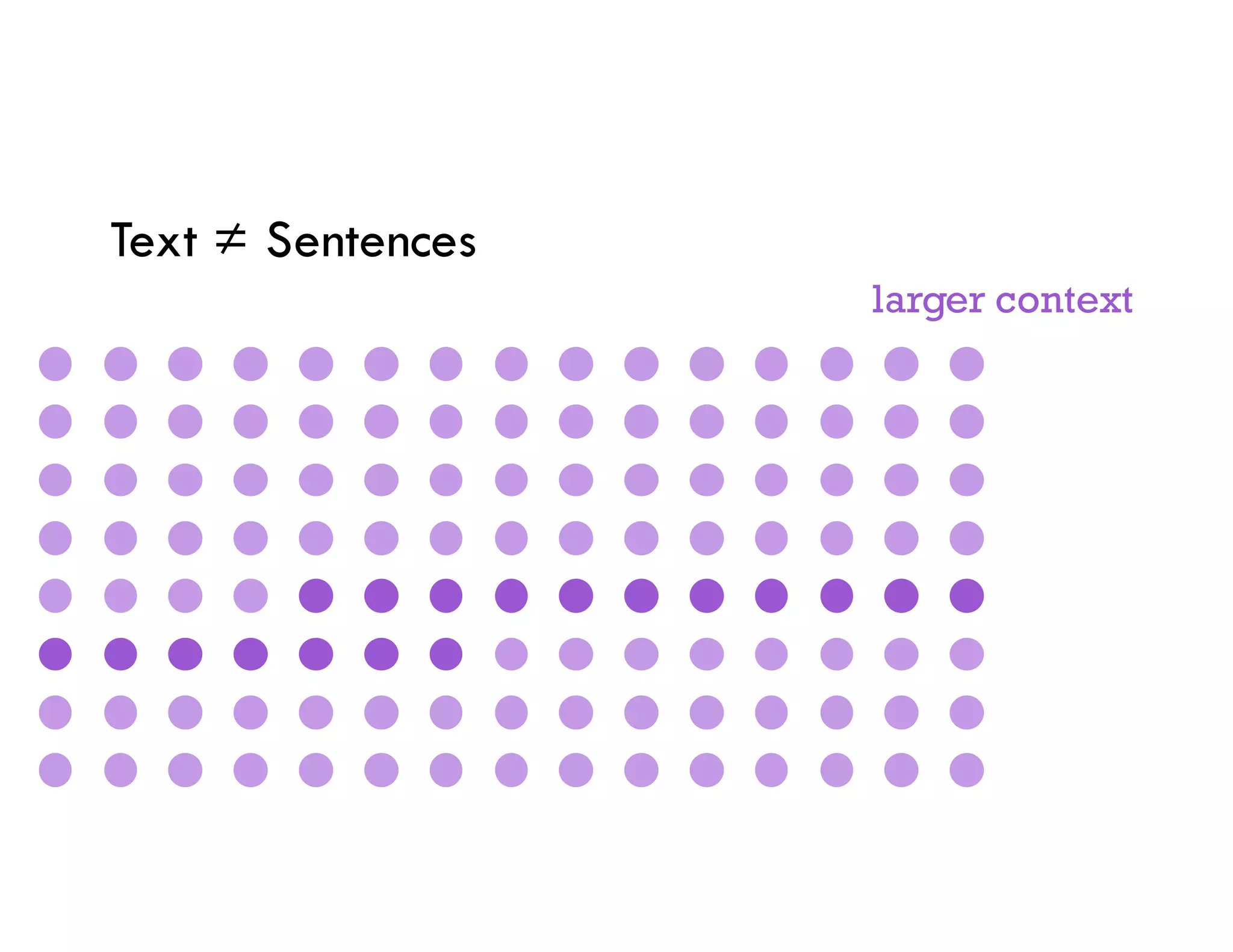

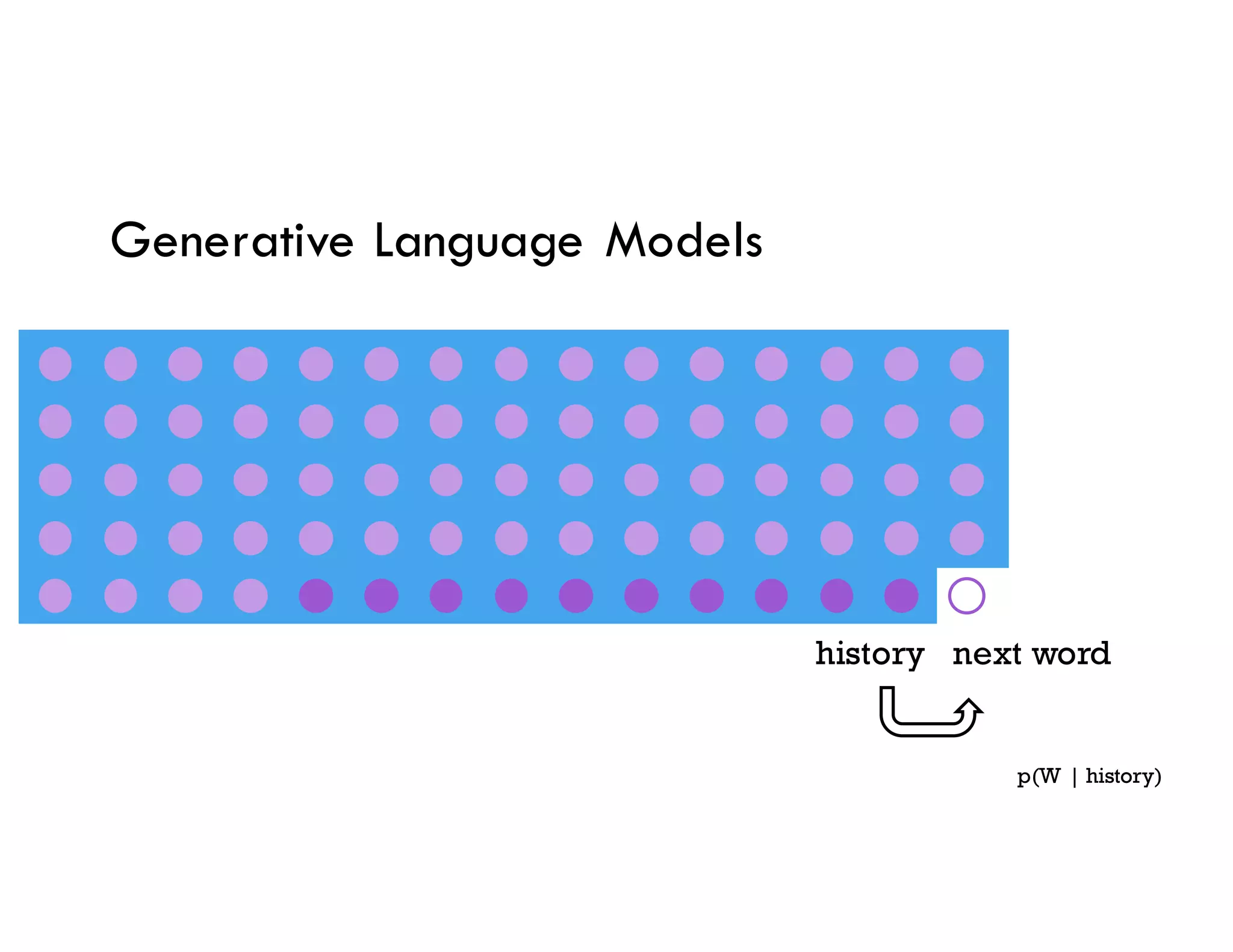

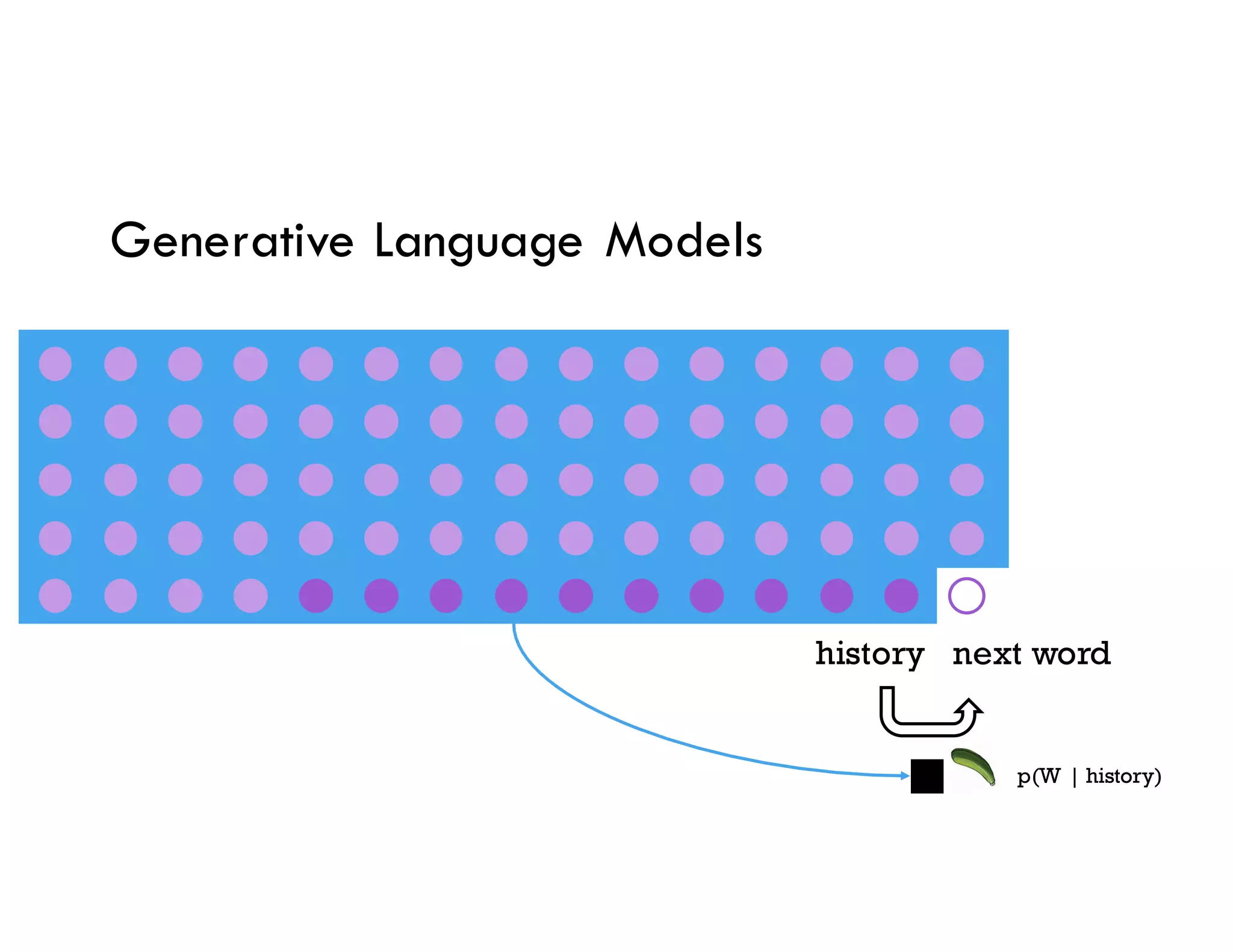

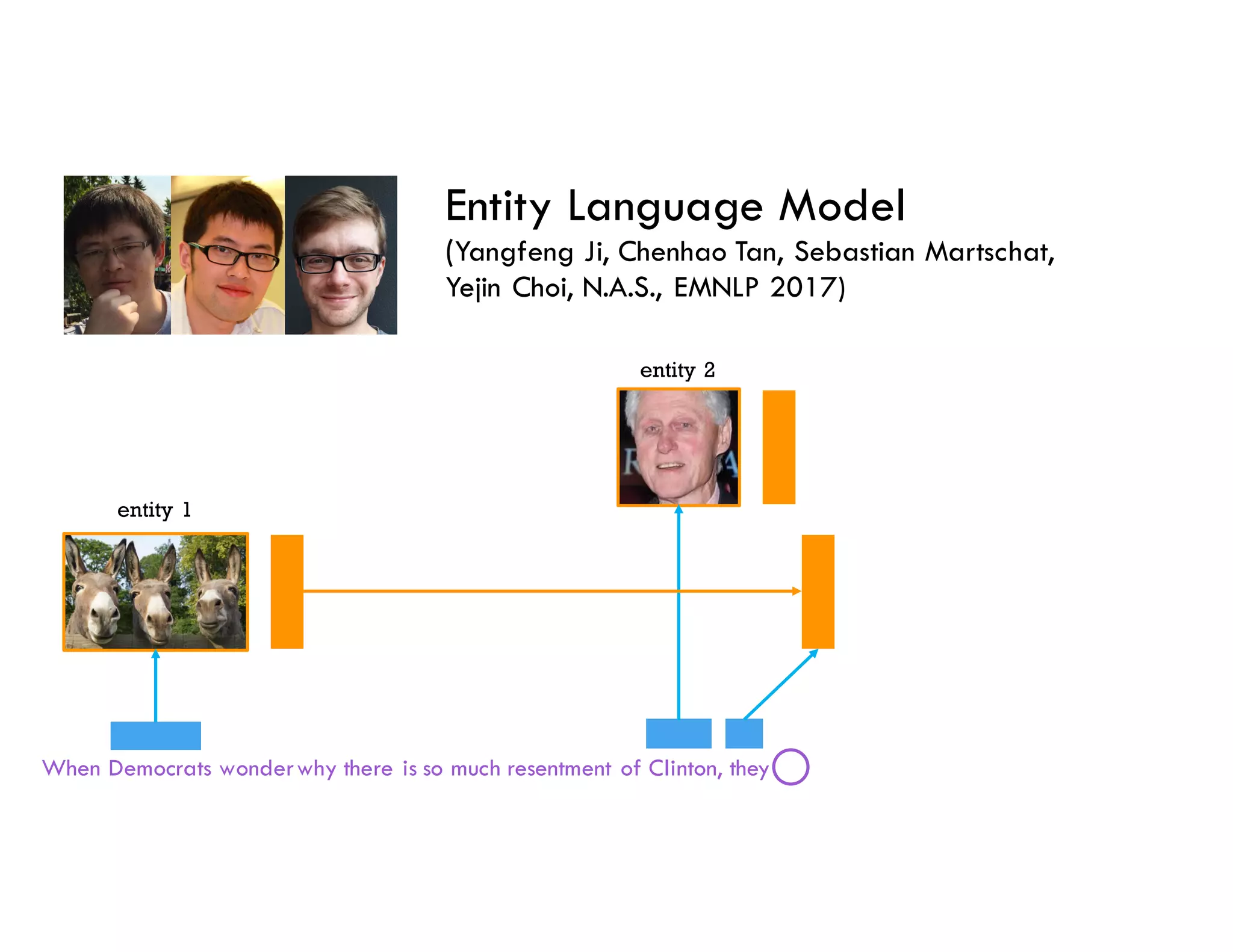

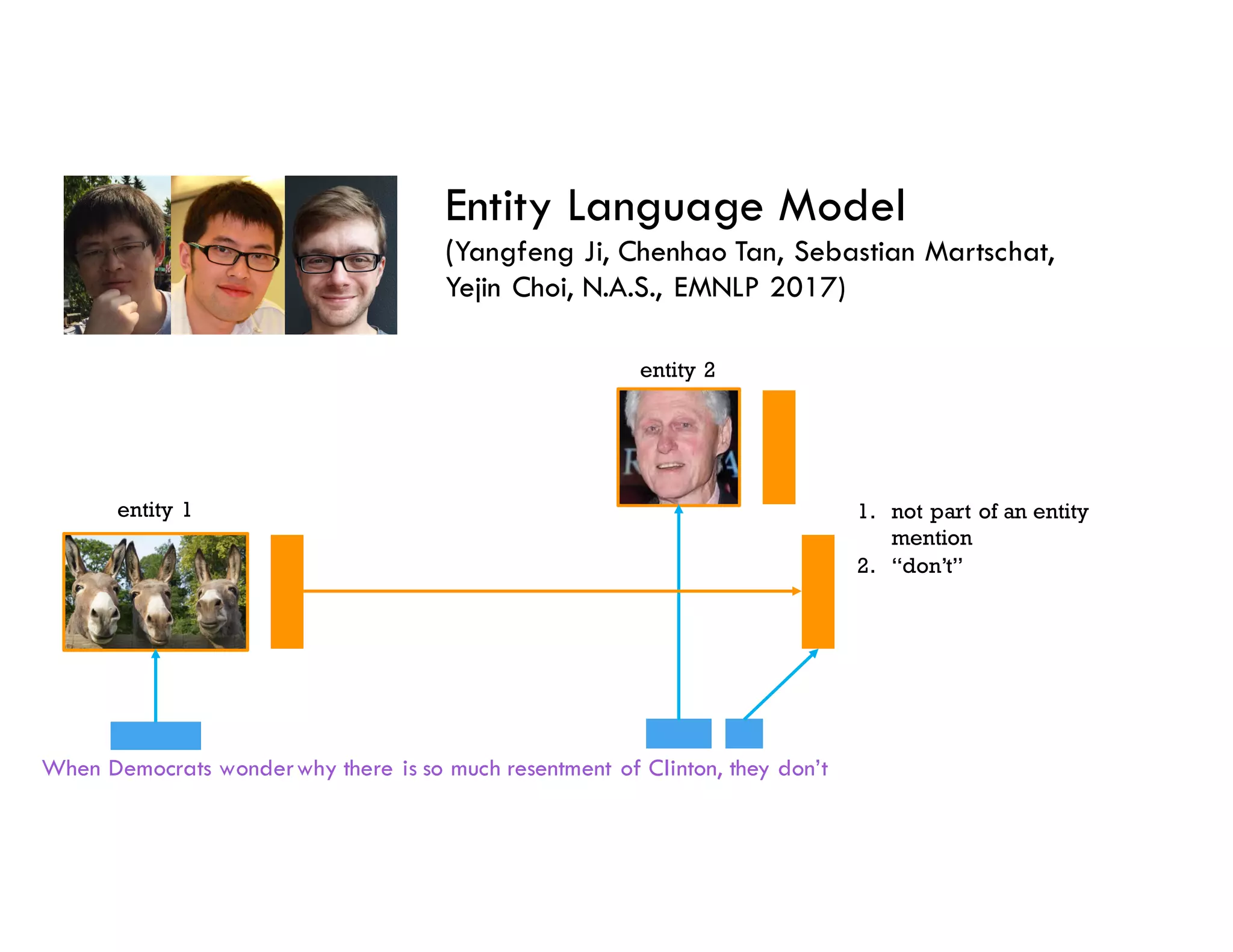

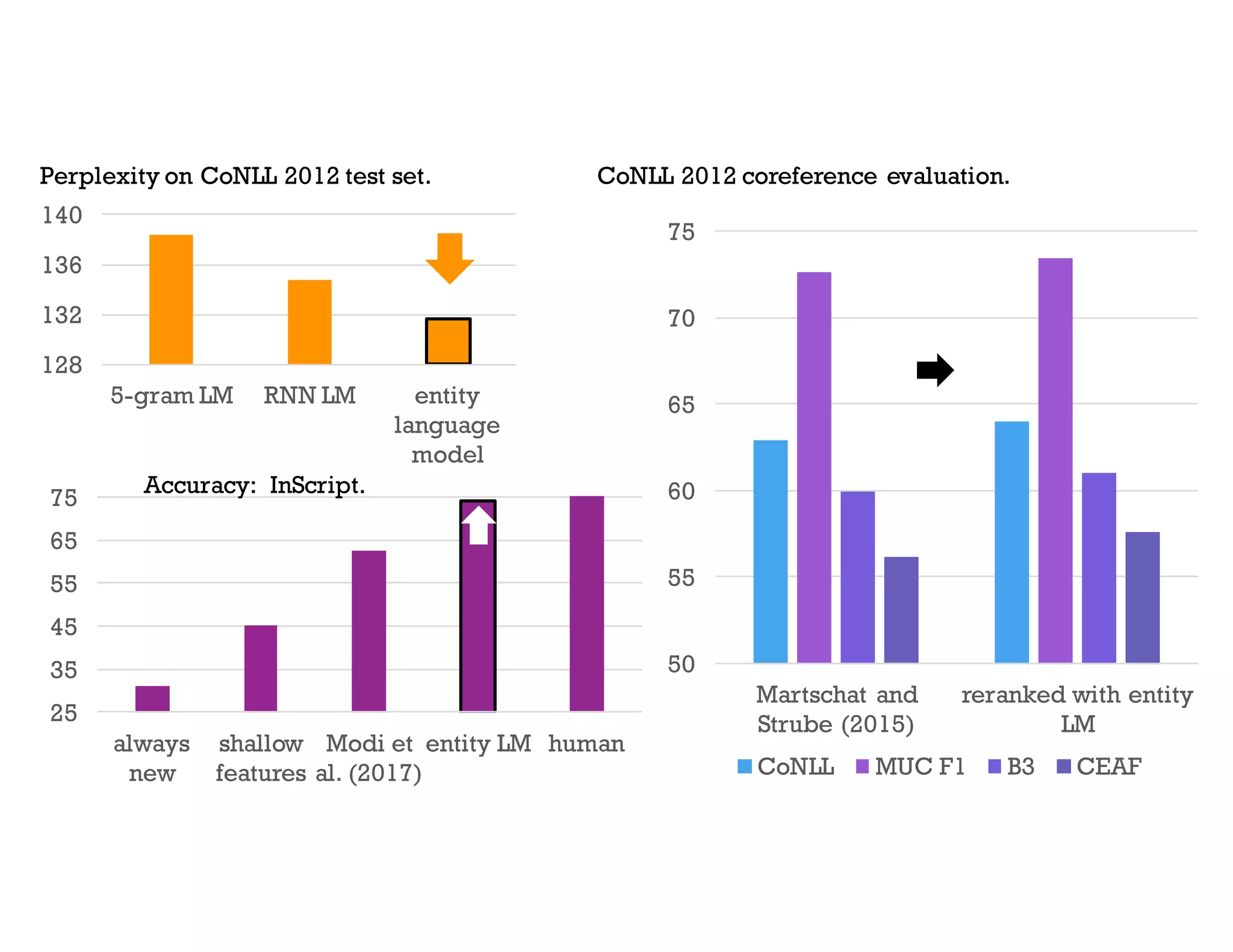

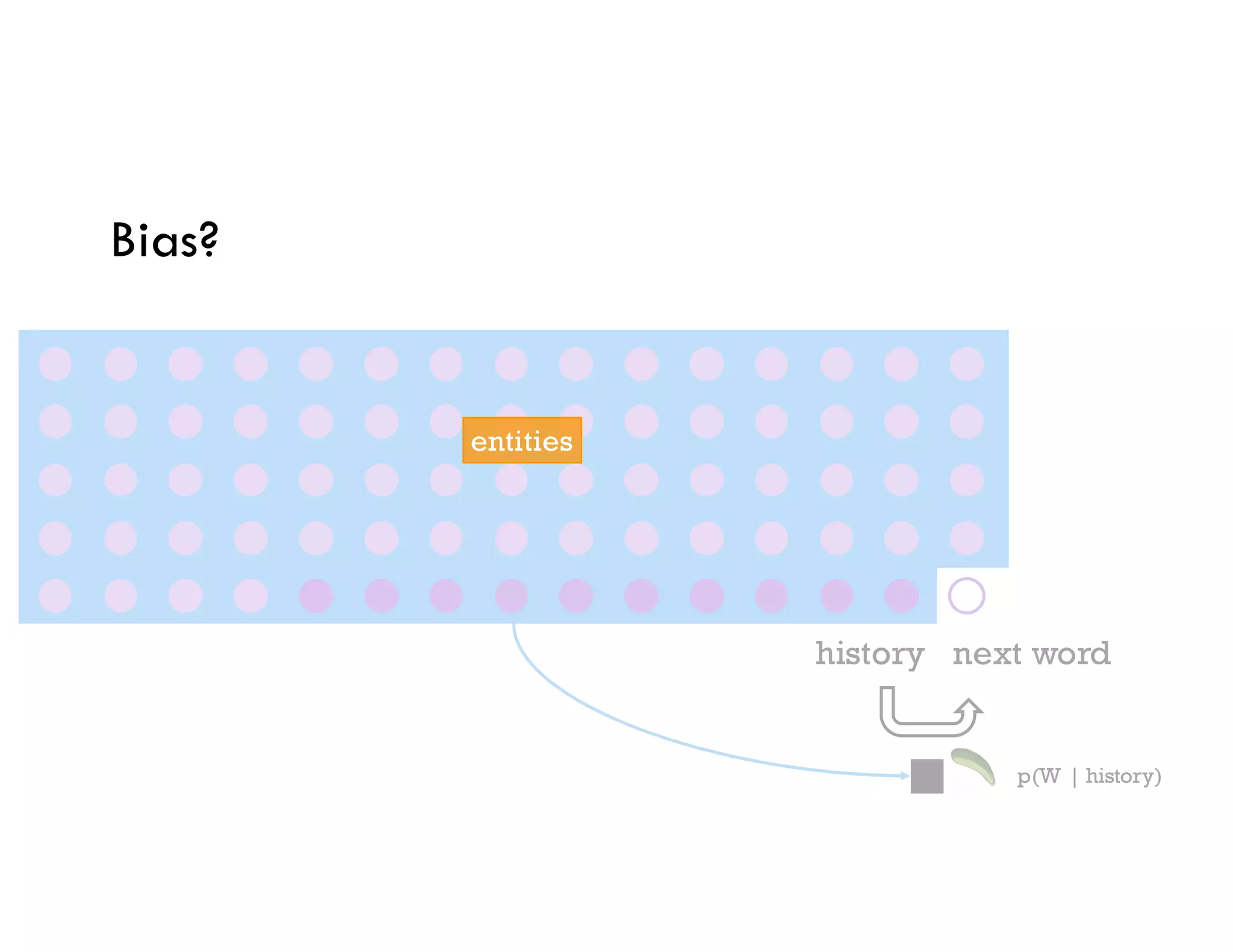

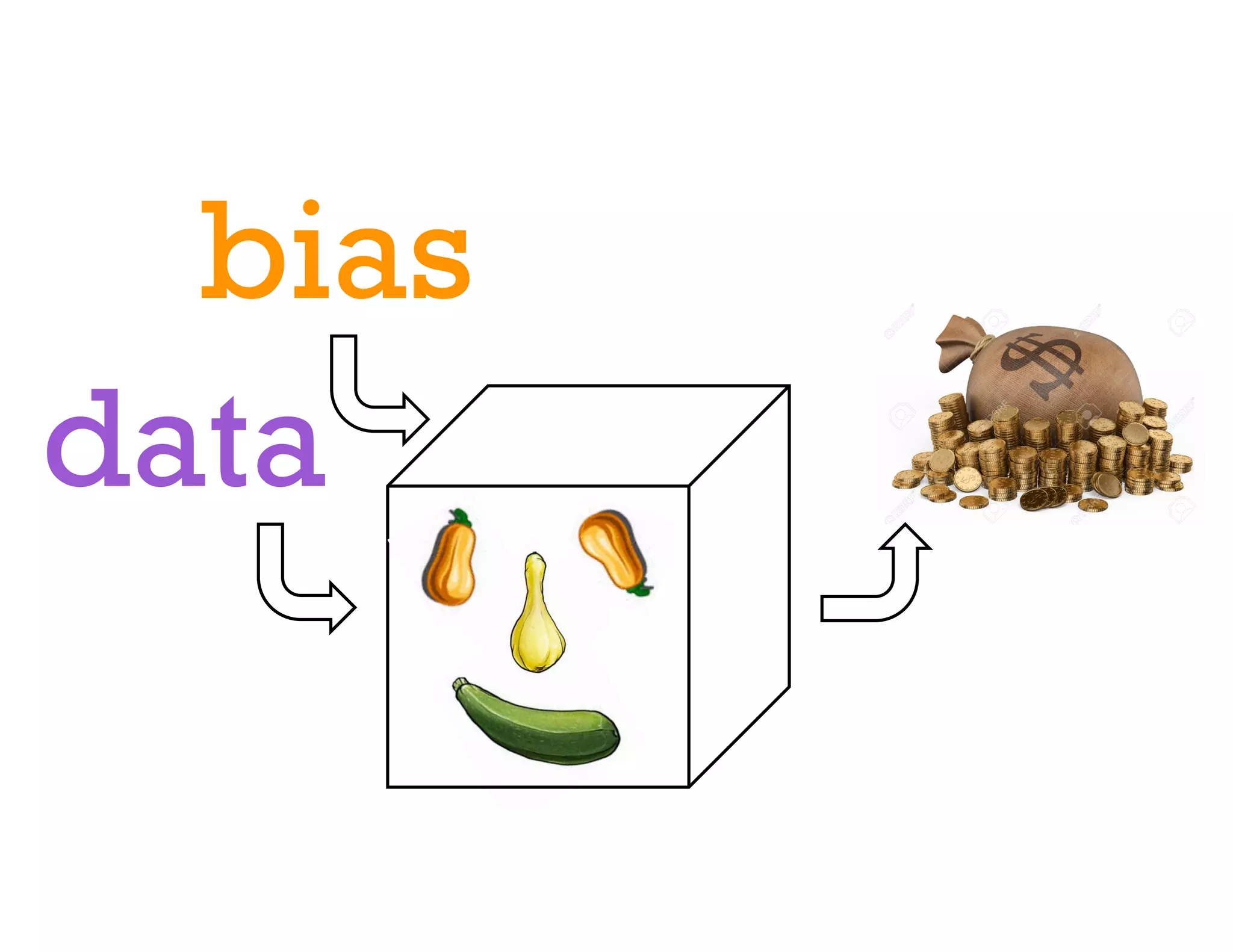

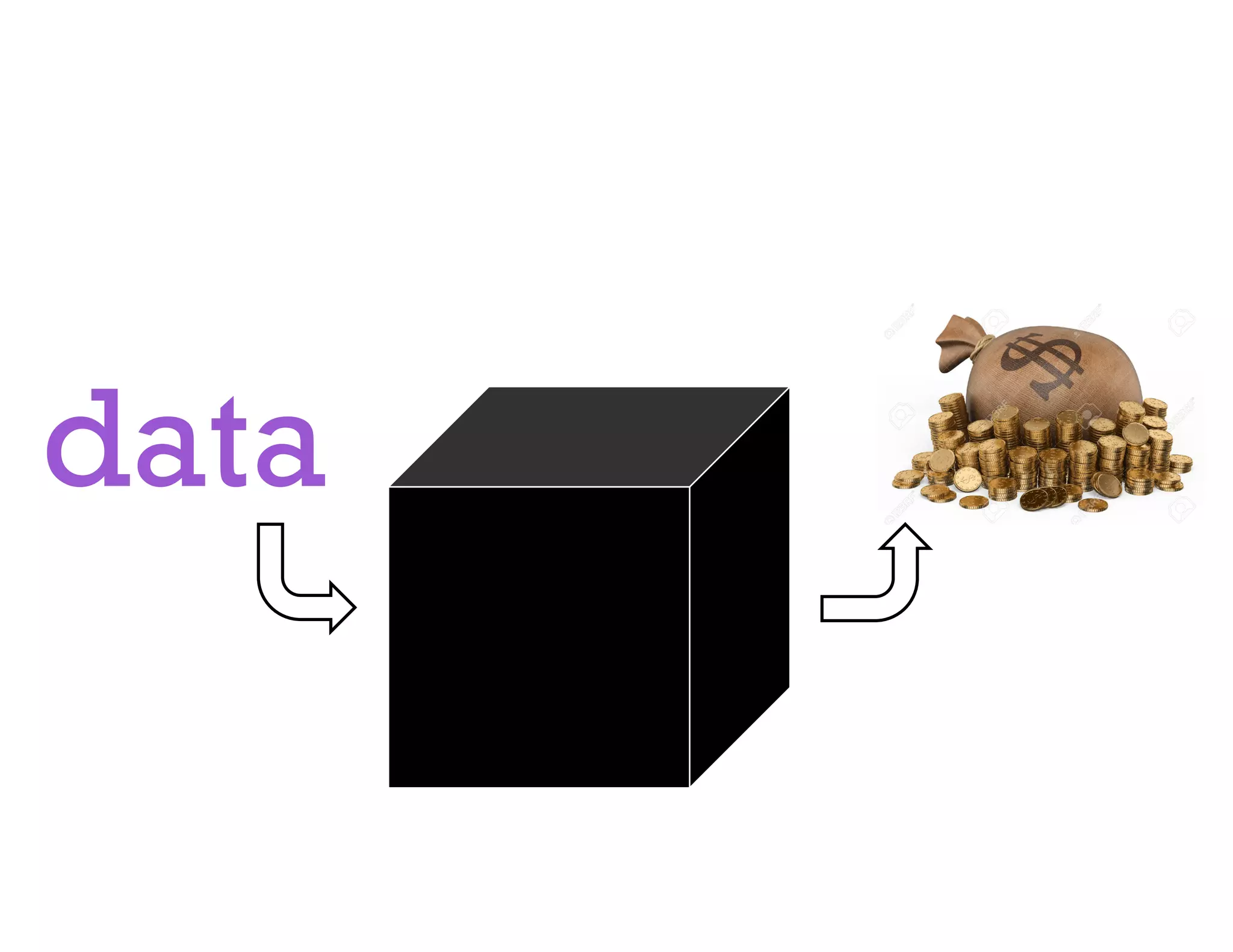

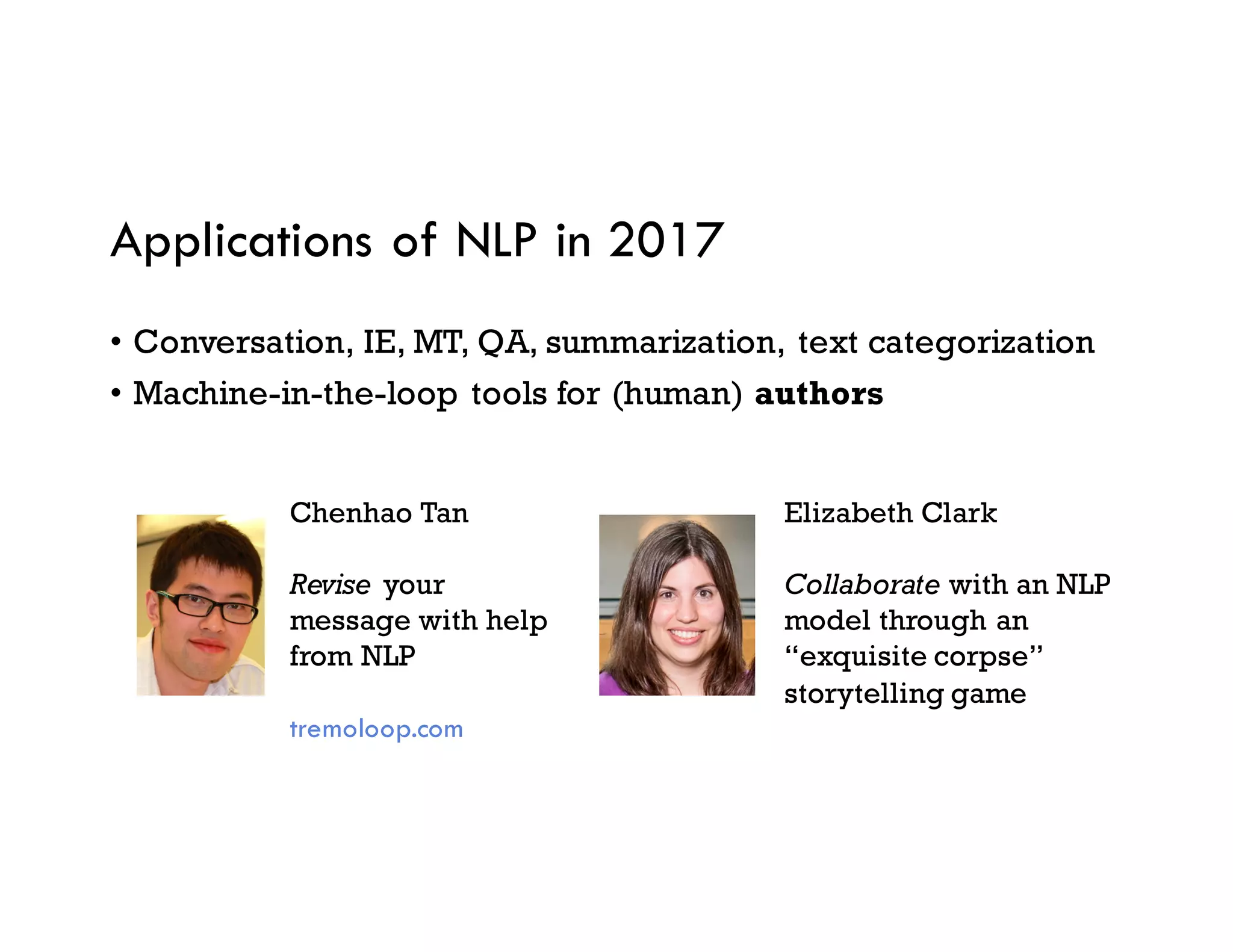

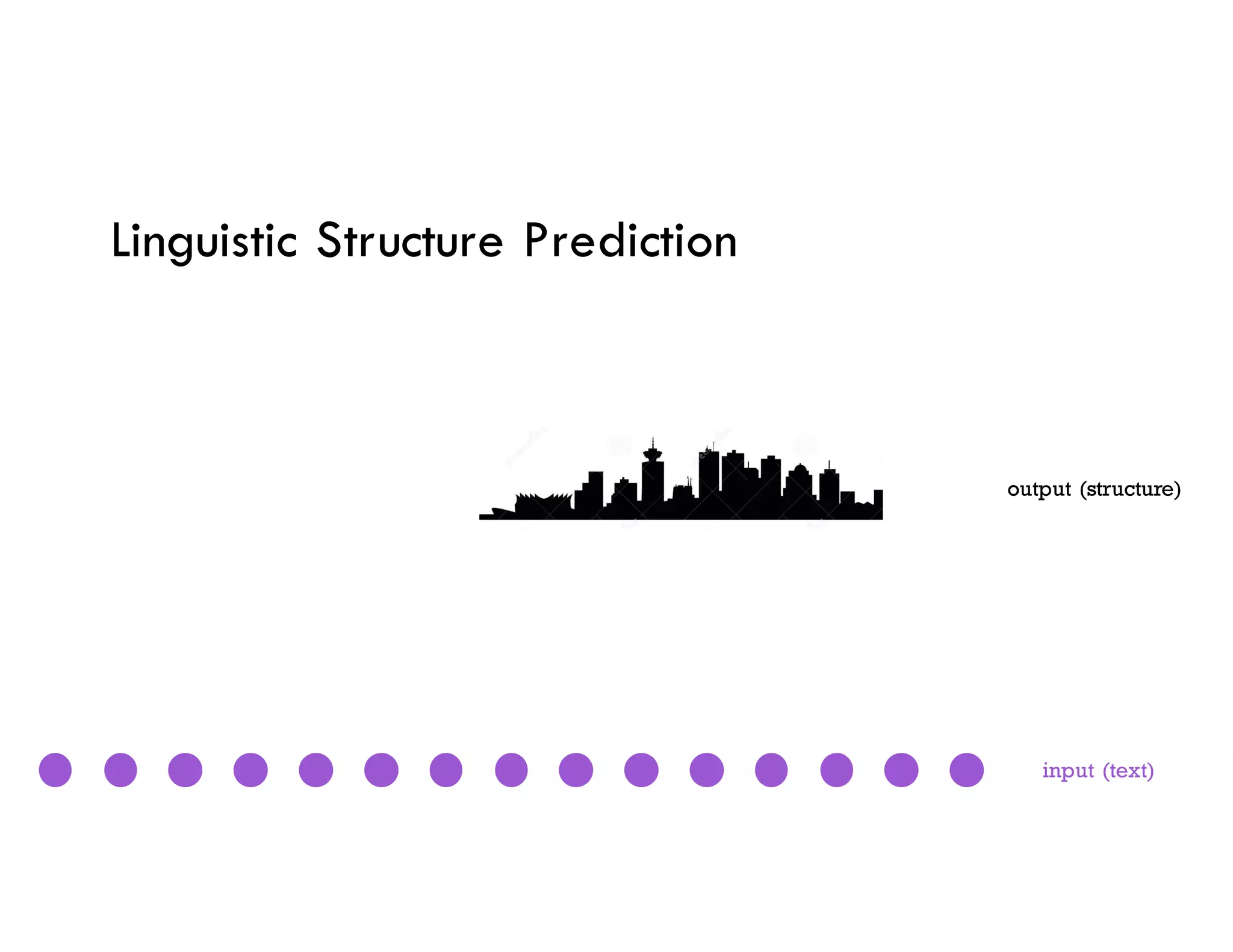

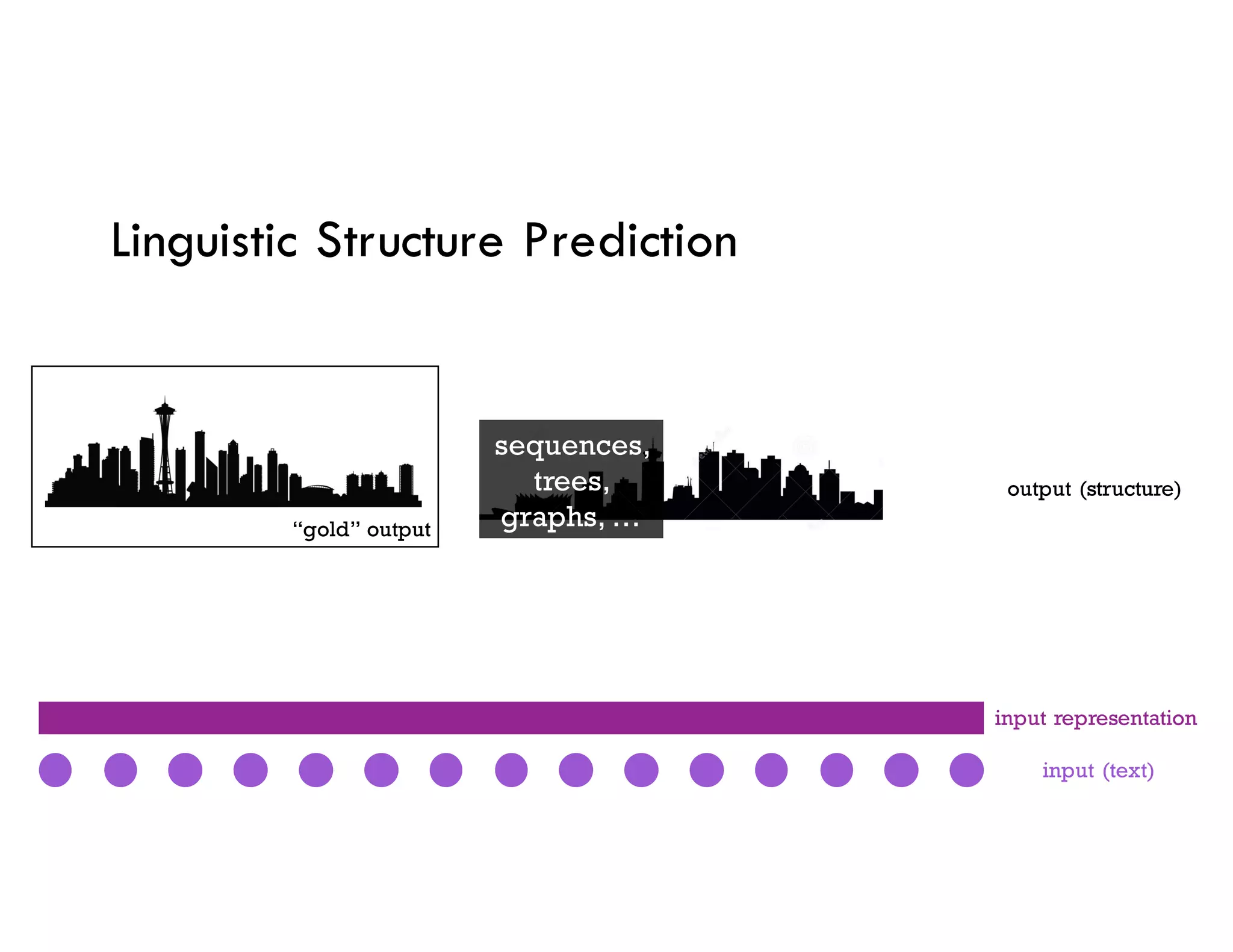

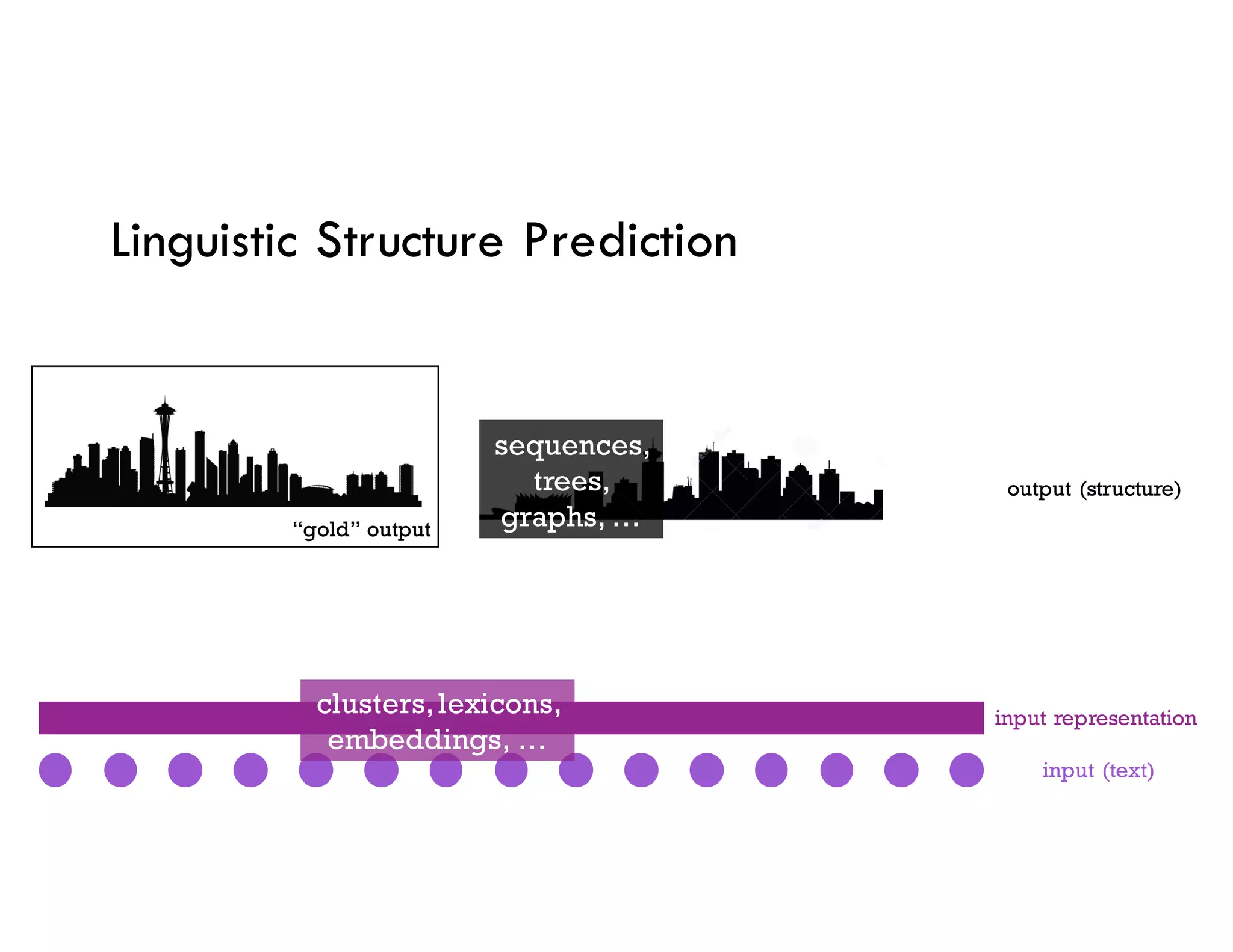

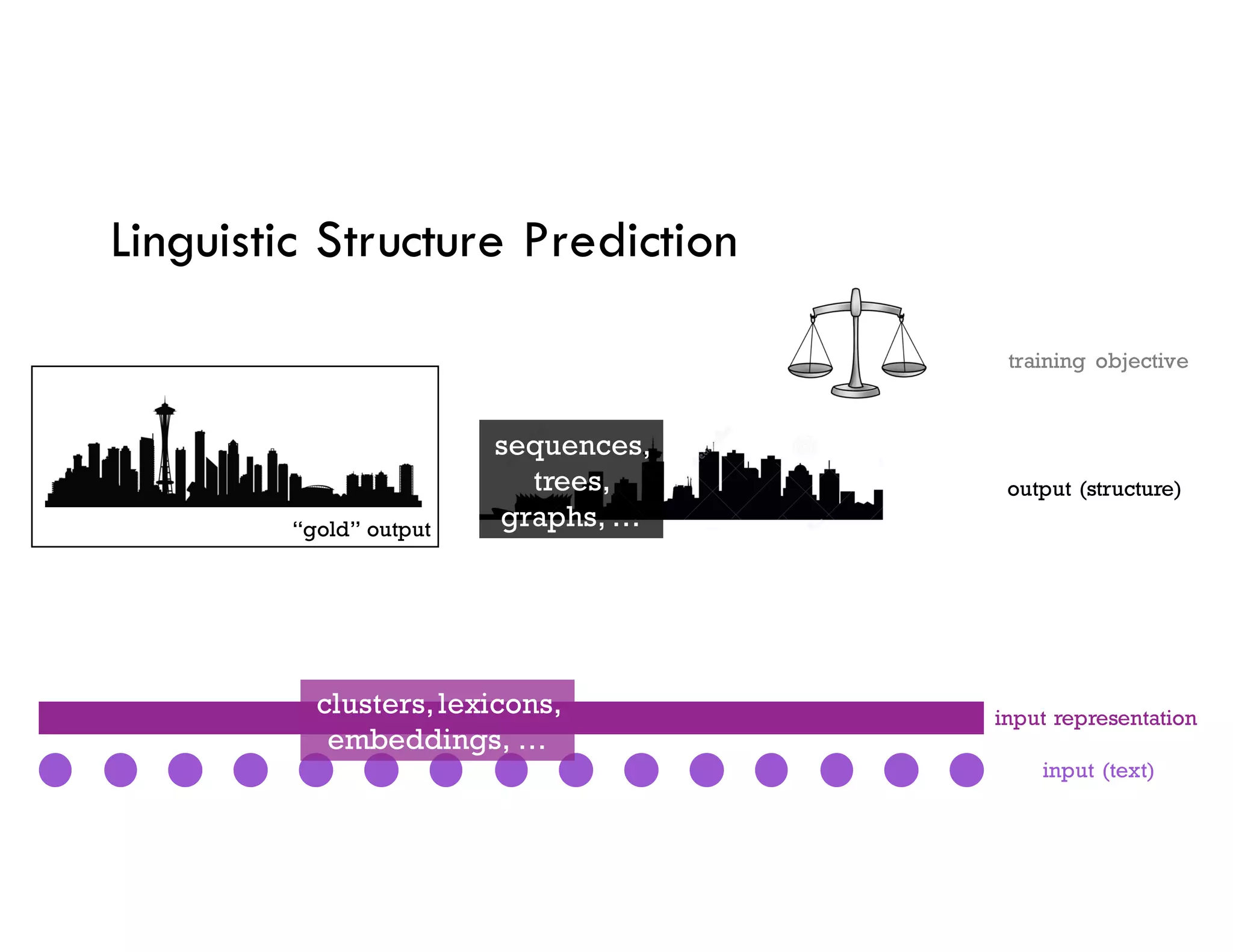

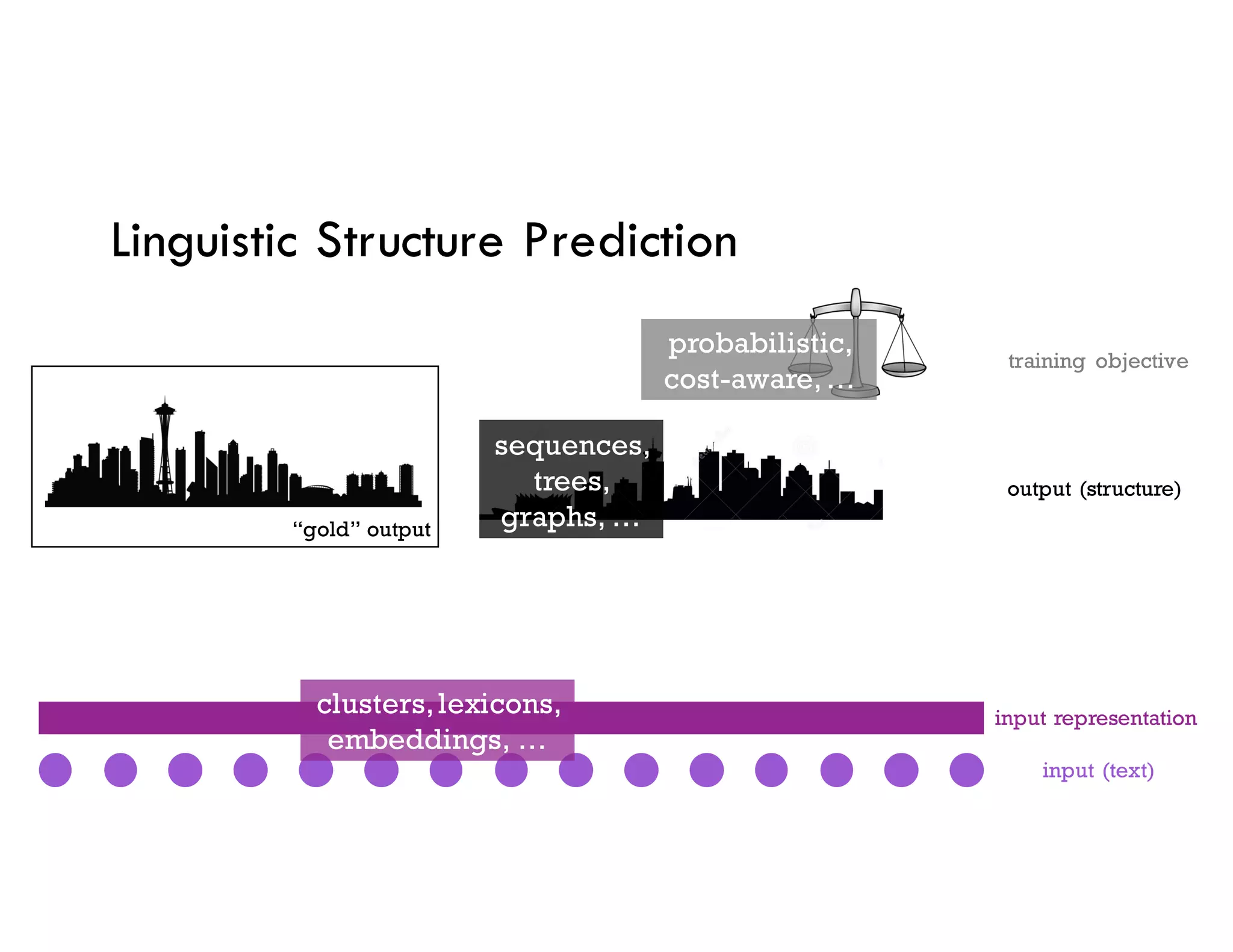

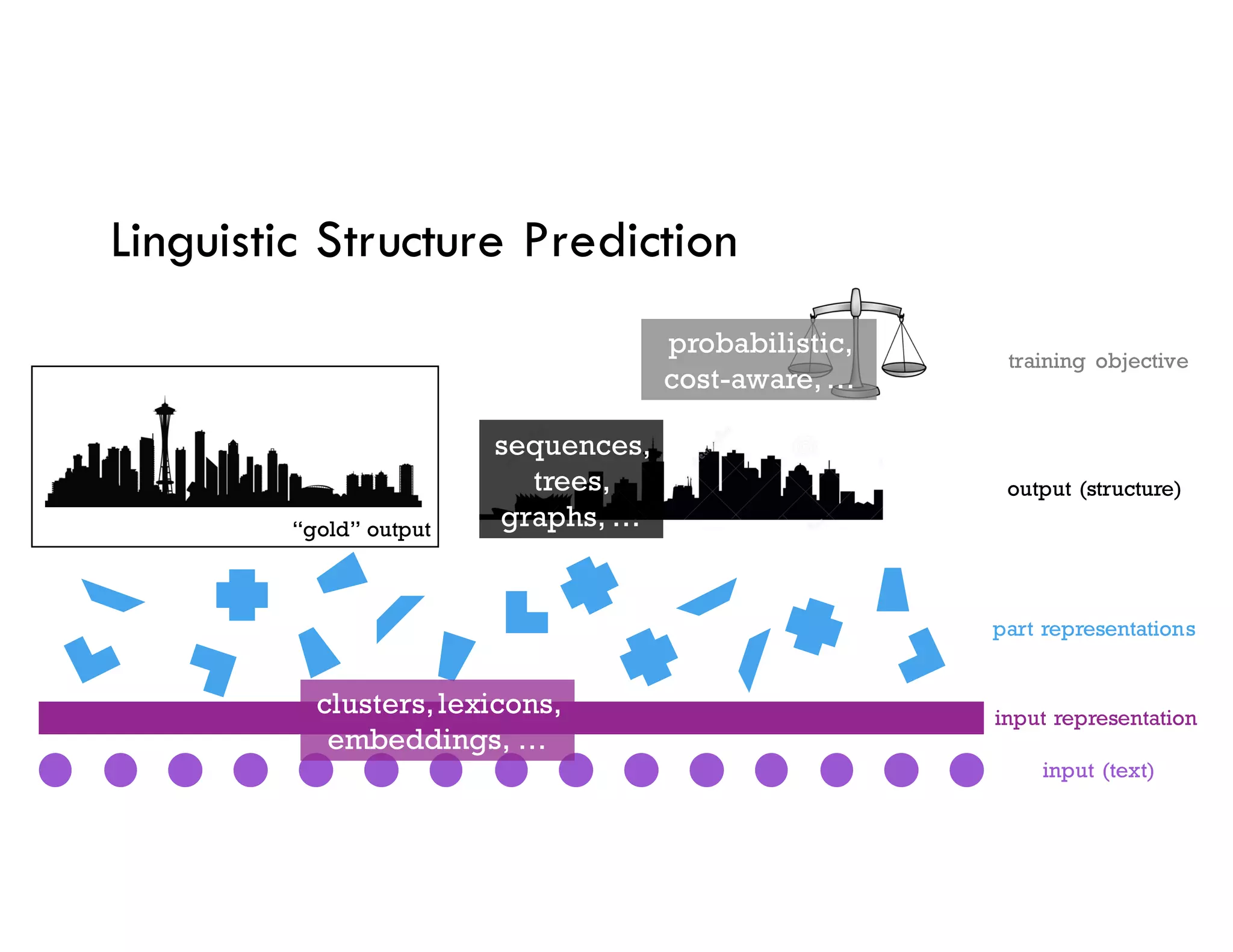

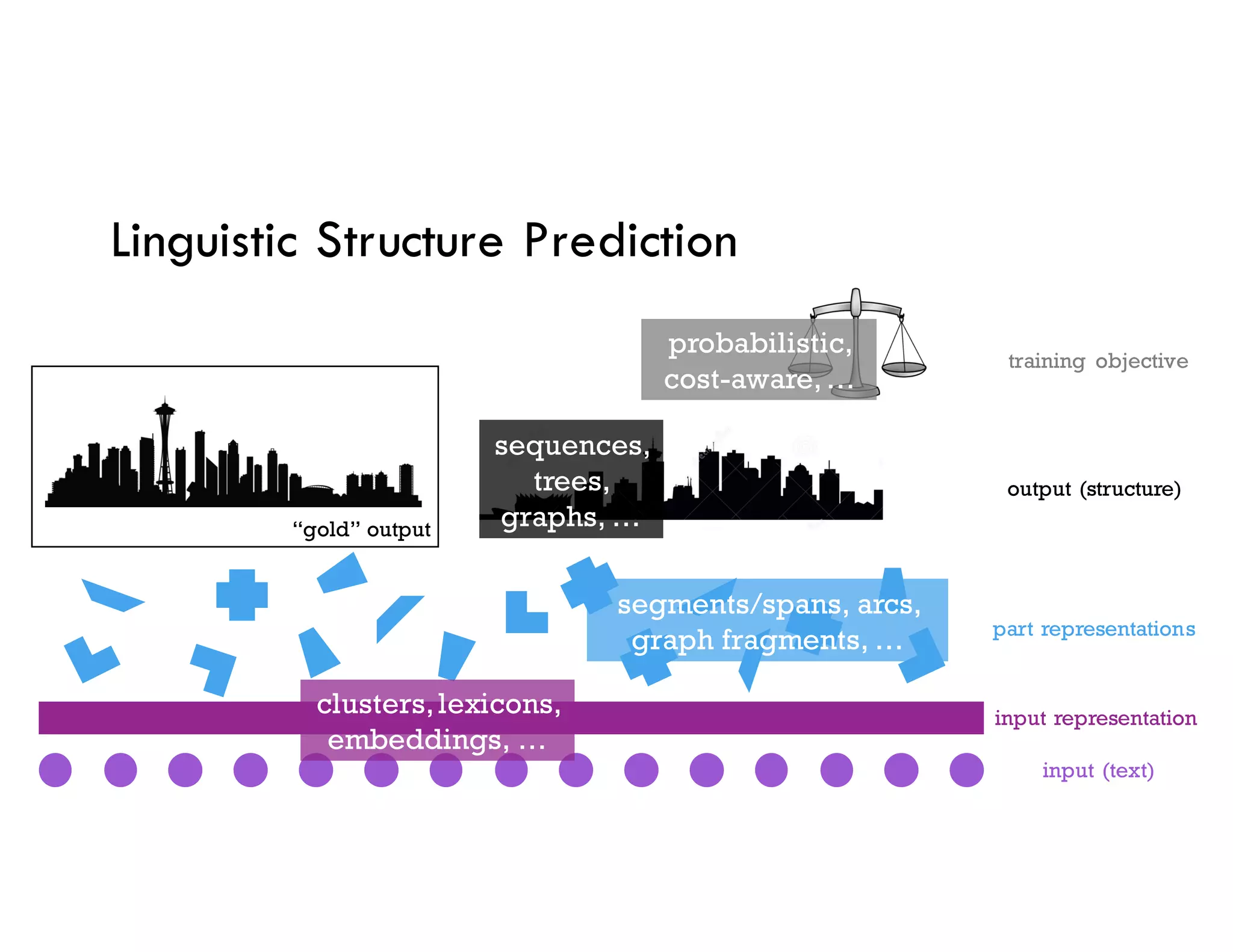

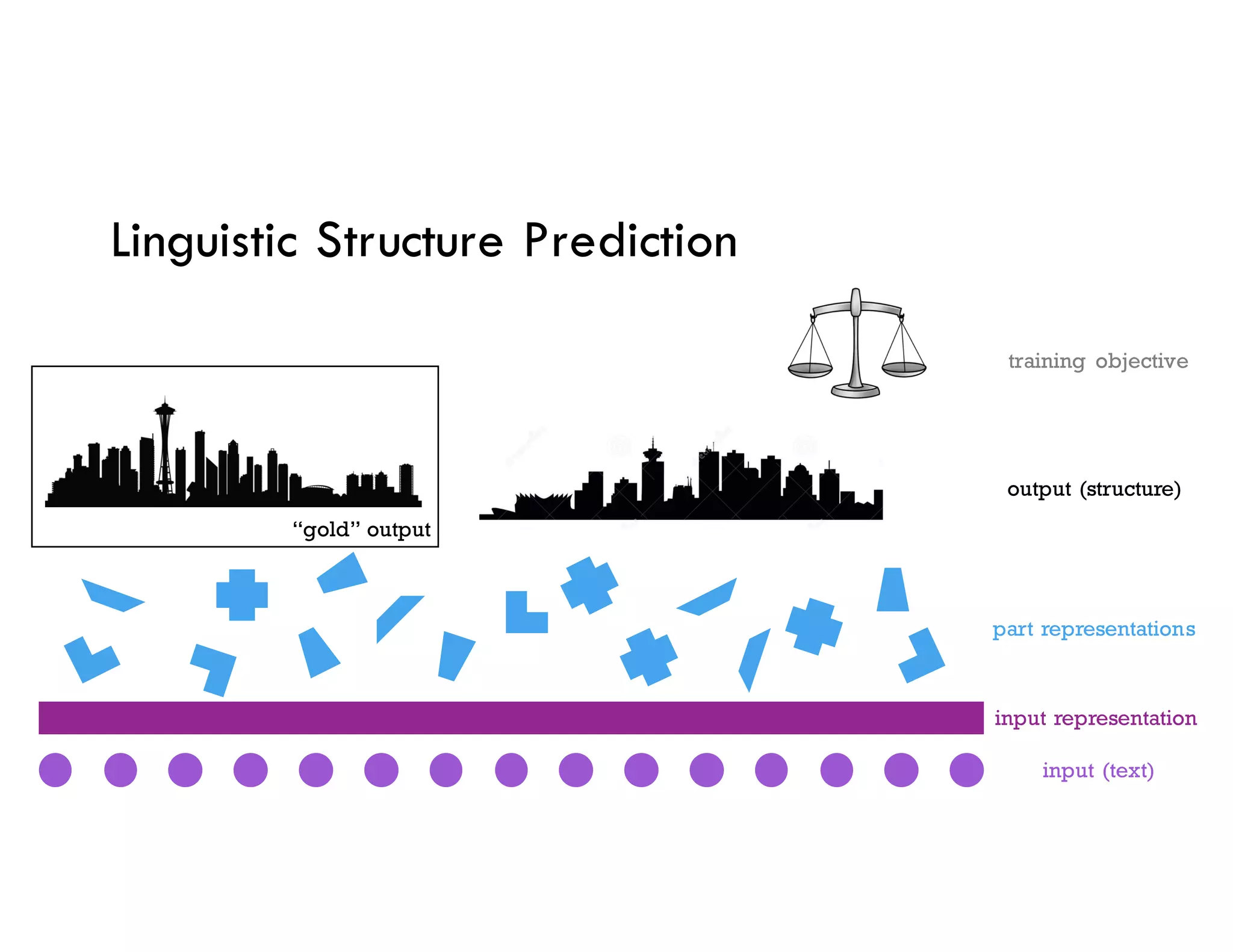

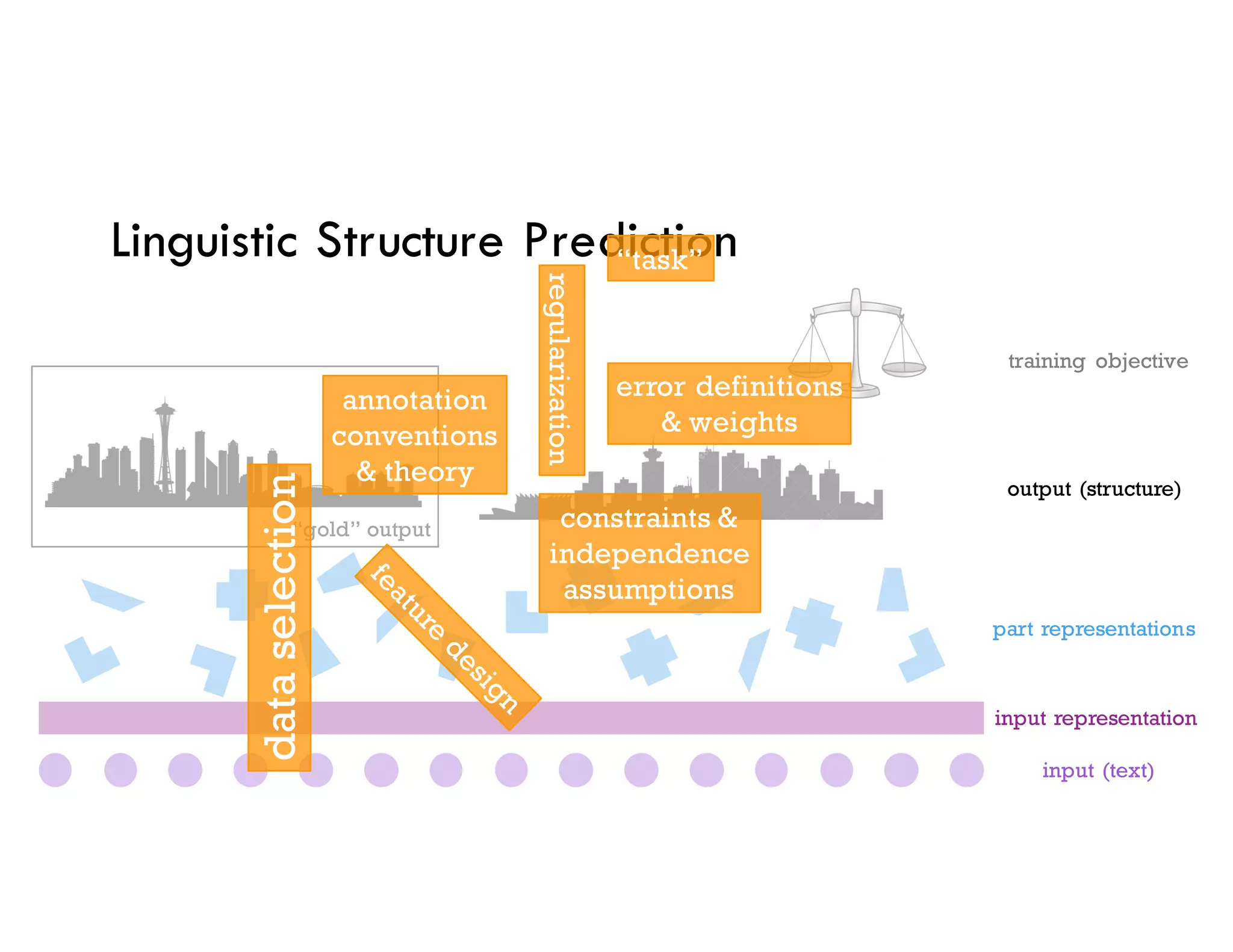

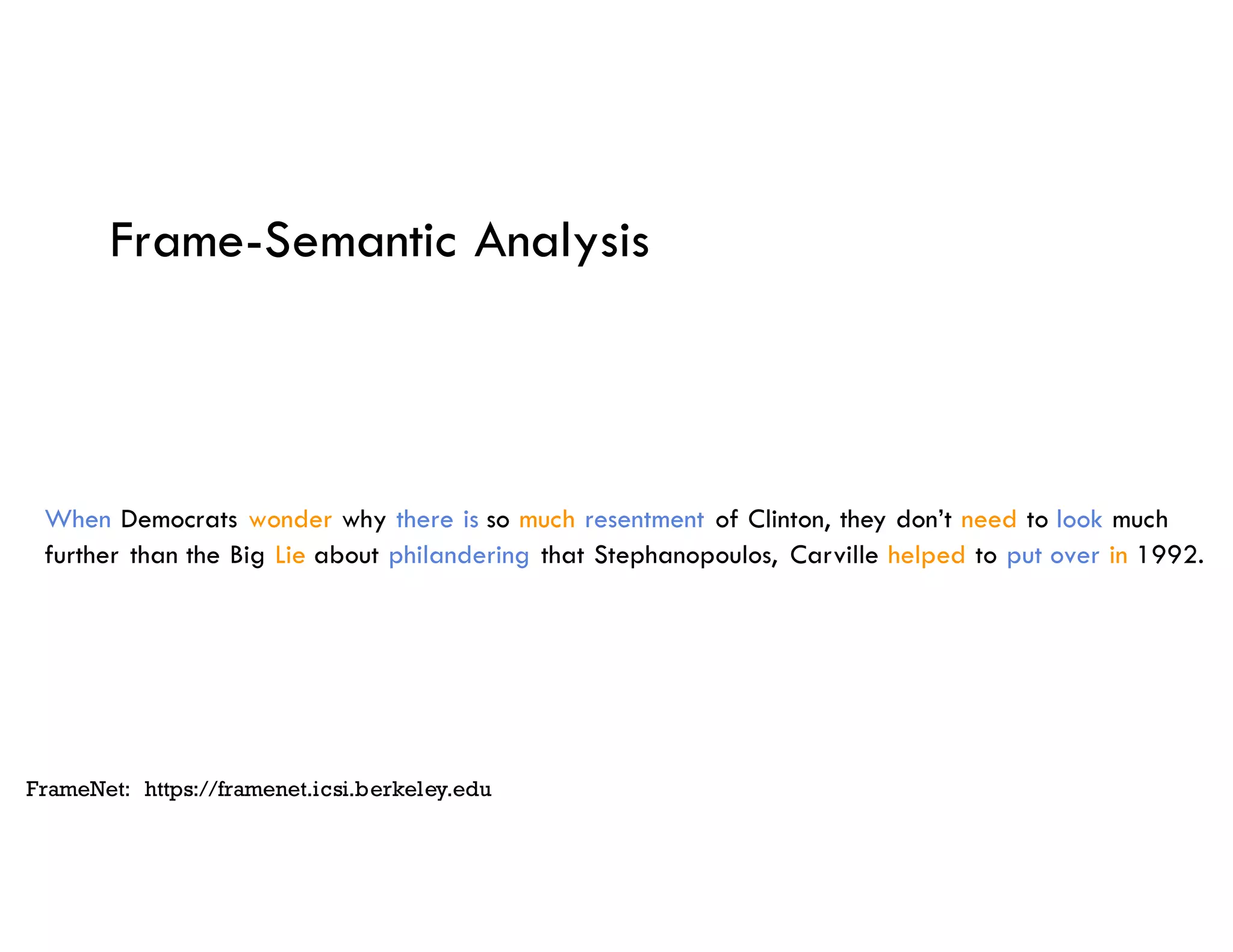

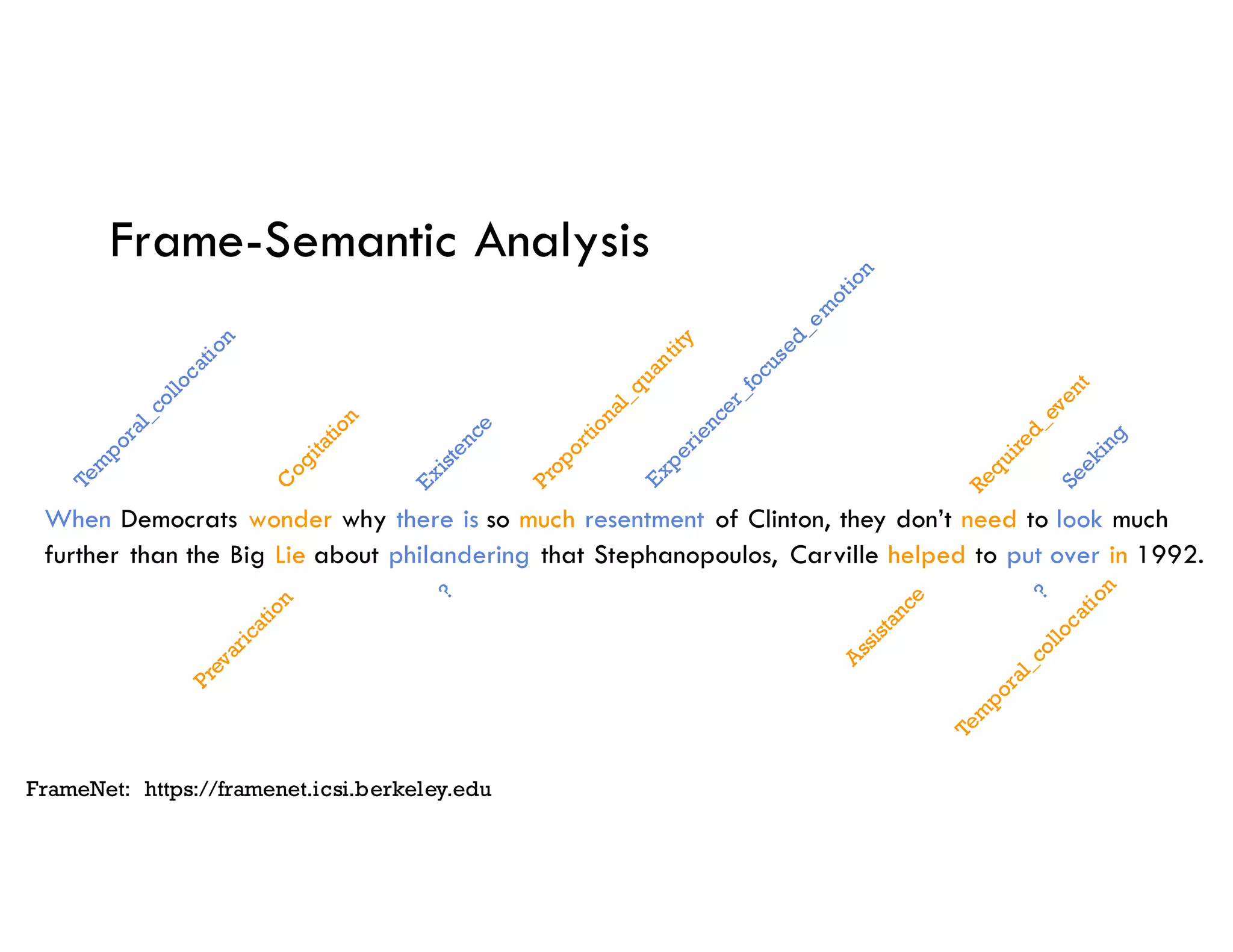

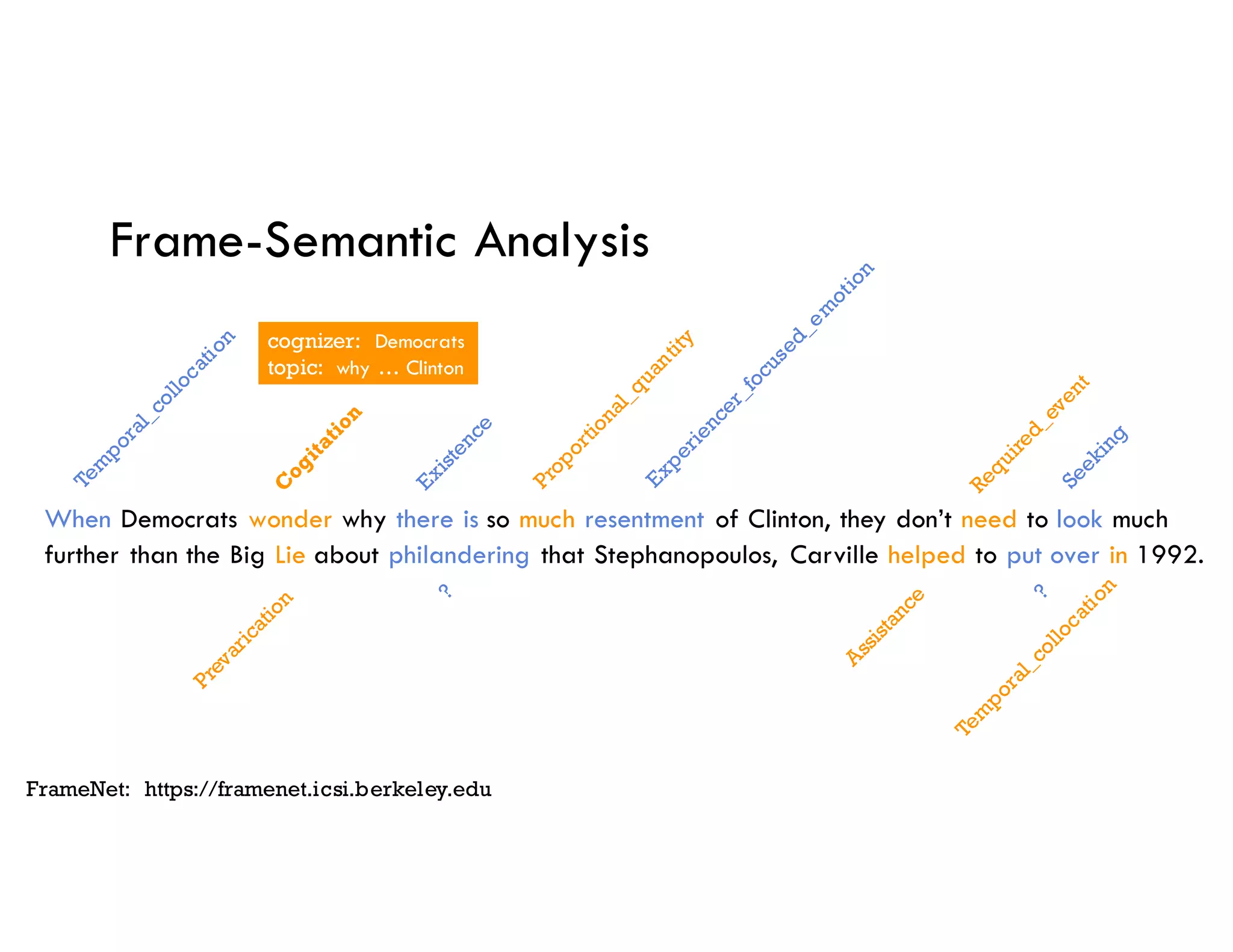

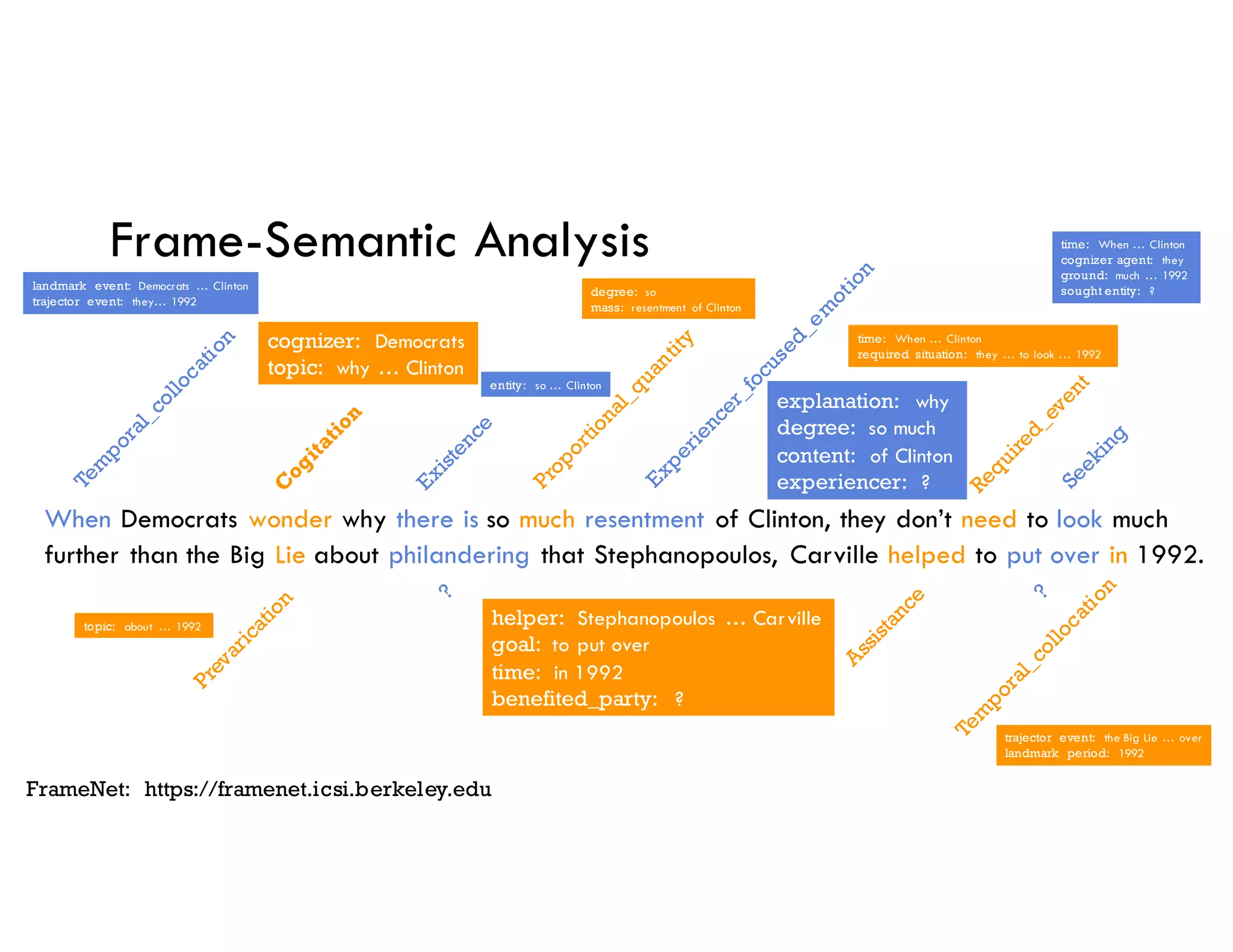

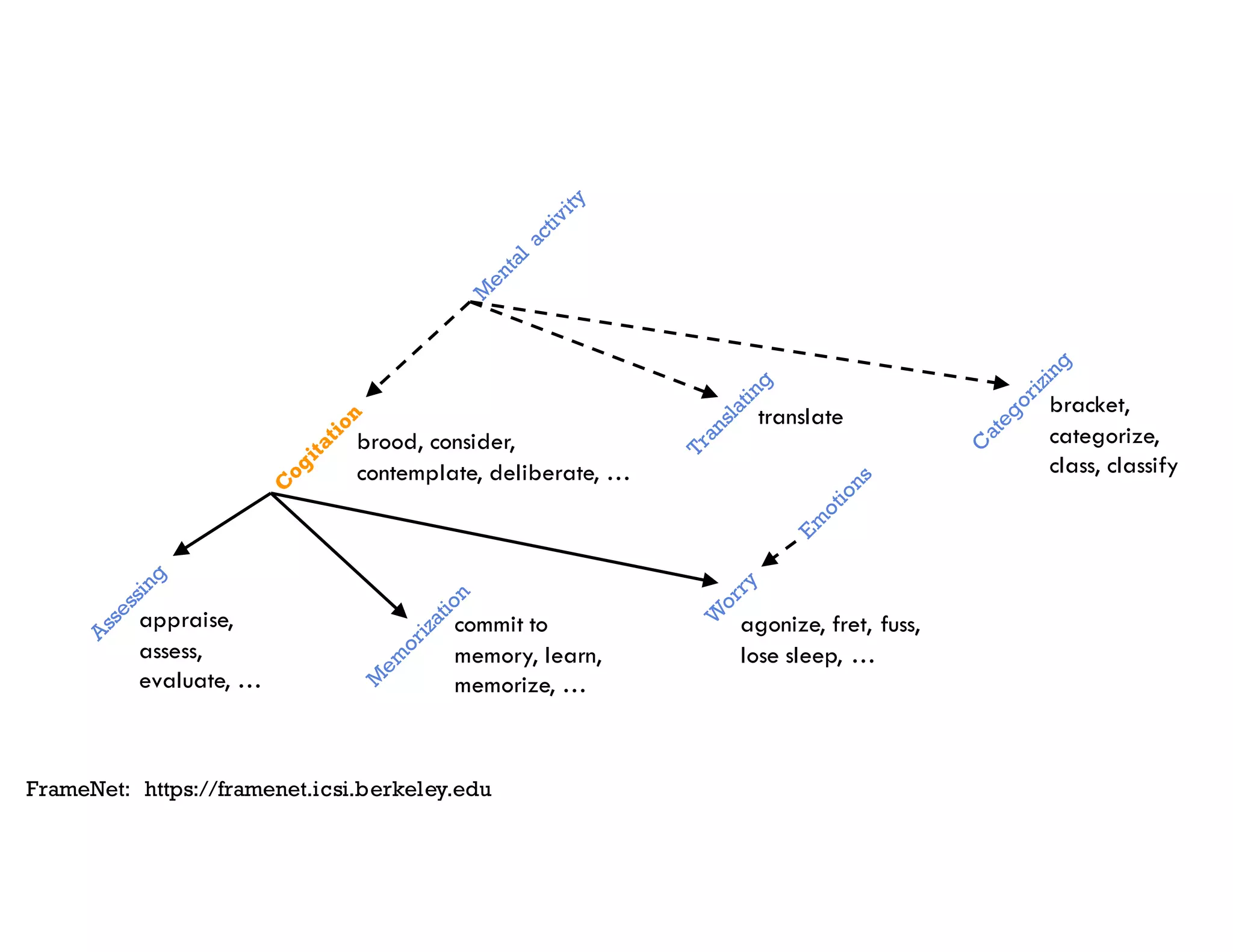

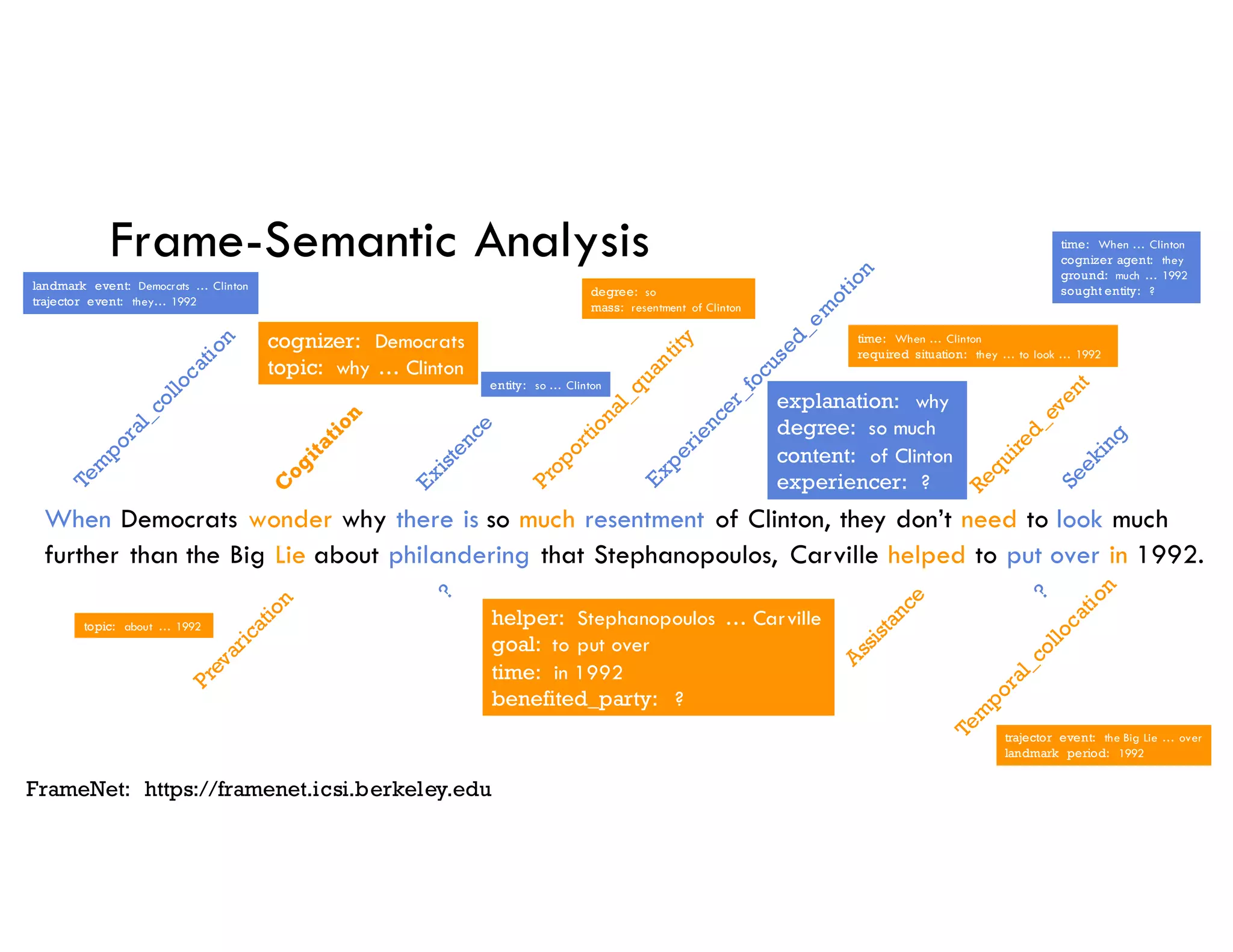

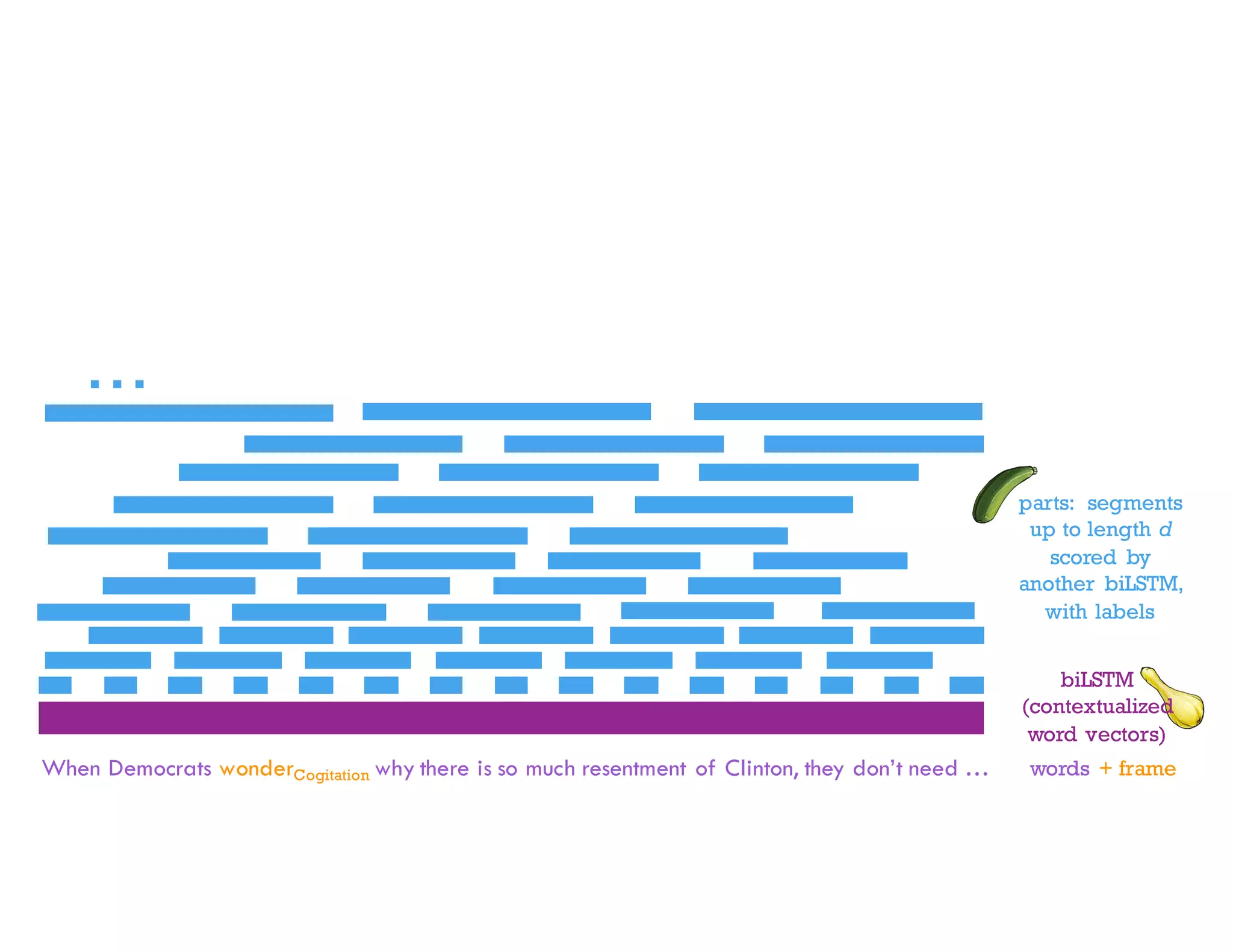

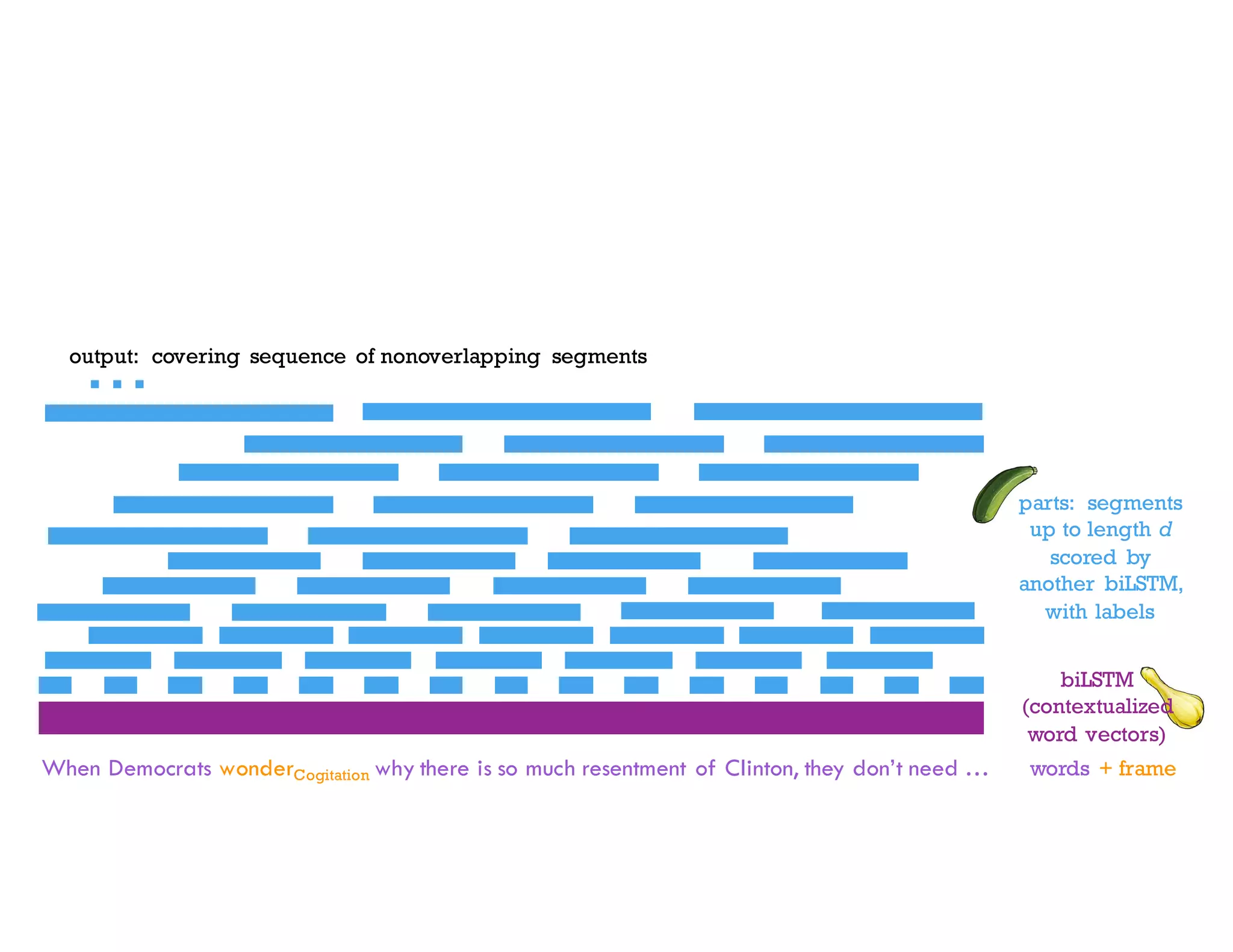

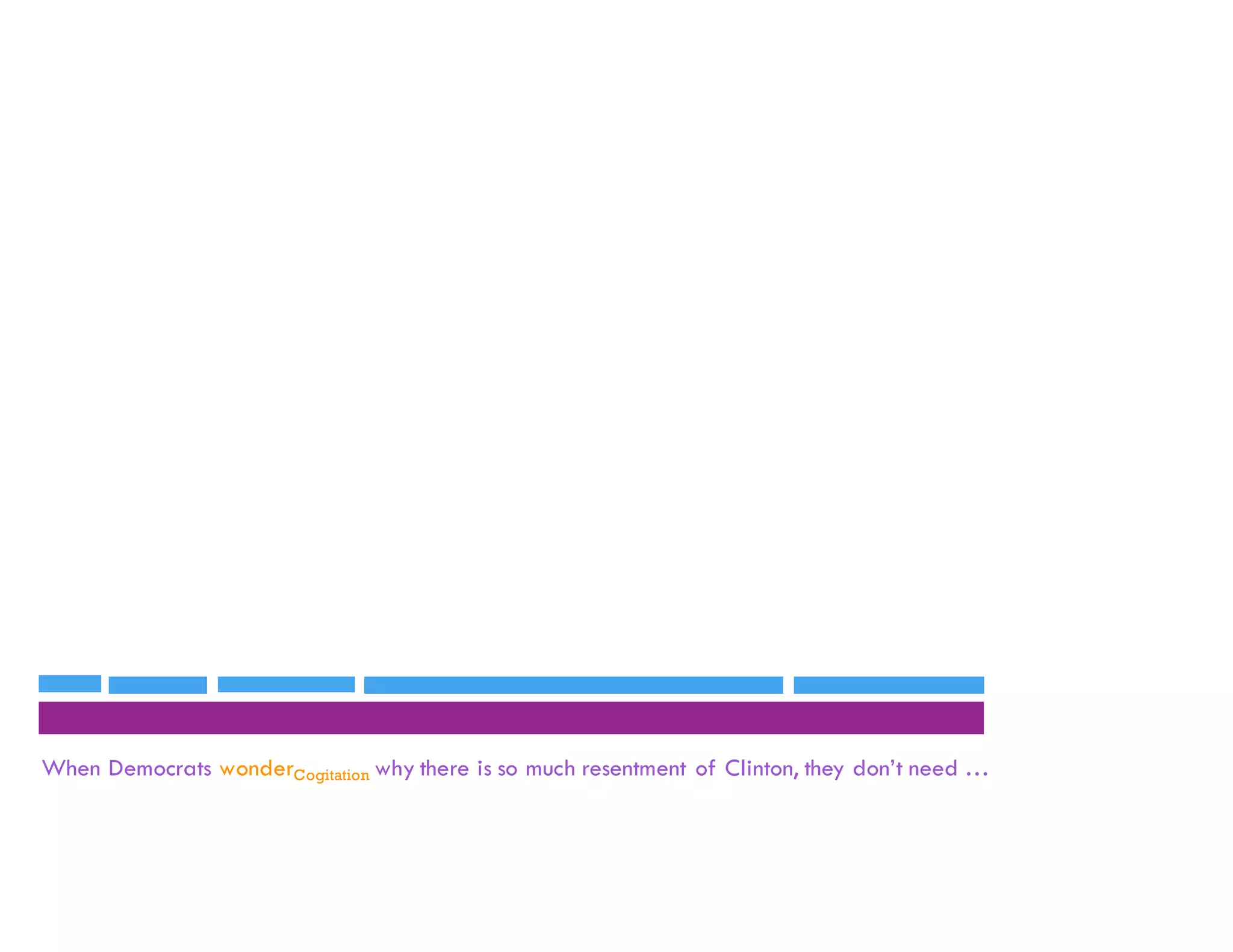

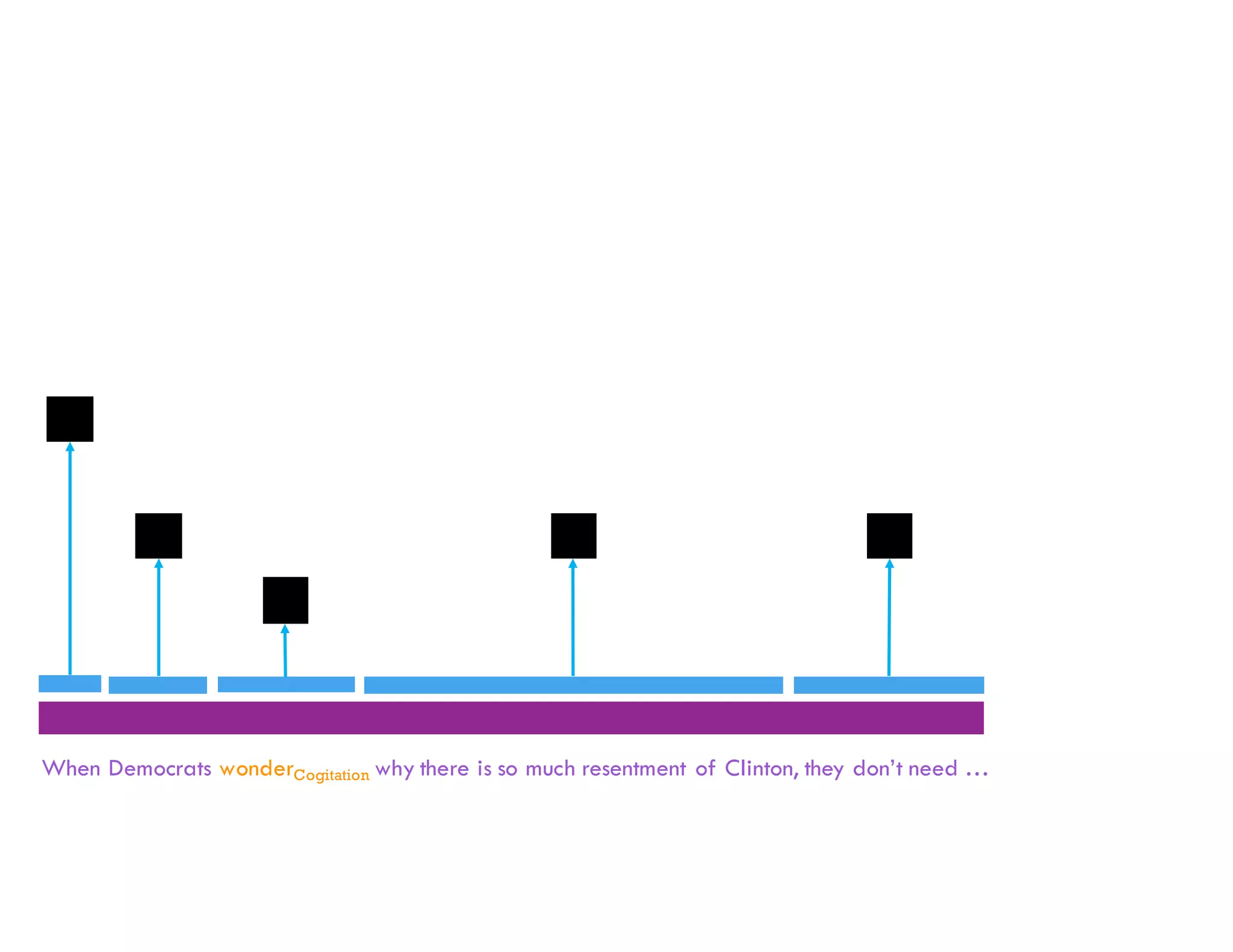

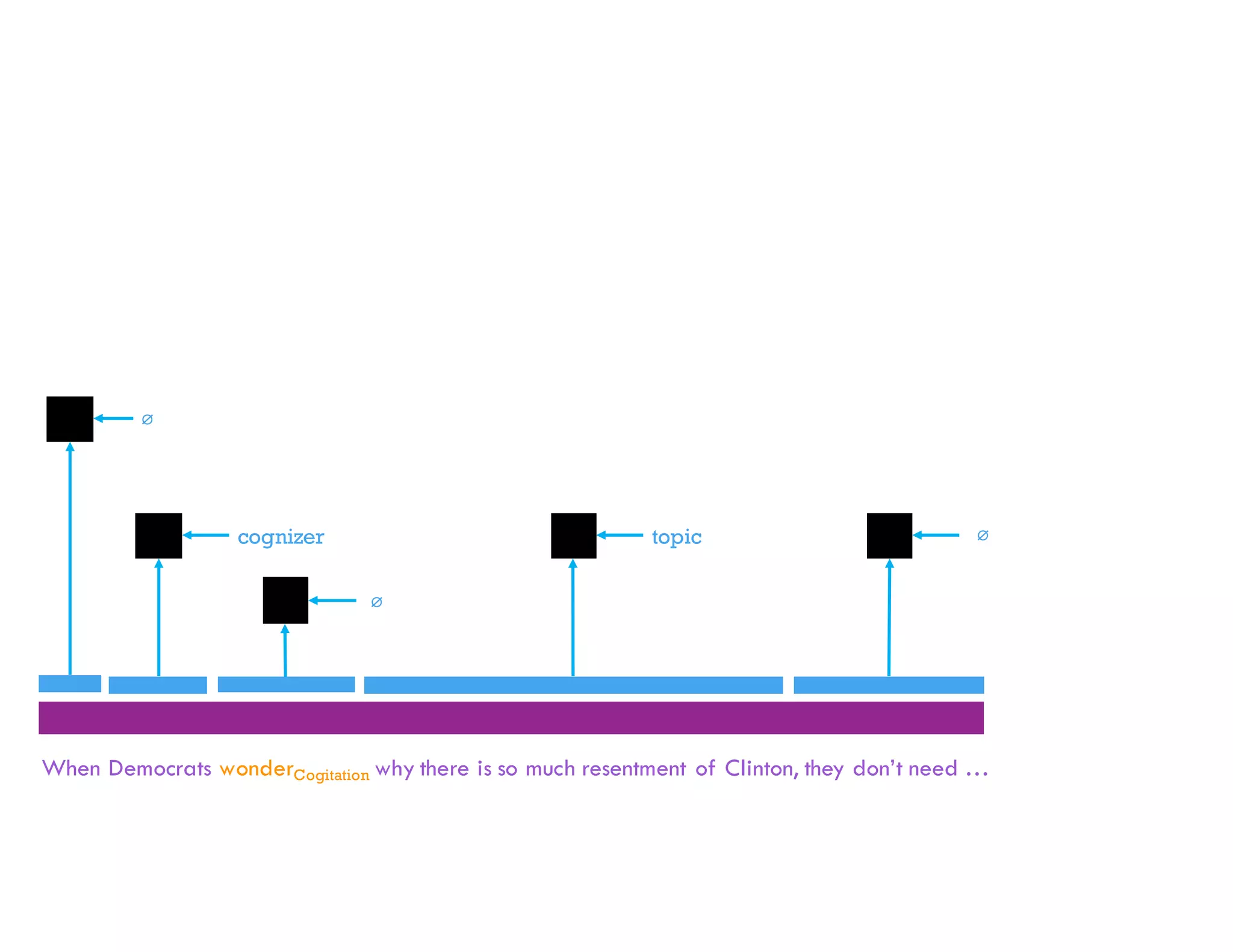

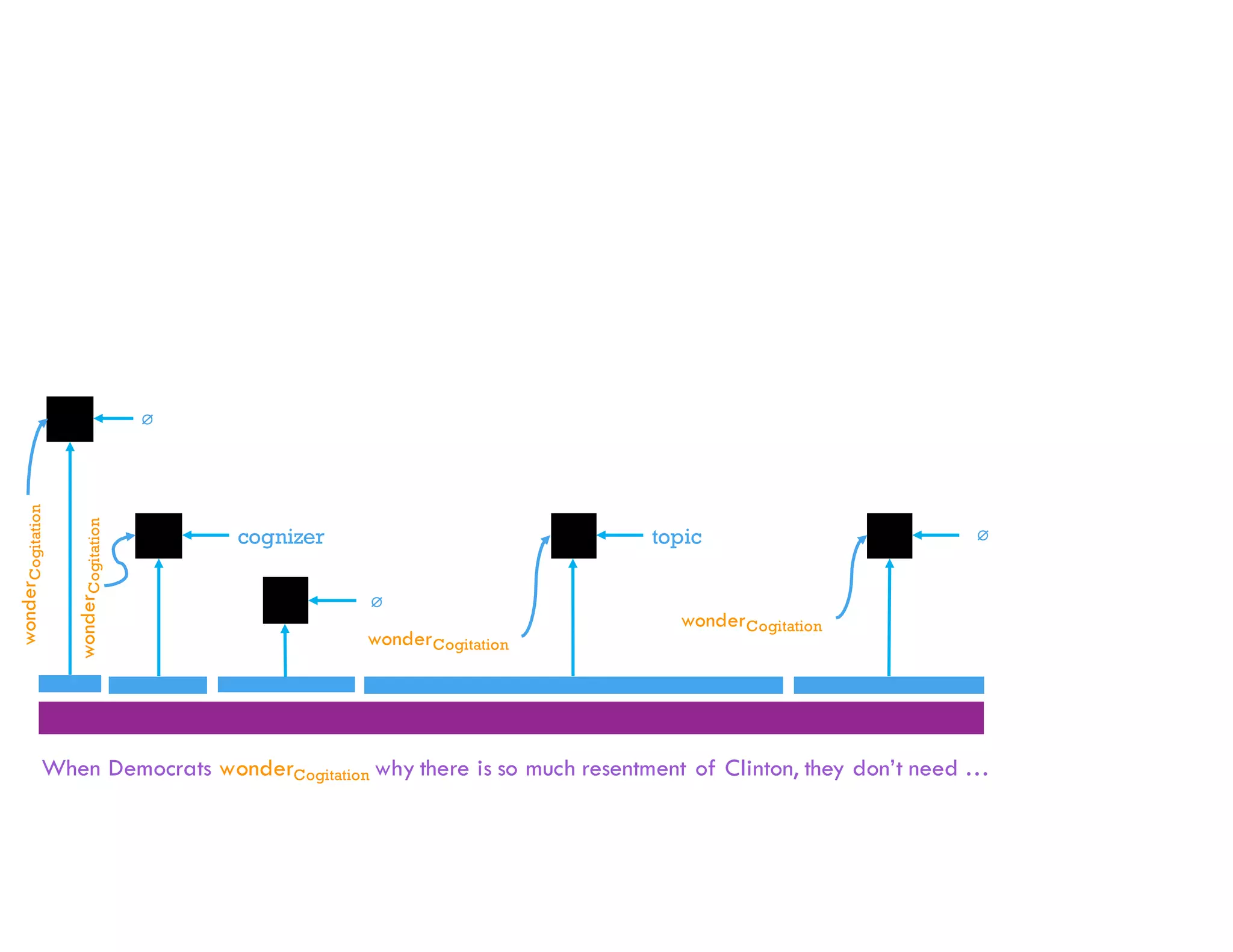

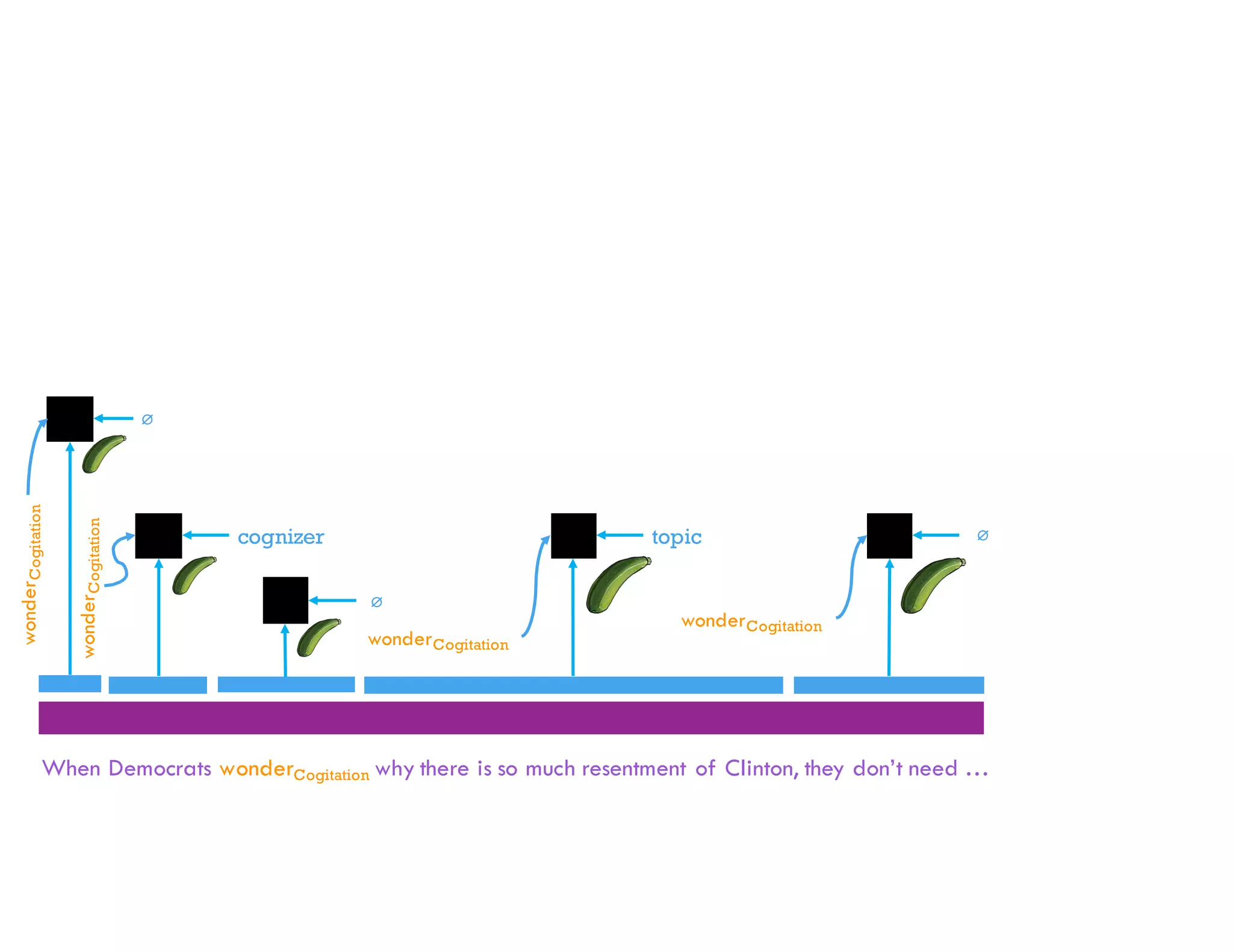

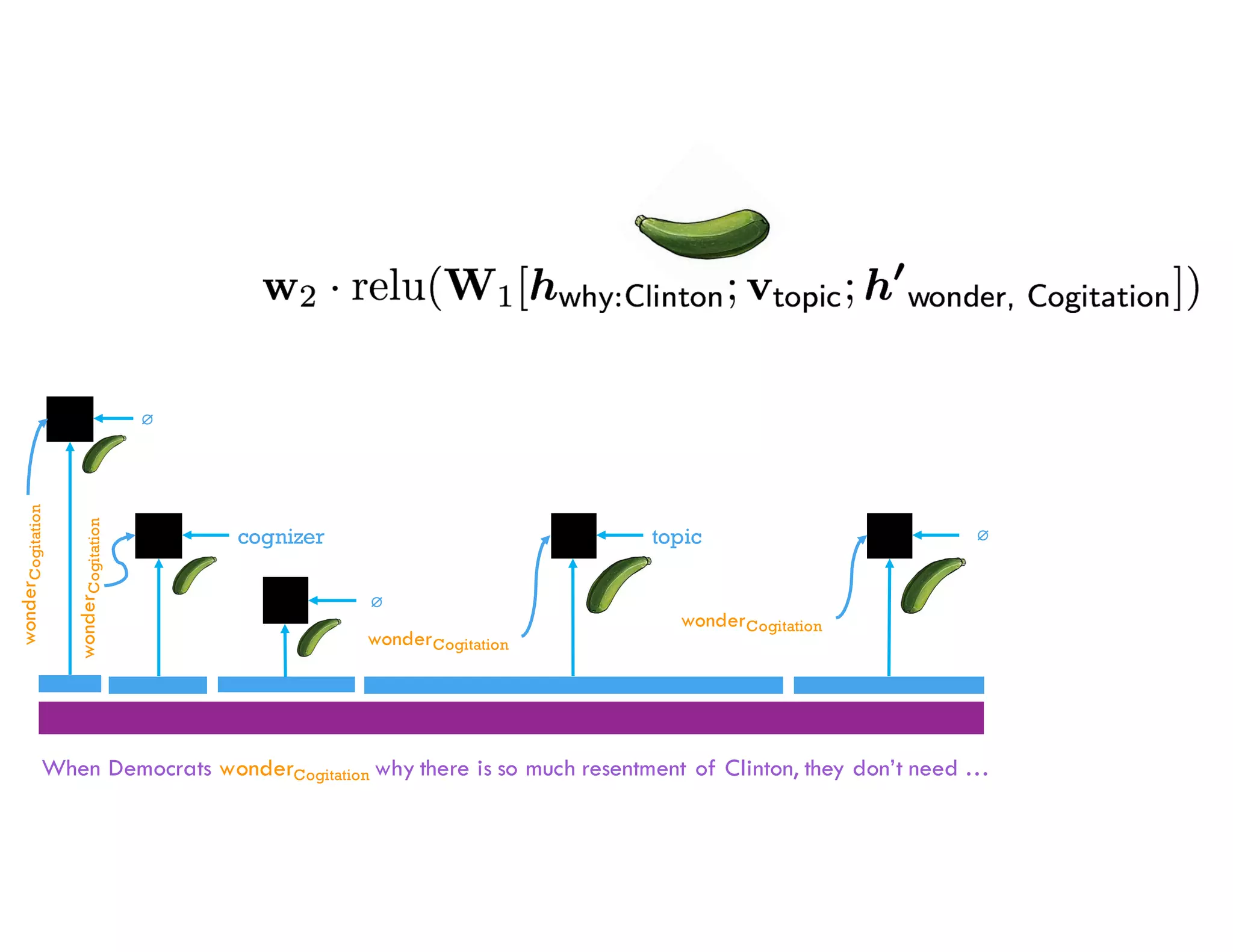

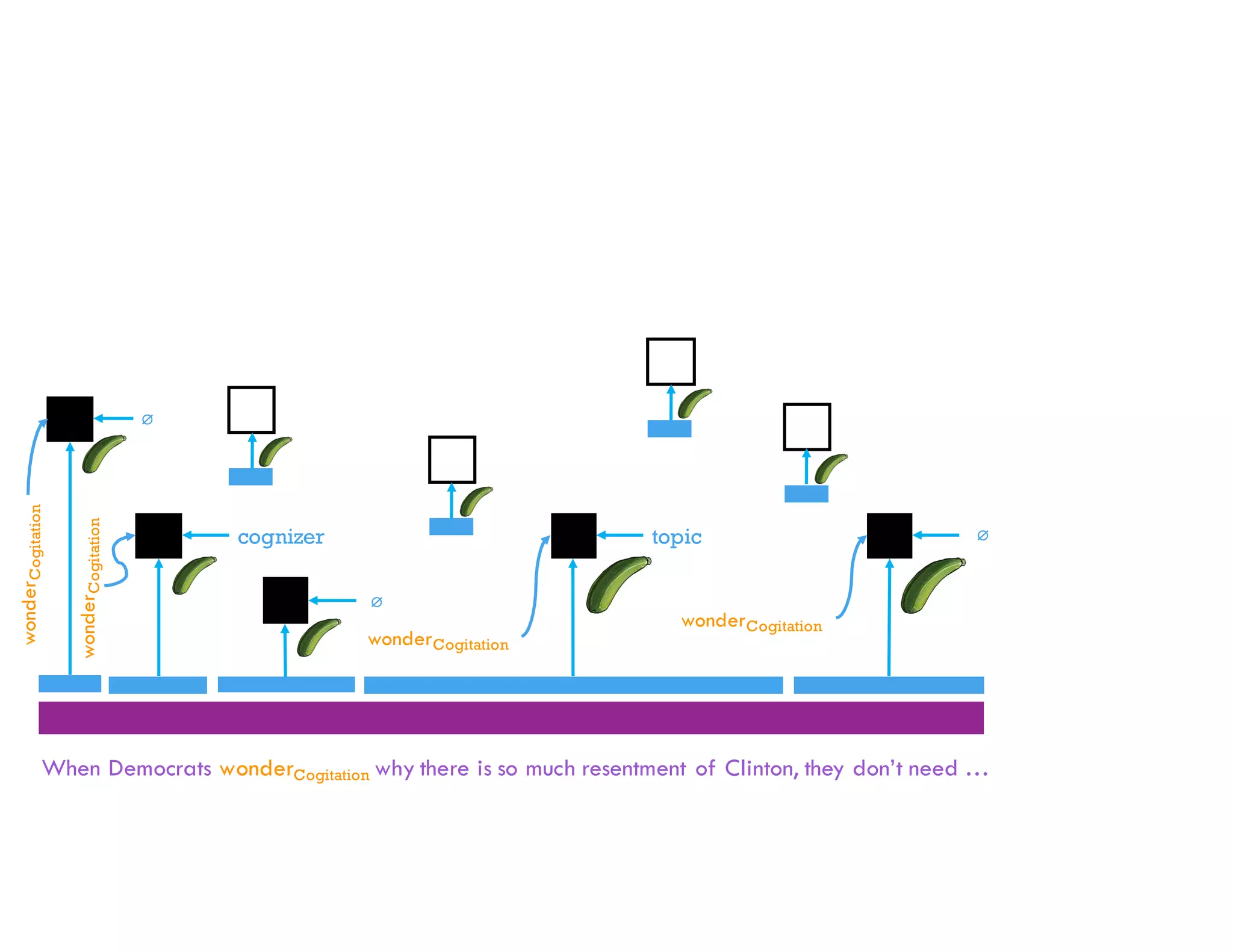

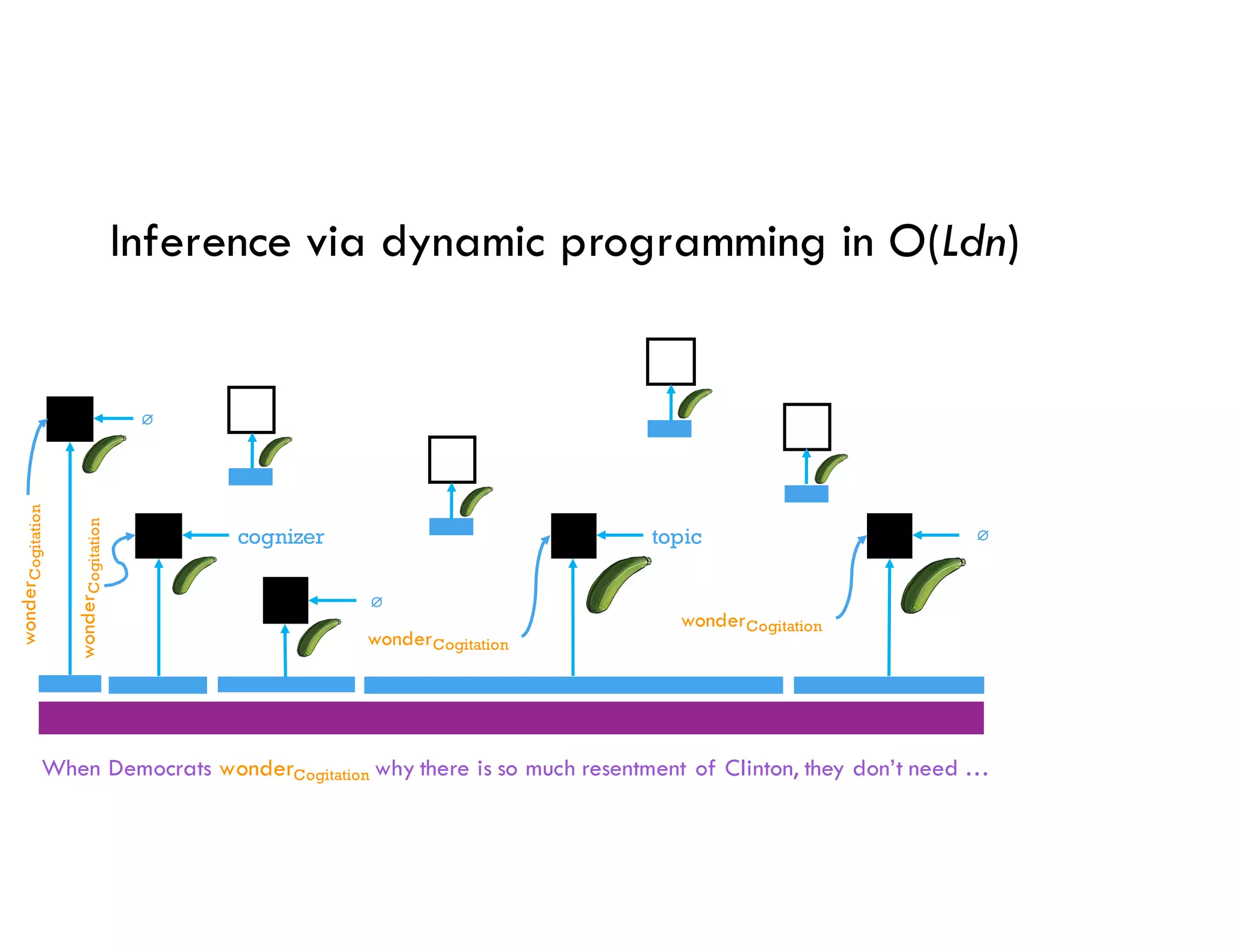

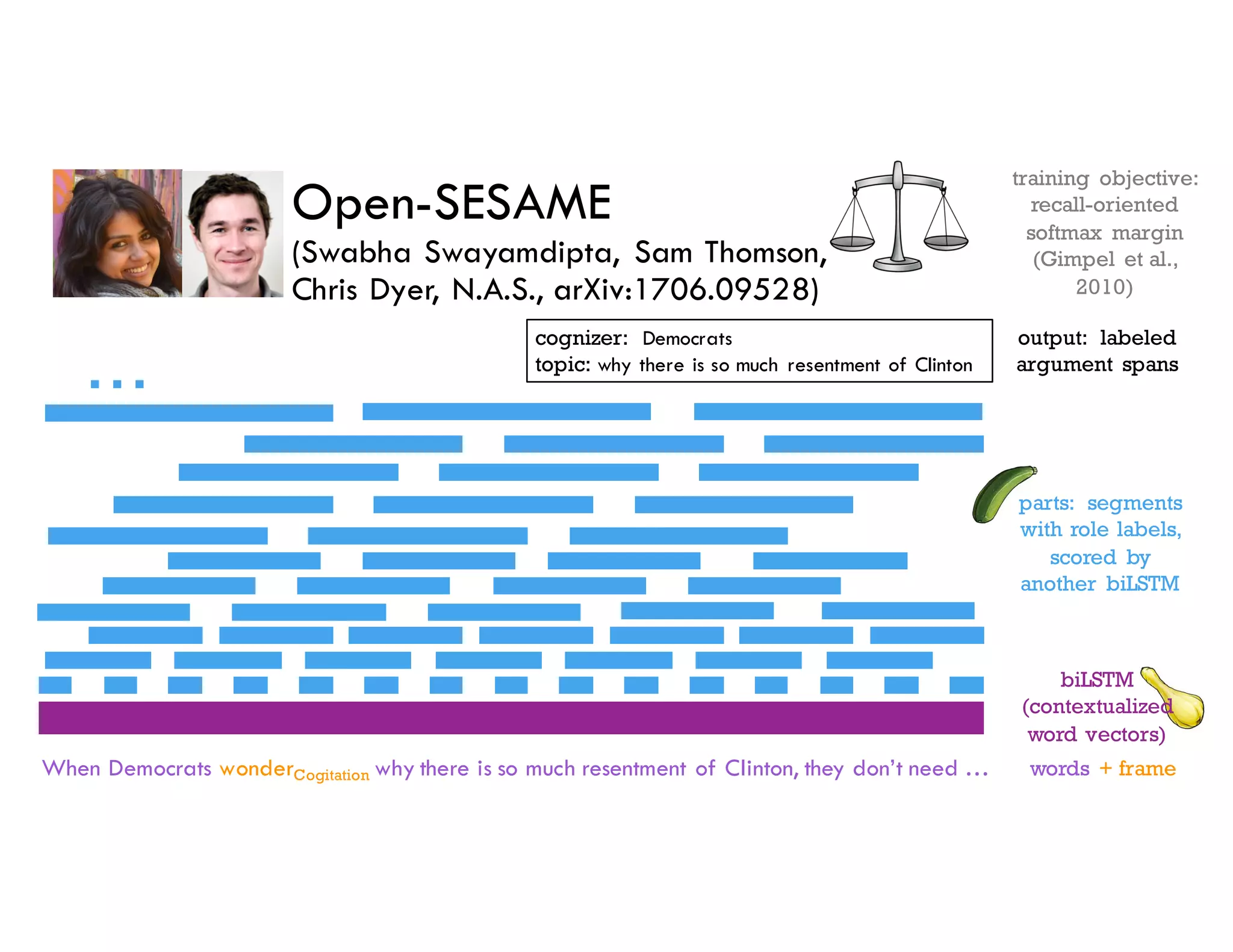

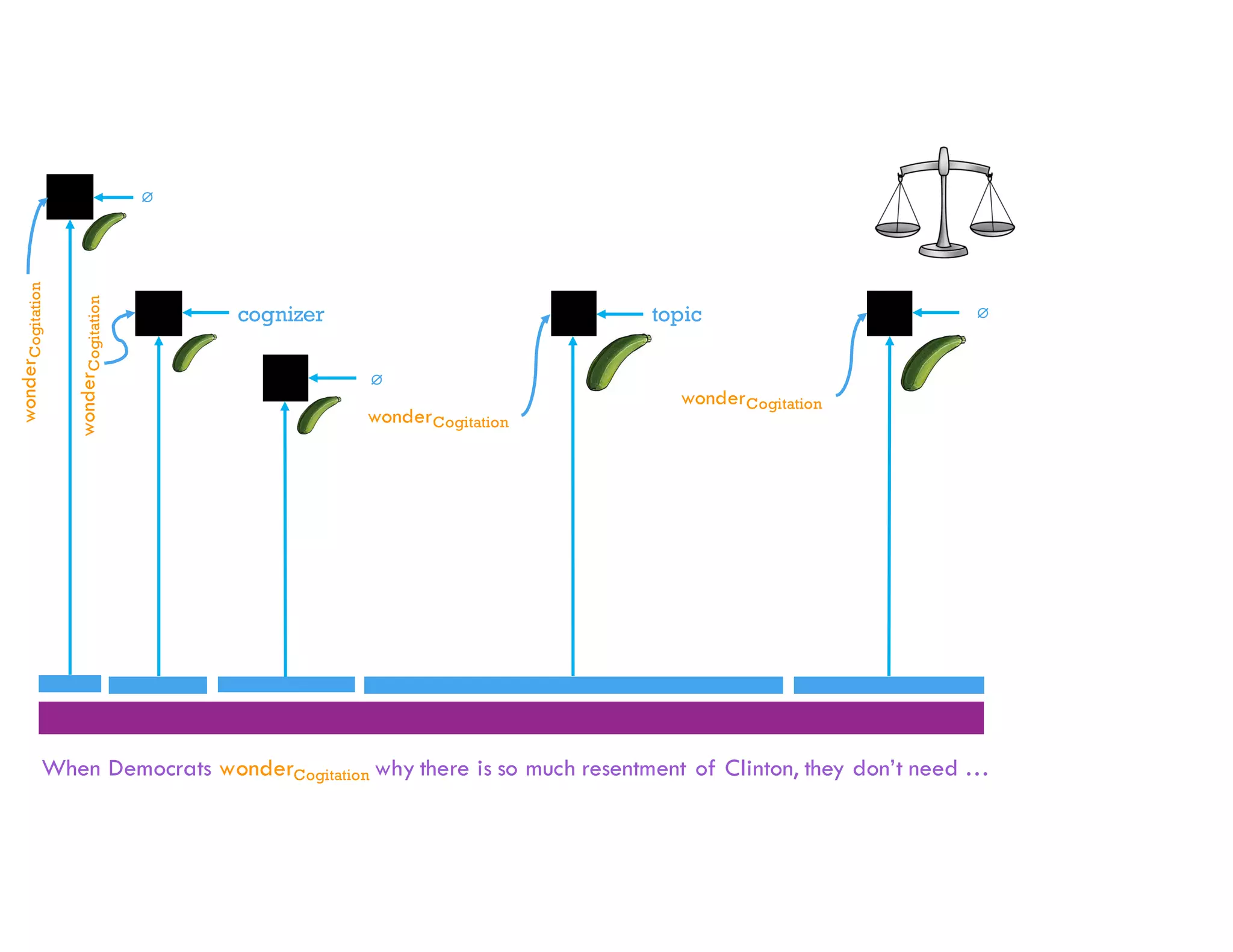

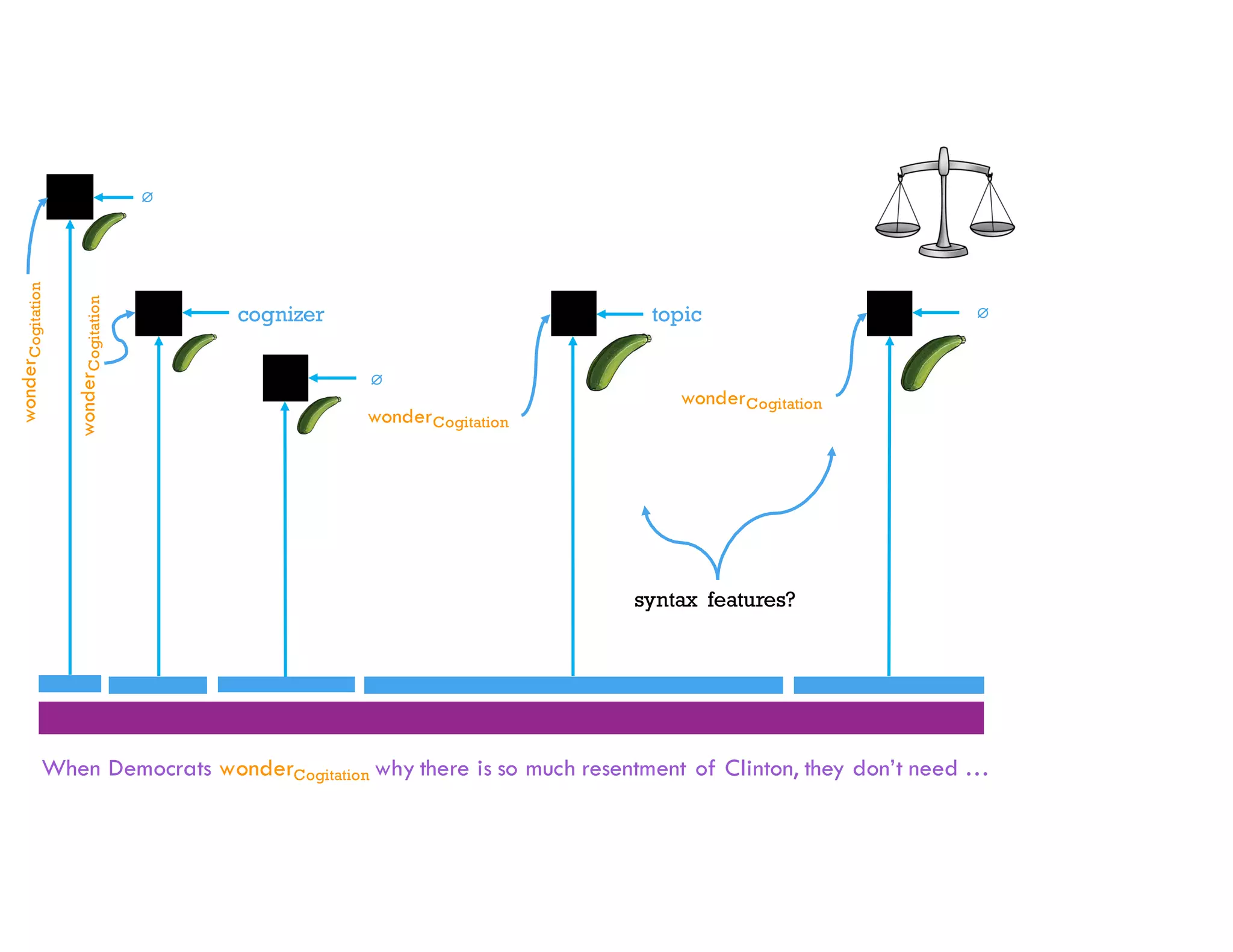

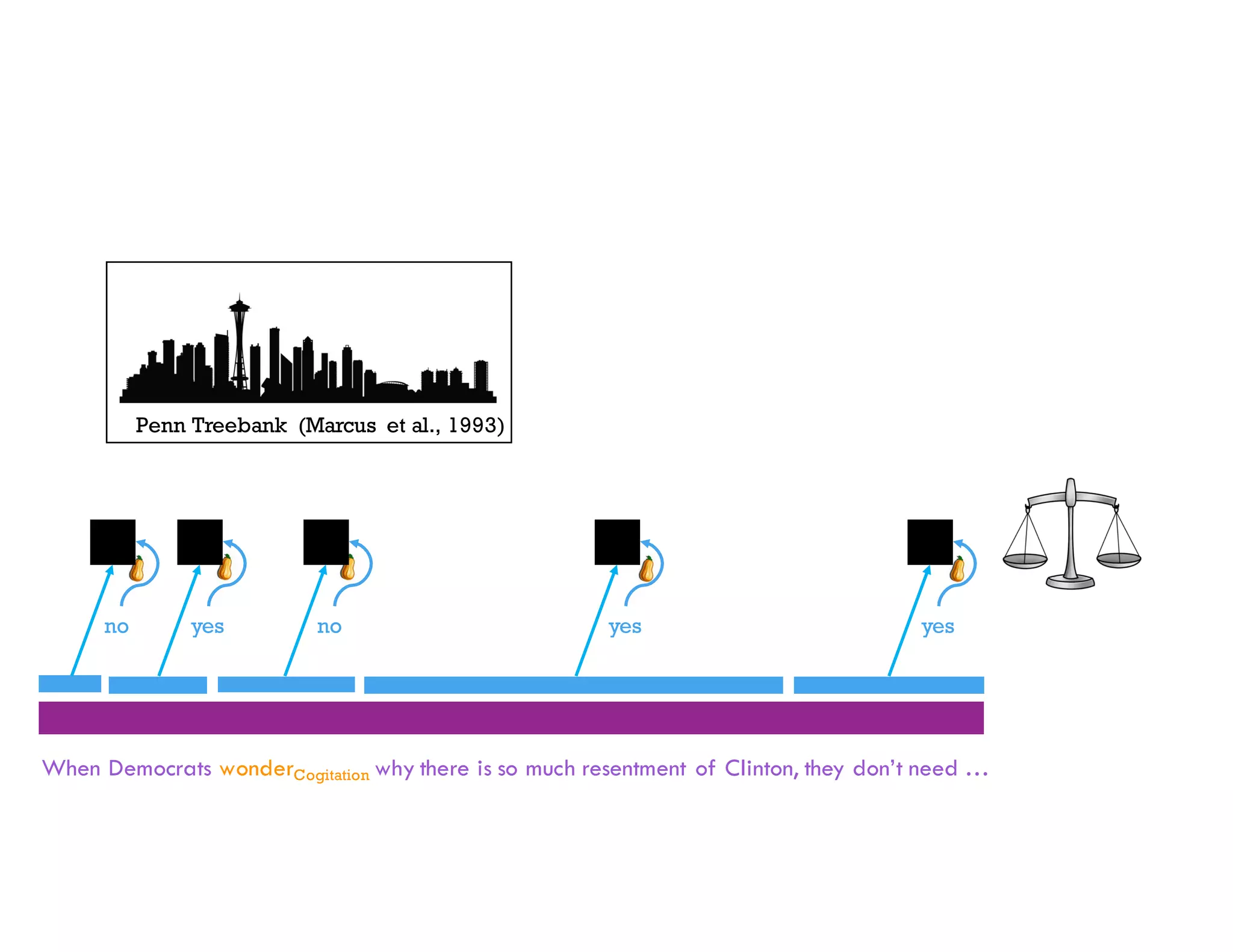

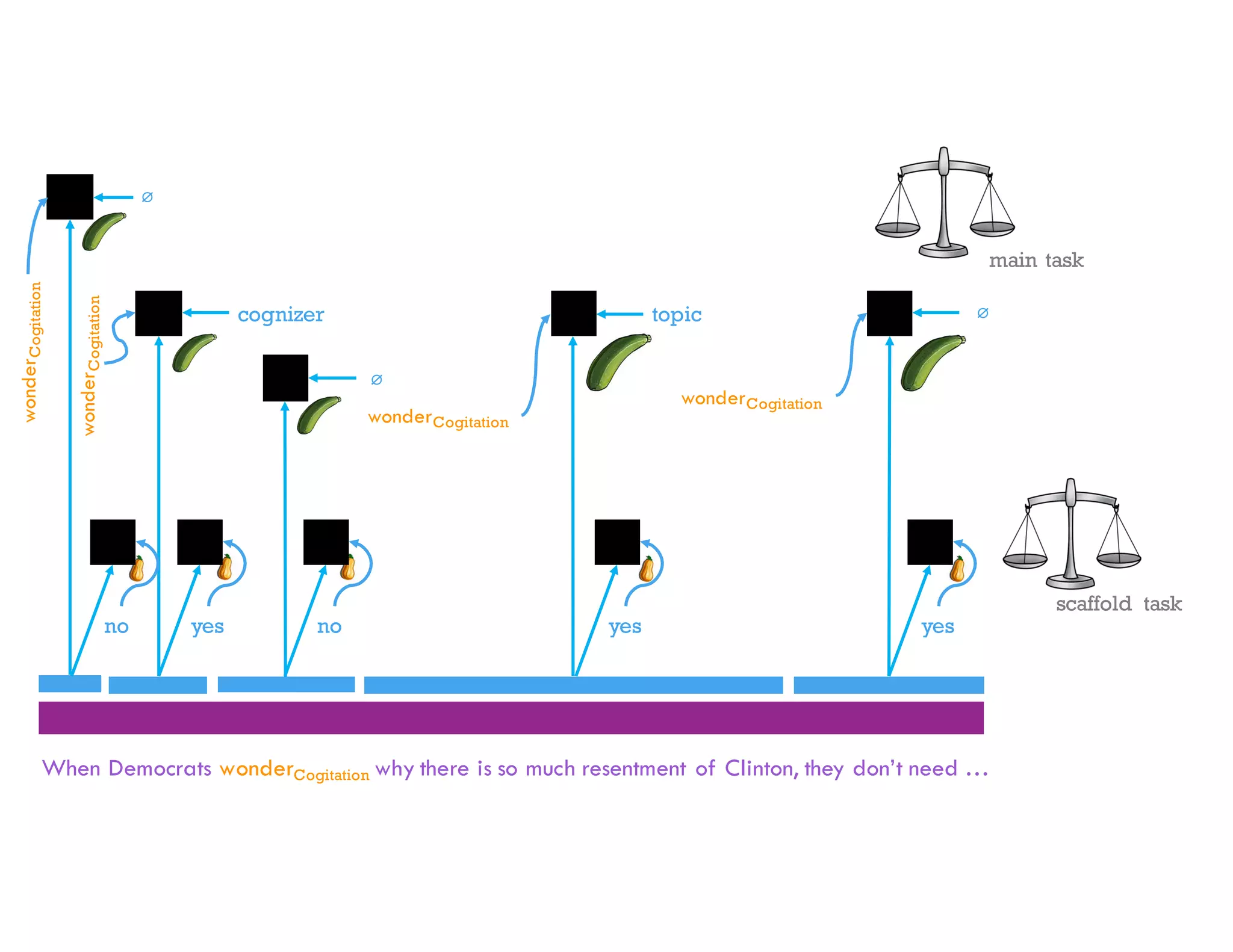

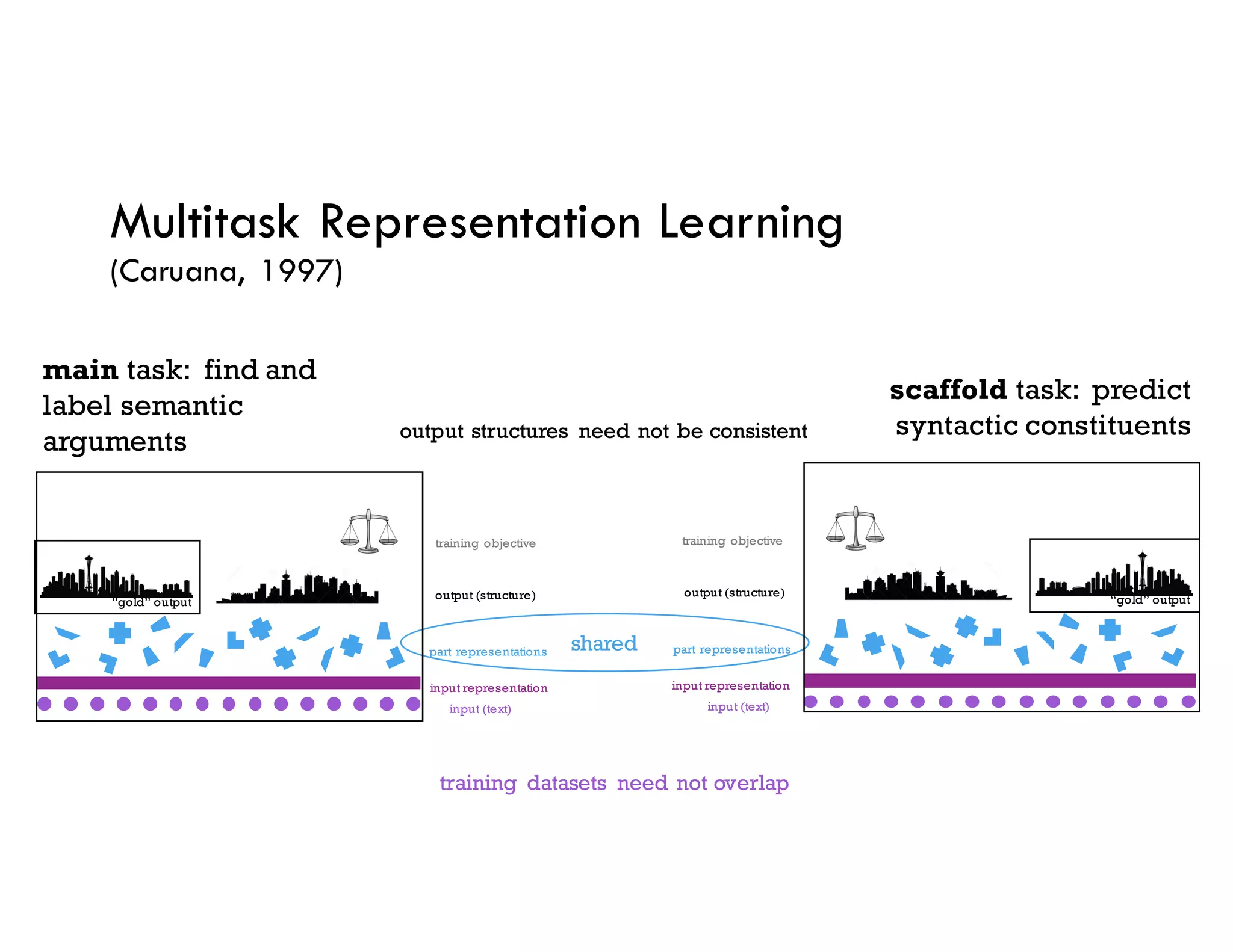

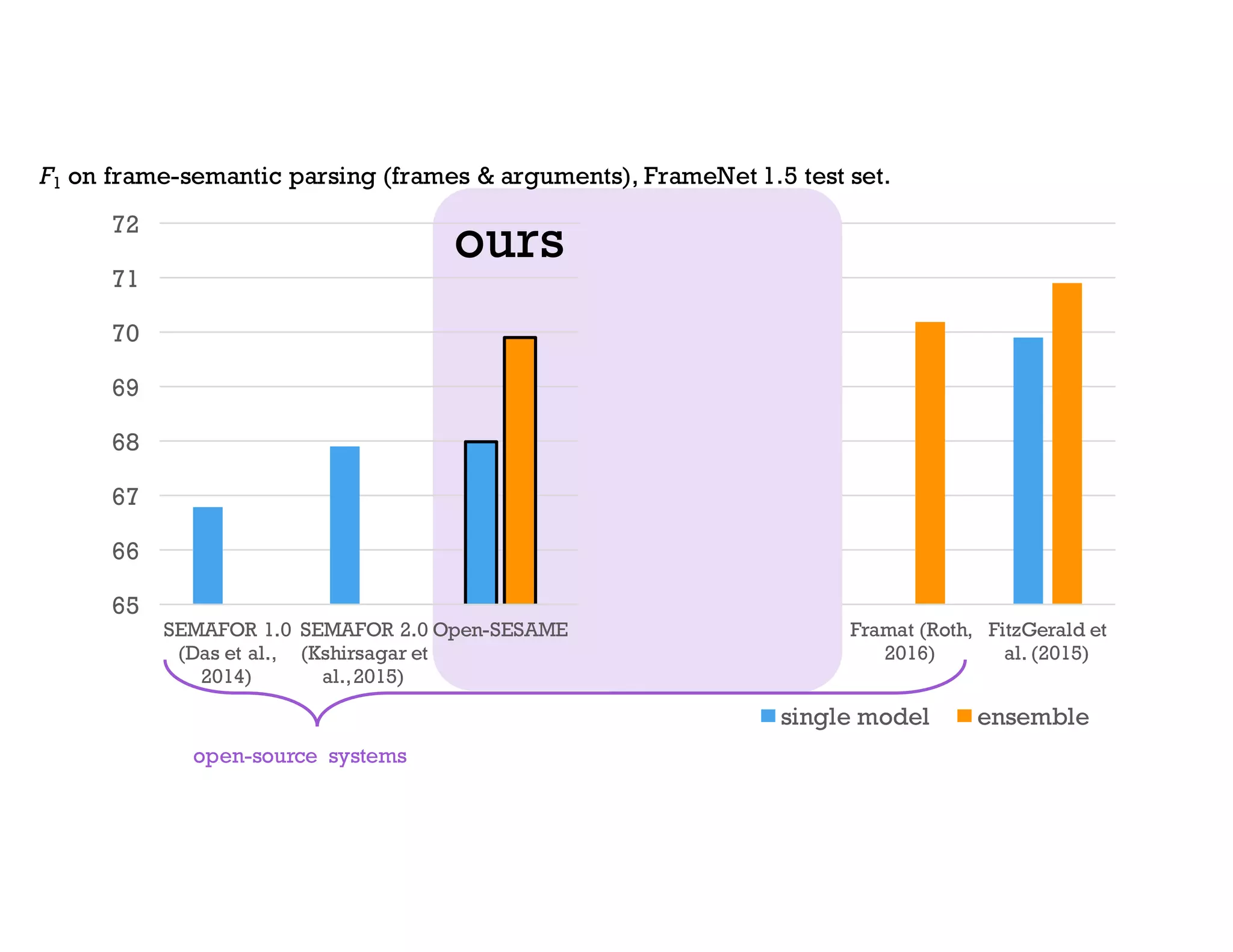

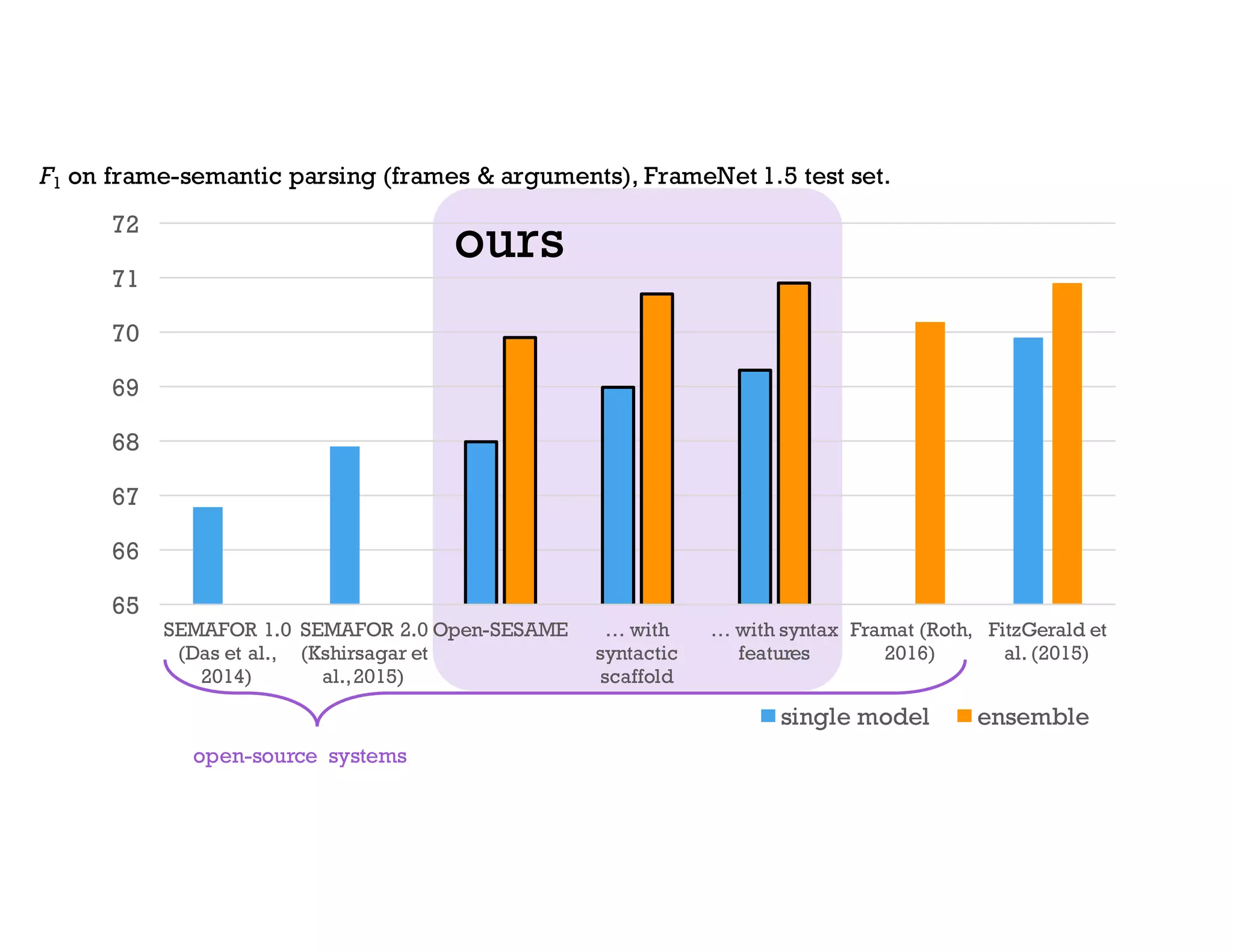

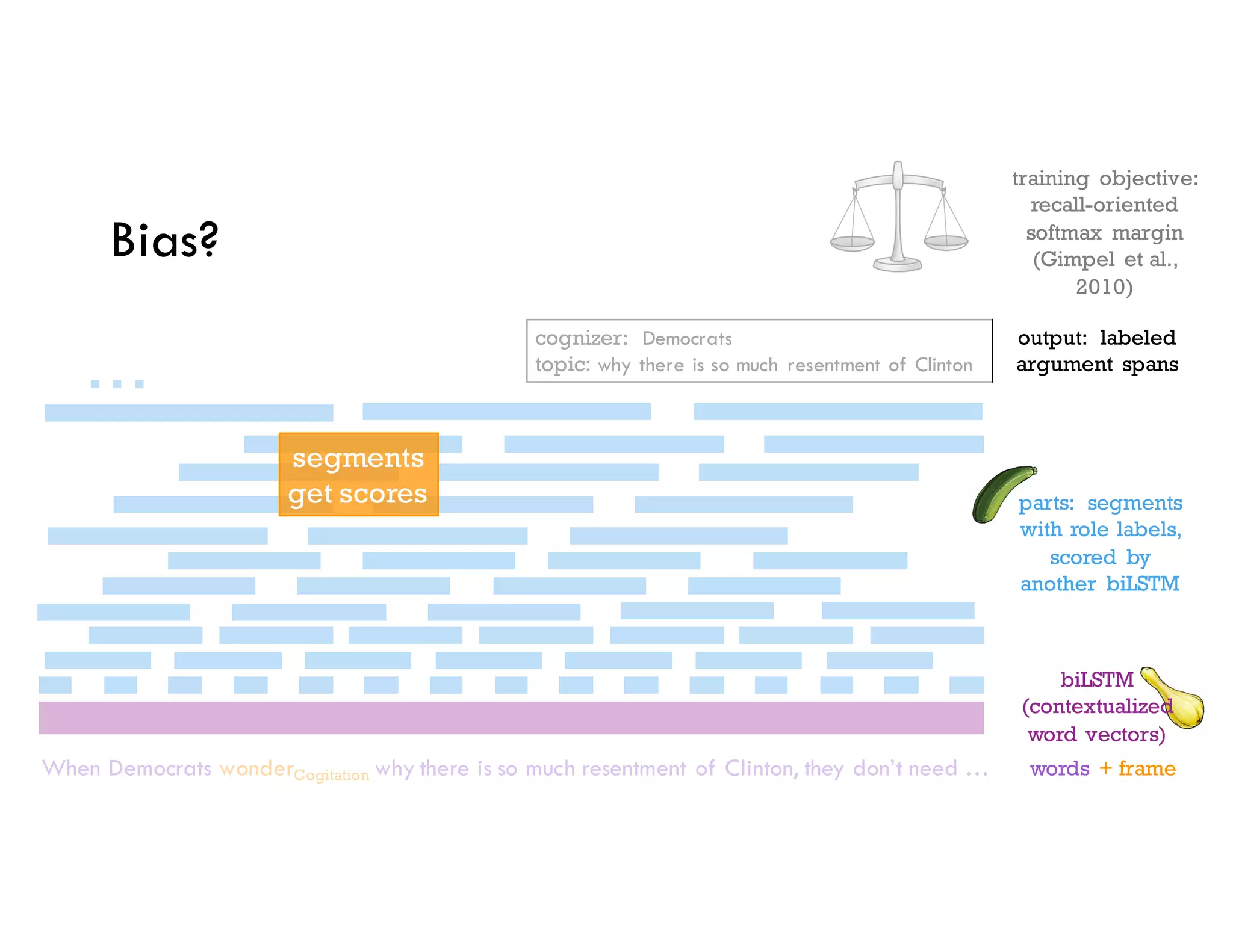

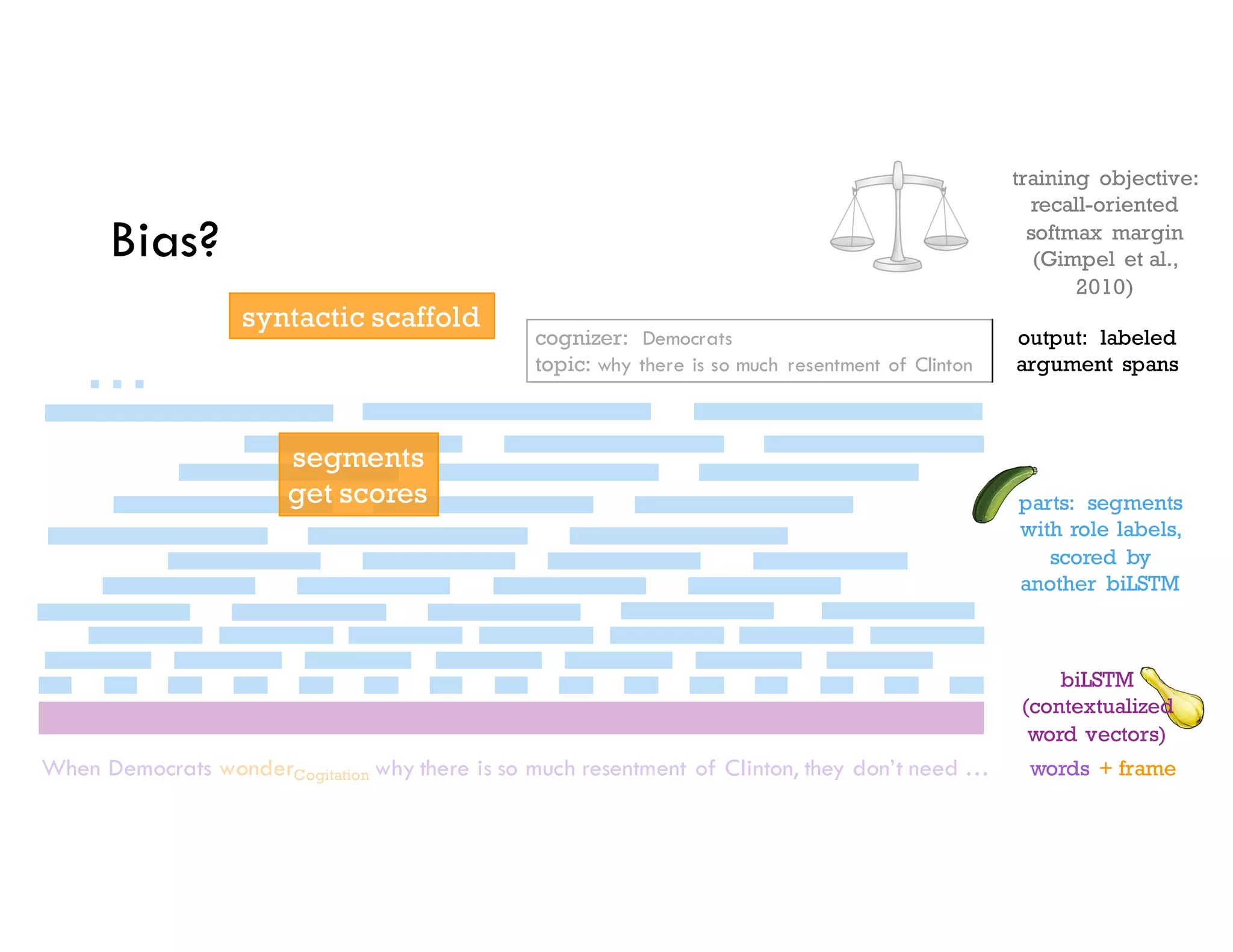

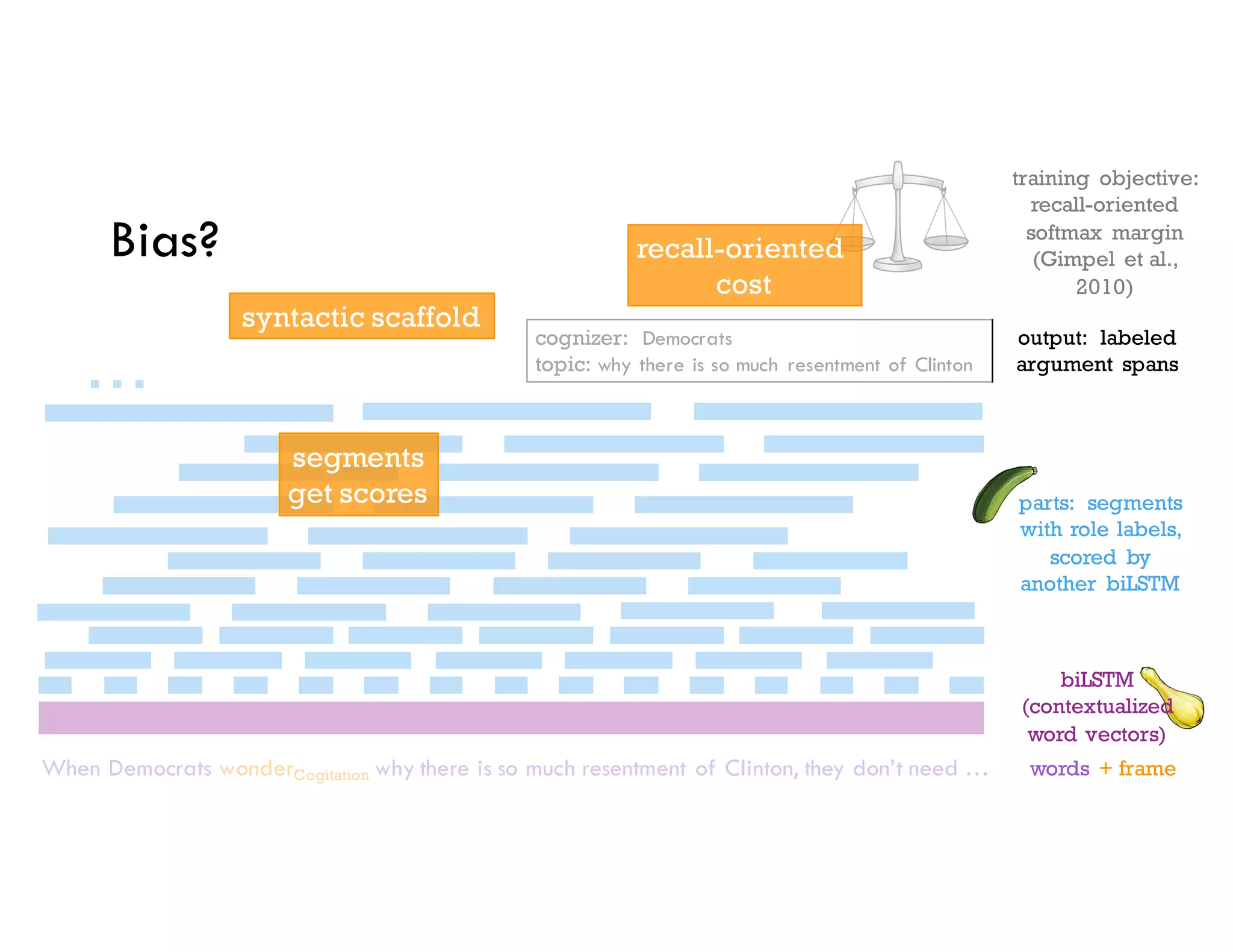

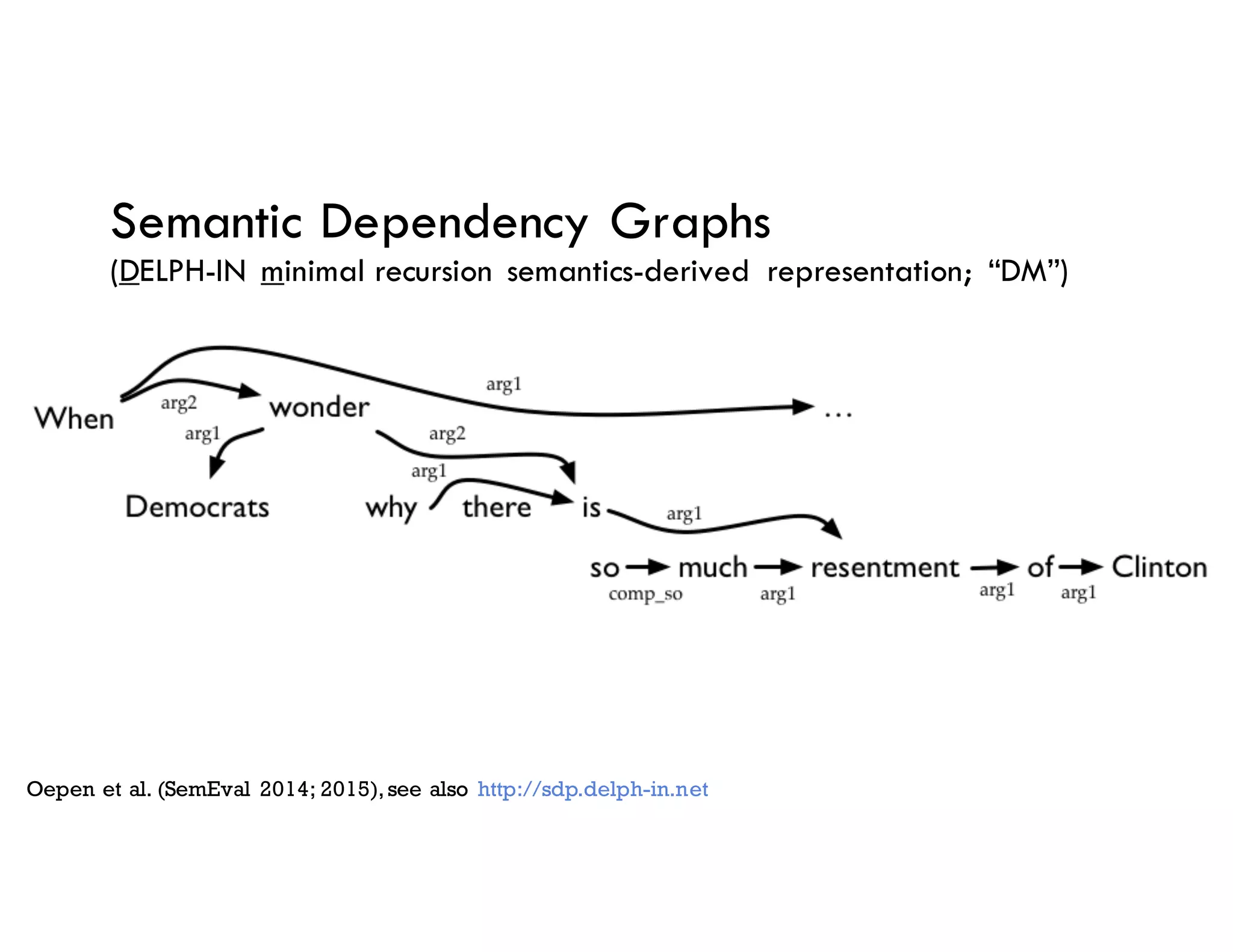

The talk surveys advances in natural language processing (NLP) and their applications, including conversation analysis, summarization, and machine-in-the-loop tools for authors. It presents new models for parsing sentences and analyzes linguistic structure with frame-semantic analysis and contextualized word vectors. It also examines challenges in NLP related to data bias and learning algorithms, along with opportunities for multitask learning.

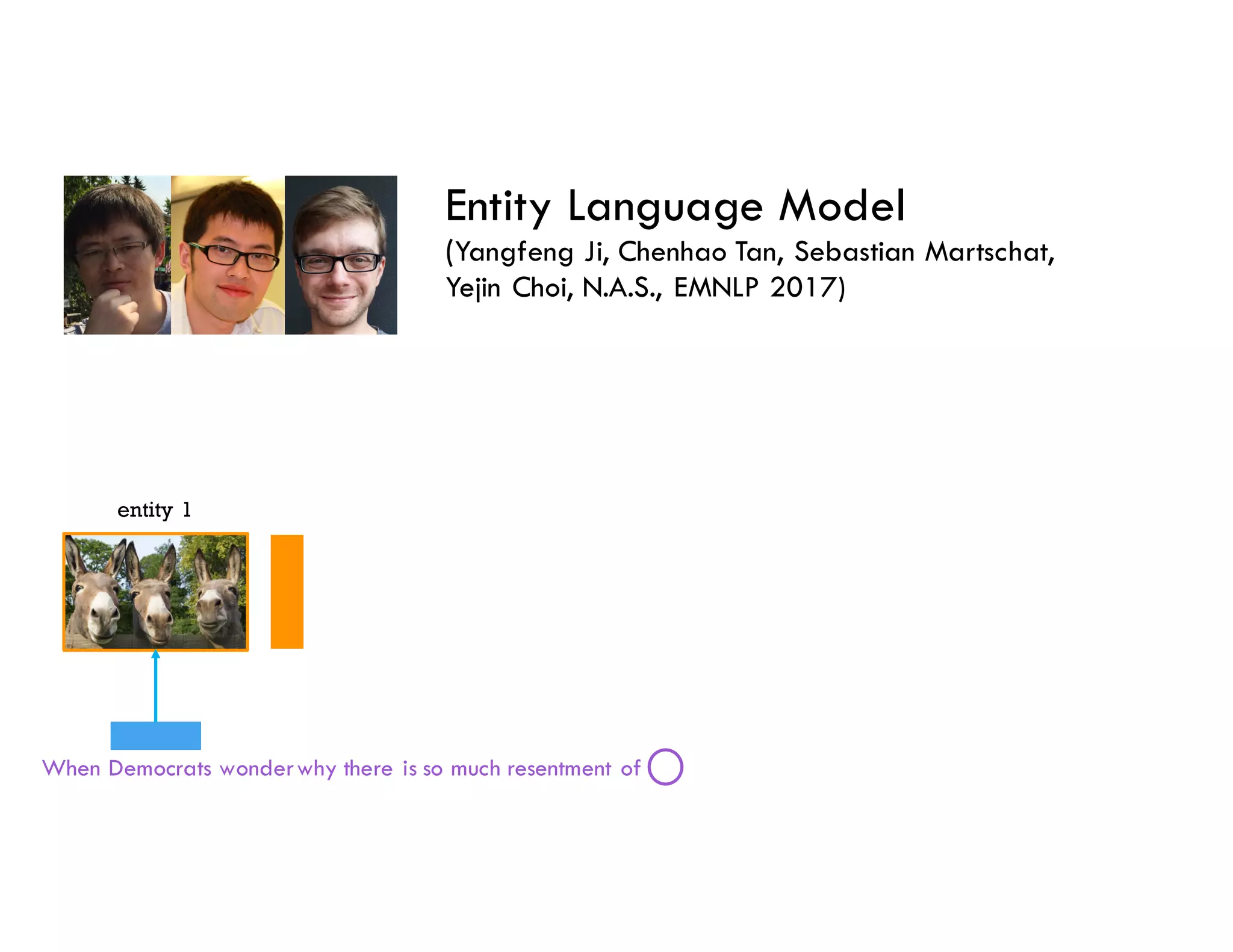

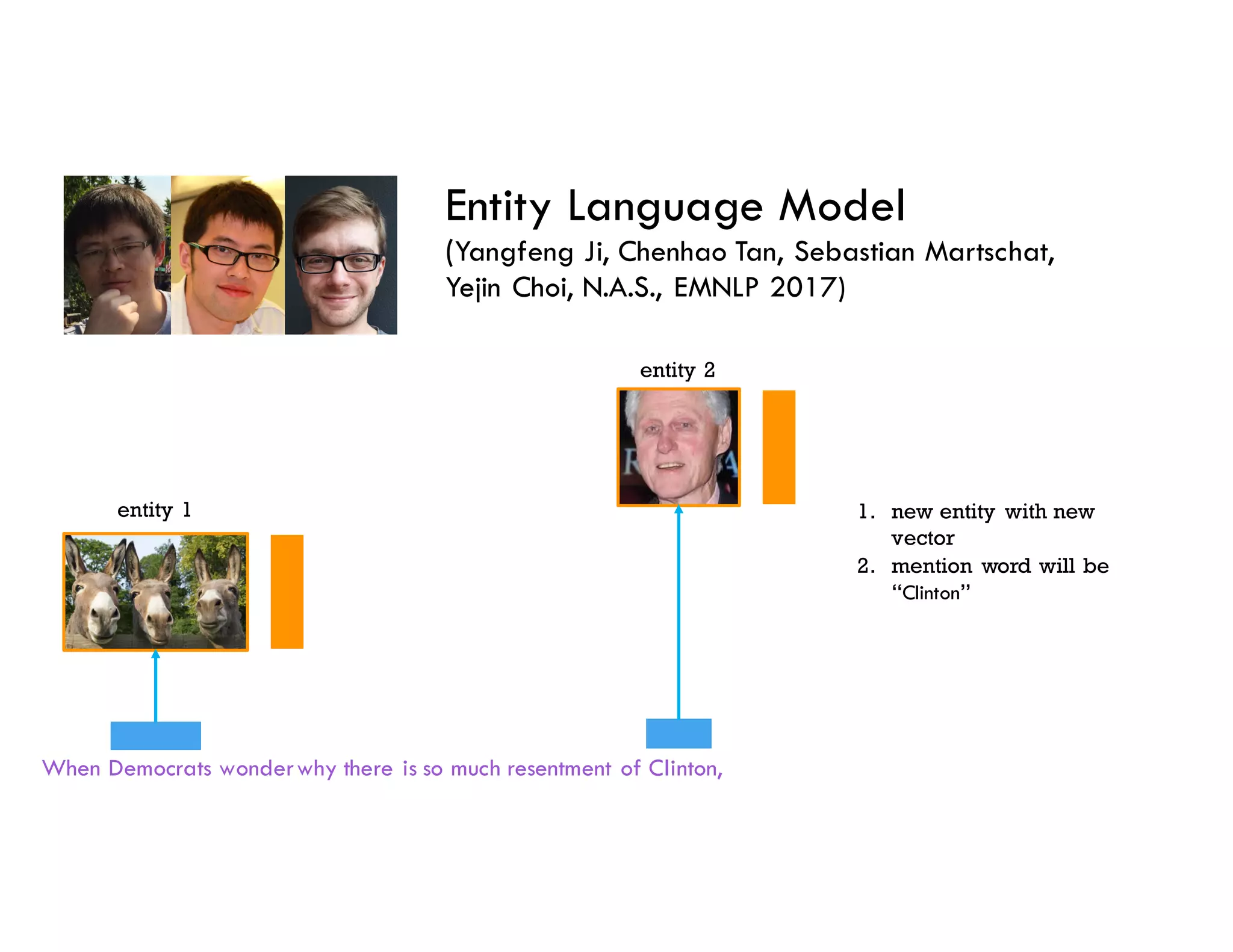

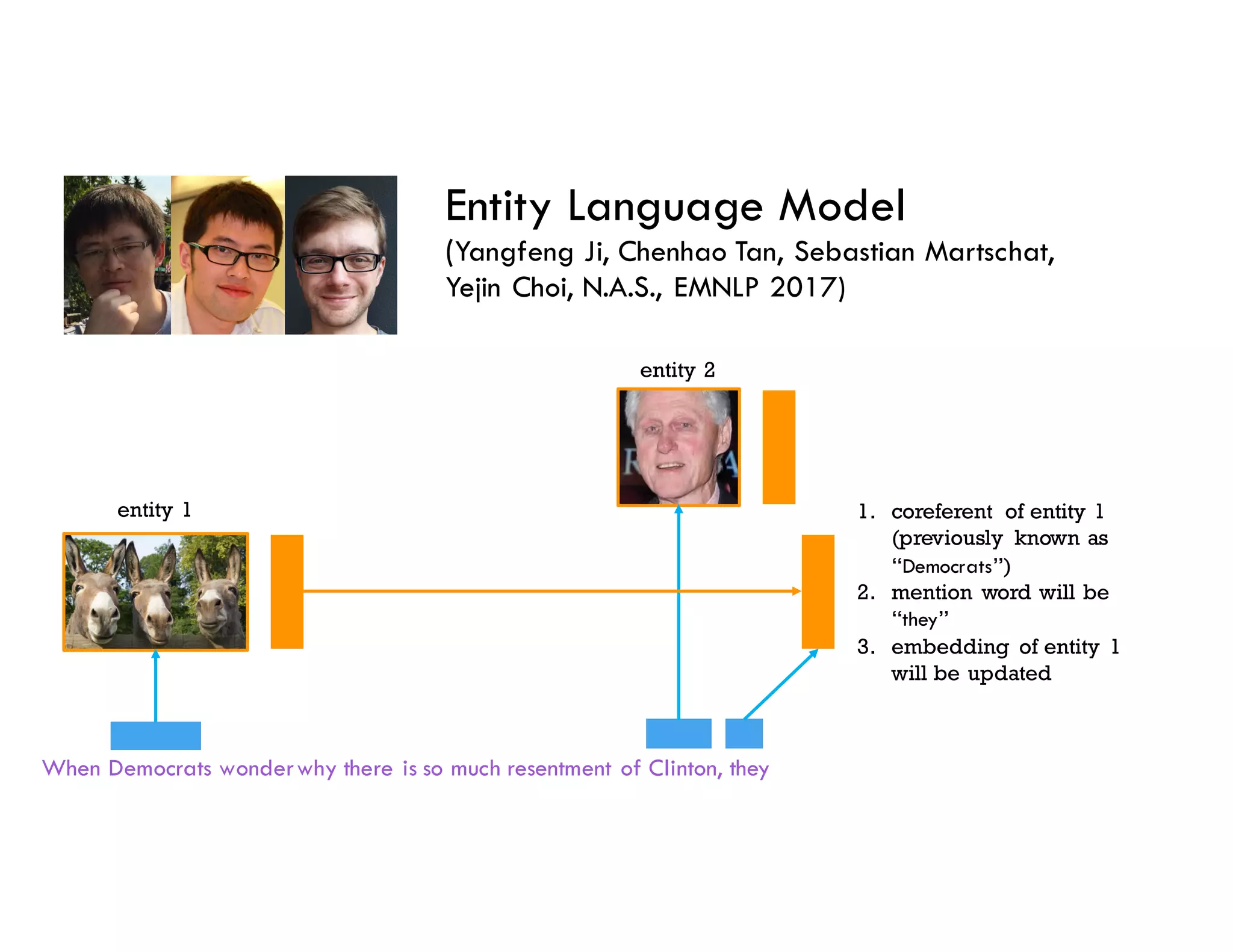

![When Democrats wonder why there is so much resentment of Clinton, they don’t need … | Democrats wonder | arg1 | tanh(C[h_wonder; h_Democrats] + b) · arg1](https://image.slidesharecdn.com/acl-8-1-17-170803200524/75/Noah-A-Smith-2017-Invited-Keynote-Squashing-Computational-Linguistics-59-2048.jpg)
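
The expression on this slide reads as a score for a labeled arc between two words: the hidden vectors for "wonder" and "Democrats" are concatenated, passed through an affine map and a tanh, and the result is dotted with a vector for the label arg1. The following is only a minimal sketch of that arithmetic under stated assumptions: the function names (`affine`, `score_arc`), the variable names, the vector sizes, and the random values are all hypothetical, and nothing here reproduces the trained model from the talk.

```python
import math
import random


def affine(C, x, b):
    """Return C @ x + b, with C given as a list of rows."""
    return [sum(c_ij * x_j for c_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(C, b)]


def score_arc(C, b, h_head, h_dep, label_vec):
    """Compute tanh(C [h_head; h_dep] + b) . label_vec."""
    concat = h_head + h_dep  # list concatenation plays the role of [h_head; h_dep]
    hidden = [math.tanh(v) for v in affine(C, concat, b)]
    return sum(h * l for h, l in zip(hidden, label_vec))


random.seed(0)
d, k = 3, 4  # assumed sizes: word-vector dimension and hidden dimension

# Stand-ins for contextual word vectors; a real system would compute these.
h_wonder = [random.uniform(-1, 1) for _ in range(d)]
h_democrats = [random.uniform(-1, 1) for _ in range(d)]

# Assumed parameters: C is k x 2d, b has length k, and the label vector has length k.
C = [[random.uniform(-1, 1) for _ in range(2 * d)] for _ in range(k)]
b = [0.0] * k
label_arg1 = [random.uniform(-1, 1) for _ in range(k)]

score = score_arc(C, b, h_wonder, h_democrats, label_arg1)
print(score)
```

Because each tanh output lies in (-1, 1), the score is bounded in magnitude by the sum of the absolute values of the label vector's entries, which is presumably part of what the talk's title means by "squashing".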