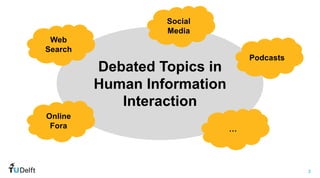

1. The document presents a novel approach to representing viewpoints in debates using viewpoint labels and an ordinal stance scale from -3 to 3.

2. It describes a dataset of 169 tweets on the topic of pineapple on pizza, each annotated with the new viewpoint labels.

3. Future work discussed includes obtaining viewpoint labels automatically and new methods for analyzing viewpoint diversity in online content.

![Slide 5 (WIS, Web Information Systems): a document's viewpoint label includes a stance on an ordinal scale from -3 (strongly opposing) through 0 (neutral) to 3 (strongly supporting). The example tweet “Pineapple on pizza is the most scandalous thing. It doesn’t taste good, but also it’s just WRONG.” has stance -3. References: Draws et al. (2021); Rieger et al. (2021).](https://image.slidesharecdn.com/chiir2022timdraws-220624060600-6467e27a/85/Comprehensive-Viewpoint-Representations-for-a-Deeper-Understanding-of-User-Interactions-With-Debated-Topics-5-320.jpg)

![Slide 6 (WIS, Web Information Systems): a document's viewpoint label combines a stance (ordinal, from -3 to 3) with a logic (multi-categorical). The example tweet “Pineapple on pizza is the most scandalous thing. It doesn’t taste good, but also it’s just WRONG.” is shown with stance -3 and the logic categories civic, functional, and inspired. References: Baden and Springer (2014; 2017); Boltanski and Thévenot (2006).](https://image.slidesharecdn.com/chiir2022timdraws-220624060600-6467e27a/85/Comprehensive-Viewpoint-Representations-for-a-Deeper-Understanding-of-User-Interactions-With-Debated-Topics-6-320.jpg)
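
The two-part viewpoint label shown on the slides (an ordinal stance from -3 to 3 plus a multi-categorical logic) could be sketched as a small data structure. This is only an illustration, not the authors' implementation: the class name `ViewpointLabel`, its fields, and the `direction` helper are hypothetical. It also assumes that all three logics on slide 6 apply to the example tweet. The set of logics is left open because the slides name only civic, functional, and inspired.

```python
from dataclasses import dataclass, field
from typing import FrozenSet

# Stance bounds taken from the slides: ordinal scale from -3 to 3.
STANCE_MIN, STANCE_MAX = -3, 3


@dataclass(frozen=True)
class ViewpointLabel:
    """Hypothetical container for one document's viewpoint label."""

    stance: int  # -3 = strongly opposing, 0 = neutral, 3 = strongly supporting
    logics: FrozenSet[str] = field(default_factory=frozenset)  # multi-categorical

    def __post_init__(self) -> None:
        # Reject non-integers (including bools) so the scale stays ordinal.
        if isinstance(self.stance, bool) or not isinstance(self.stance, int):
            raise TypeError("stance must be an integer")
        if not STANCE_MIN <= self.stance <= STANCE_MAX:
            raise ValueError(f"stance must lie in [{STANCE_MIN}, {STANCE_MAX}]")
        # Accept any iterable of strings and store it as an immutable set.
        object.__setattr__(self, "logics", frozenset(self.logics))

    def direction(self) -> str:
        """Collapse the ordinal stance into opposing / neutral / supporting."""
        if self.stance < 0:
            return "opposing"
        if self.stance > 0:
            return "supporting"
        return "neutral"


# Example tweet from the slides; assigning all three logics is an assumption.
example = ViewpointLabel(stance=-3, logics={"civic", "functional", "inspired"})
print(example.direction(), sorted(example.logics))
```

Keeping stance and logics in separate fields lets two documents with the same stance still be told apart by the reasons behind it.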