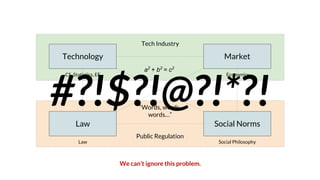

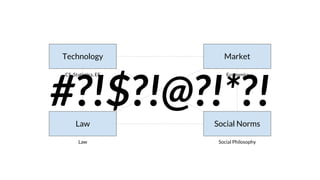

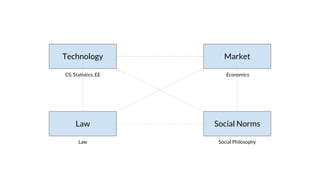

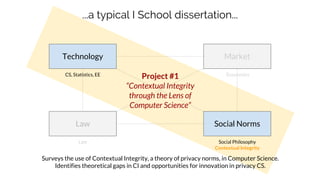

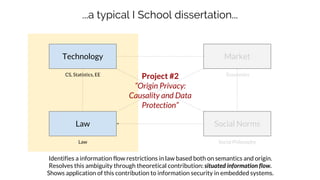

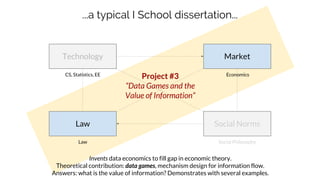

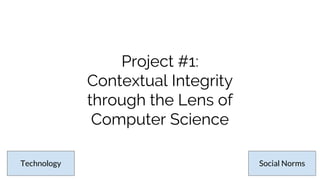

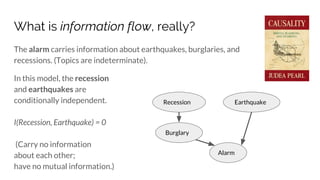

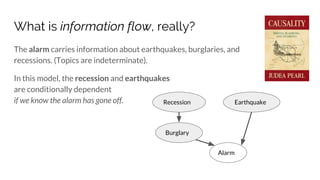

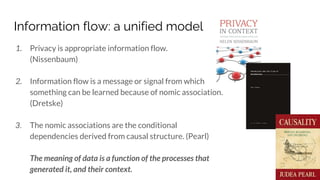

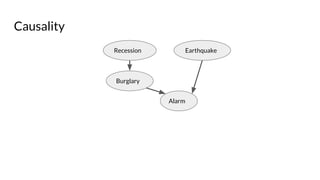

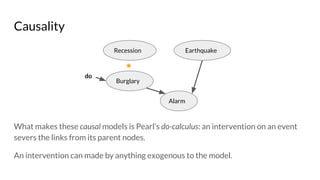

Sebastian Benthall's doctoral research explores the intersection of privacy engineering, security, and data economics through three projects on contextual integrity, origin privacy, and data value. The research identifies gaps in current privacy practice within computer science, proposes frameworks for understanding information flow, and examines the normative implications of data use in its social context. Drawing on several disciplines, the work aims to improve privacy through a clearer account of how information flows.

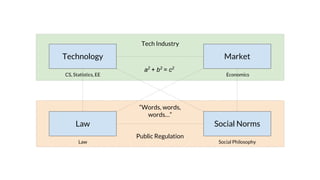

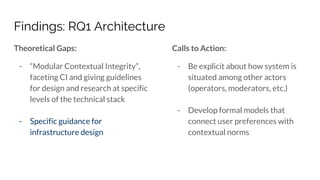

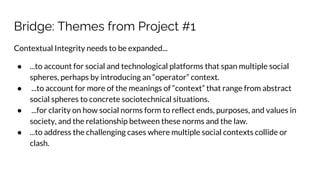

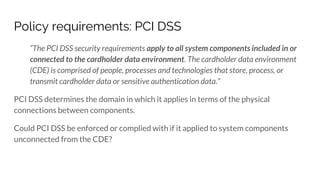

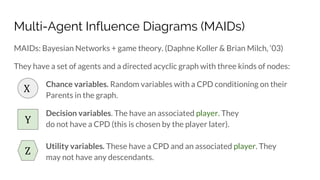

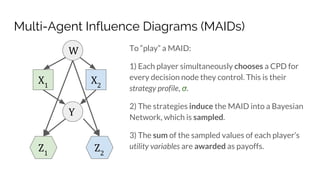

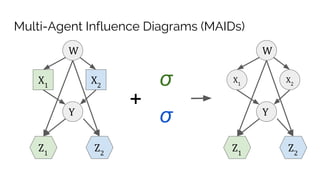

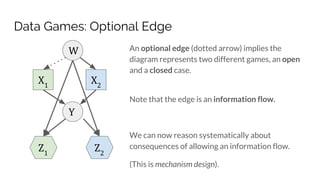

![The problem with information semantics

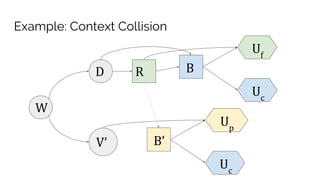

Contextual Integrity says there are five parameters of an information norm:

Sender, Receiver, Subject, attribute, and Transmission Principle.

[Patient, Doctor, Patient, Health, Confidentiality]

But... information topics are indeterminate. E.g.:](https://image.slidesharecdn.com/sbenthall-dissertation-talk-slides-180507231811/85/Context-Causality-and-Information-Flow-Implications-for-Privacy-Engineering-Security-and-Data-Economics-40-320.jpg)
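To make the five-parameter norm on this slide concrete, here is a minimal Python sketch that stores the slide's example tuple and checks a flow against it. The `Flow` class, the `NORMS` set, and `is_appropriate` are hypothetical names introduced for illustration and are not taken from the talk. Exact matching on the `attribute` string is an assumption, and it is the step where the indeterminacy of information topics would cause trouble: the same data could plausibly carry a different topic label.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One information flow, described by the five norm parameters."""
    sender: str
    receiver: str
    subject: str
    attribute: str
    transmission_principle: str


# Hypothetical norm set holding only the example tuple from the slide.
NORMS = {Flow("Patient", "Doctor", "Patient", "Health", "Confidentiality")}


def is_appropriate(flow: Flow) -> bool:
    """Treat a flow as appropriate only if it matches a listed norm exactly."""
    return flow in NORMS
```

A flow from `"Patient"` to `"Doctor"` about `"Health"` under `"Confidentiality"` passes, while the same record relabeled with another attribute does not, even though the underlying data is unchanged.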

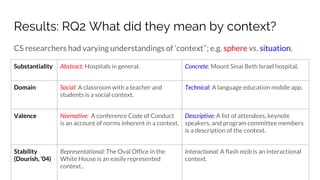

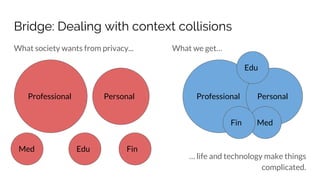

![Origin Privacy: Policy requirements: HIPAA](https://image.slidesharecdn.com/sbenthall-dissertation-talk-slides-180507231811/85/Context-Causality-and-Information-Flow-Implications-for-Privacy-Engineering-Security-and-Data-Economics-58-320.jpg)

Origin Privacy: Policy requirements: HIPAA

The HIPAA Privacy Rule defines psychotherapy notes as notes recorded by a health care provider who is a mental health professional documenting or analyzing the contents of … [a] counseling session.

Psychotherapy notes are more protected than other protected health information, intended only for use by the therapist.

These restrictions are tied to the provenance of the information: the counseling session.
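The idea that restrictions follow the provenance of information, not its topic, can be sketched in a few lines of Python. This is an illustrative toy, not a statement of what HIPAA requires: the `Record` class, the origin names, the roles, and the `ALLOWED_READERS` table are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    content: str
    origin: str  # where the information was produced, e.g. "counseling_session"


# Hypothetical policy table mapping an origin to the roles allowed to read it.
ALLOWED_READERS = {
    "counseling_session": {"therapist"},
    "routine_checkup": {"therapist", "physician", "billing"},
}


def may_read(role: str, record: Record) -> bool:
    """Decide access from the record's origin alone; content is never inspected."""
    return role in ALLOWED_READERS.get(record.origin, set())
```

Because the decision never looks at `content`, two records with identical text receive different protection when they come from different origins, and a record with an unknown origin is denied by default.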

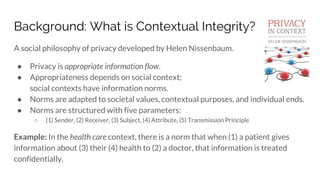

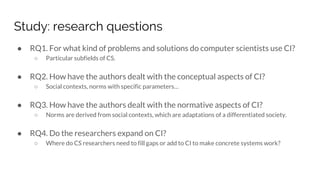

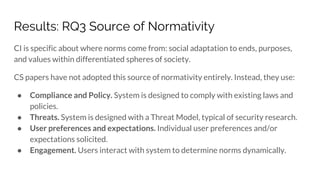

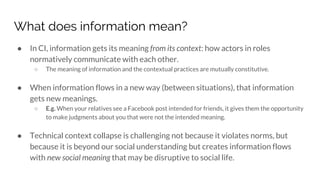

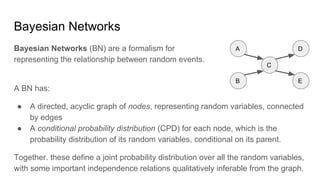

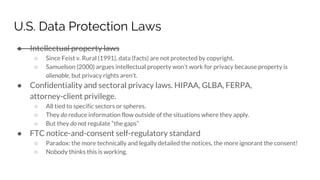

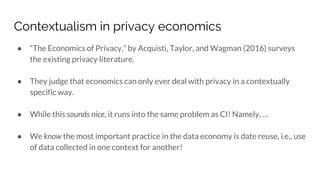

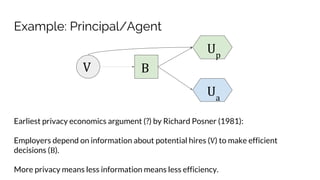

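![Example: Principal/Agent](https://image.slidesharecdn.com/sbenthall-dissertation-talk-slides-180507231811/85/Context-Causality-and-Information-Flow-Implications-for-Privacy-Engineering-Security-and-Data-Economics-89-320.jpg)

Example: Principal/Agent

| E(U) | Open | Closed |
| --- | --- | --- |
| Principal | $(\mathrm{E}(X \mid X > w) - w)\,\mathrm{P}[X > w]$ | $(x - w)\,[\mathrm{E}(X) > w]$ |
| Agent | $w\,\mathrm{P}[X > w]$ | $w\,[\mathrm{E}(X) > w]$ |
| Agent, $x > w$ | $w$ | $w\,[\mathrm{E}(X) > w]$ |
| Agent, $x < w$ | $0$ | $w\,[\mathrm{E}(X) > w]$ |

Diagram node labels: $V$, $B$, $U_p$, $U_a$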
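The entries of the Principal/Agent slide can be checked numerically with a small Python sketch. Several things here are assumptions made for illustration: the discrete distribution for $X$, the wage `w = 2`, reading $[\mathrm{E}(X) > w]$ as an indicator that is 1 when the condition holds and 0 otherwise, and using $\mathrm{E}(X)$ in place of the realized $x$ to get the principal's expected payoff in the closed case. The function names are hypothetical.

```python
from fractions import Fraction


def open_case(dist, w):
    """Principal observes x before deciding and hires only when x > w."""
    p_hire = sum(p for x, p in dist.items() if x > w)
    # Equals (E(X | X > w) - w) * P[X > w], written as a single sum.
    surplus = sum(p * (x - w) for x, p in dist.items() if x > w)
    return {"principal": surplus, "agent": w * p_hire}


def closed_case(dist, w):
    """Principal cannot observe x and hires only when E(X) > w."""
    mean = sum(p * x for x, p in dist.items())
    hire = 1 if mean > w else 0  # indicator [E(X) > w]
    return {"principal": (mean - w) * hire, "agent": w * hire}


# Assumed example: X uniform on {1, 2, 3, 4}, wage w = 2.
dist = {x: Fraction(1, 4) for x in (1, 2, 3, 4)}
w = 2
open_result = open_case(dist, w)
closed_result = closed_case(dist, w)
```

With these assumed numbers the principal expects 3/4 when the flow is open and 1/2 when it is closed, while the agent expects 1 when open and 2 when closed, so in this toy case the two parties prefer opposite information regimes.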

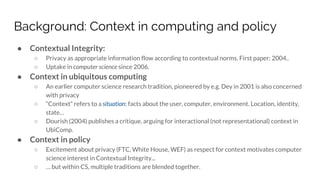

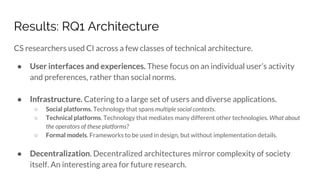

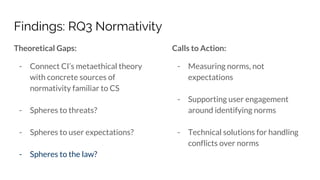

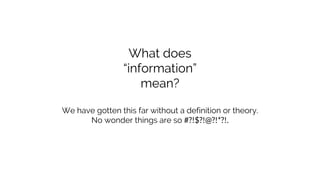

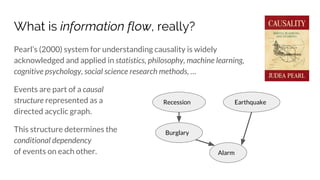

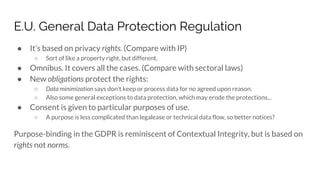

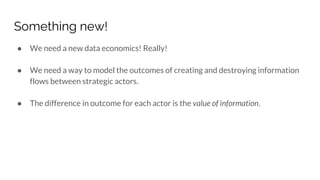

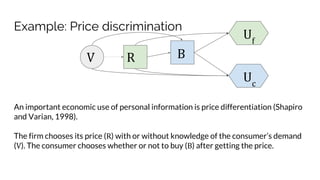

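![Example: Price discrimination](https://image.slidesharecdn.com/sbenthall-dissertation-talk-slides-180507231811/85/Context-Causality-and-Information-Flow-Implications-for-Privacy-Engineering-Security-and-Data-Economics-91-320.jpg)

Example: Price discrimination

| E(U) | Open | Closed |
| --- | --- | --- |
| Firm | $x - \epsilon$ | $z^*\,\mathrm{P}[X > z]$ |
| Consumer | $\epsilon$ | $(x - z^*)\,\mathrm{P}[X > z]$ |
| Consumer, $x > z^*$ | $\epsilon$ | $x - z^*$ |
| Consumer, $x < z^*$ | $\epsilon$ | $0$ |

$z^* = \arg\max_z \mathrm{E}[z\,\mathrm{P}(z < x)]$

Diagram node labels: $V$, $R$, $B$, $U_f$, $U_c$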
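As a rough numerical check of the price discrimination slide, the Python sketch below computes the uniform price $z^*$ and both parties' expected utilities. The assumptions are mine, not the talk's: a discrete distribution for the consumer's valuation $X$, a finite grid of candidate prices, reading the closed-case probability as $\mathrm{P}[X > z^*]$, and averaging the consumer's surplus over the realizations with $x > z^*$. The function names are hypothetical.

```python
from fractions import Fraction


def best_uniform_price(dist, candidates):
    """Pick z maximizing z * P[X > z] over a finite grid of candidate prices."""
    def revenue(z):
        return z * sum(p for x, p in dist.items() if x > z)
    return max(candidates, key=revenue)


def pricing_closed(dist, candidates):
    """Firm cannot observe x, so it posts a single price z* for everyone."""
    z = best_uniform_price(dist, candidates)
    p_buy = sum(p for x, p in dist.items() if x > z)
    consumer = sum(p * (x - z) for x, p in dist.items() if x > z)
    return {"price": z, "firm": z * p_buy, "consumer": consumer}


def pricing_open(dist, eps):
    """Firm observes x and charges x - eps, so every consumer buys."""
    mean = sum(p * x for x, p in dist.items())
    return {"firm": mean - eps, "consumer": eps}


# Assumed example: X uniform on {1, 2, 3, 4}; prices restricted to {0, 1, 2, 3}.
dist = {x: Fraction(1, 4) for x in (1, 2, 3, 4)}
closed_result = pricing_closed(dist, candidates=(0, 1, 2, 3))
open_result = pricing_open(dist, eps=Fraction(1, 100))
```

In this toy setting the firm's expected revenue rises from 1 under the uniform price to 249/100 when valuations are observable, while the consumer's expected surplus falls from 3/4 to 1/100.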