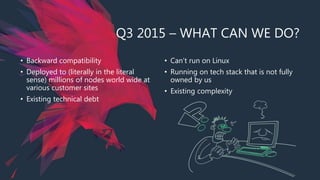

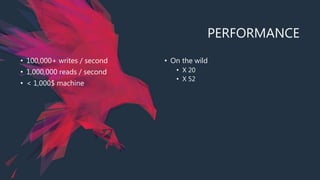

The document traces the development and evolution of RavenDB, a database system, from its inception in 2009 through its major releases up to 2018. It highlights the challenges the team faced, including technical debt and the need for backward compatibility, and emphasizes the role of performance and supportability in the system's design. Although development took longer than anticipated, the later releases delivered significant improvements in performance, support efficiency, and cross-platform capabilities.