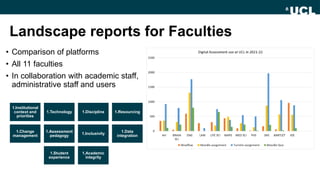

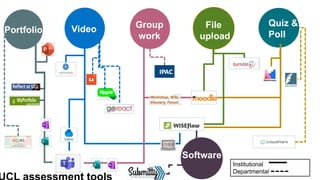

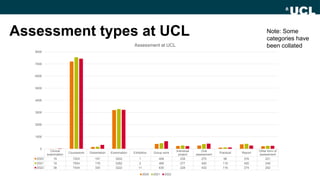

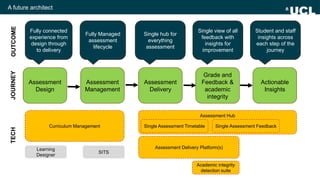

This document summarizes a presentation given by Simon Walker and Marieke Guy about University College London's (UCL) journey towards the digital transformation of assessment and feedback.

Some key points:

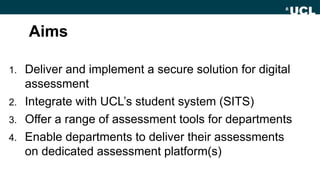

- UCL implemented a secure digital assessment platform called AUCL in response to the COVID-19 pandemic to deliver over 1,000 assessments remotely.

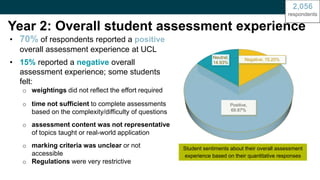

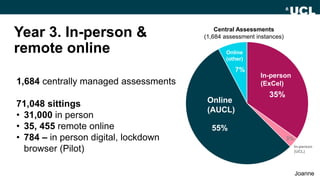

- Since then, UCL has expanded its use of AUCL, with over 1,600 exams delivered and 65,000 students using the platform in year two.

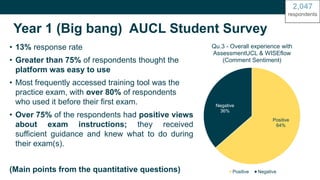

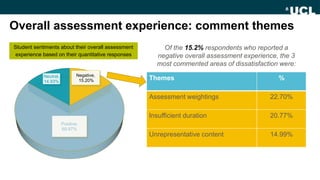

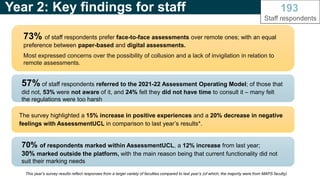

- Student and staff surveys showed mostly positive feedback but also identified areas for improvement, such as assessment weightings, duration, and content representation.

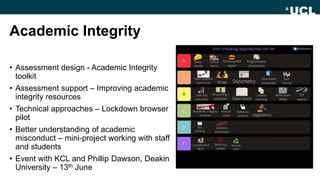

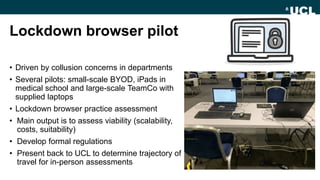

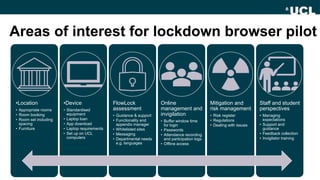

- UCL is piloting lockdown browsers, improving academic integrity, and partnering with

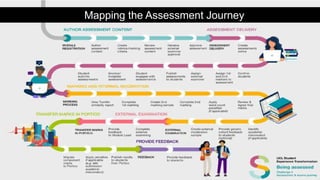

![Slide 6, "What we wanted": requirements diagram for the digital assessment platform, showing roles, processes, and MUST/SHOULD priorities across assessment design and review, assessment delivery, marking and feedback, reporting, and system requirements and support, with interfaces to SITS/Portico, Scientia exam timetabling, Turnitin, and Active Directory](https://image.slidesharecdn.com/jisc-reimaging-230601114545-41a06521/85/The-UCL-assessment-journey-6-320.jpg)