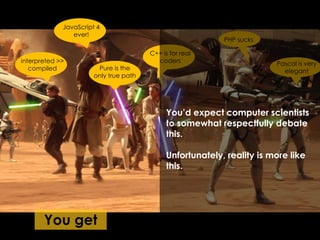

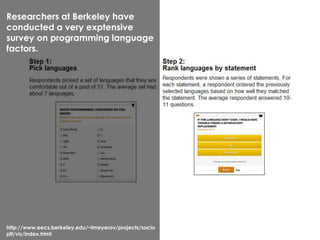

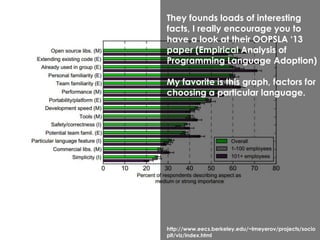

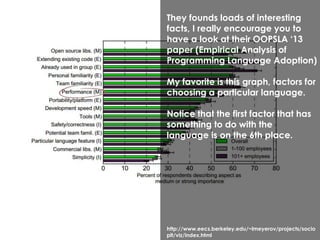

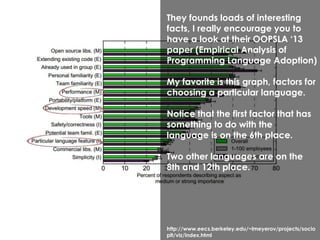

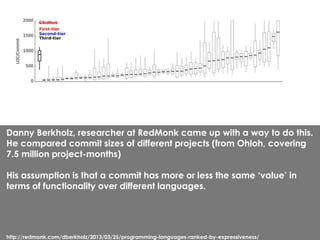

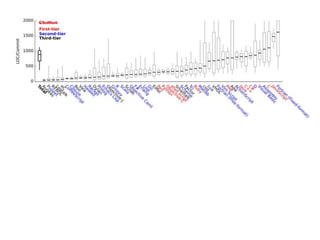

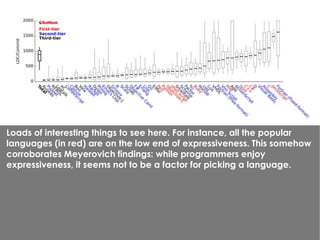

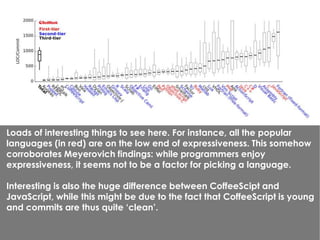

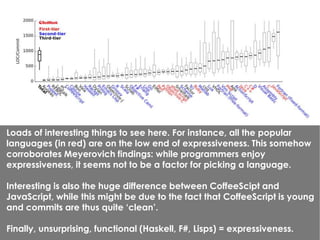

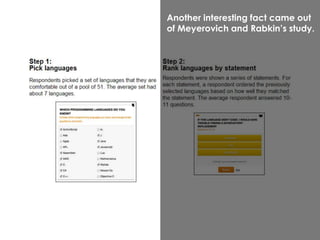

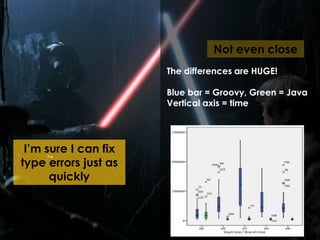

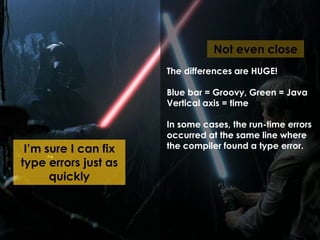

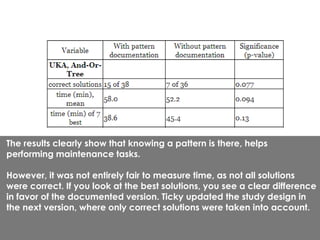

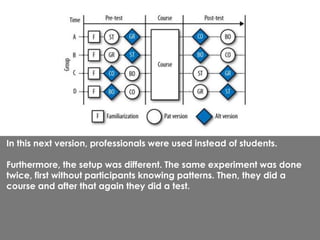

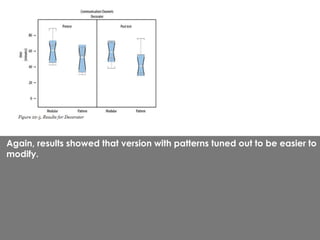

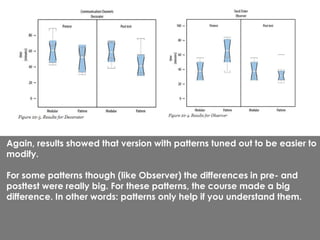

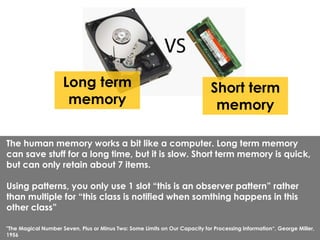

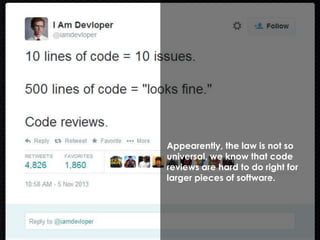

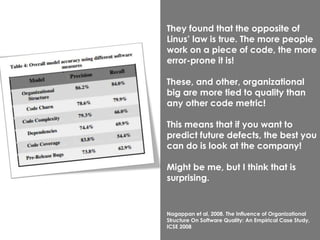

This slide deck, presented by Felienne of Delft University of Technology, discusses how the scientific method applies to computer science, focusing on empirical studies of programming languages and developer satisfaction. Key findings cover the factors that drive language choice, the effect of expressiveness on programmer enjoyment, and the significance of static versus dynamic typing in software development. The presentation emphasizes that understanding cognitive science and organizational dynamics is important for improving software quality and development practices.