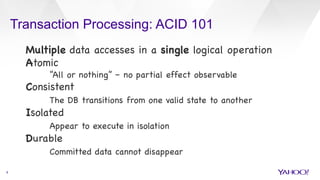

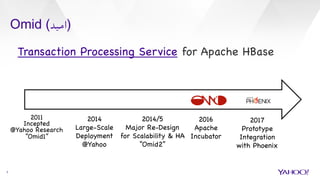

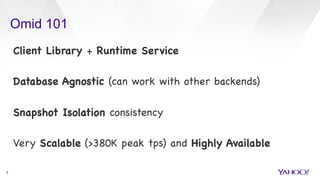

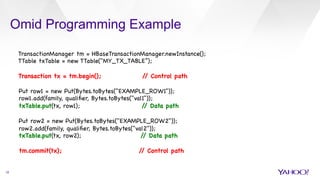

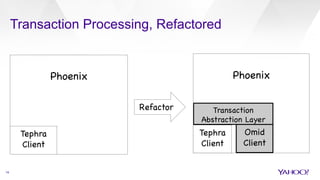

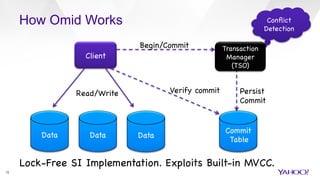

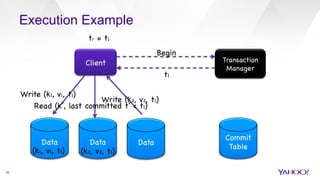

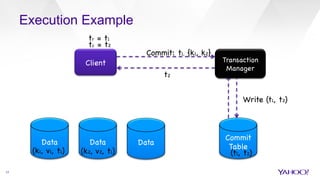

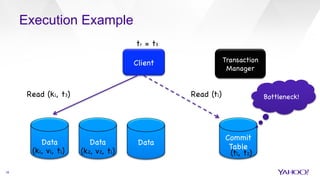

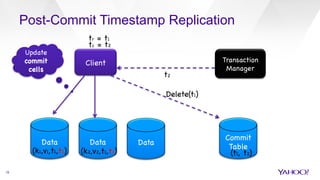

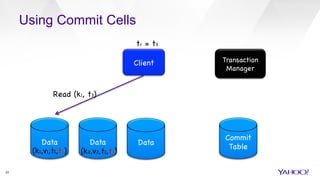

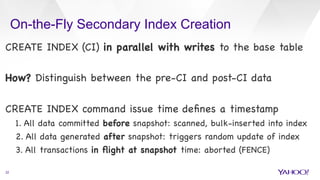

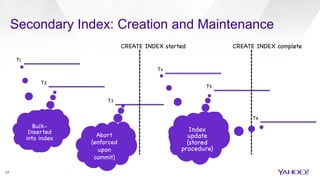

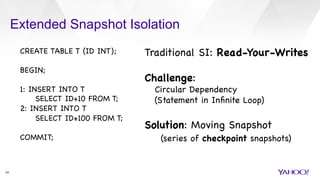

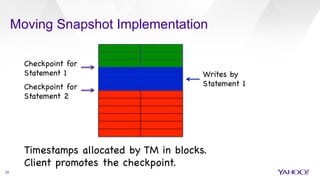

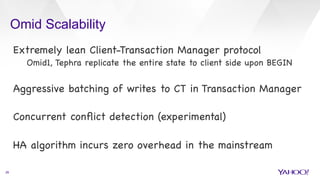

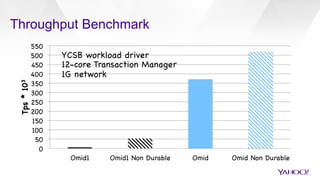

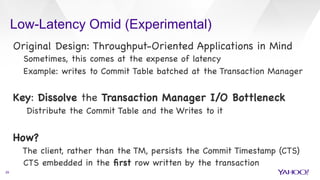

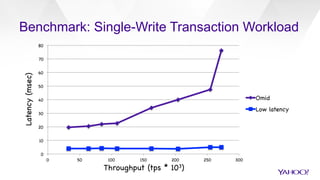

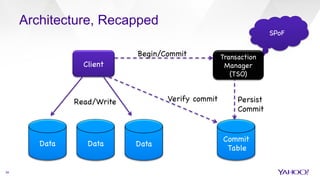

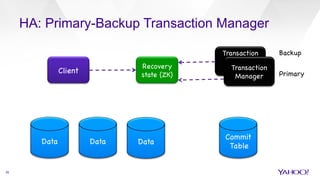

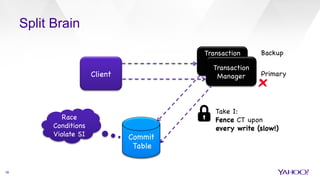

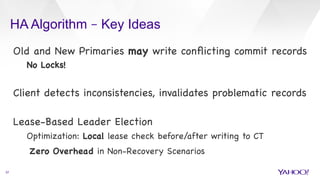

Omid provides scalable, highly available transaction processing for Apache HBase. It supports ACID (atomic, consistent, isolated, and durable) transactions that span multiple rows and tables, with isolation provided in the form of snapshot isolation. Omid is integrated with Apache Phoenix to provide ACID semantics for Phoenix queries and updates, and the integration lets Phoenix use either Omid or Tephra as its transaction processor, selected through configuration. Omid's architecture and algorithms are designed to sustain very high throughput and to remain available in the presence of failures.
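The isolation guarantee can be made concrete with a toy sketch. Omid provides snapshot isolation, and in Omid's design a central transaction manager hands out timestamps and checks for write-write conflicts when a transaction commits. The Java below is a single-process, in-memory simplification of that idea, written under those assumptions. `ToyTransactionManager`, `begin`, and `commit` are hypothetical names, not Omid's client API. The sketch omits persistence, the HBase data path, commit tables, and failover.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical toy transaction manager illustrating commit-time
 * write-write conflict detection under snapshot isolation.
 * Not Omid's API; a single-process, in-memory simplification.
 */
class ToyTransactionManager {
    // Logical clock standing in for a timestamp oracle (assumption: one counter).
    private long clock = 0;
    // Last commit timestamp for each row key that has been written.
    private final Map<String, Long> lastCommit = new HashMap<>();

    /** Starts a transaction and returns its start (snapshot) timestamp. */
    synchronized long begin() {
        return ++clock;
    }

    /**
     * Attempts to commit a transaction that started at startTs and wrote the
     * rows in writeSet. Returns the commit timestamp, or -1 if the transaction
     * must abort because another transaction committed a write to one of the
     * same rows after startTs (first committer wins).
     */
    synchronized long commit(long startTs, Set<String> writeSet) {
        for (String row : writeSet) {
            Long last = lastCommit.get(row);
            if (last != null && last > startTs) {
                return -1; // write-write conflict: abort
            }
        }
        long commitTs = ++clock;
        for (String row : writeSet) {
            lastCommit.put(row, commitTs); // record this commit for later checks
        }
        return commitTs;
    }

    public static void main(String[] args) {
        ToyTransactionManager tm = new ToyTransactionManager();
        long t1 = tm.begin();
        long t2 = tm.begin();                          // concurrent with t1
        long c1 = tm.commit(t1, Set.of("row-a"));      // first committer wins
        long c2 = tm.commit(t2, Set.of("row-a"));      // conflicts with t1, aborts
        long t3 = tm.begin();                          // starts after t1 committed
        long c3 = tm.commit(t3, Set.of("row-a"));      // no conflict
        System.out.println(c1 + " " + c2 + " " + c3);  // prints "3 -1 5"
    }
}
```

Two concurrent transactions that write the same row cannot both commit, while transactions with disjoint write sets, or ones that start after the earlier writer committed, proceed without coordination beyond the timestamp and conflict check.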