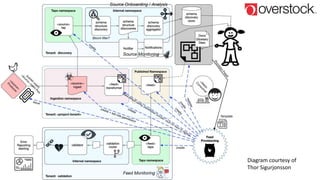

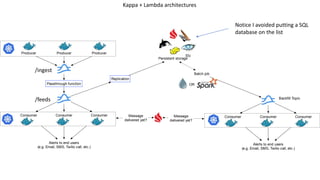

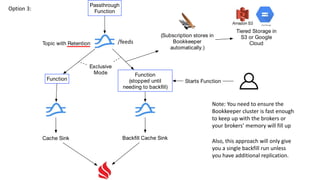

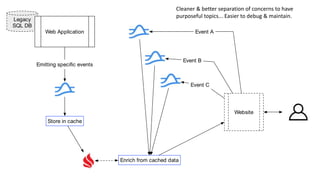

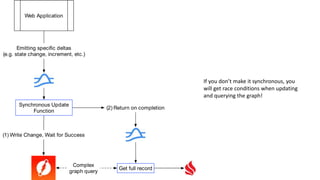

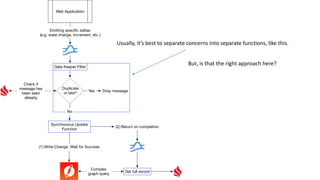

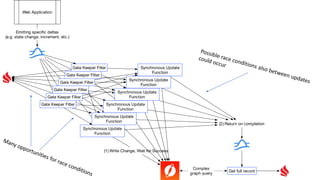

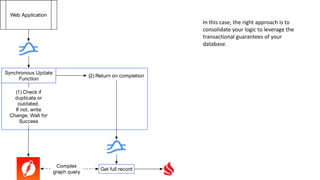

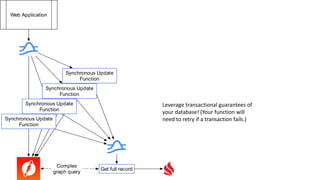

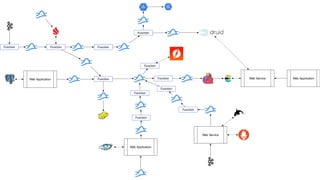

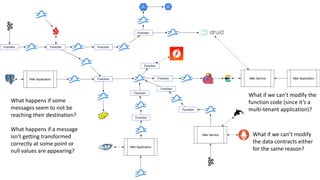

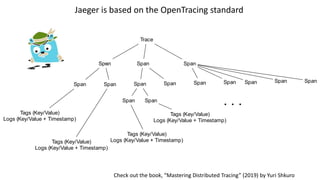

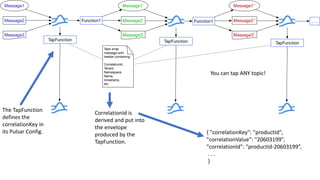

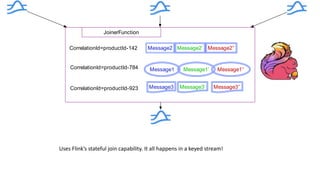

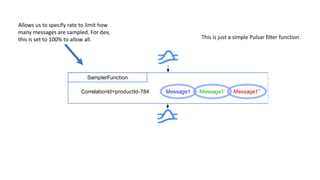

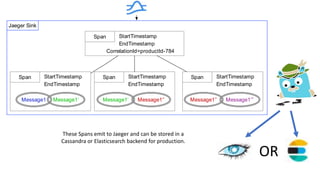

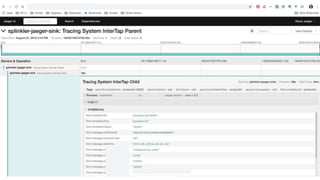

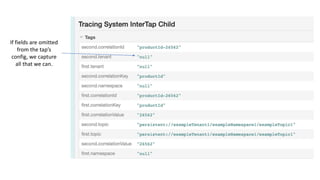

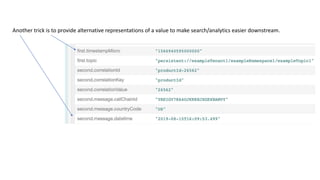

This document discusses real-world architectural patterns for building systems on Apache Pulsar, with a focus on distributed caching, tracing, and strategies for data ingestion and processing. It presents patterns for improving system reliability, managing data flow, and providing message delivery guarantees, and it addresses challenges such as message loss, complex query logic, and tracing issues. The author, Devin Bost, draws on applications built at Overstock and offers practical tips for using Pulsar in real-time messaging systems.