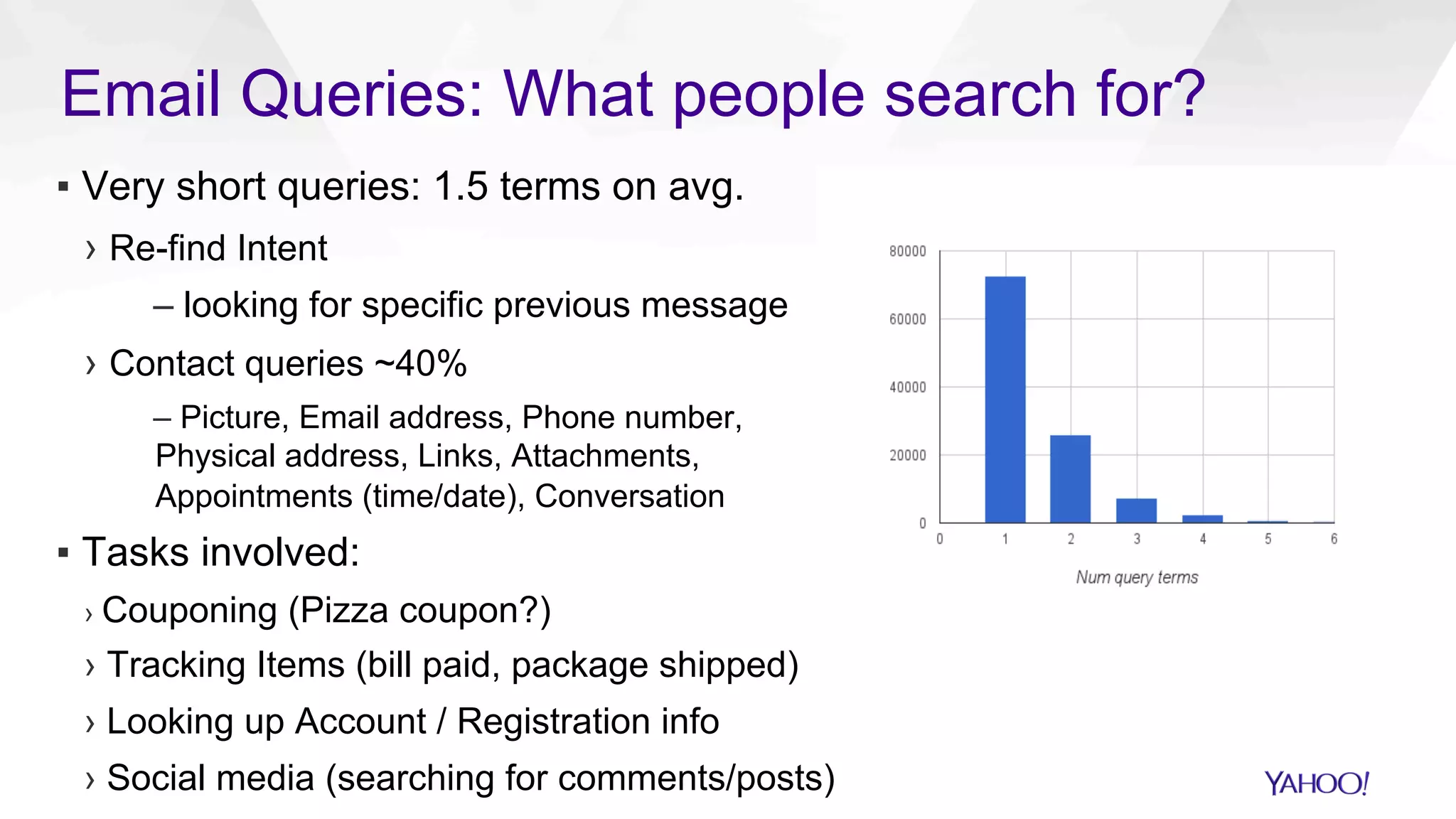

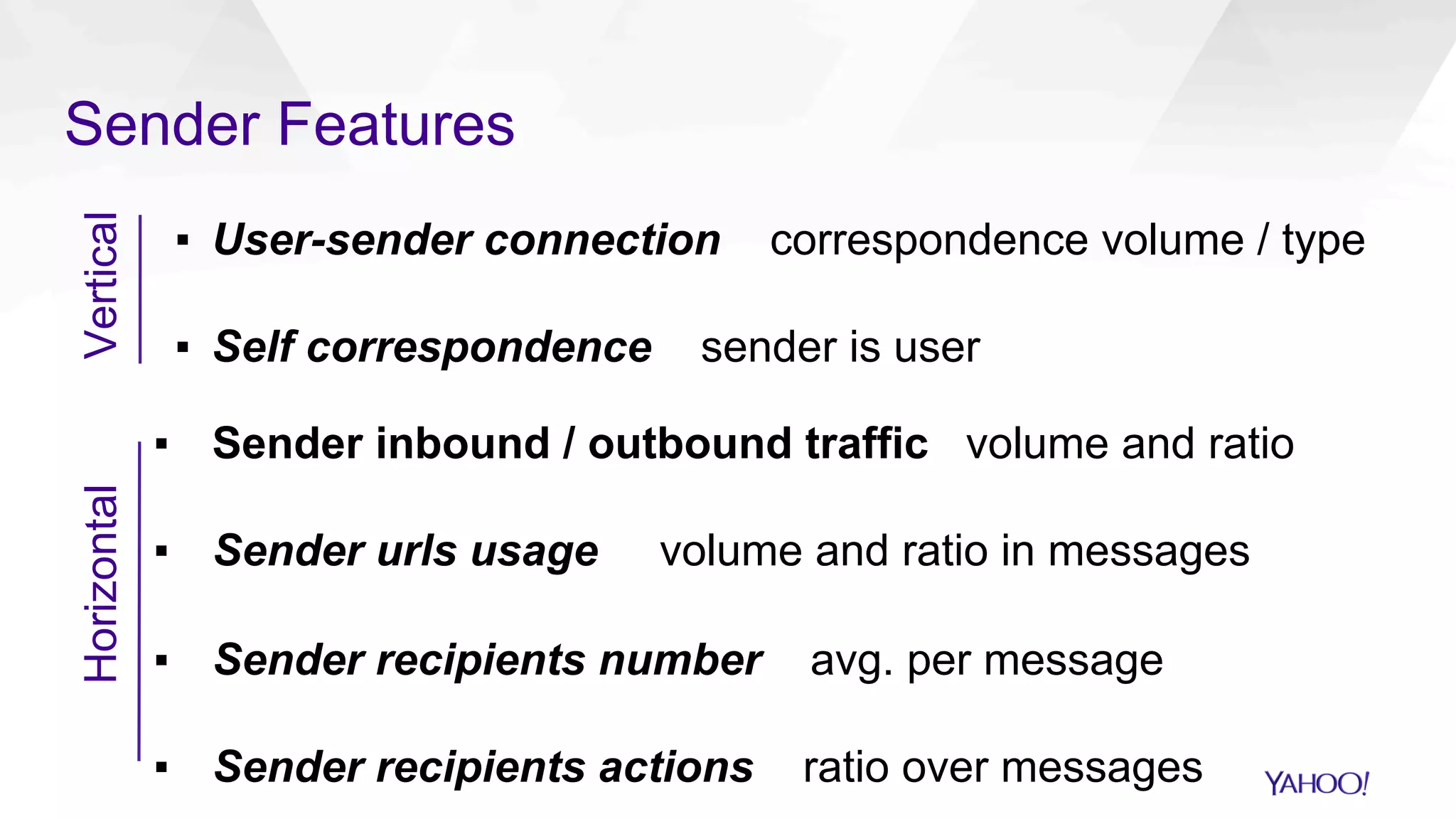

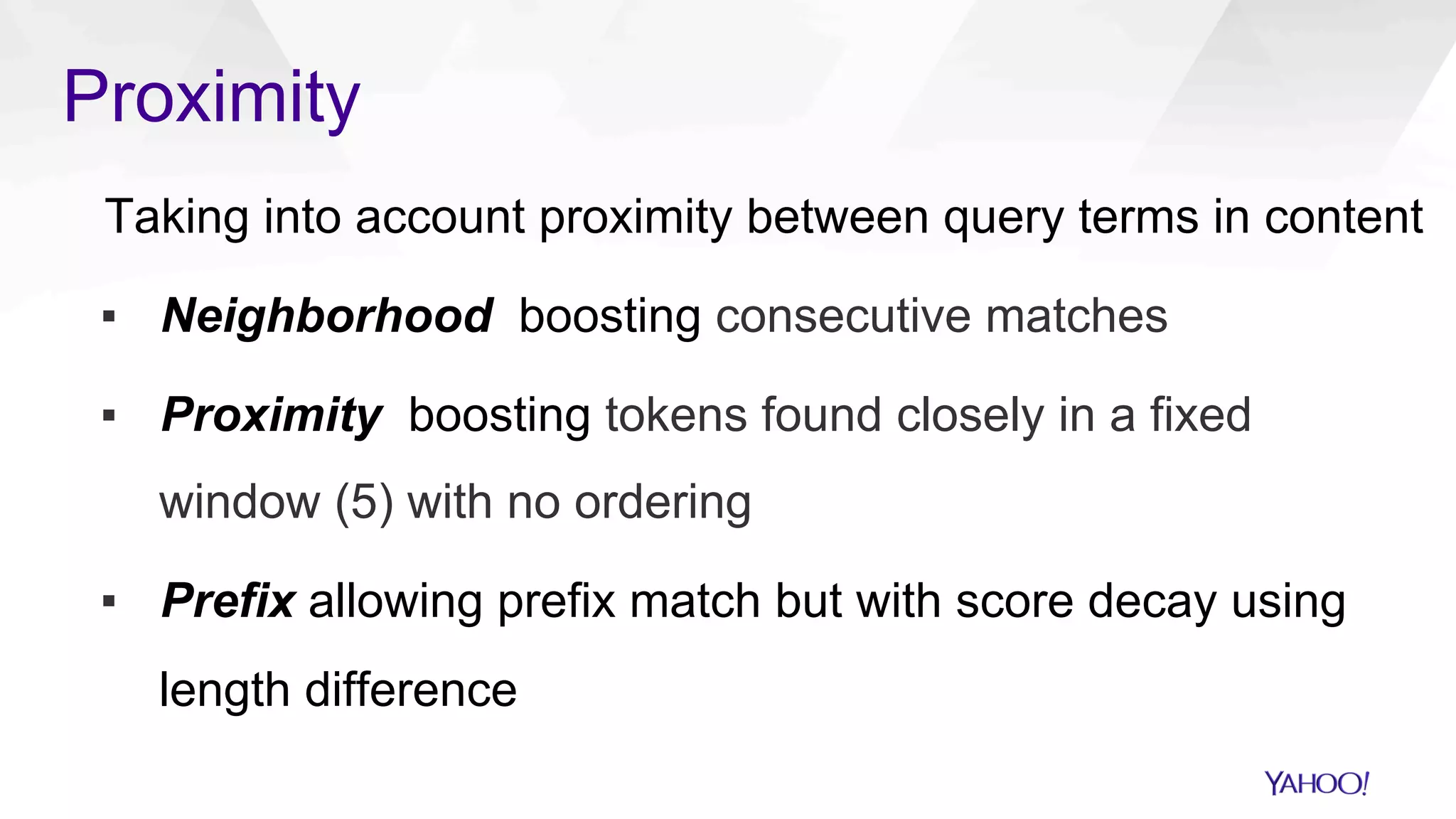

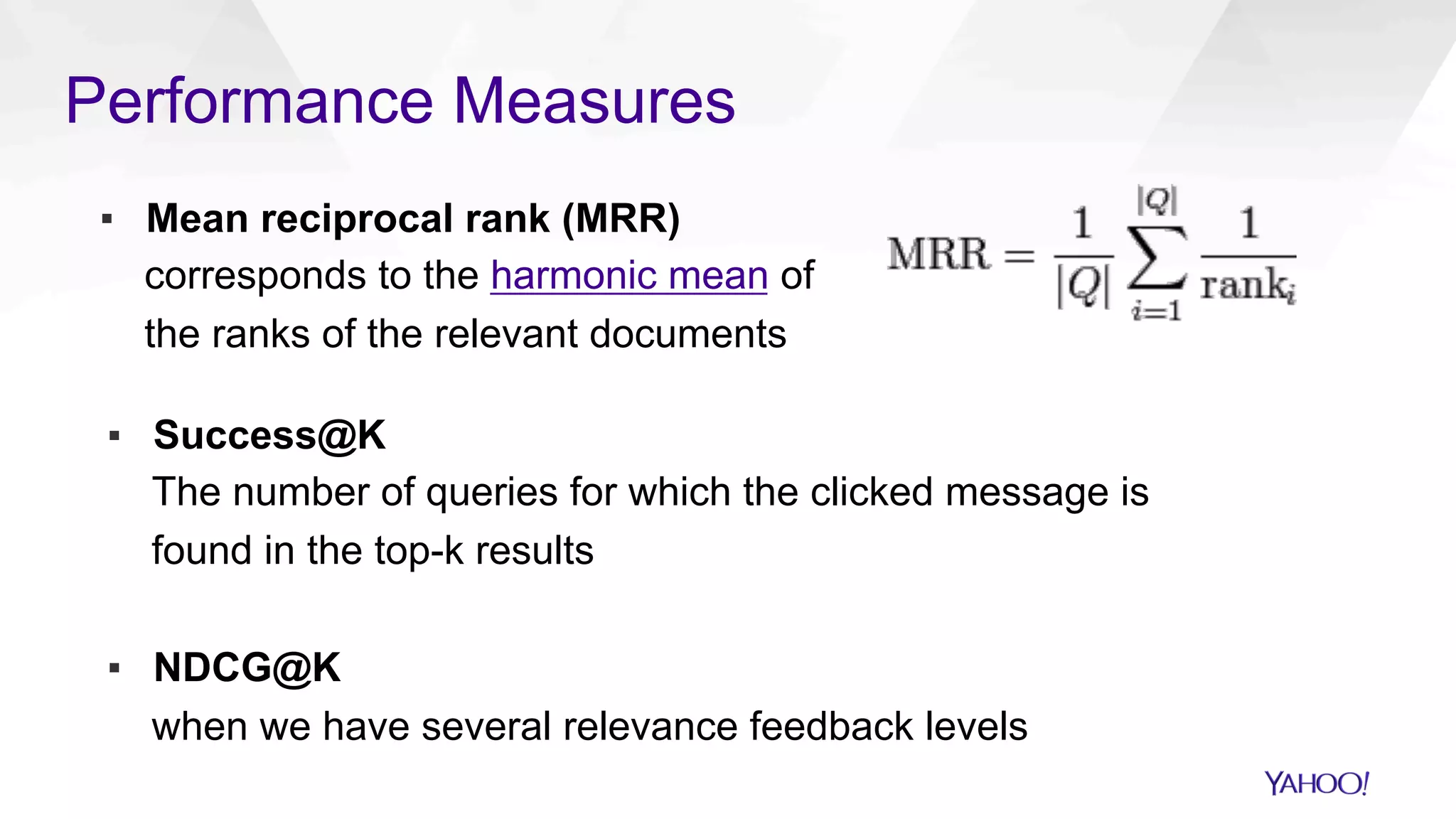

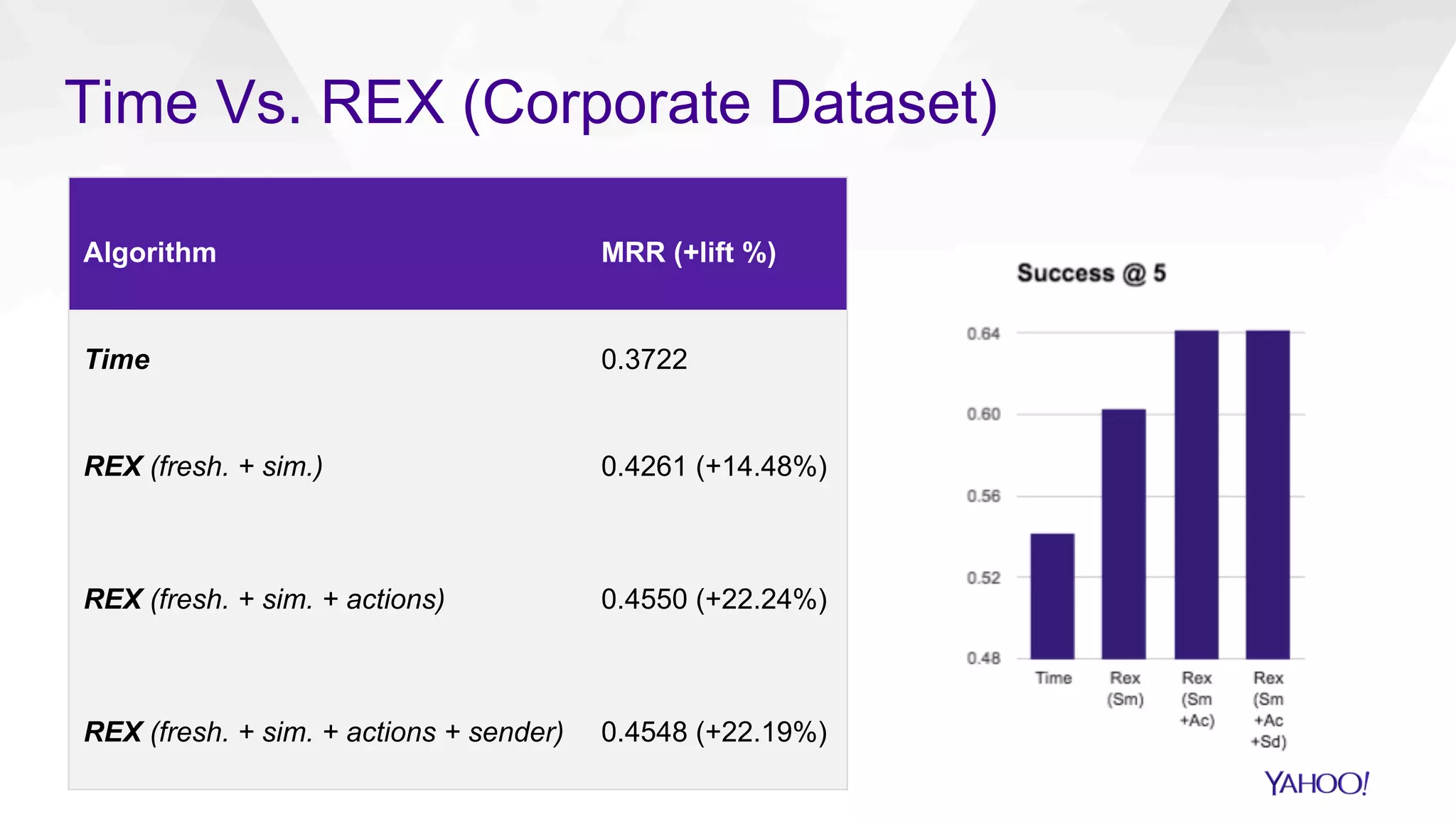

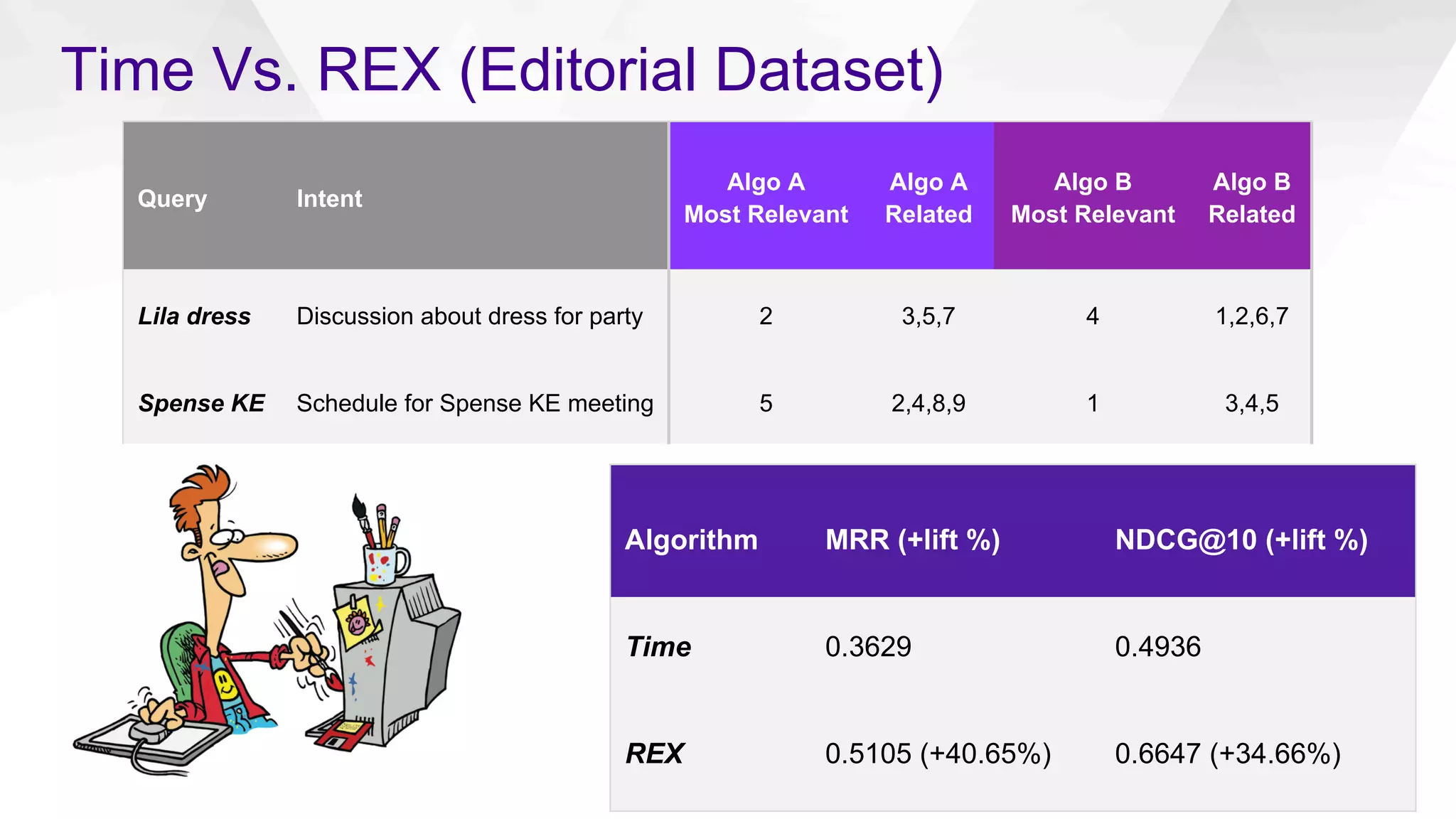

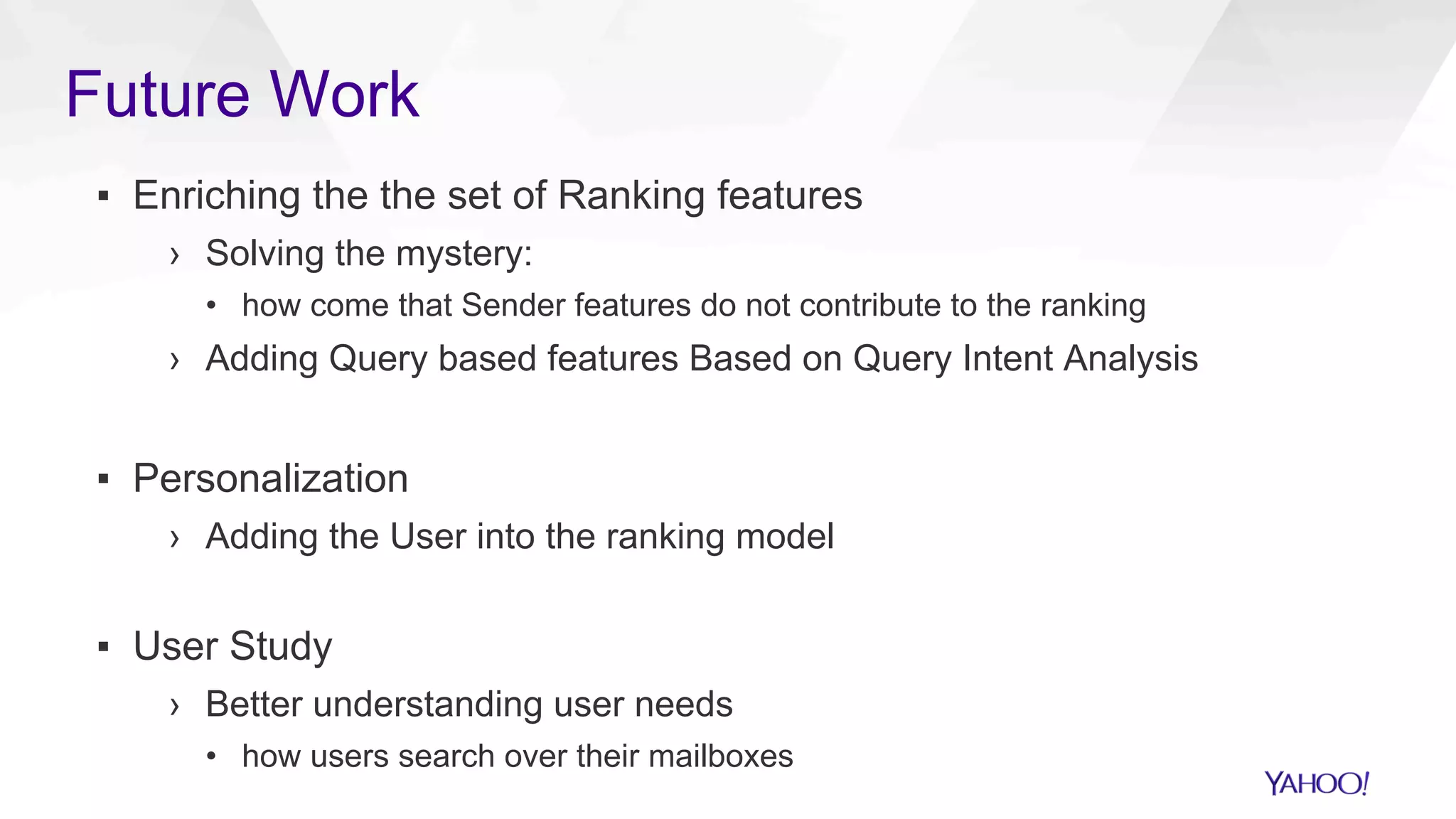

The document discusses the limitations of traditional chronological ranking in email search and proposes an email-specific relevance ranking model, RE(X), that combines multiple features to improve search accuracy and efficiency. It outlines a two-phase retrieval process for email queries and compares the performance of RE(X) against standard time-based ranking. The findings suggest that RE(X) significantly outperforms chronological ranking, and the document calls for future enhancements and user studies to refine the email search experience.
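
To make the contrast between time-based and relevance-based ranking concrete, here is a minimal Python sketch of a two-phase pipeline. It is not the RE(X) model: the summary does not list the model's features or define its two phases, so everything here is an assumption made for illustration. That includes the `Email` type, the split into a matching phase and a ranking phase, the two features (`term_overlap`, `freshness`), and the weights.

```python
from dataclasses import dataclass


@dataclass
class Email:
    msg_id: str
    text: str
    timestamp: float  # seconds since epoch; larger means newer


def match_candidates(emails, query):
    """Phase 1 (assumed): keep emails containing at least one query term."""
    terms = query.lower().split()
    return [e for e in emails
            if any(t in e.text.lower().split() for t in terms)]


def chronological_rank(candidates):
    """Baseline: newest first, ignoring how well the text matches."""
    return sorted(candidates, key=lambda e: e.timestamp, reverse=True)


def relevance_score(email, query, oldest, newest, weights):
    """Hypothetical score: weighted sum of term overlap and freshness."""
    terms = query.lower().split()
    if not terms:
        return 0.0
    words = email.text.lower().split()
    # Fraction of query terms that appear in the message.
    term_overlap = sum(1 for t in terms if t in words) / len(terms)
    # Freshness scaled to [0, 1] within the candidate set.
    span = newest - oldest
    freshness = (email.timestamp - oldest) / span if span > 0 else 1.0
    return (weights["term_overlap"] * term_overlap
            + weights["freshness"] * freshness)


def relevance_rank(candidates, query, weights):
    """Phase 2 (assumed): order candidates by the combined score."""
    if not candidates:
        return []
    oldest = min(e.timestamp for e in candidates)
    newest = max(e.timestamp for e in candidates)
    return sorted(
        candidates,
        key=lambda e: relevance_score(e, query, oldest, newest, weights),
        reverse=True,
    )
```

With illustrative weights such as `{"term_overlap": 0.7, "freshness": 0.3}`, an older message that matches every query term can rank above a newer message that matches only one. Chronological ordering cannot produce that result.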