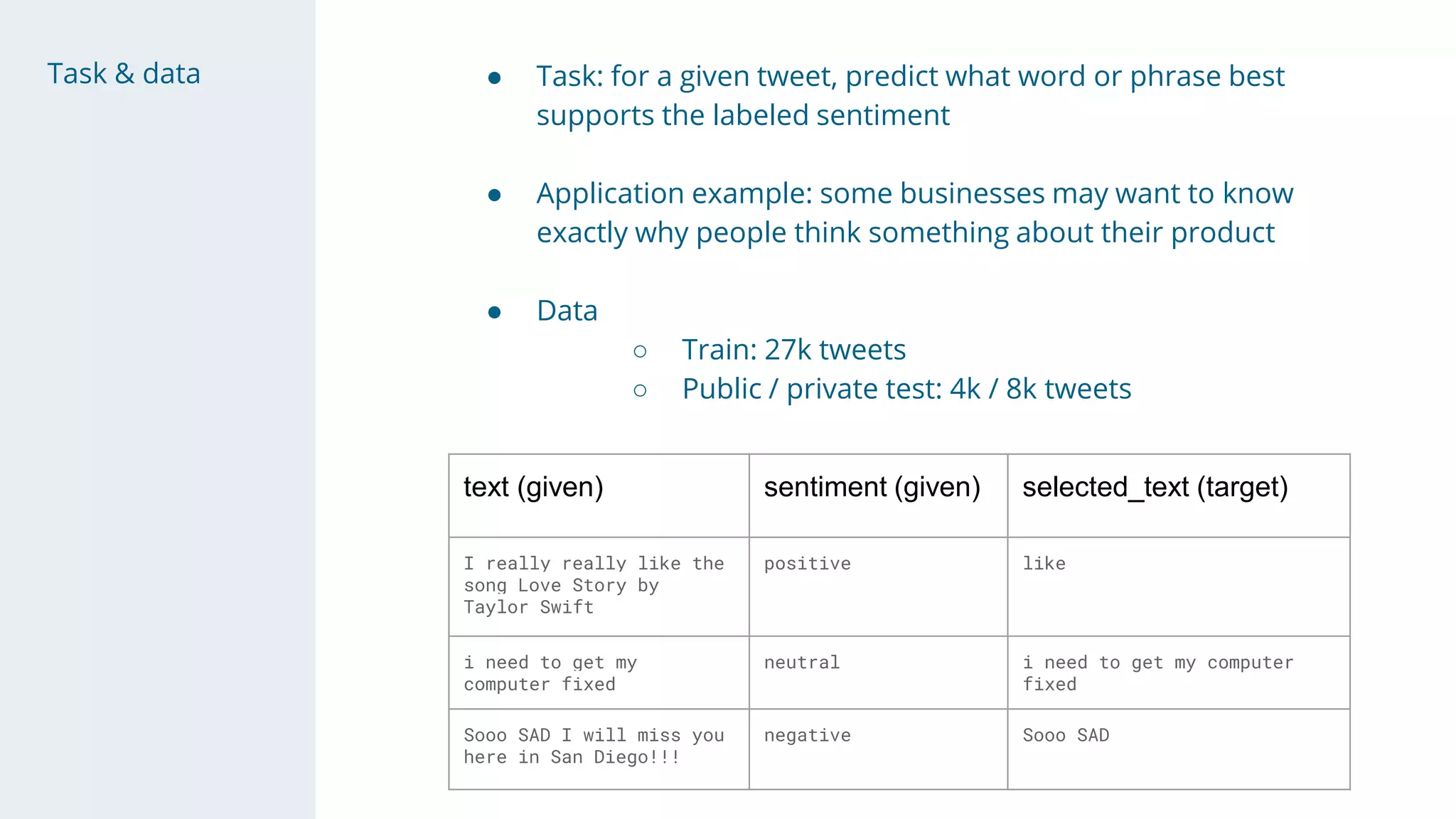

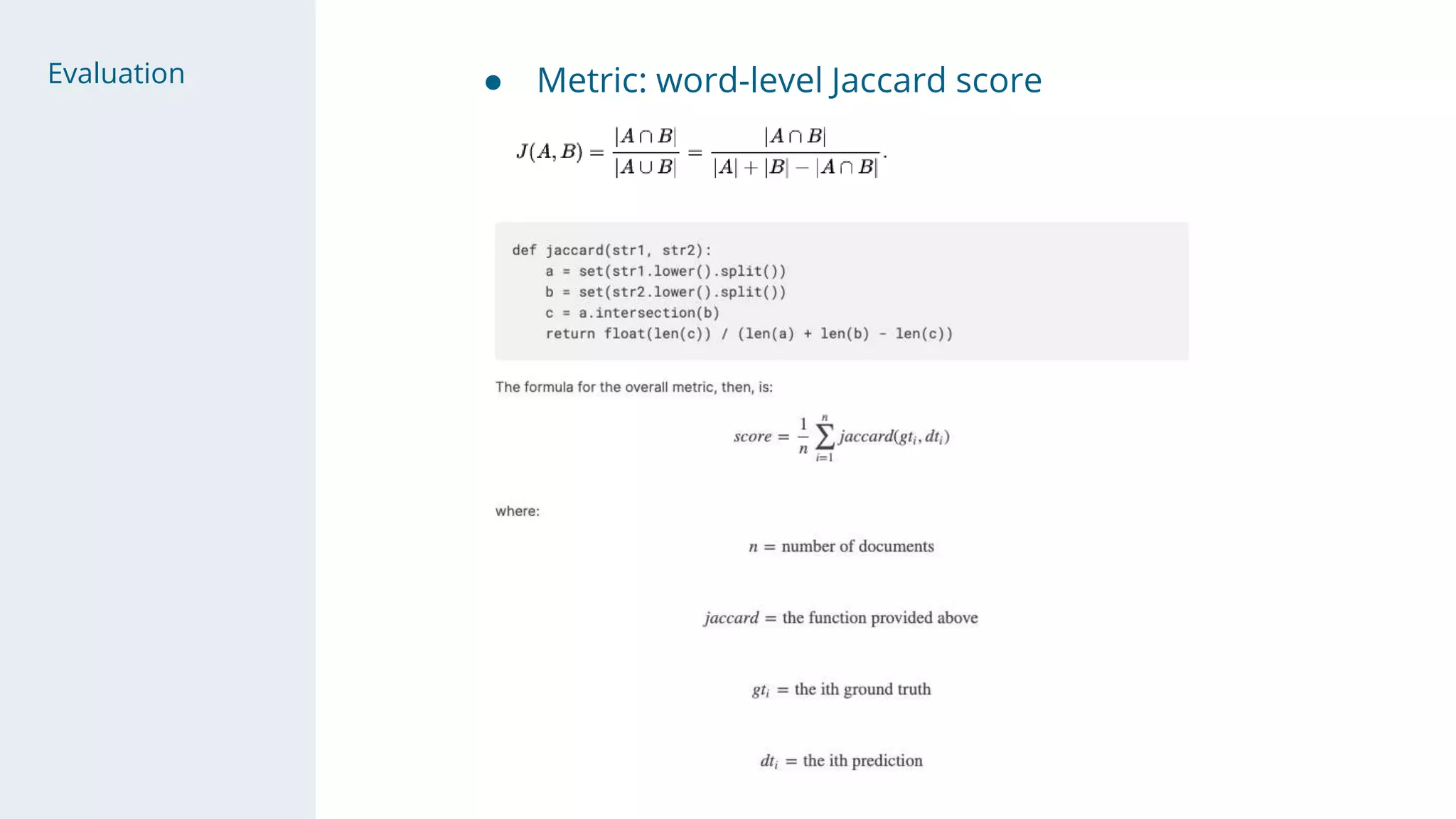

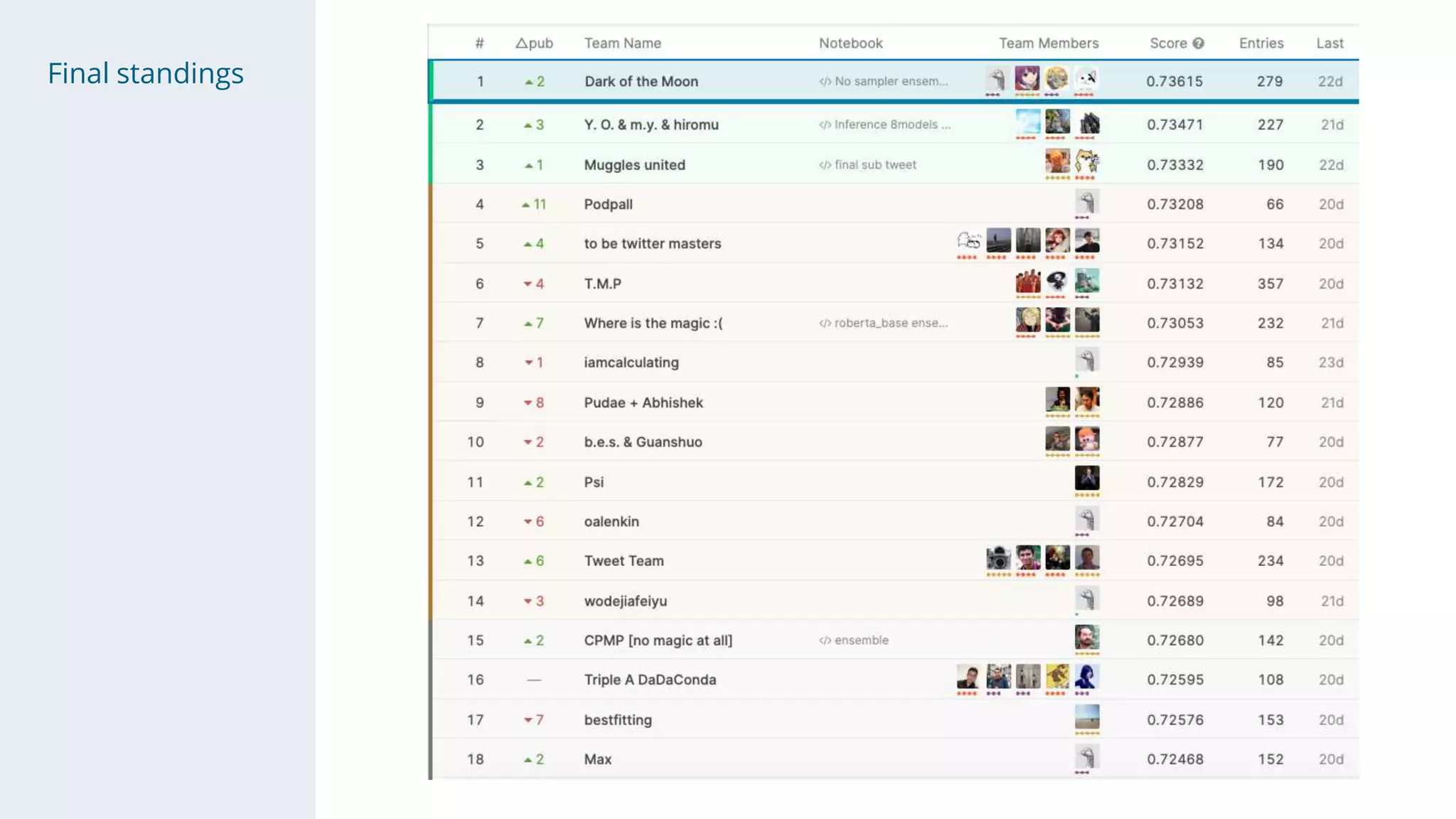

This document summarizes the 1st-place solution to the Kaggle Tweet Sentiment Extraction competition, where the task was to extract the part of each tweet that supports its given sentiment label. Key points:

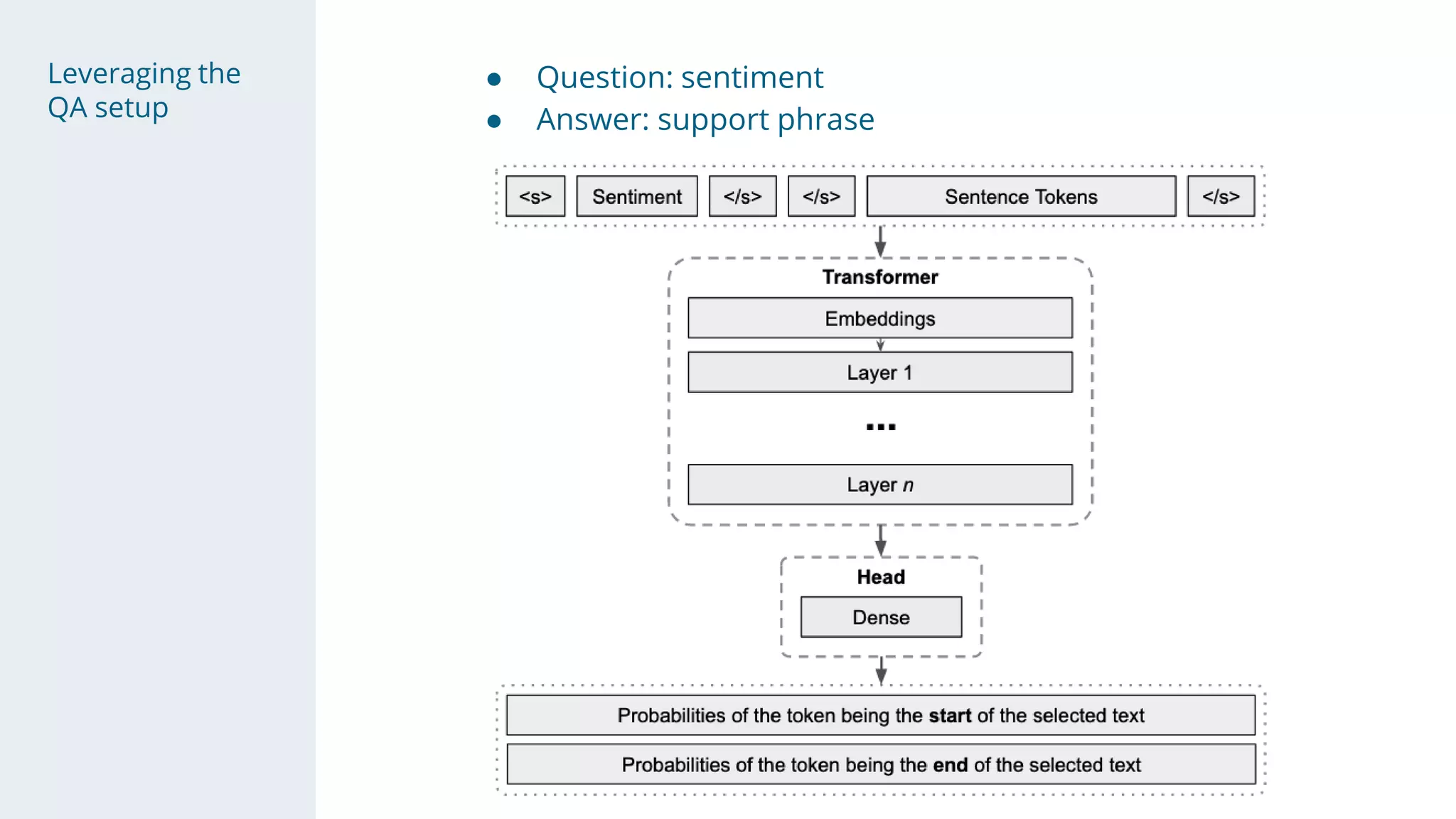

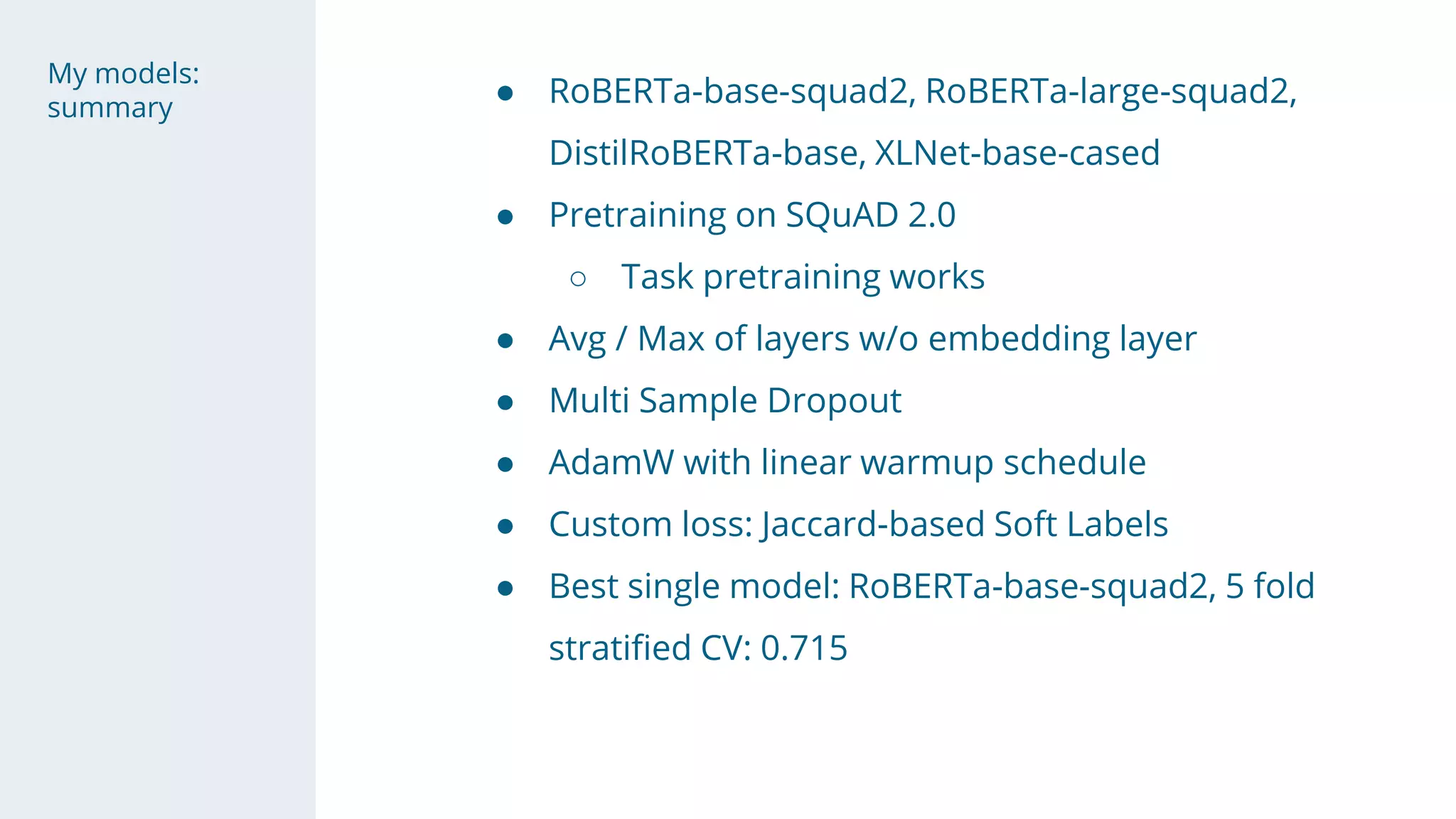

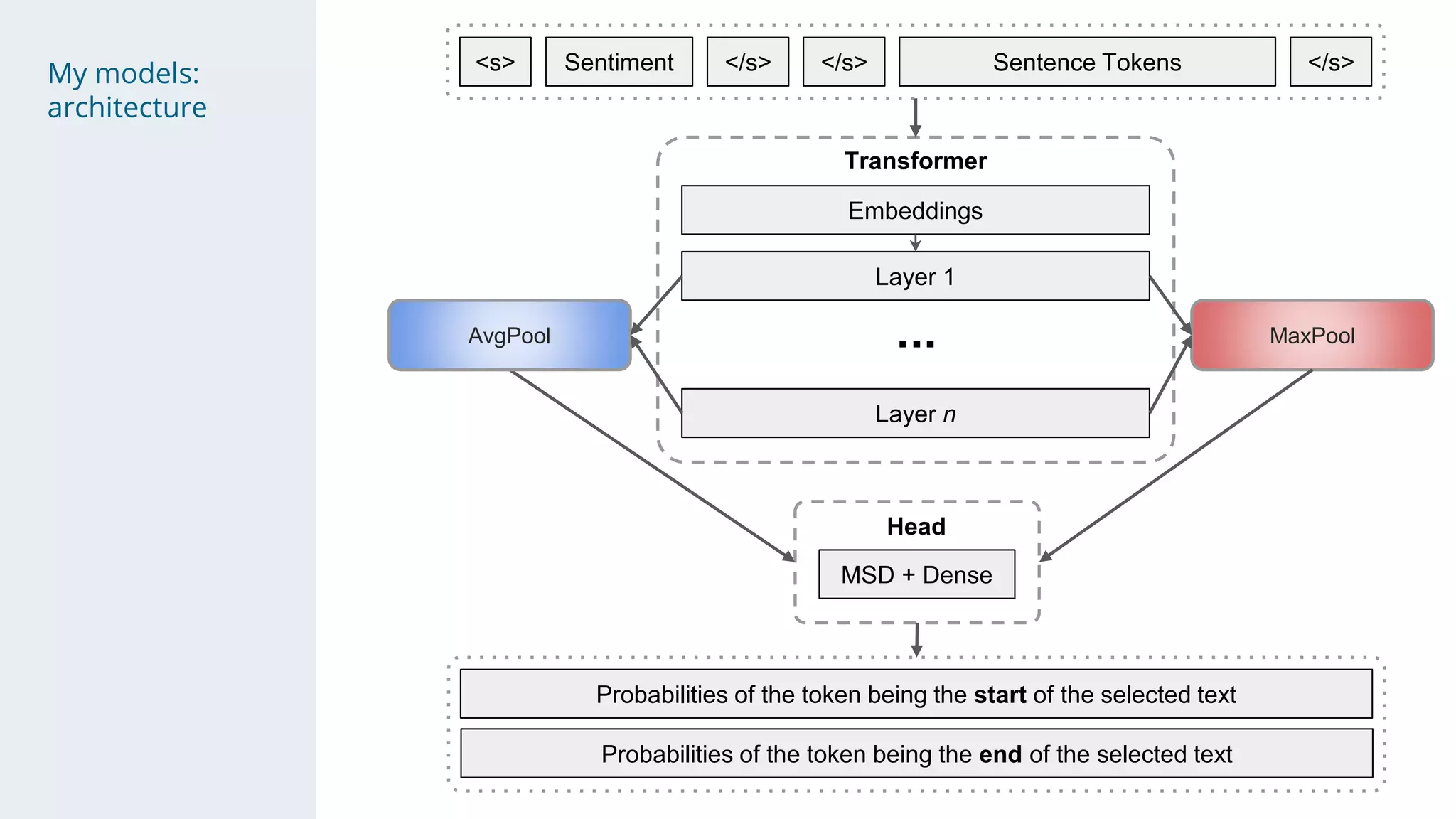

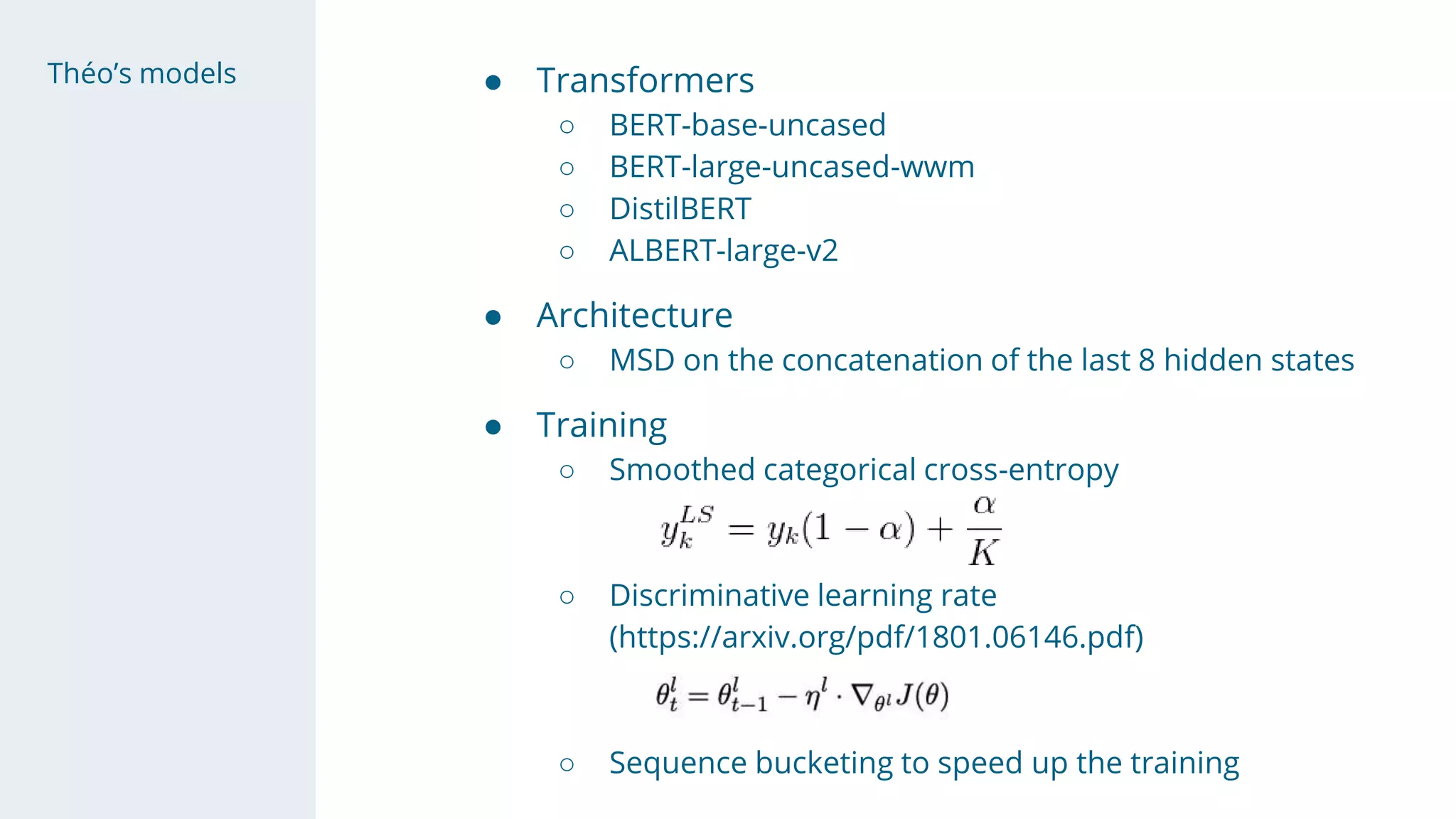

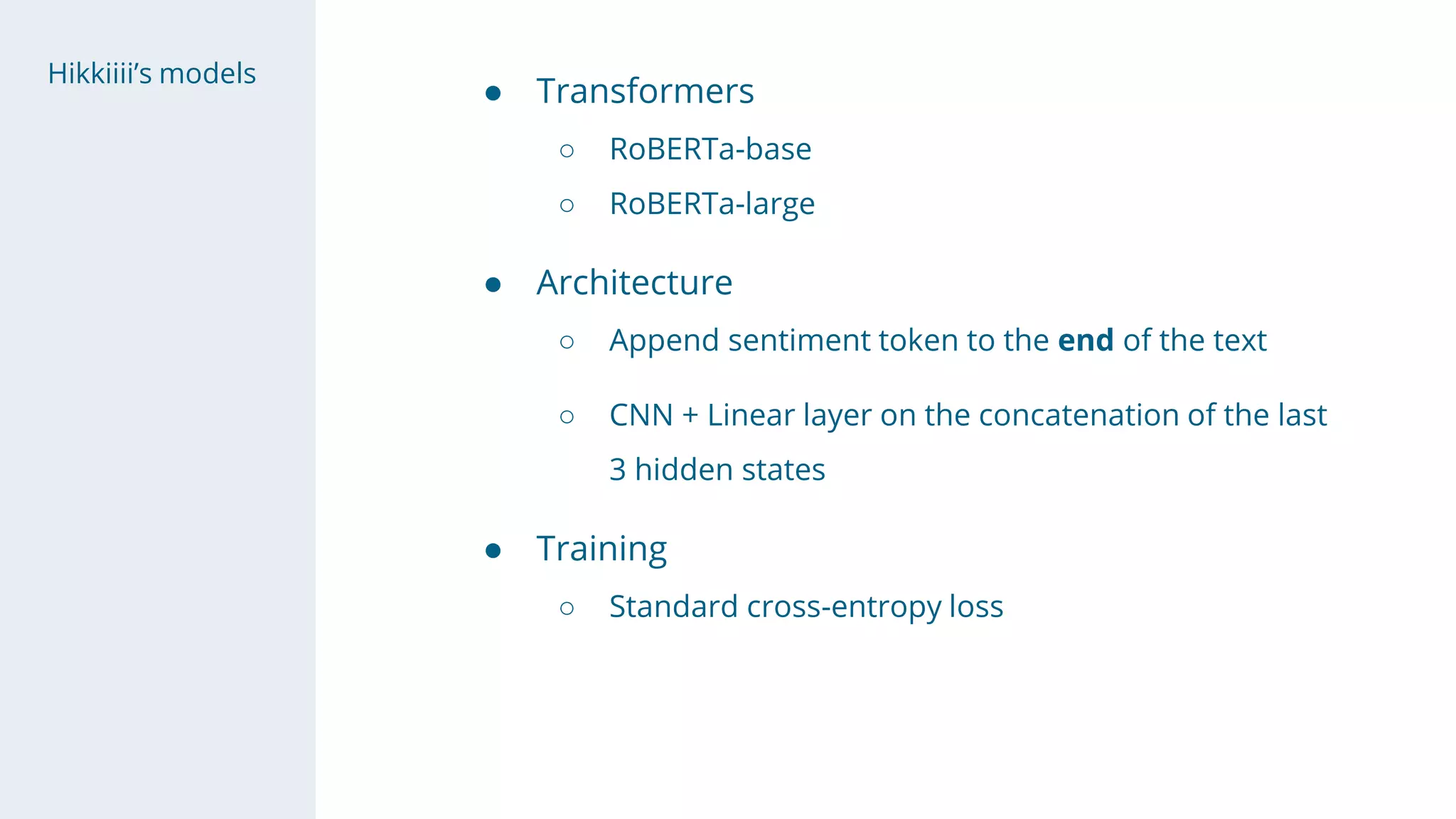

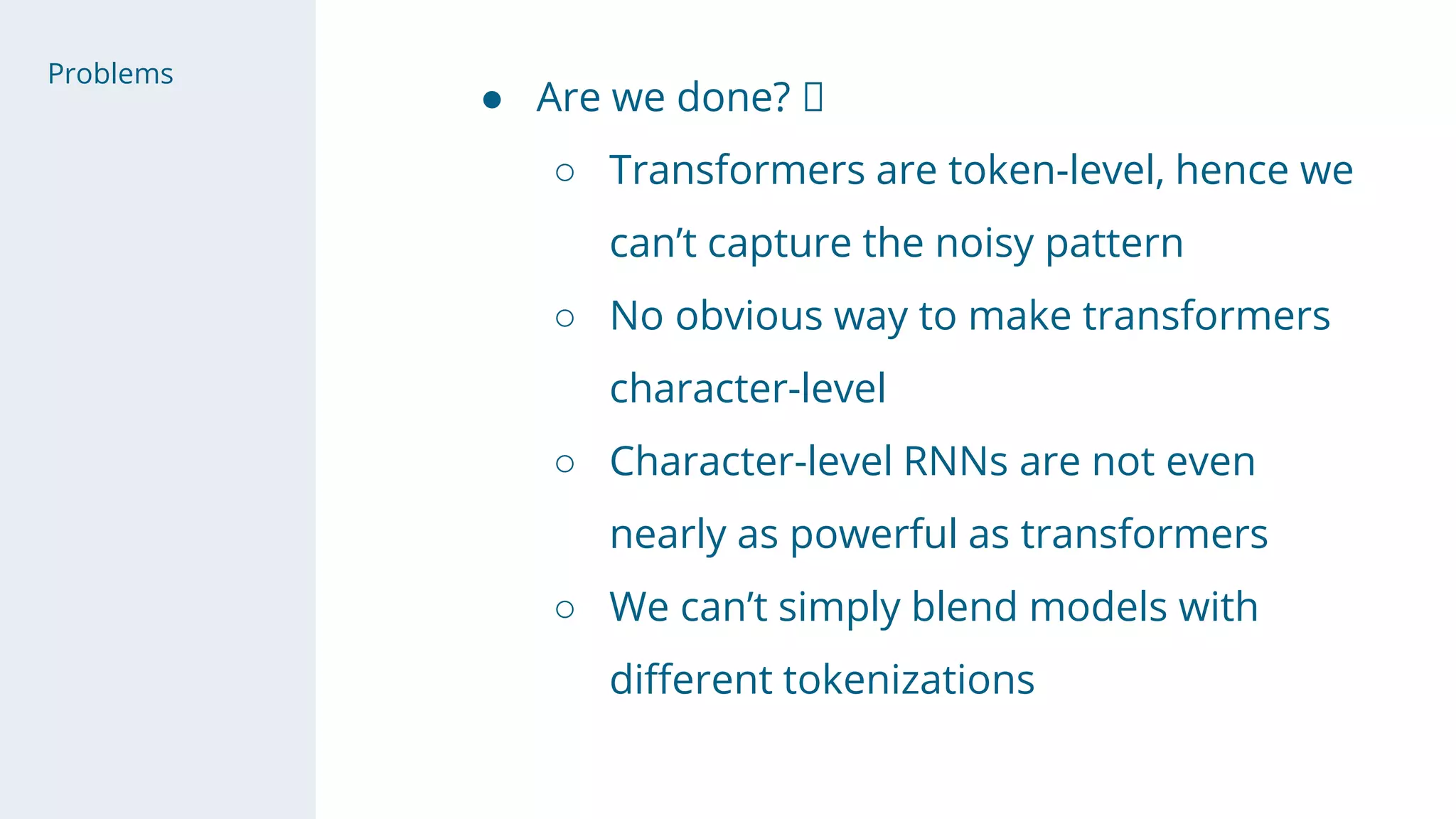

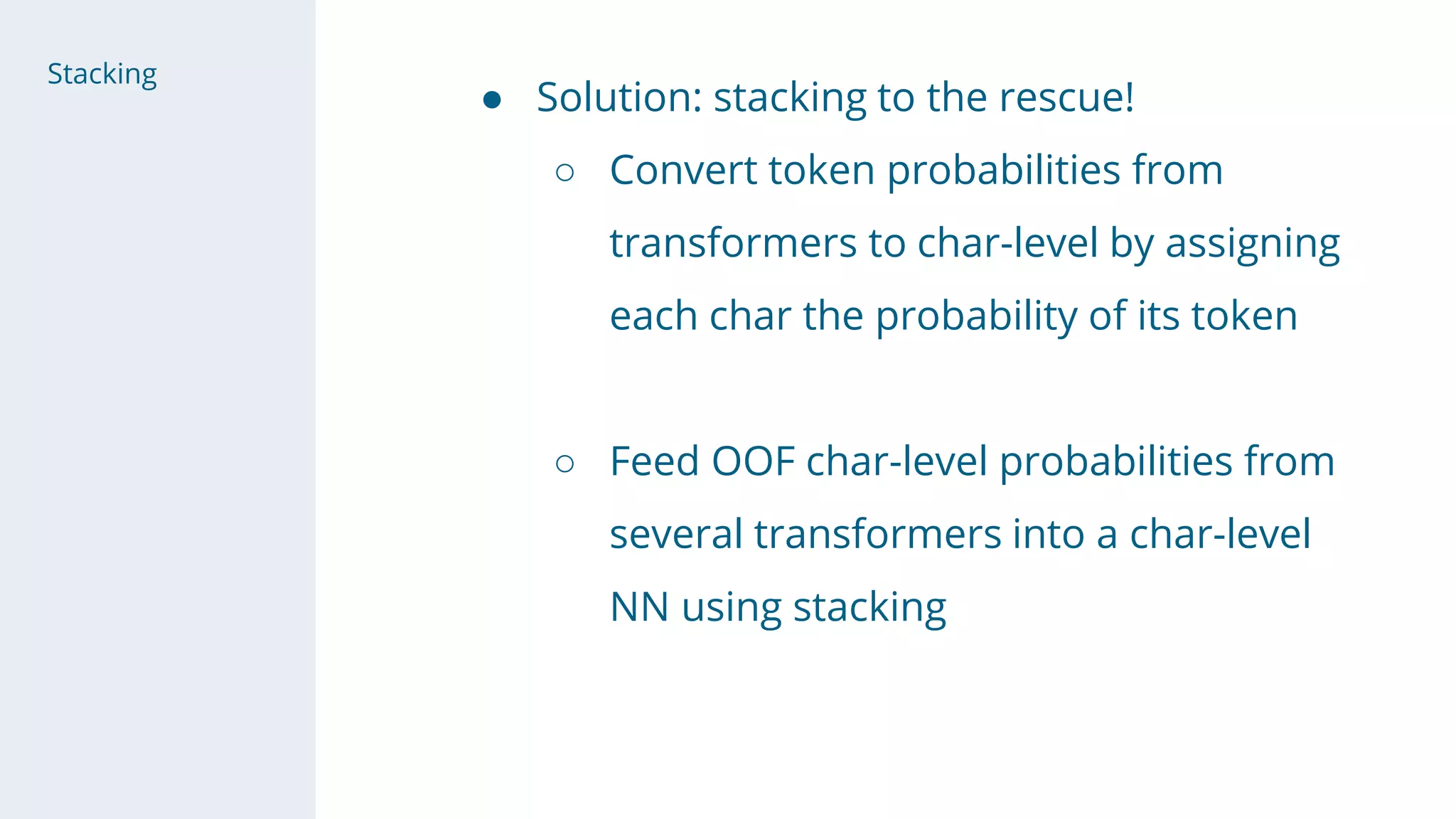

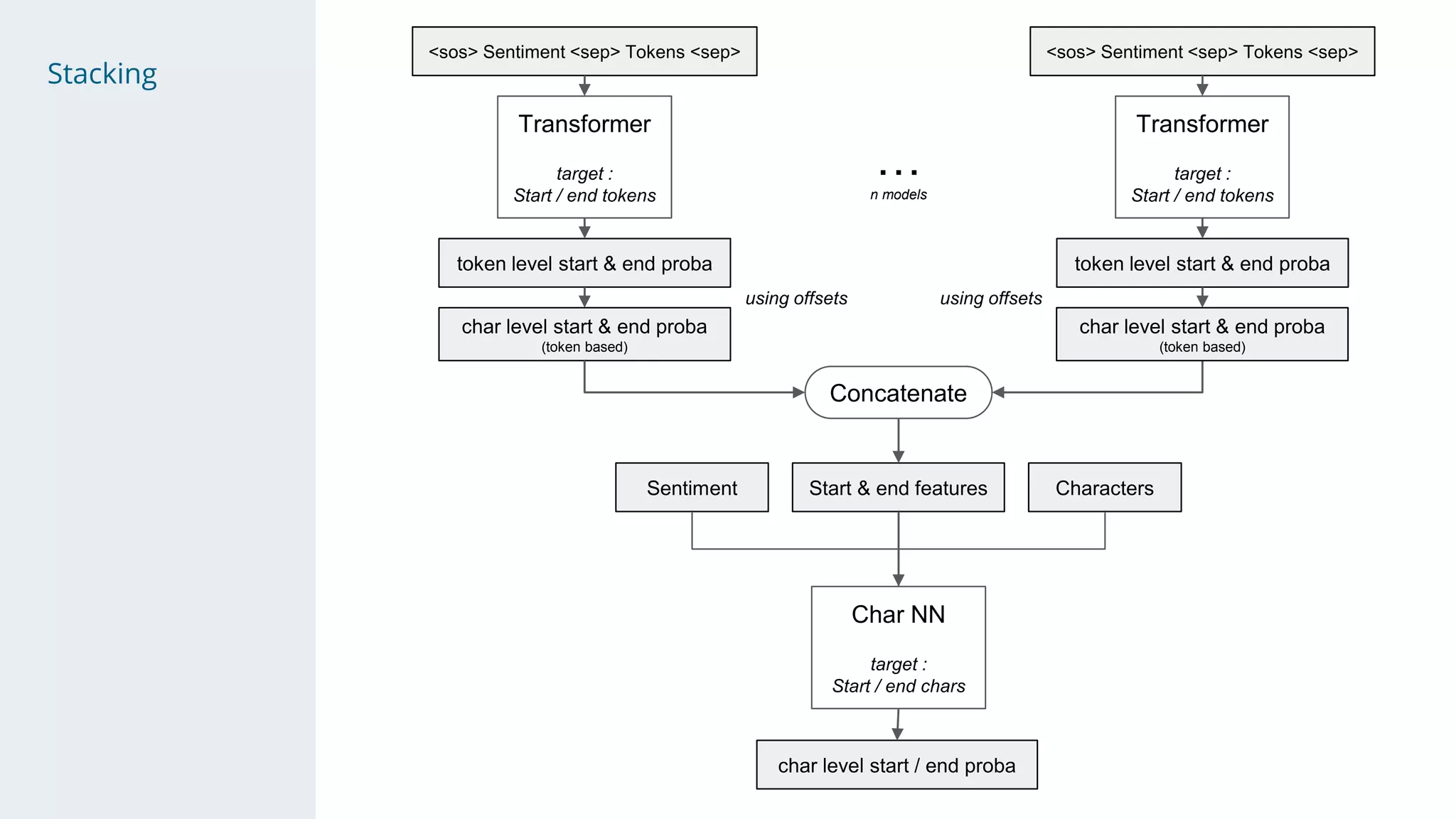

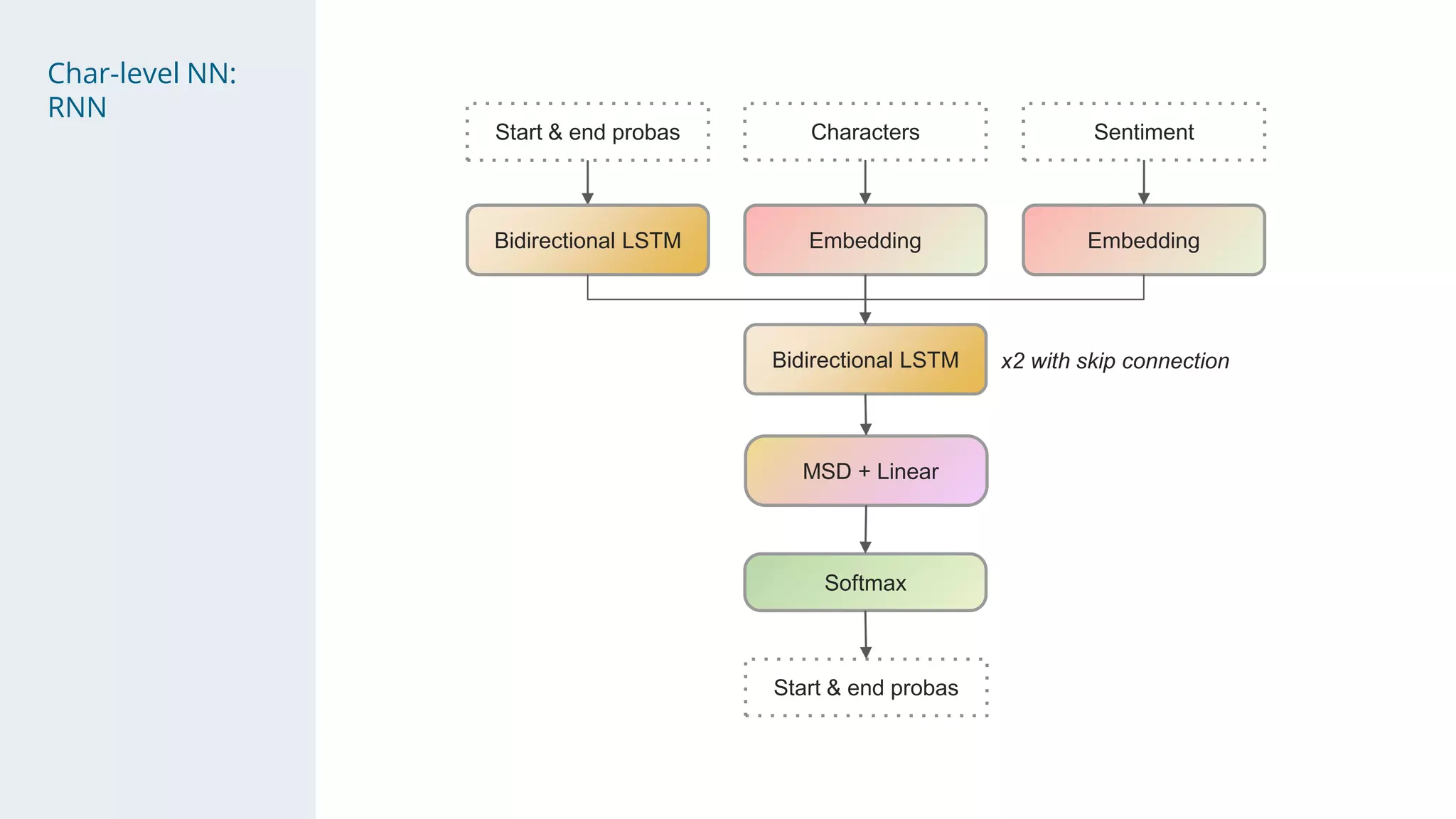

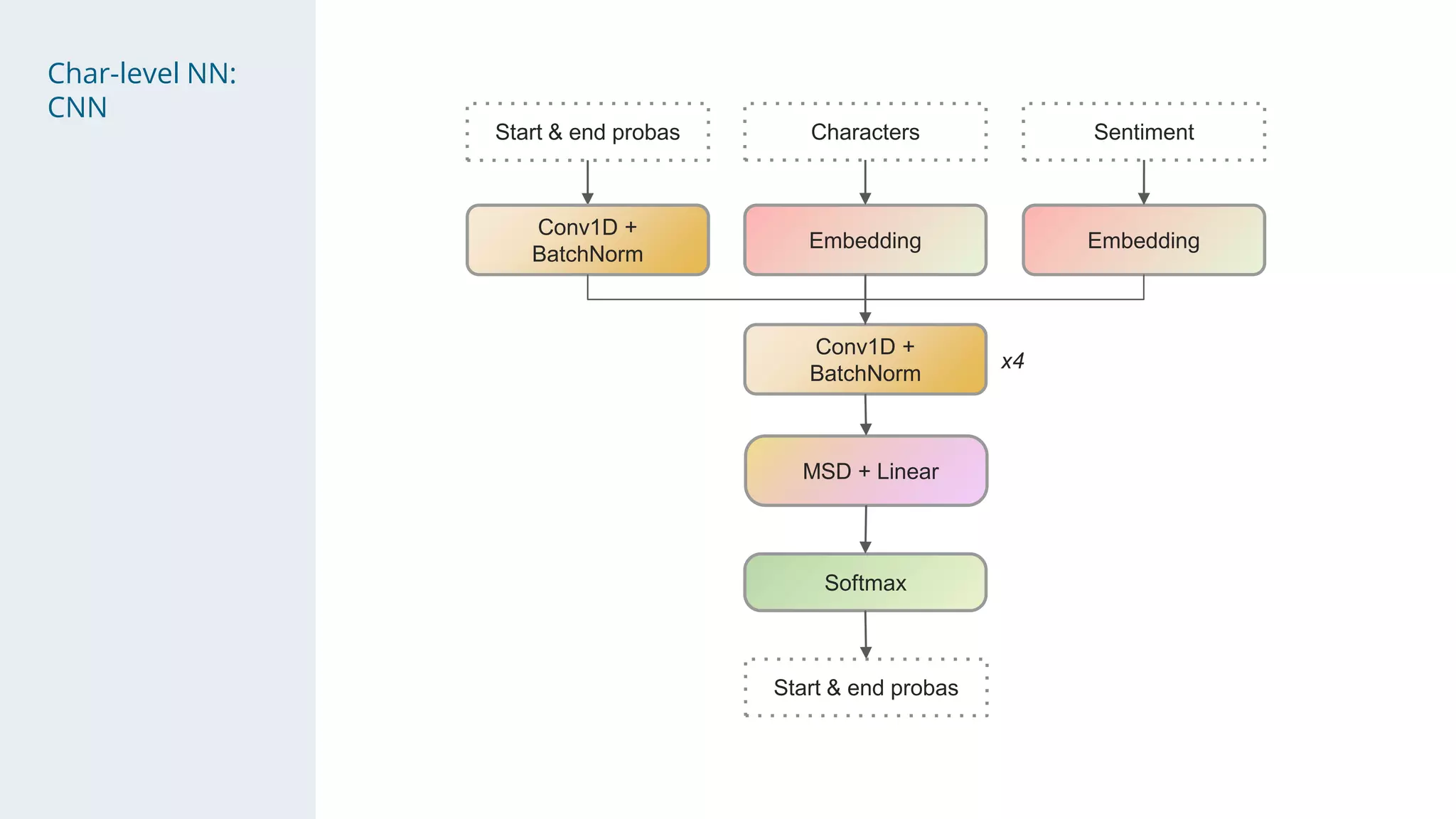

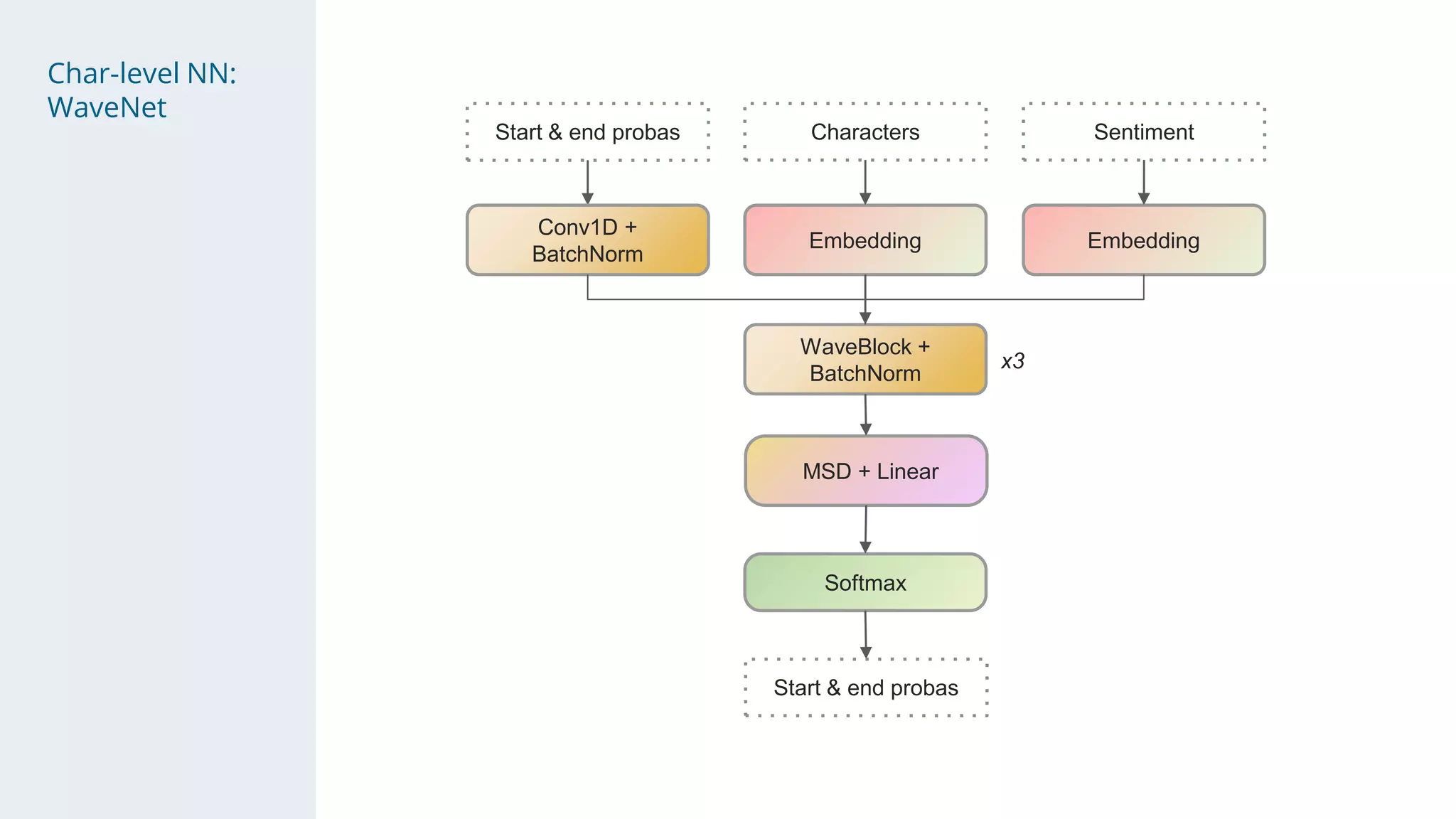

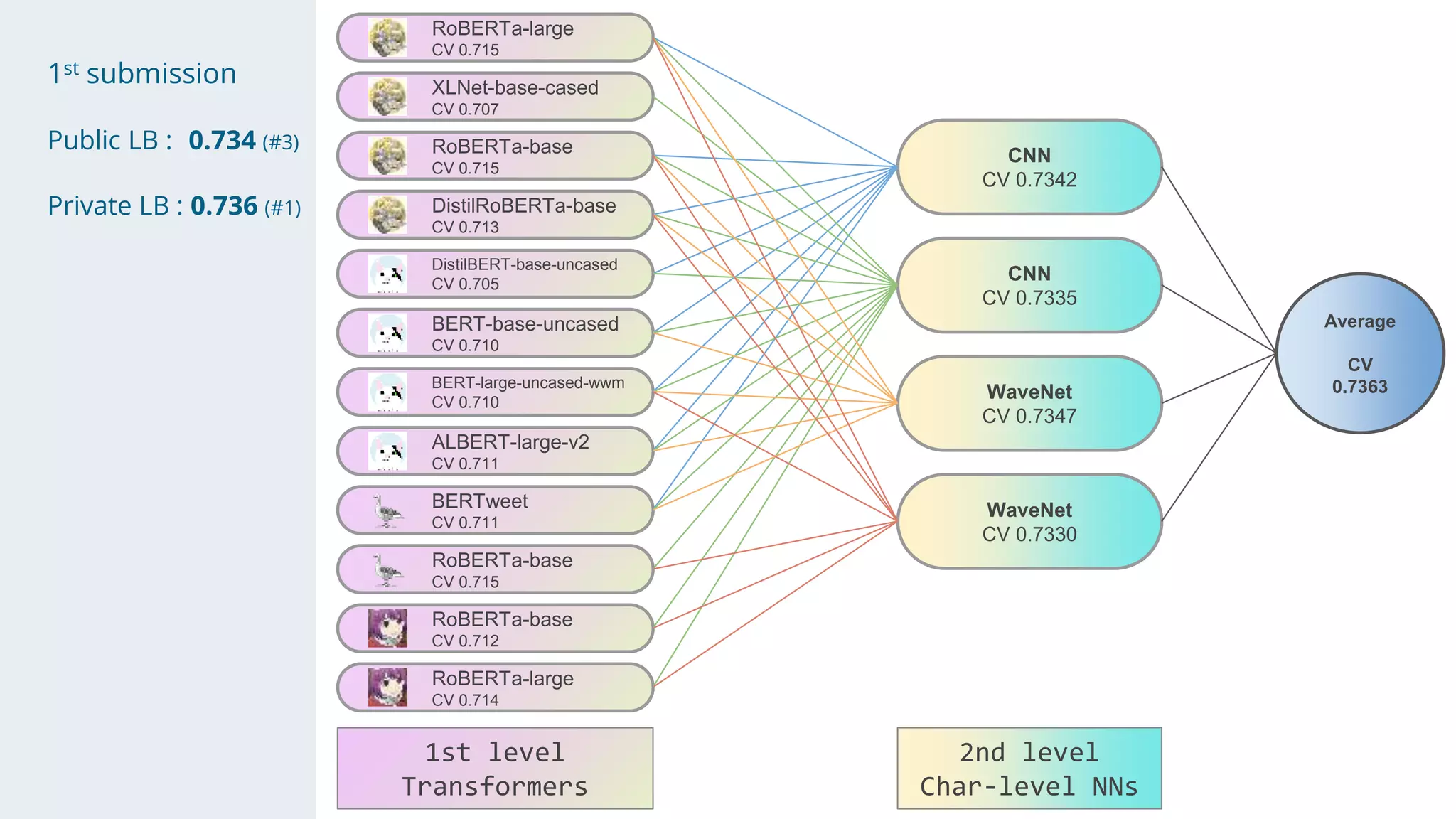

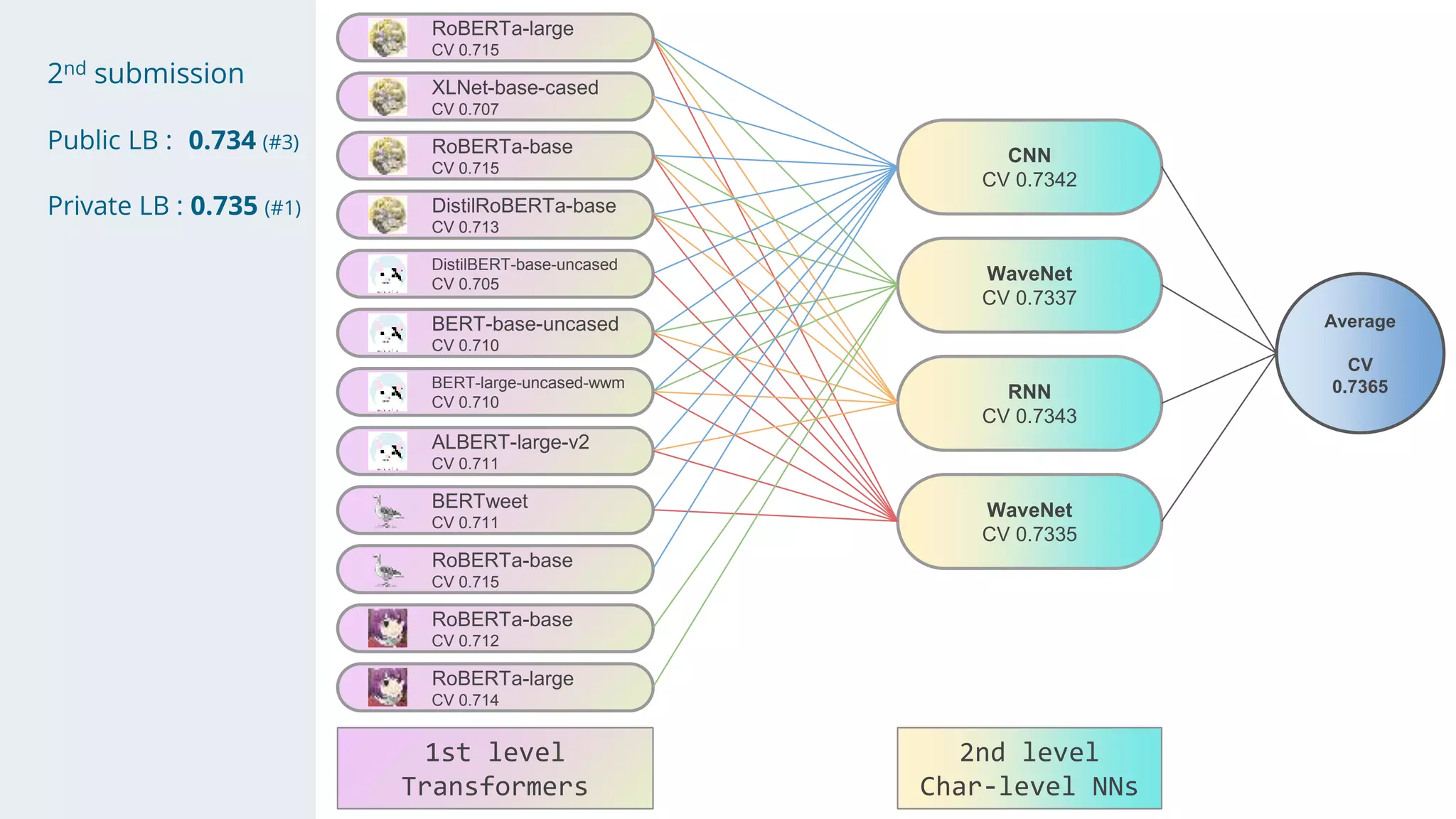

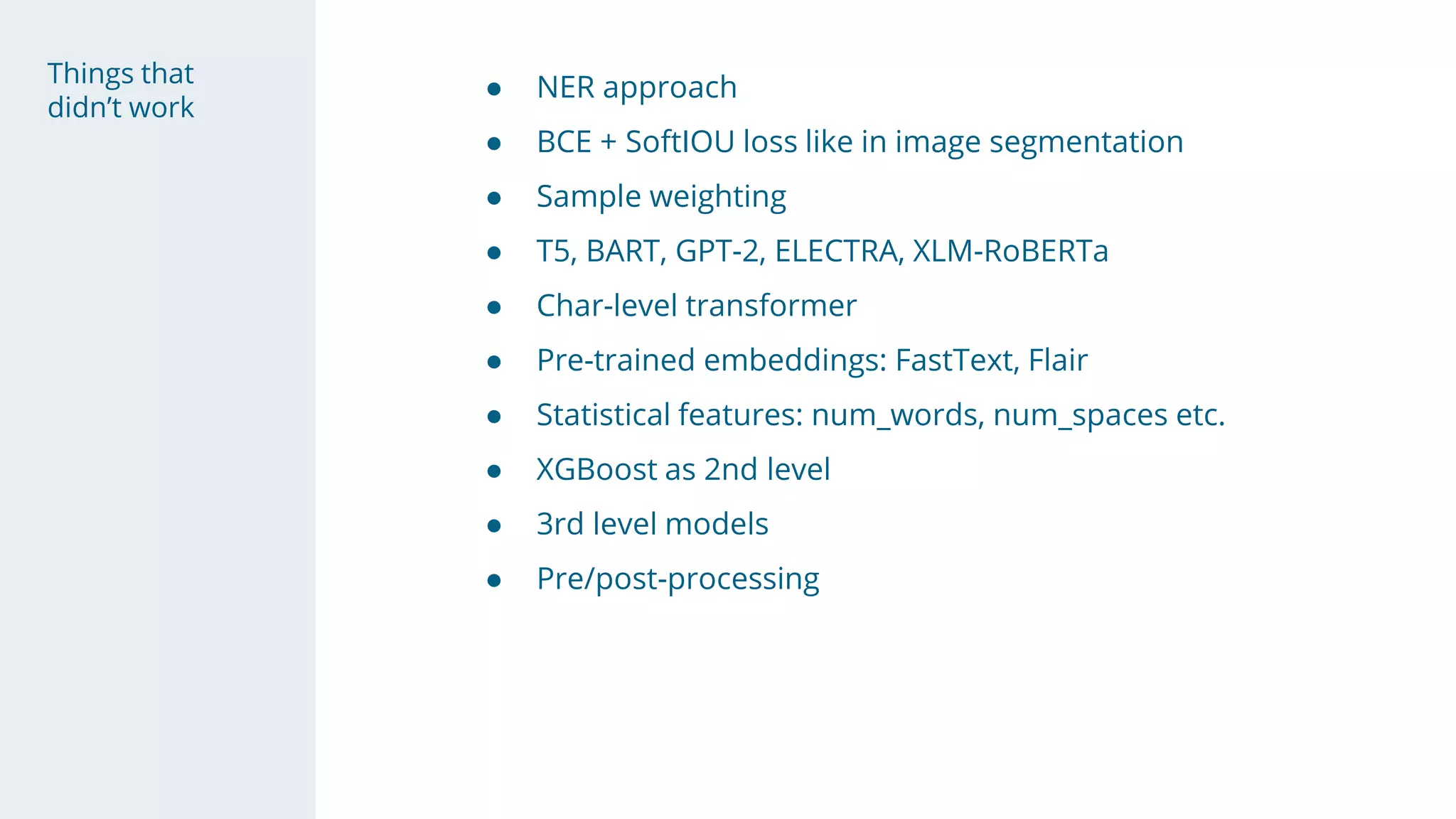

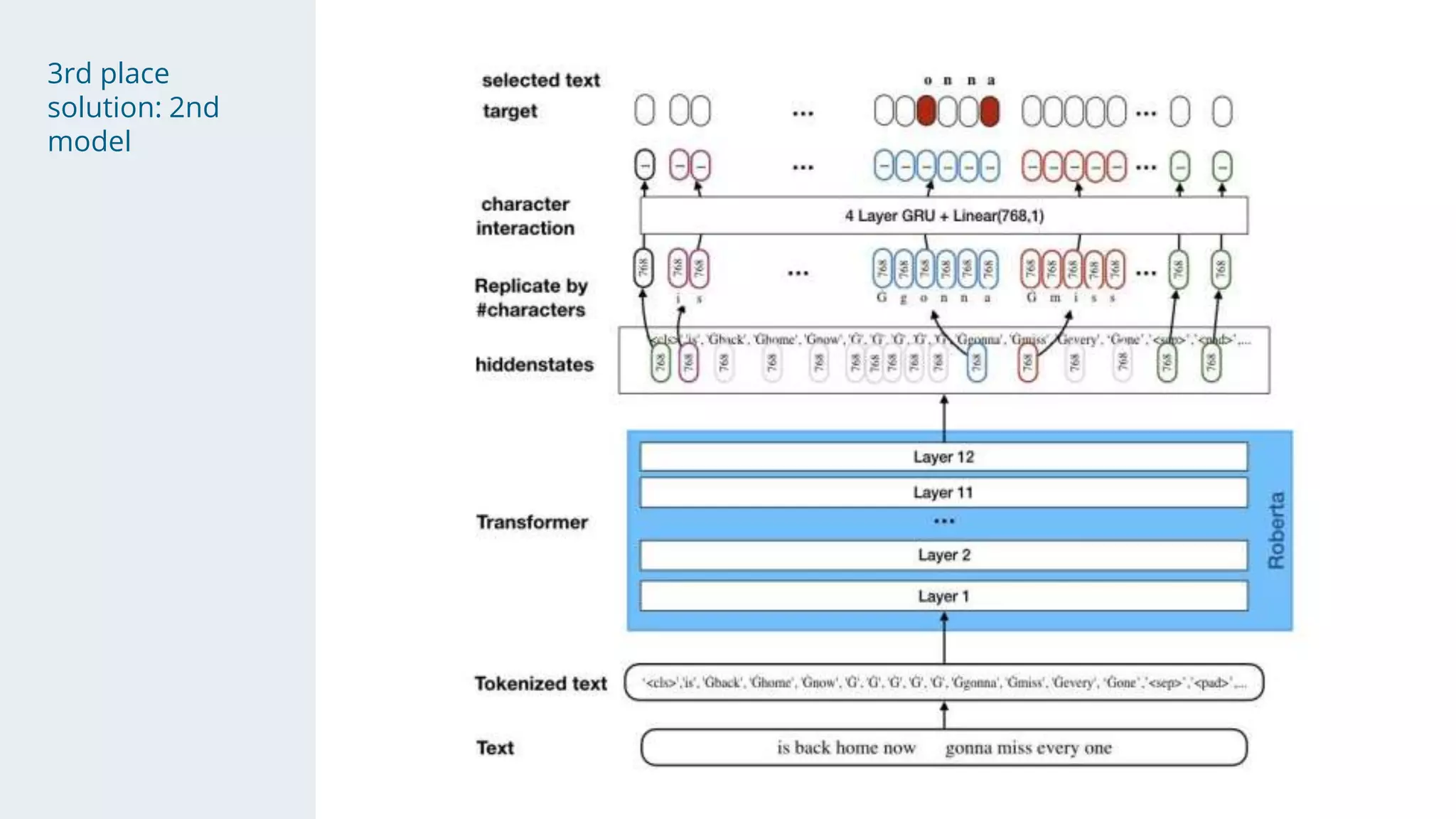

- The solution used a two-level stacking approach. At the first level, transformer models such as RoBERTa and BERT produced token-level predictions. These were mapped onto the characters of each tweet and fed as features into character-level neural networks at the second level.
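
The token-to-character step can be sketched as follows. This is a minimal illustration, not the team's code. It assumes that each first-level model outputs a start and an end probability per token, together with the character offsets of each token (Hugging Face fast tokenizers can return these with `return_offsets_mapping=True`), and that every character of a token simply inherits that token's probability. The helper names `token_to_char_probs` and `build_char_features` are hypothetical.

```python
from typing import List, Sequence, Tuple

# (start, end) character span of each token, end exclusive.
Offsets = Sequence[Tuple[int, int]]


def token_to_char_probs(
    text: str, offsets: Offsets, token_probs: Sequence[float]
) -> List[float]:
    """Spread token-level probabilities over characters (hypothetical helper).

    Every character inside a token's span inherits that token's probability;
    characters not covered by any token (e.g. spaces) get 0.0.
    """
    char_probs = [0.0] * len(text)
    for (start, end), p in zip(offsets, token_probs):
        for i in range(start, end):
            char_probs[i] = p
    return char_probs


def build_char_features(
    text: str,
    model_outputs: Sequence[Tuple[Offsets, Sequence[float], Sequence[float]]],
) -> List[List[float]]:
    """Stack start/end probabilities from several first-level models.

    Each entry of `model_outputs` is (offsets, start_probs, end_probs) for one
    model, so models with different tokenizers still line up per character.
    Returns one feature vector per character:
    [m1_start, m1_end, m2_start, m2_end, ...].
    """
    columns = []
    for offsets, start_probs, end_probs in model_outputs:
        columns.append(token_to_char_probs(text, offsets, start_probs))
        columns.append(token_to_char_probs(text, offsets, end_probs))
    return [list(feats) for feats in zip(*columns)]


# Toy usage with made-up probabilities for a two-token tweet.
text = "good day"
toy_model = ([(0, 4), (5, 8)], [0.9, 0.1], [0.2, 0.7])
features = build_char_features(text, [toy_model])
print(features[0], features[4], features[5])  # [0.9, 0.2] [0.0, 0.0] [0.1, 0.7]
```

Working at the character level gives RoBERTa (byte-level BPE) and BERT (WordPiece) outputs a common axis, which is one plausible reason a character-level second stage suits this kind of stacking.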

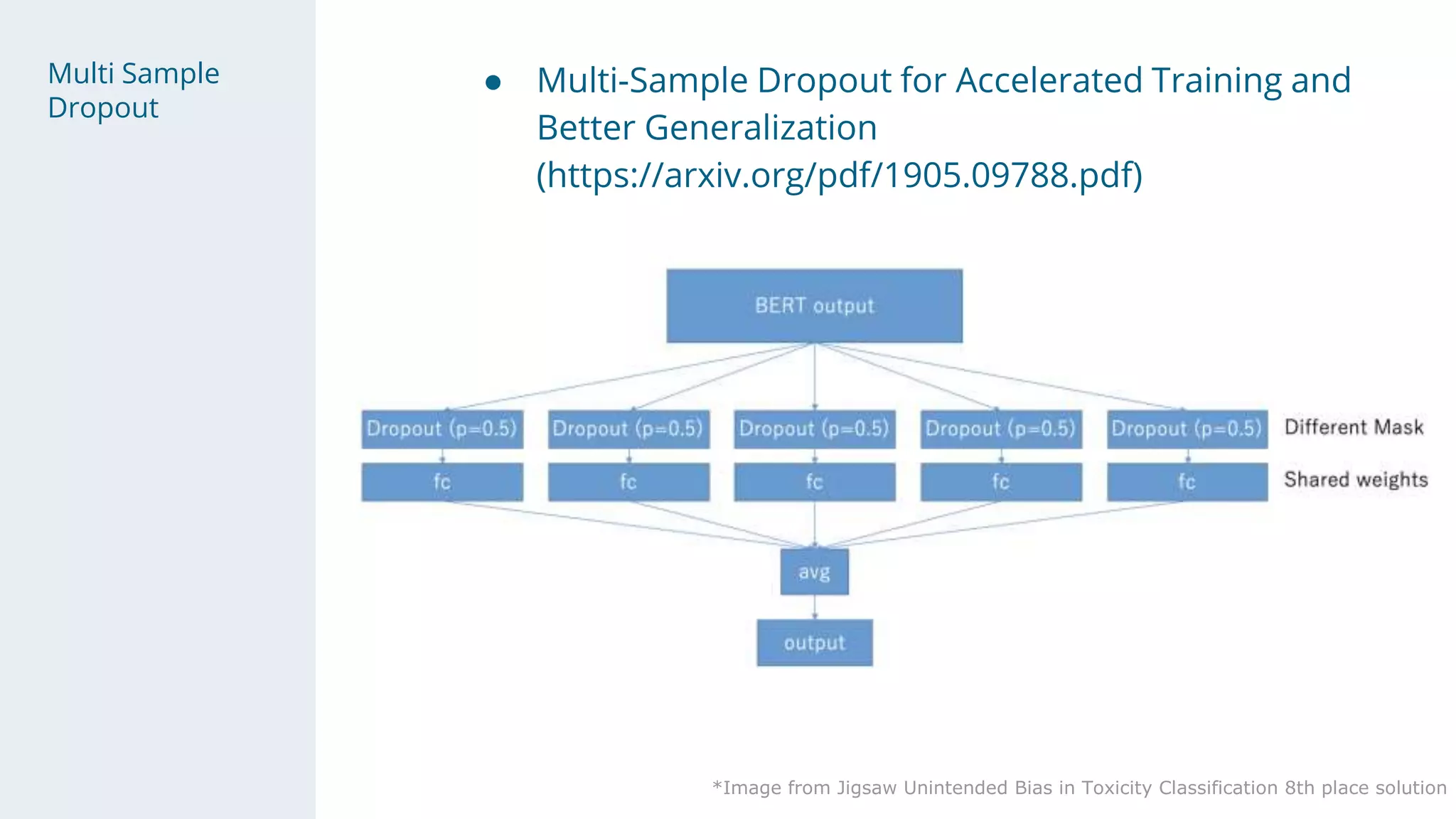

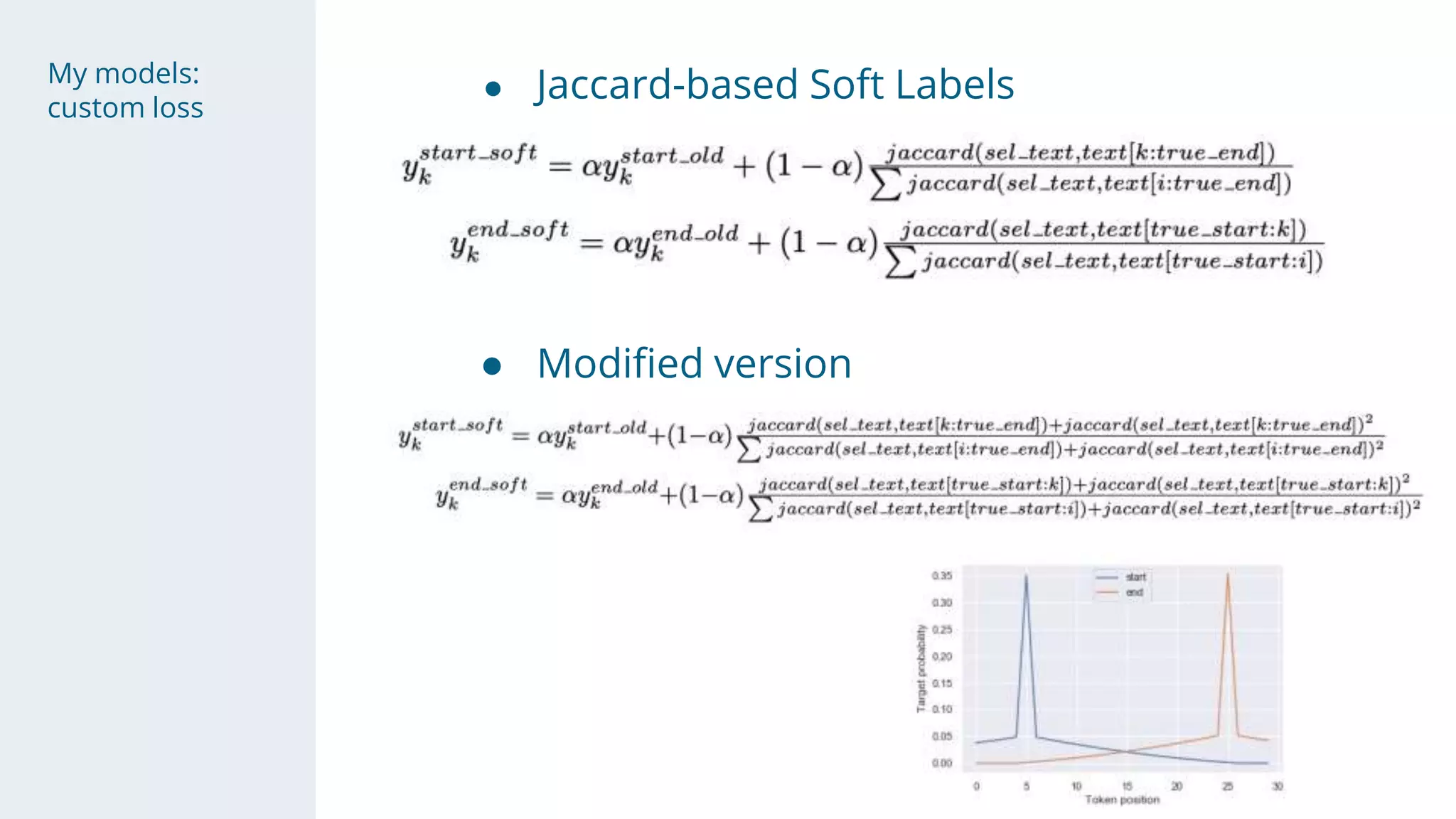

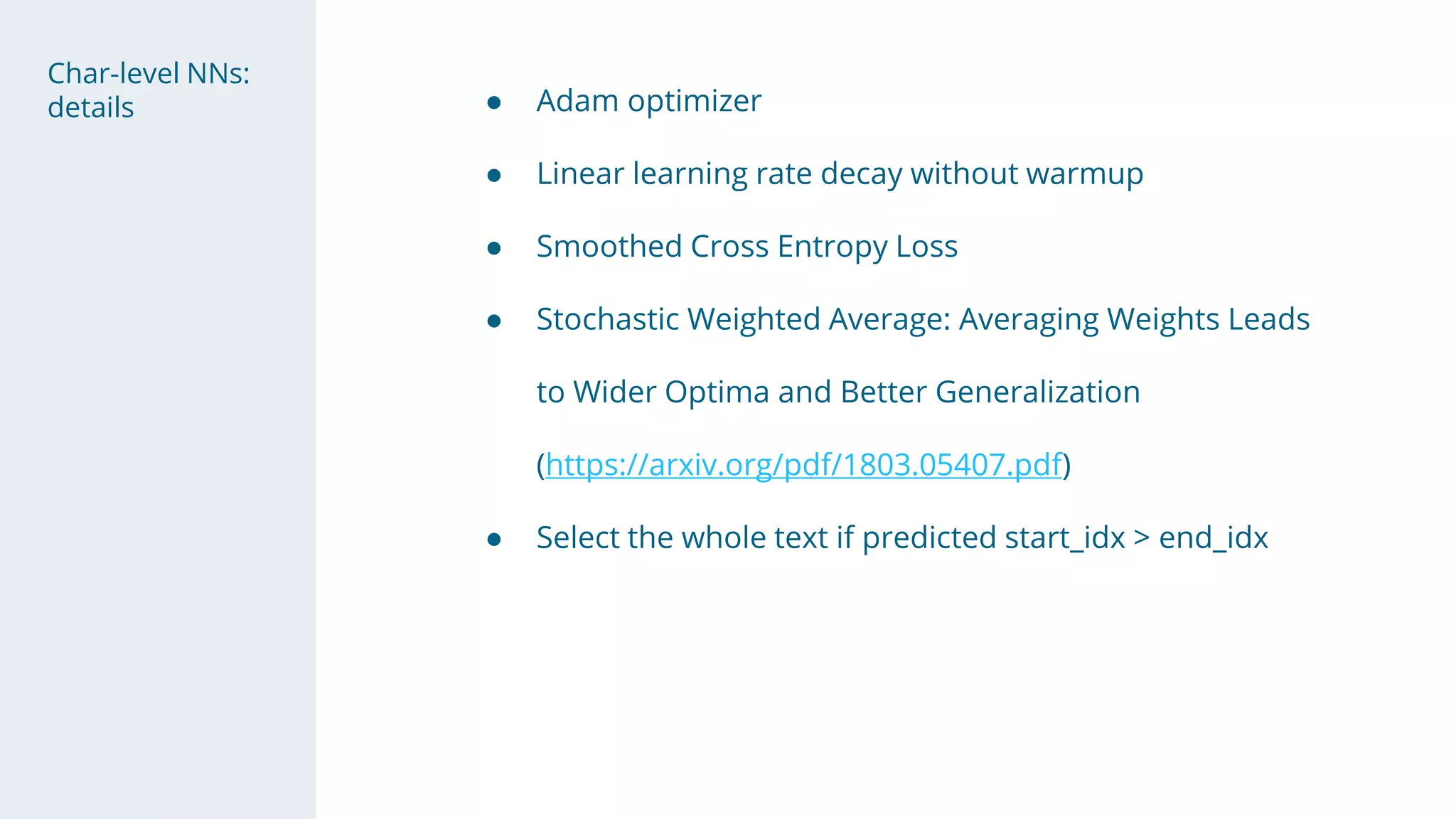

- The character-level models included CNNs, RNNs, and WaveNet, trained with techniques such as multi-sample dropout and custom losses; their predictions were combined by model averaging.
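
A toy version of multi-sample dropout and model averaging is sketched below in pure Python so that it stays self-contained. In a real model these would be tensor operations inside a deep-learning framework. The function names, the single linear head, and the choice to average logits (averaging the per-sample losses is another common variant) are assumptions made for illustration, not details from the solution.

```python
import random
from typing import List, Optional, Sequence


def dropout(x: Sequence[float], p: float, rng: random.Random) -> List[float]:
    """Inverted dropout: zero each value with probability p, scale the rest by 1/(1-p)."""
    return [0.0 if rng.random() < p else v / (1.0 - p) for v in x]


def linear(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Single-output linear head, shared by every dropout sample."""
    return sum(xi * wi for xi, wi in zip(x, w)) + b


def multi_sample_dropout_logit(
    x: Sequence[float],
    w: Sequence[float],
    b: float,
    n_samples: int = 5,
    p: float = 0.5,
    rng: Optional[random.Random] = None,
) -> float:
    """Apply n_samples independent dropout masks to the same features,
    run each through the shared head, and average the logits."""
    if rng is None:
        rng = random.Random(0)
    logits = [linear(dropout(x, p, rng), w, b) for _ in range(n_samples)]
    return sum(logits) / n_samples


def average_models(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Model averaging: element-wise mean of per-character probabilities."""
    n = len(predictions)
    return [sum(col) / n for col in zip(*predictions)]


# Two hypothetical models' per-character probabilities for a 2-character input.
blended = average_models([[0.2, 0.4], [0.6, 0.8]])
```

Dropout is normally switched off at inference, so multi-sample dropout acts mainly as a training-time regularizer; with `p=0.0` the function above reduces to the plain head output.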

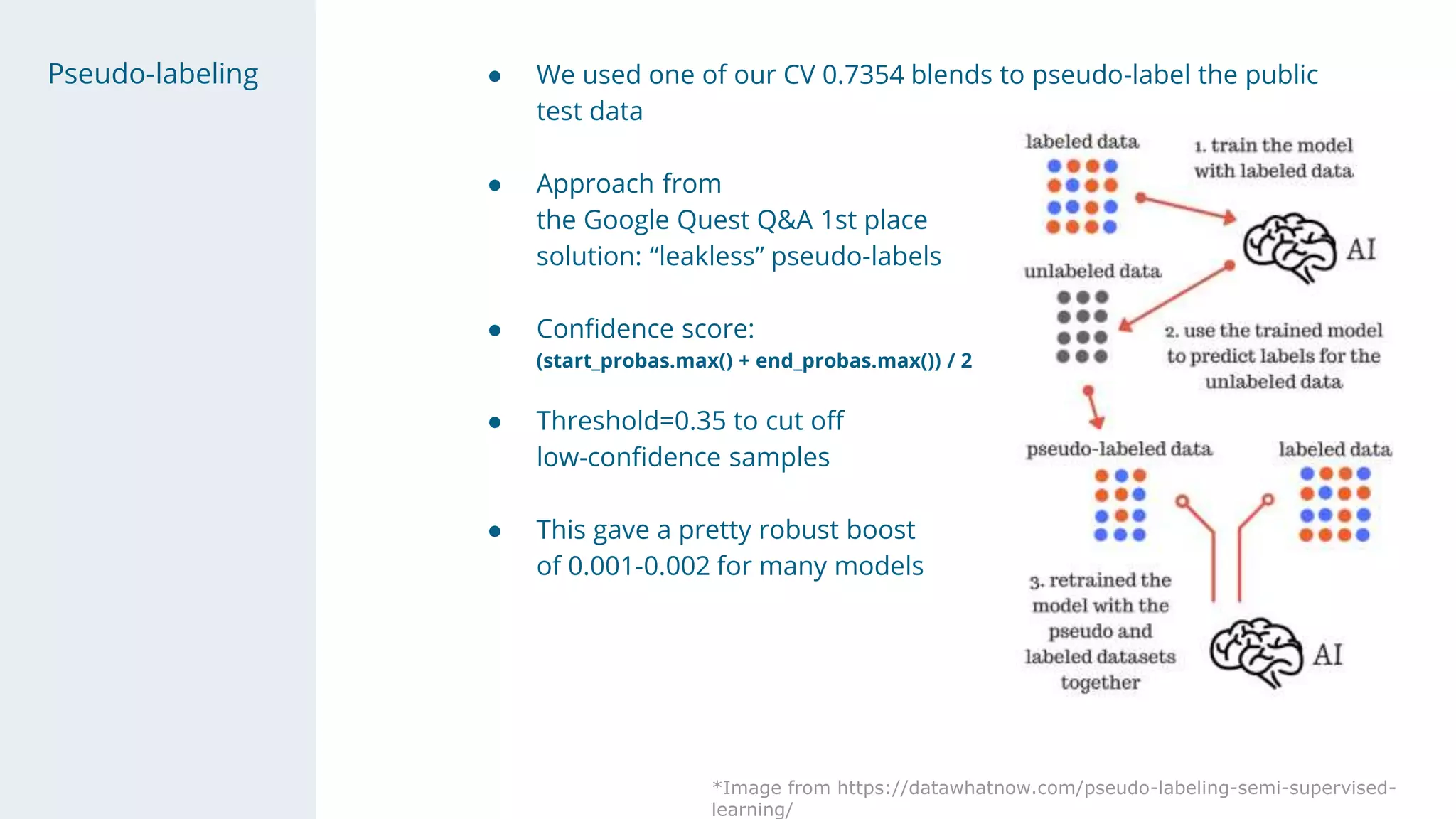

- Pseudo-labeling on public data, keeping only predictions above a confidence threshold, further boosted scores.
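
A minimal sketch of confidence-thresholded pseudo-labeling follows. The summary does not say how confidence was measured or which threshold was used. Purely as an assumption, confidence here is the product of the chosen character-level start and end probabilities, and `0.8` is an arbitrary placeholder threshold. The helpers `decode_span` and `pseudo_label` are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def decode_span(
    start_probs: Sequence[float], end_probs: Sequence[float]
) -> Tuple[int, int, float]:
    """Pick the most likely start, then the most likely end at or after it.

    Returns (start, end_inclusive, confidence), where confidence is the
    product of the two chosen probabilities (an assumed definition).
    """
    start = max(range(len(start_probs)), key=lambda i: start_probs[i])
    end = max(range(start, len(end_probs)), key=lambda i: end_probs[i])
    return start, end, start_probs[start] * end_probs[end]


def pseudo_label(
    text: str,
    start_probs: Sequence[float],
    end_probs: Sequence[float],
    threshold: float = 0.8,  # placeholder value, not from the source
) -> Optional[str]:
    """Return the predicted span as a pseudo-label, or None if not confident enough."""
    start, end, confidence = decode_span(start_probs, end_probs)
    if confidence < threshold:
        return None
    return text[start : end + 1]


# Toy per-character probabilities for an unlabeled tweet.
text = "so happy now"
start_probs = [0.95 if i == 3 else 0.01 for i in range(len(text))]
end_probs = [0.95 if i == 7 else 0.01 for i in range(len(text))]
print(pseudo_label(text, start_probs, end_probs))  # happy
print(pseudo_label(text, start_probs, end_probs, threshold=0.95))  # None
```

Tweets that return a span would be added to the training set with that span as their label; the rest are skipped, which keeps low-confidence (and likely wrong) labels out of training.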

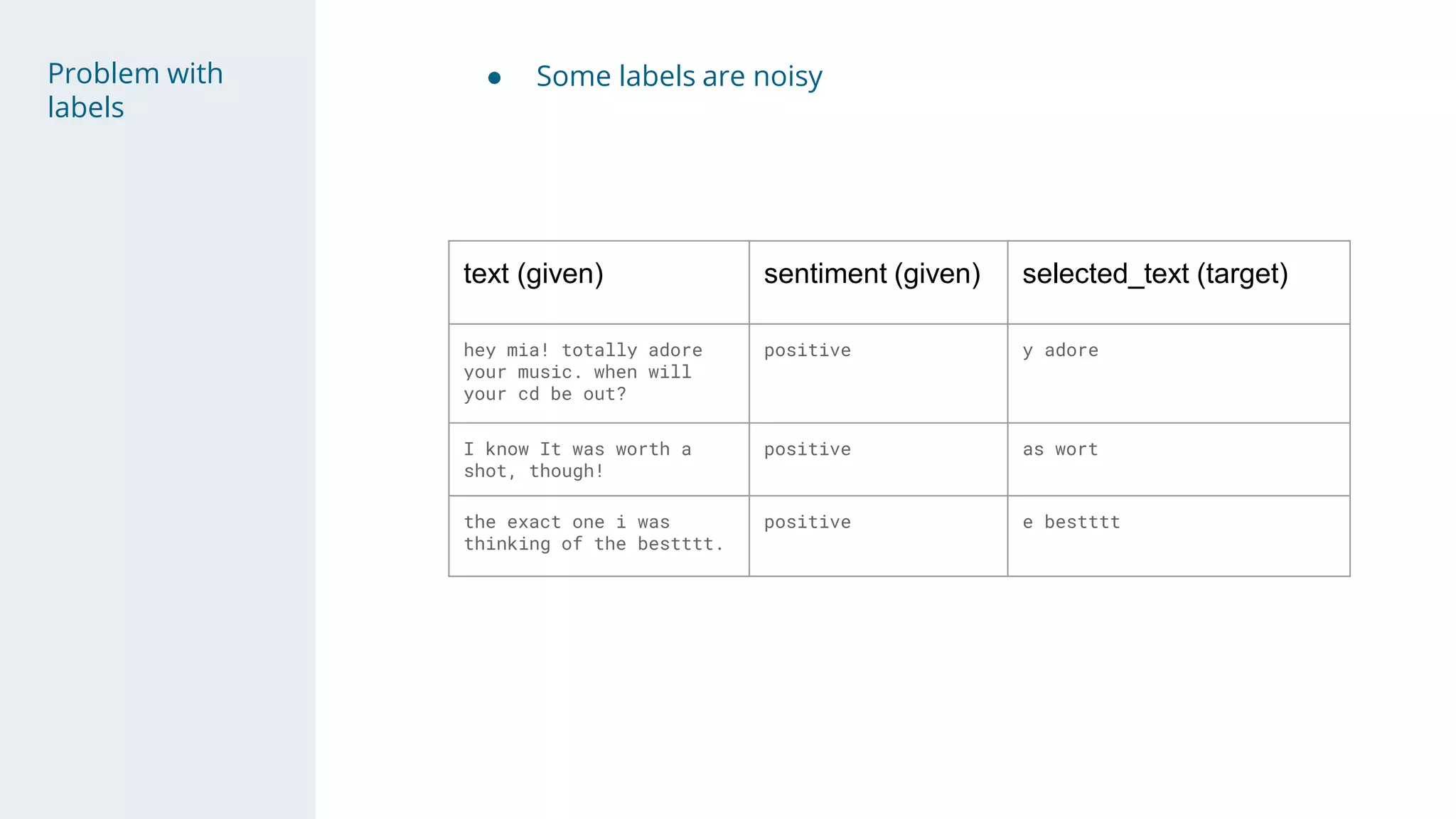

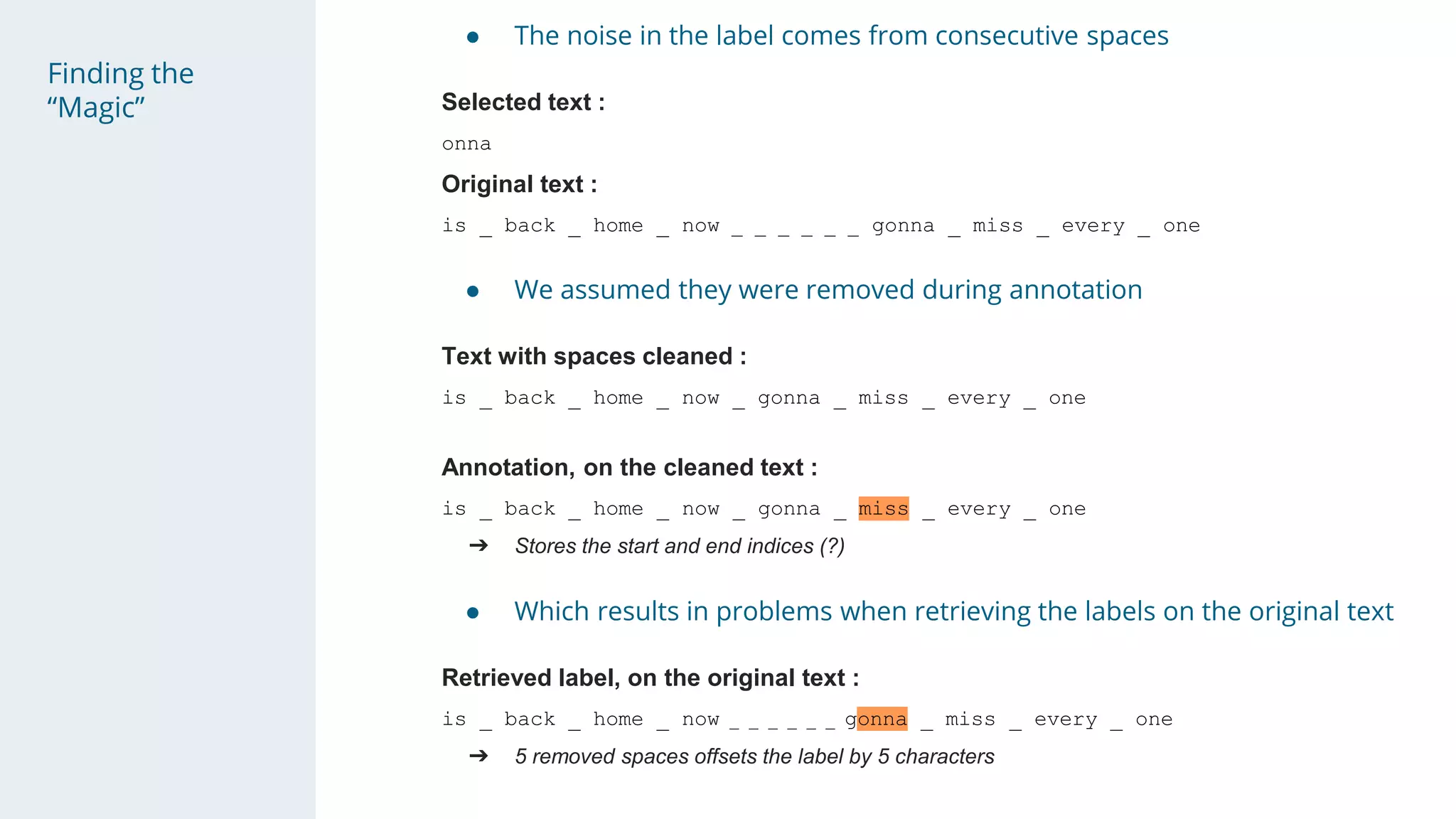

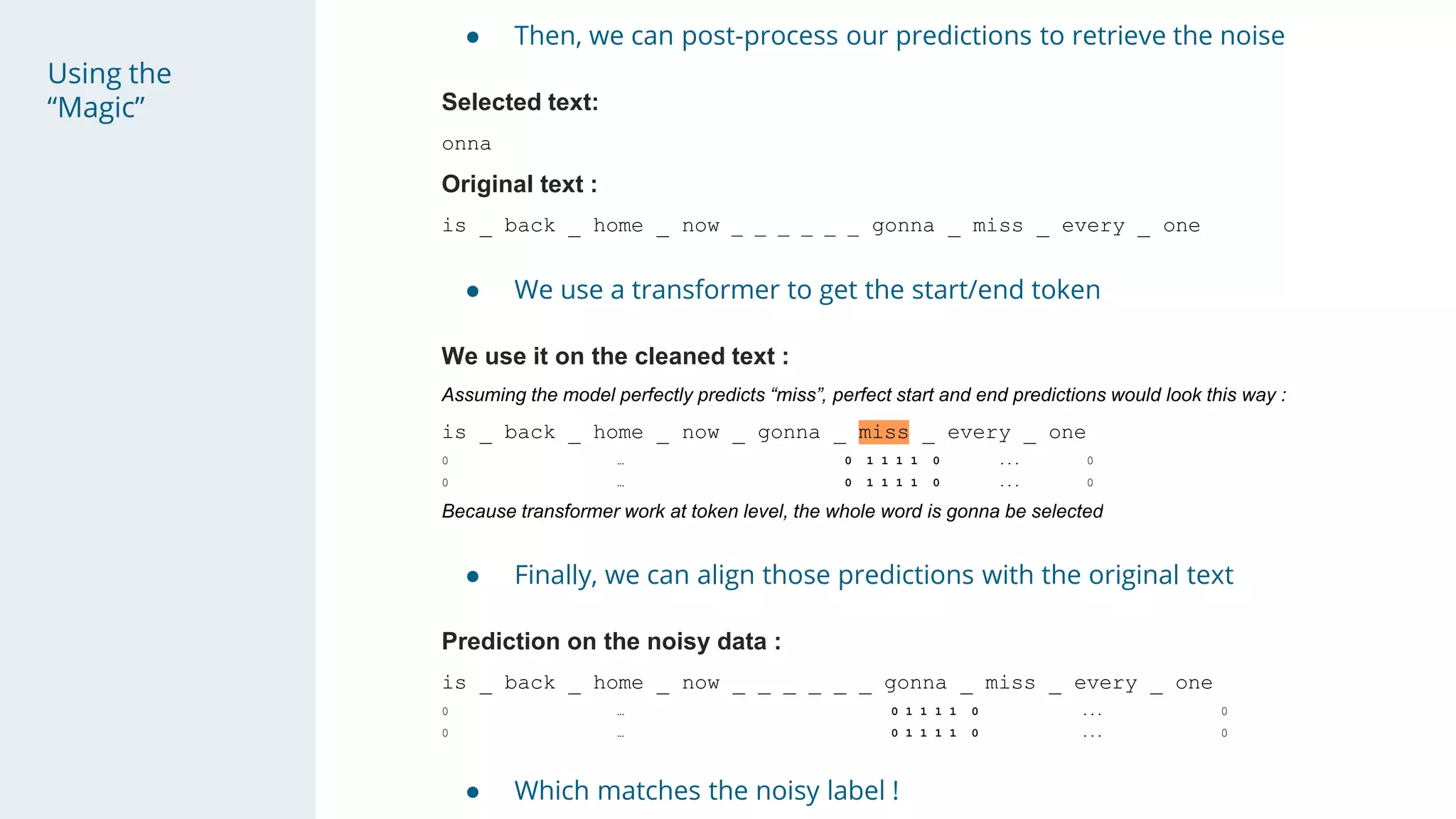

- The "magic" was the discovery that the noisy labels were caused by removed spaces, which shifted the labeled spans; a post-processing step aligned predictions with this shift.
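
The exact mechanism behind the noise is not spelled out above. The sketch below assumes one plausible reading: the label offsets were computed on a copy of the tweet with runs of spaces collapsed to one, then used to slice the original tweet, so every extra space before the span shifts the label left by one character. Under that assumption, post-processing can reproduce the noise by converting a clean predicted span to collapsed-text offsets and slicing the original text with them. The helper names are hypothetical.

```python
def n_extra_spaces(text: str, pos: int) -> int:
    """Count 'extra' spaces before pos, i.e. spaces directly preceded by a space.

    These are exactly the characters removed when runs of spaces are
    collapsed to a single space.
    """
    return sum(1 for i in range(1, pos) if text[i] == " " and text[i - 1] == " ")


def reproduce_label_noise(text: str, start: int, end: int) -> str:
    """Reproduce the assumed label noise for a clean span text[start:end].

    Assumption: labels were located in a copy of the tweet with repeated
    spaces collapsed, and those offsets were then used to slice the
    original tweet. Each extra space before the span shifts it left.
    """
    collapsed_start = start - n_extra_spaces(text, start)
    collapsed_end = end - n_extra_spaces(text, end)
    return text[collapsed_start:collapsed_end]


tweet = "hi  there  my friend"
print(repr(reproduce_label_noise(tweet, 11, 20)))  # '  my frie'
print(repr(reproduce_label_noise("hi there", 3, 8)))  # 'there'
```

For the clean prediction `"my friend"` (characters 11 to 20), the two extra spaces earlier in the tweet shift the output to `"  my frie"`, which is the kind of shifted span this assumption predicts; tweets without repeated spaces are left unchanged.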