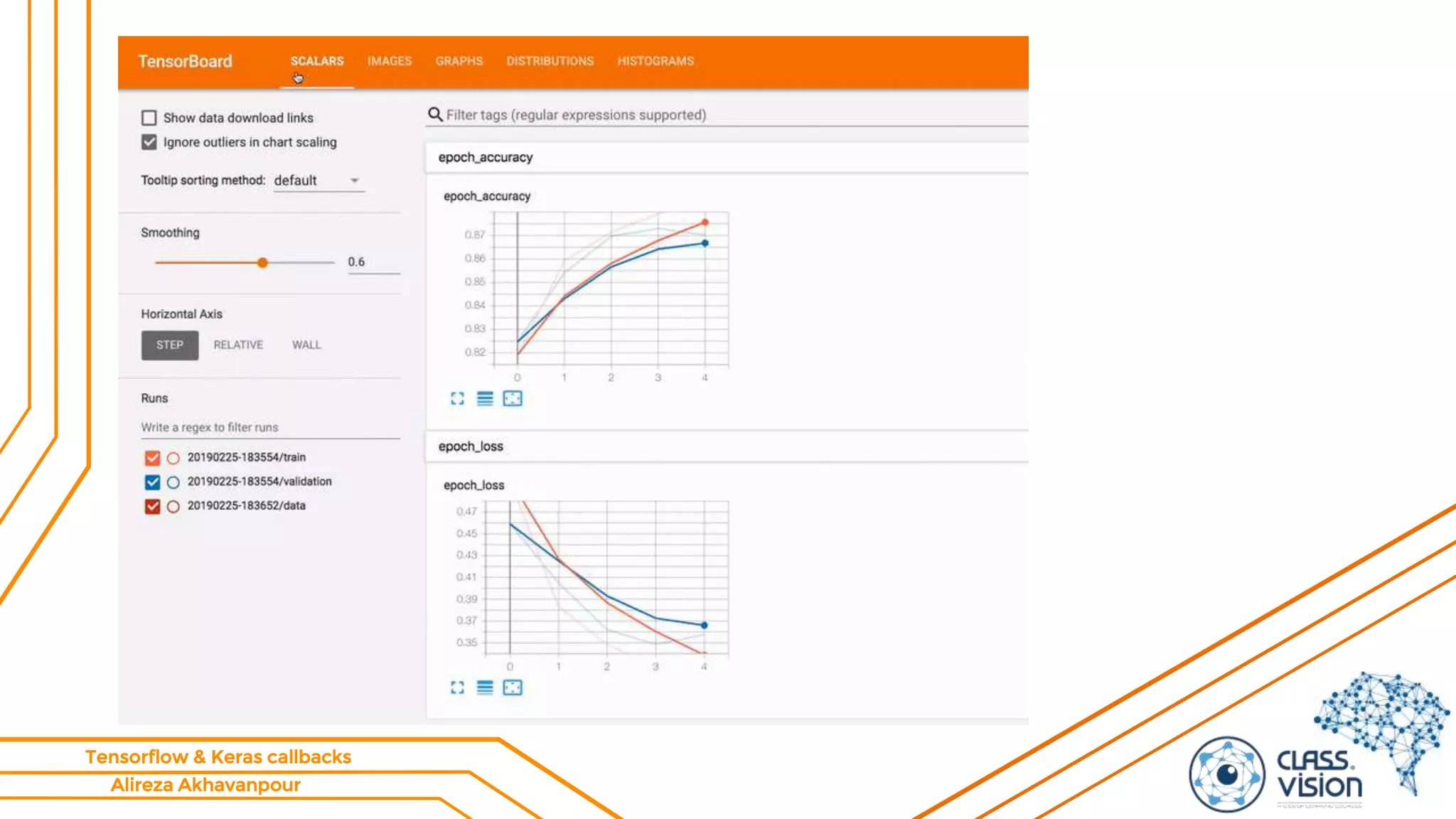

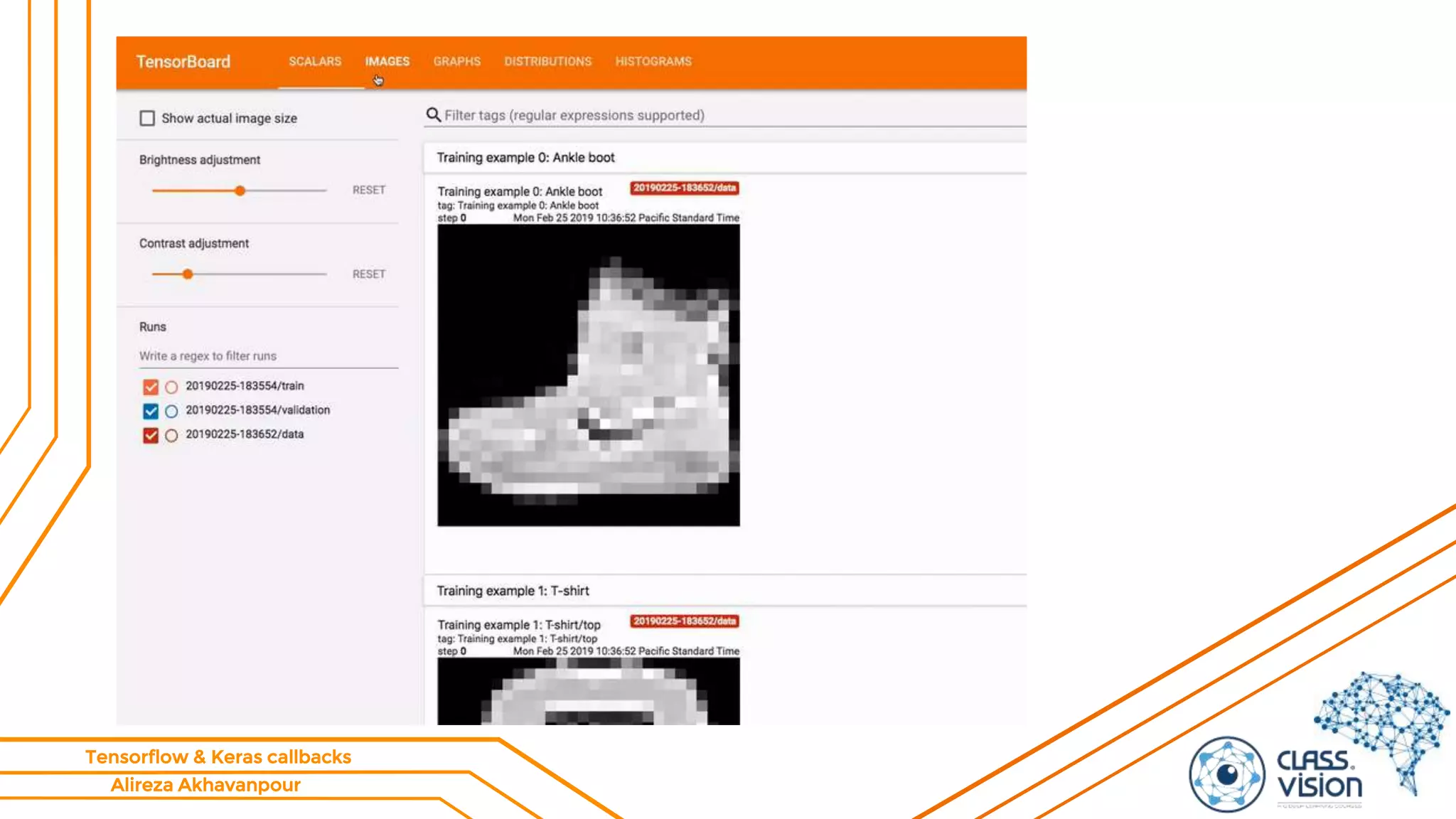

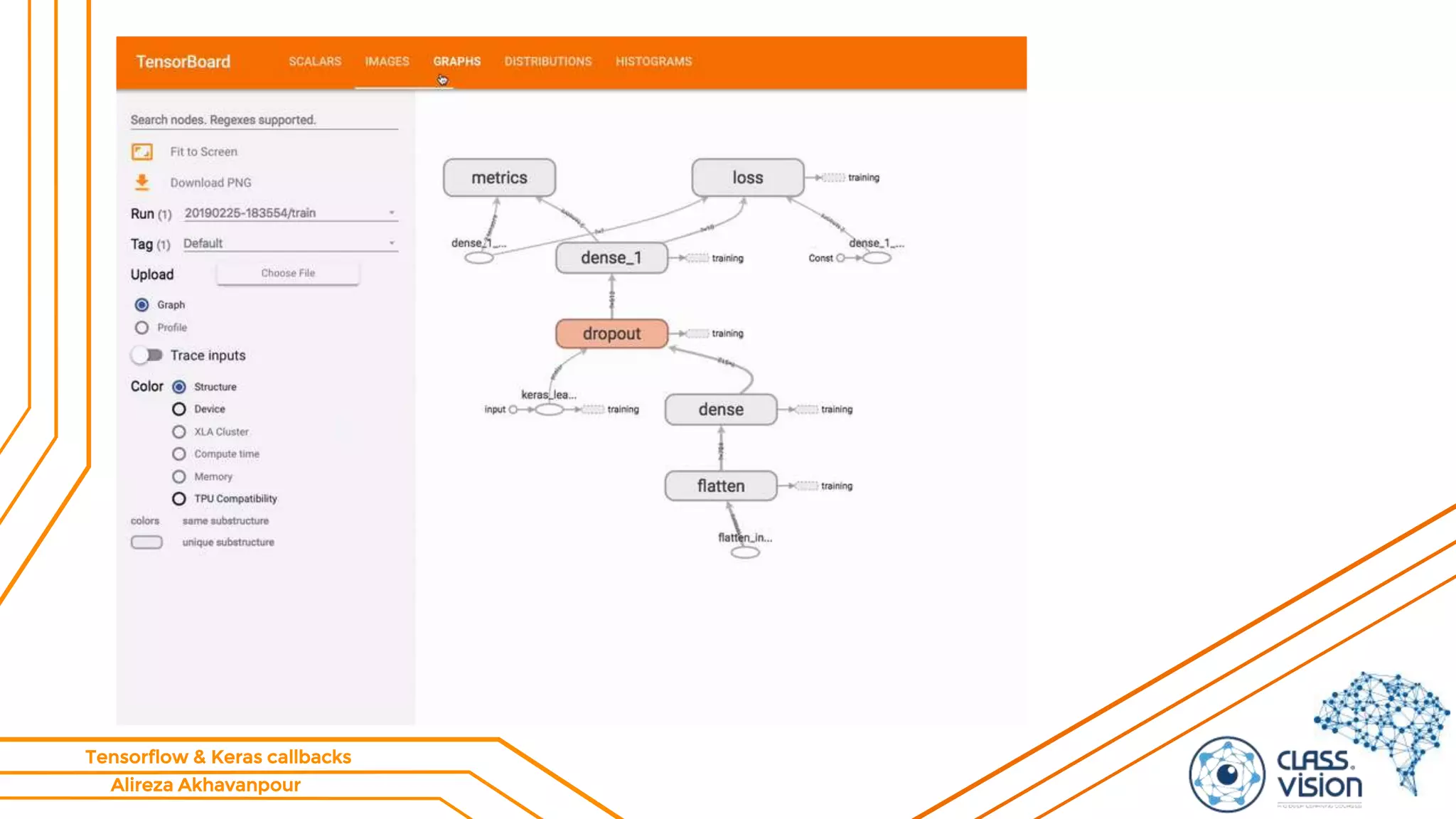

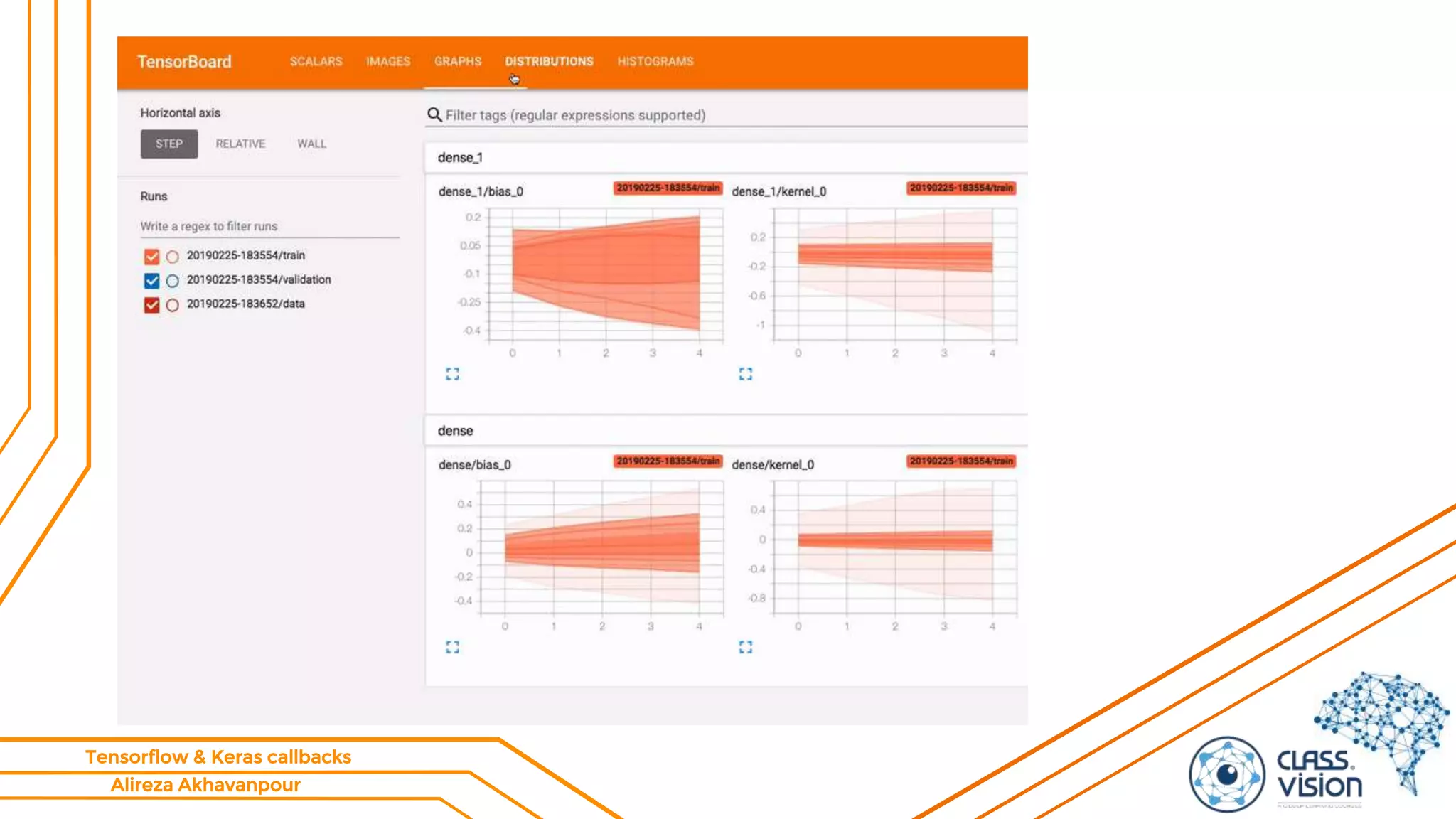

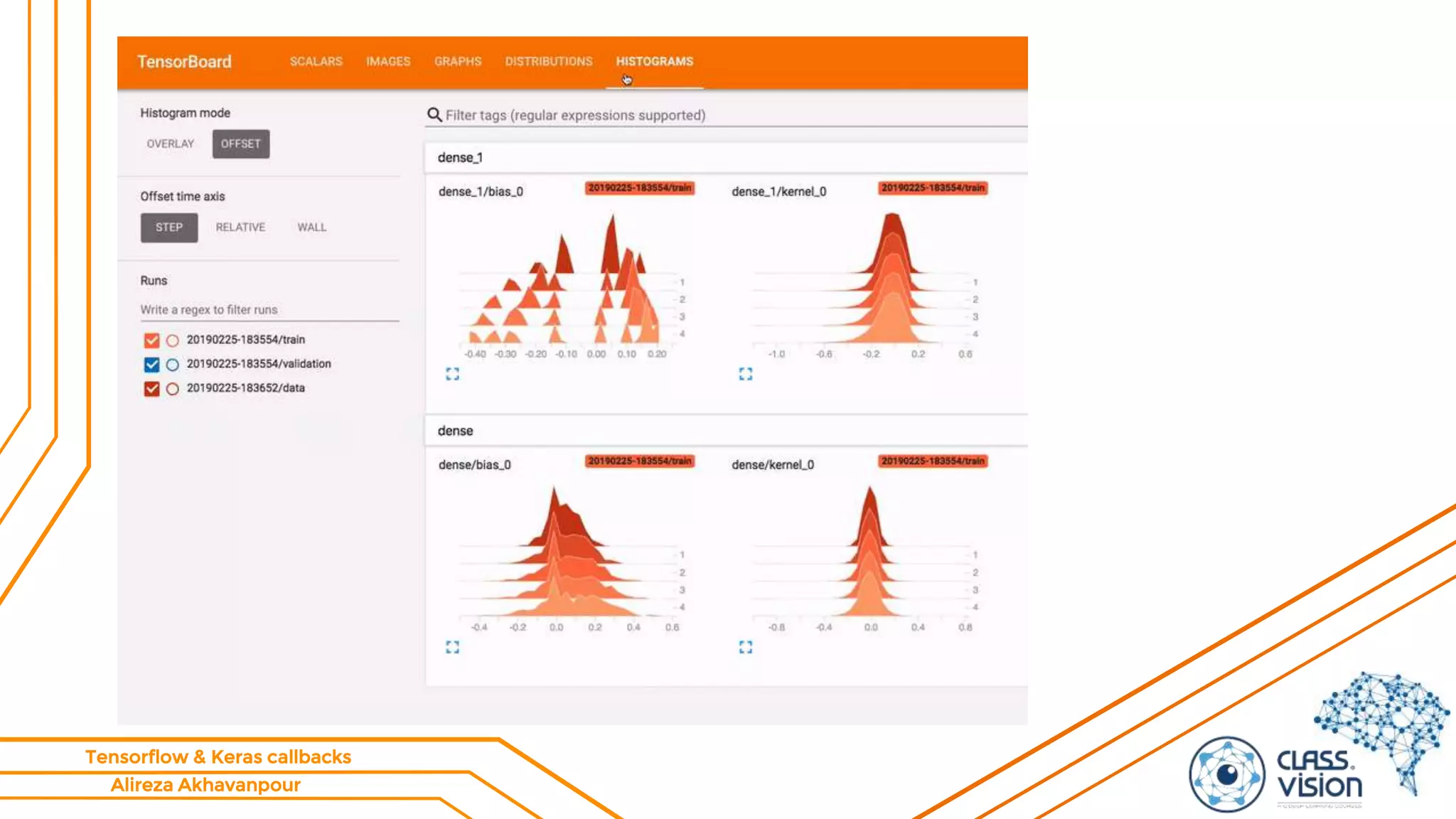

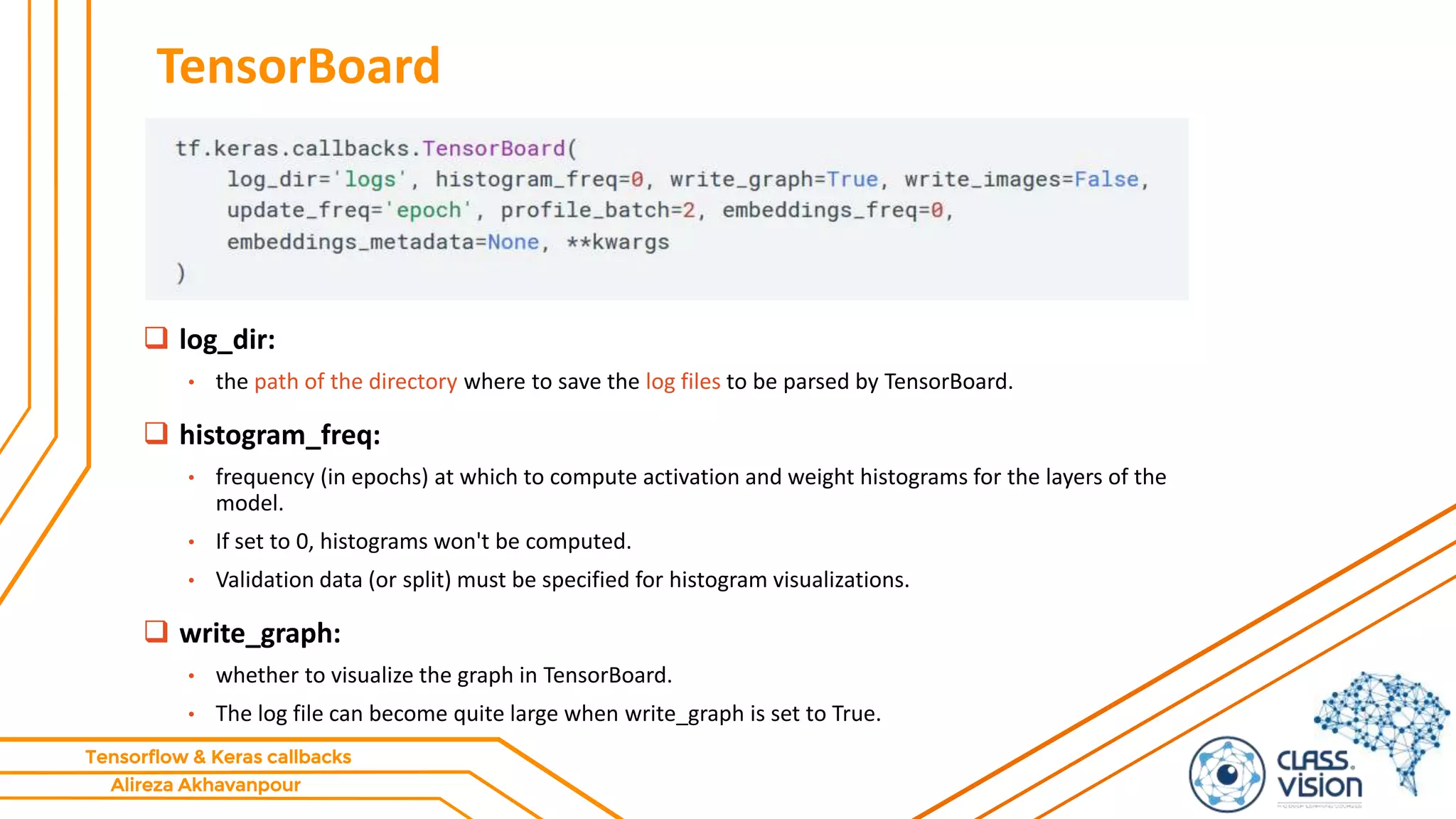

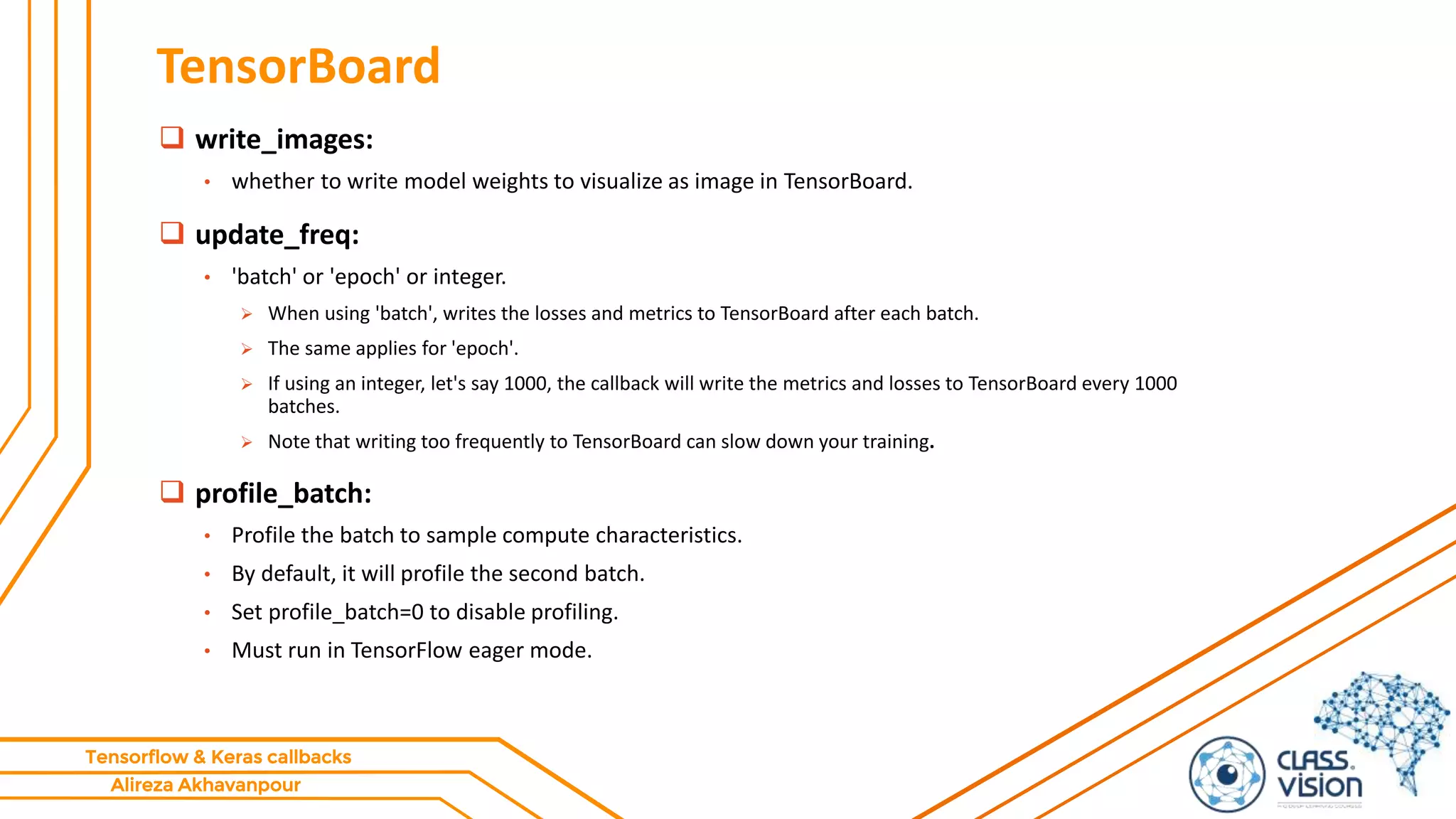

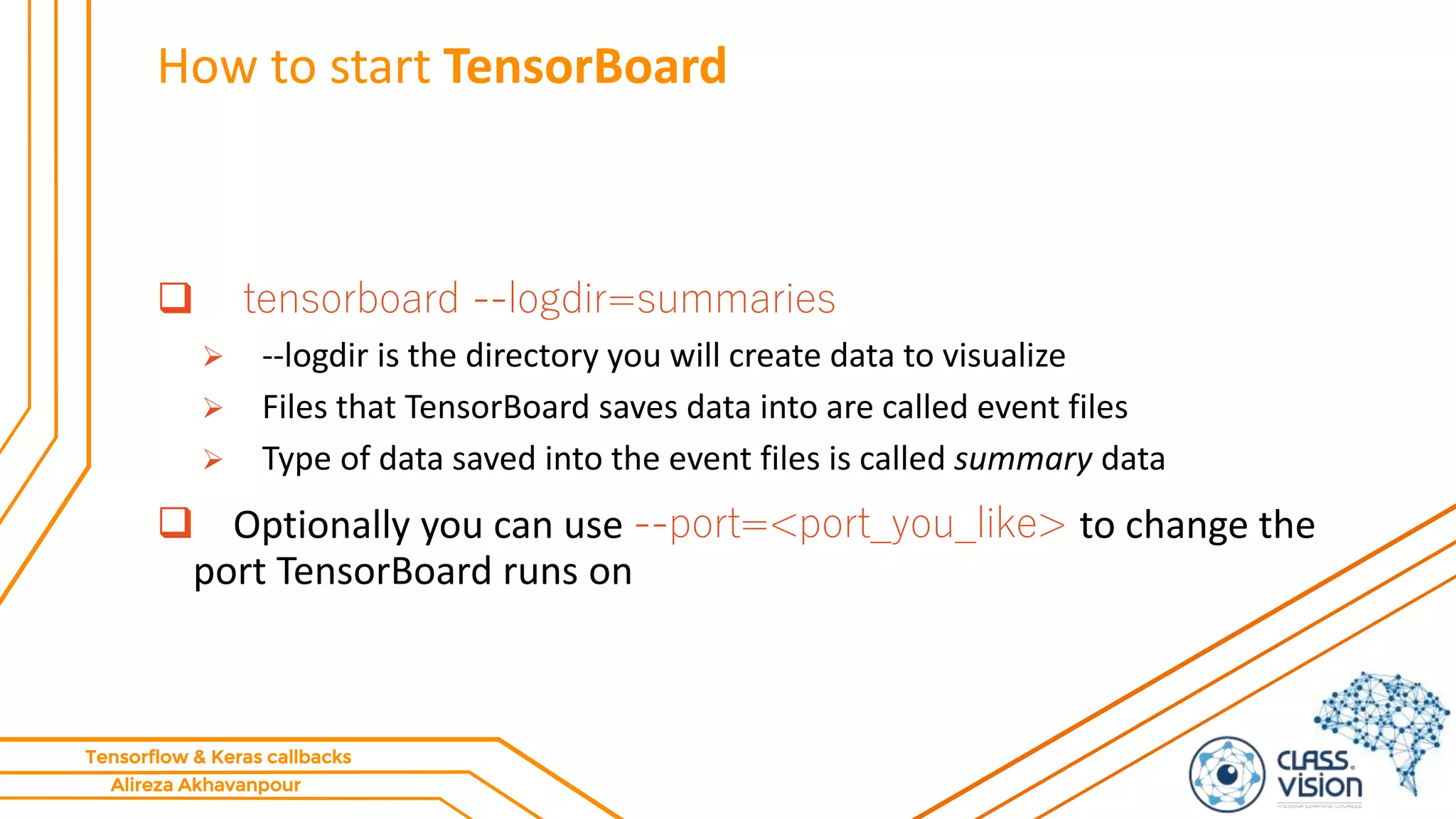

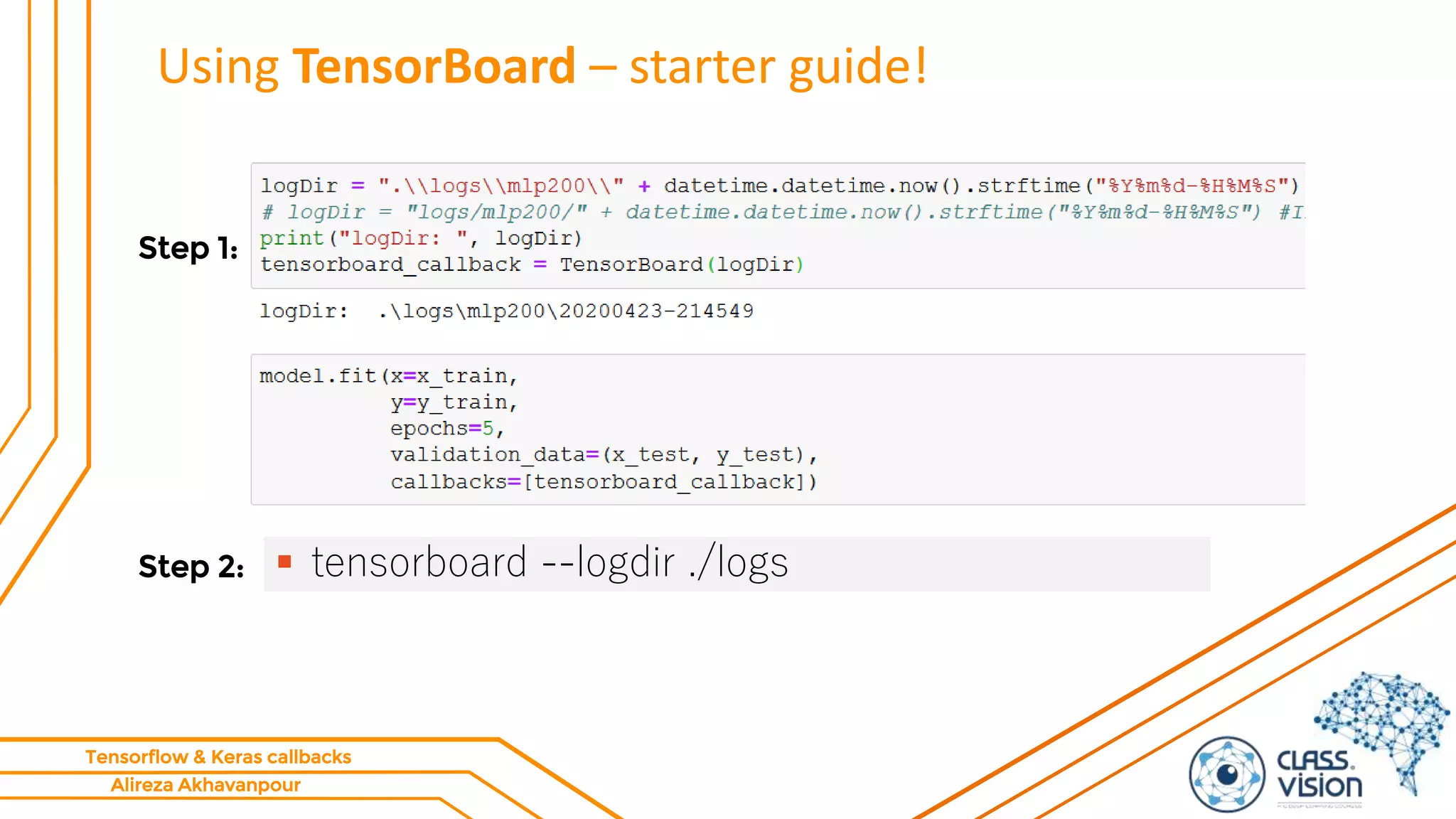

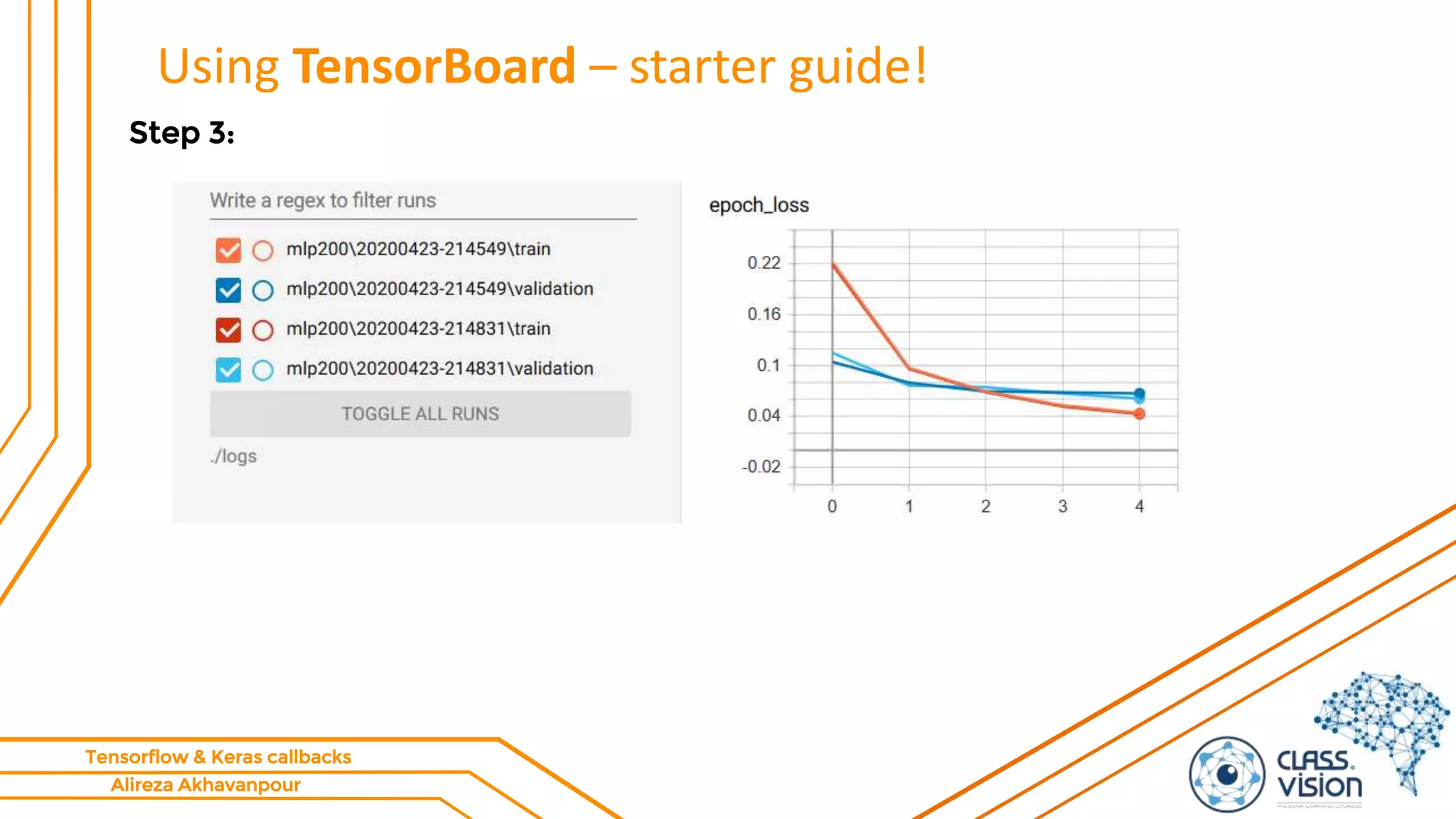

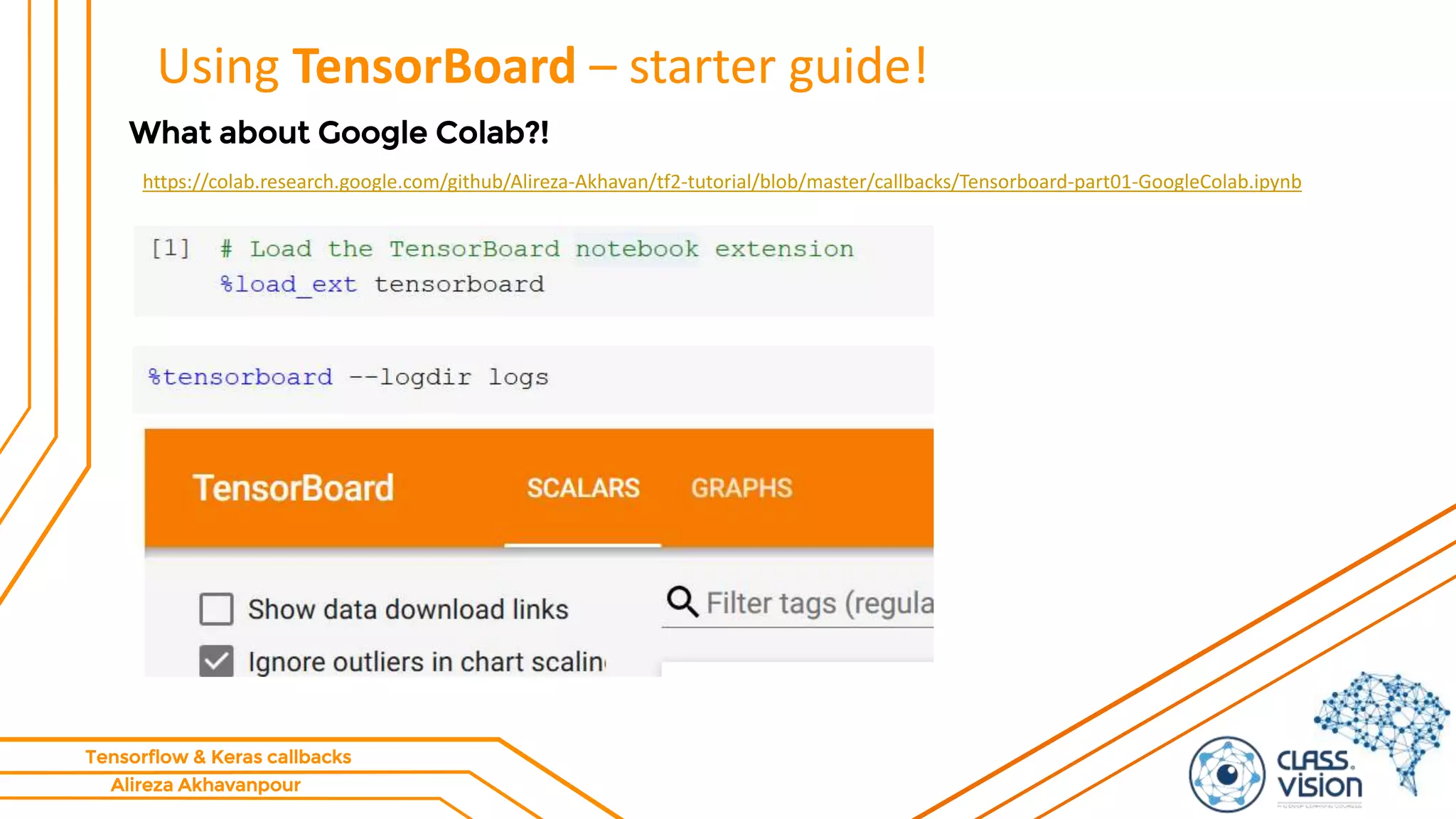

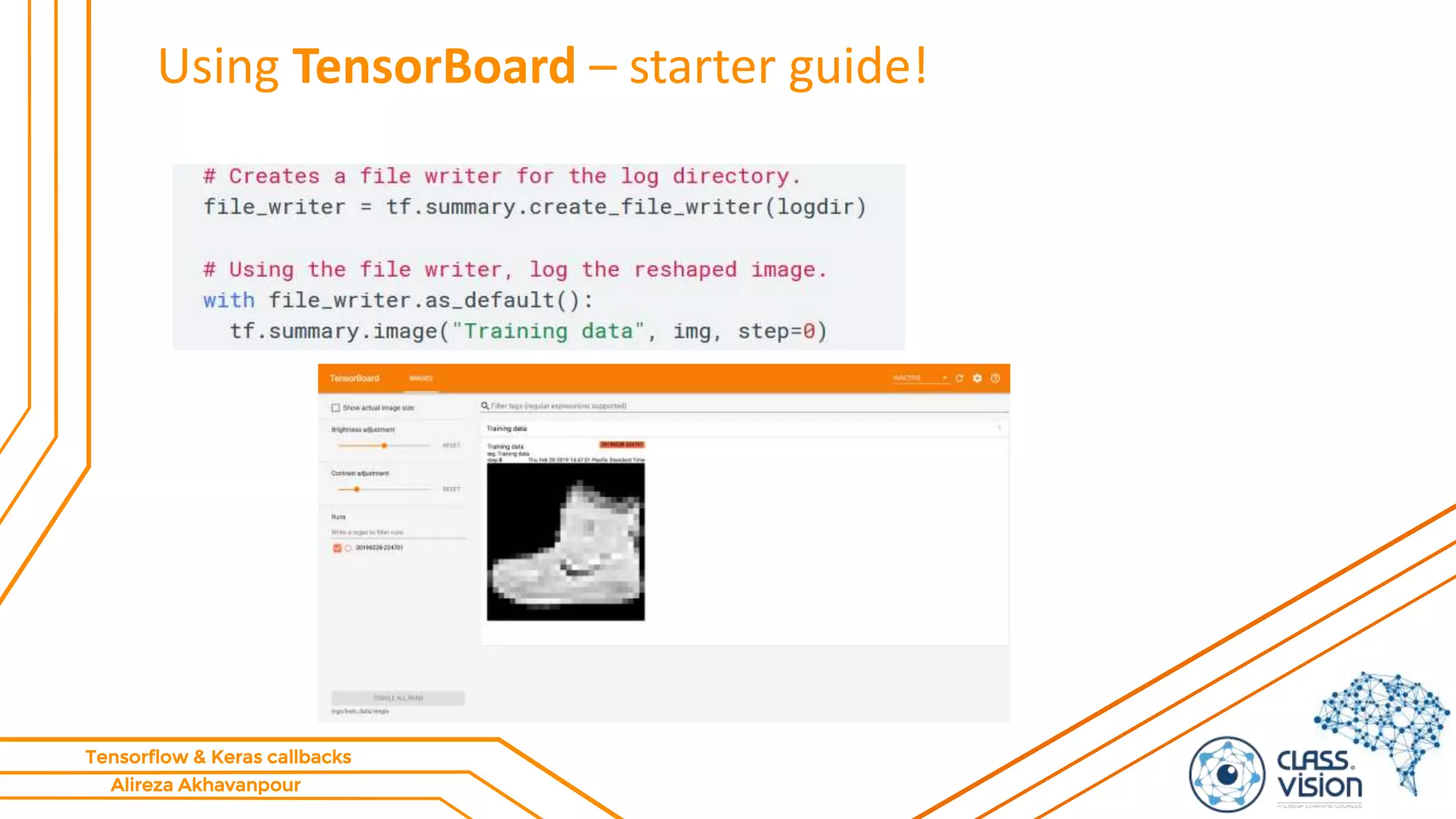

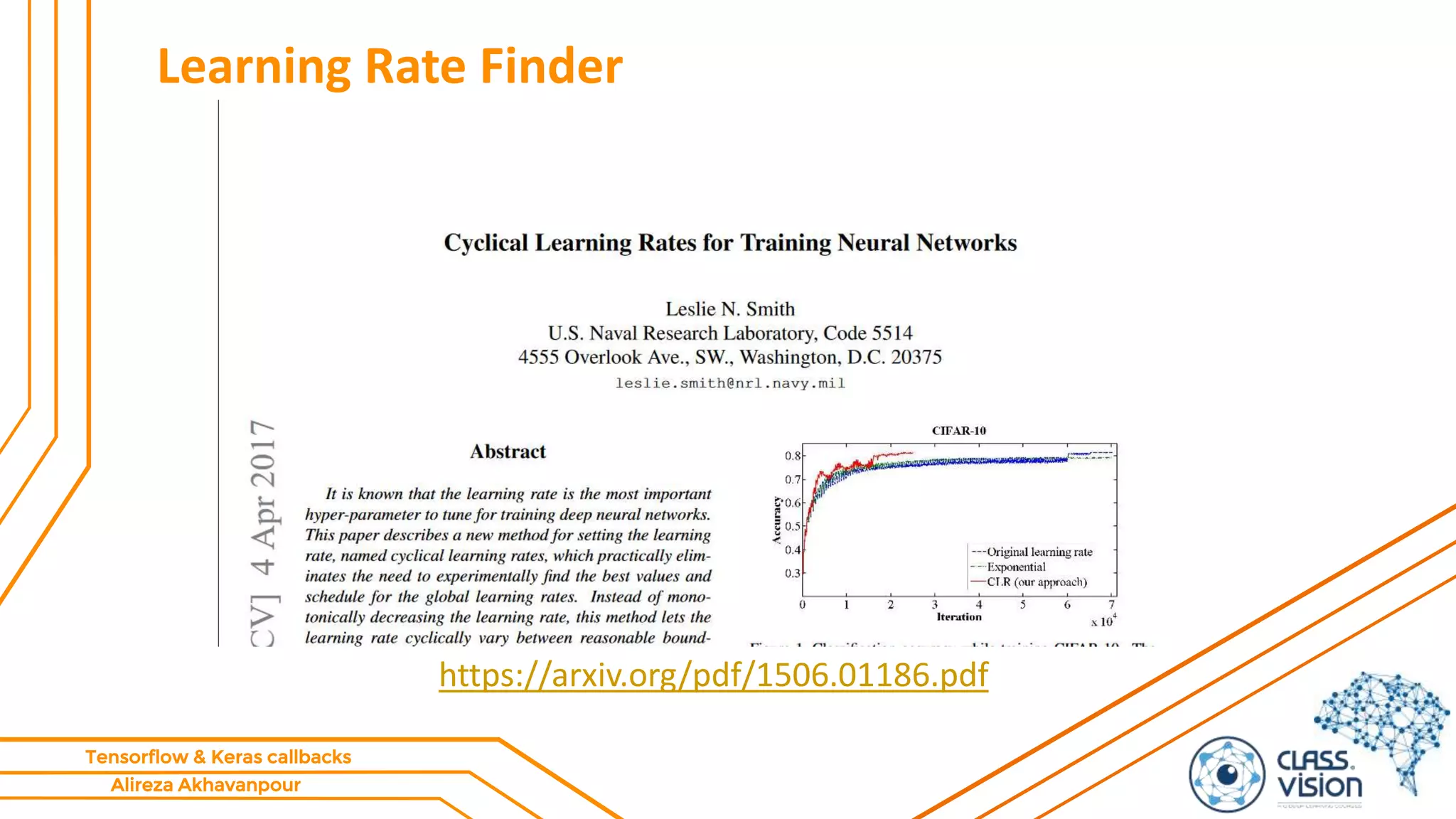

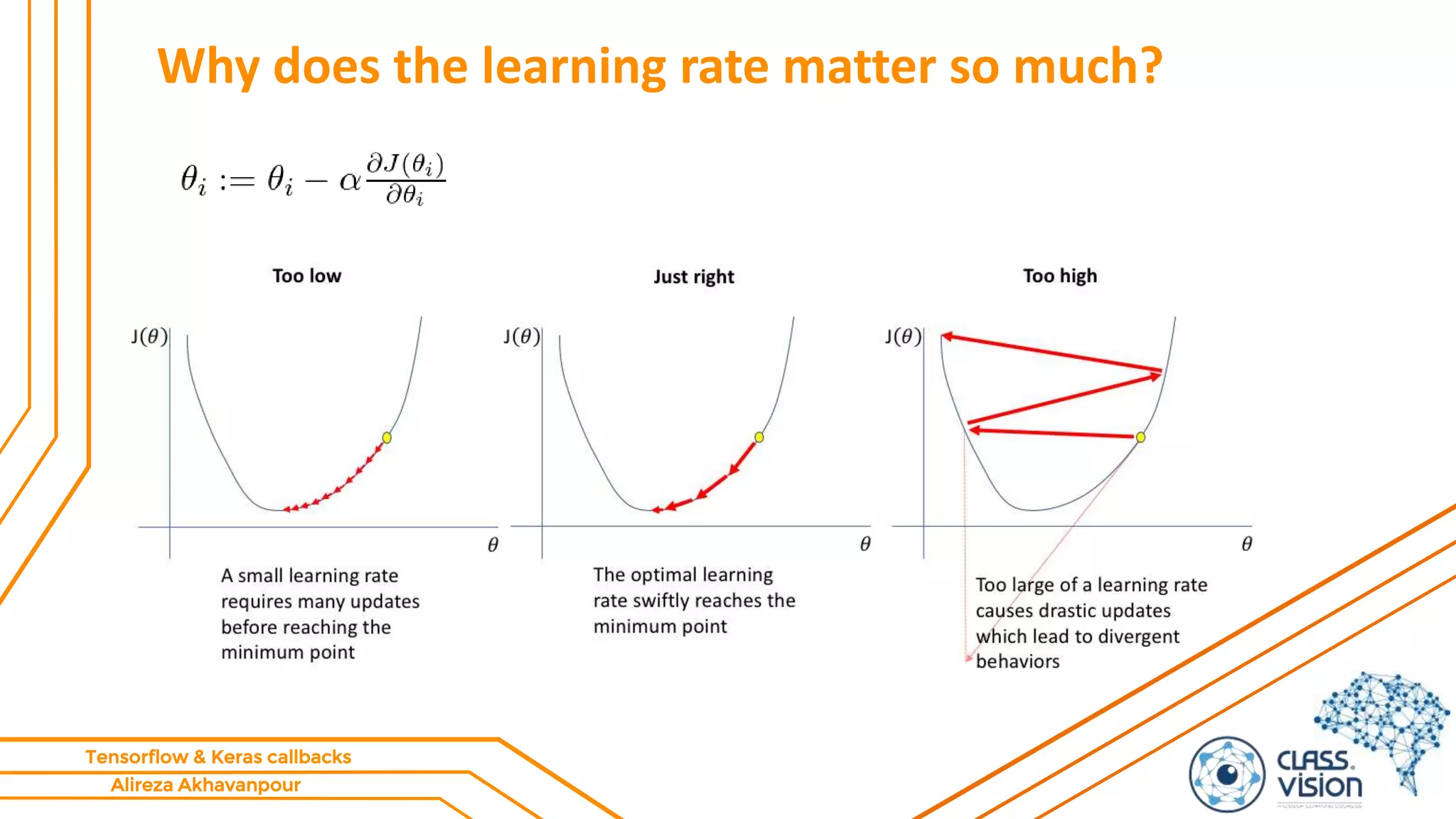

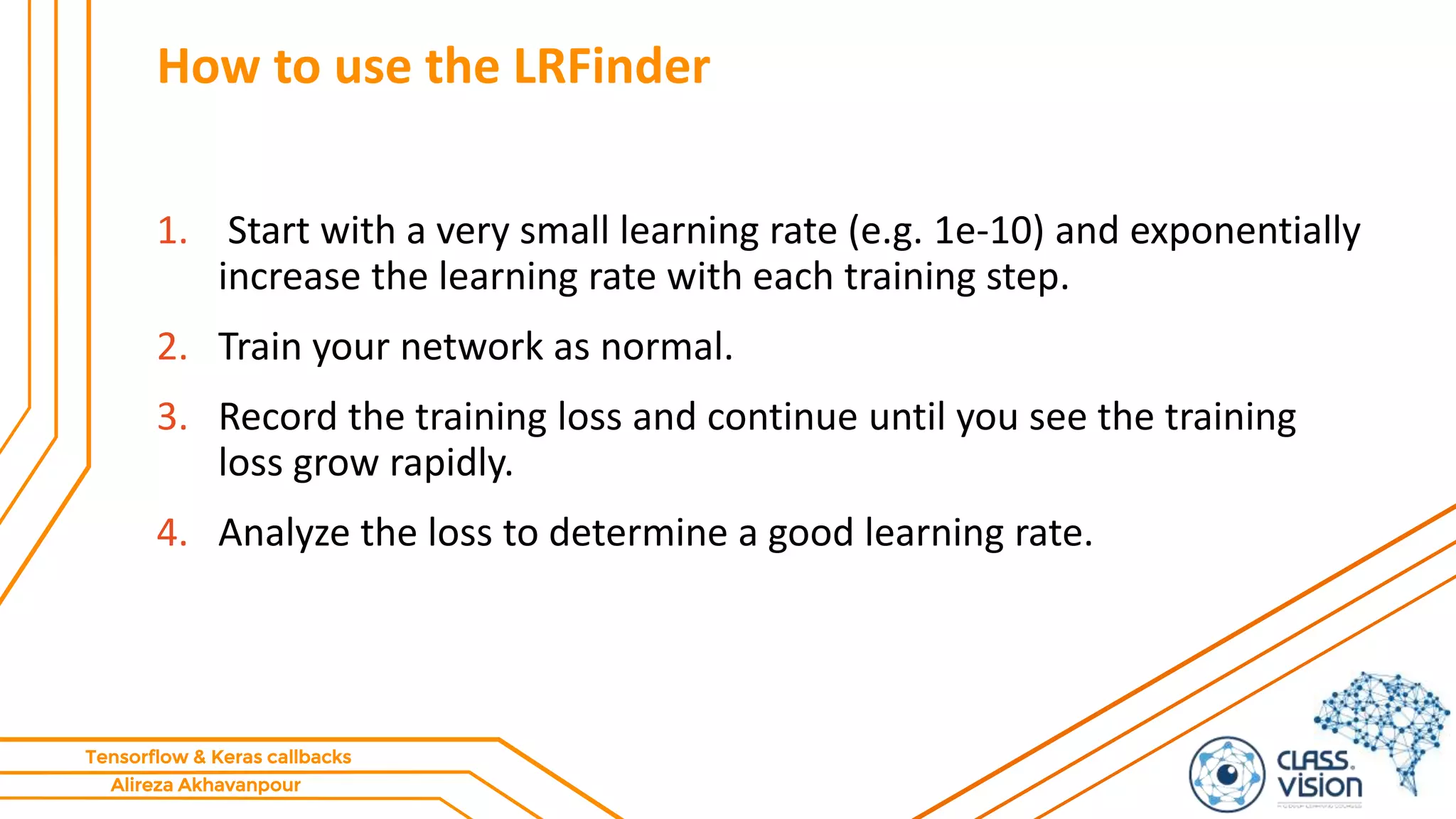

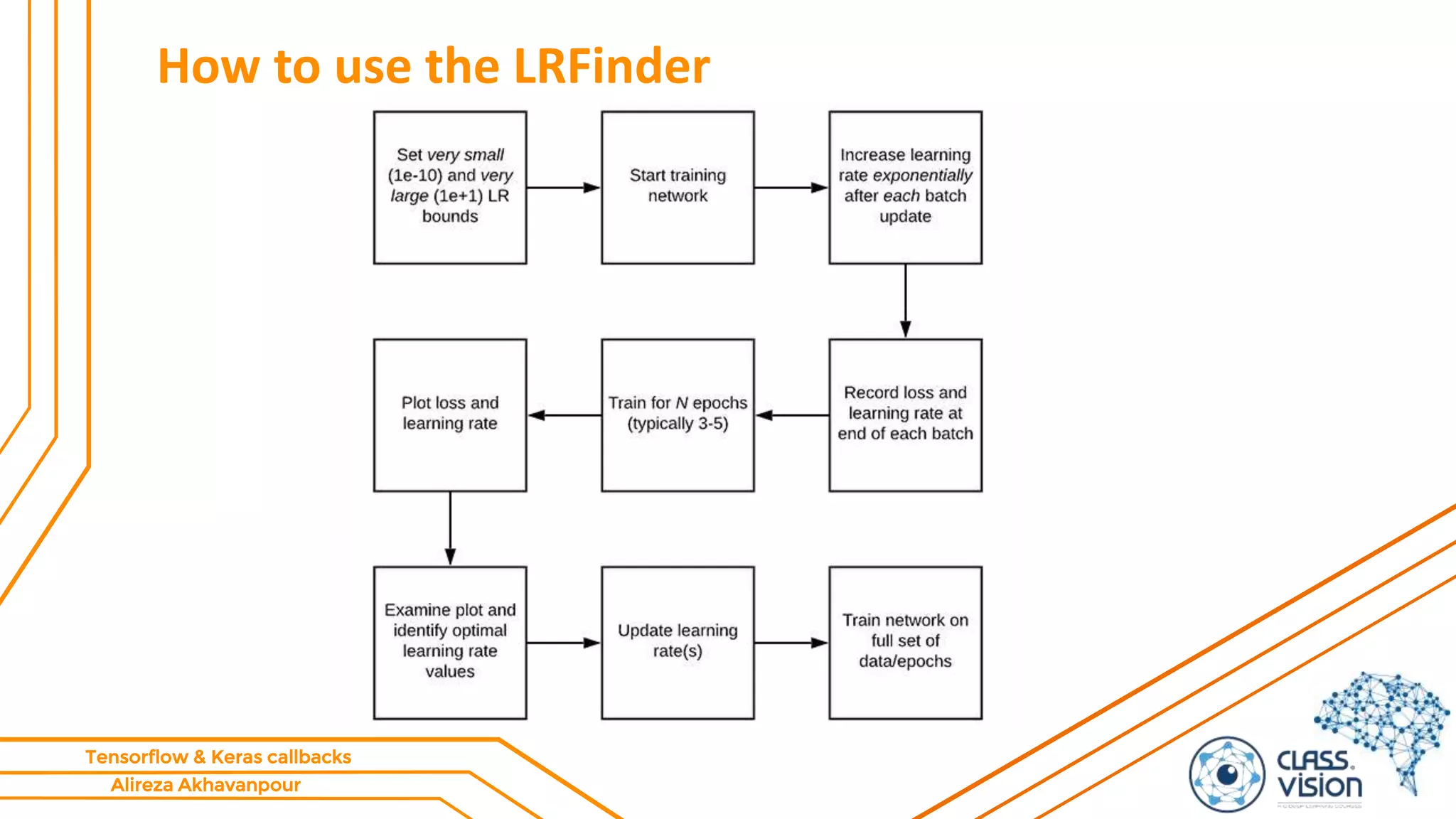

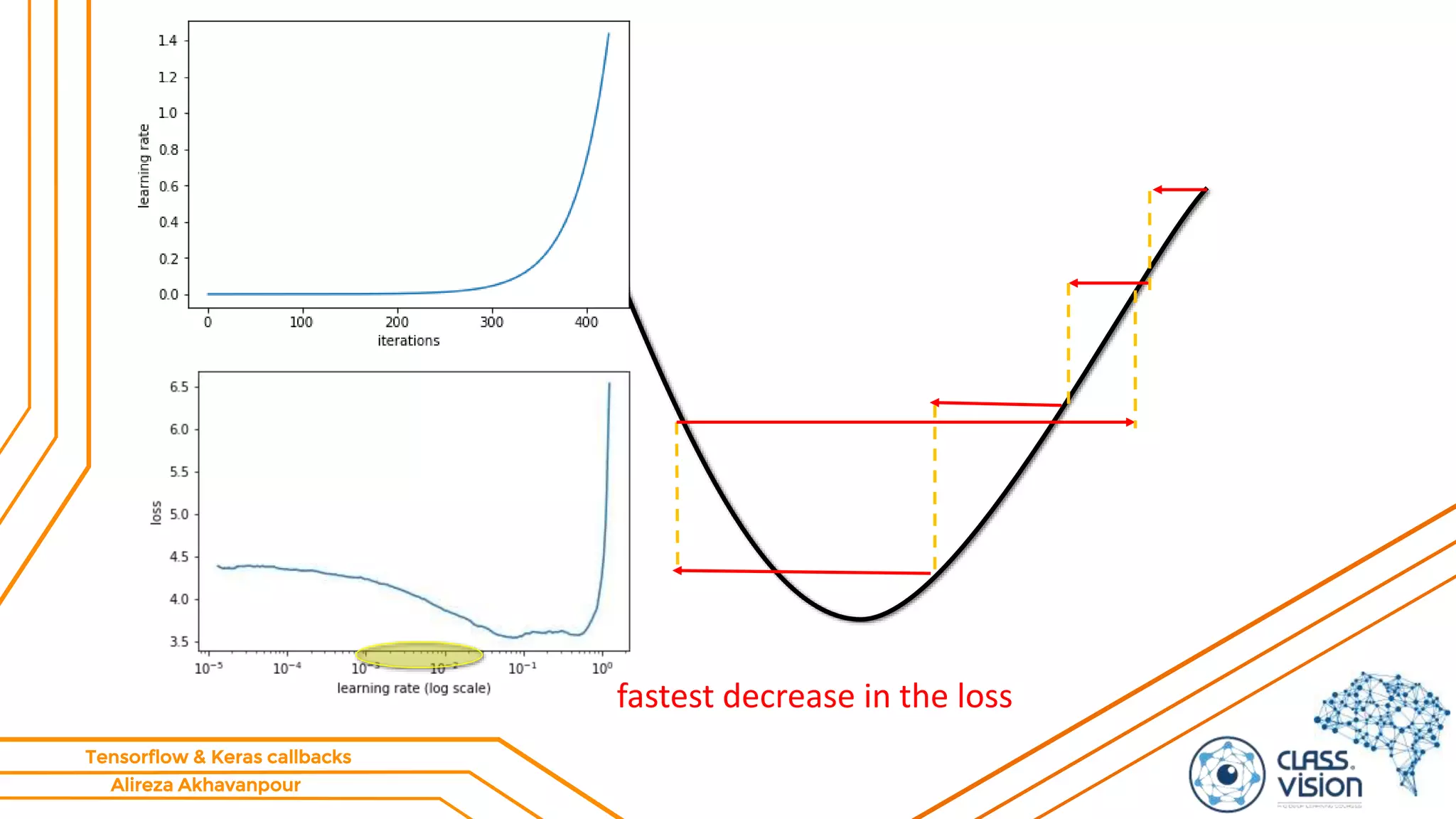

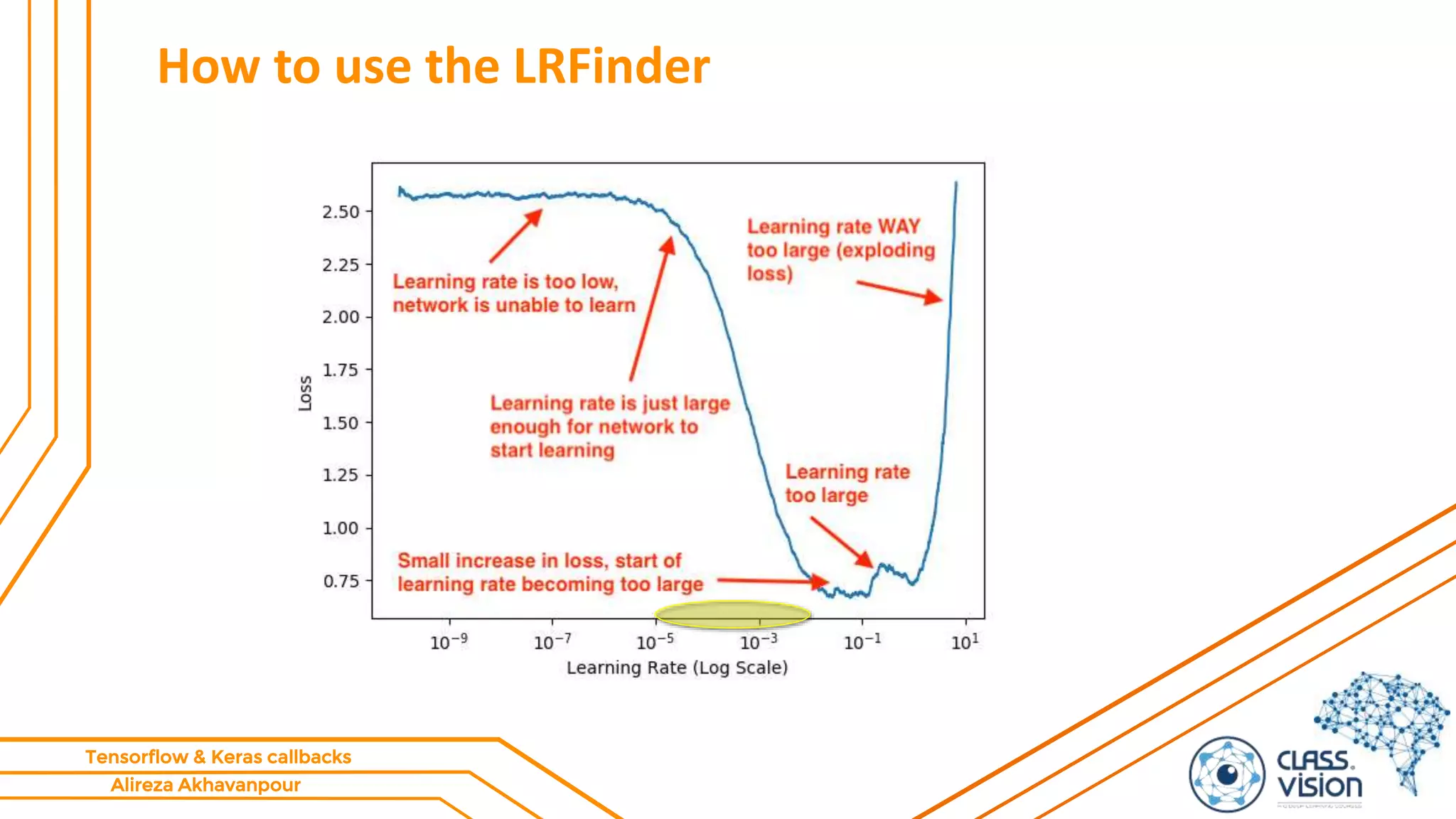

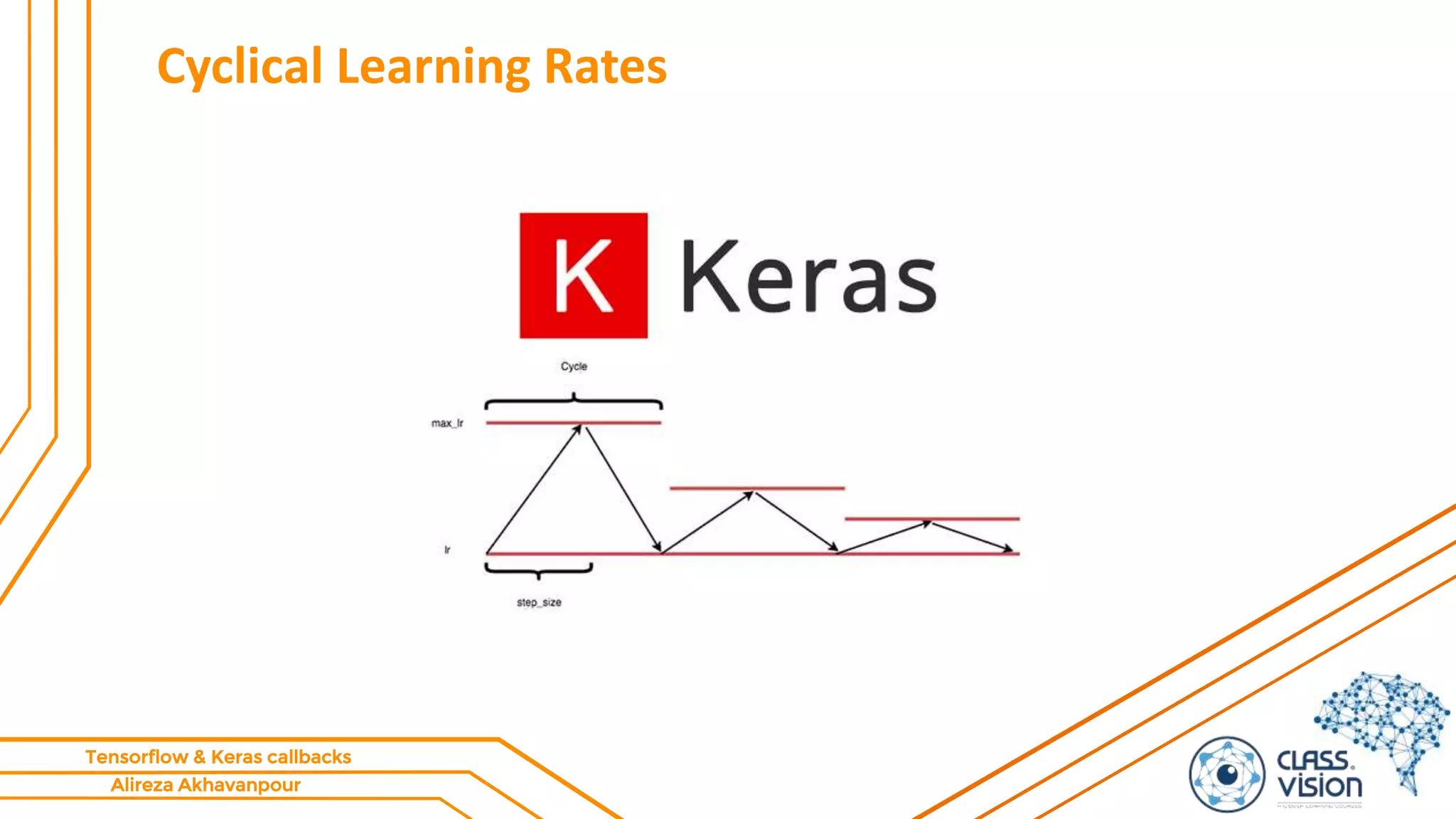

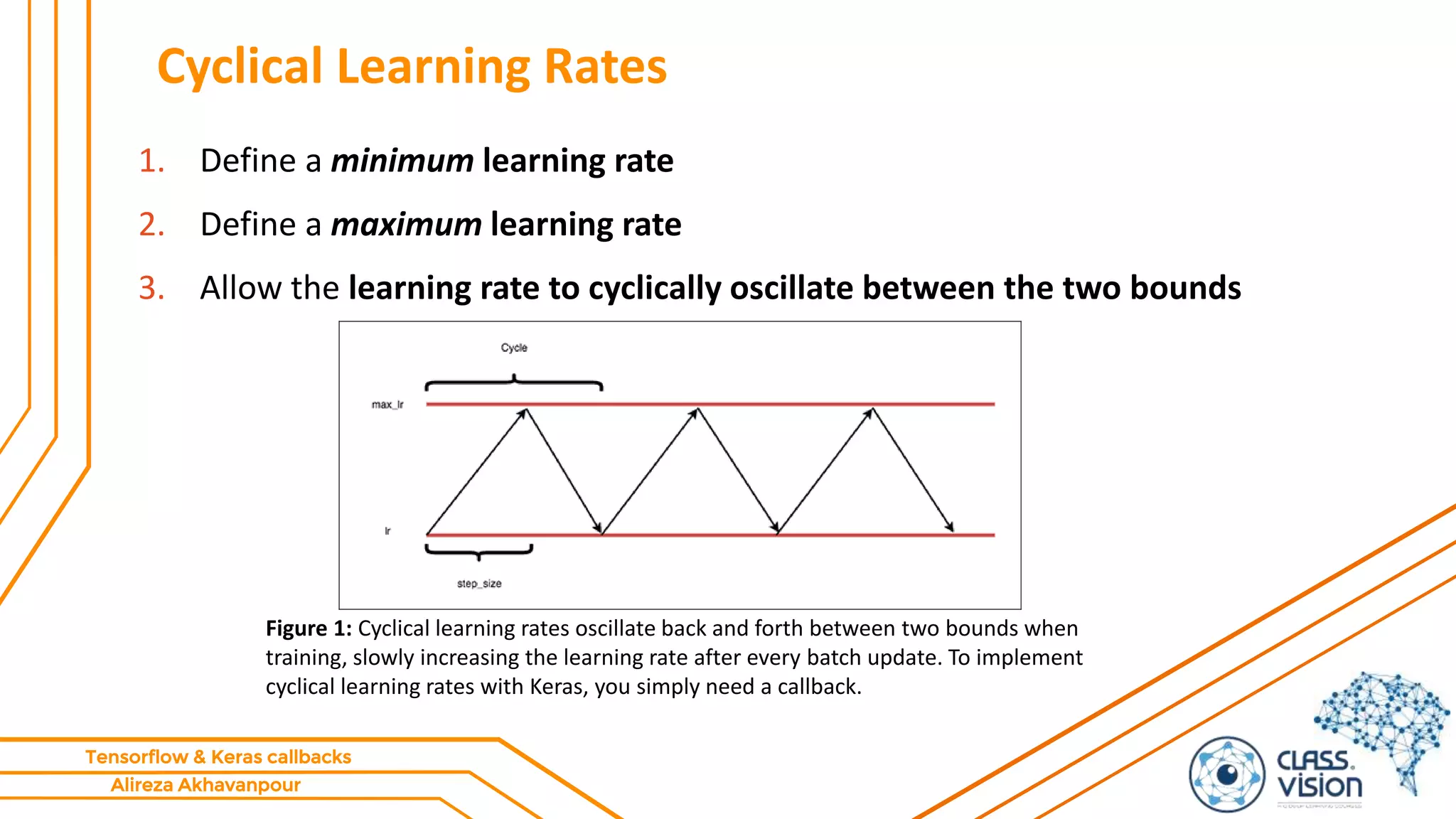

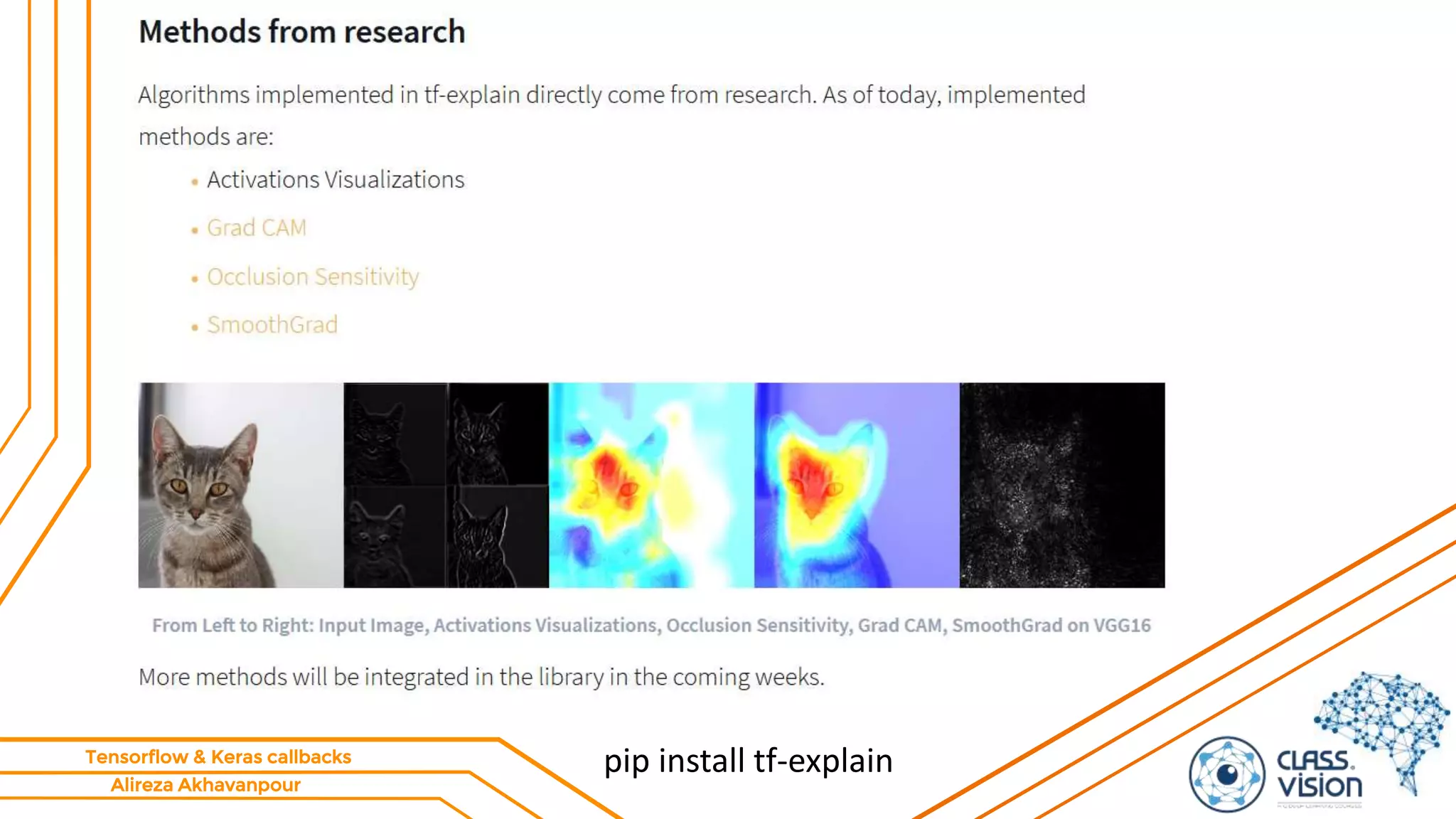

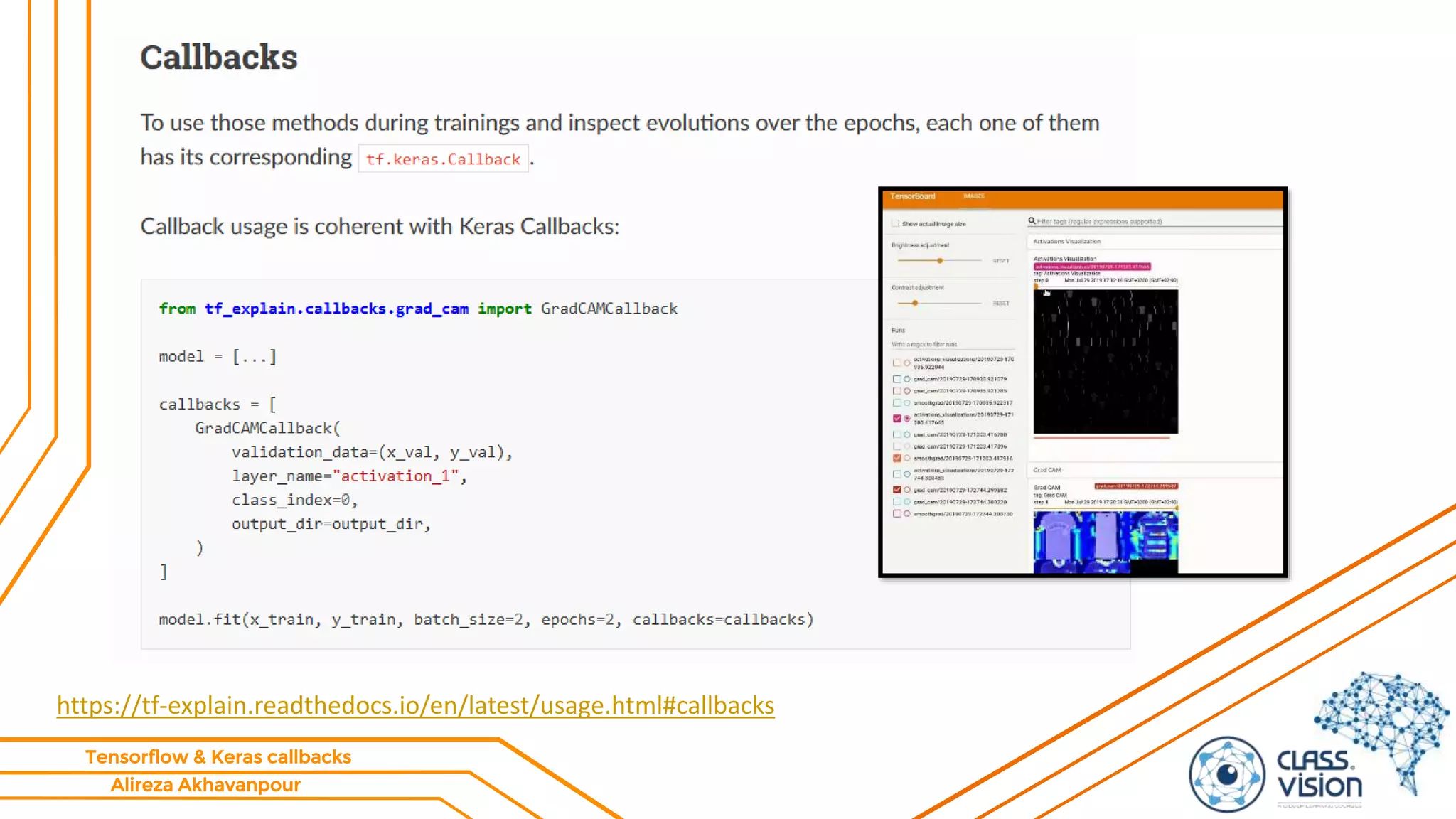

The document gives an overview of Keras callbacks in TensorFlow, focusing on the TensorBoard callback and its support for logging, histogram visualization, and model profiling. It introduces the learning rate finder as a way to estimate a suitable learning rate, and discusses cyclical learning rates implemented through callbacks. It also links to resources for further exploration and for guidance on using TensorBoard effectively.
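
To make the callback ideas above concrete, here is a minimal sketch in Python. It is not taken from the original slides. The triangular schedule is one common form of cyclical learning rate, and the names `triangular_lr`, `make_callbacks`, and `CyclicalLR`, as well as the default values for `base_lr`, `max_lr`, and `step_size`, are assumptions chosen for illustration. The TensorBoard callback uses the documented `log_dir`, `histogram_freq`, and `profile_batch` arguments. Assigning to `optimizer.learning_rate` is assumed to work in your Keras version.

```python
import math


def triangular_lr(step, base_lr=1e-4, max_lr=1e-2, step_size=2000):
    """Triangular cyclical learning rate (illustrative helper, not a Keras API).

    The rate rises linearly from base_lr to max_lr over step_size batches,
    then falls back to base_lr over the next step_size batches, and repeats.
    """
    cycle = math.floor(1 + step / (2 * step_size))
    x = abs(step / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


def make_callbacks(log_dir, base_lr=1e-4, max_lr=1e-2, step_size=2000):
    """Build a TensorBoard callback and a cyclical learning rate callback.

    TensorFlow is imported lazily so the schedule above stays dependency-free.
    """
    import tensorflow as tf

    class CyclicalLR(tf.keras.callbacks.Callback):
        """Hypothetical callback that updates the learning rate every batch."""

        def __init__(self):
            super().__init__()
            self.step = 0

        def on_train_batch_begin(self, batch, logs=None):
            # Assumption: the optimizer exposes a settable learning_rate.
            self.model.optimizer.learning_rate = triangular_lr(
                self.step, base_lr, max_lr, step_size
            )
            self.step += 1

    tensorboard = tf.keras.callbacks.TensorBoard(
        log_dir=log_dir,
        histogram_freq=1,        # write weight histograms every epoch
        profile_batch=(10, 20),  # profile batches 10 through 20
    )
    return [tensorboard, CyclicalLR()]
```

A possible usage is `model.fit(x, y, epochs=5, callbacks=make_callbacks("logs/run1"))`, followed by `tensorboard --logdir logs` to inspect the scalars, histograms, and profile. The log directory name is a placeholder.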