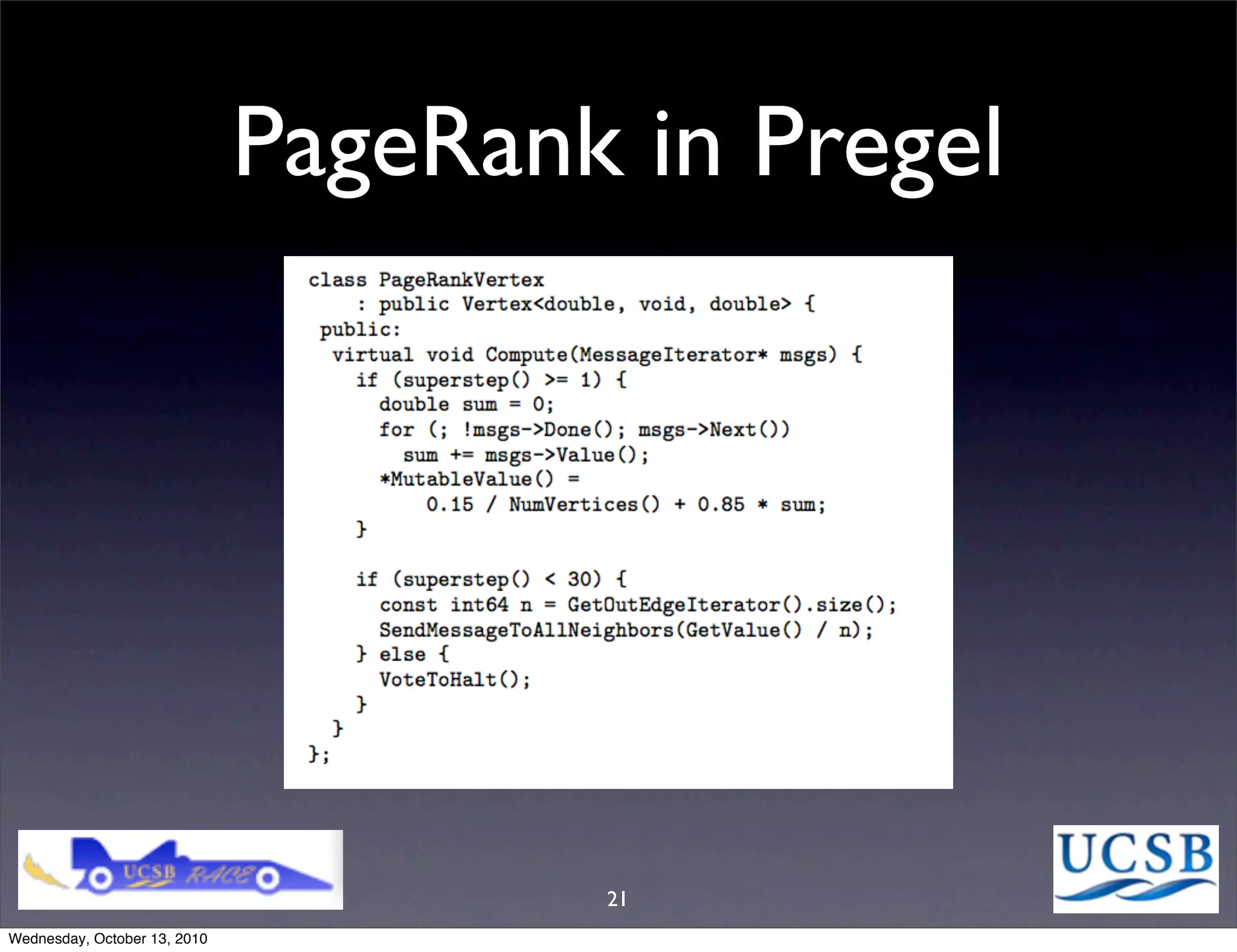

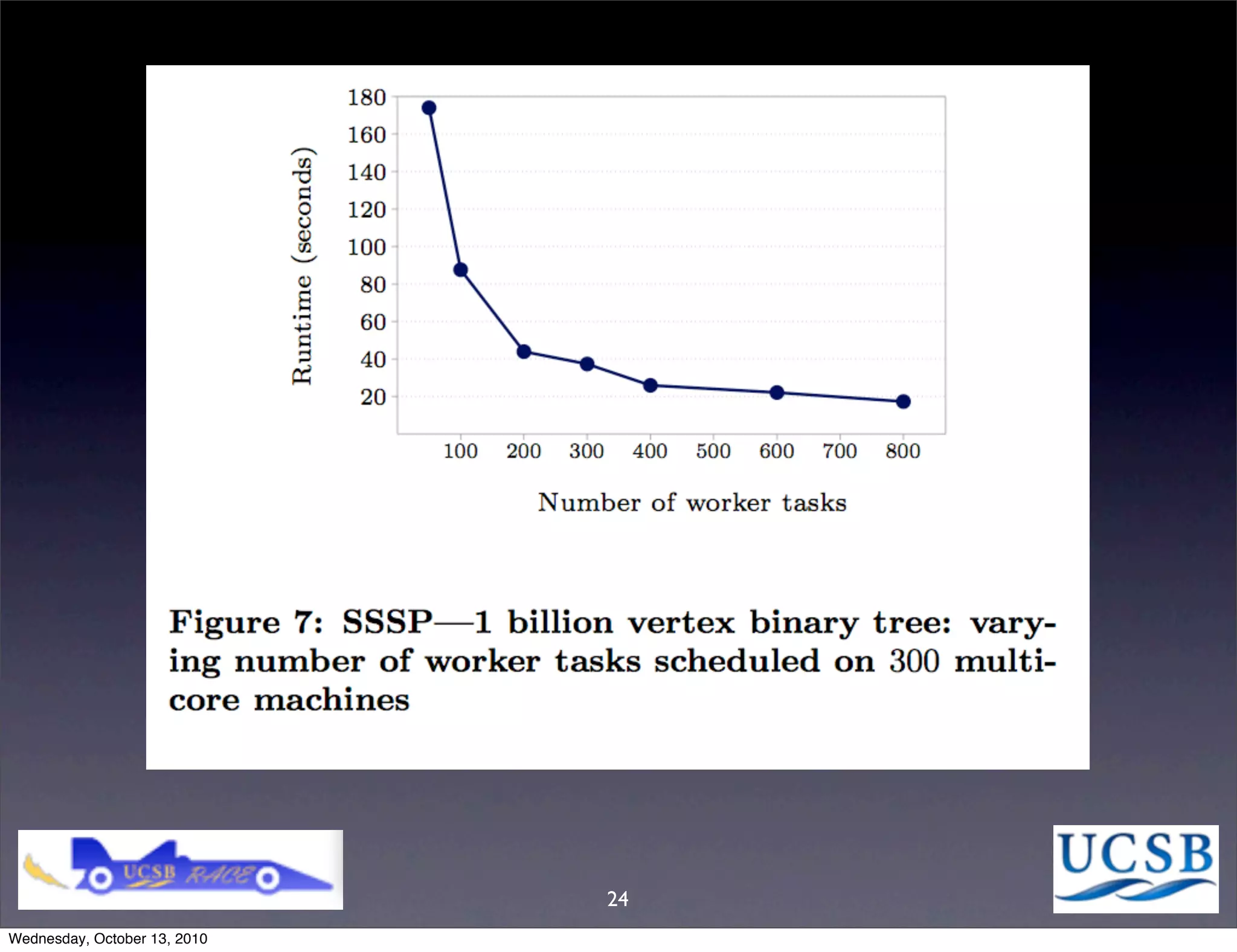

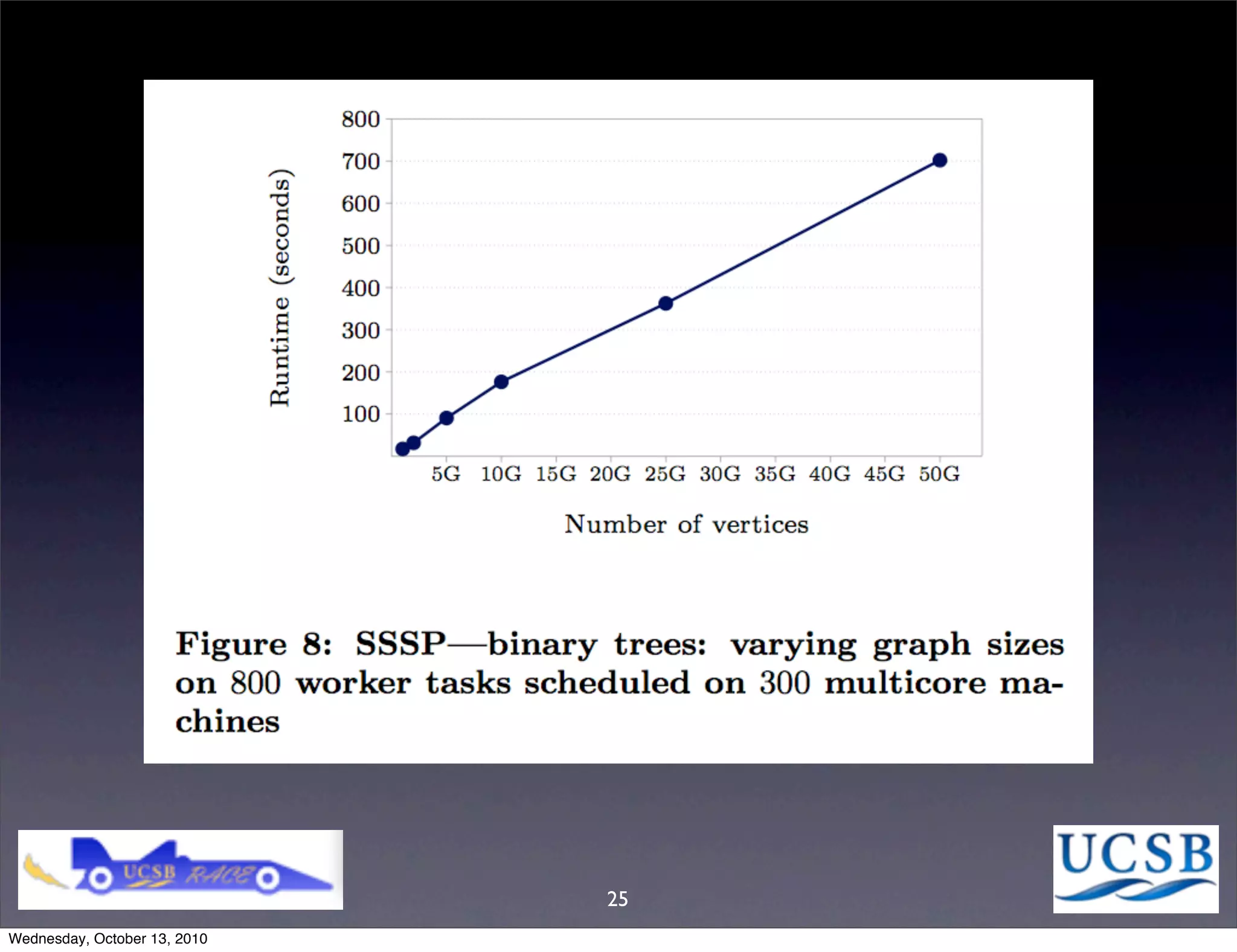

Pregel is a large-scale graph processing system built on the bulk synchronous parallel (BSP) model. Computation on a directed graph proceeds in synchronized iterations, in which vertices exchange messages and can be added or removed dynamically. The design targets the scalability and fault-tolerance problems of traditional graph processing and is particularly effective for sparse graphs. The system is currently in production use and is designed to make programs straightforward to implement, with a short learning curve.
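To make the bulk synchronous, message-passing model more concrete, here is a minimal single-process sketch in Python. It is not Pregel's API: the function name `run_max_value`, the dictionary-based graph, and the choice of maximum-value propagation as the example algorithm are all assumptions made for illustration. Each pass of the loop plays the role of one synchronized iteration: vertices read the messages sent to them in the previous iteration, update their own value, send messages along their outgoing edges, and then go idle until a new message arrives.

```python
from collections import defaultdict


def run_max_value(graph, values):
    """Spread the largest vertex value through a directed graph.

    graph:  dict mapping each vertex to a list of its out-neighbors
            (assumption: every vertex appears as a key).
    values: dict mapping each vertex to its initial numeric value.
    Returns (final_values, number_of_iterations_executed).
    """
    values = dict(values)          # work on a copy
    active = set(graph)            # every vertex is active at the start
    inbox = defaultdict(list)      # messages sent in the previous iteration
    superstep = 0

    while active or inbox:
        outbox = defaultdict(list)  # messages for the next iteration

        # A vertex runs if it is still active or has received a message.
        for v in active | set(inbox):
            changed = False
            if inbox.get(v):
                best = max(inbox[v])
                if best > values[v]:
                    values[v] = best
                    changed = True

            # Send along outgoing edges in the first iteration,
            # and afterwards only when the value has changed.
            if superstep == 0 or changed:
                for dst in graph[v]:
                    outbox[dst].append(values[v])

        # Every vertex goes idle; only a new message wakes it up again.
        active = set()
        inbox = outbox              # the synchronization barrier
        superstep += 1

    return values, superstep


if __name__ == "__main__":
    graph = {"a": ["b"], "b": ["c"], "c": ["a"]}
    final, steps = run_max_value(graph, {"a": 3, "b": 6, "c": 2})
    print(final, steps)
```

The loop stops once no vertex is active and no messages are in flight. A distributed system would partition the vertices across machines and checkpoint state for fault tolerance, which this sketch leaves out entirely.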