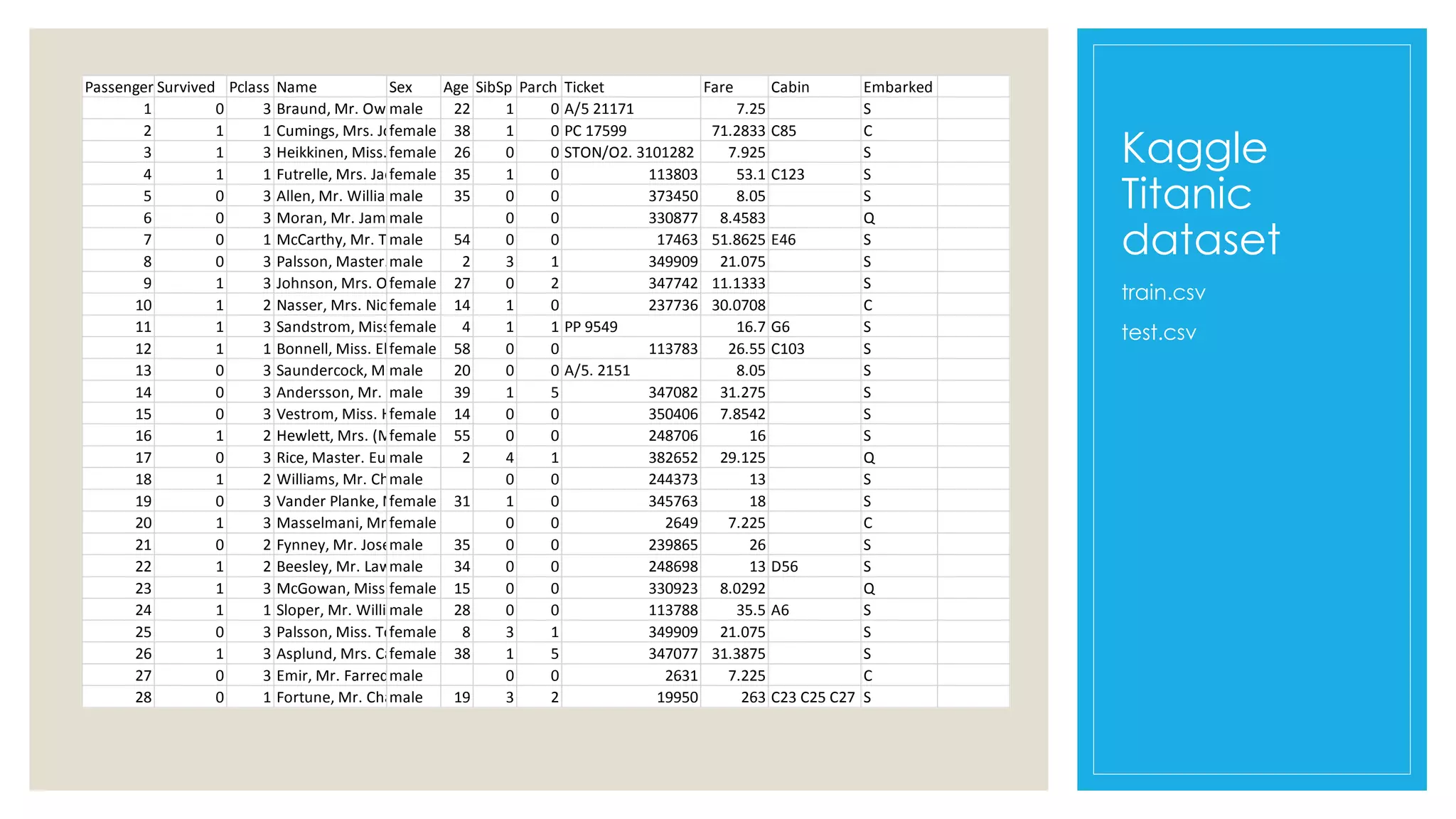

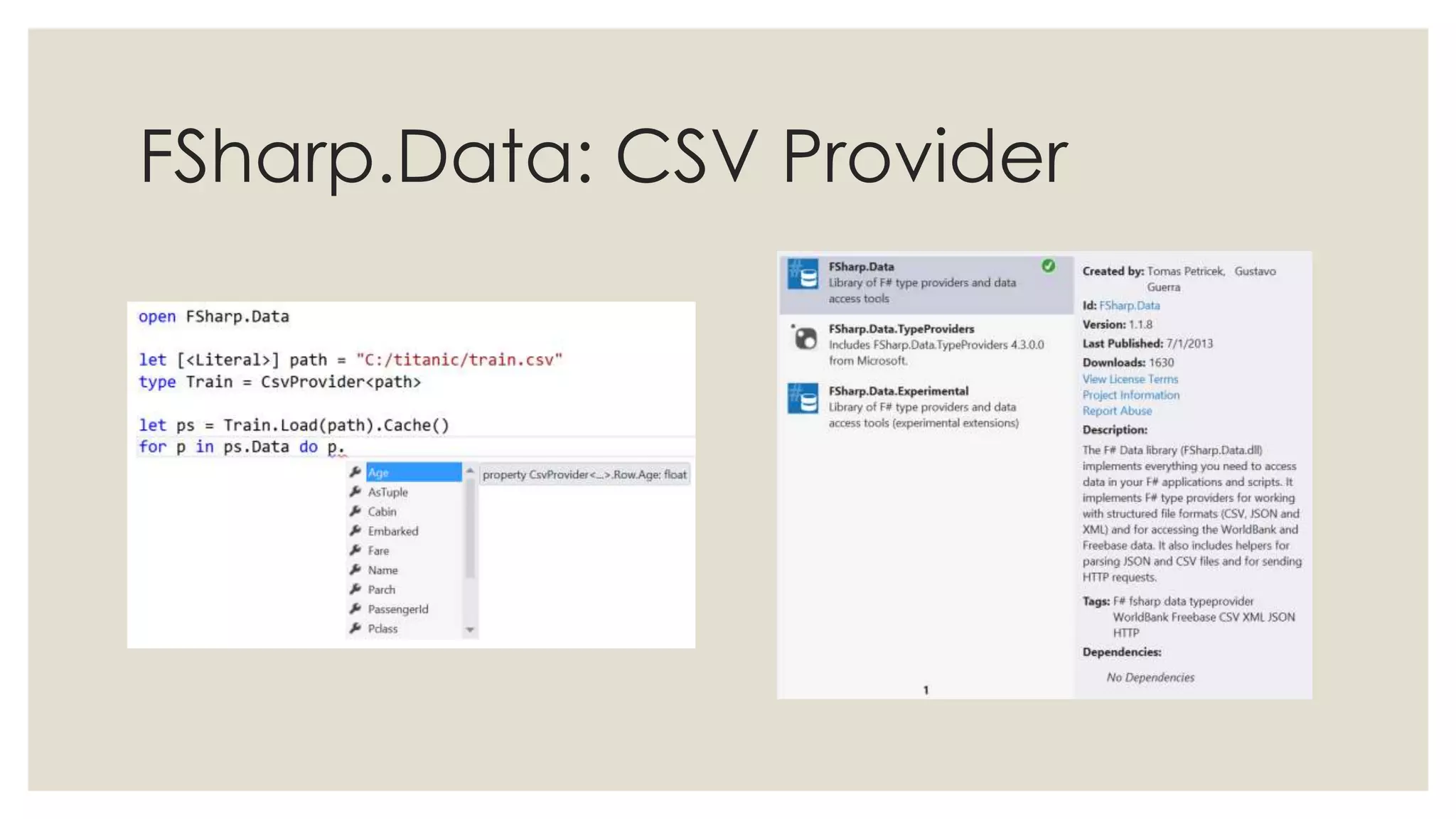

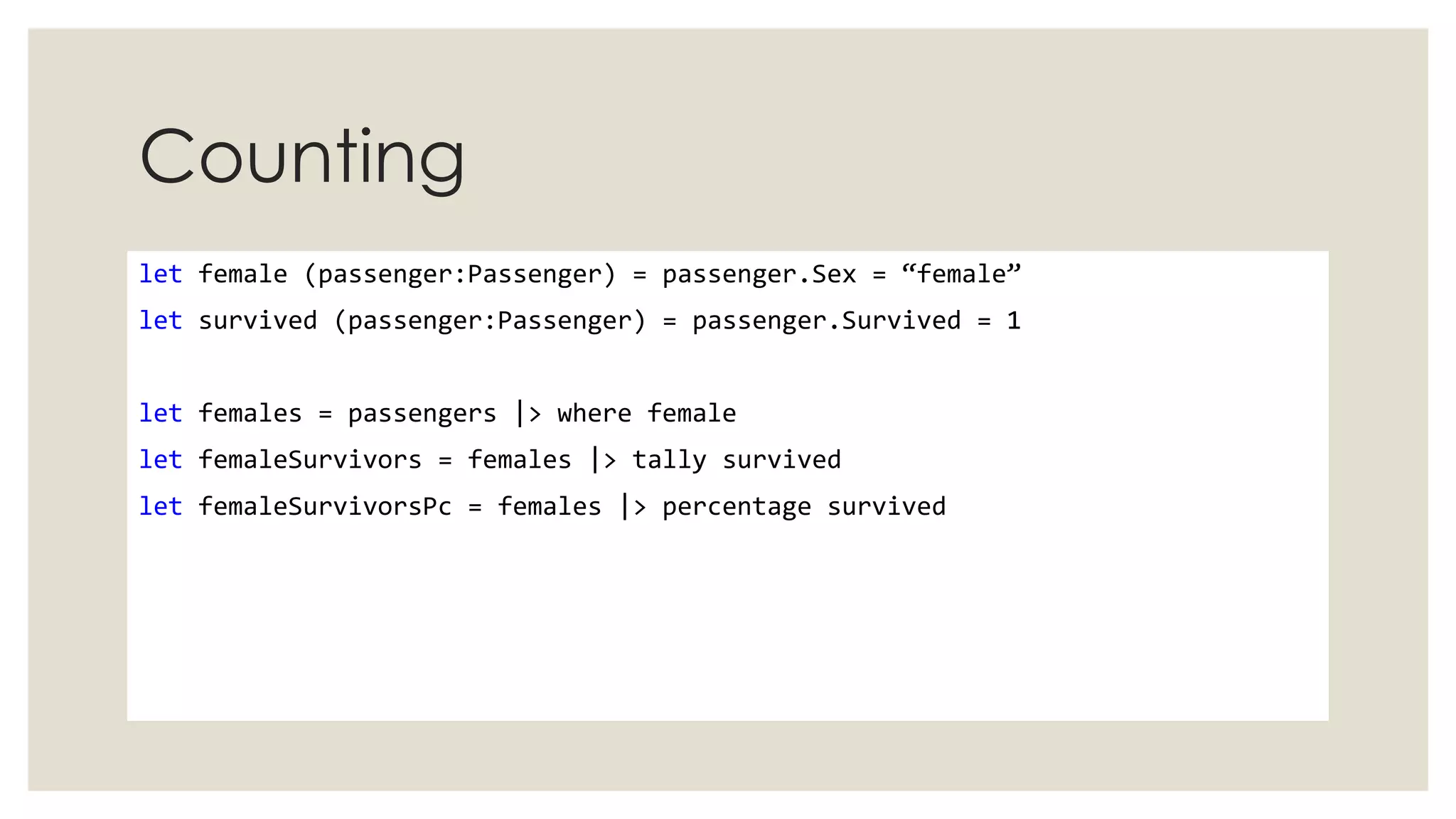

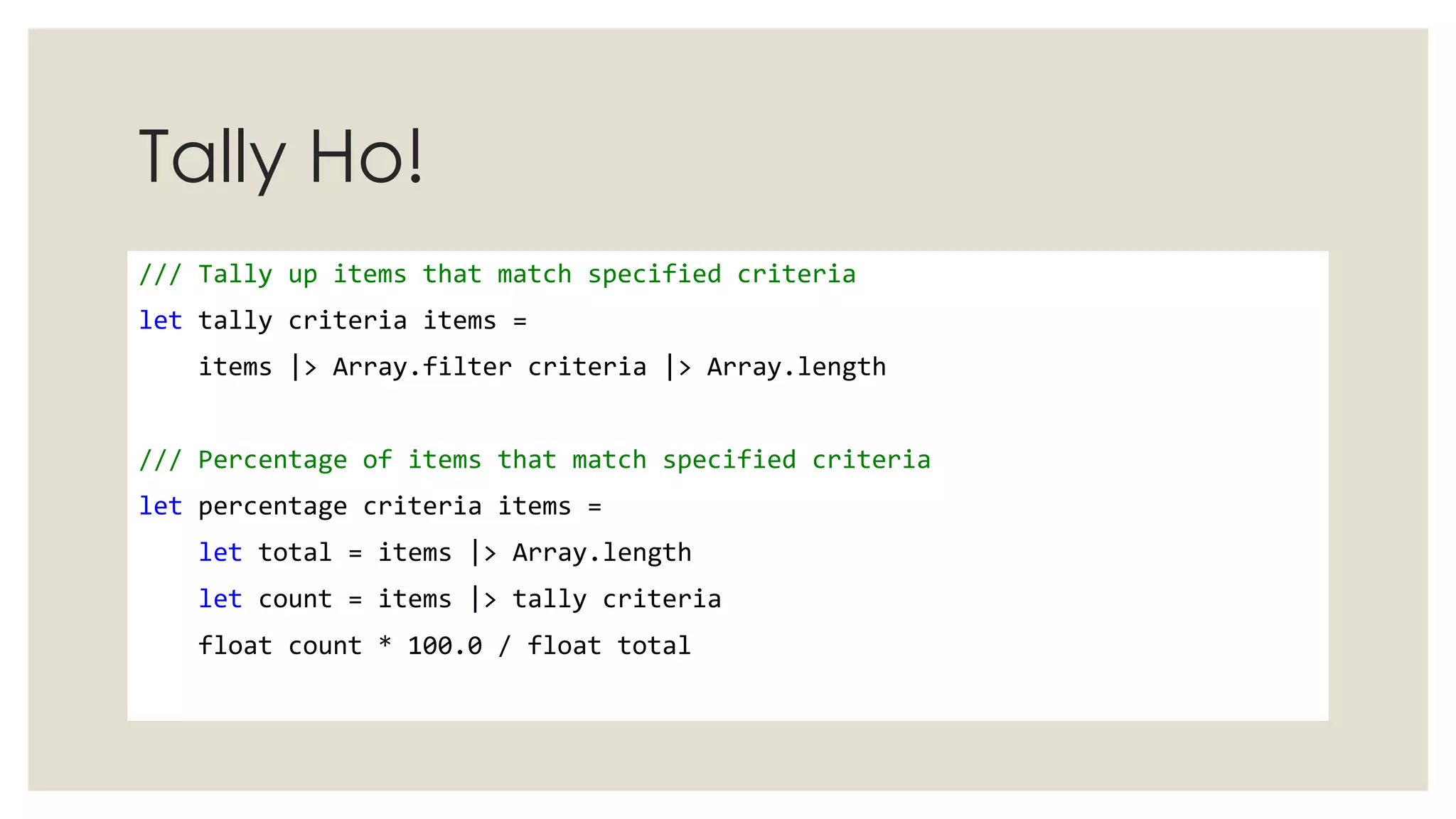

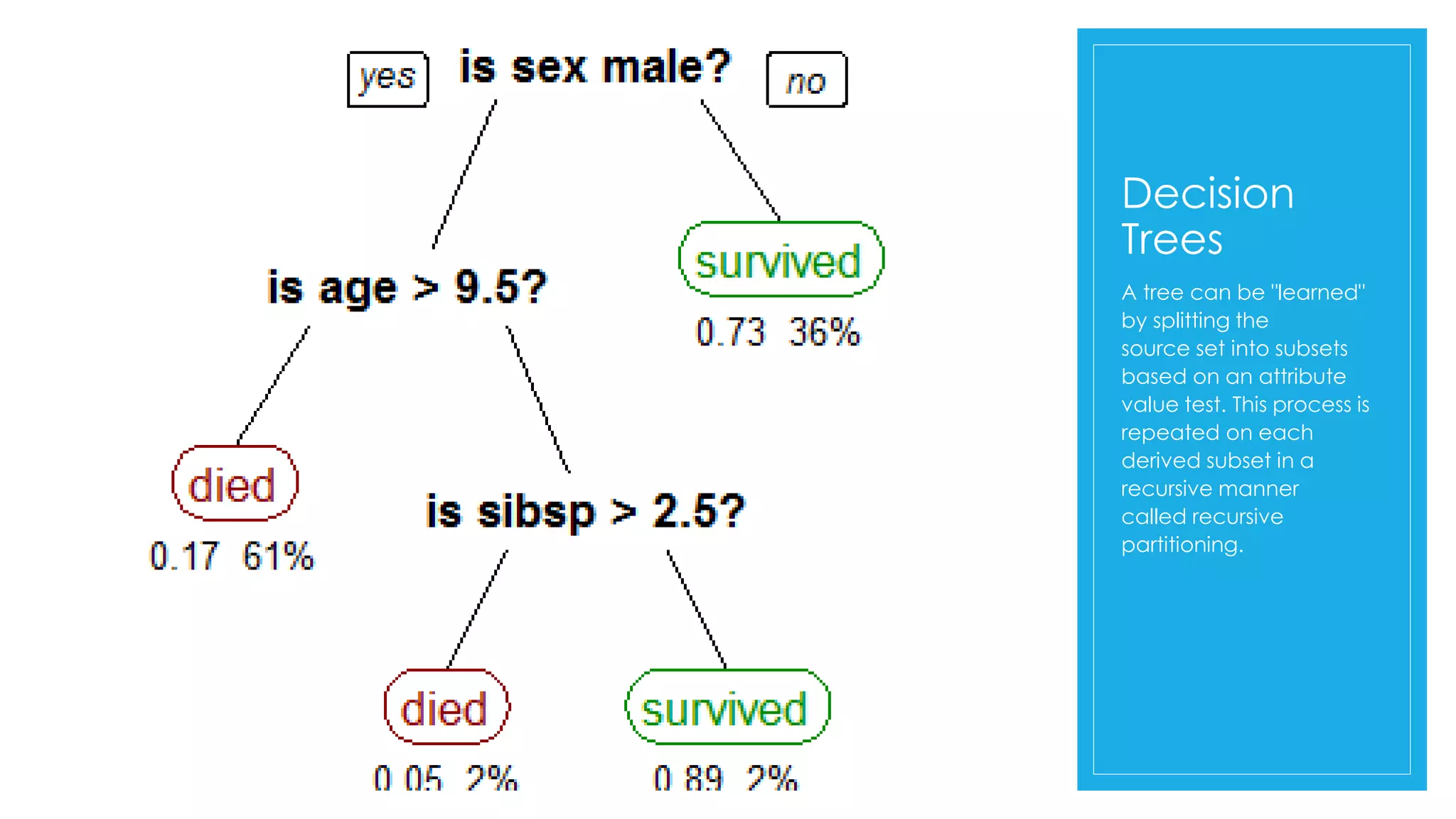

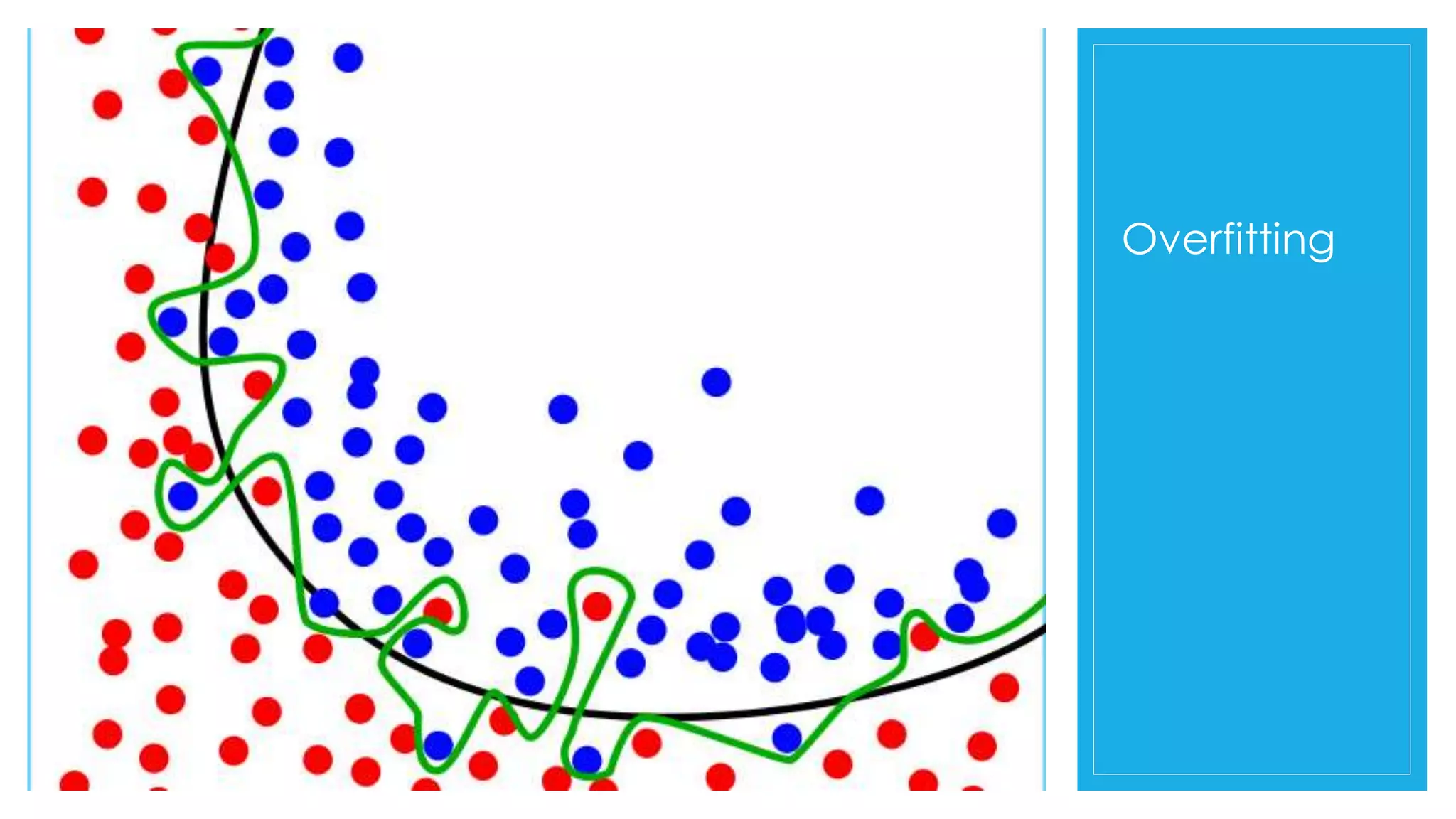

This presentation applies machine learning to passenger data from the Titanic disaster in order to predict who survived. It introduces decision trees and shows how they classify passengers by attributes such as sex and class. Code examples, mostly in F# with a Python comparison, cover loading and preprocessing the Titanic dataset, building a decision tree, and using it to classify new passengers.

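![Split data set (from ML in Action)](https://image.slidesharecdn.com/machinelearningfromdisaster-gl-150217153242-conversion-gate01/75/Machine-learning-from-disaster-GL-Net-2015-15-2048.jpg)

Split data set (from ML in Action)

Python

```python
def splitDataSet(dataSet, axis, value):
    # Keep the rows whose feature at index `axis` equals `value`,
    # and drop that feature column from each kept row.
    retDataSet = []
    for featVec in dataSet:
        if featVec[axis] == value:
            reducedFeatVec = featVec[:axis]
            reducedFeatVec.extend(featVec[axis+1:])
            retDataSet.append(reducedFeatVec)
    return retDataSet
```

F#

```fsharp
// Type annotations added so that the indexer on featVec resolves.
// Array.removeAt ships with FSharp.Core 6.0 and later; older versions need a small helper.
let splitDataSet(dataSet: obj[][], axis: int, value: obj) =
    [|for featVec in dataSet do
        if featVec.[axis] = value then
            yield featVec |> Array.removeAt axis|]
```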
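To make the effect of the split concrete, here is a minimal sketch in Python. The rows are made up for illustration (they are not taken from the Titanic dataset), and `split_data_set` is a hypothetical list-comprehension rewrite of the slide's `splitDataSet` with the same behavior.

```python
def split_data_set(data_set, axis, value):
    """Keep rows whose feature at `axis` equals `value`, dropping that column."""
    return [row[:axis] + row[axis + 1:] for row in data_set if row[axis] == value]


# Made-up rows in the layout [sex, class, survived]; not real passenger data.
toy_rows = [
    ["female", 1, True],
    ["male", 3, False],
    ["female", 3, True],
    ["male", 1, False],
]

# Select the rows where feature 0 (sex) is "female" and remove the sex column.
females = split_data_set(toy_rows, 0, "female")
print(females)  # [[1, True], [3, True]]
```

Each kept row loses the column that was tested, so a later split on the subset sees only the features that remain.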

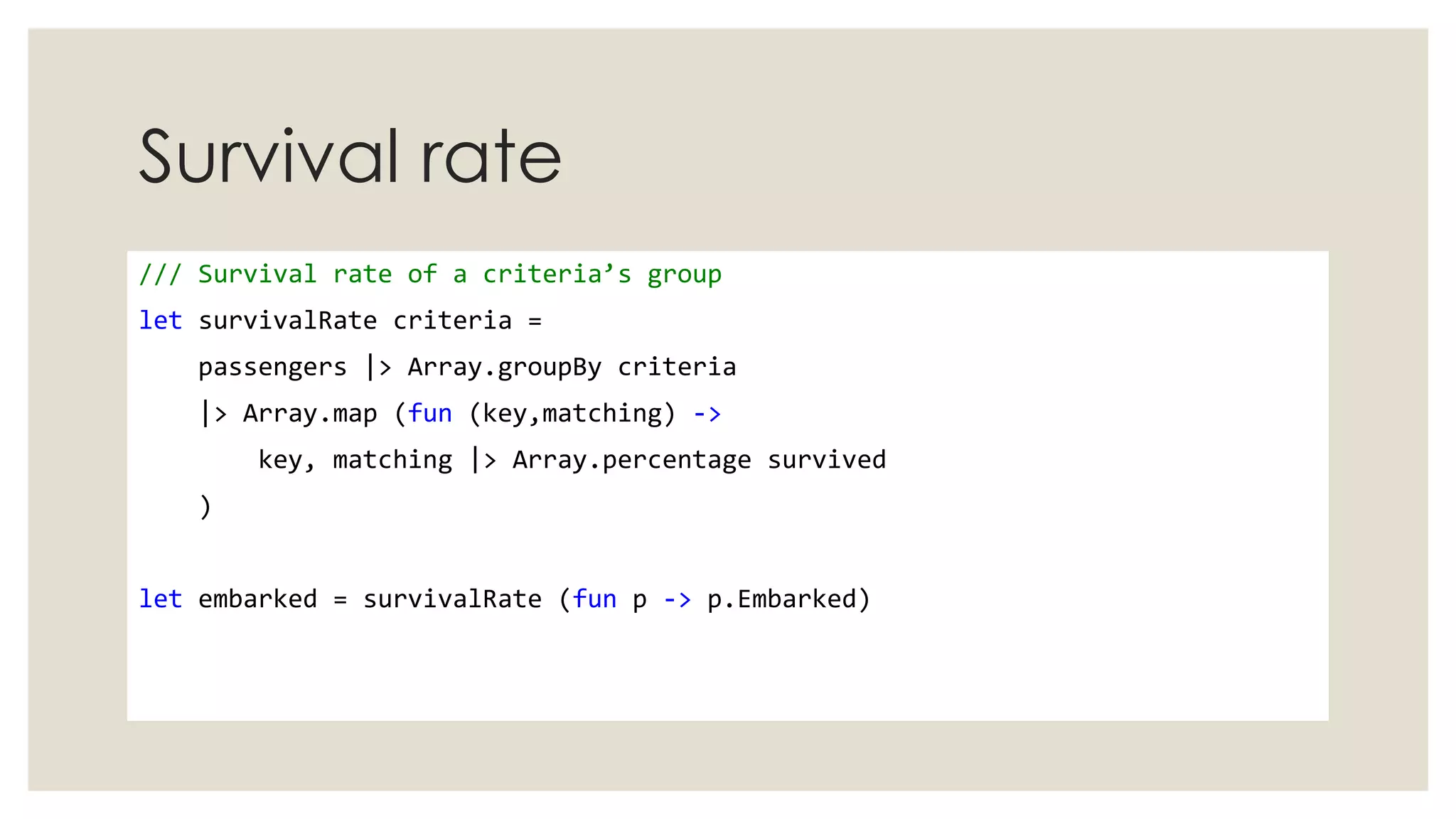

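![Decision Tree](https://image.slidesharecdn.com/machinelearningfromdisaster-gl-150217153242-conversion-gate01/75/Machine-learning-from-disaster-GL-Net-2015-16-2048.jpg)

Decision Tree

```fsharp
// Passenger, passengers and createTree are defined elsewhere in the deck (not shown here).
let labels =
    [|"sex"; "class"|]

// The features used for splitting, in the same order as labels.
let features (p:Passenger) : obj[] =
    [|p.Sex; p.Pclass|]

// One row per passenger: the features followed by the class label (survived or not).
let dataSet : obj[][] =
    [|for passenger in passengers ->
        [|yield! features passenger;
          yield box (passenger.Survived = 1)|] |]

let tree = createTree(dataSet, labels)
```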
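The slide calls `createTree` without showing its body. As a rough illustration of what such a function might do, the following Python sketch builds an ID3-style tree by repeatedly splitting on the feature with the largest information gain. It is an assumption, not the author's implementation: the names `entropy`, `choose_best_feature` and `create_tree`, the nested-dict tree representation, and the toy rows are all hypothetical.

```python
from collections import Counter
from math import log2


def split_data_set(data_set, axis, value):
    """Keep rows whose feature at `axis` equals `value`, dropping that column."""
    return [row[:axis] + row[axis + 1:] for row in data_set if row[axis] == value]


def entropy(data_set):
    """Shannon entropy of the class label, which is the last column of each row."""
    counts = Counter(row[-1] for row in data_set)
    total = len(data_set)
    return -sum((n / total) * log2(n / total) for n in counts.values())


def choose_best_feature(data_set):
    """Index of the feature with the largest information gain, or -1 if none helps."""
    base = entropy(data_set)
    best_gain, best_axis = 0.0, -1
    for axis in range(len(data_set[0]) - 1):
        remainder = 0.0
        for value in {row[axis] for row in data_set}:
            subset = split_data_set(data_set, axis, value)
            remainder += len(subset) / len(data_set) * entropy(subset)
        gain = base - remainder
        if gain > best_gain:
            best_gain, best_axis = gain, axis
    return best_axis


def create_tree(data_set, labels):
    """Return a class label (leaf) or {feature_label: {value: subtree}} (branch)."""
    classes = [row[-1] for row in data_set]
    if len(set(classes)) == 1:
        return classes[0]  # all rows agree, so this is a leaf
    best = choose_best_feature(data_set) if len(data_set[0]) > 1 else -1
    if best == -1:
        # No feature left (or none is informative): fall back to the majority class.
        return Counter(classes).most_common(1)[0][0]
    remaining = labels[:best] + labels[best + 1:]
    return {labels[best]: {
        value: create_tree(split_data_set(data_set, best, value), remaining)
        for value in {row[best] for row in data_set}
    }}


# Made-up rows in the layout [sex, class, survived]; not real passenger data.
toy_rows = [
    ["female", 1, True],
    ["female", 3, True],
    ["female", 3, True],
    ["male", 1, True],
    ["male", 3, False],
    ["male", 3, False],
]
tree = create_tree(toy_rows, ["sex", "class"])
```

On these toy rows the sketch splits on sex first, because that split has the higher information gain, and then on class for the male rows only. All female rows share one label, so that branch becomes a leaf immediately.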

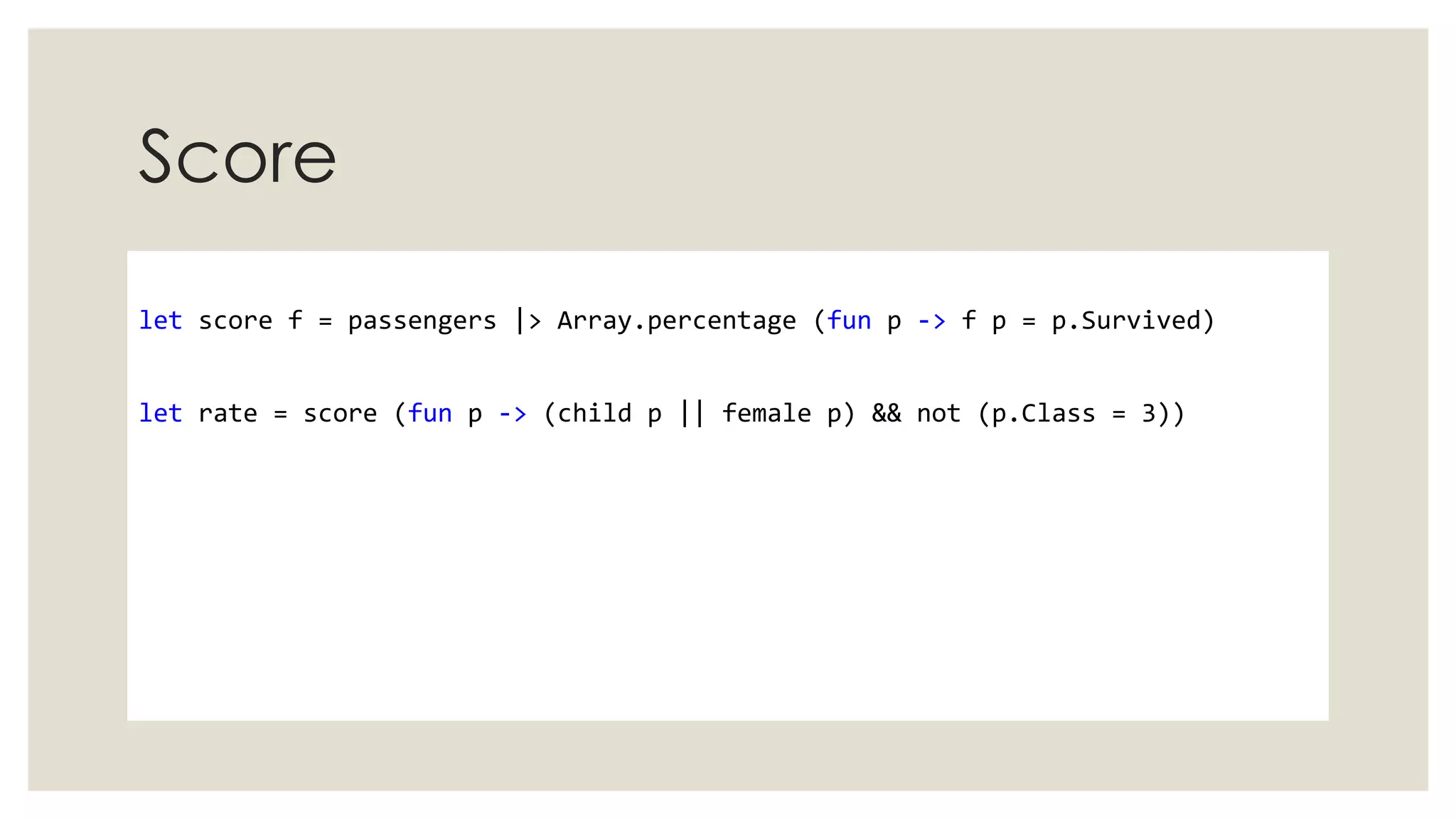

![Decision Tree: Create -> Classify](https://image.slidesharecdn.com/machinelearningfromdisaster-gl-150217153242-conversion-gate01/75/Machine-learning-from-disaster-GL-Net-2015-19-2048.jpg)

Decision Tree: Create -> Classify

```fsharp
let rec classify(inputTree, featLabels:string[], testVec:obj[]) =
    match inputTree with
    | Leaf(x) -> x
    | Branch(s,xs) ->
        let featIndex = featLabels |> Array.findIndex ((=) s)
        xs |> Array.pick (fun (value,tree) ->
            if testVec.[featIndex] = value
            then classify(tree, featLabels,testVec) |> Some
            else None
        )
```
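For comparison, the same traversal can be sketched in Python. The slide's tree is a `Leaf`/`Branch` union whose definition is not shown here, so this sketch assumes a different, hypothetical representation: a leaf is a plain value and a branch is a nested dict of the form `{feature_label: {value: subtree}}`. The example tree is hand-written for illustration, not learned from real data.

```python
def classify(tree, feat_labels, test_vec):
    """Walk the tree until a leaf is reached and return its class label."""
    if not isinstance(tree, dict):
        return tree  # leaf: the stored class label
    # A branch holds exactly one feature label mapping to its value -> subtree table.
    label, branches = next(iter(tree.items()))
    feat_index = feat_labels.index(label)
    # Follow the branch matching this passenger's value for the tested feature.
    return classify(branches[test_vec[feat_index]], feat_labels, test_vec)


# Hand-written example tree; the structure and labels are illustrative only.
example_tree = {
    "sex": {
        "female": True,
        "male": {"class": {1: True, 3: False}},
    }
}
feat_labels = ["sex", "class"]

print(classify(example_tree, feat_labels, ["female", 3]))  # True
print(classify(example_tree, feat_labels, ["male", 3]))    # False
```

Like `Array.pick` in the F# version, which throws when no branch matches, this sketch raises a `KeyError` when the test vector contains a feature value the tree has never seen.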