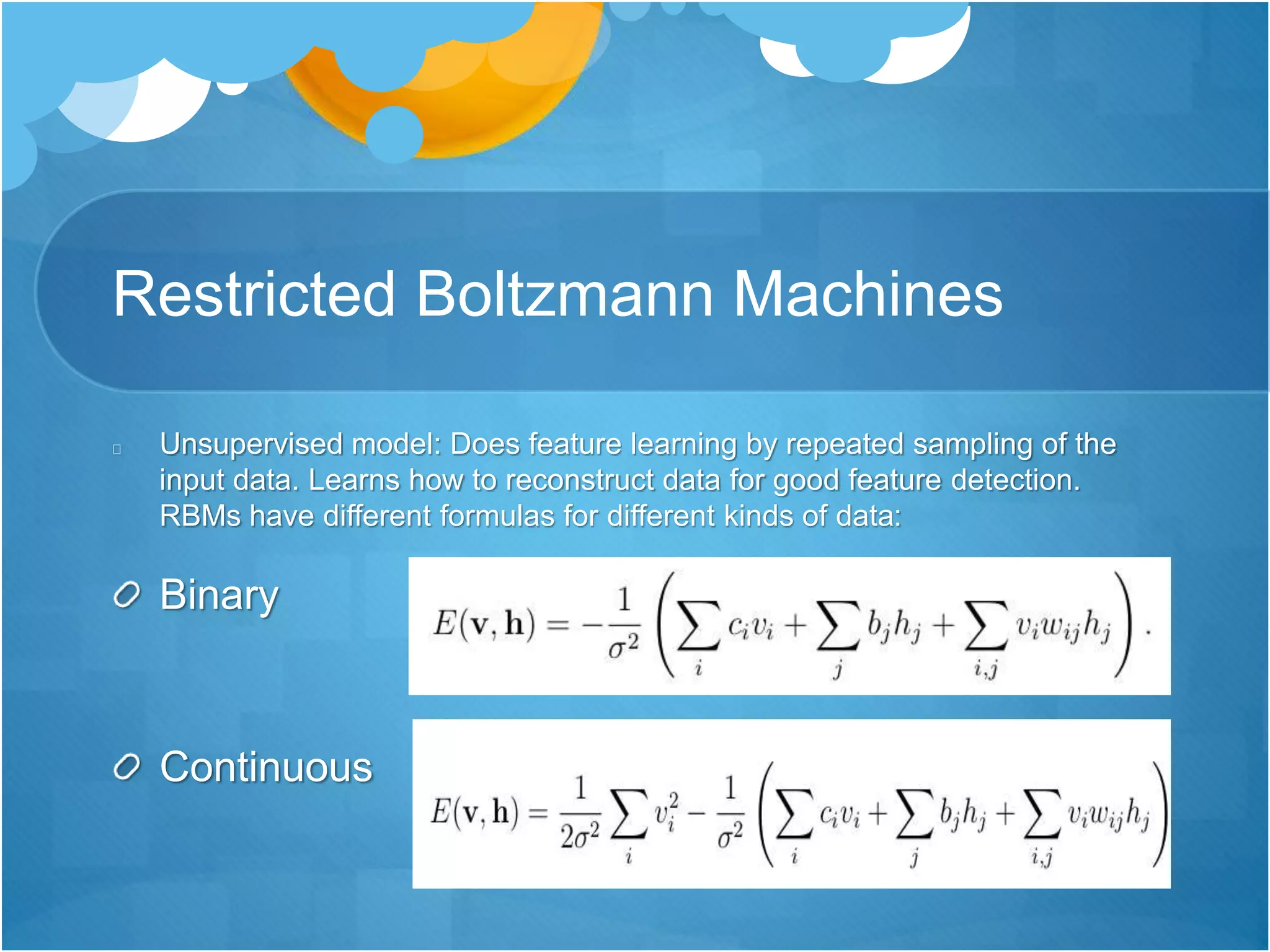

The presentation discusses deep learning, focusing on deep belief networks (DBNs) and their implementation on Hadoop/YARN. It highlights the approach's advantages in areas such as speech recognition, image processing, and audio processing, and explores how to leverage unsupervised learning. It also notes the performance benefits of the deeplearning4j framework over Theano in distributed settings.
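The summary names deep belief networks, unsupervised learning, and distributed training without showing any of the mechanics. As a rough illustration only, the Python sketch below trains a single Bernoulli restricted Boltzmann machine (RBM), the layer type that DBNs stack for unsupervised pretraining, using one-step contrastive divergence (CD-1). It then simulates data-parallel training by having each "worker" run CD-1 over its own shard and averaging the workers' parameters after every pass. The parameter-averaging scheme and every name here (`RBM`, `train_distributed`, `average_into`, `copy_params`) are assumptions for illustration. This is not the deeplearning4j or IterativeReduce API, and a real job would run workers as YARN containers rather than in a Python loop.

```python
import math
import random

# Hypothetical sketch: one Bernoulli RBM layer trained with CD-1, plus a
# parameter-averaging step that stands in for workers on separate data shards.


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    def __init__(self, n_visible, n_hidden, rng):
        self.nv, self.nh = n_visible, n_hidden
        # Small random weights, zero biases.
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(self.nv)))
                for j in range(self.nh)]

    def visible_probs(self, h):
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(self.nh)))
                for i in range(self.nv)]

    def cd1_update(self, v0, lr, rng):
        # Positive phase: hidden probabilities and a binary sample given the data.
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]
        # Negative phase: one Gibbs step back to a reconstruction.
        v1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(v1)
        # Gradient approximation: data statistics minus reconstruction statistics.
        for i in range(self.nv):
            for j in range(self.nh):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.b[i] += lr * (v0[i] - v1[i])
        for j in range(self.nh):
            self.c[j] += lr * (ph0[j] - ph1[j])

    def reconstruction_error(self, data):
        # Mean squared error between inputs and their mean-field reconstructions.
        err = 0.0
        for v in data:
            r = self.visible_probs(self.hidden_probs(v))
            err += sum((a - b) ** 2 for a, b in zip(v, r))
        return err / len(data)


def copy_params(src, dst):
    dst.W = [row[:] for row in src.W]
    dst.b = src.b[:]
    dst.c = src.c[:]


def average_into(master, workers):
    # "Reduce" step: master parameters become the mean of the worker parameters.
    k = len(workers)
    for i in range(master.nv):
        for j in range(master.nh):
            master.W[i][j] = sum(w.W[i][j] for w in workers) / k
        master.b[i] = sum(w.b[i] for w in workers) / k
    for j in range(master.nh):
        master.c[j] = sum(w.c[j] for w in workers) / k


def train_distributed(shards, n_hidden, supersteps, lr, seed=0):
    rng = random.Random(seed)
    nv = len(shards[0][0])
    master = RBM(nv, n_hidden, rng)
    workers = [RBM(nv, n_hidden, rng) for _ in shards]
    for _ in range(supersteps):
        for worker, shard in zip(workers, shards):
            copy_params(master, worker)  # broadcast current parameters
            for v in shard:              # local CD-1 pass over this shard
                worker.cd1_update(v, lr, rng)
        average_into(master, workers)    # combine worker results
    return master


if __name__ == "__main__":
    data = [[1, 1, 0, 0, 0, 0], [0, 0, 0, 0, 1, 1]] * 10
    shards = [data[:10], data[10:]]
    model = train_distributed(shards, n_hidden=3, supersteps=50, lr=0.1)
    print(model.reconstruction_error(data))
```

In a DBN, a trained layer's hidden probabilities would become the input data for the next RBM, and this greedy layer-wise pass is the unsupervised part; any supervised fine-tuning comes afterwards.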

![Usage From Command Line](https://image.slidesharecdn.com/hadoopsummit2014deeplearningfinal-140605121612-phpapp01/75/Hadoop-Summit-2014-San-Jose-Introduction-to-Deep-Learning-on-Hadoop-28-2048.jpg)

Run deep learning on Hadoop: `yarn jar iterativereduce-0.1-SNAPSHOT.jar [props file]`

Evaluate the model: `./score_model.sh [props file]`