MLlib: Spark's Machine Learning Library


"MLlib: Spark's Machine Learning Library" by Ameet Talwalkar at AMPCamp 5


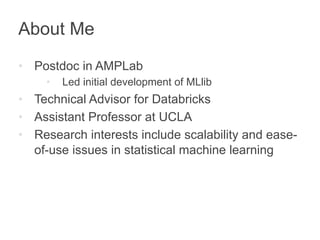

- 1. About Me • Postdoc in AMPLab • Led initial development of MLlib • Technical Advisor for Databricks • Assistant Professor at UCLA • Research interests include scalability and ease-of-use issues in statistical machine learning

- 2. MLlib: Spark’s Machine Learning Library Ameet Talwalkar AMPCAMP 5 November 20, 2014

- 3. History and Overview • Example Applications • Ongoing Development

- 4. MLbase and MLlib • Diagram layers: MLOpt, MLI, MLlib, Apache Spark • Diagram labels: Experimental Testbeds, Production Code, Pipelines • MLbase aims to simplify development and deployment of scalable ML pipelines • MLlib: Spark’s core ML library • MLI, Pipelines: APIs to simplify ML development (Tables, Matrices, Optimization, ML Pipelines) • MLOpt: Declarative layer to automate hyperparameter tuning

- 5. History of MLlib • Initial release: developed by MLbase team in AMPLab (11 contributors); Scala, Java; shipped with Spark v0.8 (Sep 2013) • 15 months later: 80+ contributors from various organizations; Scala, Java, Python; latest release part of Spark v1.1 (Sep 2014)

- 6. What’s in MLlib? • Collaborative Filtering for Recommendation: Alternating Least Squares • Prediction: Lasso, Ridge Regression, Logistic Regression, Decision Trees, Naïve Bayes, Support Vector Machines • Clustering: K-Means • Optimization: Gradient descent, L-BFGS • Many Utilities: Random data generation, Linear algebra, Feature transformations, Statistics (testing, correlation), Evaluation metrics

- 7. Benefits of MLlib • Part of Spark • Integrated data analysis workflow • Free performance gains • Diagram: Apache Spark with SparkSQL, Spark Streaming, MLlib, GraphX

- 8. Benefits of MLlib • Part of Spark • Integrated data analysis workflow • Free performance gains • Scalable • Python, Scala, Java APIs • Broad coverage of applications & algorithms • Rapid improvements in speed & robustness

- 9. History and Overview • Example Applications: Use Cases, Distributed ML Challenges, Code Examples • Ongoing Development

- 10. Clustering with K-Means • Given data points • Find meaningful clusters

- 11. Clustering with K-Means • Choose cluster centers • Assign points to clusters

- 12. Clustering with K-Means • Choose cluster centers • Assign points to clusters

- 13. Clustering with K-Means • Choose cluster centers • Assign points to clusters

- 14. Clustering with K-Means • Choose cluster centers • Assign points to clusters

- 15. Clustering with K-Means • Data distributed by instance (point/row) • Smart initialization • Limited communication (# clusters << # instances)
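
The two alternating steps (choose centers, assign points) and the "distributed by instance" idea can be sketched in plain Python. This is a minimal illustration, not MLlib's implementation: the `partitions` list stands in for data spread across workers, the helper names are hypothetical, and the starting centers are fixed by hand rather than chosen by a smart initialization. Each partition returns only one vector sum and one count per cluster, which is why communication stays small when the number of clusters is far below the number of instances.

```python
def closest(point, centers):
    """Index of the center nearest to point (squared Euclidean distance)."""
    return min(range(len(centers)),
               key=lambda c: sum((p - q) ** 2 for p, q in zip(point, centers[c])))

def partial_stats(partition, centers):
    """Per-partition work: one (vector sum, count) pair per cluster."""
    dim = len(centers[0])
    sums = [[0.0] * dim for _ in centers]
    counts = [0] * len(centers)
    for point in partition:
        c = closest(point, centers)
        counts[c] += 1
        sums[c] = [s + p for s, p in zip(sums[c], point)]
    return sums, counts

def kmeans(partitions, centers, iterations=10):
    """Lloyd's iterations; only k sums and counts leave each partition."""
    for _ in range(iterations):
        stats = [partial_stats(part, centers) for part in partitions]
        new_centers = []
        for c, old in enumerate(centers):
            total = sum(counts[c] for _, counts in stats)
            if total == 0:
                new_centers.append(old)  # keep the center of an empty cluster
                continue
            summed = [sum(sums[c][d] for sums, _ in stats) for d in range(len(old))]
            new_centers.append([s / total for s in summed])
        centers = new_centers
    return centers

# Two hypothetical "worker" partitions with points near (0, 0) and (10, 10).
partitions = [
    [[0.0, 0.0], [1.0, 0.0], [10.0, 10.0]],
    [[0.0, 1.0], [10.0, 11.0], [11.0, 10.0]],
]
centers = kmeans(partitions, centers=[[0.0, 0.0], [10.0, 10.0]])
print(centers)
```

With these six points the centers settle at the means of the two visible groups after the first iteration.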

- 16. K-Means: Scala

      import org.apache.spark.mllib.clustering.KMeans
      import org.apache.spark.mllib.linalg.Vectors

      // Load and parse data (sc is the SparkContext, e.g. from spark-shell).
      val data = sc.textFile("kmeans_data.txt")
      val parsedData = data.map { x =>
        Vectors.dense(x.split(' ').map(_.toDouble))
      }.cache()

      // Cluster data into 5 classes using KMeans (k = 5, maxIterations = 20).
      val clusters = KMeans.train(parsedData, 5, 20)

      // Evaluate clustering error.
      val cost = clusters.computeCost(parsedData)
      println("Sum of squared errors = " + cost)

- 17. K-Means: Python

      from numpy import array
      from pyspark.mllib.clustering import KMeans

      # Load and parse data (sc is the SparkContext, e.g. from the pyspark shell).
      data = sc.textFile("kmeans_data.txt")
      parsedData = data.map(
          lambda line: array([float(x) for x in line.split(' ')])).cache()

      # Cluster data into 5 classes using KMeans.
      clusters = KMeans.train(parsedData, k=5, maxIterations=20)

      # Evaluate clustering error: squared distance to the assigned center.
      def error(point):
          center = clusters.centers[clusters.predict(point)]
          return sum([x**2 for x in (point - center)])

      cost = parsedData.map(lambda point: error(point)) \
                       .reduce(lambda x, y: x + y)
      print("Sum of squared error = " + str(cost))

- 18. Recommendation • Goal: Recommend movies to users

- 19. Recommendation • Collaborative filtering • Goal: Recommend movies to users • Challenges: defining similarity; dimensionality (millions of users / items); sparsity

- 20. Recommendation • Solution: Assume ratings are determined by a small number of factors (ratings matrix ≈ user factors × movie factors) • 25M users, 100K movies → 2.5 trillion ratings • With 10 factors/user → 250M parameters
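
The arithmetic behind these counts can be checked directly. The snippet below is a small sketch; the variable names are illustrative, and it assumes the movie side also uses 10 factors per movie, which the slide does not state explicitly.

```python
users = 25_000_000
movies = 100_000
rank = 10  # factors per user (assumed to be the same per movie)

full_matrix_entries = users * movies  # one possible rating per (user, movie) pair
user_params = users * rank            # the slide's 250M figure
movie_params = movies * rank          # small next to the user side

print(full_matrix_entries)            # 2500000000000 (2.5 trillion)
print(user_params)                    # 250000000 (250M)
print(user_params + movie_params)     # 251000000
```

The user factors dominate the total, so rounding to 250M parameters is reasonable.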

- 21. Recommendation with Alternating Least Squares (ALS) • Algorithm: alternating update of user/movie factors

- 22. Recommendation with Alternating Least Squares (ALS) • Algorithm: alternating update of user/movie factors • Can update factors in parallel • Must be careful about communication
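
The alternating update can be illustrated with a single factor per user and per item, where each least-squares solve reduces to a scalar formula. This is a toy sketch under that rank-1 assumption, not MLlib's ALS: the function names, the tiny ratings dictionary, and the regularization value are all illustrative. Each user update inside the loop depends only on the fixed item factors, which is what makes the updates parallelizable.

```python
def als_rank1(ratings, n_users, n_items, reg=0.01, iterations=20):
    """Alternating least squares with one factor per user and per item."""
    u = [1.0] * n_users
    v = [1.0] * n_items
    for _ in range(iterations):
        # Fix item factors; each user's update is an independent ridge solve.
        for i in range(n_users):
            obs = [(j, r) for (a, j), r in ratings.items() if a == i]
            u[i] = sum(r * v[j] for j, r in obs) / (reg + sum(v[j] ** 2 for j, _ in obs))
        # Fix user factors; update every item the same way.
        for j in range(n_items):
            obs = [(i, r) for (i, b), r in ratings.items() if b == j]
            v[j] = sum(r * u[i] for i, r in obs) / (reg + sum(u[i] ** 2 for i, _ in obs))
    return u, v

def squared_error(ratings, u, v):
    """Sum of squared differences between observed and predicted ratings."""
    return sum((r - u[i] * v[j]) ** 2 for (i, j), r in ratings.items())

# Observed entries of a small ratings matrix: (user, item) -> rating.
ratings = {(0, 0): 4.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): 1.0, (2, 0): 6.0}
u, v = als_rank1(ratings, n_users=3, n_items=2)
prediction = u[2] * v[1]  # user 2 never rated item 1
print(squared_error(ratings, u, v), prediction)
```

The observed ratings here were chosen to fit a rank-1 pattern, so the prediction for the missing entry lands close to 3.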

- 23. Recommendation with Alternating Least Squares (ALS)

      import org.apache.spark.mllib.recommendation.{ALS, Rating}

      // Load and parse the data
      val data = sc.textFile("mllib/data/als/test.data")
      val ratings = data.map(_.split(',') match {
        case Array(user, item, rate) =>
          Rating(user.toInt, item.toInt, rate.toDouble)
      })

      // Build the recommendation model using ALS
      // (rank = 10, iterations = 20, lambda = 0.01)
      val model = ALS.train(ratings, 10, 20, 0.01)

      // Evaluate the model on rating data
      val usersProducts = ratings.map {
        case Rating(user, product, rate) => (user, product)
      }
      val predictions = model.predict(usersProducts)

- 24. ALS: Today’s ML Exercise • Load 1M/10M ratings from MovieLens • Specify YOUR ratings on examples • Split examples into training/validation • Fit a model (Python or Scala) • Improve model via parameter tuning • Get YOUR recommendations

- 25. History and Overview • Example Applications • Ongoing Development: Performance, New APIs

- 26. Performance • [Figure: ALS on Amazon Reviews on 16 nodes; runtime (minutes) vs. number of ratings (0M to 800M), MLlib vs. Mahout] • On a dataset with 660M users, 2.4M items, and 3.5B ratings, MLlib runs in 40 minutes with 50 nodes

- 27. Performance • Steady performance gains • ~3X speedups on average • [Figure: speedup (Spark 1.0 vs. 1.1) for Decision Trees, ALS, K-Means, Logistic Regression, Ridge Regression]

- 28. Algorithms • In Spark 1.2: Random Forests (ensembles of Decision Trees), Boosting • Under development: Topic modeling, many others

- 29. ML Pipelines • Typical ML workflow • Diagram stages: Training Data, Feature Extraction, Model Training, Model Testing, Test Data

- 30. ML Pipelines • Typical ML workflow is complex. • Diagram stages: Training Data, Feature Extraction, Model Training, Model Testing, Test Data, Feature Extraction, Other Training Data, Other Model Training

- 31. ML Pipelines • Typical ML workflow is complex. • Pipelines in 1.2 (alpha release): easy workflow construction; standardized interface for model tuning; testing & failing early • Inspired by MLbase / Pipelines Project (see Evan’s talk) • Collaboration with Databricks • MLbase / MLOpt aims to autotune these pipelines
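
The workflow of feature extraction, model training, and model testing can be sketched as a chain of stages with a shared interface. The code below is a plain-Python illustration of that idea only; the class names (`Tokenizer`, `WordVoteEstimator`, `WordVoteModel`, `Pipeline`, `PipelineModel`) and the toy word-vote classifier are hypothetical and do not reproduce the Spark 1.2 Pipelines API.

```python
class Tokenizer:
    """Feature extraction: adds a 'tokens' field to each row."""
    def transform(self, rows):
        return [dict(row, tokens=row["text"].lower().split()) for row in rows]

class WordVoteModel:
    """Fitted model: predicts the label most of a row's known words vote for."""
    def __init__(self, word_label):
        self.word_label = word_label

    def transform(self, rows):
        out = []
        for row in rows:
            labels = [self.word_label[t] for t in row["tokens"] if t in self.word_label]
            pred = max(set(labels), key=labels.count) if labels else None
            out.append(dict(row, prediction=pred))
        return out

class WordVoteEstimator:
    """Model training: learns which label each word co-occurs with most."""
    def fit(self, rows):
        votes = {}
        for row in rows:
            for tok in row["tokens"]:
                counts = votes.setdefault(tok, {})
                counts[row["label"]] = counts.get(row["label"], 0) + 1
        return WordVoteModel({t: max(c, key=c.get) for t, c in votes.items()})

class PipelineModel:
    """Fitted pipeline: applies every stage's transform in order."""
    def __init__(self, stages):
        self.stages = stages

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows

class Pipeline:
    """Chains stages; a stage with fit() is replaced by its fitted model."""
    def __init__(self, stages):
        self.stages = stages

    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            if hasattr(stage, "fit"):
                stage = stage.fit(rows)
            rows = stage.transform(rows)
            fitted.append(stage)
        return PipelineModel(fitted)

train = [{"text": "Spark is fast", "label": "pos"},
         {"text": "Hadoop is slow", "label": "neg"}]
test = [{"text": "spark fast"}, {"text": "slow hadoop"}]

model = Pipeline([Tokenizer(), WordVoteEstimator()]).fit(train)
predictions = [row["prediction"] for row in model.transform(test)]
print(predictions)
```

Because training and test data pass through the same fitted stages, the feature extraction step cannot drift between the two, which is one motivation for a standardized pipeline interface.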

- 32. Datasets • Further integration with SparkSQL • ML pipelines require Datasets: handle many data types (features); keep metadata about features; select subsets of features for different parts of pipeline; join groups of features • ML Dataset = SchemaRDD • Inspired by MLbase / MLI API

- 33. Resources • MLlib Programming Guide: spark.apache.org/docs/latest/mllib-guide.html • Databricks training info: databricks.com/spark-training • Spark user lists & community: spark.apache.org/community.html • edX MOOC on Scalable Machine Learning: www.edx.org/course/uc-berkeleyx/uc-berkeleyx-cs190-1x-scalable-machine-6066 • 4-day BIDS minicourse in January