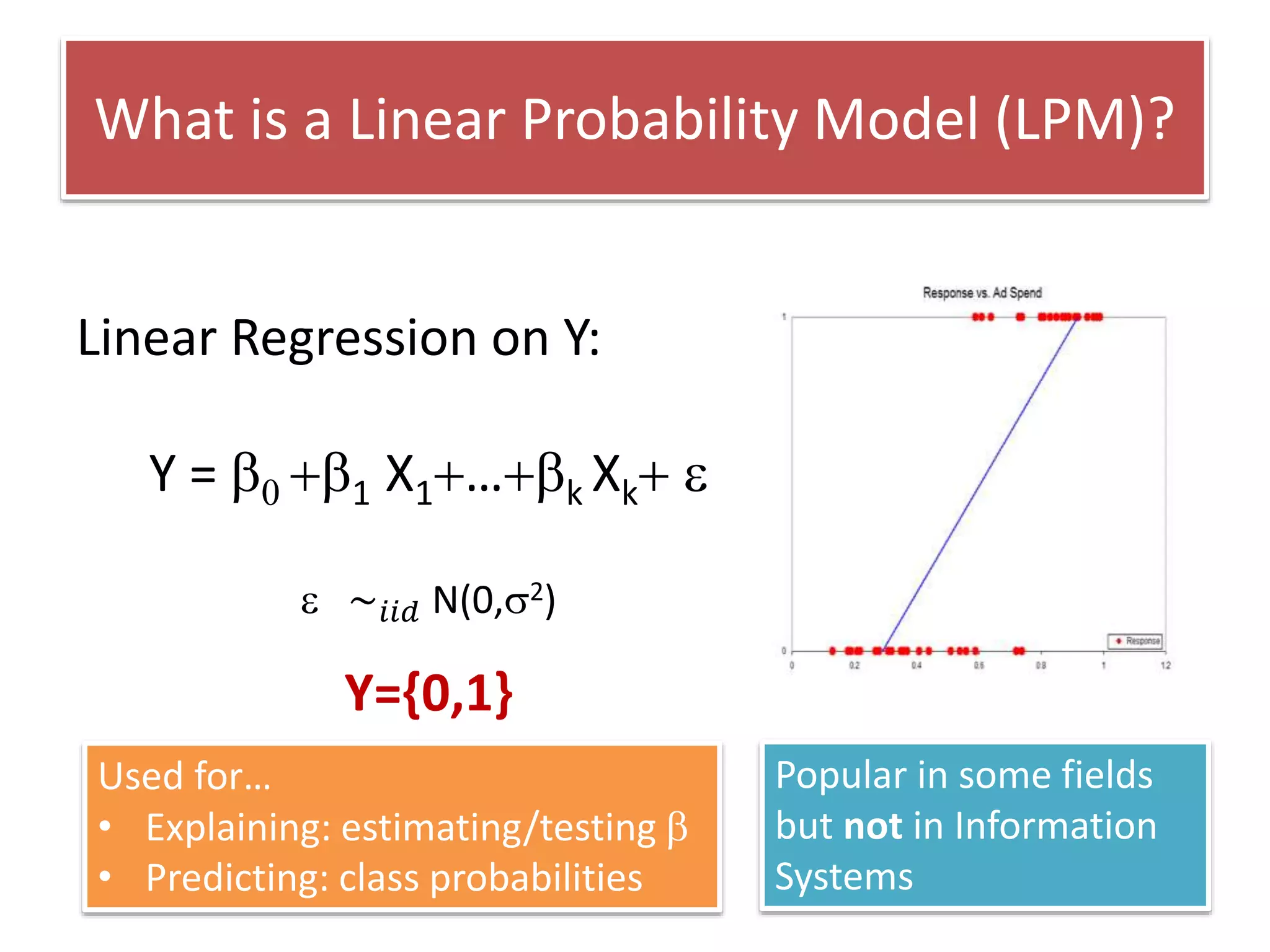

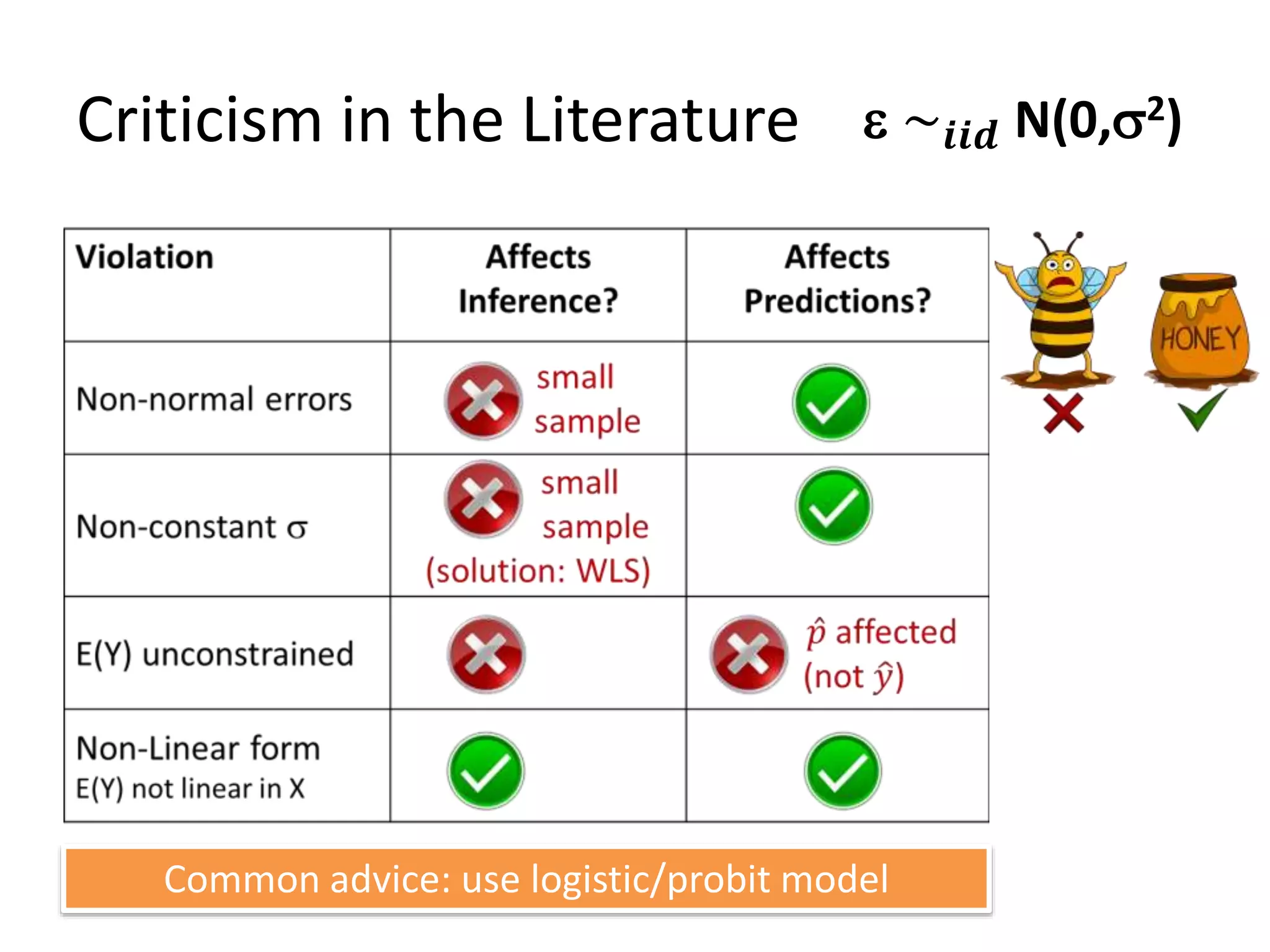

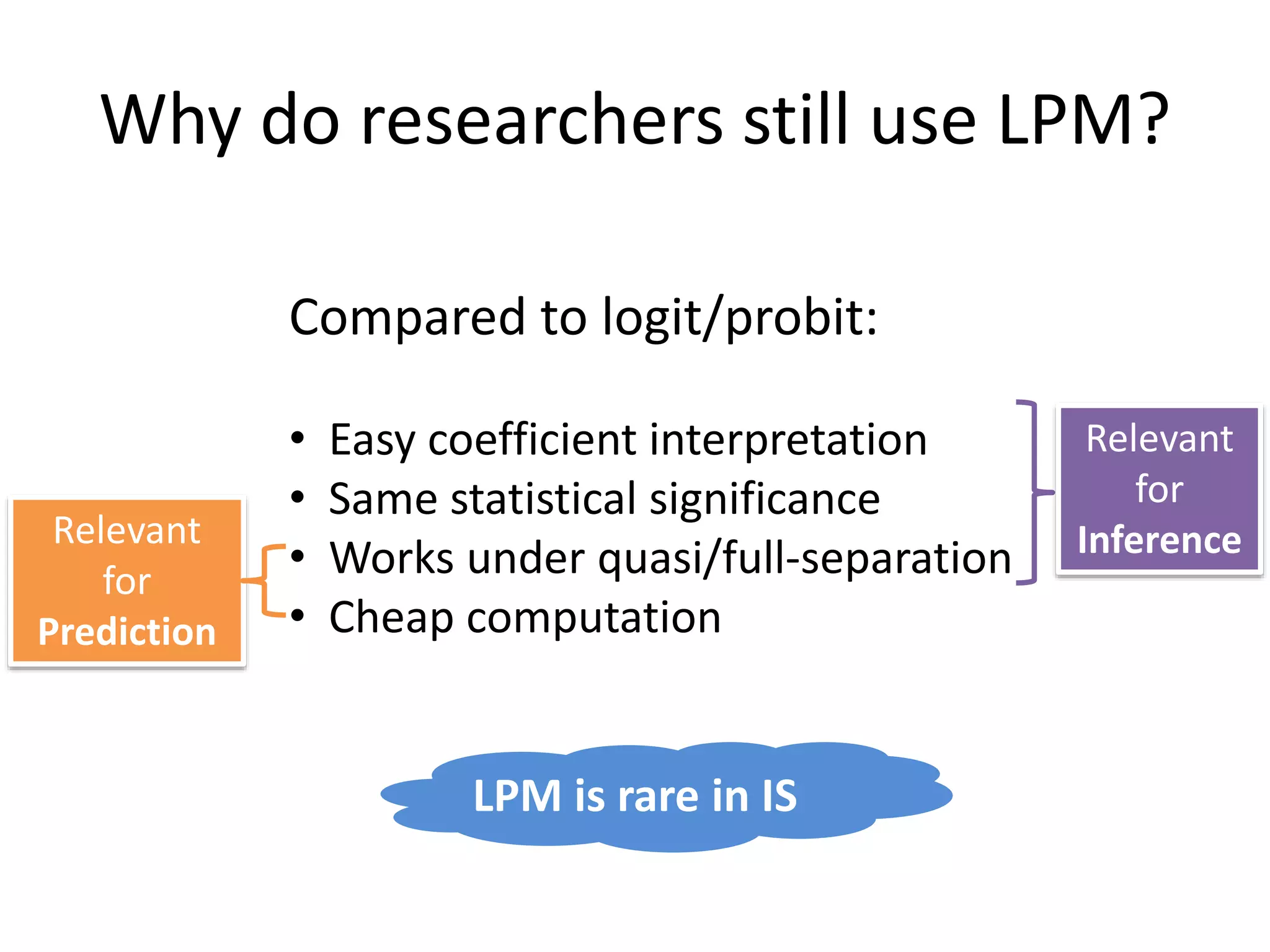

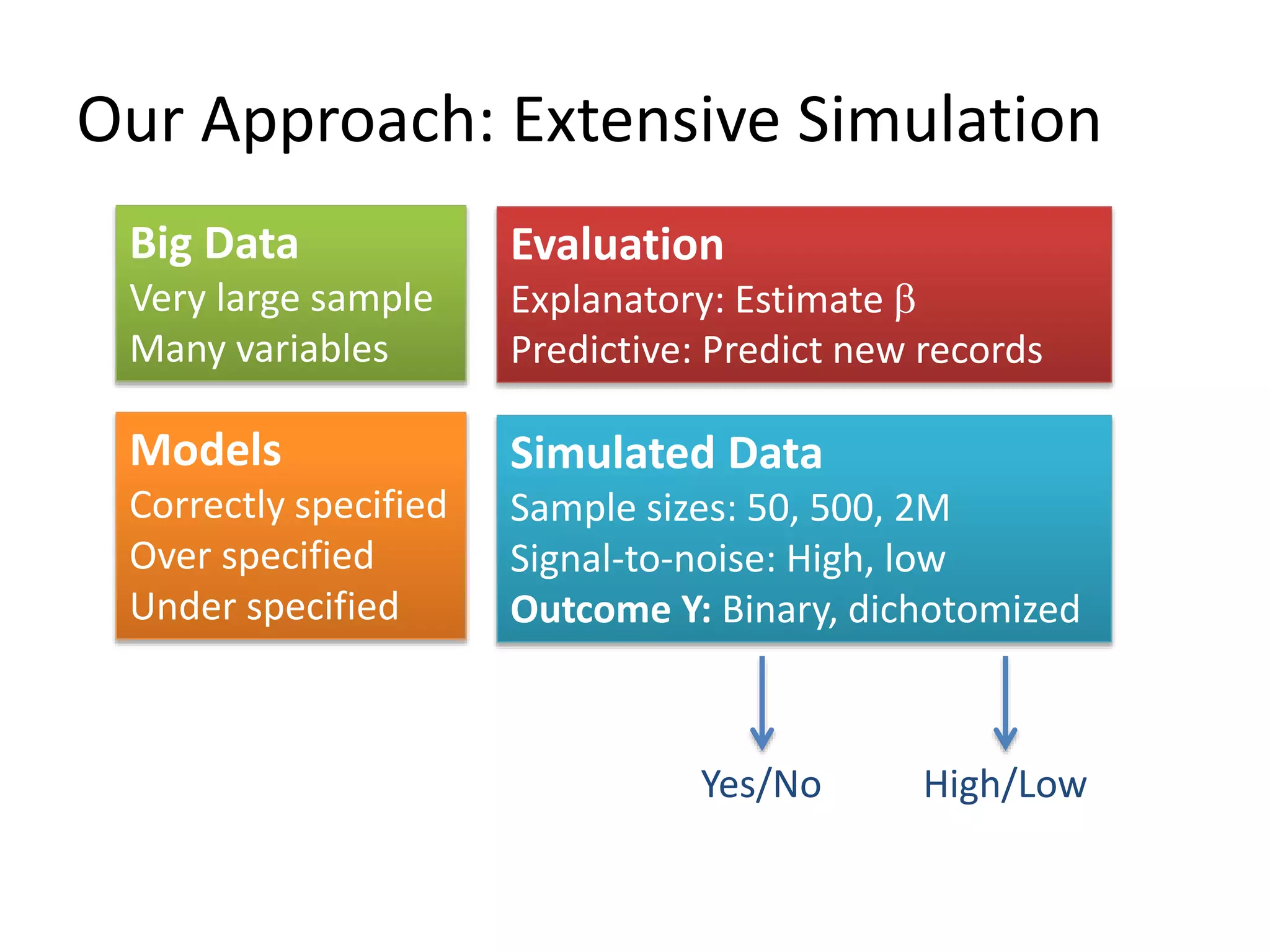

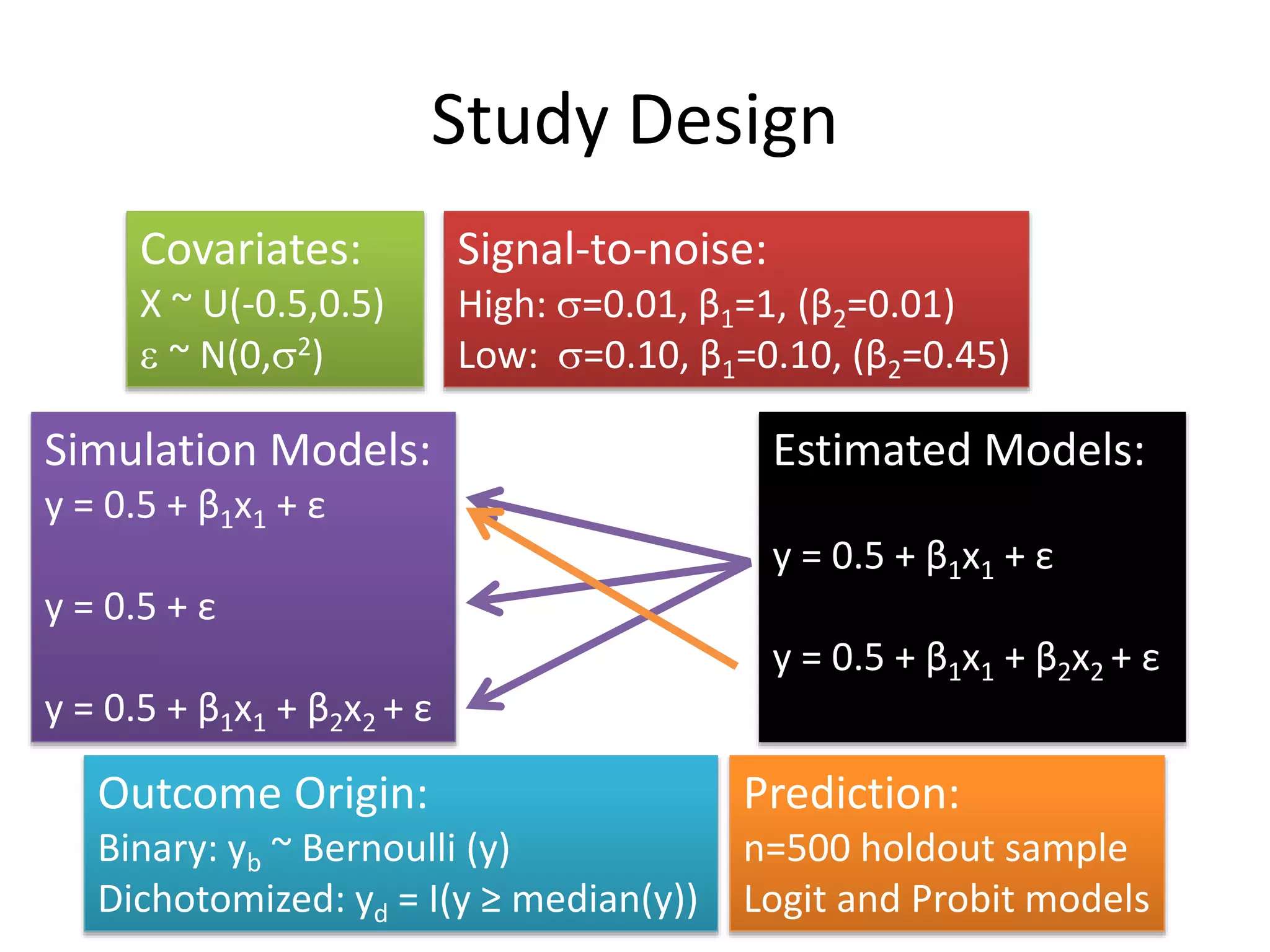

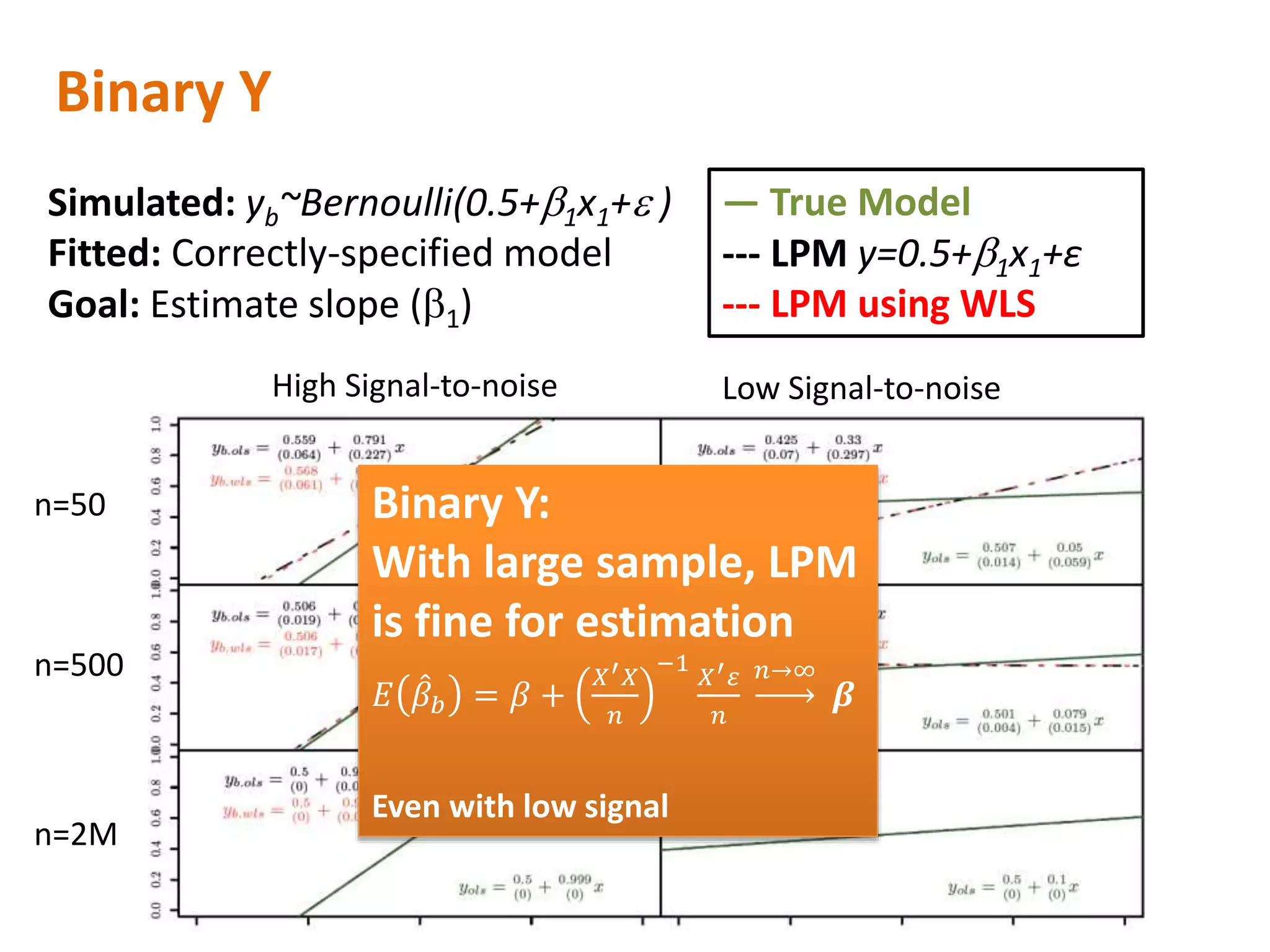

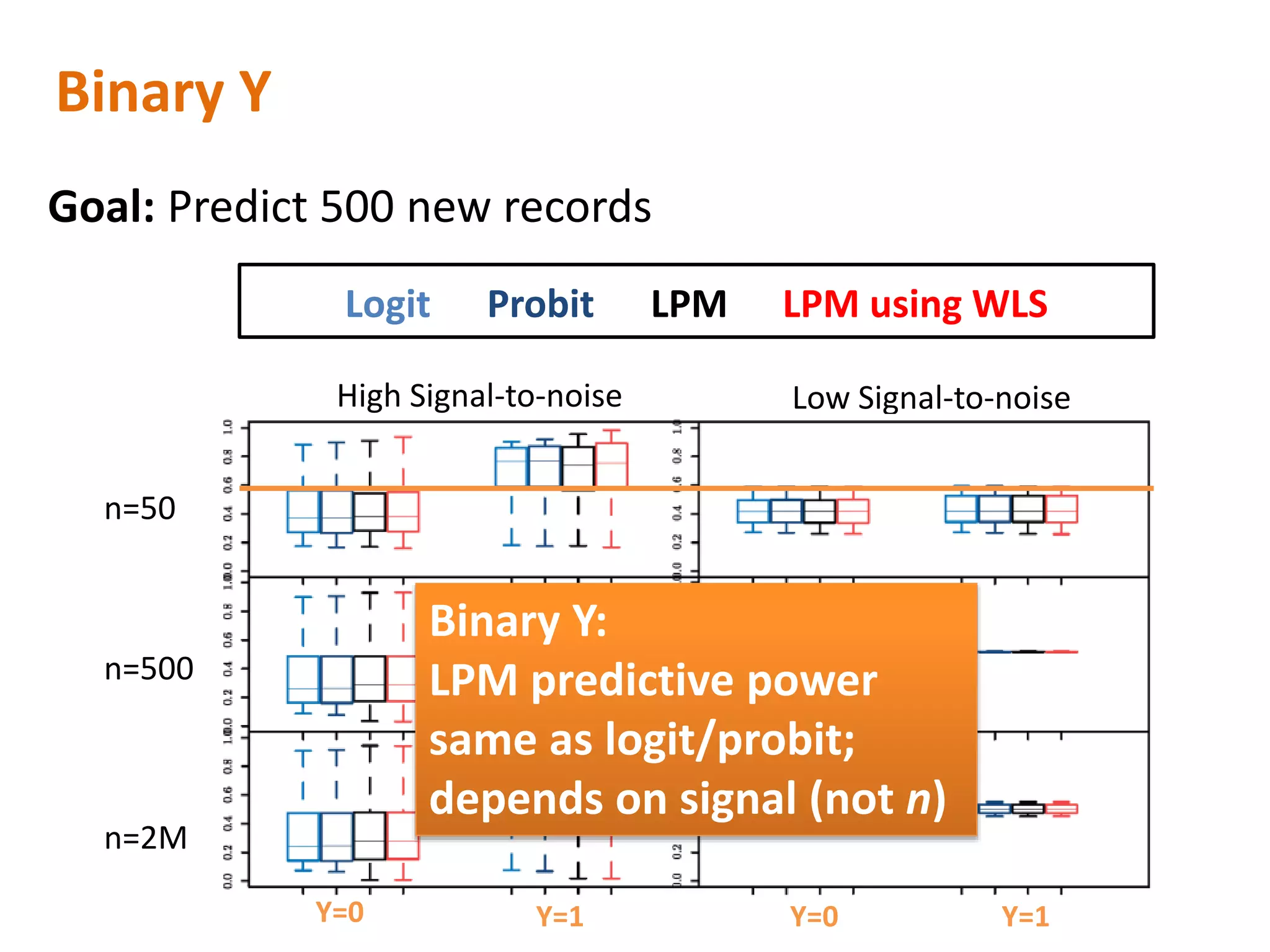

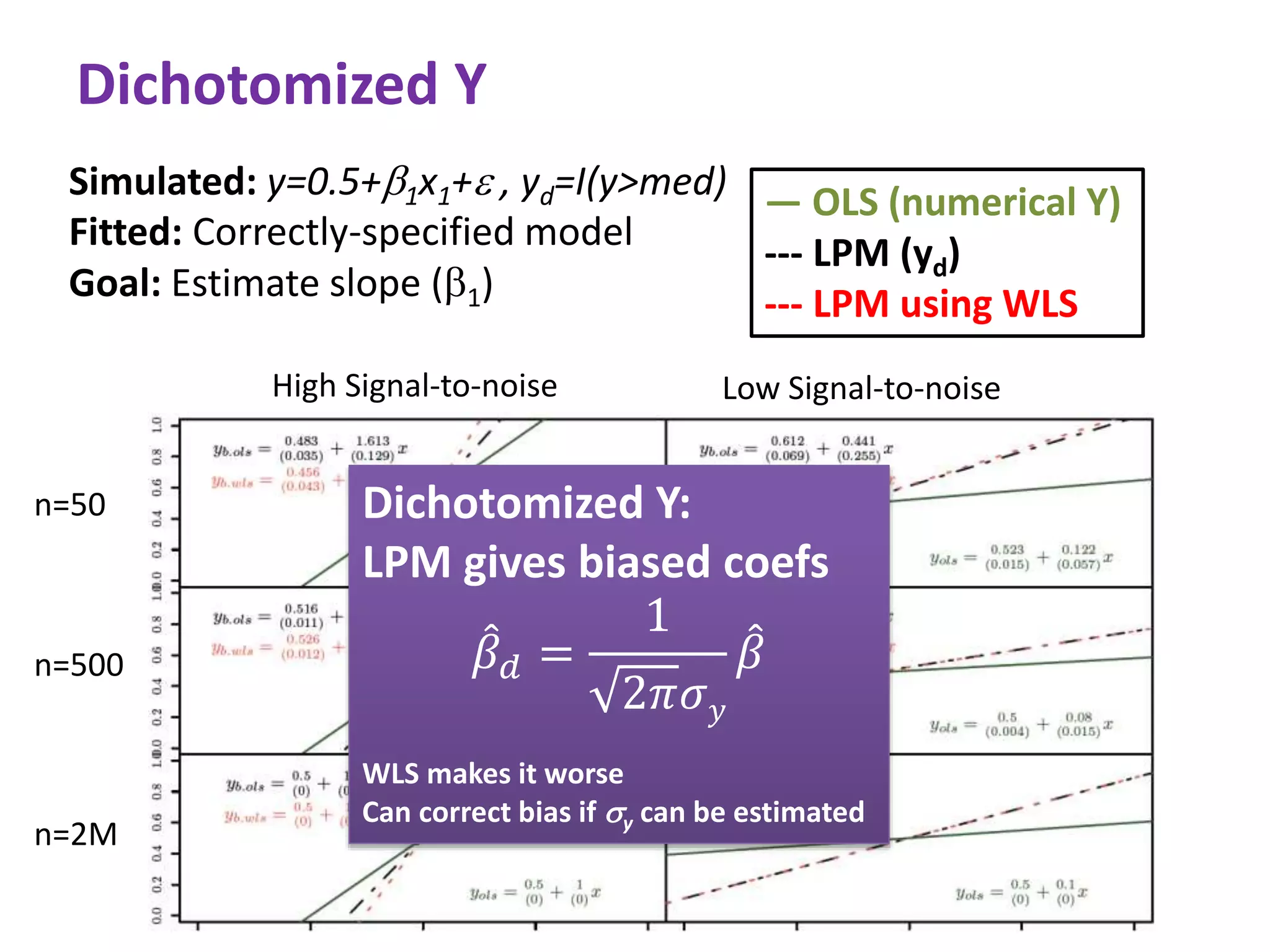

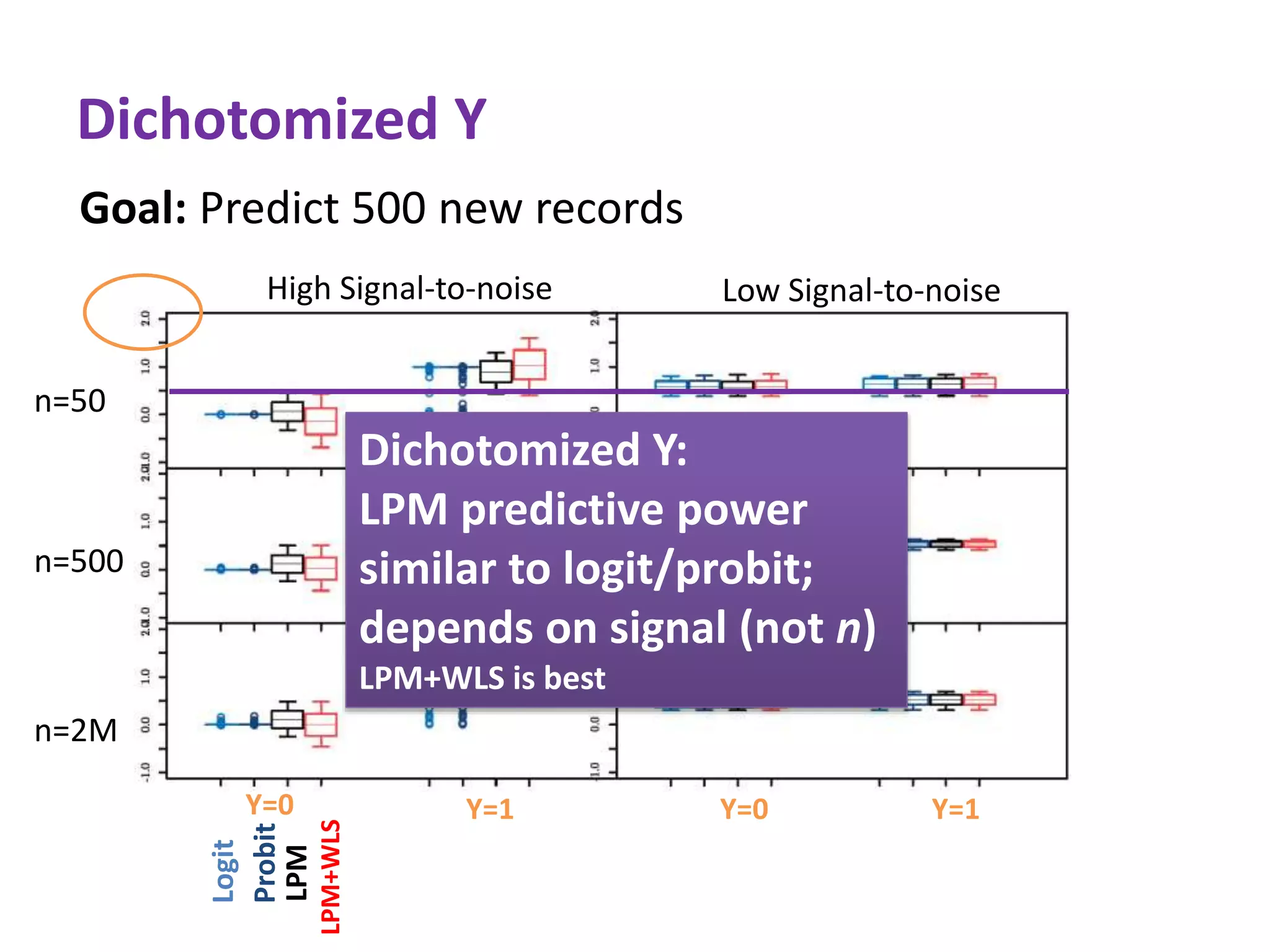

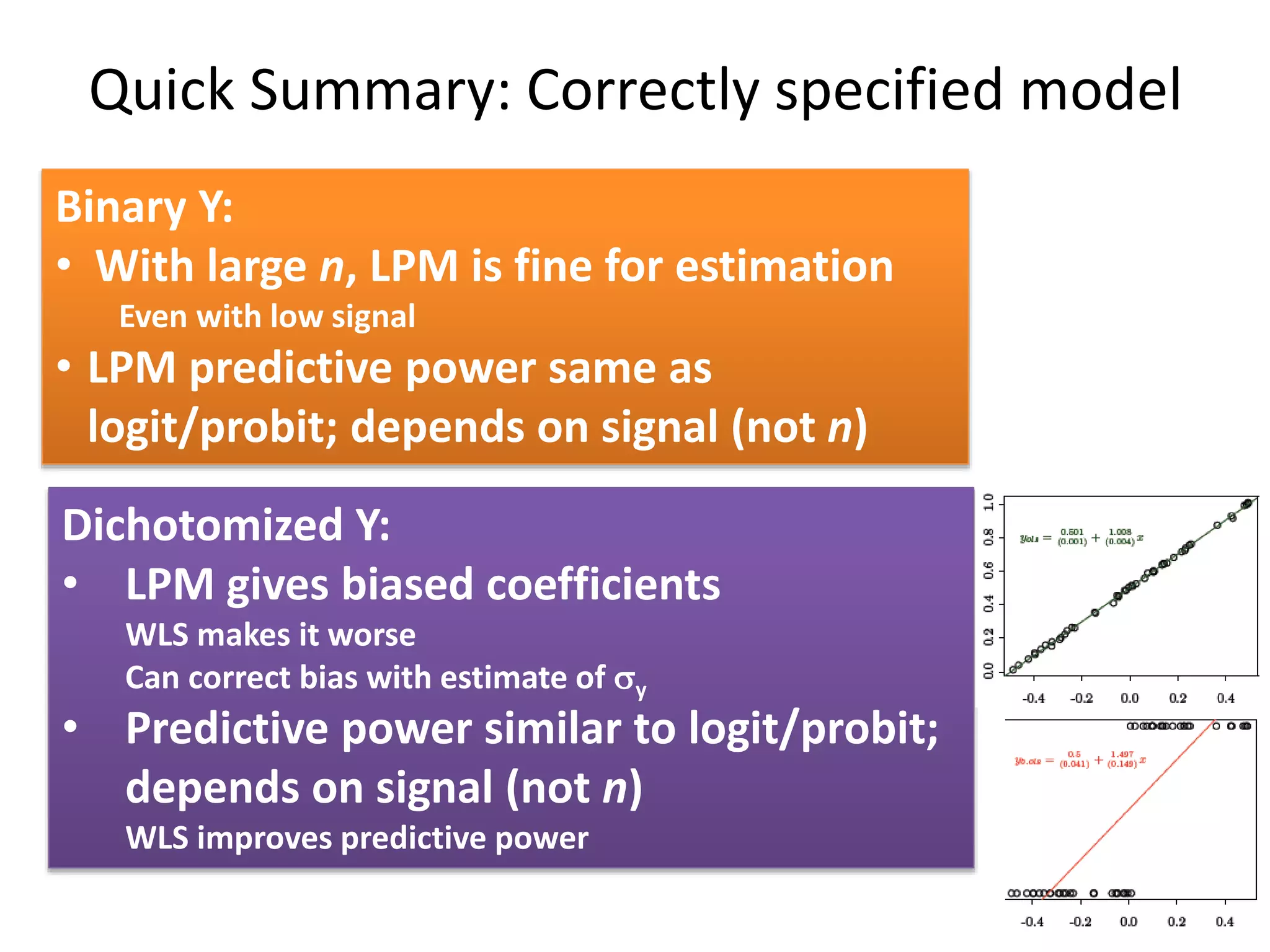

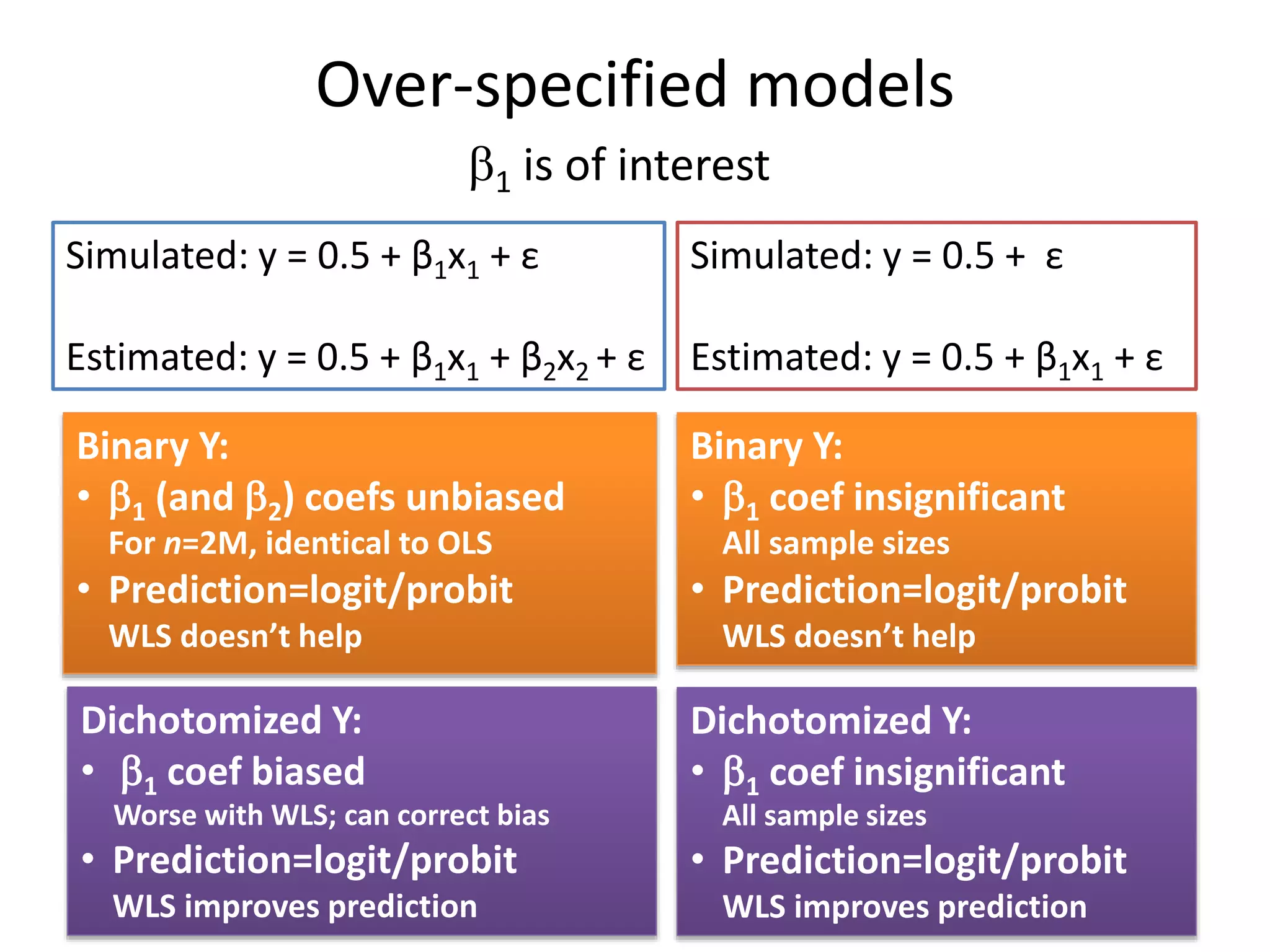

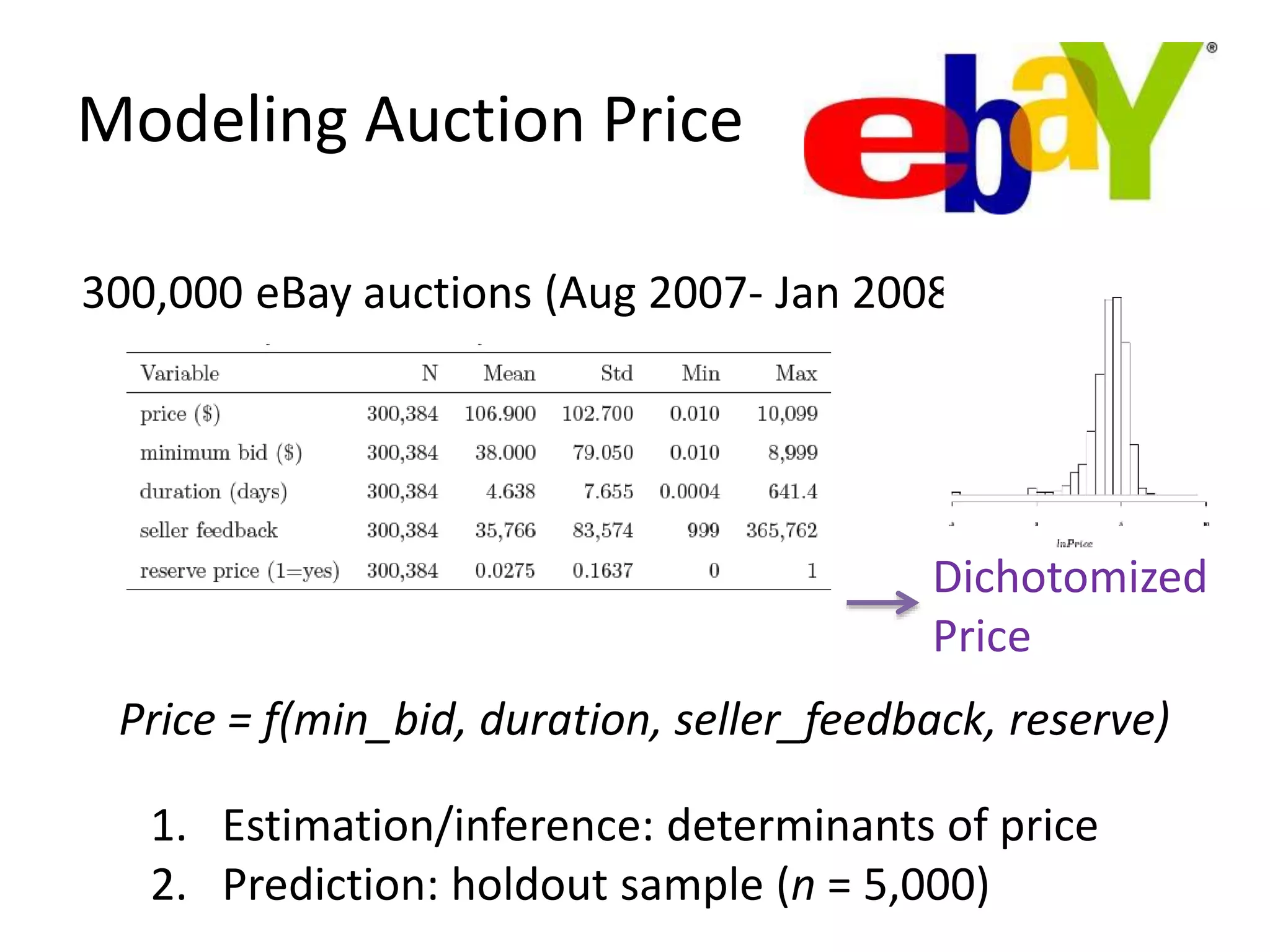

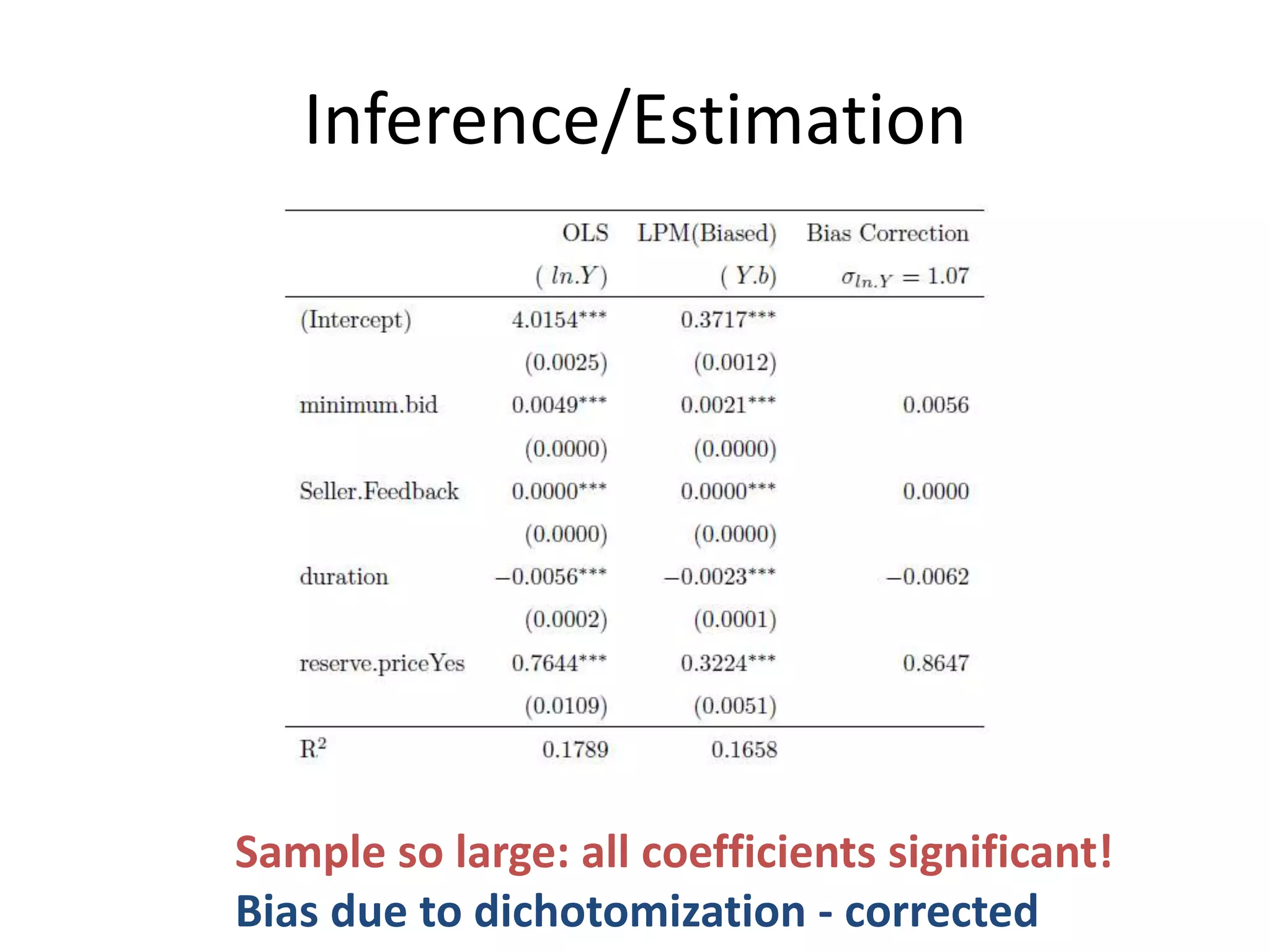

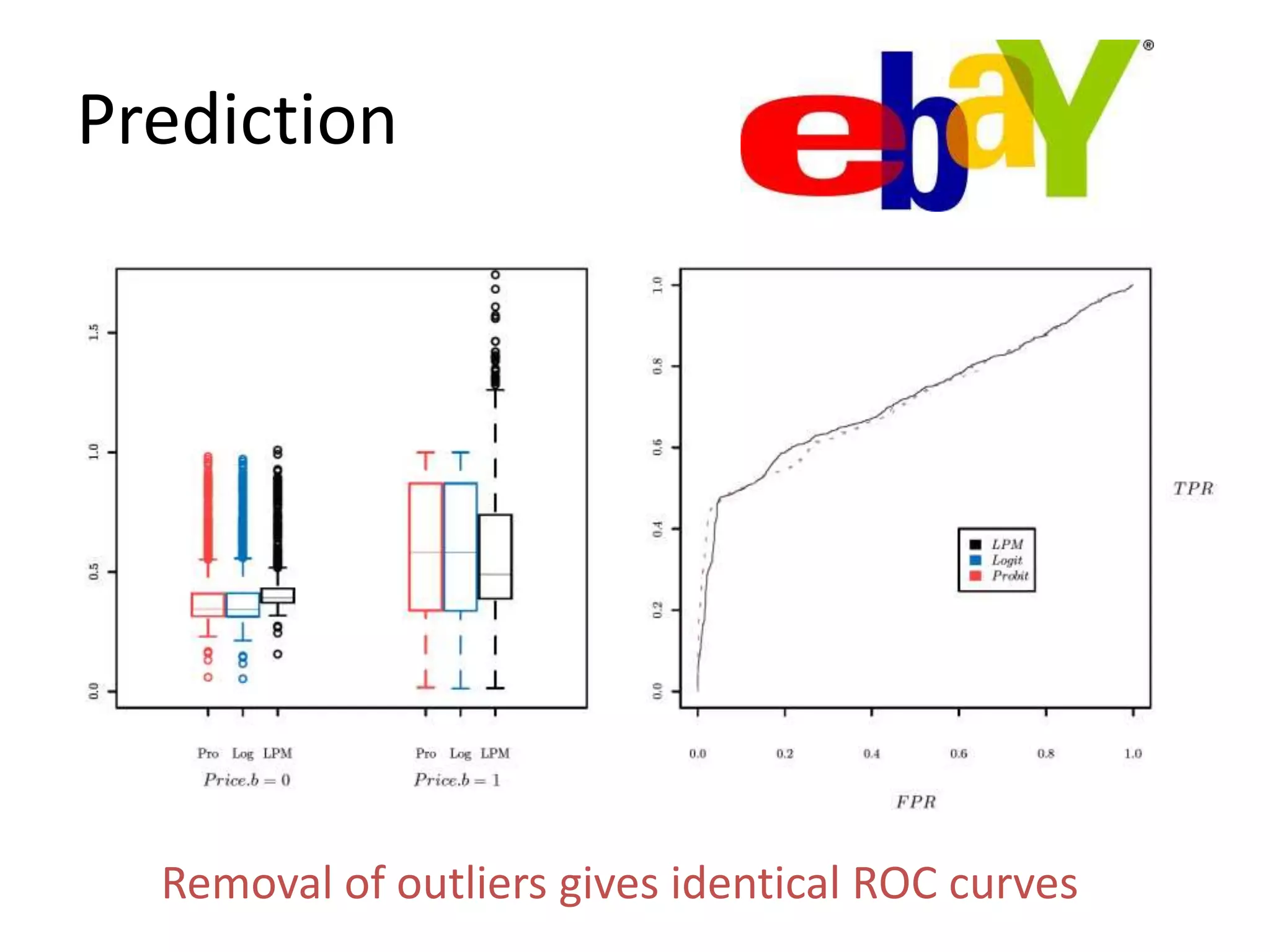

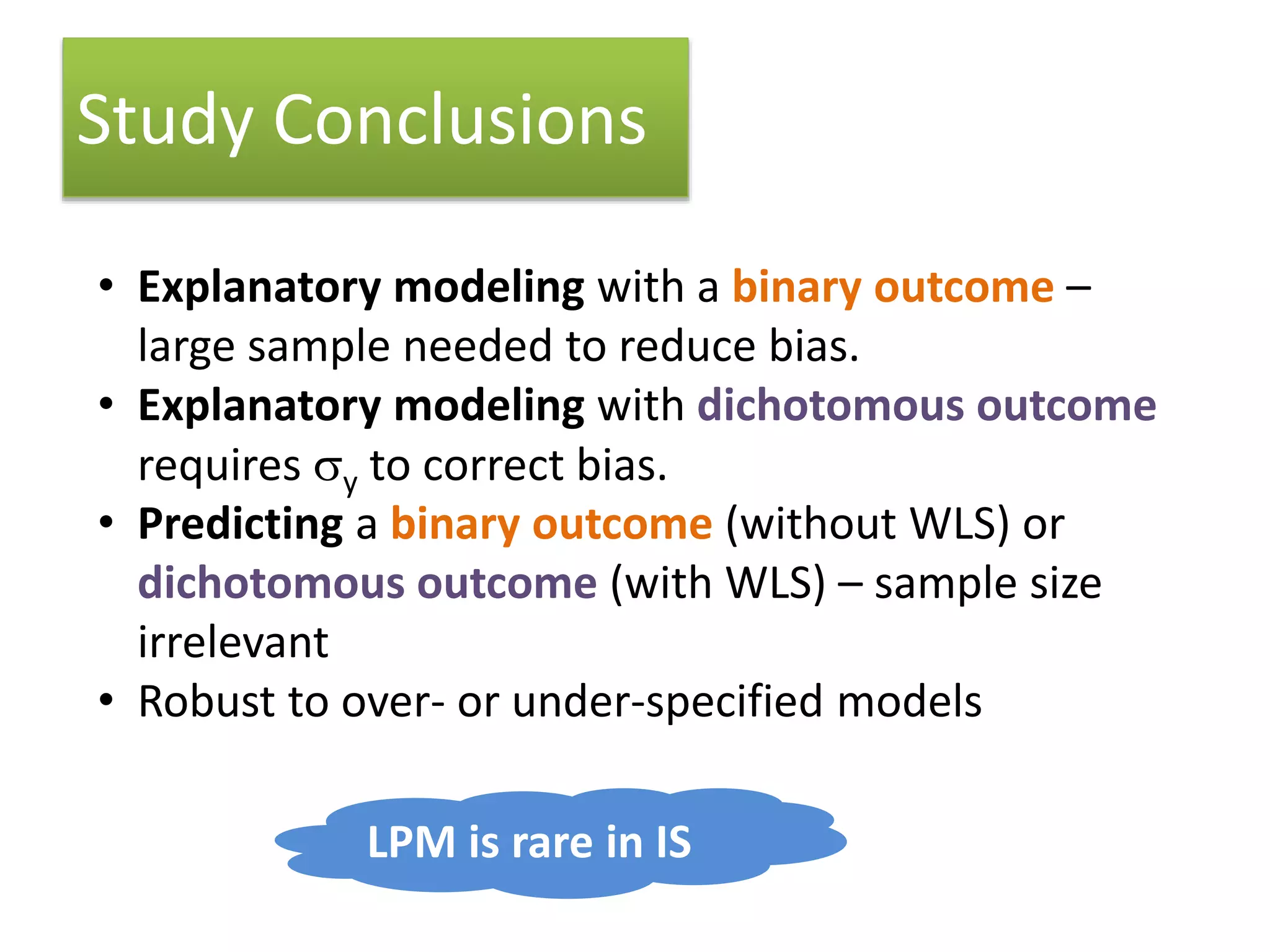

The document discusses the use of linear probability models (LPMs) for binary outcomes. Despite frequent criticism, LPMs are simple to estimate and interpret, and their predictive power is comparable to that of logistic and probit models. The authors present a simulation study that evaluates how well the LPM performs under varying conditions. It finds that large sample sizes can mitigate bias and yield satisfactory estimates. They conclude that although the LPM is rarely used in information systems research, it can be a viable option in certain contexts, particularly when the dataset is sufficiently large.
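The sketch below illustrates the kind of comparison summarized above. It is not the authors' simulation design. It draws binary outcomes from a logistic model with one standard-normal regressor, fits an LPM by ordinary least squares, and fits a logit by Newton-Raphson. It then compares the LPM slope with the average marginal effect (AME) implied by the true and fitted logits. Several things here are hypothetical choices made only for this example: the data-generating process, the coefficients (`beta0=-0.5`, `beta1=1.0`), the sample sizes, and the helper names (`simulate`, `fit_lpm`, `fit_logit`, `average_marginal_effect`, `compare`). With a single normal regressor, Stein's lemma implies that the population OLS slope equals the AME, so the sampling gap between the two should shrink as n grows. Even so, some fitted LPM values can still fall outside [0, 1].

```python
import numpy as np


def sigmoid(z):
    """Logistic CDF, used for both the true model and the fitted logit."""
    return 1.0 / (1.0 + np.exp(-z))


def simulate(n, beta0=-0.5, beta1=1.0, seed=0):
    """Hypothetical DGP: x ~ N(0, 1), y ~ Bernoulli(sigmoid(beta0 + beta1 * x))."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)
    y = rng.binomial(1, sigmoid(beta0 + beta1 * x)).astype(float)
    return x, y


def design(x):
    """Design matrix with an intercept column."""
    return np.column_stack([np.ones_like(x), x])


def fit_lpm(x, y):
    """Linear probability model: OLS regression of the 0/1 outcome on x."""
    coef, *_ = np.linalg.lstsq(design(x), y, rcond=None)
    return coef


def fit_logit(x, y, iters=25):
    """Logistic regression via Newton-Raphson on the log-likelihood."""
    X = design(x)
    b = np.zeros(X.shape[1])
    for _ in range(iters):
        p = sigmoid(X @ b)
        grad = X.T @ (y - p)                        # score vector
        hess = X.T @ (X * (p * (1 - p))[:, None])   # negative Hessian (X'WX)
        b = b + np.linalg.solve(hess, grad)
    return b


def average_marginal_effect(b, x):
    """Sample mean of dP/dx = b1 * p * (1 - p) under a logit with coefficients b."""
    p = sigmoid(b[0] + b[1] * x)
    return float(np.mean(b[1] * p * (1 - p)))


def compare(n, seed=0, beta=(-0.5, 1.0)):
    """Fit LPM and logit on one simulated sample and summarize the comparison."""
    x, y = simulate(n, *beta, seed=seed)
    lpm = fit_lpm(x, y)
    logit = fit_logit(x, y)
    fitted_lpm = design(x) @ lpm
    fitted_logit = sigmoid(design(x) @ logit)
    return {
        "n": n,
        "true_ame": average_marginal_effect(np.array(beta), x),
        "lpm_slope": float(lpm[1]),
        "logit_ame": average_marginal_effect(logit, x),
        # Share of LPM fitted values that are not valid probabilities
        "share_outside_01": float(np.mean((fitted_lpm < 0) | (fitted_lpm > 1))),
        # Agreement between LPM and logit predicted probabilities
        "corr_fitted": float(np.corrcoef(fitted_lpm, fitted_logit)[0, 1]),
    }


if __name__ == "__main__":
    for n in (200, 2_000, 200_000):
        print(compare(n))
```

Running the script prints one summary per sample size. At small n the LPM slope scatters around the true AME, and at large n it settles close to it. The logit is fitted with a hand-written Newton-Raphson loop only to keep the sketch dependent on NumPy alone. A real analysis would more likely use an established package and heteroskedasticity-robust standard errors for the LPM.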