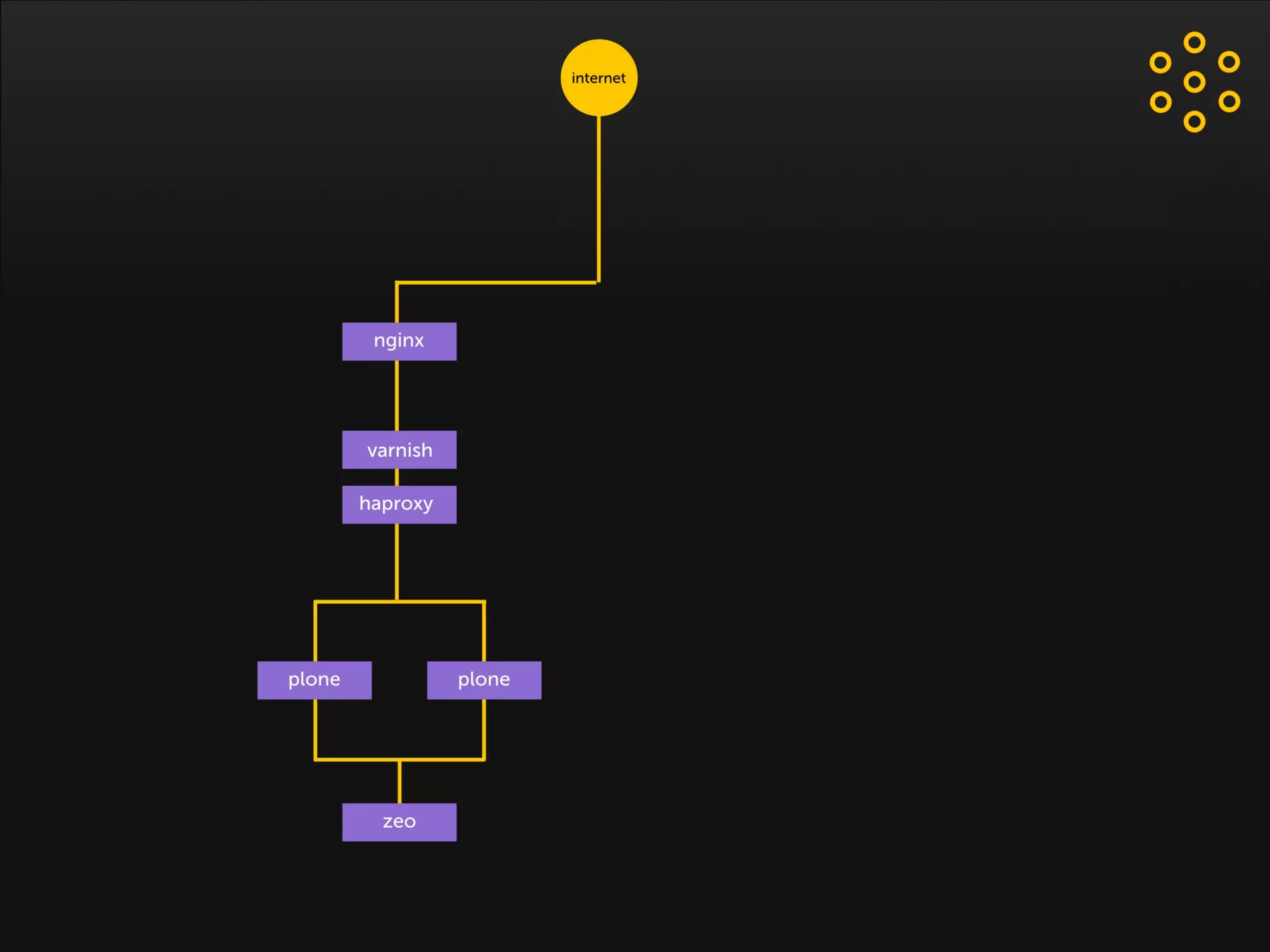

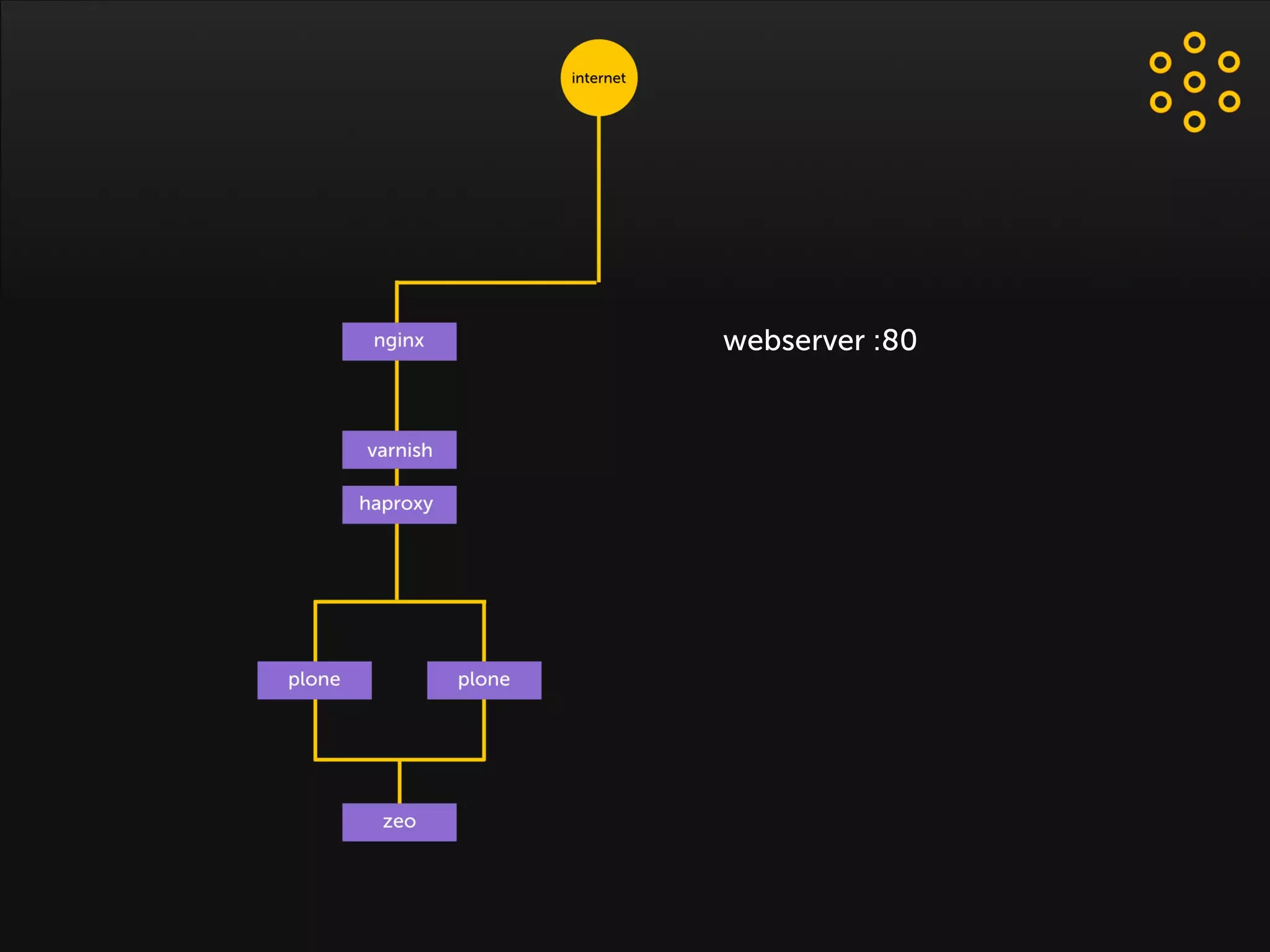

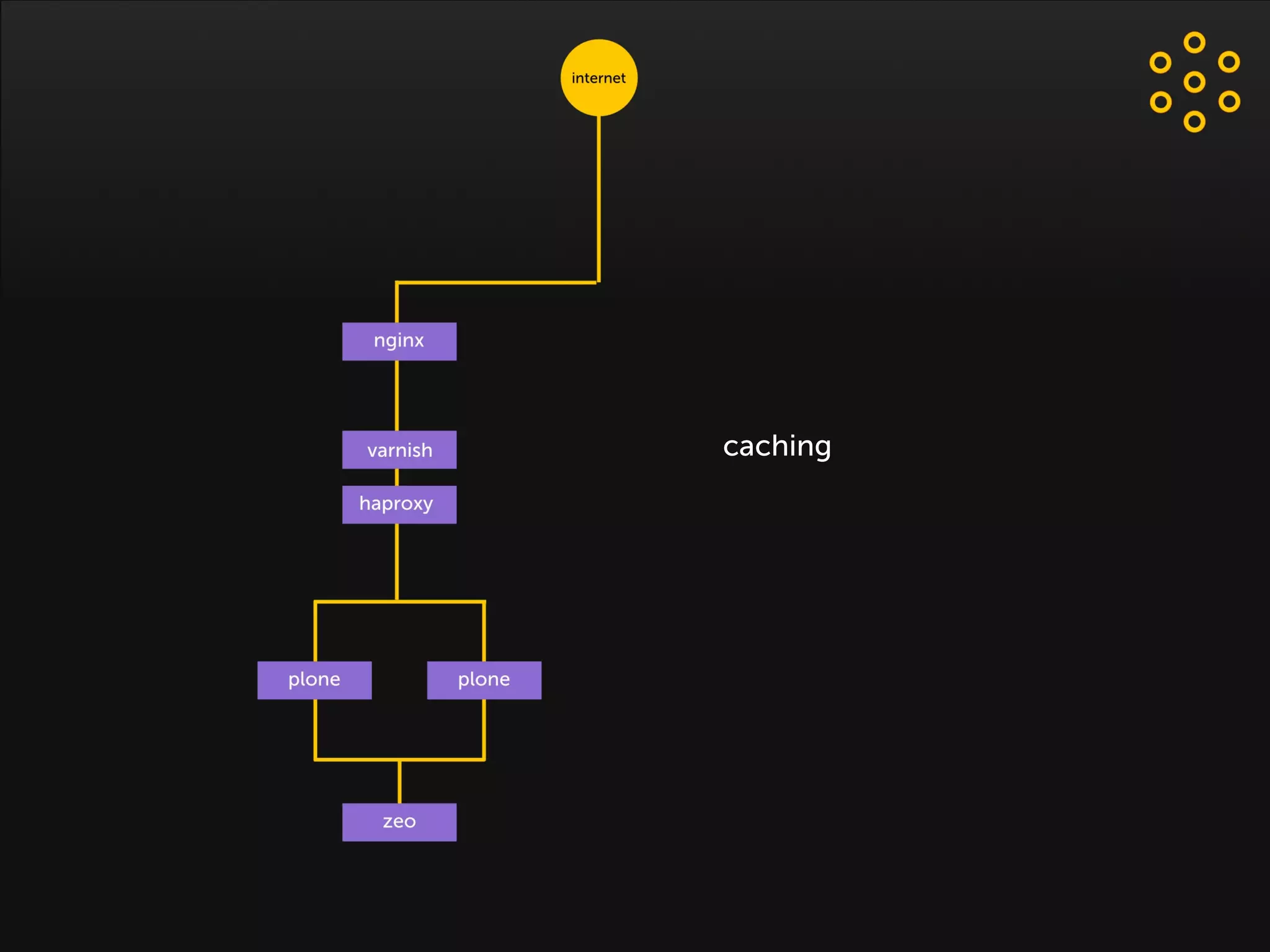

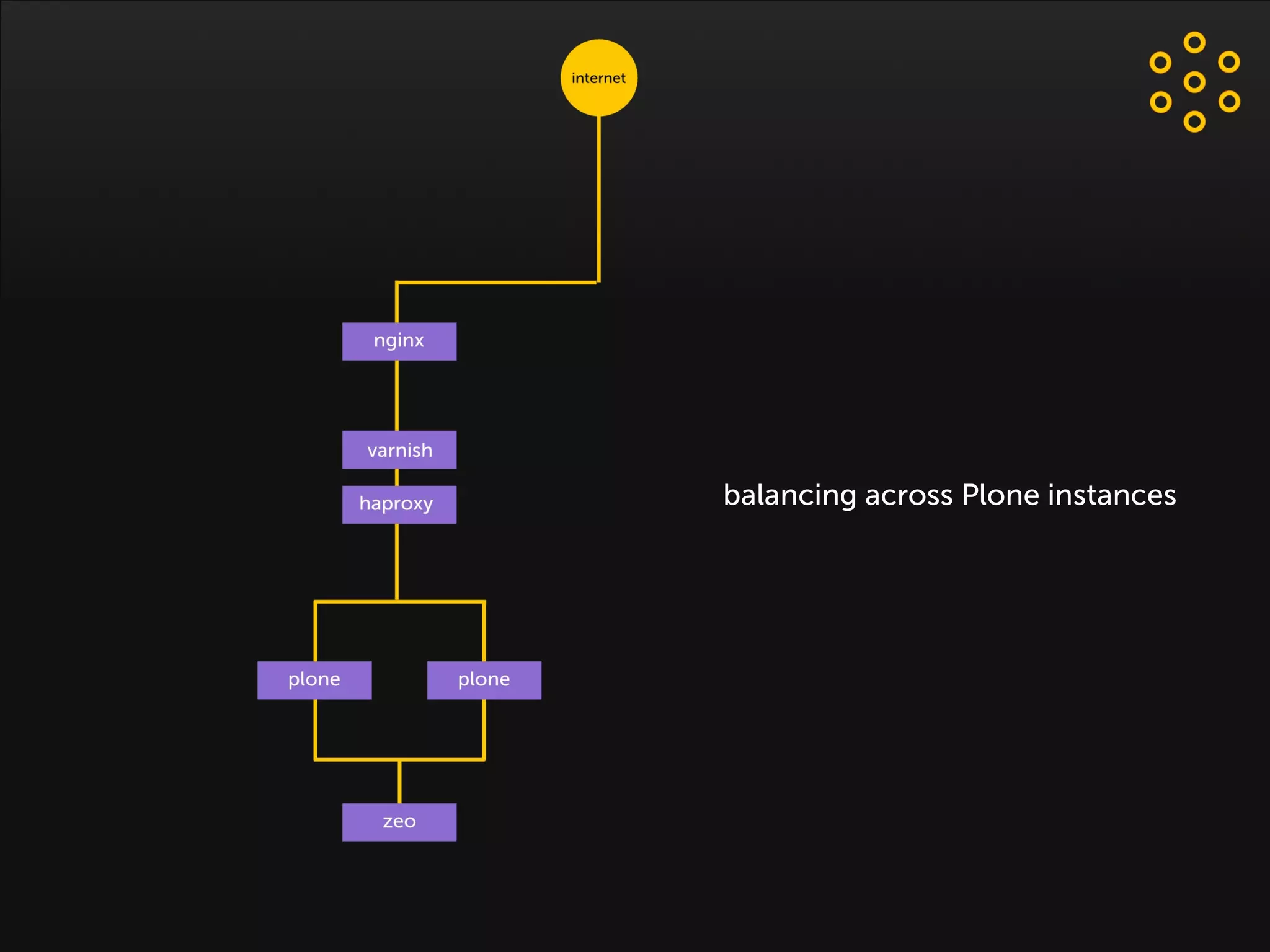

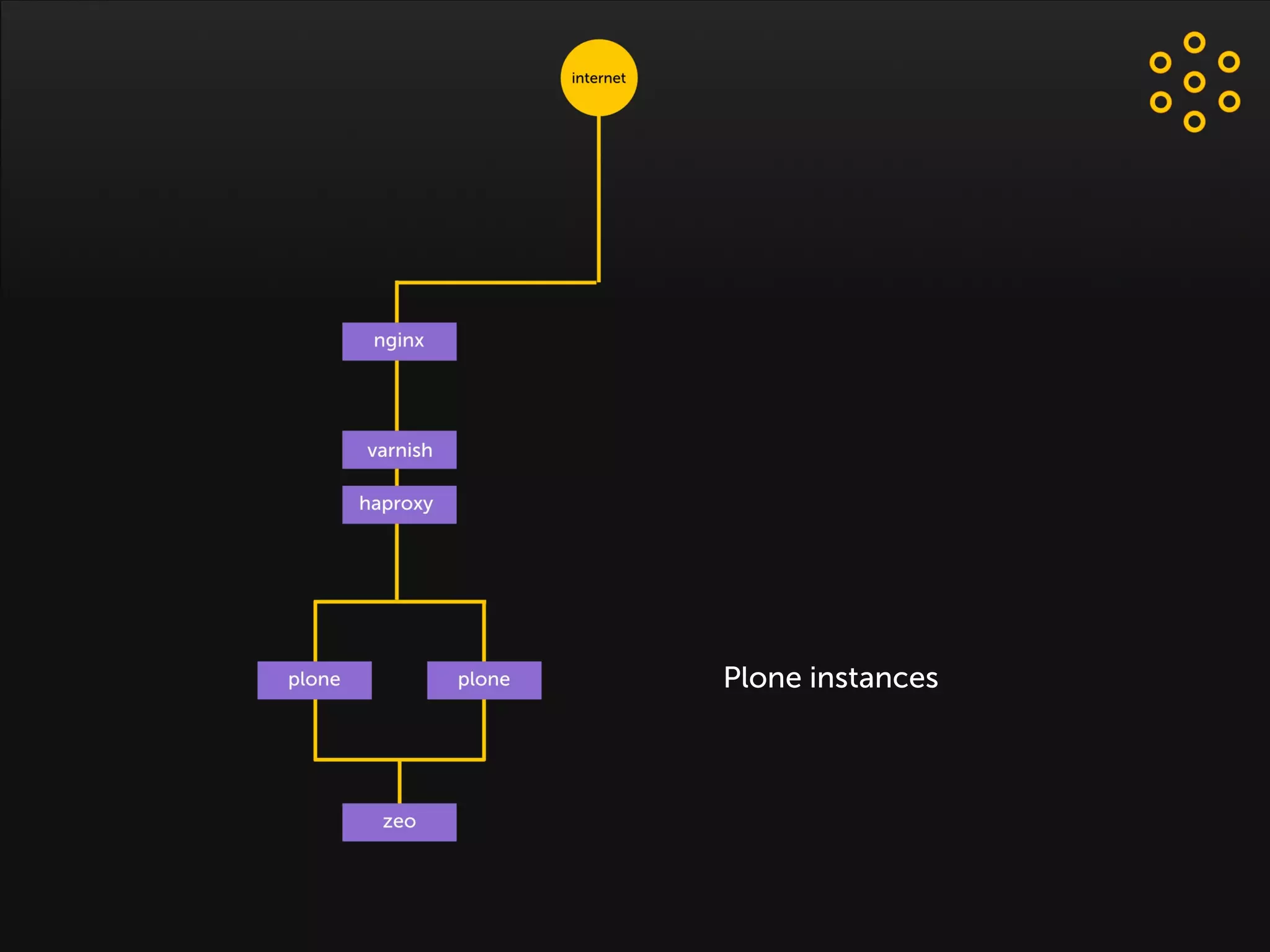

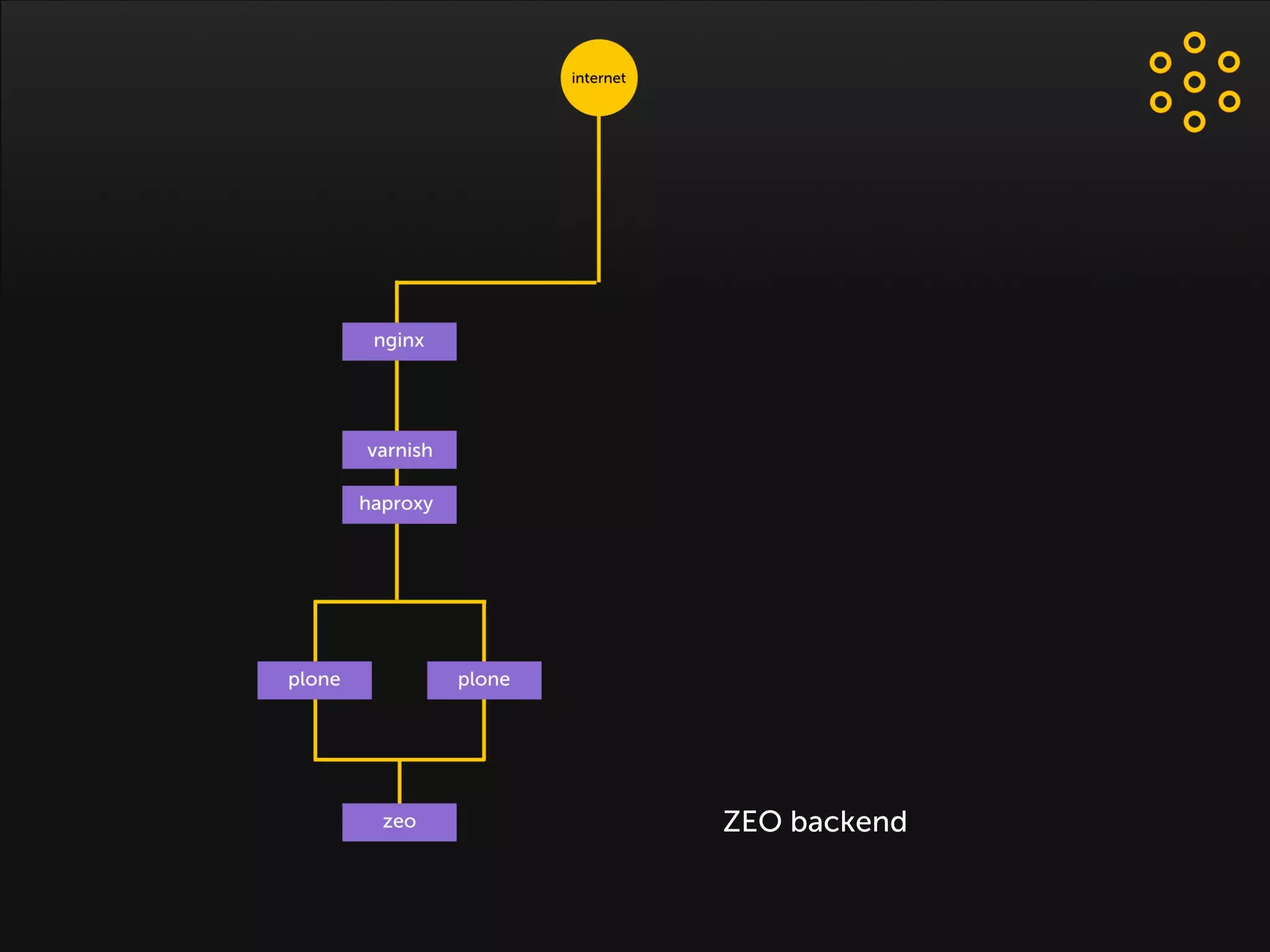

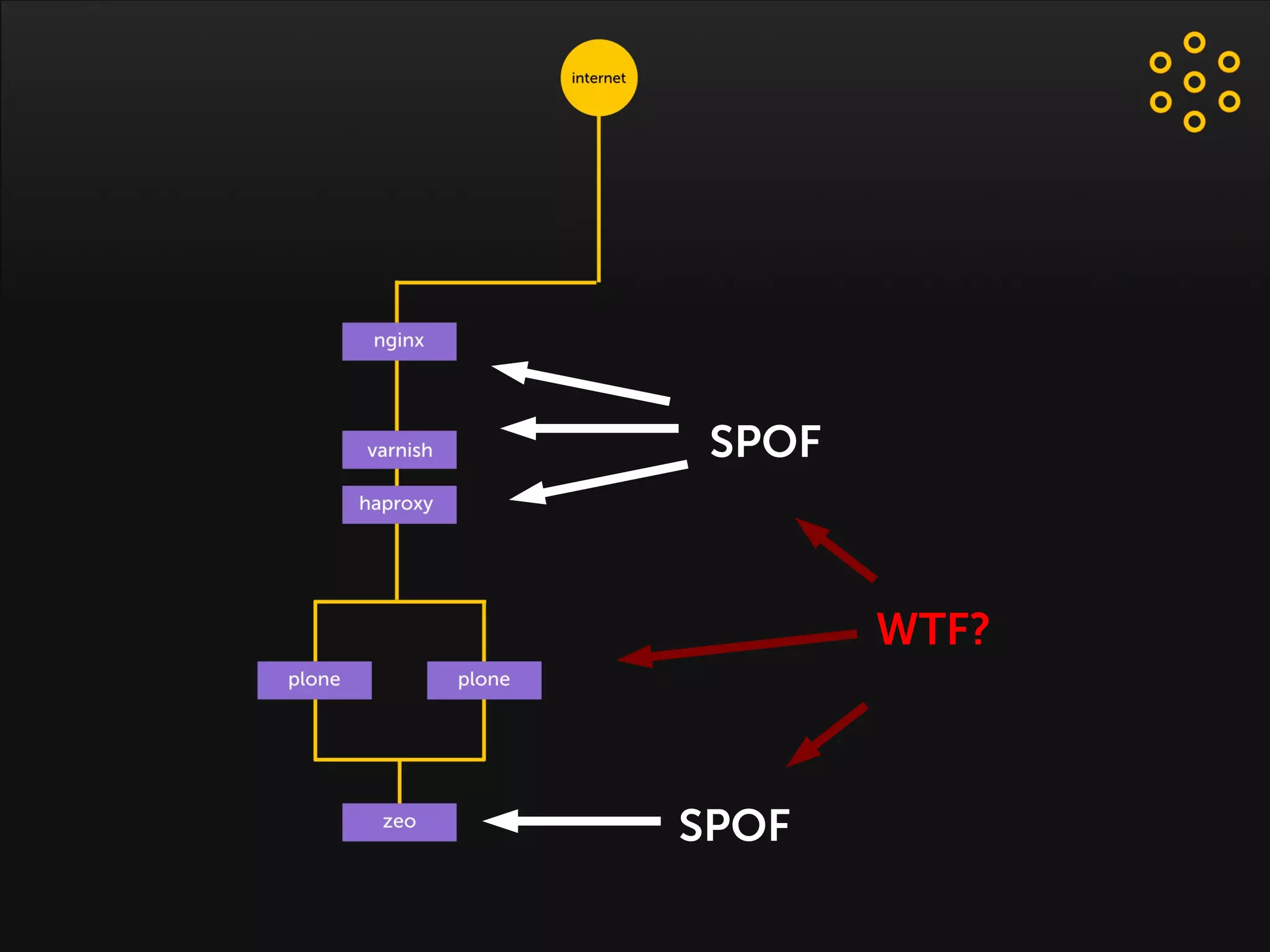

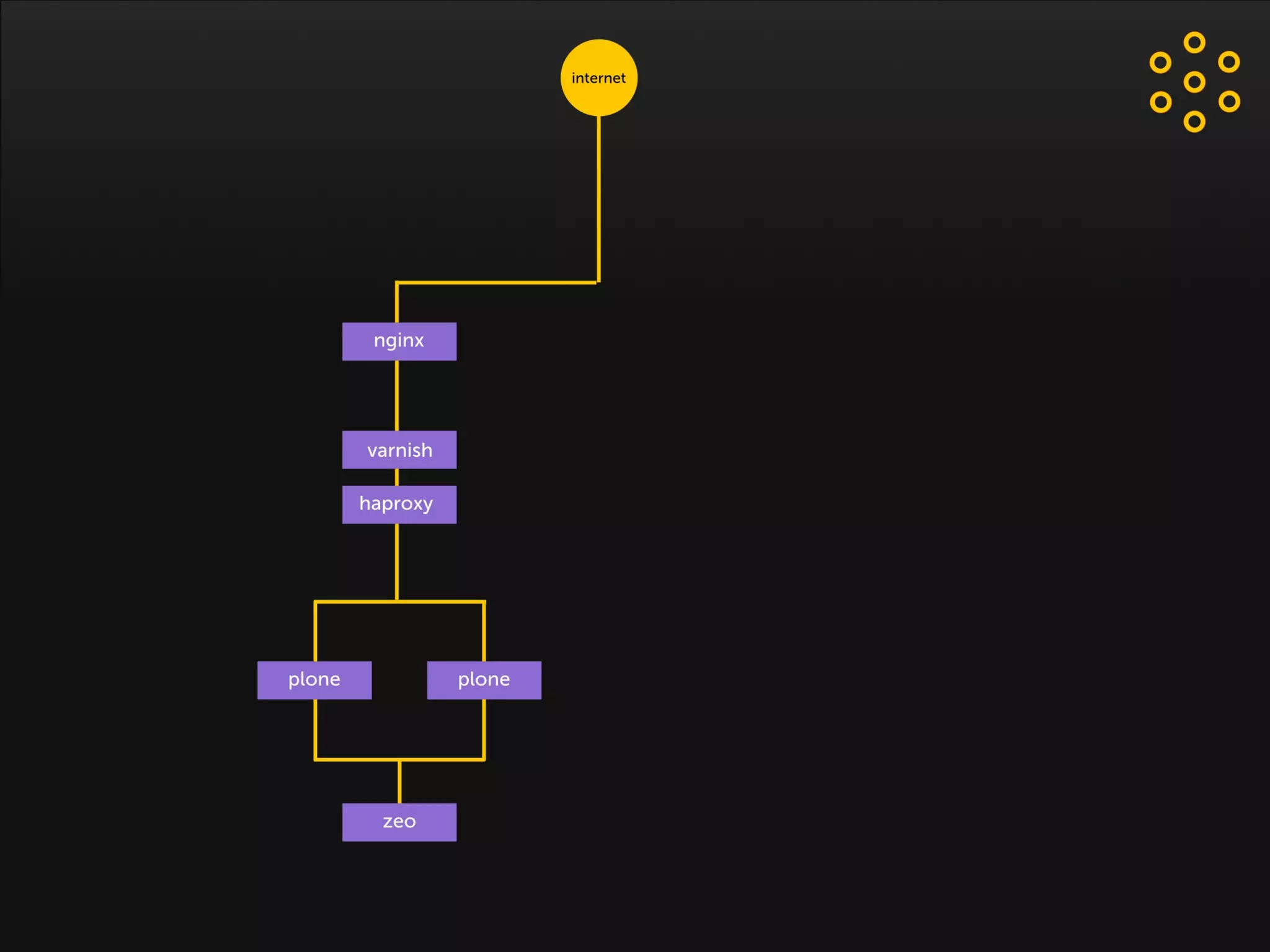

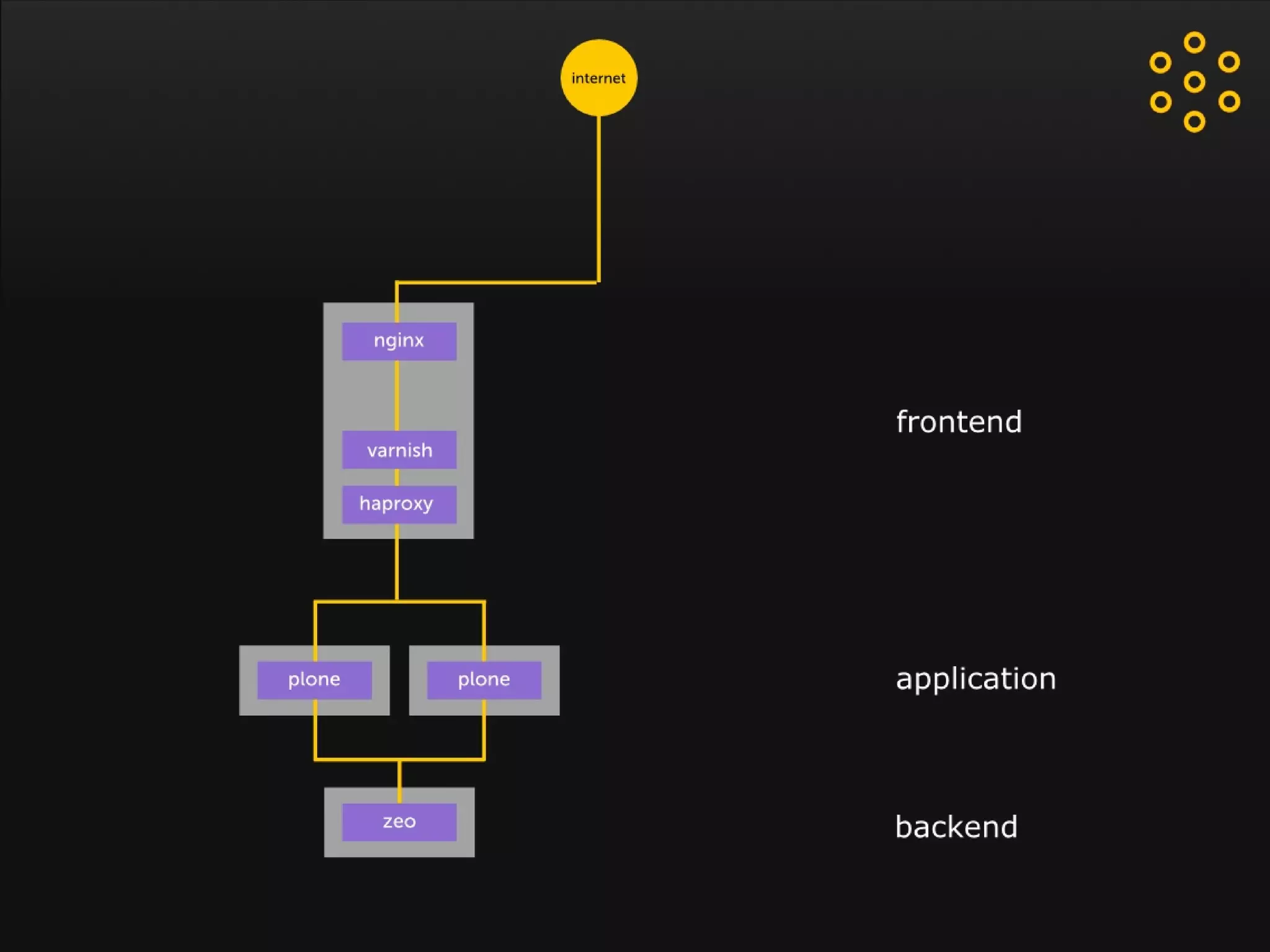

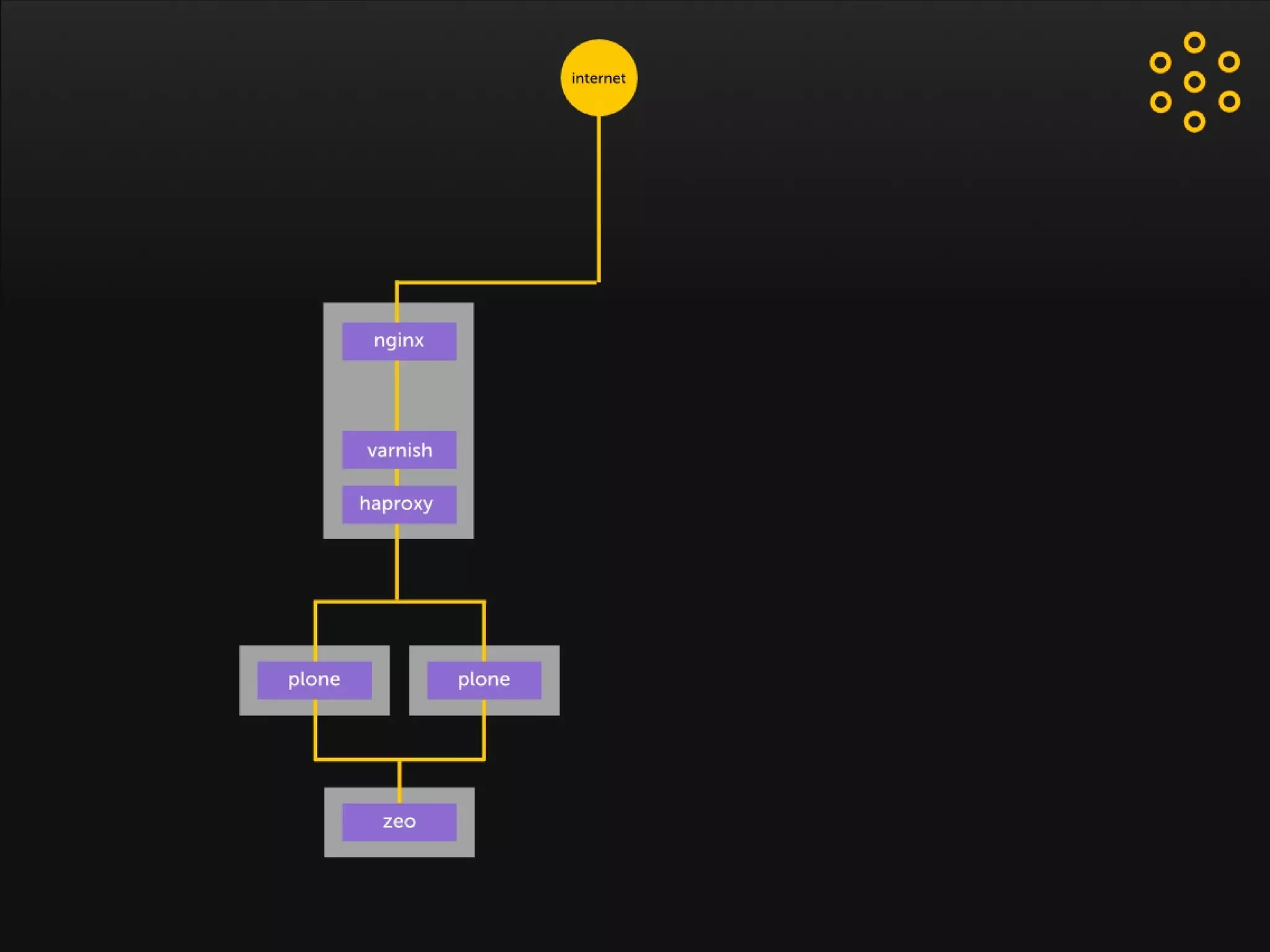

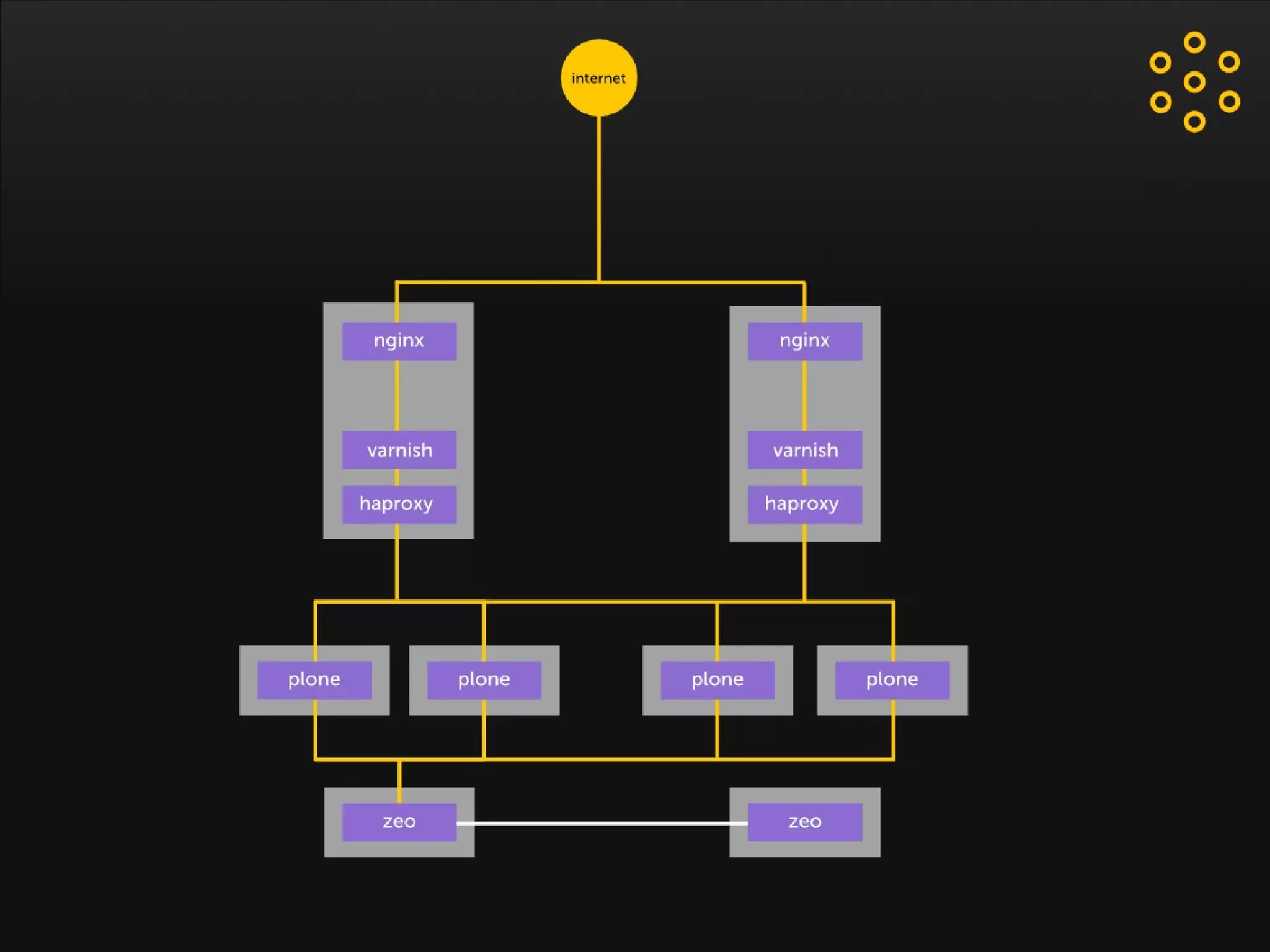

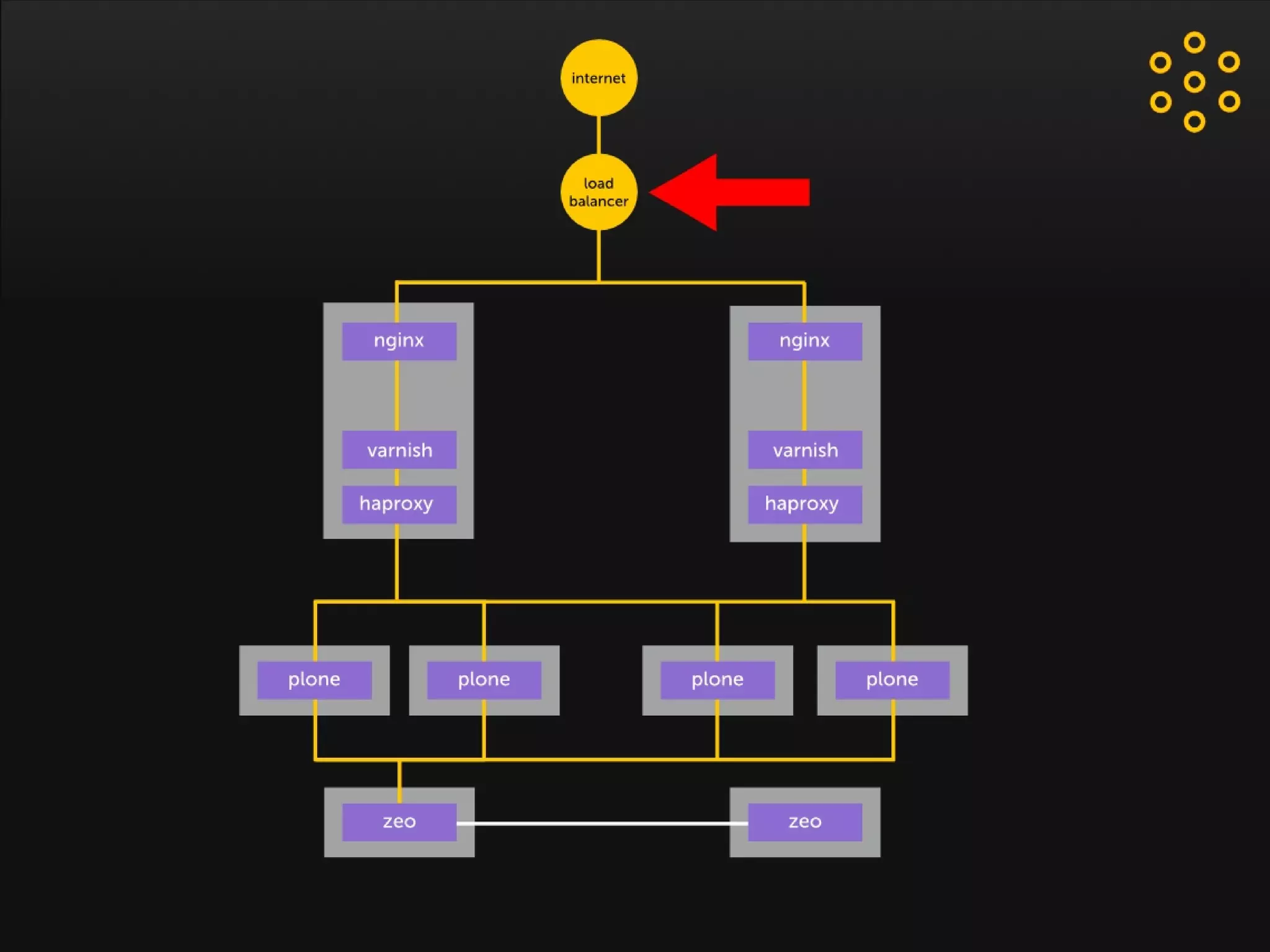

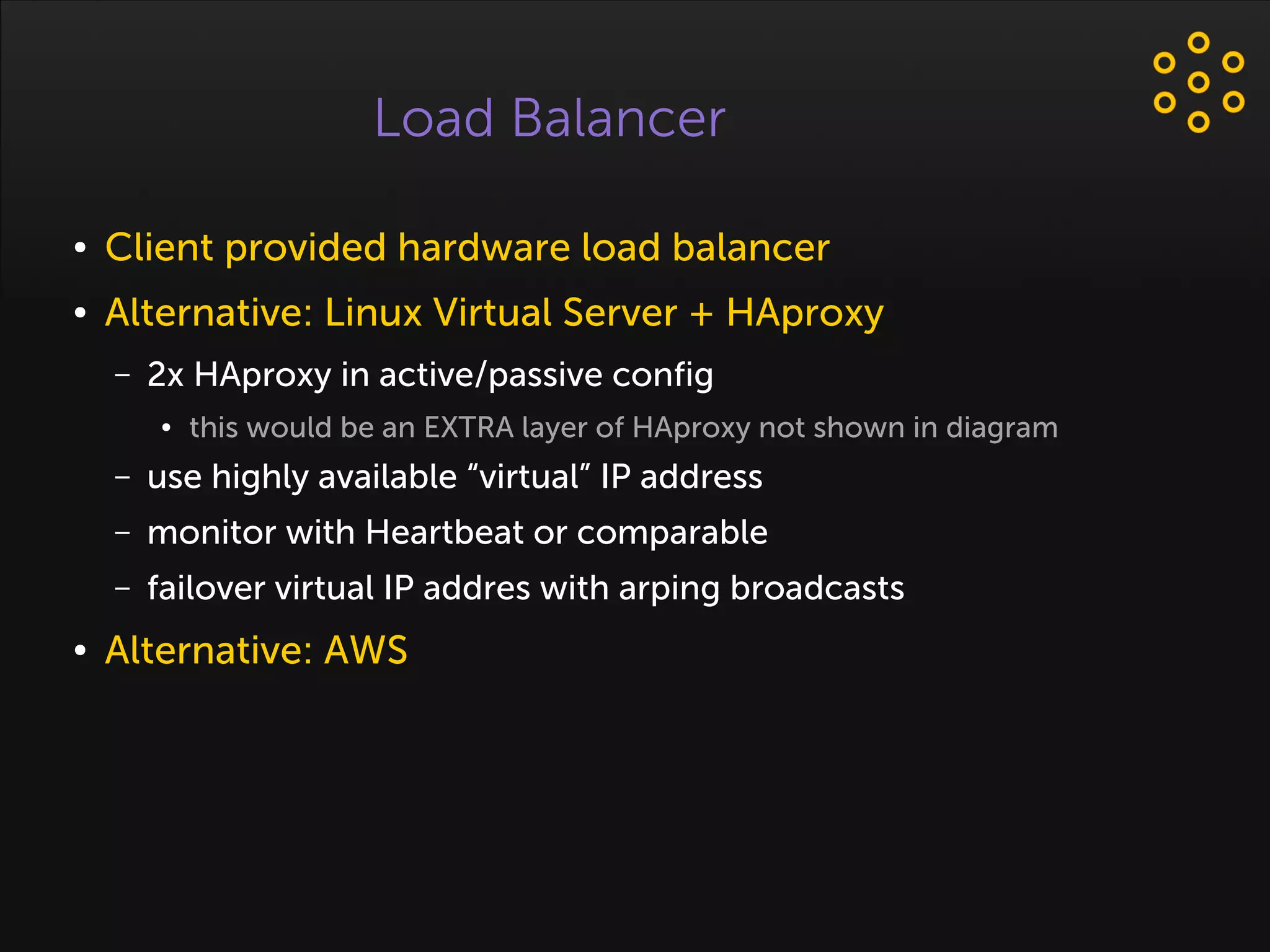

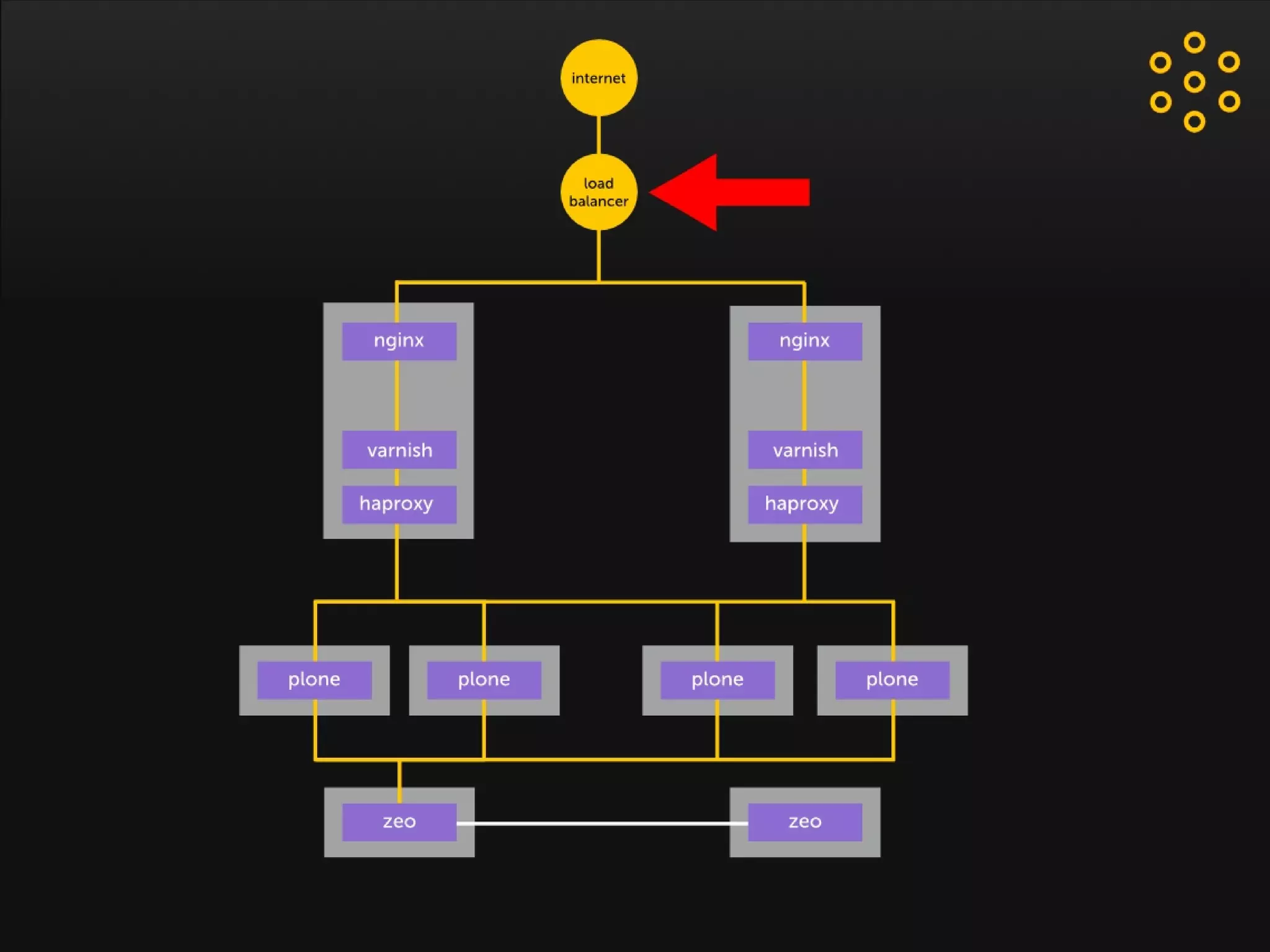

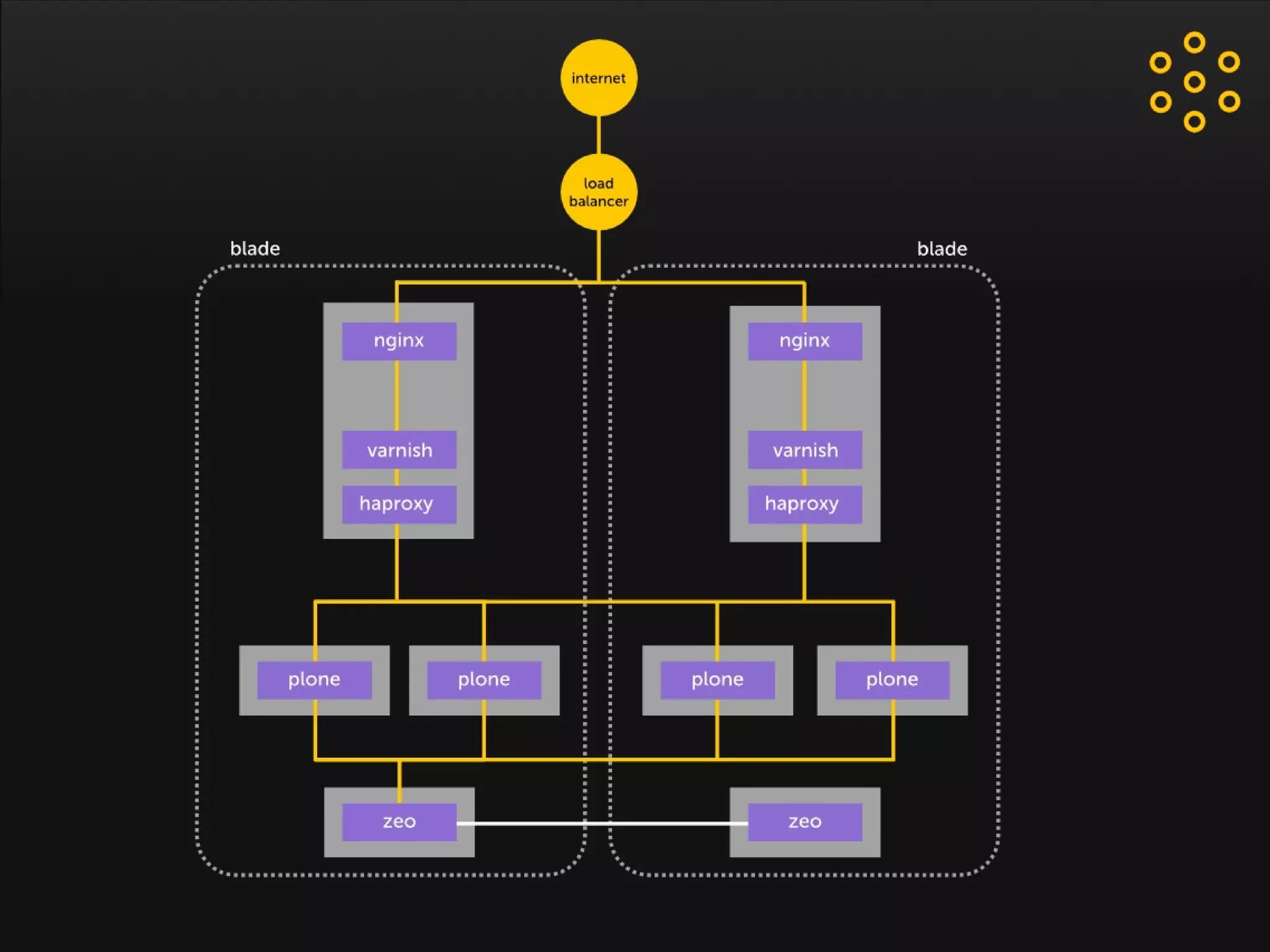

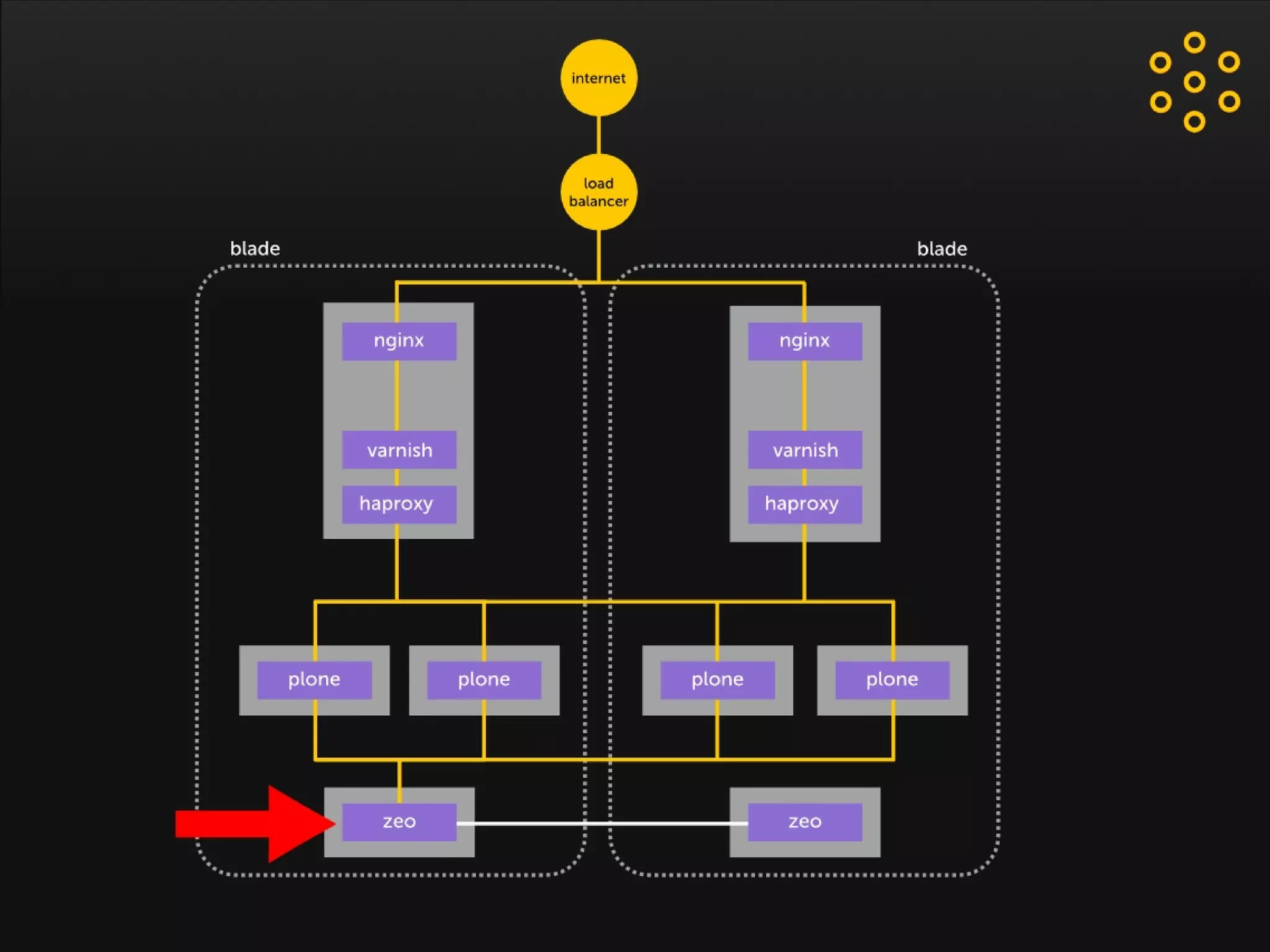

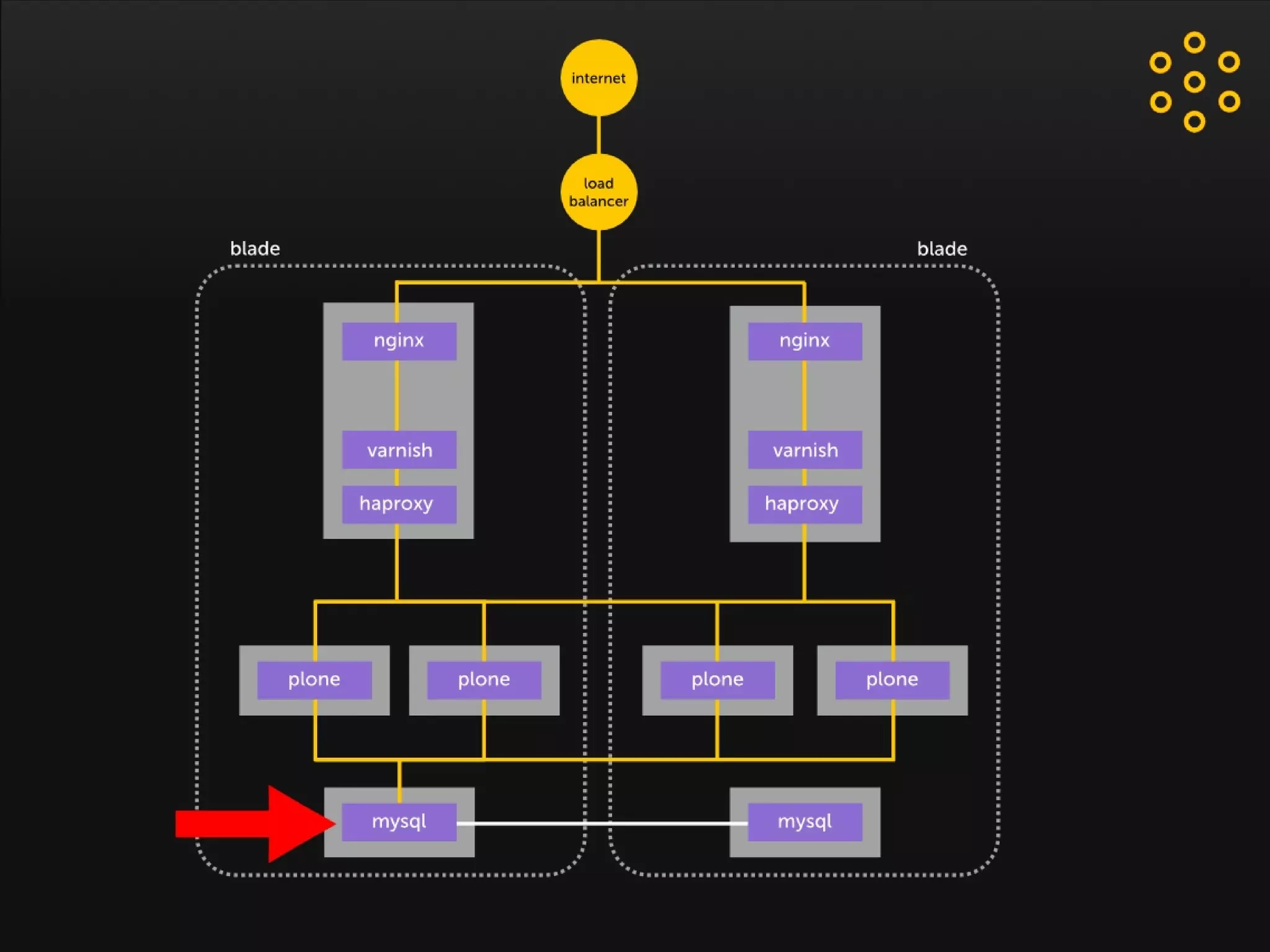

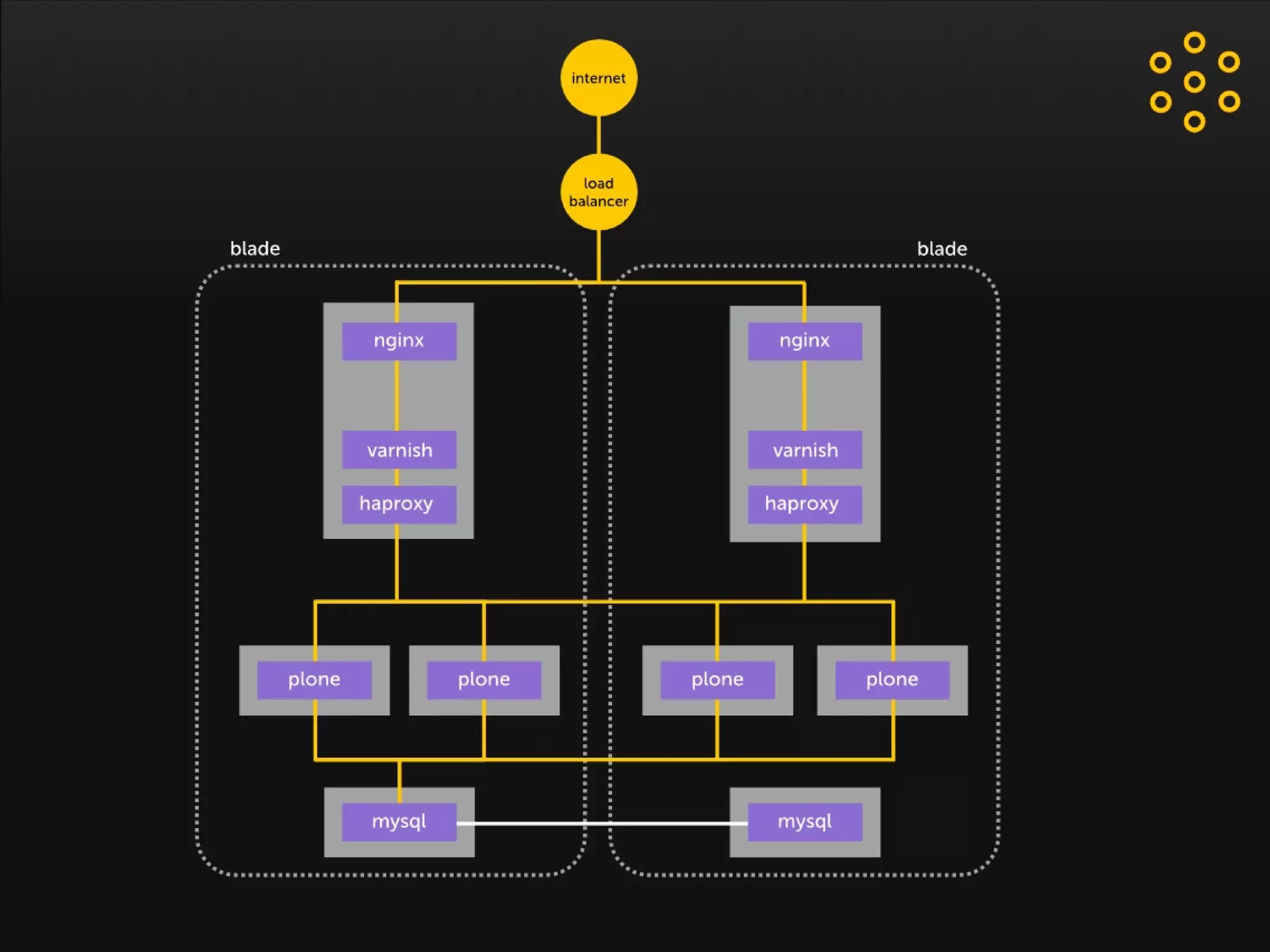

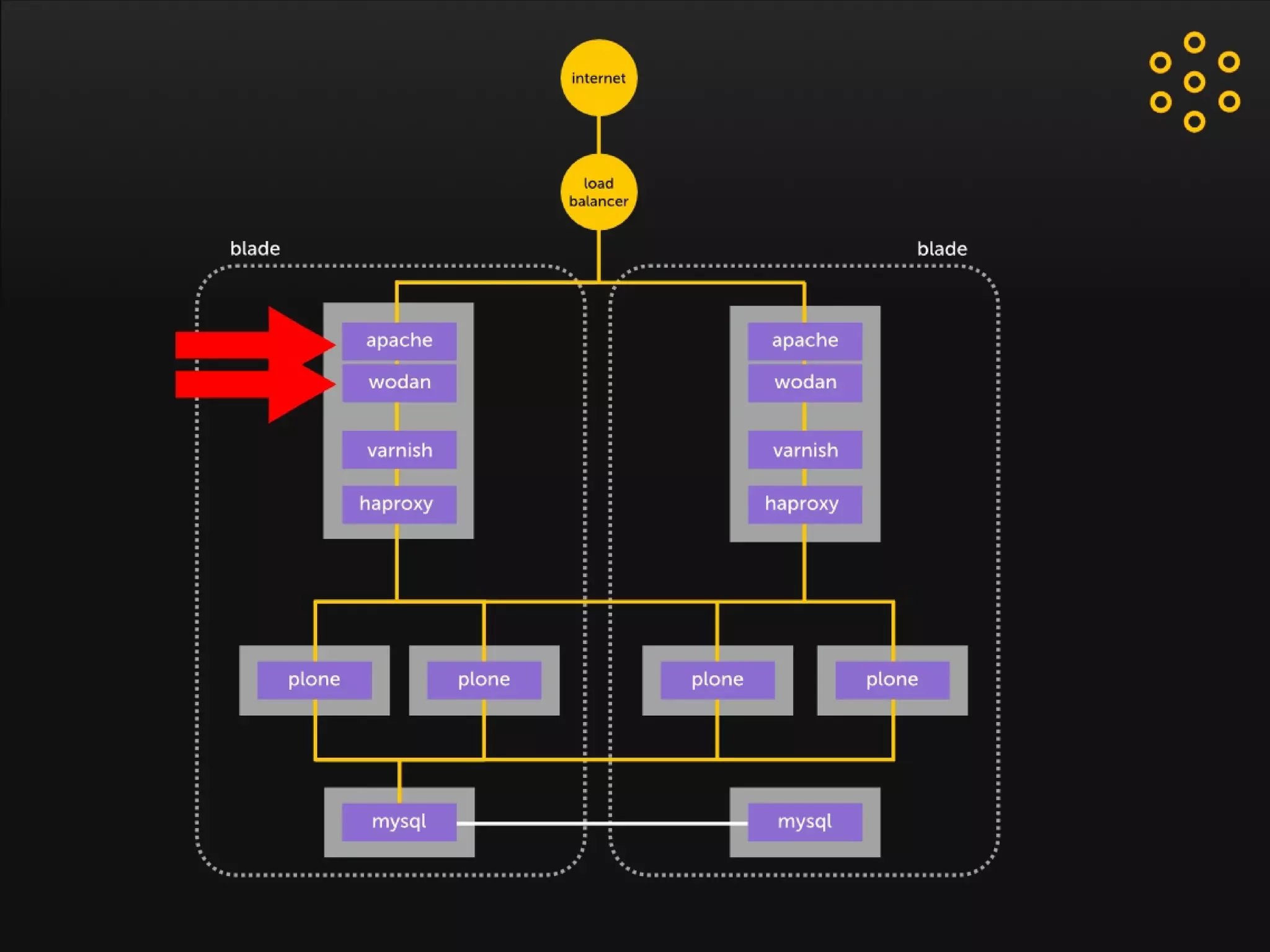

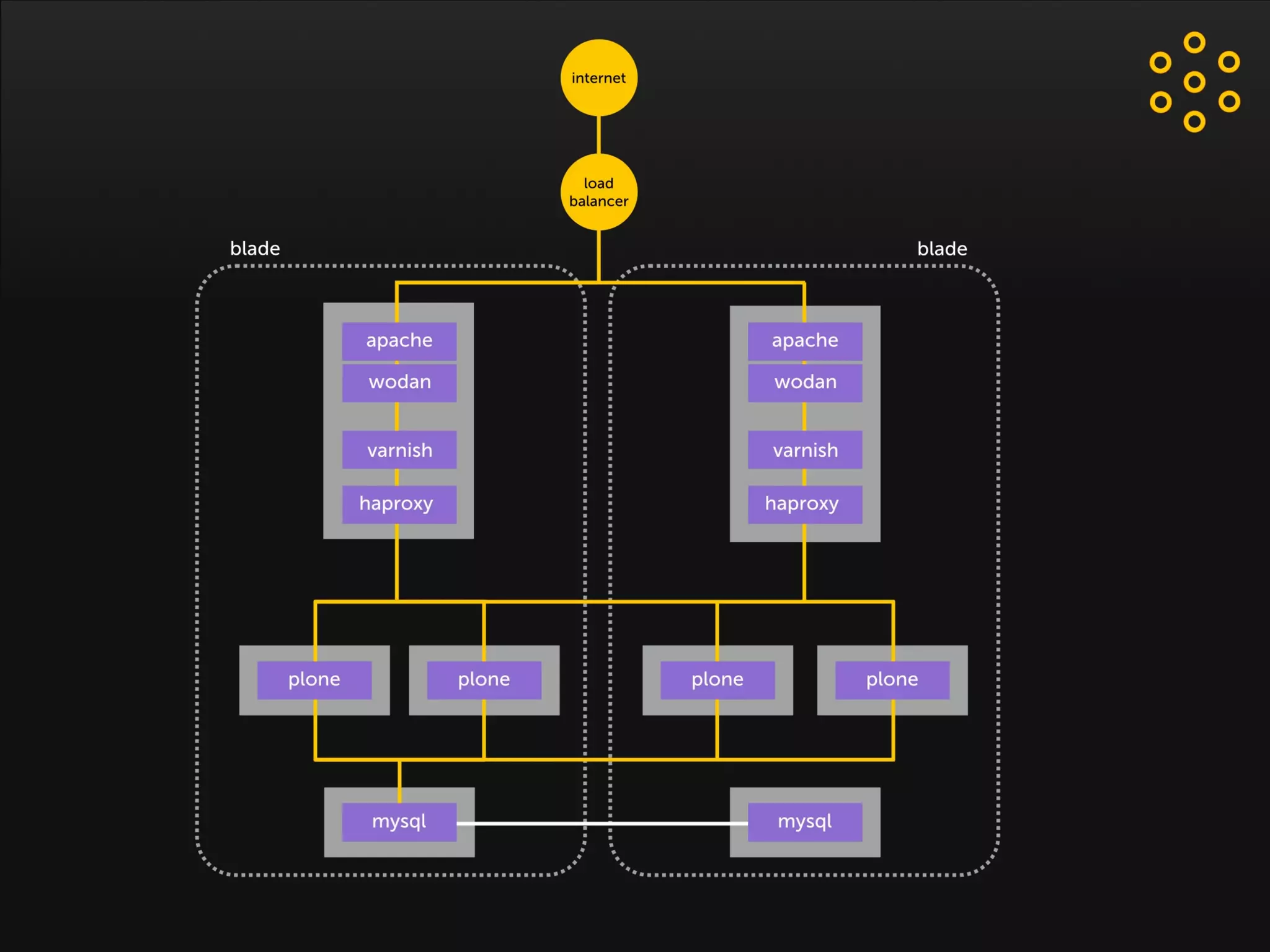

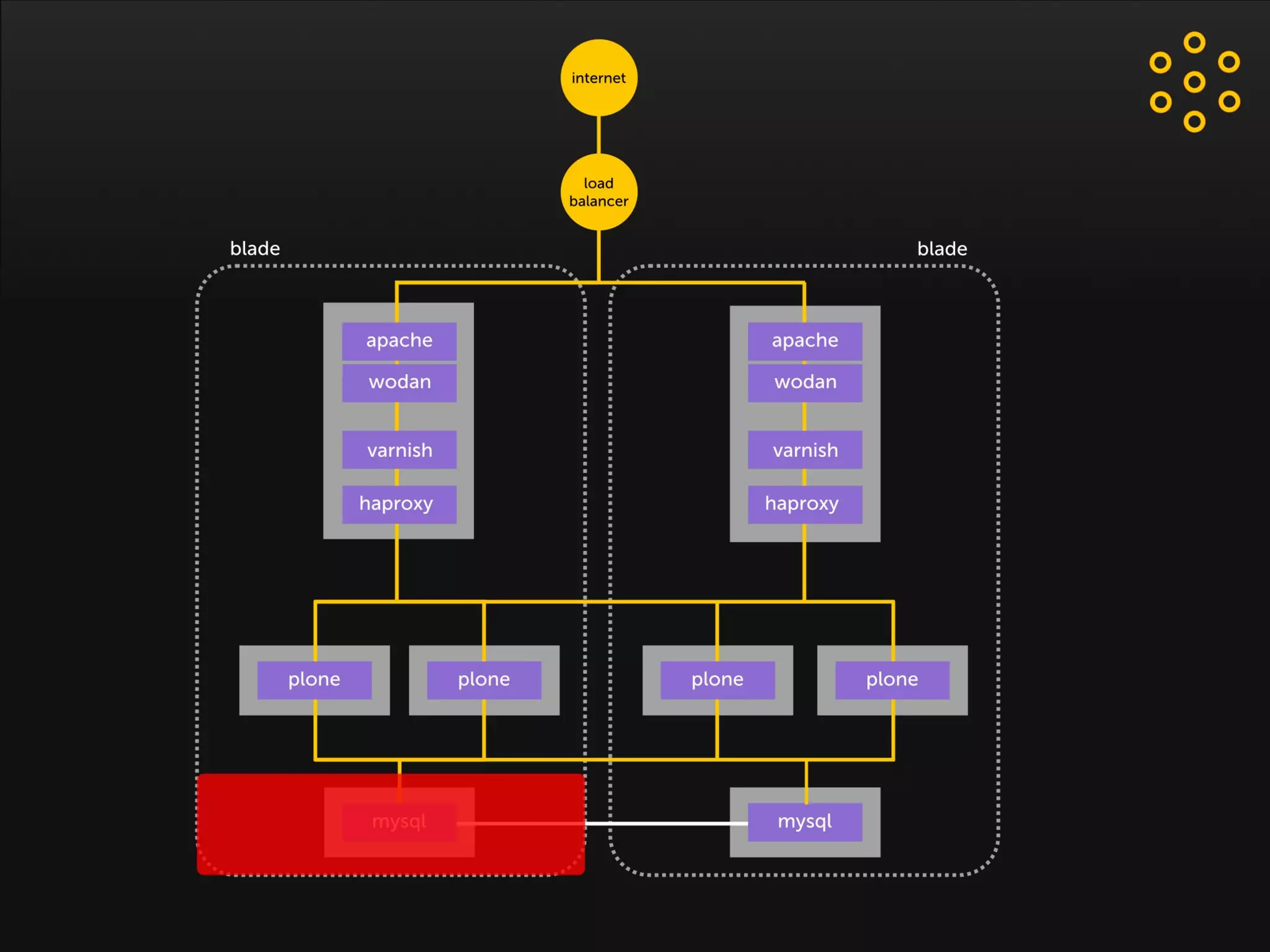

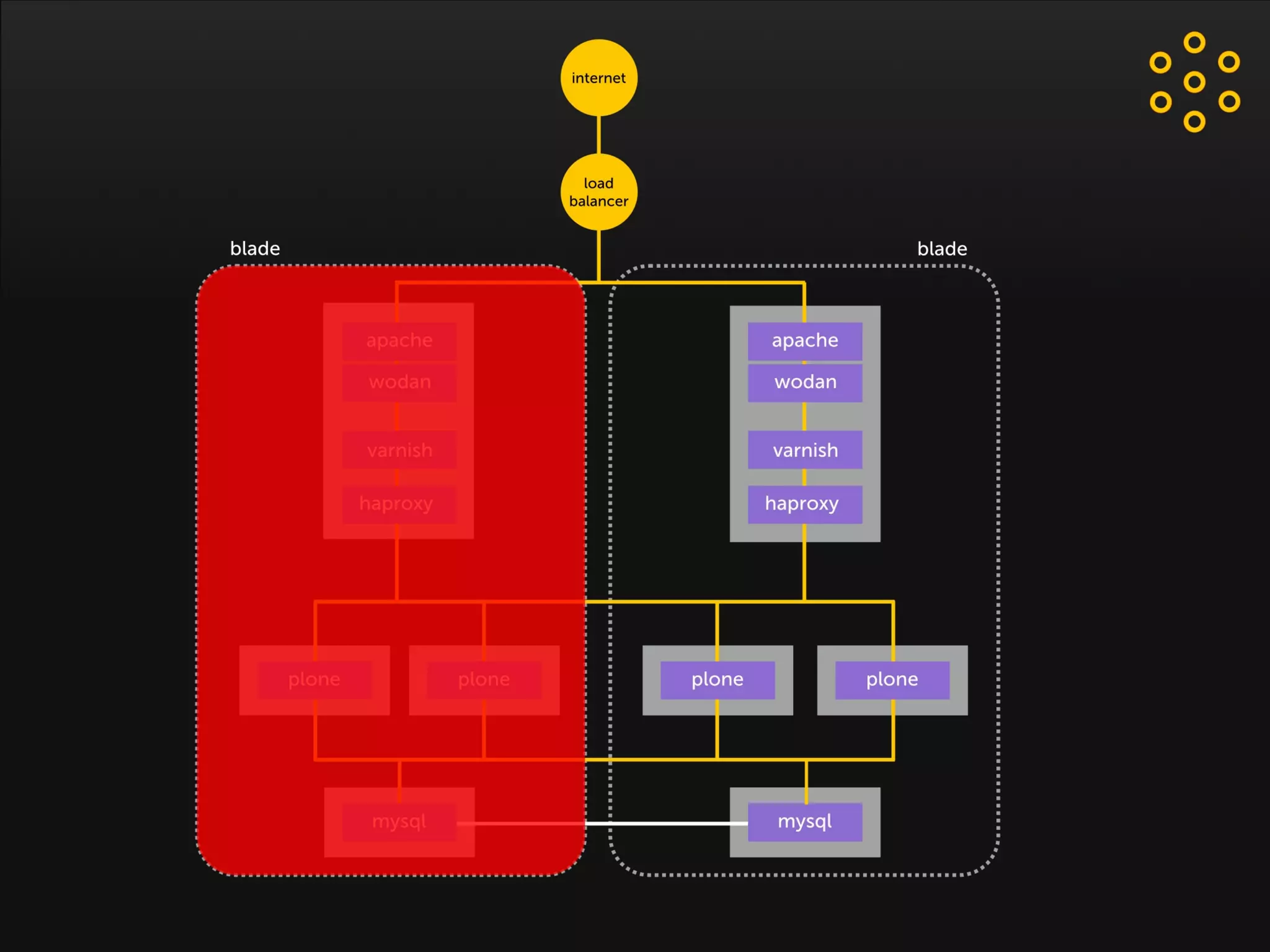

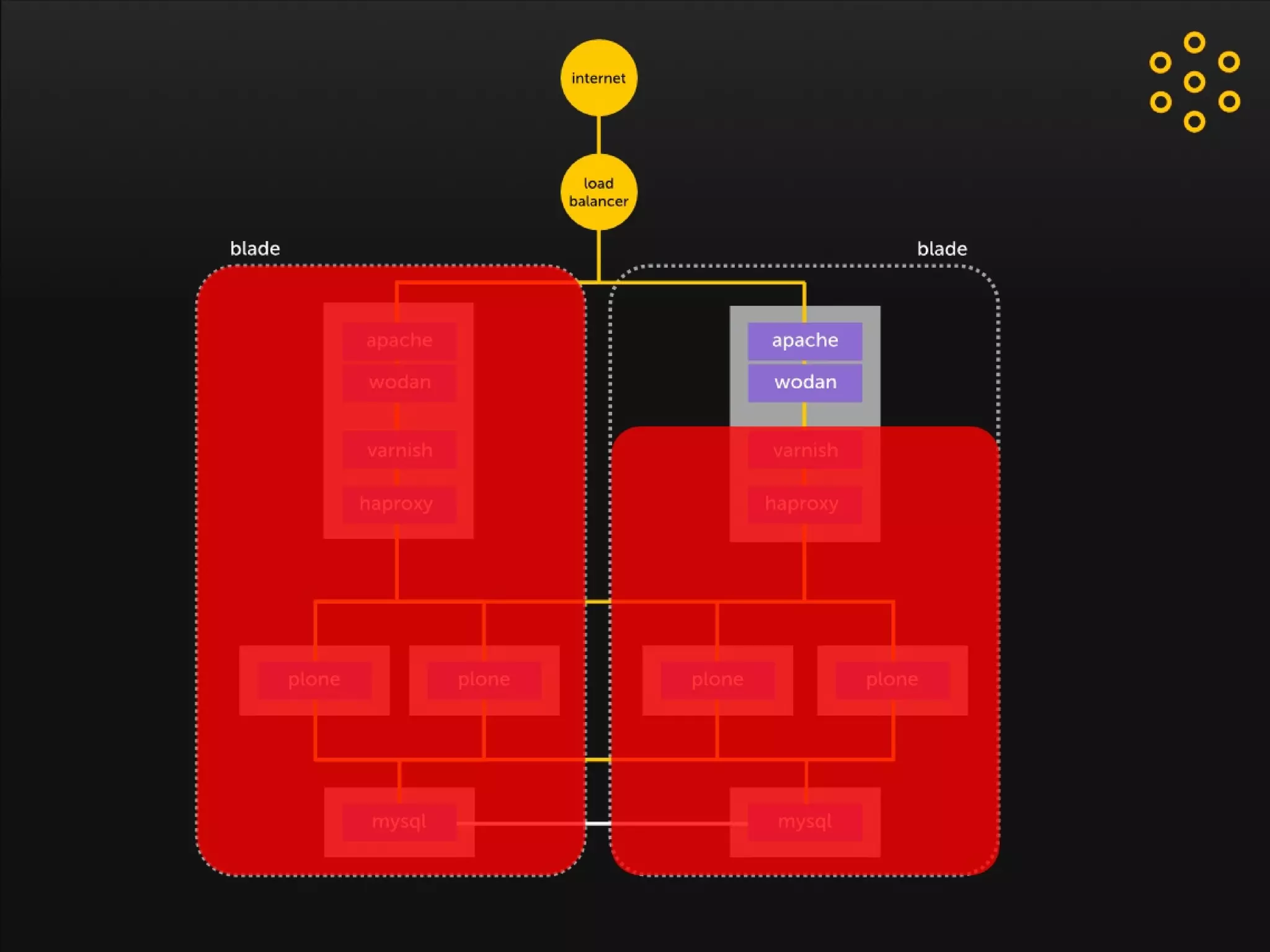

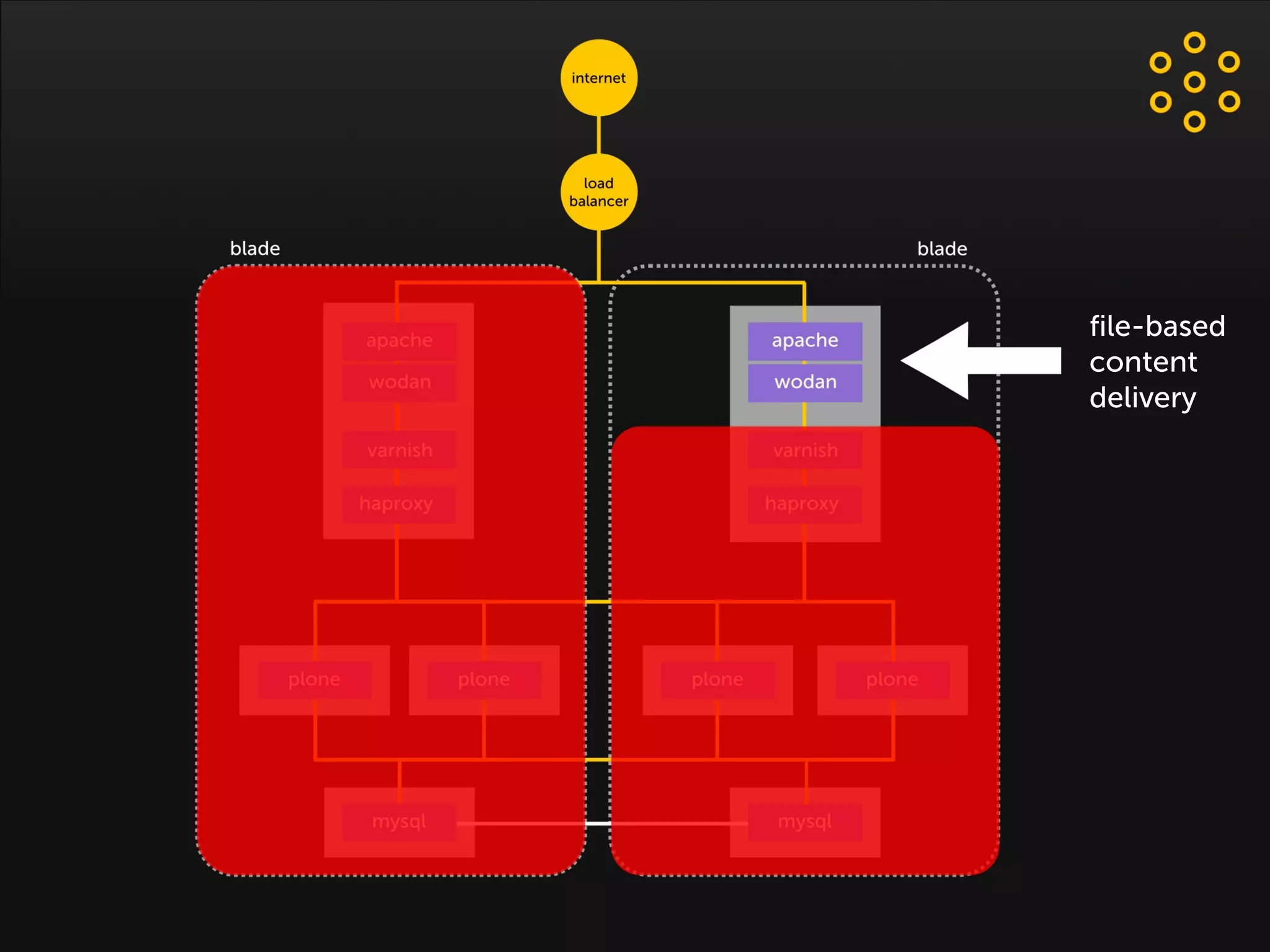

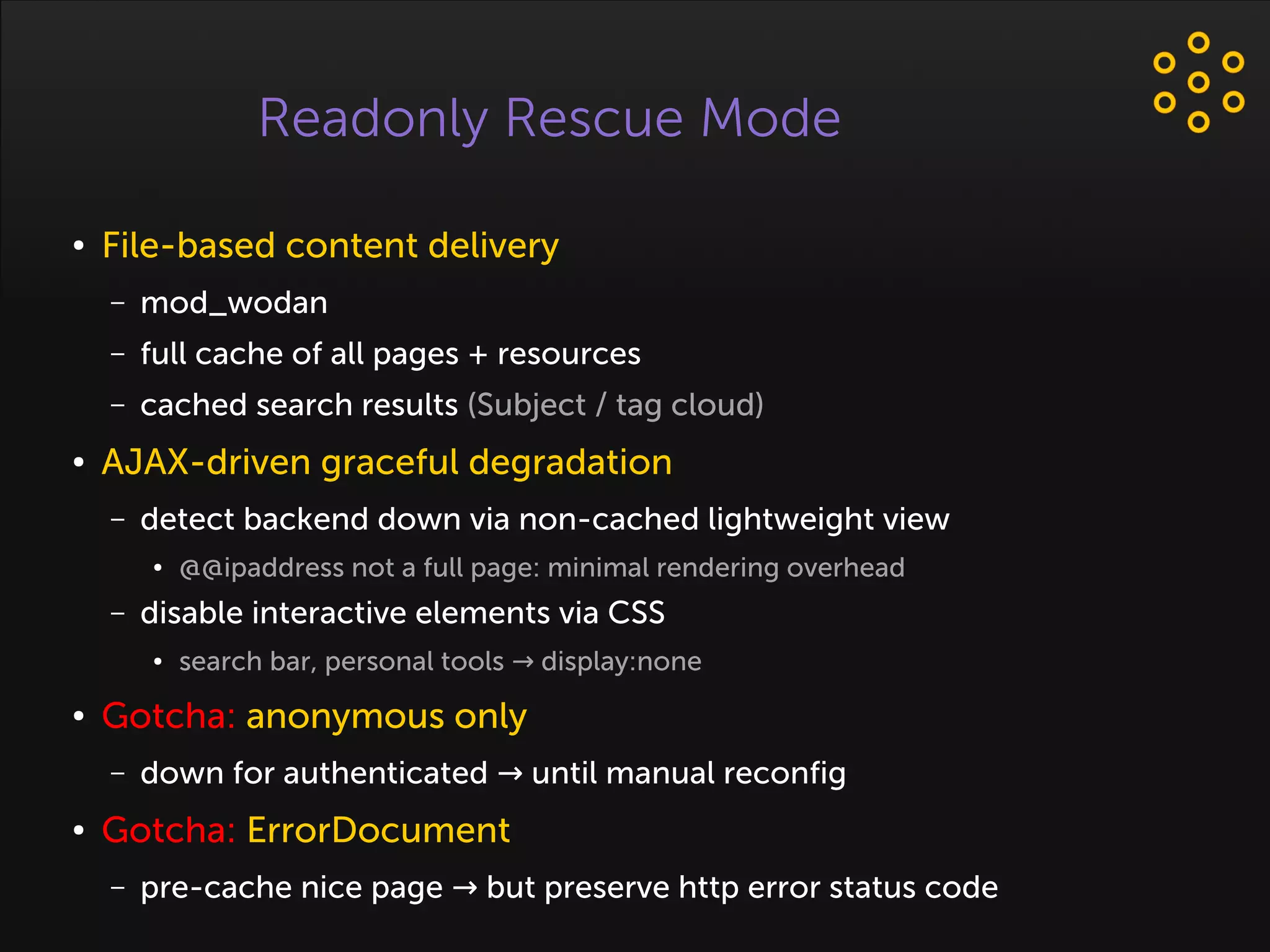

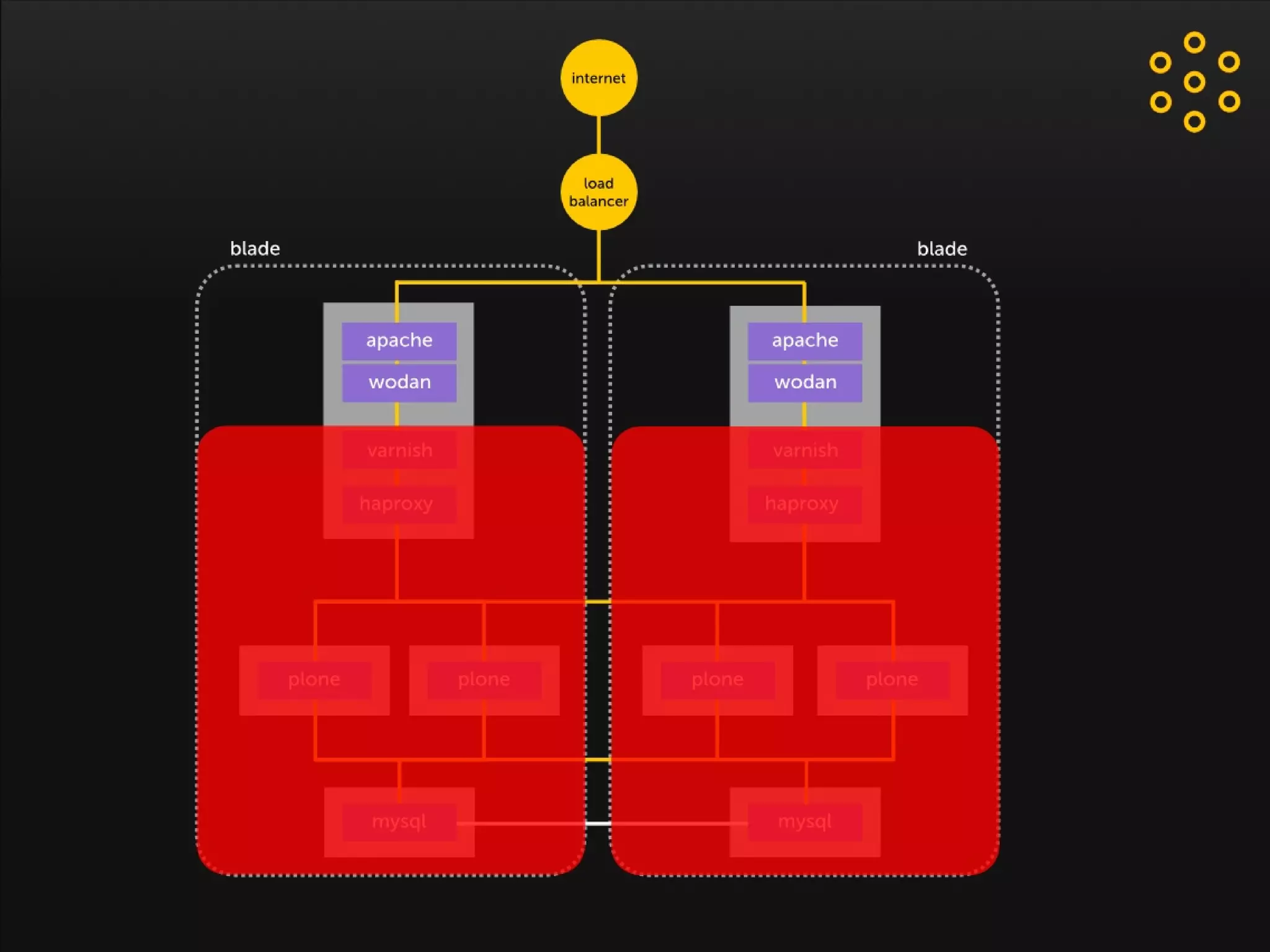

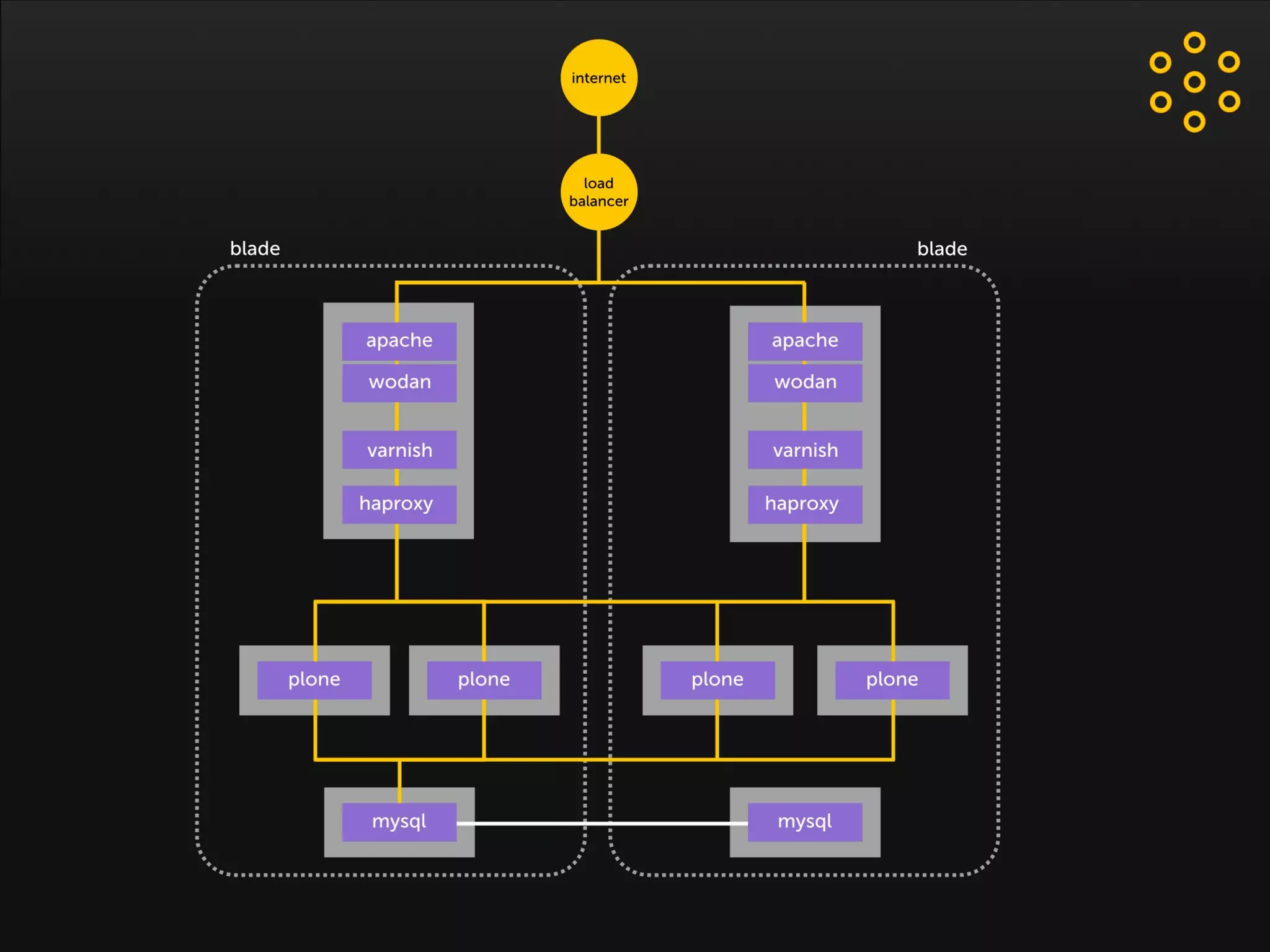

- The document describes an architecture that provides high availability and performance for a high-traffic Plone site with a 100% uptime requirement.

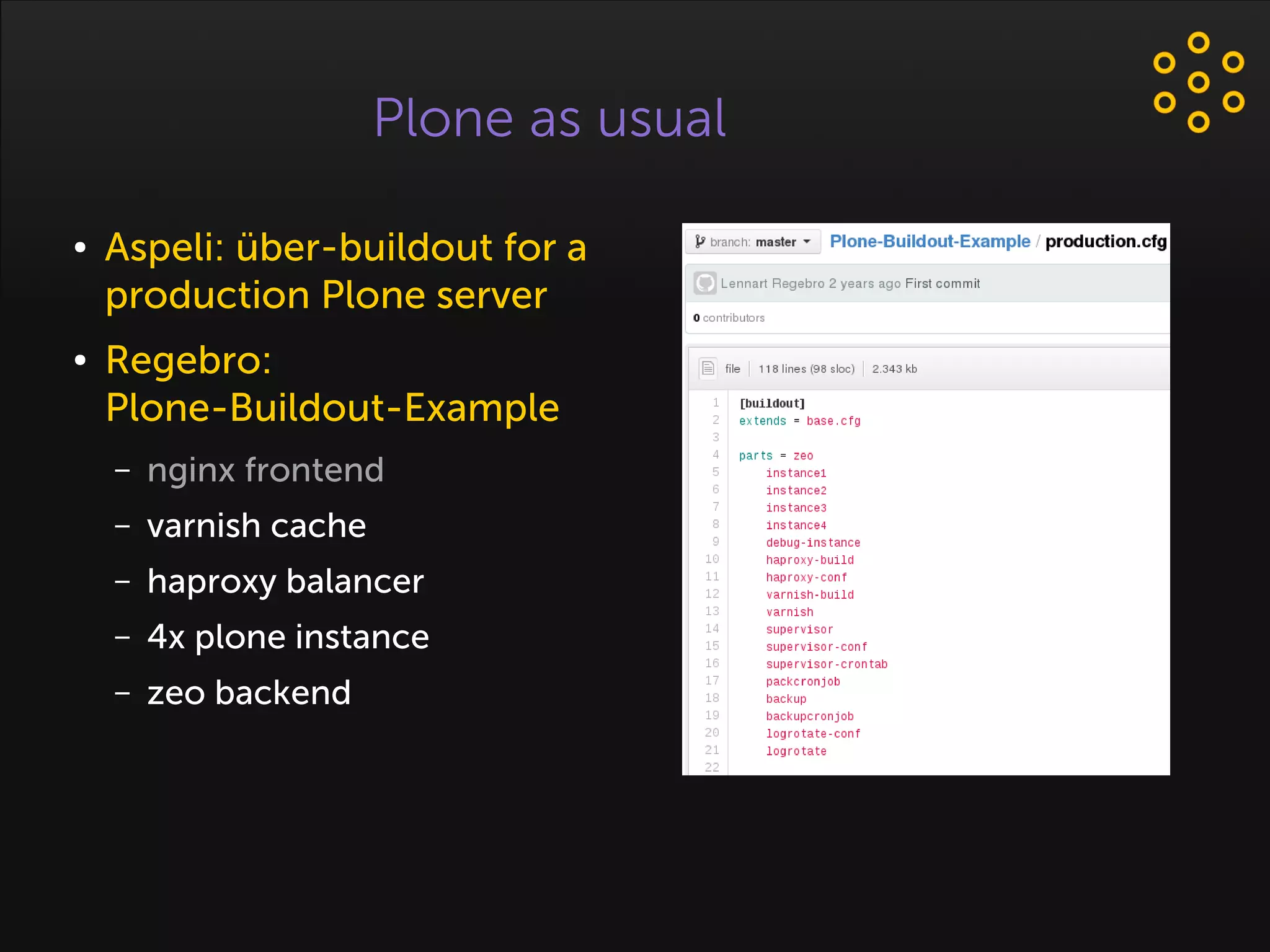

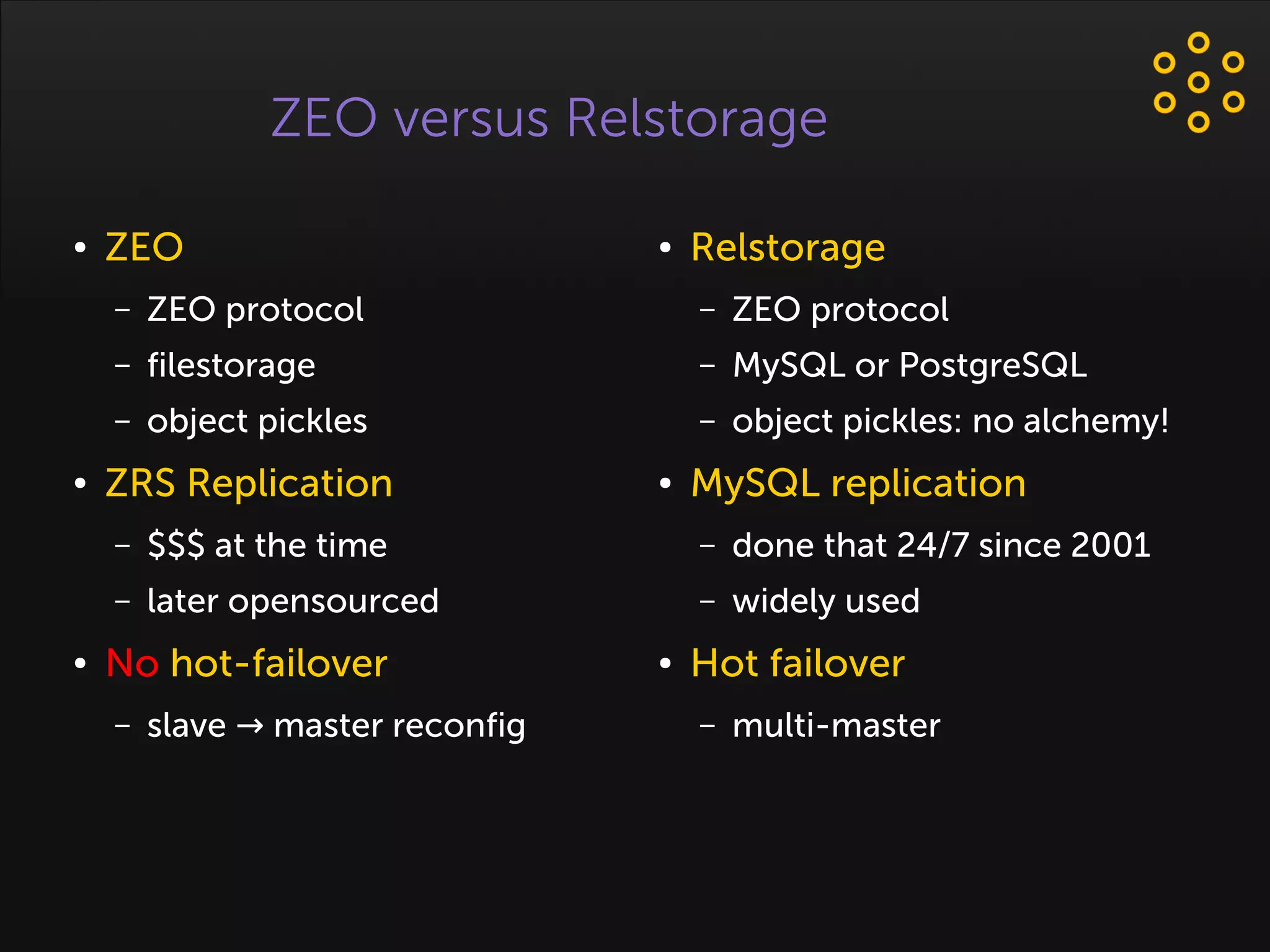

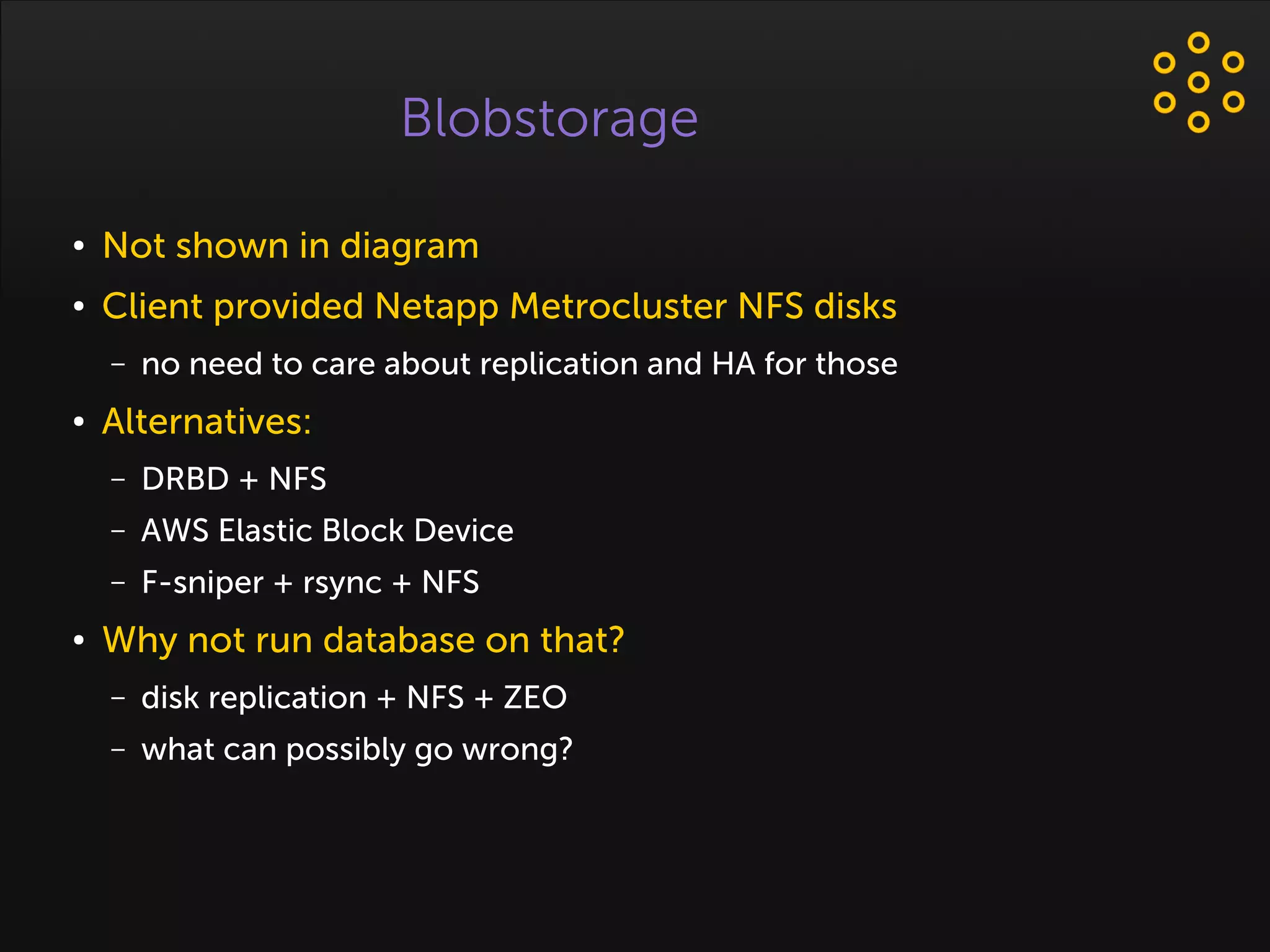

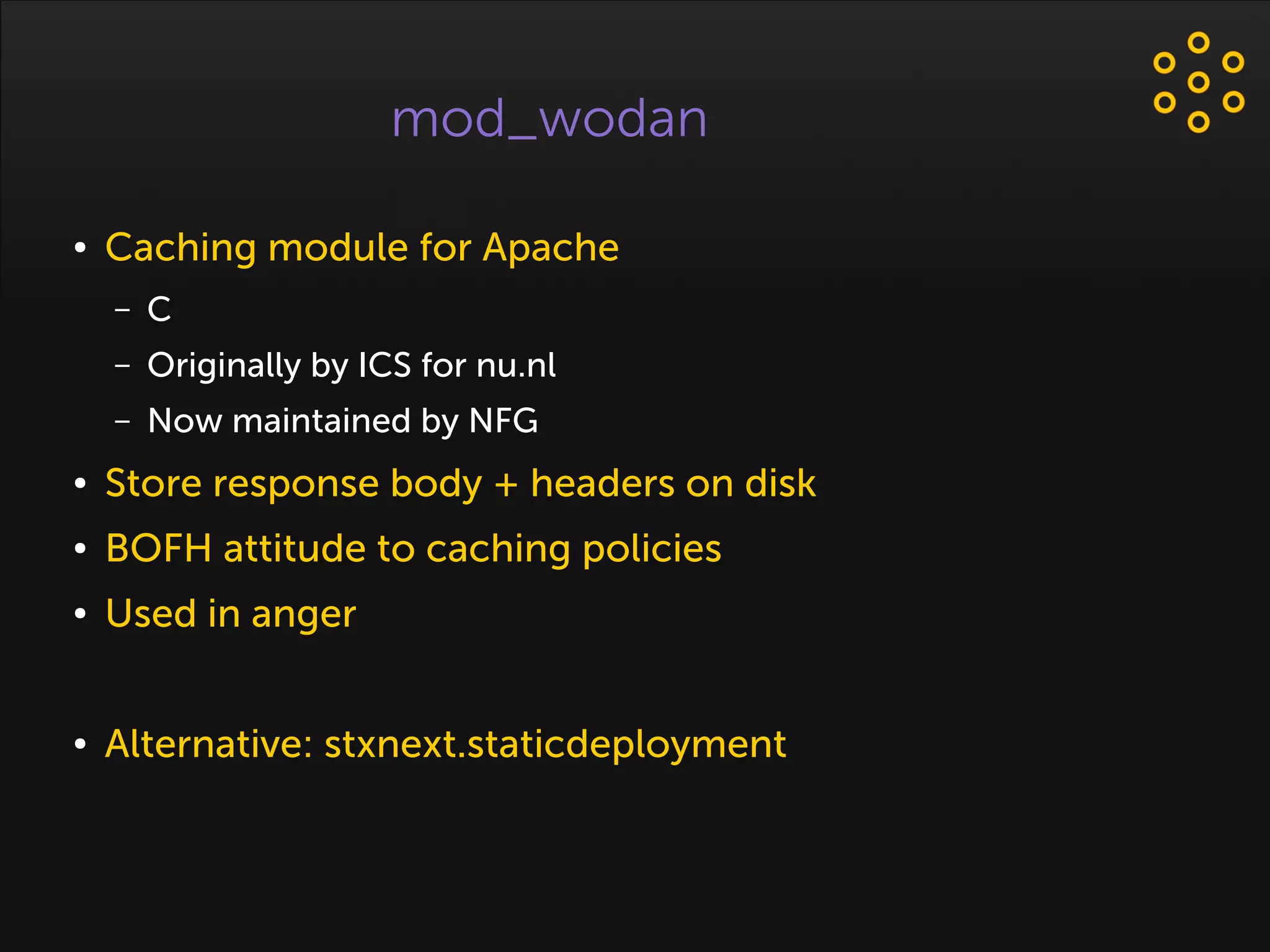

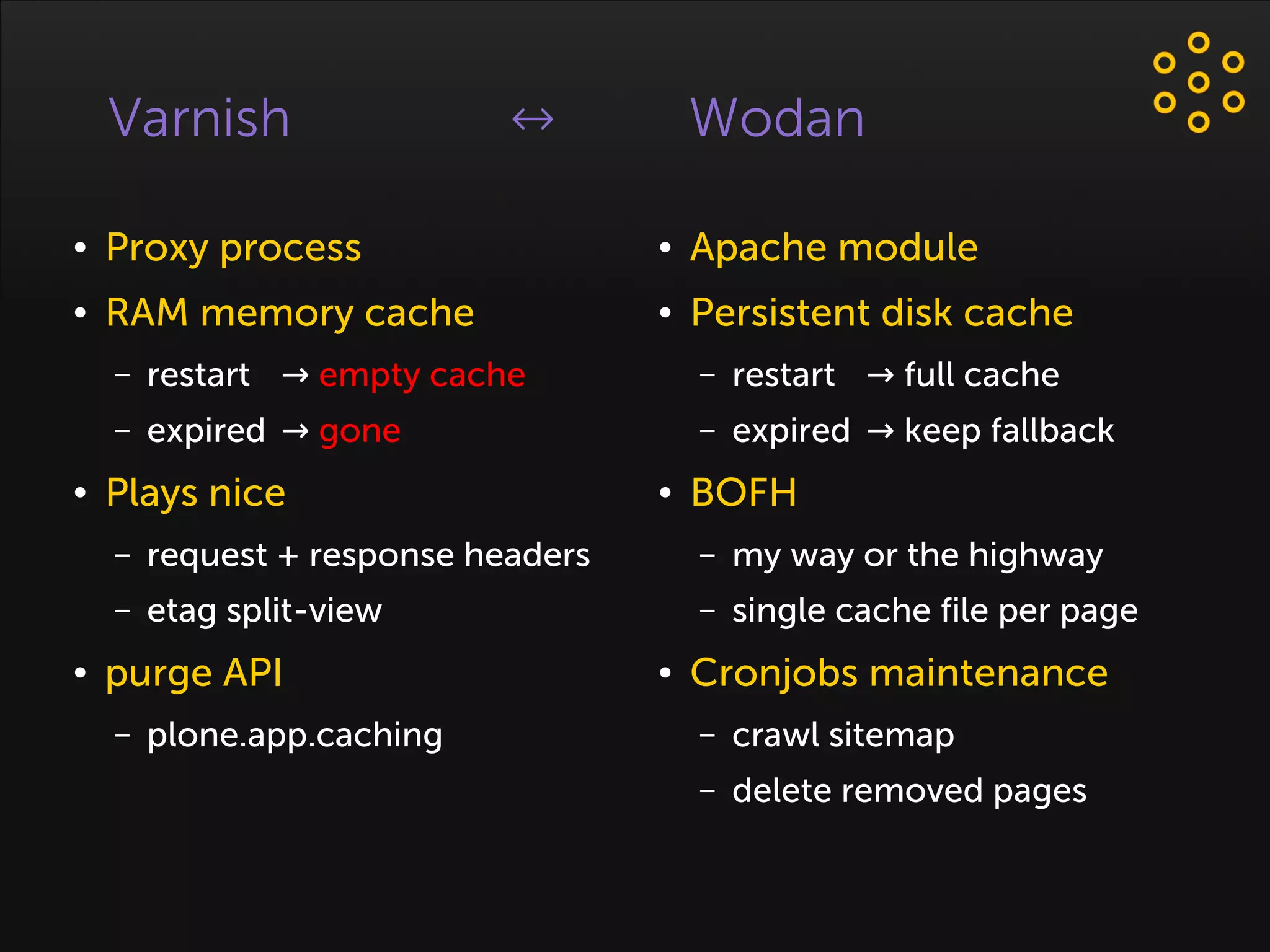

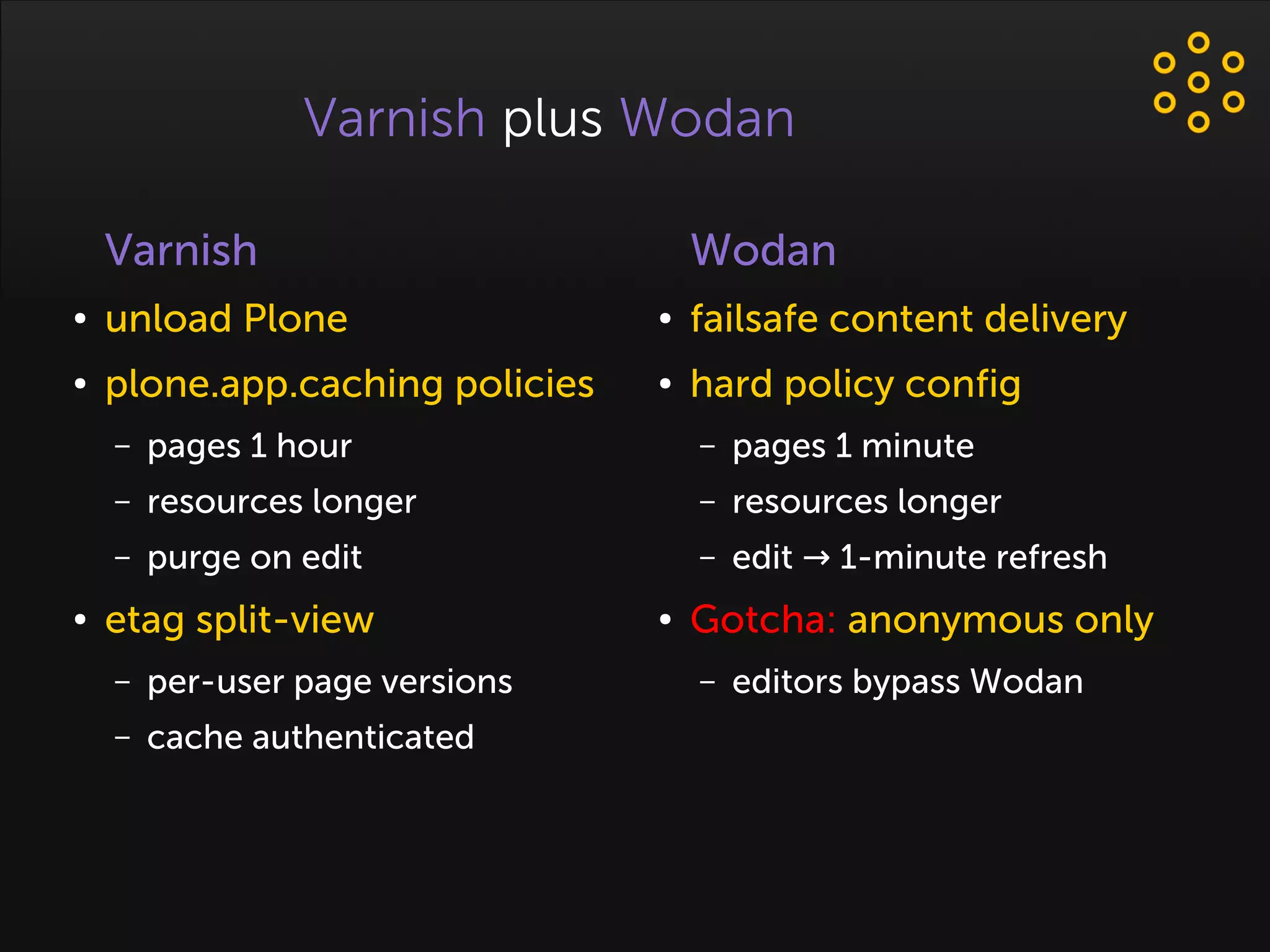

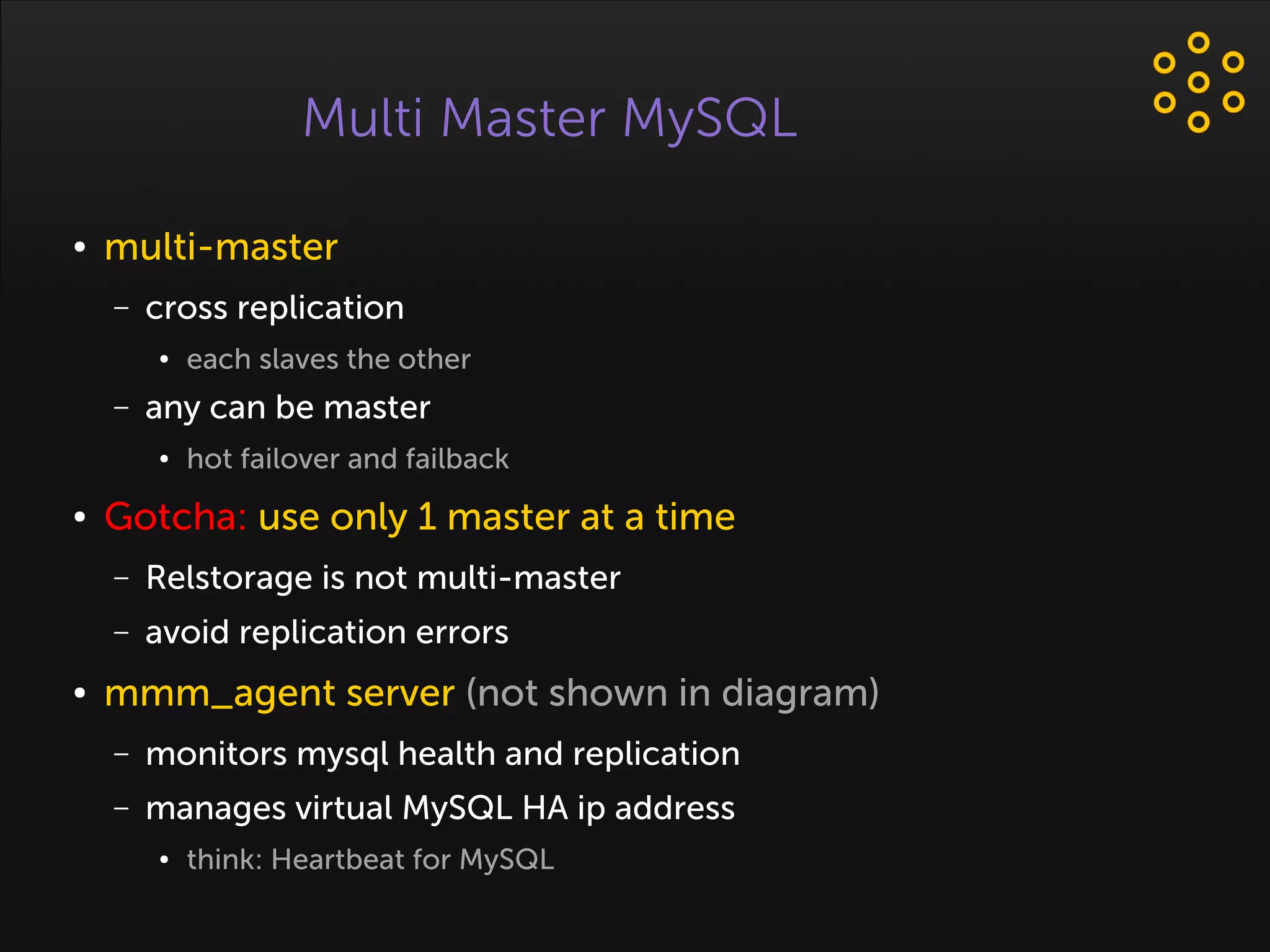

- The proposed architecture runs multiple Plone instances behind a load balancer, with RelStorage on replicated MySQL providing redundancy for the data layer. Caching with mod_wodan and Varnish improves performance. The design eliminates all single points of failure and allows automated failover.
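
The load-balancing and failover behaviour summarised above can be illustrated with a minimal sketch. It is not the configuration used in the presentation: the `Backend` and `RoundRobinBalancer` names, the instance names, and the in-memory `healthy` flag are all hypothetical, and a real deployment would rely on a dedicated load balancer with its own health checks. The sketch only shows the idea of rotating requests across several Plone instances and skipping any instance marked as down.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """A hypothetical Plone instance sitting behind the balancer."""
    name: str
    healthy: bool = True


class RoundRobinBalancer:
    """Rotate over backends, skipping any that are marked unhealthy."""

    def __init__(self, backends):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")
        self._next = 0  # index of the backend to try first on the next pick

    def pick(self):
        """Return the next healthy backend, or raise if none is available."""
        total = len(self._backends)
        for offset in range(total):
            index = (self._next + offset) % total
            backend = self._backends[index]
            if backend.healthy:
                # Resume the rotation just after the backend we hand out.
                self._next = (index + 1) % total
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    pool = [Backend("plone1"), Backend("plone2"), Backend("plone3")]
    balancer = RoundRobinBalancer(pool)
    print([balancer.pick().name for _ in range(3)])
    pool[1].healthy = False  # simulate a failed instance
    print([balancer.pick().name for _ in range(3)])
```

Marking an instance unhealthy removes it from rotation without interrupting the others, which is the property that automated failover depends on. The same principle applies at every tier, since a single load balancer would itself be a single point of failure.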